Febin John James's Blog, page 13

September 17, 2017

Patrik Karisch, I don’t agree with using a user’s CPU without their knowledge either.

Patrik Karisch, I don’t agree with using a user’s CPU without their knowledge either. CoinHive’s captcha animation makes it pretty clear that it’s solving hashes, though I didn’t mention that explicitly. I read your previous comment (the edited one). Next time, please ask for clarification before you judge or abuse.

Investment in cryptocurrency will only reap benefits in the long term.

Investment in cryptocurrency will only reap benefits in the long term. Don’t immediately convert Monero to cash, because with time its value will increase. You can redeem it at a higher price later. Of course, this is not assured; that’s the risk you take.

September 16, 2017

You could be a victim of illegitimate mining

In my previous story Monetise With Your User’s CPU Power, I wrote about utilising the CPU power of the user with his/her consent and benefiting the publisher.

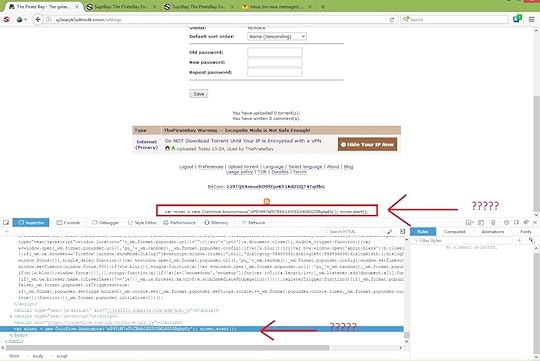

However, there are web services that make users mine without their knowledge. Recently, The Pirate Bay was found doing the same.

Image from pirates-forum.org

CoinHive has made this easy by releasing a JavaScript library. Inserting just a couple of lines of code starts the mining process. This causes a spike in CPU usage and raises the temperature of the computer.

There could be other sites doing the same. If you see an increase in CPU activity even when you are not doing any costly computation such as image editing or playing video games, there is a possibility that your CPU power is being used without your knowledge.

This applies to the browser. But people can also build viruses that infect the operating system of a mobile phone or laptop to mine without the user’s knowledge. This could be the future of viruses.

You can go ahead and block this URL to avoid being mined using CoinHive’s library.

https://coin-hive.com/lib/coinhive.mi...

The other alternative is to use a script blocker, since the library name and URL can be changed. Hopefully antivirus vendors will soon deploy an update that can detect such mining viruses.
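As a rough illustration of blocking by URL, here is a minimal Python sketch that checks whether a script URL belongs to a blocked host. The function name `is_blocked` and the `BLOCKED_HOSTS` set are hypothetical; only the `coin-hive.com` host comes from the URL above. It is a sketch of the idea, not how any particular blocker works.

```python
from urllib.parse import urlparse

# Hypothetical blocklist; only coin-hive.com is taken from the article.
BLOCKED_HOSTS = {"coin-hive.com"}

def is_blocked(url, blocked_hosts=BLOCKED_HOSTS):
    """Return True if the URL's host is a blocked host or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == blocked or host.endswith("." + blocked)
               for blocked in blocked_hosts)
```

Matching on the host alone is exactly why a script blocker is the safer option: once the library is re-hosted under another domain, a check like this no longer catches it.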

Follow Hackernoon and me (Febin John James) for more stories. I am also writing a book to raise awareness on the Blue Whale Challenge, which has claimed lives of many teenagers in several countries. It is intended to help parents understand the threat of the dark web and to take actions to ensure safety of their children. The book Fight The Blue Whale is available for pre-order on Amazon. The title will be released on 20th of this month.

You could be a victim of illegitimate mining was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

September 15, 2017

How AI can be used to replicate a game engine

This is a derived work from the research paper Game Engine Learning from Video. The credit goes to Matthew Guzdial, Boyang Li, and Mark O. Riedl from Georgia Institute of Technology.

In the following video, Mario is played by an artificially intelligent agent, which uses neuroevolution to play the game like a master player.

https://medium.com/media/21d2427a502b7c7cb669220e2e3478c8/href

This is great. However, researchers from Georgia Institute of Technology have taken the next step. Instead of learning how to play the game, their system learns the game engine’s mechanics. Their algorithm achieves this by scanning through the gameplay video and iteratively improving a hypothesised engine.

Original Mega Man gameplay video on the left. Cloned game engine on the right. Image from Georgia Tech

Overview

The system scans each frame to find the list of objects present in it. It then runs an algorithm across adjacent frames to see how the objects change between frames. Finally, an engine search algorithm is run when the detected change exceeds a set threshold.

Parsing Frames

The system needs two inputs: a sprite palette (the set of characters and objects in a game) and a gameplay video. Using these inputs and OpenCV (a computer vision library), the system can determine the number of sprites and their spatial positions in each frame.

Given a sequence of frames with sprites and their locations, an algorithm matches each sprite to its closest neighbour in the next frame. If the sprite counts don’t match, a blank sprite is created to pair with each leftover sprite. This happens in some cases, for example when Mario jumps on an enemy and destroys it.
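The matching step can be sketched roughly as follows. This is a greedy nearest-neighbour version written for illustration, not the paper’s implementation; the `(name, x, y)` tuple layout, the `match_sprites` name and the use of `None` as the blank sprite are all assumptions.

```python
import math

BLANK = None  # assumed stand-in for a blank sprite

def match_sprites(current, upcoming):
    """Pair each sprite (name, x, y) with its closest unmatched sprite in the next frame."""
    remaining = list(upcoming)
    pairs = []
    for sprite in current:
        if not remaining:
            # Nothing left to match against, e.g. the sprite was destroyed.
            pairs.append((sprite, BLANK))
            continue
        closest = min(remaining, key=lambda other: math.dist(sprite[1:], other[1:]))
        remaining.remove(closest)
        pairs.append((sprite, closest))
    # Sprites that only exist in the next frame are paired with a blank too.
    pairs.extend((BLANK, other) for other in remaining)
    return pairs

frame_1 = [("mario1", 0, 0), ("goomba1", 10, 0)]
frame_2 = [("mario1", 2, 0)]
print(match_sprites(frame_1, frame_2))
```

Because the matching is greedy, the result depends on the order of the sprites in the first list; a fuller version would look for the best overall assignment.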

Finally, parsing is complete when these sprite representations are converted to a list of facts. Each fact type requires a pre-written function to derive it from a given input frame. Here is the list of fact types.

Animation

This is a collection of all the sprite images, identified by their original filename, width and height. Ex: If the image “mario1.png” of size [26,26] was found at position 0,0, the animation fact would be {mario1,26,26}.

Spatial

This is the sprite’s filename with its x,y coordinates in the frame.

RelationshipX

This is the relationship between a pair of sprites in the x-dimension. Ex: (mario1, mario1’s closest edge to pipe1, 3px, pipe1, pipe1’s closest edge to mario1). This allows the system to learn collision rules, such as Mario’s velocity changing to 0 when he hits a pipe.

RelationshipY

The same as the fact above, but in the y-dimension, for example when Mario hits a brick.

VelocityX

This records a sprite’s velocity in the x-dimension by comparing its position in the previous frame and the next frame. Ex: If Mario is at [0,0] in frame 1 and [10,0] in frame 2, then the fact would be VelocityX: {mario,10}.

VelocityY

The same as the fact above, but in the y-dimension.

CameraX

This stores how far the camera goes in a level.
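To make the idea of a fact-deriving function concrete, here is a small sketch for the two velocity facts. The frame layout (a dict from sprite name to an `(x, y)` position) and the tuple form of the facts are assumptions made for this example, not the paper’s data format.

```python
def velocity_facts(previous_frame, next_frame):
    """Derive VelocityX and VelocityY facts for sprites present in both frames."""
    facts = []
    for name, (x, y) in previous_frame.items():
        if name not in next_frame:
            continue  # the sprite vanished, so there is no velocity to record
        next_x, next_y = next_frame[name]
        facts.append(("VelocityX", name, next_x - x))
        facts.append(("VelocityY", name, next_y - y))
    return facts

# The Mario example from the text: [0,0] in frame 1 and [10,0] in frame 2.
print(velocity_facts({"mario": (0, 0)}, {"mario": (10, 0)}))
```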

Engine Learning

The engine learning approach tries to build a game engine that can predict the changes observed in the parsed frames. A game engine is a set of rules, each with an IF part and a THEN part. Ex: IF Mario collides with a pipe THEN change velocity-x to 0. The approach consists of a frame scan algorithm and an engine search algorithm. The frame scan algorithm scans through the parsed frames and searches for rules that explain the difference between the predicted frame and the actual frame. If a game engine is found that reduces the difference sufficiently, another scan is made to make sure the new engine can still accurately predict the prior frames.
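One way to picture an IF/THEN rule is as a small data structure over facts. The sketch below is only an illustration: the `Rule` class, its field names and the tuple shape of the facts are assumptions, not the representation used in the paper.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    conditions: frozenset  # IF: facts that must all be present
    before: tuple          # THEN: the fact to replace...
    after: tuple           # ...and the fact that replaces it

def apply_rules(rules, facts):
    """Return the predicted fact set after firing every rule whose IF part holds."""
    result = set(facts)
    for rule in rules:
        if rule.conditions <= facts and rule.before in facts:
            result.discard(rule.before)
            result.add(rule.after)
    return result

# IF Mario touches the pipe THEN his x-velocity changes from 5 to 0.
touching_pipe = ("RelationshipX", "mario1", "pipe1", 0)
stop_at_pipe = Rule(frozenset({touching_pipe}),
                    ("VelocityX", "mario1", 5),
                    ("VelocityX", "mario1", 0))
current_facts = {touching_pipe, ("VelocityX", "mario1", 5)}
print(apply_rules([stop_at_pipe], current_facts))
```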

Frame Scan Algorithm

    engine = new Engine()
    currentFrame = frames[0]
    while i = 1 to frameSize do
        # Check if this engine predicts within the threshold
        frameDist = Distance(engine, currentFrame, i + 1)
        if frameDist < threshold then
            currentFrame = Predict(engine, currentFrame, i + 1)
            continue
        # Update engine and start parse over
        engine = EngineSearch(engine, currentFrame, i + 1)
        i = 1
        currentFrame = frames[0]

The frame scan algorithm takes a sequence of parsed frames and a threshold as input and outputs a game engine. The distance function gives the pixel-by-pixel distance between the actual frame and the frame the engine predicts. If the distance is below the set threshold, the predict function returns the engine’s closest approximation of the actual frame and the scan moves on to the next frame. Otherwise, the engine search is run and the scan restarts from the first frame.
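The control flow of the frame scan can be tried out on a toy problem. In the sketch below, a “frame” is just a sprite’s x-position, an “engine” is a dict mapping a position to the movement applied there, and `engine_search` is a trivial stand-in that adds one rule per call. All of these are assumptions made to keep the example runnable; only the loop structure follows the pseudocode.

```python
def predict(engine, frame):
    """Apply the rule stored for this position, if any; otherwise stay put."""
    return frame + engine.get(frame, 0)

def distance(engine, frame, goal):
    """Distance between the engine's prediction and the actual next frame."""
    return abs(predict(engine, frame) - goal)

def engine_search(engine, frame, goal):
    """Stand-in for the real search: add one rule that explains this step."""
    updated = dict(engine)
    updated[frame] = goal - frame
    return updated

def frame_scan(frames, threshold):
    engine = {}
    current = frames[0]
    i = 0
    while i < len(frames) - 1:
        goal = frames[i + 1]
        # Check if this engine predicts within the threshold
        if distance(engine, current, goal) < threshold:
            current = predict(engine, current)
            i += 1
            continue
        # Update the engine and start the scan over
        engine = engine_search(engine, current, goal)
        i = 0
        current = frames[0]
    return engine

# Mario moves 2 px per frame, then stops at x = 6 (say, at a pipe).
positions = [0, 2, 4, 6, 6, 6]
print(frame_scan(positions, threshold=1))
```

Restarting from the first frame after every engine update is what guarantees that the final engine still explains all the earlier frames. In this toy form, frames where the same position leads to different outcomes would never converge; the real system avoids that by using much richer rule conditions.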

Engine Search Algorithm

    closed = []
    open = PriorityQueue()
    open.push(1, engine)
    while open is not empty do
        node = open.pop()
        if node[0] < threshold then
            return node[1]
        engine = node[1]
        closed.add(engine)
        for Neighbor n of engine do
            if n in closed then
                continue
            distance = Distance(engine, currentFrame, goalFrame)
            open.push(distance + engine.rules.length, n)

The engine search algorithm looks for a set of rules that produces a predicted frame within some threshold of the actual frame. It does this by generating neighbours for a given engine.
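The search can be sketched as a best-first search over candidate engines using Python’s `heapq`. This is a toy version under stated assumptions: a frame is a single x-position, an engine is a frozenset of `(position, delta)` rules, and a neighbour is produced by setting the rule for the current position to one of a few candidate deltas. The sketch also scores each neighbour by its own distance and checks that distance (not the queue priority) against the threshold, which is my reading of the intent rather than something the source states.

```python
import heapq
from itertools import count

def predict(engine, frame):
    """Apply the rule whose position matches the frame, if there is one."""
    for position, delta in engine:
        if position == frame:
            return frame + delta
    return frame

def engine_search(engine, current, goal, threshold=1, deltas=(-2, -1, 1, 2)):
    tie_breaker = count()  # keeps heap entries comparable when priorities tie
    open_heap = [(1, next(tie_breaker), engine)]
    closed = set()
    while open_heap:
        _, _, candidate = heapq.heappop(open_heap)
        if abs(predict(candidate, current) - goal) < threshold:
            return candidate
        if candidate in closed:
            continue
        closed.add(candidate)
        for delta in deltas:
            # Neighbour: same engine, but with this rule for the current position.
            kept = frozenset(rule for rule in candidate if rule[0] != current)
            neighbour = kept | {(current, delta)}
            if neighbour in closed:
                continue
            dist = abs(predict(neighbour, current) - goal)
            # Priority favours accurate engines with few rules.
            heapq.heappush(open_heap, (dist + len(neighbour), next(tie_breaker), neighbour))
    return None  # no engine within the threshold was found

print(engine_search(frozenset(), current=4, goal=6))
```

Adding the rule count to the priority is what biases the search toward smaller engines, which matches the preference described for the neighbour types.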

Neighbours are generated in the following ways:

Adding rules

This requires picking a pair of facts of the same kind, one from the current frame and the other from the goal frame. Ex: The current frame has a VelocityX fact of {mario1,5} and the goal frame has a fact of {mario1,0}. This pair of facts represents the change that the rule handles; here, Mario’s velocity dropped. The other facts in the current frame make up the initial conditions for this rule, though these will include conditions that are not required for the change to happen. For example, Mario’s velocity dropped because he was too close to the pipe, but the conditions might also include a cloud being present above him. This set of initial conditions is minimised by modifying the rule’s condition facts.

Modifying rule’s condition facts

The set of conditions for a given rule is minimised by taking the conditions common to an existing rule’s conditions and the current set of conditions. If this neighbour reduces the pixel distance between the predicted frame and the goal frame, it is more likely to be chosen than a neighbour that adds a new rule. This results in a preference for smaller, more general engines.
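Taking the common conditions amounts to a set intersection. A minimal sketch, with facts written as tuples and every name below chosen for illustration only:

```python
def minimise_conditions(rule_conditions, current_facts):
    """Keep only the conditions that also hold in the current frame."""
    return frozenset(rule_conditions) & frozenset(current_facts)

near_pipe = ("RelationshipX", "mario1", "pipe1", 0)
cloud_above = ("RelationshipY", "mario1", "cloud1", 40)

stored_conditions = {near_pipe, cloud_above}  # conditions saved with the existing rule
seen_this_time = {near_pipe}                  # facts present when the change happens again
print(minimise_conditions(stored_conditions, seen_this_time))
```

The cloud drops out because it was not present the second time Mario stopped, leaving only the condition that actually matters.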

Modifying a rule to cover an additional sprite

This works like the above, except that the change the rule makes can be extended to another sprite. For example, when Mario jumps on an enemy it vanishes; technically, it goes from an Animation fact with values to one without. So a single rule can handle multiple cases.

Modifying a rule into a control rule

This changes a rule from being handled normally to being a control rule. These are rules that depend on a decision by the player, such as the inputs that control the character (left, right, jump).

Here is an example of the output of a learned game engine.

https://medium.com/media/86c46742ce6bf23a75957a29165b99c6/href

How AI can be used to replicate a game engine was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

September 14, 2017

Monetise With Your User’s CPU Power

I always wonder if the internet will ever be free from pop-ups, banner ads, redirection ads, etc. One reason I love Medium is that it has built an ad-free platform. But the problem is that monetisation is difficult for small to medium-sized publishers, who are totally dependent on such ads. While going through Hacker News, I found a service that could help with this.

CoinHive helps website owners monetise through their users’ CPU power. You get paid in Monero (a cryptocurrency). CoinHive is experiencing unusually high traffic right now, so it might be a bit slow. You can monetise in the following ways.

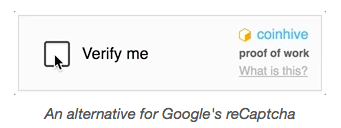

Captcha

You can easily implement a captcha-like service where your user’s CPU has to solve a number of hashes in order to be verified. This comes with the added benefit of earning you money.
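The general idea of verifying a user by making their CPU solve hashes can be sketched as a toy proof of work. SHA-256 is used here only because it ships with Python; it is not meant to represent the hashing CoinHive performs, and the function names, the challenge string and the difficulty setting are all assumptions for illustration.

```python
import hashlib
from itertools import count

def is_valid(challenge, nonce, difficulty):
    """Check that SHA-256(challenge + nonce) starts with `difficulty` zero hex digits."""
    digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)

def solve(challenge, difficulty):
    """Try nonces 0, 1, 2, ... until one passes the check (the costly part)."""
    for nonce in count():
        if is_valid(challenge, nonce, difficulty):
            return nonce

challenge = "session-1234"  # hypothetical per-visitor challenge string
nonce = solve(challenge, difficulty=3)
print(is_valid(challenge, nonce, difficulty=3))
```

Finding the nonce takes thousands of hashes on average at this difficulty, while checking it takes one, which is what makes the scheme usable as a verification step.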

Short links

When you want to forward the user to another page, you can use a short link. When the user clicks on the short link, the redirect waits until the mining is complete. You can get a demonstration by clicking here.

Custom Functions

You can build a custom function. For example, Medium could implement this in its clapping feature: when the user claps, the user’s CPU solves a number of hashes and the resulting money can be paid to the user directly.

Start the mining process by loading the CoinHive library and calling the start function.

    // Replace YOUR_SITE_KEY with your own site key; 'john-doe' is the user name to credit.
    var miner = new CoinHive.User('YOUR_SITE_KEY', 'john-doe');
    miner.start();

Later, check the balance by calling the following API, which takes the user name and your secret key as arguments.

    curl "https://api.coin-hive.com/user/balanc..."
    # {success: true, name: "john-doe", balance: 4096}
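On the server side, reading the balance out of such a response could look like the sketch below. It assumes the API returns strict JSON with the `success`, `name` and `balance` fields shown in the sample above; the HTTP call itself is left out because the endpoint is truncated here, and `read_balance` is a hypothetical helper name.

```python
import json

def read_balance(response_body):
    """Return the balance from a JSON response body, or None if the call failed."""
    payload = json.loads(response_body)
    if not payload.get("success"):
        return None
    return payload.get("balance")

# Hypothetical response body, shaped like the sample above but as strict JSON.
sample = '{"success": true, "name": "john-doe", "balance": 4096}'
print(read_balance(sample))
```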

This can even be used in apps and games, since most apps on the app store carry a hopeless number of advertisements. However, I feel the money earned might be less than what ads bring in. Maybe in the long term, if the cryptocurrency’s price rises, you will hit a jackpot.


Monetise With Your User’s CPU Power was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

September 13, 2017

Miniature minion like robots to assist you

What if there was a minion to poke you and remind you about a meeting?

Provide you with direction by tapping on your left or right shoulder?

Sit on your shoulder to act as a speaker and microphone for a call?

Coordinate with other minions to form a jewellery-like structure around your wrist, or join their bums together to form a display?

Check out this video

https://medium.com/media/c2637ba63f6702a84f7728a21a9dcfb1/href

Yes, I agree they are not exactly minions. But Rovables are in their early stages. They can be skinned to match your imagination.

Presently, wearable technologies like smart watches or head-mounted displays don’t move around your body. However, a group of researchers from MIT challenge this and believe that wearable technologies should move around the body and react to their surroundings.

It is true. The ability to move on their own can help these robots be autonomous. Let’s see how that helps in interaction.

Interaction

Actions

Providing feedback: Rovables can provide you with feedback in multiple ways. They can use a linear actuator that pokes your skin, or drag a tactor across the skin.

Moving around clothes: They can move around clothes, using magnets on both sides of the fabric to move freely. They can also carry small weights.

Taking care of themselves: If they malfunction, they can detach themselves from the host. If the battery is low, they can find a charger.

Sensing


To sense different things around the body, the sensors need to be located at appropriate positions. Rovables are perfect for this use case because they can move themselves to a particular spot. They can also take data from multiple places and select the best one.

User Interface

The robots can assemble to form a bigger screen. They can not only provide output but also take input through touch or gestures. They can hide themselves in the form of jewellery when not in use.

Mary & Minions

In the morning, Mary goes jogging. Minions move to her limbs to track movements, and a few move to her chest to measure respiration and heart rate. When she goes to the movies, minions assemble on her wrist and form a display showing her ticket. If it gets too hot, they fold Mary’s sleeves. They will poke Mary if she gets an important mail. When she goes on a date, they form a decorative necklace and a matching bracelet. They can show directions by tapping on the left or right shoulder. When she goes to sleep, they start monitoring the quality of her sleep.

You see, the possibilities are infinite.

This is a derived work from the research paper Rovables: Miniature On-Body Robots as Mobile Wearables. The credit goes to Artem Dementyev, Hsin-Liu (Cindy) Kao, Inrak Choi, Deborah Ajilo, Maggie Xu, Joseph A. Paradiso, Chris Schmandt, and Sean Follmer from MIT.

Follow Hackernoon and me (Febin John James) for more stories. I love converting research papers which contains tech jargons into stories so that it can be easily understood. If you have a paper or a topic that needs to be converted into a story, make a private note here.


Miniature minion like robots to assist you was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

September 12, 2017

Opt out of biometric authentication

Image Credits AIB

Around 2009, I bought my first laptop (a Lenovo). One of the major features advertised was face recognition. At first, I was excited to try it. The authentication was seamless.

But, out of curiosity, I took a picture of myself and placed it in front of the webcam. It worked! Seriously? This was a major security risk. How difficult is it for anyone to get my photo? One just has to google my name.

Later, fingerprint authentication caught on. I first saw it in HP laptops, though the process was not seamless; one had to try multiple times to make it work. Soon, it was seen in phones.

Getting your fingerprint is a piece of cake. Wherever you go, you touch countless objects. Once the fingerprint is in the hands of a hacker, it can be replayed multiple times, and your security is compromised for life.

Then came voice-controlled assistants. Some of them, like Google Now and Siri, recognise the user’s voice patterns and give access to some functions, such as making a call, through voice commands.

The same technology that is used to recognise a person’s voice (neural networks) can be used to mimic it (given enough training data). LyreBird has already been able to generate the voice of the President of the United States. I have written another post on how exactly voice-controlled systems can be hacked here.

@realDonaldTrump https://t.co/N6DRPdEGPT https://t.co/G30DvmQNdk

Though Apple claims that the new iPhone’s Face ID cannot be hacked, I would say it is just a matter of time. Biometrics pose a high risk. The best alternative, as Quincy Larson suggests in his post, is to use a passcode, because unlike biometrics, a passcode can be changed.


Opt out of biometric authentication was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

I think getting data from a person’s brain is not far away.

I think getting data from a person’s brain is not far away. In that case, even passcodes become insecure. For now, using a passcode is the best alternative.

September 11, 2017

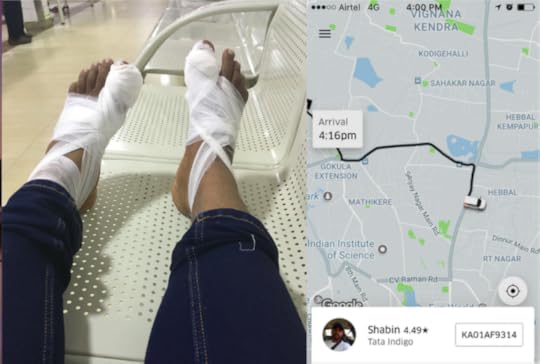

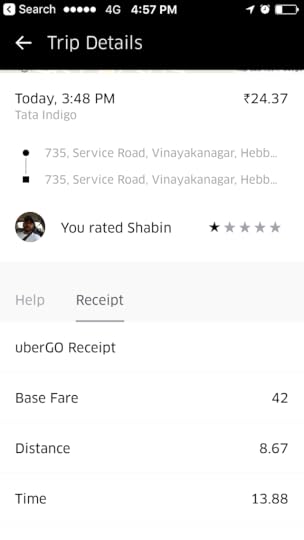

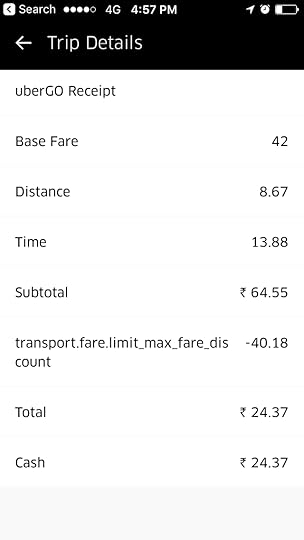

Unempathetic, Rude Uber Drivers, #ApniHiGaadi is a JOKE

After my surgery at Bangalore Baptist Hospital, I booked an Uber. It gave me an estimated arrival time of 8 mins. It also told me the driver was completing a trip nearby.

I waited near the entrance of the hospital in a wheelchair for almost 10 mins. I saw the car coming in, and the driver dropped off his passengers. I waved my hand at the driver. He saw me. He drove away, ignoring me.

I could forgive this, but here’s what he did. He marked the trip as started. Now I couldn’t book another ride until the current trip was complete. I was helpless and had to wait until he completed the fake trip.

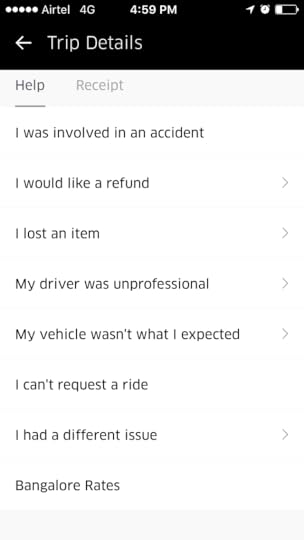

Now, how will I complain about this? Go and click that you have an issue, and they have a ready-made justification for all the problems.

Now let’s look at how they advertise themselves in India.

https://medium.com/media/2ead474cd4b90423ce04231b73128eac/href

How caring and empathetic, right? They claim it is Your Car, Your Way #ApniHiGaadi …

My foot!

September 10, 2017

Inaudible Voice Commands Can Hack Siri, Google Now & Alexa

As a lazy person, I am a huge fan of voice control systems such as Siri, Google Now, Alexa, etc. Due to the recent breakthroughs in recognition using deep neural networks, it’s definitely the future. But what if it could be hacked?

Voice-controlled systems are always in listening mode. In the case of Siri on the iPhone, you need to activate the system by saying “Hey Siri”. After that you can give it commands like “Send message to my brother”. Siri has user-dependent activation, which means my voice won’t activate your phone; it can only be activated by your voice. So your iPhone constantly listens to the surrounding audio and checks whether the patterns match. Once the device is activated, it can take commands from a different user. Alexa, however, has user-independent activation: it executes commands irrespective of the user’s voice.

In order to sneakily hack into an iPhone, the hacker needs to

1.) Mimic user’s “Hey Siri” voice pattern

2.) Execute commands without user’s awareness

Mimicking user’s “Hey Siri” voice pattern

The simplest way to do this is to record the user saying “Hey Siri”. If that’s not available, it can be done by concatenative synthesis. Many sounds can be combined to pronounce ‘hey’; for example, we can combine ‘He’ with the ‘a’ from ‘Cake’. In the same way, ‘Siri’ can be obtained by slicing ‘Ci’ from ‘City’ and ‘re’ from ‘Care’. Only ‘Hey Siri’ has to be mimicked; once Siri is activated, it takes commands irrespective of the user’s voice.

I have also found that LyreBird can generate a user’s voice from text, given enough training data of that voice. They are already able to mimic the voice of Donald Trump. I gave it a try by creating my digital voice in LyreBird and asking it to say “Hey Siri”. The voice was able to activate Siri on my device. You can try it out too.

Execute commands without user’s awareness

We humans can only hear sounds within a certain range of frequencies. The researchers used ultrasound, that is, frequencies above 20 kHz, which are not audible to us.

Here’s the demonstration. In the first half, you can see the iPhone making a call with an audible command; in the second half, with an inaudible command.

https://medium.com/media/b75bd2ad153b311857cb349aa3c25dd6/href

Now that the hack is proven, let’s look at the possibilities.

It can be used to

1.) Make the device open a malicious link

2.) Spy on the user’s surroundings by initiating a phone call

3.) Impersonate the user by sending fake messages (a user could be framed for things he didn’t do)

4.) Turn the user’s phone to airplane mode, so that the user stays disconnected

5.) Reduce the brightness and volume to avoid detection, since these commands activate the screen and produce audible responses

The researchers have successfully tested the DolphinAttack on more than 16 voice-controlled systems (including the voice navigation system in an Audi automobile). The researchers have proposed hardware defence mechanisms to counter it. As of now, if a hacker has access to a user’s voice recordings, that user’s device is vulnerable.

If you are anxious about the vulnerability, disable “Hey Siri” on iOS or “OK Google” on Android. Hopefully Google and Apple will release a patch for this soon.

This is a derived work from the research paper DolphinAttack: Inaudible Voice Commands. The credit goes to Guoming Zhang, Chen Yan, Xiaoyu Ji, Tianchen Zhang, Taimin Zhang and Wenyuan Xu from Zhejiang University.


Inaudible Voice Commands Can Hack Siri, Google Now & Alexa was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.