The Tocqueville Paradox: Why AI Progress Increases Discontent

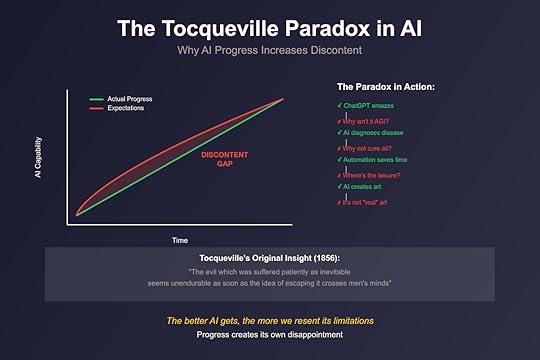

AI capabilities improve exponentially, yet user frustration grows even faster. Each breakthrough that should amaze instead disappoints. Every new model that surpasses previous limits somehow feels more limiting. This is the Tocqueville Paradox in artificial intelligence: the better things get, the more intolerable remaining problems become.

In The Old Regime and the Revolution (1856), Alexis de Tocqueville observed that the French Revolution erupted not when oppression was at its worst, but when conditions had begun to improve. As things got better, people’s expectations rose faster than reality could deliver. The same psychological dynamic now drives AI discontent: each improvement raises expectations exponentially, making remaining limitations feel unbearable.

The Original Revolutionary Insight

Tocqueville’s Observation

Tocqueville studied why revolution occurred when it did, not earlier when conditions were objectively worse. His insight: improving conditions make remaining problems intolerable. When people see change is possible, they demand all change immediately.

The paradox operates through relative rather than absolute evaluation. People don’t compare their situation to history but to imagined possibilities. Once improvement begins, anything less than perfection feels like oppression.

This creates revolutionary conditions precisely when things are getting better. The most dangerous moment for a bad system is when it begins to reform. Progress doesn’t satisfy demands; it inflates them.

Expectation Inflation

The mechanism is psychological rather than logical. Each improvement proves more is possible. If this changed, why not that? If now, why not immediately? Success breeds impatience, not satisfaction.

Expectation inflation accelerates through social comparison. Others seem to benefit more from improvements. Their gains highlight your remaining limitations. Progress for some feels like regression for others.

AI’s Expectation Explosion

The Capability Acceleration

AI capabilities improve at unprecedented speed. Models that seemed miraculous months ago now seem primitive. Tasks impossible last year are trivial today. Yet satisfaction decreases as capability increases.

Each breakthrough resets the baseline. When AI first produced coherent text, we were amazed. Now we’re frustrated that it can’t reason perfectly. When it started writing code, we celebrated. Now we’re angry that it makes mistakes. Every achievement becomes the new minimum acceptable standard.

The acceleration makes patience impossible. Why wait for next year’s model when next month’s will be better? Why accept current limitations when they’re about to be solved? The speed of progress makes accepting the current state feel like accepting defeat.

The Promise Gap

AI companies contribute to the paradox by overselling capabilities. Every model promises human-level performance. Every update claims breakthrough improvements. Reality, however impressive, disappoints against inflated promises.

The gap compounds through media amplification. Every incremental improvement gets portrayed as revolutionary. Every limitation gets framed as temporary. We’re told AI is almost perfect, making imperfection unbearable.

Marketing messages create impossible expectations. “AI will solve everything” becomes “Why hasn’t AI solved MY problem?” The promise of unlimited capability makes any limitation feel like betrayal.

The Comparison Trap

Users compare AI not to recent history but to imagined futures. Not to human capabilities but to theoretical perfection. Not to what’s possible but to what’s promised. Every comparison makes current AI feel inadequate.

Social media amplifies the trap. Others showcase AI successes while hiding failures. Their achievements highlight your struggles. Everyone else seems to have better AI, making yours feel worse.

The comparison extends to companies. If OpenAI can do this, why can’t others? If ChatGPT works there, why not here? Every successful use case makes unsuccessful ones feel like personal failures.

VTDF Analysis: Satisfaction Destruction

Value Architecture

Traditional value propositions assumed satisfaction increased with capability. More features meant happier users. Better performance meant greater satisfaction. AI breaks this assumption: increasing capability decreases satisfaction.

Value perception becomes relative rather than absolute. Users don’t evaluate what AI can do but what it can’t. Not how much it helps but how much more it should help. Value becomes defined by gaps rather than gains.

This inversion destroys traditional value metrics. User satisfaction scores decrease as capabilities increase. Complaint volumes grow with improvements. Better products create worse experiences.

Technology Stack

Every layer of the stack contributes to expectation inflation. Faster inference makes slowness intolerable. Better models make mistakes unacceptable. Smoother interfaces make friction unbearable. Technical improvements create psychological frustrations.

The stack evolution accelerates expectation inflation. Monthly model updates train users to expect constant improvement. Weekly feature releases make stability feel like stagnation. The pace of change becomes the enemy of satisfaction.

Infrastructure improvements compound the problem. When AI responds in milliseconds, seconds feel like hours. When it handles complex tasks, simple failures seem inexcusable. Every capability improvement raises the bar for every other capability.

Distribution Channels

Distribution strategies amplify the Tocqueville Paradox. Marketing emphasizes breakthrough capabilities. Sales promises transformation. Support deals with disappointment. Every touchpoint inflates expectations that reality deflates.

Channels compete on promise escalation. Each vendor must promise more than competitors. Each update must claim greater improvements. The distribution arms race creates an expectation bubble that must burst.

Customer success becomes customer disappointment management. Not helping users succeed but managing their frustration with limitations. Distribution channels become disappointment channels.

Financial Models

Financial models assume satisfaction drives retention. Happy users pay more and stay longer. But the Tocqueville Paradox inverts this: capability improvements that should increase satisfaction actually decrease it.

Pricing strategies compound the problem. Higher prices create higher expectations. Premium tiers promise premium experiences. Every price point becomes a disappointment point.

The model breaks when better products create worse experiences. Churn increases with improvements. Complaints grow with capabilities. Financial success becomes psychological failure.

Real-World Frustration

The ChatGPT Disappointment Curve

ChatGPT’s evolution perfectly illustrates the Tocqueville Paradox. Initial amazement at coherent conversation became frustration with factual errors. Excitement about creative writing became anger about repetition. Every improvement highlighted remaining limitations.

User satisfaction peaked early then declined despite continuous improvements. Not because ChatGPT got worse but because expectations rose faster than capabilities. Success created its own failure.

The pattern repeats with each update. Initial excitement about new capabilities quickly becomes frustration with their limitations. The honeymoon period shrinks with each iteration.

The Enterprise Disillusionment

Enterprises experience acute Tocqueville effects. Proofs of concept (POCs) demonstrate amazing possibilities. Pilots reveal frustrating limitations. Production deployments disappoint against inflated expectations. The journey from excitement to frustration accelerates with each stage.

IT departments face impossible expectations. If AI can do this, why not everything? If it works there, why not everywhere? Every successful use case makes unsuccessful ones seem like IT failure.

The paradox makes ROI calculation impossible. Benefits exist but satisfaction doesn’t. Value is created but appreciation isn’t. Objective success becomes subjective failure.

The Developer Rage

Developers experience extreme Tocqueville effects with AI coding assistants. Initial joy at code completion becomes rage at subtle bugs. Appreciation for boilerplate generation becomes frustration with architectural decisions. Every helpful feature makes unhelpful moments more intolerable.

The speed of coding with AI makes slowdowns unbearable. The ease of simple tasks makes complex ones feel impossible. AI assistance makes its absence feel like amputation.

Productivity increases while satisfaction decreases. Developers accomplish more but enjoy it less. The tools that should reduce frustration amplify it.

The Cascade Mechanisms

Expectation Ratchet

Expectations only ratchet upward. Each improvement becomes the new baseline. Each capability becomes the new minimum. Expectations rise irreversibly with each advancement.

The ratchet effect accelerates through memory asymmetry. We quickly forget previous limitations but permanently remember promised capabilities. Historical context disappears while future promises persist.

Competition drives the ratchet faster. Each company’s advancement raises expectations for all others. The entire industry gets judged by the best performer’s best moment.
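The ratchet can be made concrete with a toy model. The sketch below is purely illustrative and rests on assumptions introduced here, not on any data: capability doubles with each release, satisfaction is measured as capability minus expectation, and after each release expectation jumps to a fixed multiple (`inflation`) of the capability just delivered and never falls. The function name `simulate` and the `inflation` parameter are hypothetical.

```python
def simulate(capabilities, inflation=3.0):
    """Toy model of the expectation ratchet (hypothetical, illustrative only).

    Assumptions: expectation starts at the first capability level, never
    falls, and after each release is raised to `inflation` times the
    capability just delivered whenever that is higher.
    Returns a list of (capability, expectation, satisfaction) tuples.
    """
    expectation = capabilities[0]
    history = []
    for capability in capabilities:
        # Relative evaluation: satisfaction is the gap between what we get
        # and what we expected, not the absolute capability level.
        satisfaction = capability - expectation
        history.append((capability, expectation, satisfaction))
        # Ratchet: expectations only move up, overshooting the new baseline.
        expectation = max(expectation, capability * inflation)
    return history


if __name__ == "__main__":
    # Capability doubles with every release.
    for cap, exp, sat in simulate([1, 2, 4, 8]):
        print(f"capability={cap} expectation={exp} satisfaction={sat}")
```

With `inflation=3.0`, capability grows eightfold across four releases while satisfaction falls from 0 to -4. Set `inflation` below the growth rate (for example 1.5) and satisfaction rises instead, which is the point of the paradox: in this toy model, discontent comes from expectations outrunning capability, not from capability stalling.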

Social Amplification

Social dynamics amplify the Tocqueville Paradox. Online communities share frustrations more than satisfactions. Complaints get engagement. Success stories get skepticism. The social layer becomes a frustration amplifier.

Influencers contribute by showcasing edge cases. The amazing demo that doesn’t represent typical use. The perfect output from hundreds of attempts. Social media makes exceptional seem expected.

The amplification creates frustration cascades. One user’s complaint triggers others’ recognition of similar frustrations. Collective disappointment exceeds individual experience.

Innovation Fatigue

Constant improvement creates innovation fatigue. Users can’t keep up with changes. Each update requires relearning. Each improvement breaks existing workflows. Progress becomes exhausting rather than exciting.

The fatigue manifests as resistance. Users stick with older versions. They avoid new features. They resent rather than appreciate improvements. Innovation becomes the enemy of satisfaction.

Strategic Implications

For AI Developers

Manage expectations more than capabilities. Under-promise and over-deliver rather than the opposite. Set realistic boundaries rather than claiming limitless potential. Expectation management matters more than feature development.

Embrace limitations explicitly. Make constraints clear and visible. Explain what AI can’t do before showing what it can. Transparency about limitations reduces frustration more than hiding them.

Slow down visible progress. Constant updates train users to expect impossibly rapid improvement. Batch improvements into meaningful releases. Sustainable pace beats breakneck speed.

For Organizations

Set realistic timelines and goals. The Tocqueville Paradox makes aggressive AI targets self-defeating. Better to exceed modest goals than miss ambitious ones. Success requires managing psychological as well as technical factors.

Prepare for the disappointment phase. Every AI deployment will experience Tocqueville effects. Plan for the frustration that follows initial excitement. The trough of disillusionment cannot be avoided, only prepared for.

Focus on change management. The human side of AI deployment matters more than the technical side. Managing expectations, frustrations, and adaptations. Psychology trumps technology.

For Users

Recognize the paradox in yourself. Your frustration may reflect rising expectations rather than falling capabilities. Compare to history, not imagination. Perspective is the antidote to paradox.

Appreciate absolute rather than relative progress. What can you do now that was impossible before? Focus on gains rather than gaps. Gratitude counters frustration.

Adjust expectations to reality. Current AI is neither as good as promised nor as bad as it feels. Realistic expectations enable realistic satisfaction.

The Future of AI Satisfaction

The Maturity Plateau

Eventually, AI capabilities may plateau, allowing expectations to stabilize. When improvement slows, satisfaction might increase. Paradoxically, slower progress might create greater contentment.

But this assumes AI development actually slows. If capabilities continue to grow exponentially, expectations might never stabilize. We may face permanent Tocqueville conditions.

Psychological Adaptation

Humans might develop psychological adaptations to rapid capability growth. New mental models for evaluating progress. Different frameworks for satisfaction. Evolution of expectation rather than just inflation.

This requires cultural change beyond individual adaptation. Social norms around AI evaluation. Media literacy about capability claims. Collective psychological evolution, not just personal adjustment.

The Post-Expectation Era

We might transcend expectation-based evaluation entirely. Judge AI by present utility rather than future potential. Value actual assistance over promised capability. Presence over promise.

This represents a fundamental psychological shift. From future-oriented to present-focused. From comparative to absolute evaluation. A new relationship with technological progress itself.

Conclusion: The Progress Trap

The Tocqueville Paradox reveals a fundamental human truth: improvement creates discontent. The better AI gets, the more frustrated we become. The more it can do, the less tolerable we find what it still can’t do.

This isn’t irrationality but human nature. We’re wired to adapt quickly to improvements and focus on remaining problems. What amazes us today frustrates us tomorrow.

The paradox means AI progress might decrease rather than increase satisfaction. Each breakthrough makes remaining limitations more frustrating. Each success makes failure less acceptable. We’re running on a hedonic treadmill where faster running doesn’t increase happiness.

Understanding the Tocqueville Paradox is essential for navigating AI’s psychological landscape. The challenge isn’t making AI better but managing human response to AI getting better. The revolution isn’t just technological but psychological.

As AI capabilities soar, remember: your frustration doesn’t mean AI is failing. It means it’s succeeding so fast that your expectations are outrunning reality itself.

The post The Tocqueville Paradox: Why AI Progress Increases Discontent appeared first on FourWeekMBA.