World Knowledge Improves AI Apps

Applications built on top of large-scale AI models inherit the model's built-in capabilities without app developers writing any additional code. Essentially, if the AI model can do it, an application built on top of it can do it as well. To illustrate, let's look at the impact of a model's World knowledge on an app.

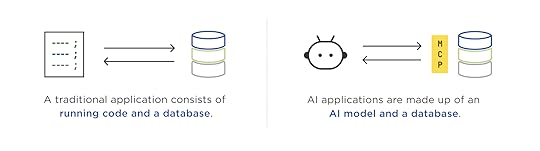

For years, software applications consisted of running code and a database. As a result, their capabilities were defined by the coded features and by what was inside the database. When the running code is replaced by a large language model (LLM), however, the information encoded in the model's weights instantly becomes part of the application's capabilities.

With AI apps, end users are no longer constrained by the code developers had the time and foresight to write. All the World knowledge (and other capabilities) in an AI model is now part of the application's logic. Since that sounds abstract, let's look at a concrete example.

I created an AI app with AgentDB by uploading a database of NBA statistics spanning 77 years and 13.6 million play-by-play records. When I add the MCP link that AgentDB generates to Anthropic's Claude, I have an application consisting of a database optimized for AI model use and an AI model (Claude) that acts as the application's brain. Here's a video tutorial on how to do this yourself.

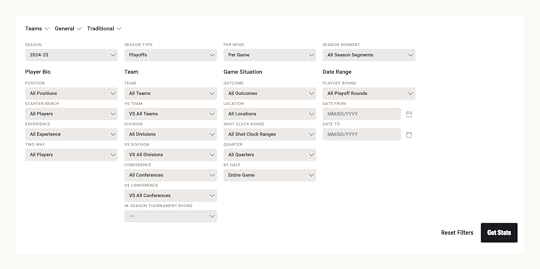

In the past, a developer would need to write code to render a user interface as the front-end to this database. That code would determine what kinds of questions people could get answers to. Usually this meant a bunch of UI input elements to search and filter games by date, team, player, etc. The NBA's stats page (below) is a great example of this kind of interface.

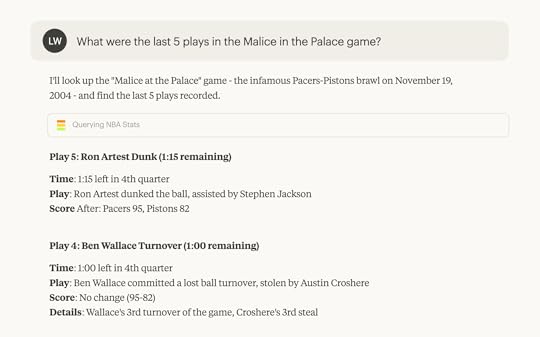

But no matter how much code developers write, they can't cover all the ways people might want to interact with information about the NBA's 77 years. For instance, a question like "What were the last 5 plays in the Malice in the Palace game?" requires either running code that can translate "Malice in the Palace" to a specific date and game, or an extra field in the database for game nicknames.

When a large language model is an application's compute, however, no extra code needs to be written. The association between Malice in the Palace and November 19, 2004 is present in an AI model's weights, and the model can translate the natural language question into a form the associated database can answer.

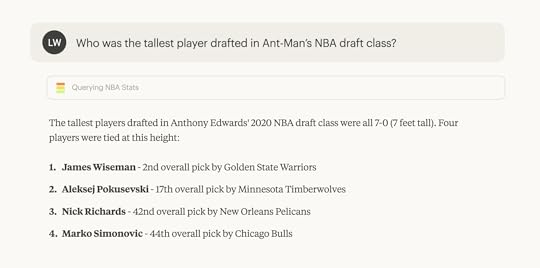
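To make that translation concrete, here is a minimal sketch of the kind of query the model might end up running once it has resolved the nickname to a date. The schema (`games`, `plays`), the column names, and the placeholder rows are all assumptions for illustration; the real AgentDB database will look different, and a real table would need a team filter too, since many games share a date.

```python
import sqlite3

# Hypothetical schema with placeholder rows (not real play-by-play data).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE games (game_id INTEGER PRIMARY KEY, game_date TEXT, home TEXT, away TEXT);
    CREATE TABLE plays (game_id INTEGER, seq INTEGER, description TEXT);
""")
conn.execute("INSERT INTO games VALUES (1, '2004-11-19', 'DET', 'IND')")
conn.executemany(
    "INSERT INTO plays VALUES (1, ?, ?)",
    [(n, f"placeholder play {n}") for n in range(1, 9)],
)


def last_plays(conn, game_date, limit=5):
    """Return the final `limit` plays of the game on `game_date`, in game order."""
    rows = conn.execute(
        """
        SELECT p.seq, p.description
        FROM plays AS p
        JOIN games AS g ON g.game_id = p.game_id
        WHERE g.game_date = ?
        ORDER BY p.seq DESC
        LIMIT ?
        """,
        (game_date, limit),
    ).fetchall()
    return rows[::-1]  # flip back to chronological order


# The step no developer had to code: the model's World knowledge
# maps "Malice in the Palace" to this date before any SQL is written.
resolved_date = "2004-11-19"
for seq, description in last_plays(conn, resolved_date):
    print(seq, description)
```

The SQL itself is ordinary. What the model contributes is the `resolved_date` value, which otherwise would have required a nickname lookup table or hand-written mapping code.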

An AI model can use its World knowledge to translate people's questions into the kind of multi-step queries needed to answer what seem like simple questions. Consider the question: "Who was the tallest player drafted in Ant-Man’s NBA draft class?" We need to figure out which player Ant-Man refers to, what year he was drafted, who else was drafted that year, get all their heights, and then compare them. Not a simple query to write by hand, but with AI acting as an application's brain... it's quick and easy.
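As a rough sketch of those steps, the query below assumes a single hypothetical `players` table with `name`, `draft_year`, and `height_cm` columns. The rows are toy placeholders, not real measurements. Ant-Man is a nickname for Anthony Edwards, and resolving that nickname is the part the model supplies from its World knowledge.

```python
import sqlite3

# Hypothetical table with toy placeholder rows (heights are not real data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE players (name TEXT, draft_year INTEGER, height_cm INTEGER)")
conn.executemany(
    "INSERT INTO players VALUES (?, ?, ?)",
    [
        ("Anthony Edwards", 2020, 193),
        ("Placeholder Center", 2020, 213),
        ("Placeholder Guard", 2020, 188),
        ("Placeholder Forward", 2019, 216),  # taller, but a different draft class
    ],
)


def tallest_in_draft_class(conn, player_name):
    """Find the tallest player drafted in the same year as `player_name`."""
    return conn.execute(
        """
        SELECT name, height_cm
        FROM players
        WHERE draft_year = (SELECT draft_year FROM players WHERE name = ?)
        ORDER BY height_cm DESC
        LIMIT 1
        """,
        (player_name,),
    ).fetchone()


# Step 1 (nickname -> player) comes from the model, not from the database.
resolved_name = "Anthony Edwards"
print(tallest_in_draft_class(conn, resolved_name))
```

The subquery covers "what year was he drafted", the `WHERE` clause covers "who else was drafted then", and the `ORDER BY ... LIMIT 1` handles the height comparison.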

World knowledge, of course, isn't the only capability built into large language models. There's multi-language support, vision (for image parsing), tool use, and more emerging. All of these are also application capabilities when you build apps on top of AI models.

Luke Wroblewski's Blog
