The AI GPU Wars

NVIDIA’s Fortress Under Siege

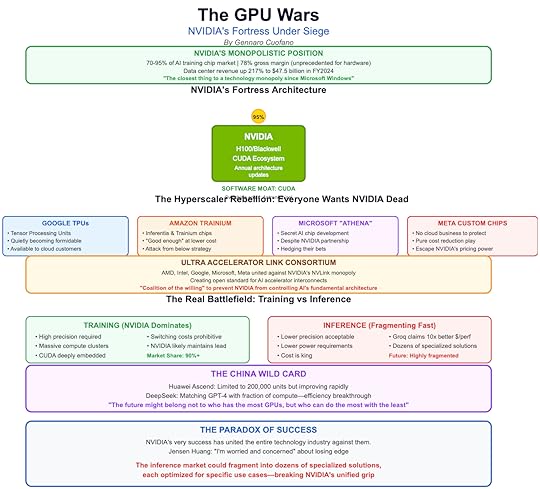

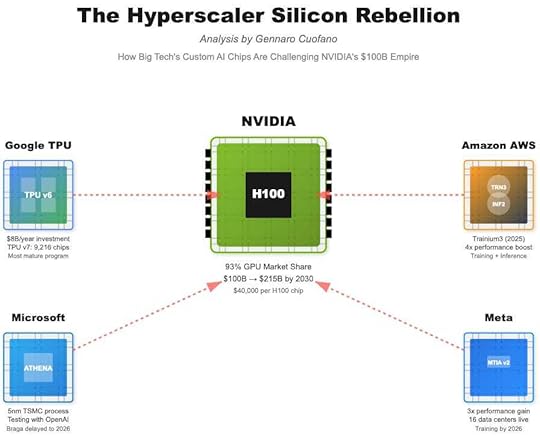

NVIDIA has built the closest thing to a technology monopoly since Microsoft’s Windows dominance, controlling an estimated 70-95% of the market for AI training chips with margins that would make luxury brands envious. Its 73-75% gross margin is virtually unprecedented for a hardware company; Intel and AMD report considerably lower margins, least of all on advanced GPUs. Paradoxically, NVIDIA’s very success has united much of the technology industry against it.

The hyperscaler rebellion represents the most serious threat to NVIDIA’s dominance. Google’s Tensor Processing Units (TPUs) have quietly become a formidable alternative, powering much of Google’s own AI workload and increasingly available to cloud customers. Amazon’s Trainium and Inferentia chips attack from below, offering “good enough” performance at dramatically lower costs. Microsoft, despite its deep partnership with NVIDIA, has developed its own AI accelerator, reportedly codenamed “Athena” during development. Even Meta, which has no cloud business to protect, is designing custom chips simply to escape NVIDIA’s pricing power.

The formation of the Ultra Accelerator Link (UALink) consortium in 2024 represents a technological “coalition of the willing” against NVIDIA’s proprietary NVLink interconnect. AMD, Intel, Google, Microsoft, Meta, and others have united to create an open standard for connecting AI accelerators. This isn’t just about creating alternatives; it’s about preventing NVIDIA from controlling the fundamental interconnect architecture that will define AI infrastructure for the next decade.

But NVIDIA isn’t standing still. Jensen Huang has committed to releasing new architectures annually instead of roughly every two years, a pace that would have been considered impossible just years ago. The new Blackwell architecture promises dramatic efficiency improvements.

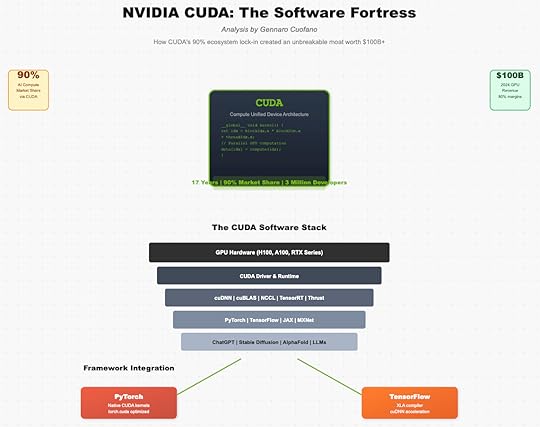

More cleverly, NVIDIA is making itself indispensable through software, not just hardware. Their CUDA ecosystem has become so deeply embedded in AI development that switching costs are astronomical.

The real battlefield is shifting from training to inference—the deployment of AI models for real-world tasks. While NVIDIA dominates training, inference presents different requirements: lower precision, lower power, lower cost. Companies like Groq claim 10x better inference performance per dollar. Cerebras offers wafer-scale chips optimized for specific inference workloads. The inference market could fragment into dozens of specialized solutions, each optimized for specific use cases.

China’s response adds another dimension to the GPU wars. Huawei’s Ascend chips, while production is currently limited to about 200,000 units annually, represent China’s determination to achieve semiconductor sovereignty.

More importantly, Chinese companies are pioneering new approaches to AI efficiency that could make raw computational power less relevant. DeepSeek’s reported ability to match GPT-4 performance with a fraction of the compute suggests that the future might belong not to whoever has the most GPUs, but to whoever can do the most with the least.

The emergence of quantum-classical hybrid computing adds a wild card to the GPU wars. While full quantum computing remains years away, quantum annealers and quantum-inspired algorithms could provide large speedups for specific AI workloads. The company that first successfully integrates quantum acceleration into AI training could render hundreds of billions of dollars in classical GPU infrastructure obsolete.

The post The AI GPU Wars appeared first on FourWeekMBA.