R. Scott Bakker's Blog, page 26

December 6, 2012

www.rscottbakker.com

Aphorism of the Day: To be oblivious is to be heroic for so long as your luck holds.


It’s supposed to be the other guy, that guy, the no-conscience shill who sees capital opportunities no matter where he turns. But no, it turns out I’m that bum. And now my name, the one I inherited from my grandfather, only to have it rescinded when that all fell through, stranding me with the second, and a prescient mother who insisted I sign the ‘R’ on everything I did, leading to innumerable sideways comments, mostly from educators (because then, as now, I was big for my age), now my name, the little crane that has plucked me from every crowd, hauled my soul up by the hair every time I have sinned, has become computer code, commercial coordinates, pinning me like a butterfly, or better yet a beetle, too ugly to be decorative, yet calling out my wares all the same. Makes me feel webby.

I’ve gone on and on about how I needed a skull for Three Pound Brain, or at the very least a toupee, something to disguise my cerebral excesses, convince that steady stream of window shoppers that pass through these lobes (generally to flee), that I can actually write a ripping yarn as well. And now I’ve gone and done it. It’s the beta version, and I’m groping for quora, because this shit is like tear gas to me. It all feels obnoxious, like the real fifth element is greed. It all feels like I’m aping the moves of those far more graceful.

Forgive the semantic origami. Funny how tones come across you, how much defense you can pack into pixels on a screen. Art, like all great adaptations, fortifies.

On a different note, next Monday Three Pound Brain will feature an awesome post by Benjamin Cain, another soul bent on exploring the intersection between pulp culture and philosophical speculation on our incredible shrinking future. Think Spinoza, World War Z, and full-frontal Futurama. According to Ben, Nietzsche forgot to shoot God in the head…

December 4, 2012

The Philosopher and the Cuckoo’s Nest

Definition of the Day - Introspection: A popular method of inserting mental heads up neural asses.


Question: How do you get a philosopher to shut up?

Answer: Pay for your pizza and tell him to get the hell off your porch.

I’ve told this joke at public speaking engagements more times than I can count, and it works: the audience cracks up every single time. It works because it turns on a near universal cultural presumption of philosophical impracticality and cognitive incompetence. This presumption, no matter how much it rankles, is pretty clearly justified. Whitehead’s famous remark that all European philosophy is “a series of footnotes to Plato” is accurate so far as we remain as stumped regarding ourselves as were the ancient Greeks. Twenty-four centuries! Keeping in mind that I happen to be one of those cognitive incompetents, I want to provide a sketch of how we theorists of the soul could have found ourselves in these straits, as well as why the entire philosophical tradition as we know it is almost certainly about to be swept away.

In a New York Times piece entitled “Don’t Blink! The Hazards of Confidence,” Daniel Kahneman writes of his time in the Psychology Branch of the Israeli Army, where he was tasked with evaluating candidates for officer training by observing them in a variety of tests designed to isolate soldiers’ leadership skills. His evaluations, as it turned out, were almost entirely useless. But what surprised him was the way knowing this seemed to have little or no impact on the confidence with which he and his fellows submitted their subsequent evaluations, time and again. He was so struck by the phenomenon that he would go on to study it as the ‘illusion of validity,’ a specific instance of the general role the availability of information seems to play in human cognition–or as he would later term it, What-You-See-Is-All-There-Is, or WYSIATI.

The idea, quite simply, is that because you don’t know what you don’t know, you tend, in many contexts, to think you know all that you need to know. As he puts it in Thinking, Fast and Slow:

An essential design feature of the associative machine is that it represents only activated ideas. Information that is not retrieved (even unconsciously) from memory might as well not exist. [Our automatic cognitive system] excels at constructing the best possible story that incorporates ideas currently activated, but it does not (cannot) allow for information it does not have. (2011, 85)

As Kahneman shows, this leads to myriad errors in reasoning, including our peculiar tendency in certain contexts to be more certain about our interpretations the less information we have available. The idea is so simple as to be platitudinal: only the information available for cognition can be cognized. Other information, as Kahneman says, “might as well not exist” for the systems involved. Human cognition, it seems, abhors a vacuum.

The problem with platitudes, however, is that they are all too often overlooked, even when, as I shall argue in this case, their consequences are spectacularly profound. In the case of informatic availability, one need only look to clinical cases of anosognosia to see the impact of what might be called domain specific informatic neglect, the neuropathological loss of specific forms of information. Given a certain, complex pattern of neural damage, many patients suffering deficits as profound as lateralized paralysis, deafness, even complete blindness, appear to be entirely unaware of the deficit. Perhaps because of the informatic bandwidth of vision, visual anosognosia, or ‘Anton’s Syndrome,’ is generally regarded as the most dramatic instance of the malady. Prigatano (2010) enumerates the essential features of the syndrome as follows:

First, the patient is completely blind secondary to cortical damage in the occipital regions of the brain. Second, these lesions are bilateral. Third, the patient is not only unaware of her blindness; she rejects any objective evidence of her blindness. Fourth, the patient offers plausible, but at times confabulatory responses to explain away any possible evidence of her failure to see (e.g., “The room is dark,” or “I don’t have my glasses, therefore how can I see?”). Fifth, the patient has an apparent lack of concern (or anosodiaphoria) over her neurological condition. (456)

Obviously, the blindness stems from the occlusion of raw visual information. The second-order ‘blindness,’ the patient’s inability to ‘see’ that they cannot see, turns, one might suppose, on the unavailability of information regarding the unavailability of visual information. At some crucial juncture, the information required to process the lack of visual information has gone missing. As Kahneman might say, since our automatic cognitive system is dedicated to the construction of ‘the best possible story’ given only the information it has, the patient confabulates, utterly convinced they can see even though they are quite blind.

Anton’s Syndrome, in other words, can be seen as a neuropathological instance of WYSIATI. And WYSIATI, conversely, can be seen as a non-neuropathological version of anosognosia. What I want to suggest is that philosophers all the way back to the ancient Greeks have in fact suffered from their own version of Anton’s Syndrome–their own, non-neuropathological version of anosognosia. Specifically, I want to argue that philosophers have been systematically deluded into thinking their intuitions regarding the soul in any of its myriad incarnations–mind, consciousness, being-in-the-world, and so on–actually provide a reliable basis for second-order claim-making. The uncanny ease with which one can swap the cognitive situation of the Anton’s patient for that of the philosopher may be no coincidence:

First, the philosopher is introspectively blind secondary to various developmental and structural constraints. Second, the philosopher is not aware of his introspective blindness, and is prone to reject objective evidence of it. Third, the philosopher offers plausible, but at times confabulatory responses to explain away evidence of his inability to introspect. And fourth, the philosopher often exhibits an apparent lack of concern for his less than ideal neurological constitution.

What philosophers call ‘introspection,’ I want to suggest, provides some combination of impoverished information, skewed information, or (what amounts to the same) information matched to cognitive systems other than those employed in deliberative cognition, without–and here’s the crucial twist–providing information to this effect. As a result, what we think we see becomes all there is to be seen, as per WYSIATI. If the informatic and cognitive limits of introspection are not available for introspection (and how could they be?), then introspection will seem, curiously, limitless, no matter how severe the actual limits may be.

Now the stakes of this claim are so far-reaching that I’m sure it will have to seem preposterous to anyone with the slightest sympathy for philosophers and their cognitive plight. Accusing philosophers of suffering introspective anosognosia is basically accusing them of suffering a cognitive disability (as opposed to mere incompetence). So, in the interests of making my claim somewhat more palatable, I will do what philosophers typically do when they get into trouble: offer an analogy.

The lowly cuckoo, I think, provides an effective, if peculiar, way to understand this claim. Cuckoos are ‘obligate brood parasites,’ which is to say, they exclusively lay their eggs in the nests of other birds, relying on them to raise their chick (who generally kills the host bird’s own offspring) to reproductive age. The entire species, in other words, relies on exploiting the cognitive limitations of birds like the reed warbler. They rely on the inability of the unwitting host to discriminate between the cuckoo’s offspring and their own offspring. From a reed warbler’s standpoint, the cuckoo chick just is its own chick. Lacking any ‘chick imposter detection device,’ it simply executes its chick rearing program utterly oblivious to the fact that it is perpetuating another species’ genes. The fact that it does lack such a device should come as no surprise: so long as the relative number of reed warblers thus duped remains small enough, there’s no evolutionary pressure to warrant the development of one.

What I’m basically saying here is that humans lack a corresponding ‘imposter detection device’ when it comes to introspection. There is no doubt that we developed the capacity to introspect to discharge any number of adaptive behaviours. But there is also no doubt that ‘philosophical reflection on the nature of the soul’ was not one of those adaptive behaviours. This means that it is entirely possible that our introspective capacity is capable of discharging its original adaptive function while duping ‘philosophical reflection’ through and through. And this possibility, I hope to show, puts more than a little heat on the traditional philosopher.

‘Metacognition’ refers to our ability to know our knowledge and our skills, or “cognition about cognitive phenomena,” as Flavell puts it. One can imagine that the ability of an organism to model certain details of its own neural functions and thus treat itself as another environmental problem requiring solution would provide any number of evolutionary benefits. It pays to assess and revise our approaches to problems, to ask what it is we’re doing wrong. It likewise pays to ‘watch what we say’ in any number of social contexts. (I’m sure everyone has that one friend or family member who seems to lack any kind of self-censor.) It pays to be mindful of our moods. It pays to be mindful of our actions, particularly when trying to learn some new skill.

The issue here isn’t whether we possess the information access or the cognitive resources required to do these things: obviously we do. The question is whether the information and cognitive resources required to discharge these metacognitive functions come remotely close to providing us with what we need to answer theoretical questions regarding mind, consciousness, or being-in-the-world.

This is where the shadow cast by the mere possibility of introspective anosognosia becomes long indeed. Why? Because it demonstrates the utter insufficiency of our intuition of introspective sufficiency. It demonstrates that what we conceptualize as ‘mind’ or ‘consciousness’ or ‘being-in-the-world’ could very well be a ‘theoretical cuckoo,’ even if the information it accesses is ‘warbler enough’ for the type of metacognitive practices described above. Is a theoretically accurate conception of ‘consciousness’ required to assess and revise our approaches to problems, to self-censor, to track or communicate our moods, to learn some new skill?

Not at all. In fact, for all we know, the grossest of distortions will do.

So how might we be able to determine whether the consciousness we think we introspect is a theoretical cuckoo as opposed to a theoretical warbler? Since relying on introspection simply begs the question, we have to turn to indirect evidence. We might consider, for instance, the typical symptoms of insufficient information or cognitive misapplication. Certainly the perennial confusion, conundrum, and intractable debate that characterize traditional philosophical speculation on the soul suggest that something is missing. You have to admit the myriad explananda of philosophical reflection on the soul smack more than a little of Rorschach blots: everybody sees something different–astoundingly so, in some cases. And the few experiential staples that command a reasonable consensus, like intentionality or nowness, continue to resist analysis, let alone naturalization. One need only ask, What would the abject failure of transcendental philosophy look like? A different kind of perennial confusion, conundrum, and intractable debate? Sounds pretty fishy.

In other words, it’s painfully obvious that something has gone wrong. And yet, like the Anton’s patient, the philosopher insists they can still see! “What of the a priori?” they cry. “What of conditions of possibility?” Shrug. A kind of low-dimensional projection, neural interactions minus time and space? But then that’s the point: Who knows?

Meanwhile it seems very clear that something is rotten. The audience’s laughter is too canny to be merely ignorant. If you’re a philosopher, you feel it, I suspect. Somehow, somewhere… something…

But the truly decisive fact is that the spectre of introspective anosognosia need only be plausible to relieve traditional philosophy of its transcendental ambitions. This particular skeptical ‘How do you know?’, unlike those found in the tradition, is not a product of the philosopher’s discursive domain. It’s an empirical question. We have been relegated to the epistemological lobby. Only cognitive neuroscience can tell us whether the soul we think we see is a cuckoo or not.

For better or worse, this happens to be the time we live in. Post-transcendental. The empirical quiet before the posthuman storm.

In retrospect, it will seem obvious. It was only a matter of time before they hung us from hooks with everything else in the packing plant.

Fuck it. The pizza tastes just as good, either way.

November 30, 2012

Truth, Evicted: Notes Toward Naturalizing the ‘View from Nowhere’

Aphorism of the Day: In the graphic novel of life, Truth denotes those panels bigger than the page.


In his latest book, Constructing the World, David Chalmers begins by invoking the famous ‘Laplacean intellect’ for whom the future and the past “would be present before its eyes” because it could access all the facts pertaining to structure and function in a deterministic universe. In order to pluck ‘Laplace’s demon’ from all the damning criticism it has received regarding quantum indeterminacy and subjective facts, he provides it with the informatic access it needs to overcome these problems. What Chalmers is after is something he calls ‘scrutability,’ the notion that, in principle, there exists a compact class of truths from which all truths can be determined given a sufficiently powerful intellect.

In the actual world, we may suppose, one truth is that there are no Laplacean demons. But no Laplacean demon could know that there are no Laplacean demons. To avoid this problem, we could require the demon to know all the truths about its modified world rather than the actual world. But now the demon has to know about itself and a number of paradoxes threaten. There are paradoxes of complexity: to know the whole universe, the demon’s mind needs to be as complex as the whole universe, even though it is just one part of the universe. There are paradoxes of prediction: the demon will be able to predict its own actions and then try to act contrary to the prediction. And there are paradoxes of knowability: if there is any truth q that the demon never comes to know, perhaps because it never entertains q, then it seems the demon could never know the true proposition that q is a truth that it does not know.

To avoid these paradoxes, we can think of the demon as lying outside the world it is trying to know. (xv)

Constructing the World is raised upon the notion that ‘scrutability’–the thesis that one can derive all truths from the proper set of partial truths, given the proper inferential resources–can provide hitherto unappreciated ways and means to approach a number of issues in philosophy. My concern, however, pretty much begins and ends with this single quote, this tale of truth evicted. Why? Because of the beautiful way it illustrates how the view from nowhere has to be, quite literally, from nowhere.

This is my hunch. I think that what we call ‘truth’ is simply a heuristic, a way for a neural system possessing certain, structurally enforced information access and cognitive processing constraints to conserve resources by exploiting those limitations. Truth, I want to suggest, is a product of informatic neglect, a result of the insuperable difficulty human brains have cognizing themselves as human brains, which is to say, as another causal channel in their causal environments.

Now all of this is wildly speculative, but I submit that the parallels are not simply interesting, but striking, enough to warrant investigation–no matter what one thinks of Blind Brain Theory. Who knows? It could lead to the naturalization of truth.

Neural systems are primarily environmental intervention machines, which is to say, the bulk of their resources are dedicated to inserting themselves in effective causal relationships with their environment. This requires them to be predictive machines, to somehow isolate causal regularities out of the booming, buzzing confusion of their environments. And this puts them in a pretty little pickle. Why? Because sensory effects are all they have to go on. They literally have to work backward from regularities in sensory input to regularities in their environment.

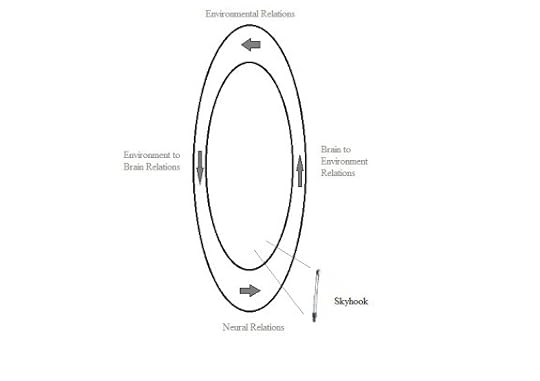

So let’s draw a distinction between two causal axes, the first, which we will call medial, pertaining to sensory and neural relations of cause and effect, the second, which we will call lateral, pertaining to environmental relations of cause and effect.

Depicted this way, the problem can be characterized as one of extracting lateral regularities (predictive power) from medial streams of information. With this distinction it’s easy to see that any system that overcomes this problem will suffer what might be called ‘global medial neglect.’ The point of the system is to literally allow the lateral regularities in its environment to drive its behavioural outputs in efficacious ways. The idea, in other words, is to ‘bind’ medial causal regularities to lateral causal regularities in a way that allows the system to predict and selectively ‘bind’ lateral causal regularities in its environment via behaviour. Neural systems are designed to be ‘environmentally orthogonal,’ to be the kind of parasitic information economies they need to be to effectively parasitize their environment. ‘Cognition,’ on this model, simply means ‘behaviourally effective medial enslavement.’ Environmental knowledge is environmental power.

So, to behaviourally command environmental systems, a neural system has to be perceptually commanded by those systems. The ‘orthogonal relationship’ between the neural and the environmental systems refers to the way the machinery of the former is dedicated to the predictive recapitulation of the latter via its ambient sensory effects (such as the reflection of light).

So far so good. We have medial neglect: the way the orthogonal relation between the neural and the environmental necessitates the blindness of the neural to the neural. Since the resources of the neural system are dedicated to modelling the environmental system, the neural system becomes the one part of its environment that it cannot model–at least not easily.

We also have what might be called encapsulation: the way information unavailable to the neural system cannot impact its processing. This means the neural system, at all turns and at all times, is bound by what might be called an ‘only game in town effect.’ At any given time, the information it has to go on is all the information it has to go on.

Now let’s define a ‘perspective’ as the sum of lateral information available to an environmentally situated neural system. This definition allows us to conceptualize perspectives in privative terms. Though a product of a neural system, a perspective would have no access to the neural intricacies of that system. A perspective, in other words, would have no real access to its own causal foundations. And this is just to say that a perspective on an environment would lack basic information pertaining to its natural relation to that environment.

Now a perspective, of course, is simply a view from somewhere. Given the definition above, however, we can see that it is a view from somewhere that has, for very basic structural reasons, difficulty with the ‘from somewhere.’ In particular, medial neglect means that the neural system will be unable to situate its own functions in its environmental models. This means, curiously, that perspective, from the perspective of itself, will appear to both belong and not belong to its environments.

Another way to put this is to say that perspective, from the perspective of itself, will appear to be both a view from somewhere and a view from nowhere. The amazing thing about this account is that it appears to be from nowhere for the very same reason paradox pries the Laplacean demon out of somewhere into nowhere. Mechanism cannot admit a computational system that does not suffer medial neglect. The Laplacean demon, to know itself, would have to know its knowing, which means it has another knowing to know, which means it has another knowing to know, and so on, and so on. The only way to escape this regress is to posit some kind of unknown knowing. This is why the Laplacean demon, in order to know the whole universe, has to stand outside the universe–or nowhere. It cannot itself be known.

Let’s unpack this with an example.

A ‘view from somewhere,’ you could say, is a situated information channel, or in other words, an information channel possessing any number of access and processing constraints. So an ancient Sumerian astrologer with Alzheimer’s, for instance, isn’t going to know much about the moon. A ‘view from nowhere,’ conversely, is a channel possessing no access or processing constraints. Laplace’s demon can tell you everything you could possibly want about the moon.

Here’s the thing. It’s not that we’re believing machines, it’s that we’re machines. Even though the ancient Sumerian astrologer with Alzheimer’s doesn’t know anything about the moon, don’t try telling him that. Information is mechanical, which means that so long as it’s effective, it’s canonical. Given medial neglect, our Sumerian friend will have difficulty situating his cognitive perspective in its time and place. Certainly he will admit that he lives at a certain time and place, that he is situated, but his cognitive perspective will seem to stand outside that time and place, especially since what he knows about the moon is entirely independent of where or when he happens to find himself.

He will confuse himself, in other words, for a Laplacean demon.

There’s the stuff you have to peer around, the flags that spur you to sample various positions and relations relative to said stuff. And there’s the stuff you’ve seen enough of, the stuff without flags. The problem is, without the flags, the ‘exploratory’ systems shut down, and the information becomes detached from contextual cues. ‘Somewhere’ fades away, leaving the information–a host of canonized heuristics–hanging in the nowhere of neglect. This is our baseline, all the information we have when initiating action. Thanks to medial neglect, this baseline almost entirely eludes our ability to make it explicit.

This is where a cornucopia of natural explanatory possibilities suggest themselves.

1) This seems to explain, not so much why we each have our own ‘truth’ (the brute difference between our brains explains that), but why we have such difficulty recognizing that our disagreements merely pertain to those brute differences. This explains, in other words, why universalism is the assumptive default, and the ability to relativize our beliefs is a hard won cognitive achievement.

2) This seems to explain why implicit assumptions invariably trump explicit beliefs in the absence of conscious deliberation–why we’re more prone to act as we assume rather than act as we say.

3) Related to (1), this seems to explain why we suffer bias blindspots like those characteristic of asymmetric insight, why the cognitive limitations of the other tend to be glaring to the extent ours are invisible.

4) This seems to explain why ‘truth’ goes without saying, and why falsehood always takes the form of truth with a flag.

5) Perhaps this is the reason for the intuitive attraction of deflationary theories of truth: it poses in logical form the natural fact that information is sufficient unless flagged otherwise.

6) This seems to explain why contextualizing claims has the effect of relativizing them, which is to say, depleting them of ‘truth.’ Operators like ‘standpoint,’ ‘vantage,’ ‘point-of-view,’ intuitively impose any number of informatic access and processing constraints on what was, previously, a virtual ‘view from nowhere.’

7) Perhaps this is the reason propositional attitudes short-circuit compositionality.

November 23, 2012

The Posthuman as Evolution 3.0

Aphorism of the Day: Knowing that you know that I know that you know, we should perhaps, you know, spark a doob and like, call the whole thing off.


So for years now I’ve had this pet way of understanding evolution in terms of effect feedback (EF) mechanisms, structures whose functions produce effects that alter the original structure. Morphological effect feedback mechanisms started the show: DNA and reproductive mutation (and other mechanisms) allowed adaptive, informatic reorganization according to the environmental effectiveness of various morphological outputs. Life’s great invention, as they say, was death.

This original EF process was slow, and adaptive reorganization was transgenerational. At a certain point, however, morphological outputs became sophisticated enough to enable a secondary, intragenerational EF process, what might be called behavioural effect feedback. At this level, the central nervous system, rather than DNA, was the site of adaptive reorganization, producing behavioural outputs that are selected or extinguished according to their effectiveness in situ.

For whatever reason, I decided to plug the notion of the posthuman into this framework the other day. The idea was that the evolution from Morphological EF to Behavioural EF follows a predictable course, one that, given the proper analysis, could possibly tell us what to expect from the posthuman. The question I had in my head when I began this was whether we were groping our way to some entirely new EF platform, something that could effect adaptive, informatic reorganization beyond morphology and behaviour.

First, consider some of the key differences between the processes:

Morphological EF is transgenerational, whereas Behavioural EF is circumstantial – as I mentioned above. Adaptive informatic reorganization is therefore periodic and inflexible in the former case, and relatively continuous and flexible in the latter. In other words, morphology is circumstantially static, while behaviour is circumstantially plastic.

Morphological EF operates as a fundamental physiological generative (in the case of the brain) and performative (in the case of the body) constraint on Behavioural EF. Our brains limit the behaviours we can conceive, and our bodies limit the behaviours we can perform.

Morphologies and their generators (genetic codes) are functionally inseparable, while behaviours and their generators (brains) are functionally separable. Behaviours are disposable.

Defined in these terms, the posthuman is simply the point where neural adaptive reorganization generates behaviours (in this case, tool-making) such that morphological EF ceases to be a periodic and inflexible physiological generative and performative constraint on behavioural EF. Put differently, the posthuman is the point where morphology becomes circumstantially plastic. You could say tools, which allow us to circumvent morphological constraints on behaviour, have already accomplished this. Spades make for deeper ditches. Writing makes for bottomless memories. But tool-use is clearly a transitional step, ways to accessorize a morphology that itself remains circumstantially static. The posthuman is the point where we put our body on the lathe (with the rest of our tools).

In a strange, teleonomic sense, you could say that the process is one of effect feedback bootstrapping, where behaviour revolutionizes morphology, which revolutionizes behaviour, which revolutionizes morphology, and so on. We are not so much witnessing the collapse of morphology into behaviour as the acceleration of the circuit between the two approaching some kind of asymptotic limit that we cannot imagine. What happens when the mouth of behaviour, after digesting the tail and spine of morphology, finally consumes the head?

What’s at stake, in other words, is nothing other than the fundamental EF structure of life itself. It makes my head spin, trying to fathom what might arise in its place.

Some more crazy thoughts falling out of this:

1) The posthuman is clearly an evolutionary event. We just need to switch to the register of information to see this. We’re accustomed to being told that dramatic evolutionary changes outrun our human frame of reference, which is just another way of saying that we generally think of evolution as something that doesn’t touch us. This is why, I think, I’ve been thinking the posthuman by analogy to the Enlightenment, which is to say, as primarily a cultural event distinguished by a certain breakdown in material constraints. No longer. Now I see it as an evolutionary event literally on par with the development of Morphological and Behavioural EF. As perhaps I should have all along, given that posthuman enthusiasts like Kurzweil go on and on about the death of death, which is to say, the obsolescence of a fundamental evolutionary invention.

2) The posthuman is not a human event. We may be the thin edge of the wedge, but every great transformation in evolution drags the whole biosphere in tow. The posthuman is arguably more profound than the development of multicellular life.

3) The posthuman, therefore, need not directly involve us. AI could be the primary vehicle.

4) Calling our descendants ‘transhuman’ makes even less sense than calling birds ‘transdinosaurs.’

5) It reveals posthuman optimism for the wishful thinking it is. If this transformation doesn’t warrant existential alarm, what on earth does?

November 20, 2012

Paradox as Cognitive Illusion

Aphorism of the Day: A blog knows no greater enemy than Call of Duty. A blogger, no greater friend.

.

Paradoxes. I’ve been fascinated by them since my contradictory youth.

A paradox is typically defined as a conjunction of two true, yet logically incompatible statements – which those of you with a smattering of ancient Greek will recognize in the etymology of the word (para, ‘beside,’ doxa, ‘holdings’). So in a sense, it would be more accurate to say that I’m fascinated by paradoxicality, that sense of ethereal torsion that you get whenever baffled by self-reference, as in the classic verbalization of Russell’s Set Paradox,

The barber in town shaves all those, and only those, who don’t shave themselves. Does the barber shave himself?

Or the grandaddy of them all, the Liar’s Paradox,

This sentence is false.

Pondering these while doing my philosophy PhD at Vanderbilt led me to posit something I called ‘performance-reference asymmetry,’ the strange way referring to the performance of the self-same performance seemed to cramp sense, whether the resulting formulation was paradoxical or not. As in, for instance,

This sentence is true.

This led me to the notion that paradoxes were, properly speaking, a subset of the kinds of problems generated by self-reference more generally. Now logicians and linguists like to argue away paradoxes by appeal to some interpretation of the ‘proper use’ of the terms you find in statements like the above. ‘This sentence is true,’ plainly abuses the indexical function of ‘this,’ as well as the veridical function of ‘true,’ creating a little verbal golem that, you could argue, merely lurches in the semblance of semantic life. But I’ve never been interested in the legalities of self-reference or paradox so much as the implications. The important fact, it seems to me, is that self-reference (and therefore paradox) is a defining characteristic of human life. Whatever else might distinguish us from our mammalian kin, we are the beasts that endlessly refer to the performance of our referring…

Which is to say, continually violate what seems to be a powerful bound of intelligibility.

Now I know that oh-so-many see this as an occasion for self-referential back-slapping, an example of ‘human transcendence’ or whatever. For many, the term ‘aporia’ (which means ‘difficult passage’ in ancient Greek) is a greased pipeline delivering all kinds of super-rational goodies. I’m more interested in the difficulty part. What is it about self-reference that is so damn difficult? Why should referring to the performance of our referring exhibit such peculiar effects?

Now if we were machines, we simply wouldn’t have this problem. It seems to be a brute fact of nature that an information processing mechanism cannot model its modelling as it models. Why? Simply because its resources are engaged. It can model its modelling (at the expense of fidelity) after it has modelled something else. But only after, never as.

Thus, thanks to the irreflexivity of nature, the closest a machine can come to a paradox is a loop. Well, actually, not even that, at least to the extent that ‘loops’ presuppose some kind of circularity. An information processing mechanism can only model the performance of its modelling subsequent to its modelling, which is just to say the circle is never closed, thanks to the crowbar of temporality. So rather, what we have looks more like a spiral than a loop.

Machines can only ‘refer’ to their past states simply because they need their present states to do the ‘referring.’

Can you see the creepy parallel building? Here we have all these ancient difficulties referring to the performance of our referring, and information processing machines, meanwhile, are out-and-out incapable of modelling the performance of their modelling as they model. Could these be related? Perhaps our difficulty stems from the fact that we are actually trying to do something that is, when all is said and done, mechanically impossible.

But as I said above, one of the things that distinguishes us humans from animals is our extravagant capacity for self-reference. The implicit assumption was that this is also what distinguishes us from machines.

But recall what I said above: information processing machines can only model their modelling – at the expense of fidelity – after they have modelled something else. Any post hoc models an information processing machine generates of its modelling will necessarily be both granular and incomplete, granular because the mechanical complexity required to model its modelling necessarily outruns the complexity of the model, and incomplete because ‘omniscient access’ to information pertaining to its structures and functions is impossible.

Now, of course, the life sciences tell us that the mental turns on the biomechanical – that we are machines, in effect. The reason we need the life sciences to tell us this is that the mental appears to be anything but biomechanical – which is to say, anything but irreflexive. The mental, in other words, would seem to be radically granular and incomplete. This raises the troubling but provocative possibility that our ‘difficulty with self-reference’ is simply the most our stymied cognitive systems can make of the mechanical impossibility of modelling our modelling simultaneous to our modelling.

Like any other mechanism, the brain can only model its past states, and only in a radically granular and incomplete manner, no less. Because it can only cognize itself after the fact, it can never cognize itself as it is, and so cannot cognize the interval between. In other words, even though it can model time (and so easily cognize the mechanicity of other brains), it cannot model the time of modelling, and so has to remain utterly oblivious to its own irreflexivity.

It perceives a false reflexivity, and so is afflicted by a welter of cognitive illusions, enough to make consciousness a near magical thing.

Structurally enforced myopia, simple informatic neglect, crushes the mechanical spiral that decompresses paradoxical self-reference flat. Put differently, what I called ‘paradox in the living sense’ above arises because a brain shaped like this:

which is to say, an irreflexive mechanism that spirals through temporally distinct states, can only access and model this,

from the standpoint of itself.

November 13, 2012

Hole for a Soul

Aphorism of the Day: The philosophy of mind possesses only one honest position: perplexity… or possibly, ‘hands-against-the-wall.’

Just a note: Peter Hankins at Conscious Entities does a far better job at explaining the Blind Brain Theory than I’ve been able to manage. Conscious Entities has become something of an institution over the years, primarily because of the care, honesty, and brilliance Peter puts into his various readings and considerations. I’ve been following the blog religiously for some time now, and I’ve actually come to see it as, well, almost Augustinian, as a scientifically and philosophically informed dialogue between an honestly perplexed man and the global conversation on the nature of his soul. For someone like me, who seems to be forever running and tripping after the next idea, it’s always a reminder to take a deep breath, and remember the mystery. An ‘attitude check,’ you might say. Some of us have to work for our epistemic humility - and pretty damn hard in my case.

November 10, 2012

Meathooks: Dennett and the Death of Meaning

Aphorism of the Day: God is myopia, personality mapped across the illusion of the a priori.

.

In Darwin’s Dangerous Idea, Daniel Dennett attempts to show how Darwinism possesses the explanatory resources “to unite and explain everything in one magnificent vision.” To assist him, he introduces the metaphors of the ‘crane’ and the ‘skyhook’ as a general means of understanding the Darwinian cognitive mode and that belonging to its traditional antagonist:

Let us understand that a skyhook is a “mind-first” force or power or process, an exception to the principle that all design, and apparent design, is ultimately the result of mindless, motiveless mechanicity. A crane, in contrast, is a subprocess or a special feature of a design process that can be demonstrated to permit the local speeding up of the basic, slow process of natural selection, and can be demonstrated to be itself the predictable (or retrospectively explicable) product of the basic process. Darwin’s Dangerous Idea, 76

The important thing to note in this passage is that Dennett is actually trying to find some middle-ground, here, between what might be called the ‘top-down’ intuitions, which suggest some kind of essential break between meaning and nature, and ‘bottom-up’ intuitions, which seem to suggest there is no such thing as meaning at all. What Dennett attempts to argue is that the incommensurability of these families of intuitions is apparent only, that one only needs to see the boom, the gantry, the cab, and the tracks, to understand how skyhooks are in reality cranes, the products of Darwinian evolution through and through.

The arch-skyhook in the evolutionary story, of course, is design. What Dennett wants to argue is that the problem has nothing to do with the concept of design per se, but rather with a certain way of understanding it. Design is what Dennett calls a ‘Good Trick,’ a way of cognizing the world without delving into its intricacies, a powerful heuristic selected precisely because it is so effective. On Dennett’s account, then, design really looks like this:

And only apparently looks like this:

Design, in other words, is not the problem–design is a crane, something easily explicable in natural terms. The problem, rather, lies in our skyhook conception of design. This is a common strategy of Dennett’s. Even though he’s commonly accused of eliminativism (primarily for his rejection of ‘original intentionality’), a fair amount of his output is devoted to apologizing for the intentional status quo, and Darwin’s Dangerous Idea is filled with some of his most compelling arguments to this effect.

Now I actually think the situation is nowhere near so straightforward as Dennett seems to think. I also believe Dennett’s ‘redefinitional strategy,’ where we hang onto our ‘folk’ terms and simply redefine them in light of incoming scientific knowledge, is more than a little tendentious. But to see this, we need to understand why it is these metaphors of crane and skyhook capture as much of the issue of meaning and nature as they do. We need to take a closer look.

Darwin’s great insight, you could say, was simply to see the crane, to grasp the great, hidden mechanism that explains us all. As Dennett points out, if you find a ticking watch while walking in the woods, the most natural thing in the world is to assume that you’ve discovered an intentional artifact, a product of ‘intelligent design.’ Darwin’s world-historical insight was to see how natural processes lacking motive, intelligence, or foresight could accomplish the same thing.

But what made this insight so extraordinary? Why was the rest of the crane so difficult to see? Why, in other words, did it take a Darwin to show us something that, in hindsight at least, should have been so very obvious?

Perspective is the most obvious, most intuitive answer. We couldn’t see because we were in no position to see. We humans are ephemeral creatures, with imaginations that can be beggared by mere centuries, let alone the vast, epochal processes that created us. Given our frame of informatic reference, the universe is an engine that idles so low as to seem cold and dead–obviously so. In a sense, Darwin was asking his peers to believe, or at least consider, a rather preposterous thing: that their morphology only seemed fixed, that when viewed on the appropriate scale, it became wax, something that sloshed and spilled into environmental moulds.

A skyhook, on this interpretation, is simply what cranes look like in the fog of human ignorance, an artifact of myopia–blindness. Lacking information pertaining to our natural origins (and what is more, lacking information regarding that lack), we resorted to those intuitions that seemed most immediate, found ways, as we are prone to do, to spin virtue and flattery out of our ignorance. Waste not, want not.

All this should be clear enough, I think. As ‘brights’ we have an ingrained animus against the beliefs of our outgroup competitors. ‘Intelligent design,’ in our circles at least, is what psychologists call an ‘identity claim,’ a way to sort our fellows on perceived gradients of cognitive authority. As such, it’s very easy to redefine, as far as intentional concepts go. Contamination is contamination, terminological or no. And so we have grown used to using the intuitive, which is to say, skyhook, concept of design ‘under erasure,’ as continental philosophers might say–as a mere façon de parler.

But I fear the situation is nowhere near so easy, that when we take a close look at the ‘skyhook’ structure of ‘design,’ when we take care to elucidate its informatic structure as a kind of perspectival artifact, we have good reason to be uncomfortable–very uncomfortable. Trading in our intuitive concept of design for a scientifically informed one, as Dennett recommends, actually delivers us to a potentially catastrophic implicature, one that only seems innocuous for the very reason our ancestors thought ‘design’ so obvious and innocuous: ignorance and informatic neglect.

On Dennett’s account, design is a kind of ‘stance’–literally, a cognitive perspective–a computationally parsimonious way of making sense of things. He has no problem with relying on intentional concepts because, as we have seen, he thinks them reliable, at least enough for his purposes. For my part, I prefer to eschew ‘stances’ and the like and talk exclusively in terms of heuristics. Why? For one, heuristics are entirely compatible with the mechanistic approach of the life sciences–unlike stances. As such, they do not share the liabilities of intentional concepts, which are much more prone to be applied out of school, and so carry an increased risk of generating conceptual confusion. Moreover, by skirting intentionality, heuristic talk obviates the threat of circularity. The holy grail of cognitive science, after all, is to find some natural (which is to say, nonintentional) way to explain intentionality. But most importantly, heuristics, unlike stances, make explicit the profound role played by informatic neglect. Heuristics are heuristics (as opposed to optimization devices) by virtue of the way they systematically ignore various kinds of information. And this, as we shall see, makes all the difference in the world.

Recall the question of why we needed Darwin to show us the crane of evolution. The crane was so hard to see, I suggested, because of our limited informatic frame of reference–our myopic perspective. So then why did we assume design was the appropriate model? Why, in the absence of information pertaining to natural selection, should design become the default explanation of our biological origins as opposed to, say, ‘spontaneity’? When On the Origin of Species was published in 1859, for instance, many naturalists actually accepted some notion of purposive evolution; it was natural selection they found offensive, the mindlessness of biological origins. One can cite many contributing factors in answering this question, of course, but looming large over all of them is the fact that design is a natural heuristic, one of many specialized cognitive tools developed by our oversexed, undernourished ancestors.

By rendering the role of informatic neglect implicit, Dennett’s approach equivocates between ‘circumstantial’ and ‘structural’ ignorance, or in other words, between the mere inability to see and blindness proper. Some skyhooks we can dissolve with the accumulation of information. Others we cannot. This is why merely seeing the crane of evolution is not enough, why we must also put the skyhook of intuitive design on notice, quarantine it: we may be born in ignorance of evolution, but we die with the informatic neglect constitutive of design.

Our ignorance of evolution was never a simple matter of ignorance, it was also a matter of human nature, an entrenched mode of understanding, one incompatible with the facts of Darwinian evolution. Design, it seemed, was obviously true, either outright or upon the merest reflection. We couldn’t see the crane of evolution, not simply because we were in no position to see (given our ephemeral nature), but also because we were in position to see something else, namely, the skyhook of design. Think about the two photos I provided above, the way the latter, the skyhook, was obviously an obfuscation of the former, the crane, not merely because you had the original photo to reference, but because you could see that something had been covered over–because you had access, in other words, to information pertaining to the lack of information. The first photo of the crane strikes us as complete, as informatically sufficient. The second photo of the skyhook, however, strikes us as obviously incomplete.

We couldn’t see the crane of evolution, in other words, not just because we were in position to see something else, the skyhook of design, but because we were in position to see something else and nothing else. The second photo, in other words, should have looked more like this:

Enter the Blind Brain Theory. BBT analyzes problems pertaining to intentionality and consciousness in terms of informatic availability and cognitive applicability, in terms of what information we can reasonably expect conscious deliberation to access, and the kinds of heuristic limitations we can reasonably expect it to face. Two of the most important concepts arising from this analysis are apparent sufficiency and asymptotic limitation. Since differentiation is always a matter of more information, informatic sufficiency is always the default. We always need to know more, you could say, to know that we know less. This is why intentionality and consciousness, on the BBT account, confront philosophy and science with so many apparent conundrums: what we think we see when we pause to reflect is limned and fissured by numerous varieties of informatic neglect, deficits we cannot intuit. Thus asymptosis and the corresponding illusion of informatic sufficiency, the default sense that we have all the information we need simply because we lack information pertaining to the limits of that information.

This is where I think all those years I spent reading continental philosophy have served me in good stead. This is also where those without any background in continental thought generally begin squinting and rolling their eyes. But the phenomenon is literally one we encounter every day–every waking moment in fact (although this would require a separate post to explain). In epistemological terms, it refers to ‘unknown-unknowns,’ or unk-unks as they are called in engineering. In fact, we encountered its cognitive dynamics just above when puzzling through the question of why natural selection, which seems so obvious to us in hindsight, could count as such a revelation prior to 1859. Natural selection, quite simply, was an unknown unknown. Lacking the least information regarding the crane, in other words, meant that design seemed the only option, the great big ‘it’s-gotta-be’ of early nineteenth century biology.

In a sense, all BBT does is import this cognitive dynamic–call it, the ‘Only-game-in-town Effect’–into human cognition and consciousness proper. In continental philosophy you find this dynamic conceptualized in a variety of ways, as ‘presence’ or ‘identity thinking,’ for example, in its positive incarnation (sufficiency), or as ‘différance’ or ‘alterity’ in its negative (neglect). But as I say, we witness it everywhere in our collective cognitive endeavours. All you need do is think of the way the accumulation of alternatives has the effect of progressively weakening novel interpretations, such as Kant’s, say, in philosophy. Kant, who was by no means a stupid man, could actually believe in the power of transcendental deduction to deliver synthetic a priori truths simply because he was the first. Its interpretative nature only became evident as the variants, such as Fichte’s, began piling up. Or consider the way contextualizing claims, giving them speakers and histories and motives and so on, has the strange effect of relativizing them, somehow robbing them of veridical force. Back in my teaching days, I would illustrate the power of unk-unk via a series of recontextualizations. I would give the example of a young man stabbing an old man, and ask my students if it’s a crime. “Yes,” they would cry. “What could be more obvious!” Then I would start stacking contexts, such as a surrounding mob of other men stabbing one another, then a giant arena filled with screaming spectators watching it all, and so on.

The Only-game-in-town Effect (or the Invisibility of Ignorance), according to BBT, plays an even more profound role within us than it does between us. Conscious experience and cognition as we intuit them, it argues, are profoundly structured ‘by’ unk-unk–or informatic neglect.

This is all just to say that the skyhook of design always fills the screen, so to speak, that it always strikes us as sufficient, and can only be revealed as parochial through the accumulation of recalcitrant information. And this makes plain the astonishing nature of Darwin’s achievement, just how far he had to step out of the traditional conceptual box to grasp the importance of natural selection. At the same time, it also explains why, at least for some, the crane was in the ‘air,’ so to speak, why Darwin ultimately found himself in a race with Wallace. The life sciences, by the middle of the 19th century, had accumulated enough ‘recalcitrant information’ to reveal something of the heuristic parochialism of intuitive design and its inapplicability to the life sciences as a matter of fact, as opposed to mere philosophical reflection a la, for instance, Hume.

Intuitive design is a native cognitive heuristic that generates ‘sufficient understanding’ via the systematic neglect of ‘bottom-up’ causal information. The apparent ‘sufficiency’ of this understanding, however, is simply an artifact of this self-same neglect: as is the case with other intentional concepts, it is notoriously difficult to ‘get behind’ this understanding, to explain why it should count as cognition at all. To take Dennett’s example of finding a watch in the forest: certainly understanding that a watch is an intentional artifact, the product of design, tells you something very important, something that allows you to distinguish watches from rocks, for instance. It also tells you to be wary, that other agents such as yourself are about, perhaps looking for that watch. Watch out!

But what, exactly, is it you are understanding? Design seems to possess a profound ‘resolution’ constraint: unlike mechanism, which allows explanations at varying levels of functional complexity, organelles to cells, cells to tissues, tissues to organs, organs to animal organisms, etc., design seems stuck at the level of the ‘personal,’ you might say. Thus the appropriateness of the metaphor: skyhooks leave us hanging in a way that cranes do not.

And thus the importance of cranes. Precisely because of its variable degrees of resolution, you might say, mechanistic understanding allows us to ‘get behind’ our environments, not only to understand them ‘deeper,’ but to hack and reprogram them as well. And this is the sense in which cranes trump skyhooks, why it pays to see the latter as perspectival distortions of the former. Design, as it is intuitively understood, is a skyhook, which is to say, a cognitive illusion.

And here we can clearly see how the threat of tendentiousness hangs over Dennett’s apologetic redefinitional project. The design heuristic is effective precisely because it systematically neglects causal information. It allows us to understand what systems are doing and will do without understanding how they actually work. In other words, what makes design so computationally effective across a narrow scope of applications, causal neglect, seems to be the very thing that fools us into thinking it’s a skyhook–causal neglect.

Looked at in this way, it suddenly becomes very difficult to parse what it is Dennett is recommending. Replacing the old, intuitive, skyhook design-concept with a new, counterintuitive, crane design-concept means using a heuristic whose efficiencies turn on causal neglect in a manner amenable to causal explanation. Now it seems easy, I suppose, to say he’s simply drawing a distinction between informatic neglect as a virtue and informatic neglect as a vice, but can this be so? When an evolutionary psychologist says, ‘We are designed for persistence hunting,’ are we cognizing ‘designed for’ in a causal sense? If so, then what’s the bloody point of hanging onto the concept at all? Or are we cognizing ‘designed for’ in an intentional sense? If so, then aren’t we simply wrong? Or are we, as seems far more likely the case, cognizing ‘designed for’ in an intentional sense only ‘as if’ or ‘under erasure,’ which is to say, as a mere façon de parler?

Either way, the prospects for Dennett’s apologetic project, at least in the case of design, seem to look exceedingly bleak. The fact that design cannot be the skyhook it seems to be, that it is actually a crane, does nothing to change the fact that it leverages computational efficiencies via causal neglect, which is to say, by looking at the world through skyhook glasses. The theory behind his cranes is impeccable. The very notion of crane-design as a deployable concept, however, is incoherent. And using concepts ‘under erasure,’ as one must do when using ‘design’ in evolutionary contexts, would seem to stand upon the very lip of an eliminativist abyss.

And this is simply an instance of what I’ve been ranting about all along here on Three Pound Brain, the calamitous disjunction of knowledge and experience, and the kinds of distortions it is even now imposing on culture and society. The Semantic Apocalypse.

.

But Dennett is interested in far more than simply providing a new Darwinian understanding of design; he wants to mint a new crane-coinage for all intentional concepts. So the question becomes: To what extent do the considerations above apply to intentionality as a whole? What if it were the case that all the peculiarities, the interminable debates, the inability to ‘get behind’ intentionality in any remotely convincing way–what if all this were more than simply coincidental? Insofar as all intentional concepts systematically neglect causal information, we have ample reason to worry. Like it or not, all intentional concepts are heuristic, not in any old manner, but in the very manner characteristic of design.

Brentano, not surprisingly, provides the classic account of the problem in Psychology From an Empirical Standpoint, some fifteen years after the publication of On the Origin of Species:

Every mental phenomenon includes something as object within itself, although they do not all do so in the same way. In presentation something is presented, in judgement something is affirmed or denied, in love loved, in hate hated, in desire desired and so on. This intentional in-existence is characteristic exclusively of mental phenomena. No physical phenomenon exhibits anything like it. We can, therefore, define mental phenomena by saying that they are those phenomena which contain an object intentionally within themselves. 68

No physical phenomenon exhibits intentionality, and likewise, no intentional phenomenon exhibits anything like causality, at least not obviously so. The reason for this, on the BBT account, is as clear as can be. Most are inclined to blame the computational intractability of cognizing and tracking the causal complexities of our relationships. The idea (and it is a beguiling one) is that aboutness is a kind of evolved discovery, that the exigencies of natural selection cobbled together a brain capable of exploiting a preexisting logical space–what we call the ‘a priori.’ Meaning, or intentionality more generally, on this account is literally ‘found money.’ The vexing question, as always, is one of divining how this logical level is related to the causal.

On the BBT account, the computational intractability of cognizing and tracking the causal complexities of our environmental relationships is also to blame, but aboutness, far from being found money, is rather a kind of ‘frame heuristic,’ a way for the brain to relate itself to its environments absent causal information pertaining to this relation. It presumes that consciousness is a distributed, dynamic artifact of some subsystem of the brain and that, as such, faces severe constraints on its access to information generally, and almost no access to information regarding its own neurofunctionality whatsoever:

It presumes, in other words, that the information available for deliberative or conscious cognition must be, for developmental as well as structural reasons, drastically attenuated. And it’s easy to see how this simply has to be the case, simply given the dramatic granularity of consciousness compared to the boggling complexities of our peta-flopping brains.

The real question–the million dollar question, you might say–turns on the character of this informatic attenuation. At the subpersonal level, ‘pondering the mental’ consists (we like to suppose anyway) in the recursive uptake of ‘information regarding the mental’ by ‘System 2,’ or conscious, deliberative cognition. The question immediately becomes: 1) Is this information adequate for cognition? and 2) Are the heuristic systems employed even applicable to this kind of problem, namely, the ‘problem of the mental’? Is the information (as Dennett seems to assume throughout his corpus) ‘merely compressed,’ which is to say, merely stripped to its essentials to maximize computational efficiencies? Or is it a far, far messier affair? Given that the human cognitive ‘toolkit,’ as they call it in ecological rationality circles, is heuristically adapted to troubleshoot external environments, can we assume that mental phenomena actually lie within their scope of application? Could the famed and hoary conundrums afflicting philosophy of mind and consciousness research be symptoms of heuristic overreach, the application of specialized cognitive tools to a problem set they are simply not adapted to solve?

Let’s call the issue expressed in this nest of questions the ‘Attenuation Problem.’

It’s worth noting at this juncture that although Dennett is entirely on board with the notion that ‘the information available for deliberative or conscious cognition must be drastically attenuated’ (see, for instance, “Real Patterns”), he inexplicably shies from any detailed consideration of the nature of this attenuation. Well, perhaps not so inexplicably. For Dennett, the Good Tricks are good because they are efficacious and because they are winners of the evolutionary sweepstakes. He assumes, in other words, that the Attenuation Problem is no problem at all, simply because it has been resolved in advance. Thus, his apologetic, redefinitional programme. Thus his endless attempts to disabuse his fellow travellers of the perceived need to make skyhooks real:

I know that others find this vision so shocking that they turn with renewed eagerness to the conviction that somewhere, somehow, there just has to be a blockade against Darwinism and AI. I have tried to show that Darwin’s dangerous idea carries the implication that there is no such blockade. It follows from the truth of Darwinism that you and I are Mother Nature’s artefacts, but our intentionality is none the less real for being an effect of millions of years of mindless, algorithmic R and D instead of a gift from on high. Darwin’s Dangerous Idea, 426-7

Cranes are all we have, he argues, and as it turns out, they are more than good enough.

But, as we’ve seen in the case of design, the crane version forces us to check our heuristic intuitions at the door. Given that the naturalization of design requires adducing the very causal information that intuitive design neglects to leverage heuristic efficiencies, there cannot be, in effect, any coherent, naturalized concept of design, as opposed to the employment of intuitive design ‘under erasure.’ Real or not, the skyhook comes first, leaving us to append the rest of the crane as an afterthought. Apologetic redefinition is simply not enough.

And this suggests that something might be wrong with Dennett’s arguments from efficacy and evolution for the ‘good enough’ status of derived intentionality. As it turns out, this is precisely the case. Despite their prima facie appeal, neither the apparent efficacy nor the evolutionary pedigree of our intentional concepts provides Dennett with what he needs.

To see how this could be the case, we need to reconsider the two conceptual dividends of BBT considered above, sufficiency and neglect. Since more information is required to flag the insufficiency of the information (be it ‘sensory’ or ‘cognitive’) broadcast through or integrated into consciousness, sufficiency is the perennial default. This is the experiential version of what I called the ‘Only-game-in-town Effect’ above. This means that insufficiency will generally have to be inferred against the grain of a prior sense of intuitive sufficiency. Thus, one might suppose, evolution’s continued difficulties with intuitive design, and science’s battle against anthropomorphic worldviews more generally: not only does science force us to reason around elements of our own cognitive apparatus, it forces us to overcome the intuition that these elements are good enough to tell us what’s what on their own.

Dennett, in this instance at least, is arguing with the intuitive grain!

Intentionality, once again, systematically neglects causal information. As Chalmers puts it, echoing Leibniz and his problem of the Mill:

The basic problem has already been mentioned. First: Physical descriptions of the world characterize the world in terms of structure and dynamics. Second: From truths about structure and dynamics, one can deduce only further truths about structure and dynamics. And third: truths about consciousness are not truths about structure and dynamics. “Consciousness and Its Place in Nature”

Informatic neglect simply means that conscious experience tells us nothing about the neurofunctional details of conscious experience. Rather, we seem to find ourselves stranded with an eerily empty version of what the life sciences tell us we in fact are, the asymptotic (finite but unbounded) clearing called ‘consciousness’ or ‘mind’ containing, as Brentano puts it, ‘objects within itself.’ What is a mere fractional slice of the neuro-environmental circuit sketched above, literally fills the screen of conscious experience, as it were, appearing something like this:

Which is to say, something like a first-person perspective, where environmental relations appear within a ‘transparent frame’ of experience. Thus all the blank white space around the arrow: I wanted to convey the strange sense in which you are the ‘occluded frame,’ here, a background where the brain drops out, not just partially, not even entirely, but utterly. Floridi refers to this as the ‘one-dimensionality of experience,’ the way “experience is experience, only experience, and nothing but experience” (The Philosophy of Information, 296). Experience utterly fills the screen, relegating the mechanisms that make it possible to oblivion. As I’ve quipped many times: Consciousness is a fragment that constitutively confuses itself for a whole, a cog systematically deluded into thinking it’s the entire machine. Sufficiency and neglect, taken together, mean we really have no way short of a mature neuroscience of determining the character of the informatic attenuation (how compressed, depleted, fragmentary, distorted, etc.) of intentional phenomena.

So consider the evolutionary argument, the contention that evolution assures us that intentional attenuations are generally happy adaptations: Why would they be selected otherwise?

To this, we need only reply, Sure, but adapted for what? Say subreption was the best way for evolution to proceed: We have sex because we lust, not because we want to replicate our genetic information, generally speaking. We pair-bond because we love, not because we want to successfully raise offspring to the age of sexual maturation, generally speaking. When it comes to evolution, we find more than a few ‘ulterior motives.’ One need only consider the kinds of evolutionary debates you find in cognitive psychology, for instance, to realize that our intuitive sense of our myriad capacities need not line up with their adaptative functions in any way at all, let alone those we might consider ‘happy.’

Or say evolution was only concerned with providing what might be called ‘exigency information’ for deliberative cognition, the barest details required for a limited subset of cognitive activities. One could cobble together a kind of neuro-Wittgensteinian argument, suggest that we do what we do all well and fine, but that as soon as we pause to theorize what we do, we find ourselves limited to mere informatic rumour and innuendo that, thanks to sufficiency, we promptly confuse for apodictic knowledge. It literally could be the case that what we call philosophy amounts to little more than submitting the same ‘mangled’ information to various deliberative systems again and again and again, hoping against hope for a different result. In fact, you could argue that this is precisely what we should expect to be the case, given that we almost certainly didn’t evolve to ‘philosophize.’

In other words, how does Dennett know the ‘intentionality’ he and others are ‘making explicit’ accurately describes the mechanisms, the Good Tricks, that evolution actually selected? He doesn’t. He can’t.

But if the evolutionary argument holds no water, what about Dennett’s argument regarding the out-and-out efficacy of intentional concepts? Unlike the evolutionary complaint, this argument is, I think, genuinely powerful. After all, we seem to use intentional concepts to understand, predict, and manipulate each other all the time. And perhaps even more impressively, we use them (albeit in stripped down form) in formal semantics and all its astounding applications. Fodor, for instance, famously argues that the use of representations in computation provides an all-important ‘compatibility proof.’ Formalization links semantics to syntax, and computation links syntax to causation. It’s hard to imagine a better demonstration of the way skyhooks could be linked to cranes.

Except that, like fitting the belly of Africa into the gut of the Caribbean, it never quite seems to work when you actually try. Thus Searle’s famous Chinese Room Argument and Harnad’s generalization of it into the Symbol Grounding Problem. But the intuition persists that it has to work somehow: After all, what else could account for all that efficacy?

Plenty, it turns out. Intentional concepts, no matter how attenuated, will be efficacious to the degree to which the brain is efficacious, simply by virtue of being systematically related to the activity of the brain. The upshot of sufficiency and neglect, recall, is that we are prone to confuse what little information we have available for most all the information available. The greater neuro-environmental circuit revealed by third-person science simply does not exist for the first-person, not even as an absence. This generates the problem of metonymicry, or the tendency for consciousness to take credit for the whole cognitive show regardless of what actually happens neurocomputationally back stage. No matter how mangled our metacognitive understanding, how insufficient the information broadcast or integrated, in the absence of contradicting information, it will count as our intuitive baseline for what works. It will seem to be the very rule.

And this, my view predicts, is what science will eventually make of the ‘a priori.’ It will show it to be of a piece with the soul, which is to say, more superstition, a cognitive illusion generated by sufficiency and informatic neglect. As a neural subsystem, the conscious brain has more than just the environment from which to learn; it also has the brain itself. Perhaps logic and mathematics as we intuitively conceive them are best thought of, from the life sciences perspective at least (that is, the perspective you hope will keep you alive every time you see your doctor), as kinds of depleted, truncated, informatic shadows cast by brains performatively exploring the most basic natural permutations of information processing, the combinatorial ensemble of nature’s most fundamental, hyper-applicable, interaction patterns.

On this view, ‘computer programming,’ for instance, looks something like:

where essentially, you have two machines conjoined, two ‘implementations’ with semantics arising as an artifact of the varieties of informatic neglect characterizing the position of the conscious subsystem on this circuit. On this account, our brains ‘program’ the computer, and our conscious subsystems, though they do participate, do so under a number of onerous informatic constraints. As a result, we program blind to all aetiologies save the ‘lateral,’ which is to say, those functionally independent mechanisms belonging to the computer and to our immediate environment more generally. In place of any thoroughgoing access to these ‘medial’ (functionally dependent) causal relations, conscious cognition is forced to rely on what little information it can glean, which is to say, the cartoon skyhooks we call semantics. Since this information is systematically related to what the brain is actually doing, and since informatic neglect renders it apparently sufficient, conscious cognition decides it’s the outboard engine driving the whole bloody boat. Neural interaction patterns author inference schemes that, thanks to sufficiency and neglect, conscious cognition deems the efficacious author of computer interaction patterns.

Semantics, in other words, can be explained away.

The very real problem of metonymicry allows us to see how Dennett’s famous ‘two black boxes’ thought-experiment (Darwin’s Dangerous Idea, 412-27), far from dramatically demonstrating the efficacy of intentionality, is simply an extended exercise in question-begging. Dennett tells the story of a group of researchers stranded with two black boxes, each containing a supercomputer holding a database of ‘true facts’ about the world, only in different programming languages. One box has two buttons labelled alpha and beta, while the second box has three lights coloured yellow, red, and green. A single wire connects them. Unbeknownst to the researchers, the button box simply transmits a true statement when the alpha button is pushed, which the bulb box acknowledges by lighting the red bulb for agreement, and a false statement when the beta button is pushed, which the bulb box acknowledges by lighting the green bulb for disagreement. The yellow bulb illuminates only when the bulb box can make no sense of the transmission, which is always the case when the researchers disconnect the boxes and, being entirely ignorant of any of these details, substitute signals of their own.
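For readers who think better in code, the set-up just described can be caricatured in a few lines of Python. This is only a toy sketch of the summary above, not anything Dennett provides: the fact lists, the string-reversal ‘code,’ and the names `button_box` and `bulb_box` are all assumptions made up for illustration, and the two databases are collapsed into one shared pair of sets for brevity.

```python
from enum import Enum


class Light(Enum):
    RED = "red"        # bulb box agrees with the transmitted statement
    GREEN = "green"    # bulb box disagrees with the transmitted statement
    YELLOW = "yellow"  # bulb box can make no sense of the transmission


# Hypothetical stand-ins for each box's database of facts about the world.
TRUE_FACTS = {"snow is white", "fire is hot"}
FALSE_FACTS = {"snow is black", "fire is cold"}


def encode(statement: str) -> str:
    """Assumed wire code: reverse the string (purely illustrative)."""
    return statement[::-1]


def decode(signal: str) -> str:
    """Inverse of the assumed wire code."""
    return signal[::-1]


def button_box(button: str, index: int = 0) -> str:
    """Transmit a true statement for 'alpha', a false one for 'beta'."""
    if button == "alpha":
        pool = sorted(TRUE_FACTS)
    elif button == "beta":
        pool = sorted(FALSE_FACTS)
    else:
        raise ValueError("the button box only has alpha and beta")
    return encode(pool[index % len(pool)])


def bulb_box(signal: str) -> Light:
    """Light red for agreement, green for disagreement, yellow otherwise."""
    statement = decode(signal)
    if statement in TRUE_FACTS:
        return Light.RED
    if statement in FALSE_FACTS:
        return Light.GREEN
    return Light.YELLOW


if __name__ == "__main__":
    print(bulb_box(button_box("alpha")))   # alpha always yields red
    print(bulb_box(button_box("beta")))    # beta always yields green
    print(bulb_box("0101"))                # a researcher's own signal: yellow
```

Seen from the wire alone, nothing distinguishes the signals that light red from those that light green; the regularity only shows up once you know what the boxes ‘know.’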

What Dennett wants to show is how these box-to-box interactions would be impossible to decipher short of taking the intentional stance, in which case, as he points out, the communications become easy enough for a child to comprehend. But all he’s really saying is that the coded transmissions between our brains only make sense from the standpoint of our environmentally informed brains–that the communications between them are adapted to their idiosyncrasies as environmentally embedded, supercomplicated systems. He thinks he’s arguing the ineliminability of intentionality as we intuitively conceive it, as if it were the one wheel required to make the entire mechanism turn. But again, the spectre of metonymicry, the fact that, no matter where our intentional intuitions fit on the neurofunctional food chain, they will strike us as central and efficacious even when they are not, means that all this thought experiment shows–all that it can show, in fact–is that our brains communicate in idiosyncratic codes that conscious cognition seems to access via intentional intuitions. To assume that our assumptions regarding the ‘intentional’ capture that code without gross, even debilitating distortions, simply begs the question.