Lorin Hochstein's Blog, page 17

September 26, 2021

The strange beauty of strange loop failure modes

As I’ve posted about previously, at my day job, I work on a project called Managed Delivery. When I first joined the team, I was a little horrified to learn that the service that powers Managed Delivery deploys itself using Managed Delivery.

“How dangerous!”, I thought. What if we push out a change that breaks Managed Delivery? How will we recover? However, after having been on the team for over a year now, I have a newfound appreciation for this approach.

Yes, sometimes there’s something that breaks, and that makes it harder to roll back, because Managed Delivery provides the main functionality for easy rollback. However, it also means that the team gets quite a bit of practice at bypassing Managed Delivery when something goes wrong. They know how to disable Managed Delivery and use the traditional Spinnaker UI to deploy an older version. They know how to poke and prod at the database if the Managed Delivery UI doesn’t respond properly.

These strange loop failure modes are real: if Managed Delivery breaks, we may lose out on the functionality of Managed Delivery to help us recover. But it also means that we’re more ready for handling the situation if something with Managed Delivery goes awry. Yes, Managed Delivery depends on itself, and that’s odd. But we have experience with how to handle things when this strange loop dependency creates a problem. And that is a valuable thing.

September 17, 2021

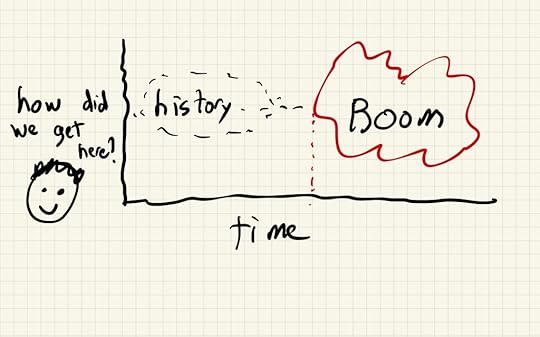

Live-drawing my slides during a talk

The other day, I gave an internal talk, and I tried an experiment. Using my iPad and the GoodNotes app, I drew all of my slides while I was talking (except the first slide, which I drew in advance).

“What font is that?” someone asked. It’s my handwriting

I’ve always been in awe of people who can draw; I’ve never been good at it.

“Where’s the bug”, it says. Not my best handwriting

Over the years, I’ve tried doodling more. I was influenced by Dan Roam’s books, Julia Evans’s zines, sketchnotes, and most recently, Christina Wodtke’s Pencil Me In.

The words have stink lines, so you know they’re bad

If you’ve read my blog before, you’ve seen some of my previous doodles (e.g., Root cause of failure, root cause of success or Taming complexity: from contract to compact).

We need to complete the action items so it never happens again

When I was asked to present to a team, I wanted to use my drawings rather than do traditional slides. I actually hate using tools like PowerPoint and Google Slides to do presentations. Typically I use Deckset, but in this case, I wanted to do them all drawn.

A different perspective on incidents

I started off by drawing out my slides in advance. But then I thought, “instead of showing pre-drawn slides, why don’t I draw the slides as I talk? That way, people will know where to look because they’ll look at where I’m drawing.”

I still had to prepare the presentation in advance. I drew all of the slides beforehand. And then I printed them out and had them in front of me so that I could re-draw them during the talk. Since it was done over Zoom, people couldn’t actually see that I was working from the print-outs (although they might have heard the paper rustling).

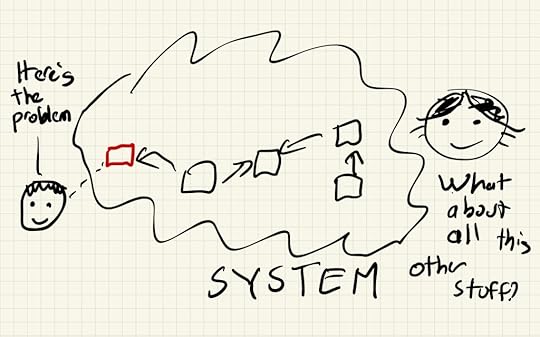

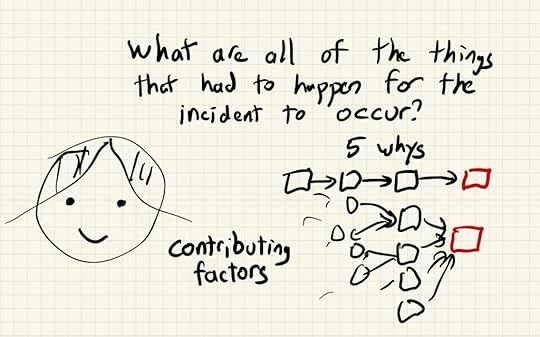

Contributing factors aren’t like root cause

One benefit of this technique was that it made it easier to answer questions, because I could draw out my answer. When I was writing the text at the top, somebody asked, “Is that something like a root cause chain?” I drew the boxes and arrows in response, to explain how this isn’t chain-like, but instead is more like a web.

The selected images above should give you a sense of what my slides looked like. I had fun doing the presentation, and I’d try this approach again. It was certainly more enjoyable than futzing with slide layout.

September 12, 2021

Useful knowledge and improvisation

Eric Dobbs recently retold a story on twitter (a copy is on his wiki) about one of his former New Relic colleagues, Nicholas Valler.

At the time, Nicholas was new to the company. He had just discovered a security vulnerability, and then (unrelated to that security vulnerability), an incident happened and, well, I encourage you to read the whole story first, and then come back to this post.

Wow was that ever a great suggestion, @alicegoldfuss! What a story! 0/8

— Eric Dobbs (@dobbse) September 12, 2021

In the end, the engineers were able to leverage the security vulnerability to help resolve the incident. As is my wont, I made a snarky comment.

Root cause of success: unpatched security vulnerability https://t.co/RpWVOosvCg

— Lorin Hochstein (@norootcause) September 12, 2021

But I did want to make a more serious comment about what this story illustrates. In a narrow sense, this security vulnerability helped the New Relic engineers remediate before there was severe impact. But in a broader sense, the following aspects helped them remediate:

- they had useful knowledge of some aspect of the system (port 22 was open to the world)
- they could leverage that knowledge to improvise a solution (they could use this security hole to log in and make changes to the Kafka configuration)

The irony here is that it was a new employee who had the useful knowledge. Typically, it’s the tenured engineers who have this sort of knowledge, as they’ve accumulated it with experience. In this case, the engineer discovered this knowledge right before it was needed. That’s what makes this such a great story!

I do think that how Nicholas found it, by “poking around”, is a behavior that comes with general experience, even though he didn’t have much experience at the company.

"I found a security hole and was in the process of figuring out how to report to security when the the network control plane was accidentally sheared, in particular leaving all our systems inaccessible by ssh." 3/8

— Eric Dobbs (@dobbse) September 12, 2021

But being in possession of useful knowledge isn’t enough. You also need to be able to recognize when the knowledge is useful and bring it to bear.

"I mentioned it to Dana and Alice very timidly: “I think I may know a way into our systems…”. I was pretty nervous because I figured security would be peeved." 7/8

— Eric Dobbs (@dobbse) September 12, 2021

These two attributes, having useful knowledge about the system and being able to apply that knowledge to improvise a solution, are critical for dealing effectively with incidents. Applying them is resilience in action.

It’s not a focus of this particular story, but, in general, this sort of knowledge is distributed across individuals. This means that it’s the ad-hoc team that forms during an incident that needs to possess these attributes.

August 31, 2021

Inconceivable

Back in July, Ray Ashman at Mailchimp posted a wonderful writeup of an internal incident (h/t to SRE Weekly). It took the Mailchimp engineers almost two days to make sense of the failure mode.

The trigger was a change to a logging statement, in order to log an exception. During the incident, the engineers noticed that this change lined up with the time that the alerts fired. But, other than the timing, there wasn’t any evidence to suggest that the log statement change was problematic. The change didn’t have any apparent relationship to the symptoms they were seeing with the job runner, which was in a different part of the codebase. And so they assumed that the logging statement change was innocuous.

As it happened, there was a coupling between that log statement and the job runner. Unfortunately for the engineers, this coupling was effectively invisible to them. The connection between the logging statement and the job runner was Mailchimp’s log processing pipeline. Here’s an excerpt from the writeup (emphasis mine):

Our log processing pipeline does a bit of normalization to ensure that logs are formatted consistently; a quirk of this processing code meant that trying to log a PHP object that is Iterable would result in that object’s iterator methods being invoked (for example, to normalize the log format of an Array).

Normally, this is an innocuous behavior—but in our case, the harmless logging change that had shipped at the start of the incident was attempting to log PHP exception objects. Since they were occurring during job execution, these exceptions held a stacktrace that included the method the job runner uses to claim jobs for execution (“locking”)—meaning that each time one of these exceptions made it into the logs, the logging pipeline itself was invoking the job runner’s methods and locking jobs that would never be actually run!
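To make the mechanism concrete, here is a minimal Python sketch of the same kind of coupling. It is not Mailchimp’s code (theirs was PHP), and `JobQueue`, `ClaimingIterable`, and `normalize` are hypothetical names invented for illustration. The only point is that a normalizer which iterates over anything iterable will also run whatever side effects that iteration carries.

```python
class JobQueue:
    """Hypothetical job queue: claiming a job locks it for the claimant."""

    def __init__(self, jobs):
        self.pending = list(jobs)
        self.locked = []

    def claim(self):
        # Side effect: move one job from pending to locked.
        job = self.pending.pop(0)
        self.locked.append(job)
        return job


class ClaimingIterable:
    """Hypothetical object whose iteration claims jobs from the queue."""

    def __init__(self, queue):
        self.queue = queue

    def __iter__(self):
        while self.queue.pending:
            yield self.queue.claim()


def normalize(value):
    """Hypothetical log normalizer: turns anything iterable into a list."""
    if isinstance(value, (str, bytes)):
        return value
    if isinstance(value, dict):
        return {key: normalize(item) for key, item in value.items()}
    try:
        iterator = iter(value)
    except TypeError:
        return repr(value)
    # Iterating here is what triggers the hidden side effect.
    return [normalize(item) for item in iterator]


queue = JobQueue(["job-1", "job-2", "job-3"])

# The caller only wants to log some context alongside an error message.
log_line = normalize({"error": "boom", "context": ClaimingIterable(queue)})

print(log_line)
print("pending:", queue.pending, "locked:", queue.locked)
```

In this toy version, the act of logging drains the queue: every job ends up locked even though nothing ever runs it. Nothing at the call site hints that a log statement could do that.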

Fortunately, there were engineers who had experience with this failure mode before:

Since the whole company had visibility into our progress on the incident, a couple of engineers who had been observing realized that they’d seen this exact kind of issue some years before.

…

Having identified the cause, we quickly reverted the not-so-harmless logging change, and our systems very quickly returned to normal.

In the moment, the engineers could not conceive of how a change in behavior in the job runner could be affected by the modification of a log statement in an unrelated part of the code. It was literally unthinkable to them.

August 28, 2021

Software Misadventures Podcast

I was recently a guest on the Software Misadventures Podcast.

https://softwaremisadventures.libsyn.com/rss

Contempt for the glue people

The clip below is from a lecture from 2008(?) that then-Google CEO Eric Schmidt gave to a Stanford class.

Here’s a transcript, emphasis mine.

When I was at Novell, I had learned that there were people who I call “glue people”. The glue people are incredibly nice people who sit at interstitial boundaries between groups, and they assist in activity. And they are very, very loyal, and people love them, and you don’t need them at all.

At Novell, I kept trying to get rid of these glue people, because they were getting in the way, because they slowed everything down. And every time I get rid of them in one group, they’d show up in another group, and they’d transfer, and get rehired and all that.

I was telling Larry [Page] and Sergey [Brin] this one day, and Larry said, “I don’t understand what your problem is. Why don’t we just review all of the hiring?” And I said, “What?” And Larry said, “Yeah, let’s just review all the hiring.” And I said, “Really?” He said, “Yes”.

So, guess what? From that moment on, we reviewed every offer packet, literally every one. And anybody who smelled or looked like a glue person, plus the people that Larry and Sergey thought had backgrounds that I liked that they didn’t, would all be fired.

I first watched this lecture years ago, but Schmidt’s expressed contempt for the nice and loyal but useless glue people just got lodged in my brain, and I’ve never forgotten it. For some reason, this tweet about Google’s various messaging services sparked my memory about it, hence this post.

This @arstechnica article is a fab summary of how Google botched up its approach to messaging services over the years. Come for the TOC, stay for the painful story. Also, a reminder that success in one area online does not mean guaranteed success elsewhere https://t.co/Zrf8fZtHYb pic.twitter.com/aOGZe8uvAa

— Subrahmanyam KVJ (@SuB8u) August 26, 2021

August 24, 2021

Remembering the important bits when you need them

I’m working my way through the Cambridge Handbook of Expertise and Expert Performance, which is a collection of essays from academic researchers who study expertise.

Chapter 6 discusses the ability of experts to recall information that’s relevant to the task at hand. This is one of the differences between experts and novices: a novice might answer questions about a subject correctly on a test, but when faced with a real problem that requires that knowledge, they aren’t able to retrieve it.

The researchers K. Anders Ericsson and Walter Kintsch had an interesting theory about how experts do better at this than novices. The theory goes like this: when an expert encounters some new bit of information, they have the ability to encode that information into their long-term memory in association with a collection of cues of when that information would be relevant.

In other words, experts are able to predict the context when that information might be relevant in the future, and are able to use that contextual information as a kind of key that they can use to retrieve the information later on.

Now, think about reading an incident write-up. You might learn about a novel failure mode in some subsystem your company uses (say, a database), as well as the details that led up to it happening, including some of the weird, anomalous signals that were seen earlier on. If you have expertise in operations, you’ll encode information about the failure mode into your long term memory and associate it with the symptoms. So, the next time you see those symptoms in production, you’ll remember this failure mode.

This will only work if the incident write-up has enough detail to provide you with the cues that you need to encode in your memory. This is another reason to provide a rich description of the incident: the people reading it, if they’re good at operations, will encode the details of the failure mode into their memory. If it happens again, and they’ve read the write-up, they’ll remember.

August 22, 2021

The power of framing a problem

I’m enjoying Marianne Bellotti’s book Kill It With Fire, which is a kind of guidebook for software modernization projects (think: migrating legacy systems). In Chapter Five, she talks about the importance of momentum for success, and how a crisis can be a valuable tool for creating a sense of urgency. This is the passage that really resonated with me (emphasis in the original):

Occasionally, I went as far as looking for a crisis to draw attention to. This usually didn’t require too much effort. Any system more than five years old will have at least a couple major things wrong with it. It didn’t mean lying, and it didn’t mean injecting problems where they didn’t exist. Instead, it was a matter of storytelling—taking something that was unreported and highlighting its potential risks. These problems were problems, and my analysis of their potential impact was always truthful, but some of them could have easily stayed buried for months or years without triggering a single incident.

Kill It With Fire, p88

This is a great explanation of how describing a problem is a form of power in an organization. Bellotti demonstrates how, by telling a story, she was able to make problems real for an organization, even to the point of creating a crisis. And a crisis receives attention and resources. Crises get resolved.

It’s also a great example of the importance of storytelling in technical organizations. Tell a good story, and you can make things happen. It’s a skill that’s worth getting better at.

August 21, 2021

The local nature of culture

I’m really enjoying Turn the Ship Around!, a book by David Marquet about his experiences as commander of a nuclear submarine, the USS Santa Fe, and how he worked to improve its operational performance.

One of the changes that Marquet introduced is something he calls “thinking out loud”, where he encourages crew members to speak aloud their thoughts about things like intentions, expectations, and concerns. He notes that this approach contradicted naval best practices:

As naval officers, we stress formal communications and even have a book, the Interior Communications Manual, that specifies exactly how equipment, watch stations, and evolutions are spoken, written, and abbreviated …

This adherence to formal communications unfortunately crowds out the less formal but highly important contextual information needed for peak team performance. Words like “I think…” or “I am assuming…” or “It is likely…” that are not specific and concise orders get written up by inspection teams as examples of informal communications, a big no-no. But that is just the communication we need to make leader-leader work.

Turn the Ship Around! p103

This change did improve the ship operations, and this improvement was recognized by the Navy. Despite that, Marquet still got pushback for violating norms.

[E]ven though Santa Fe was performing at the top of the fleet, officers steeped in the leader-follower mind-set would criticize what they viewed as the informal communications on Santa Fe. If you limit all discussion to crisp orders and eliminate all contextual discussion, you get a pretty quiet control room. That was viewed as good. We cultivated the opposite approach and encouraged a constant buzz of discussions among the watch officers and crew. By monitoring that level of buzz, more than the actual content, I got a good gauge of how well the ship was running and whether everyone was sharing information.

Turn the Ship Around! p103

Reading this reminded me how local culture can be. I shouldn’t be surprised, though. At Netflix, I’ve worked on three teams (and had six managers!), and each team had a very different local culture, despite all of them being in the same organization, Platform Engineering.

I used to wonder, “how does a large company like Google write software?” But I no longer think that’s a meaningful question. It’s not Google as an organization that writes software, it’s individual teams that do. The company provides the context that the teams work in, and the teams are constrained by various aspects of the organization, including the history of the technology they work on. But, there’s enormous cultural variation from one team to the next. And, as Marquet illustrates, you can change your local culture, even cutting against organizational “best practices”.

So, instead of asking, “what is it like to work at company X”, the question you really want answered is, “what is it like to work on team Y at company X?”

August 20, 2021

What do you work on, anyway?

I often struggle to describe the project that I work on at my day job, even though it’s an open-source project that even has its own domain name: managed.delivery. I’ll often mumble something like, “it’s a declarative deployment system”. But that explanation does not yield much insight.

I’m going to use Kubernetes as an analogy to explain my understanding of Managed Delivery. This is dangerous, because I’m not a Kubernetes user(!). But if I didn’t want to live dangerously, I wouldn’t blog.

With Kubernetes, you describe the desired state of your resources declaratively, and then the system takes action to bring the current state of the system to the desired state. In particular, when you use Kubernetes to launch a pod of containers, you need to specify the container image name and version to be deployed as part of the desired state.
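Here is a toy Python sketch of that desired-state idea, with the same caveat that I’m not a Kubernetes user. It does not use any real Kubernetes API; `reconcile`, `apply`, and the resource names are hypothetical. State is modeled as a plain mapping from resource name to image version.

```python
def reconcile(desired, current):
    """Return the actions needed to move `current` to `desired`.

    Both arguments map a resource name to an image version.
    """
    actions = []
    for name, version in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name, version))
        elif current[name] != version:
            actions.append(("update", name, version))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name, current[name]))
    return actions


def apply(actions, current):
    """Apply the actions to a copy of `current` and return the new state."""
    new_state = dict(current)
    for verb, name, version in actions:
        if verb == "delete":
            del new_state[name]
        else:
            new_state[name] = version
    return new_state


desired = {"web": "v24", "worker": "v24"}
current = {"web": "v23", "cron": "v23"}

actions = reconcile(desired, current)
current = apply(actions, current)

print(actions)
print(current)
```

The user only ever edits `desired`. Working out which actions close the gap is the system’s job, and once the two states match, reconciling again produces no actions.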

When a developer pushes new code out, they need to change the desired state of a resource, specifically, the container image version. This means that a deployment system needs some mechanism for changing the desired state.

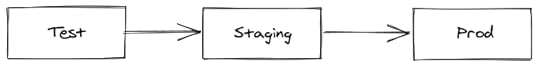

A common pattern we see is that service owners have a notion of an environment (e.g., test, staging, prod). For example, maybe they’ll deploy the code to test, and maybe run some automated tests against it, and if it looks good, they’ll promote to staging, and maybe they’ll do some manual tests, and if they’re happy, they’ll promote out to prod.

Example of deployment environments

Imagine test, staging, and prod all have version v23 of the code running in them. After version v24 is cut, it will first be deployed in test, then staging, then prod. That’s how each version will propagate through these environments, assuming it meets the promotion constraints for each environment (e.g., tests pass, human makes a judgment).

You can think of this kind of promoting-code-versions-through-environments as a pattern for describing how the desired states of the environments change over time. And you can describe this pattern declaratively, rather than imperatively like you would with traditional pipelines.

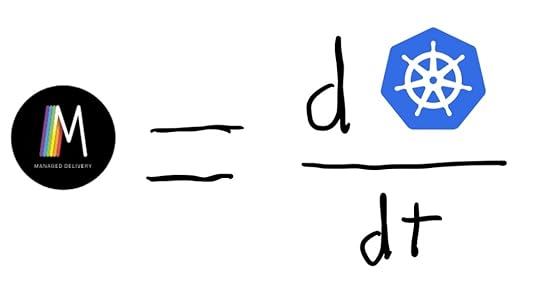

And that’s what Managed Delivery is. It’s a way of declaratively describing how the desired state of the resources should evolve over time. To use a calculus analogy, you can think of Managed Delivery as representing the time-derivative of the desired state function.

If you think of Kubernetes as a system for specifying desired state, Managed Delivery is a system for specifying how desired state evolves over time

With Managed Delivery, you can express concepts like:

- for a code version to be promoted to the staging environment, it must
  - be successfully deployed to the test environment
  - pass a suite of end-to-end automated tests specified by the app owner

and then Managed Delivery uses these environment promotion specifications to shepherd the code through the environments.

And that’s it. Managed Delivery is a system that lets users describe how the desired state changes over time, by letting them specify environments and the rules for promoting change from one environment to the next.
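As a rough illustration, here is a minimal Python sketch of promotion rules expressed as data. This is not Managed Delivery’s actual configuration format or API; `Environment`, `can_promote`, `next_desired_state`, and the check name `"e2e"` are all hypothetical. Each evaluation looks at what is currently deployed and which checks have passed, then computes the next desired state.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    name: str
    # Environments the version must already be deployed to.
    depends_on: list = field(default_factory=list)
    # Checks (e.g. test suites) the version must have passed.
    required_checks: list = field(default_factory=list)


def can_promote(env, version, deployed, passed_checks):
    """True if `version` meets every promotion constraint for `env`."""
    upstream_ok = all(deployed.get(dep) == version for dep in env.depends_on)
    checks_ok = all((version, check) in passed_checks for check in env.required_checks)
    return upstream_ok and checks_ok


def next_desired_state(envs, version, deployed, passed_checks):
    """Compute the desired version per environment after one evaluation."""
    desired = dict(deployed)
    for env in envs:
        # Constraints are checked against the current snapshot, so a
        # version advances at most one environment per evaluation.
        if can_promote(env, version, deployed, passed_checks):
            desired[env.name] = version
    return desired


envs = [
    Environment("test"),
    Environment("staging", depends_on=["test"], required_checks=["e2e"]),
    Environment("prod", depends_on=["staging"]),
]

state = {"test": "v23", "staging": "v23", "prod": "v23"}

step1 = next_desired_state(envs, "v24", state, passed_checks=set())
blocked = next_desired_state(envs, "v24", step1, passed_checks=set())
step2 = next_desired_state(envs, "v24", step1, passed_checks={("v24", "e2e")})
step3 = next_desired_state(envs, "v24", step2, passed_checks={("v24", "e2e")})

for label, snapshot in [("step1", step1), ("blocked", blocked), ("step2", step2), ("step3", step3)]:
    print(label, snapshot)
```

The `envs` list is the only thing a user would write. Nothing in it says “deploy to test, then run tests, then deploy to staging” as a sequence of steps; the order falls out of the constraints.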