Markus Gärtner's Blog, page 23

February 25, 2012

Binary Kata

There are only 10 types of people in the world:

Those who understand binary

Those who don't

How is your binary today?

If you want to become one of the former group, here is a coding kata for you. I got the idea from a tester at a client who is currently pursuing his Computer Science degree. While he was asking for help on an exercise, we came up with the idea that this exercise would make a pleasant coding kata.

The kata itself is easy. Write a program which takes any floating point number and converts it into a string representation of the bits in a floating point format. As a suggestion you may want to start with the single-precision format where you have one bit for the sign, 8 bits for the exponent, and 23 bits for the fraction. If you seek some more challenge, go for double- or extended-precision.

Here are some values that you might want to consider:

0.0

123.456

Pi

1.9999999

-2.5521175E38

If you seek some help on how to convert these numbers, here is a web-based converter using a Java Applet.
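If you would rather have something to check your own solution against, here is a minimal sketch in Python. It does not do the conversion by hand, which is the actual point of the kata; it assumes Python's standard `struct` module and lets it produce the IEEE 754 single-precision bytes. The function name `float_to_bits` and the space-separated output layout are illustrative choices, not part of the kata.

```python
import math
import struct


def float_to_bits(value: float) -> str:
    """Format value as IEEE 754 single precision: 'sign exponent fraction'."""
    # Pack as a big-endian 32-bit float, then reread the same 4 bytes
    # as an unsigned integer to get at the raw bit pattern.
    (raw,) = struct.unpack(">I", struct.pack(">f", value))
    bits = format(raw, "032b")
    # 1 sign bit, 8 exponent bits, 23 fraction bits.
    return f"{bits[0]} {bits[1:9]} {bits[9:]}"


if __name__ == "__main__":
    # The sample values suggested above.
    for sample in (0.0, 123.456, math.pi, 1.9999999, -2.5521175e38):
        print(f"{sample!r:>16}  {float_to_bits(sample)}")
```

For 0.0 all 32 bits come out as zero. Values that single precision cannot represent exactly, such as 123.456, are rounded to the nearest representable number before the bits are printed, so a hand-written solution has to round the same way to match.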

February 19, 2012

Let's Test prequel with Johan Jonasson

As a prequel to the Let's Test conference in May, I interviewed some of the European context-driven testers. Today we have Johan Jonasson from the House of Test with us who also co-organized the conference.

Markus Gärtner: Hi Johan, could you please introduce yourself? Who are you? What do you do for work?

Johan Jonasson: I work as a test consultant, coach and trainer at House of Test, a multi-national company that offers context-driven consulting, training and outsourcing services throughout Scandinavia. I'm also engaged in different global and local community initiatives, like AST, EAST and SWET, which has lately resulted in me becoming involved in the community-led Let's Test conference on context-driven testing. I'm passionate about both gaining and spreading knowledge about testing and so try to spend as much time as I can advocating a growth mindset among testers.

Markus Gärtner: Out-sourcing of context-driven testing? How do you do that? Do you have to convince clients about your services?

Johan Jonasson: The way you present and provide a context-driven service doesn't really change that much if you do it as outsourcing compared to on-site work. We offer a flexible and adaptable outsourcing solution that is free from pre-packaged "best practices". Instead we look at the specific problem the client needs to solve and we tailor the entire solution to that problem. The goal is to give the client what they need, and figure out what that need is in close collaboration with the client, not sell them a standard "one-size-fits-all" bogus solution. It means doing a bit more work up front, but we believe the payoff for the client is much greater than with non-context-driven outsourcing.

Markus Gärtner: How have you crossed the path of context-driven testing?

Johan Jonasson: I first came into contact with the community in 2007, when I first met James Bach and attended his Rapid Software Testing class. About a year later, I founded House of Test and joined the Association for Software Testing to be able to learn more about context-driven testing. Today I try to help the community grow in Europe, through conferences and workshops and also by helping out with the AST's BBST training program as an assistant instructor.

Markus Gärtner: How do you apply context-driven testing at your workplace?

Johan Jonasson: One of the principles of the context-driven school is that people, working together, are the most important part of any project's context. This is something I always try to emphasize wherever I work. I always go to great lengths trying to promote effective communication and collaboration in the workplace, because I believe that when people stop communicating, it's only a matter of time before the project breaks down. Also, I'm not a big fan of trying to predict the future to any greater extent than maybe the coming few days. Being context-driven is about doing the best we can with what we get, and by working together in a project and staying flexible to change so that we can better counter the unknown unknowns or whatever other curve balls our surroundings choose to throw at us.

Markus Gärtner: How do you promote communication and collaboration? One of the biggest struggles in the testing world I see, is that testers should be kept separated, maybe even locked away from the rest of the development team. What is your answer to such claims?

Johan Jonasson: That's one of the ideas I try to abolish as part of my promoting communication and collaboration. I don't buy the argument about how separation of testers and programmers should help testers do more "bias free" blackbox testing. We have a ton of biases that we carry with us anyway that we need to become aware of and deal with and I don't (personally) see insight into the inner workings of a product to be a very problematic bias, unless you have a strong tendency for confirmation bias to begin with, but then there's your problem right there.

I think that isolating any part of a development team is generally speaking a bad idea. I don't believe that information silos lead to any advantages for anybody in the long run and it certainly impacts the value I can provide as a tester if I'm kept in the dark or separated from programmers, architects or business analysts. Getting to know more about a product by communicating with the rest of the team or with the customer is crucial for me in order to craft a proper testing strategy for myself. Take for example the Heuristic Test Strategy Model which encourages testers to (aside from considering quality criteria) become aware of a wide range of factors in the project environment as well as numerous product elements in order to select appropriate methods, approaches and techniques to test the product both efficiently and effectively. I think that makes much more sense than separating certain people from the information.

Markus Gärtner: What are the challenges you see in Europe's testing culture? What are your replies to these challenges?

Johan Jonasson: I think one of the major challenges is that we've been focusing too long on planning, prediction, measuring, coverage and control as the only way of "assuring" quality. Testers in Europe have also been predisposed to only do "checking" for the longest time, with a high emphasis on confirming existing beliefs and explicit requirements rather than focusing on discovery and experimentation in their testing. In other words, the European testing culture has been dominated by only asking questions like "Does this function do what I expect it to do?" instead of asking more questions like "What happens if I do this?".

Testers have slowly started to come around to a more deliberate way of critiquing the products they test, rather than just verifying that they work as designed, but there's still a long way to go. I think we need to encourage more creativity and curiosity in the way we work, or we'll risk ending up in a situation where we as testers produce no more value than an automated script, and projects where "Black Swans" can roam freely and destroy entire development projects.

One of my replies to these challenges is to encourage human testers to do more heuristic based testing, and work more with high level test ideas rather than detailed test cases. This allows for the occasional serendipitous discovery of impossible to predict bugs which, if left undiscovered, can kill a product in the marketplace.

Markus Gärtner: Consider time-travelling being possible today. What would you change in the history of software testing, if you could?

Johan Jonasson: If time-travelling was possible, I'd probably travel forward and not backwards, because I'd like to see what "comes next" for us all. But if I were to travel back (and if I could convince myself to limit my tinkering to only software testing history…), I'd try to make sure more testers learned about the works of e.g. Jerry Weinberg and Cem Kaner at a much earlier point in time. I'd try to not touch too much else, or I'd probably risk causing too much "positive damage" to the present. Or maybe I'll just add some awareness of the difference between exploring and verifying too, and abolish the idea that testing doesn't require any skill, and… No, that's it. Better to quit while you're ahead. :-)

Markus Gärtner: Imagine the world in 20 years. What has happened to software testing that will make us pursue this craft for the next two decades?

Johan Jonasson: I think testers are heading for a bright future, but we'll lose a few people on the way going there, but that's actually ok. The reason is that so much testing being done today is really fake testing, and that needs to stop. Fake testing can be done in many ways, but it's almost always recognizable by its obvious lack of conscious thought or drive. It's testing done as painting by numbers, going through the motions, all handed down scripts and no creativity. It's what's been called "ceremonious testing" by James Bach, or "the testing theater" by Ben Kelly. I believe we'll see less of this in the future. That probably means we'll see fewer testers, too, because quite frankly, testing requires a growth mindset in order for testers to keep up with the evolution of software development, and unfortunately that doesn't seem to strike a chord with all testers in the industry today.

I'm not saying that all testers need to learn how to code, because I don't think they do. But I'm saying that all testers need to at least read a book on software testing, any book really, or some blogs, or a few articles. And I think they need to share those experiences, and their experience from their day to day work, with other testers and learn from each other. And last but not least, I think this should be the first thing a junior tester gets encouraged to do in their career, not study for a multiple-choice test in order to get yet another piece of paper saying they've passed an exam.

Too few testers today seem eager to learn and develop existing or new skills and so they will probably be left behind as the people who pay for testing services become more informed. Right now, we often seem to be in a situation that Scott Barber has described as "The under-informed, leading the under-trained, to do the irrelevant." and I want to encourage testers to eliminate that as the status quo.

So are the people who pay money for testing services becoming more informed? I have seen some evidence of that, yes, but it's not happening fast enough. That's why I think community driven initiatives in the testing industry, like the peer workshops that we've started to adopt all over Europe, are so incredibly important. The community needs to come together and through discussion and debate forge the tools needed to explain to big businesses why software testing is worth doing well, and to spread that message far and wide. Otherwise, we'll be stuck competing for the spotlight with people advocating for more fake testing that "anybody can do, and that's why it's so cheap". Also, scores of potentially pretty good testers will continue to view testing as nothing more than a stepping stone towards getting a more "qualified" software development gig, and so they never bother to learn what real testing looks like, and they will carefully stay away from anything that might "infect them" with some passion for software testing, which makes it even less likely that they'll stick around and help develop the craft. In 20 years, we will have hopefully broken this cycle, or the cycle will have broken us. Either way, I think it's in our power to decide which future we want to see.

Markus Gärtner: What will happen after Let's Test? What do you plan to take away from it?

Johan Jonasson: After Let's Test 2012 we'll start planning for Let's Test 2013! The response from the testing community has been great and we have high expectations that this will be a really great and unique event that we can continue to build upon and improve for years to come. I'm hoping to take away many great learnings and experiences that are shared by all the awesome testers, both speakers and attendees, who are flying in from all over Europe and the rest of the world that I'll meet at the conference. I'm hoping that people who come to the conference will go home feeling energized and ready to either seek out some of the great local context-driven communities that exist all around Europe, or start new ones where they don't already exist. Finally, I also hope of course that we'll all leave Let's Test having made many new friends that we can continue to share experiences and debate new ideas with even after the conference ends. Another really cool thing with meeting places like this is that you get to meet people that you've maybe previously only known through their Twitter feed or blog, and looking at the list of people who've already registered and the list of speakers, Let's Test will surely give people that opportunity, too.

Markus Gärtner: One final question: If you had known one year ago what you know today, would you still (or again?) organize a conference like Let's Test?

Johan Jonasson: Yes, without a doubt. It's a lot of work for sure, but it's really a privilege to be able to be involved in a thing like this, and to be part of all the discussions and see the positive reactions from the community. It's well worth it.

Markus Gärtner: Thanks for your time, Johan. I look forward to meeting up with you at Let's Test, and to meeting all the other context-driven folks there.

February 12, 2012

Let's meet, let's confer

Here are a bunch of dates on my schedule. I am always pleased to meet new people. So, let's confer.

23rd March: TestBash in Cambridge

1st-28th April: BBST Foundations (online)

16th-17th April: ScanDev in Göteborg

18th April: JAX in Mainz

7th-9th May: Let's Test in Stockholm

10th May: Karlsruher Entwicklertag

23rd-25th May: Testing in Scrum in München, a public three day course that I am offering

3rd-30th June: BBST Bug Advocacy (online)

14th-15th July: Test Coach Camp in San Jose, CA

16th-18th July: CAST 2012 in San Jose, CA

24th-28th July: Rapid Testing Intensive (most probably online)

2nd-4th August: SoCraTes in Rückersbach

10th-27th October: BBST Instructor Course (online)

17th-19th October: Testing in Scrum in Hamburg, a public three day course that I am offering

19th-22nd November: Agile Testing Days in Potsdam

29th November – 1st December: XP Days Germany in Hamburg

Phew. Quite a list. There are a few dates missing, but I will wait to get confirmation on them before making them public.

In between, I hope to see the release of my book "ATDD by Example". I just found out yesterday that Amazon.com put up the publishing date as the 9th of June. But I am a bit skeptical about that.

January 31, 2012

Alternatives to Apprenticeships

Today I came across my review comments for the Apprenticeship Patterns, which I wrote back in 2009. I wrote a blog entry back at that time about My long road. Reflecting on the past three years or so, I noticed something I wanted to write about: alternatives to apprenticeships, most of which I came across at my current employer.

January 29, 2012

Responses from the programmer and tester surveys

A while ago, I called for some participation on the state of our craft. I promised back then to present some intermediate answers in late January. Here they are.

Programmer's survey

Up until now, 183 people have filled out the survey.

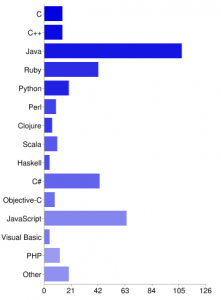

The first question in the programmer's survey is on the particular programming language the programmers used.

The majority of programmers seem to use Java. There are some using JavaScript, C#, and Ruby. The minority of the participants so far use Visual Basic, Clojure, and Haskell. I think this is relevant for the remaining answers – i.e. when it comes to the SOLID principles, which mostly refer to object-oriented programming.

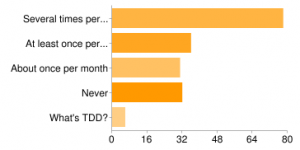

On the usage of TDD, I received some interesting answers so far.

43% use TDD regularly, 20% at least once per week, 17% about once per month. 17% never use TDD, and 3% don't even know what it is. This surprised me, as I thought that fewer people use TDD on a regular basis.

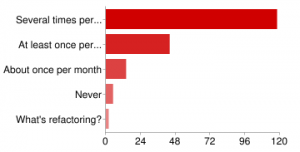

On refactoring the picture seems a bit better.

64% use refactoring several times per day; 24% use it at least once per week, 8% about once per month. 3% never use refactoring, while 1% does not know refactoring at all. More than 90% of the people who took the survey at least know about refactoring, and use it on a regular basis – some more often than others. This didn't really surprise me, as today's IDEs – besides ones like XCode or Visual Studio – ship with support for automated refactorings. Probably that's why so many use them regularly.

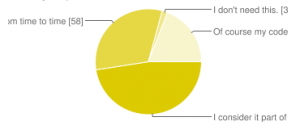

The final question in the programmer's survey referred to the SOLID principles.

48% consider it part of their work, while 32% think about some of the letters from time to time. 25 don't need SOLID, and 19% wondered why I wrote it all uppercase. Again, the share of people who know and use the principles is higher than I had expected before sending out the survey.

Tester's survey

The tester survey was mainly motivated by Lee Copeland's Nine Forgettings. So far 104 people have filled it out.

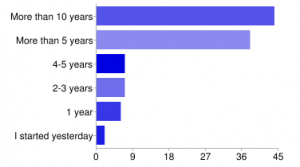

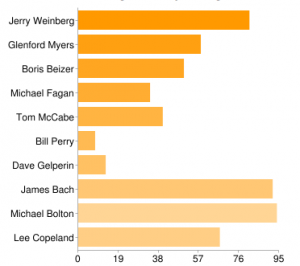

First of all I wanted to know about the experience in the field. I was interested in the experience of the people filling out the survey, and how this might correlate with the different viewpoints.

42% have more than 10 years of experience, 37% more than 5 years, 7% 4-5 years, 7% 2-3 years, and 6% about one year. 2% stated they started with testing just recently. This reflects a huge level of experience.

Next, I asked about some testers from the past. Reflecting on Lee Copeland's presentation, I was interested in how many people actually know these testers.

90% know Michael Bolton, 88% James Bach, 78% Jerry Weinberg. 33% know Michael Fagan, who introduced inspections; 13% know Dave Gelperin, who authored the IEEE-829 standard; and just 8% know Bill Perry, who founded SQE. I think this reflects some worries from Lee, but I was still surprised by the knowledge about some of the past heads in software testing.

On the last book read by the tester, I collected together a bunch of books, which I found helpful in the past.

19% have read some other book, 18% Lessons Learned in Software Testing, 18% Agile Testing, and 13% Perfect Software… and other illusions about testing. On the other answers, one thing struck me. There were some people pointing to books such as Growing Object-Oriented Software Guided by Tests, Working Effectively with Legacy Code, or Continuous Delivery. To my understanding, these are not software testing books. I think this reflects the ambiguity that comes with the term software testing, but that could be just my interpretation.

Another thing struck me. 4% claimed they have never read a book on software testing.

On the book recommendations there were a few interesting points. At least two participants claimed that they read one of the ISTQB books last, and would not recommend any book on software testing. A lot of recommendations go out for Lessons Learned in Software Testing. It is also interesting in my data that most of the participants who don't recommend a book have read books like one of the ISTQB books, The Art of Software Testing, or Managing the Testing Process.

When I try not to overrate the data, I would claim that we do not have the same understanding of what good testing consists of – given the variety of responses I got.

Some thoughts

On the programmer's survey, I think my data is biased. I might have overestimated my reach with the survey here. I am quite sure that these data don't reflect the state of our software development craft.

On the tester's survey, I think my data is also biased. People reading my blog might actually be influenced by similar sources as I am. This means the context-driven and the Agile testing world. That's ok, but I hoped for a variety of responses here as well. I seem to have got such responses by accident, but would like to see more people filling out this form to gain more insights, maybe.

That's it for now. I hope to get some more responses, but don't put too much faith in it.

January 24, 2012

8 things you ought to know if you do not know anything about hiring a software tester

In a recent entry over at 8thLight's blog, Angelique Martin points out 8 things you ought to know if you do not know anything about hiring a software developer. I have been involved with the Software Craftsmanship movement since the early days, and 8thLight played a major role in that movement early on, so this list was compelling to me.

In short, Angelique reminds us to ask potential new employees for the development processes they used, their development practices, – particularly TDD, pair programming, short iterations, and continuous integration – and how they educated themselves and kept their claws sharp. She also points out that she would ask for a proof of their talent, how they estimated, how deadlines are met, and what they can say about the costs involved when developing software.

This list was so compelling to me that I decided to put up a similar list of the things I would look out for when hiring a software tester. I believe there are some unique skills I would look for in a software tester that I would not necessarily look for in a programmer. So, here it is.

I would ask about their approach in different contexts. Agile software development is all around us. But still many companies also use more traditional approaches. In the end, one approach to testing might work in an Agile shop, while it would fail dramatically on a more traditional project. I would look for their adaptability to different contexts, and how they react to different situations. In today's world it's certainly not enough to know about the latest methodology, but also to apply different techniques as unique situations unfold. I would also look out for their tactics in fast-paced Agile iterations, and how they adapt to two week long sprints. What about one week? What about daily deployments?

I would ask about the testing techniques they could show me right now without preparation. My experience in our field is that testers visit a course or two – if they actually can do so. After that they use the 20 percent of testing knowledge that they need in their day job – without exercising their brain cells on the remaining 80 percent. Even worse, there is little interest in growing beyond these practices. If the potential new hire can share a lot of testing techniques without preparation, she certainly uses all of these different techniques regularly.

I would ask about their programming experiences. Fast-paced iterations call for test automation. Even if you don't work in an Agile context, programming knowledge will benefit you in creating different tools that assist your manual tests. In the end, you can talk to programmers on a different level if you can at least read their code. Specifically I would ask the potential new tester if she could pair with me right now on some production code. I would also ask for experiences with TDD, pair programming, pair testing, continuous integration, and different automation tools and programming languages.

I would ask for heuristics they regularly use. Test automation alone is not enough. A good tester also needs to be aware of the oracles and heuristics by which we recognize a problem. A good tester also knows when to aim for more depth in their test sessions, or to aim for overview on a new product. These are covered in many of the mnemonics on software testing around. Maybe they even came up with their own mnemonic on aspects in their testing which they regularly use.

I would ask for a demonstration of their skills. This could mean submitting an example bug report, as Lessons Learned in Software Testing points out. This certainly also means that I hand them some problem to test, and ask them to show me how they would test the particular application. Among the things I would look out for are how they handle traps, and what basic assumptions about testing they follow through. I certainly would use one of the missions over at Testing Challenges.org. I would also keep an eye on how they try to clarify the mission, and how they involve the different stakeholders in their testing.

I would ask for how they work on their knowledge. What has been the latest book on software testing that they read? Which blogs do they read regularly? Did they invent a new testing technique (like the Black Viper testing)? Which conferences did they attend? Which online coaching opportunities did they take? Weekend Testing? Skype coaching? Miagi-Do? BBST? These are all examples of personal dedication to our craft. They also show a passion for life-long learning.

I would ask how they collaborated. This includes collaboration with programmers like pair programming, but also with peers in pair testing sessions. I would also seek to get to know how they worked out specification details and acceptance tests with the customer. What is their approach to collaborating with programmers who don't care at all about testing and testers? How do they show the unique value they bring to the software development process, and how do they get others engaged in what they do? Testers need not be alone in their daily struggle for quality, and certainly shouldn't be the gatekeepers of quality. I would look for a tester who understands that quality in the software is a team effort.

I would ask about their previous professional job. Last year, I attended the second writing-about-testing workshop. During the introduction on the first day, everyone stated how she or he came into testing. Most of the participants didn't pick software testing as their first job. Eventually they found out about their passion for testing by accident. Since then I have made it a habit to interview testers about their path to the profession. Most of them had interesting stories to tell, and even compelling insights about fields of expertise that suit any tester.

January 7, 2012

Tester Challenge Summary

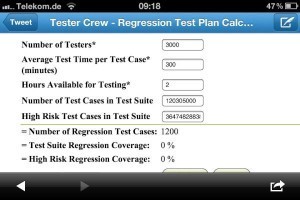

A while ago I put up a challenge for software testers. Here is the mission I used back then:

Product:

Regression Test Calculator

Mission:

Test the regression test calculator for any flaws you can find. You might gain bonus points, if you can find out how the calculation is done. Another set of bonus points if you can come up with a better approach.

In the meantime I found out that Ajay Balamurugadas actually found the link to the website, and sent it to James and Michael. I think he deserves some special kudos for this.

These are the responses I received.

I received some feedback over twitter. Tim Western challenged the purpose of the tool completely. He was not alone with this opinion. Justin Rohrman and Ben Simo felt the same about the tool.

Michael Bolton noticed that "boundary" was misspelled in a link to another tool.

Peter H-L pointed out that you can actually get minus coverage. I wonder what minus coverage should mean. Maybe having tested a product less than not at all.

jss mentioned that I should try one test case that takes one tester two hours. According to the calculator, two testers can execute this test in one hour. I think that calculator didn't know about Brooks' Law very well. Or the calculator also thinks that carrying a baby to term takes two women 4.5 months.

Blog comments

I received four blog comments from my readers. One was actually a pingback from Rasmus Koorits who submitted his report via email. I will get to this in the next section.

Phil Kirkham played a bit with the calculator, but lost interest after getting the first division by zero error.

Jean-Paul Varwijk submitted his report on playing for fifteen minutes with the calculator. He found some interesting problems in just 15 minutes. I asked him for a condensed report. I hope he will reply on that.

Emile Zwiggelaar also went for a 15 minute session, and also found some interesting problems.

For all three reports, go back to the original blog entry, and read their comments. They found some quite interesting stuff.

I received exactly one report by email. It came from Rasmus Koorits, as I mentioned earlier. He had seven pages full of interesting stuff that he found. The report is very thorough. He showed great passion in the craft. I don't dare to put his report up here without having asked him beforehand. Maybe I will add this later. I think he deserves the most kudos for following up, and he did well on that.

Some endnotes

I intentionally didn't mention too many details about the bugs and problems. I hope that some readers will later dive into the topic, and submit a report of their own.

January 4, 2012

Some surveys on the state of our craft

One of my colleagues made a claim yesterday which I would like to put some numbers on. I raised the question on twitter, and received answers that were suspicious of my colleague's numbers. Please forward this survey to anyone you know who is programming: http://www.shino.de/programmer-survey/ It consists of just four questions, so you should be able to answer them in a few minutes.

Over twitter I also received the feedback that things are worse for testers. I would like to put numbers on that as well. Therefore I also put up an equally small survey for testers: http://www.shino.de/tester-survey/ Please forward this survey to anyone in the software business that you know of.

From time to time I will publish some of the results. I aim for end of January for the first set of data.

January 2, 2012

Complexity Thinking and the MOI(J) Model

Last year I started to dive into the theory behind complexity thinking. What has puzzled me ever since is the relationship between complexity thinking and the stuff that I learned from Jerry Weinberg. One sleepless night I got out of bed, and searched my material from the PSL course. There I learned about a model that helps me lead people in different ways. While thinking it over, it occurred to me that complexity thinking is a small subset of the MOI(J) model. Follow me on my mind-journey.

Complexity Thinking

Complexity Thinking revolves around three main concepts. The first is that in each self-organizing system there are several containers which determine the boundaries of smaller groups. Every organizational hierarchy could be thought of as such a container. But there are also others. In a matrix organization, containers also form around the project structures. Testers and programmers are also two different containers.

Containers themselves are defined by significant differences to other containers. There are also insignificant differences between containers, and within containers themselves. Complexity Thinking states that the significant ones are meaningful for the work as a change agent.

The third concept has to do with exchanges. In order to self-organize, people in the system have to exchange their differences between each other. If there are too few exchanges, then the team will not be able to self-organize. The things we strive for as change artists are transformational exchanges, exchanges that yield a different behavior, a transformation.

As a change agent for self-organization this model helps us to find the right balance between containers, significant differences, and transformational exchanges. If people are stuck because they lack information from another department, organize an exchange between the two. If people's self-organization is limited because the containers are not clear, then you shape these, or shape them differently.

MOI(J) model

The MOI(J) model consists of four aspects and two dimensions that leaders should take into account. The letters mean motivation, organization, information, and jiggle. The dimensions consist of observation and action.

In any group of people as a leader you can observe their motivation, how they are organized, and how information flows within the group, and beyond the boundaries. You can also observe whether the team is stuck in some particular sense.

Then you have different options to help the team, and lead them. You can change their motivation by introducing competition or by raising incentives. You can change their organization by promoting one of the team members. You can change their information flow by bringing in a colleague from that other department. Sometimes you can also jiggle the team, and show them new ways to handle the situation.

Now, complexity thinking appears to me to be a subset of the MOI(J) model. Transformational exchanges are changes that I may bring when I change their information flow. Significant differences can be mostly found in the way the team organizes their work, and what motivates them. Only the concept of containers is a bit vague for me in the MOI(J) model. It could have something to do with jiggling those boundaries once the team gets stuck. Yet, I found the MOI(J) model goes well beyond the Complexity Thinking model since it considers more variables for me as a system change agent.

December 30, 2011

A testing challenge

Yesterday James Bach passed around a link to a test case execution time calculator. Besides the fact that it's complete nonsense to use such a thing for professional testing, I started to play around a bit. I ended up with a ridiculous result.

I think there is more to it. So, here is your mission, if you dare to accept it.

Product:

Regression Test Calculator

Mission:

Test the regression test calculator for any flaws you can find. You might gain bonus points, if you can find out how the calculation is done. Another set of bonus points if you can come up with a better approach.