Markus Gärtner's Blog, page 21

April 8, 2012

Let’s Test prequel with Martin Jansson

As a prequel to the Let’s Test conference in May, I interviewed some of the European context-driven testers. Today we have Martin Jansson, one of the test eye bloggers.

Markus Gärtner: Hi Martin, could you please introduce yourself? Who are you? What do you do for work?

Martin Jansson: I am Martin Jansson. I live in Gothenburg with my family and have lived there for the last 20 years. I have a house from 1919 that I train my house-fixing skills on. With two kids I get little time to myself, but I still spend some of that spare time reading, writing and talking about testing. My personal goal is to boost the test community in western Sweden. I am co-founder of The Test Eye together with Rikard Edgren and Henrik Emilsson, who I’ve worked with on and off since 1998. Our initial idea was to keep in touch through a blog, but I guess it grew into something greater than we first intended.

I work for House of Test Consulting as a consultant, doing almost anything related to testing that I am passionate about. I recently switched companies and am now enjoying the freedom to decide where I want to excel in the test domain, as well as influencing where we as a company want to head. I also enjoy working with so many passionate testers. For the last few years I have consulted at a client where I’ve helped them evolve the test organization from traditional testing into a fast-paced testing style. This has involved work in the actual test teams as well as at a managerial level. Working at all levels at the same time is a lot of fun and brings good results.

Markus Gärtner: So, you transformed a whole testing organization on multiple levels. How did you do that? Anything the community can learn from your approach, maybe?

Martin Jansson: I have not transformed anything. I see product development as a social science. It is all about people. The people at my client on all levels are transforming the organization. My role is perhaps to act as a catalyst. Even though I believe that one person can affect a whole organization, it is the people in the organization who make the changes.

When I first started as a consultant at the client, I worked as a line manager for testers and developers and brought my whole line with me to the client. This made it possible to try new ways of working and to show that things could be done differently. It also helped when the client wanted me to be test team lead. Another factor was that the members of my team were excellent testers, so it was easy to show greatness to our client. It helped a lot to have a test project manager who wanted to try new things and was interested in other approaches. During this time the whole organization was starting to change and move in a new direction, so it was easier to maneuver and try out new things.

In times of major organizational change there are lots of opportunities. When many things are changing there is a lot of chaos, so you need to hold on to a few threads of control. Here are a few things that I considered as a tester:

Know your stakeholders, prioritize the right things and deliver what is valuable to them

Do excellent testing; administration comes secondary (unless that is in fact the most important)

Another key success factor, and something I suggest more consultants do, is to work actively with other consultants, even if they are from other companies. By cooperating closely you will help your client better, and you might be able to agree on common goals for what you are trying to achieve. I’ve seen many consultants work in a way that makes them irreplaceable key people in the organization. By doing that you are in a way pushing down the employees so that you can, to some extent, guarantee an extended contract, which I think is wrong. I try to make myself replaceable and instead empower the employees around me so that when I am not there it won’t be noticed. At the moment I am working and coordinating with Henrik Emilsson, who is training one part of the test organization in agile testing, and Steve Öberg, who is coaching and training a different part of the test organization in agile testing. Henrik works at a different company than I do, but Steve and I work together at House of Test in Gothenburg. Together, the three of us can do things that benefit our client more than if we worked on our own.

During the last year I’ve worked as discipline driver in the test management team. This means that I help the organization in all kinds of test-related situations. The tasks are ever changing, bringing new contexts and new problems to solve. For example, in one team, testers were re-running the same test case several times just to show progress by counting test cases. The project management asked for progress and based their figures on the number of test cases run. I was then able to discuss with the project management that they get what they ask for, and that it would be more valuable to ask other types of questions if they were interested in progress and in the perceived quality of the system. By changing the way project management asked questions, the testers changed how they tested and how they reported progress.
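To make the effect concrete, here is a minimal sketch, assuming a hypothetical log of test runs stored as (test case id, outcome) pairs; the names `runs`, `executions`, `distinct_tests` and `reruns` are illustrative and not taken from the client’s tooling. Counting executions rewards re-running the same case, while counting distinct test cases does not.

```python
from collections import Counter

# Hypothetical execution log: (test case id, outcome).
# TC-1 has been re-run several times just to push the count up.
runs = [
    ("TC-1", "pass"),
    ("TC-1", "pass"),
    ("TC-1", "pass"),
    ("TC-1", "pass"),
    ("TC-2", "pass"),
    ("TC-3", "fail"),
]


def executions(log):
    """Progress as the project management measured it: every run counts."""
    return len(log)


def distinct_tests(log):
    """Number of different test cases that were actually exercised."""
    return len({case for case, _ in log})


def reruns(log):
    """Test cases that were executed more than once, with their run counts."""
    counts = Counter(case for case, _ in log)
    return {case: n for case, n in counts.items() if n > 1}


print(executions(runs))      # 6
print(distinct_tests(runs))  # 3
print(reruns(runs))          # {'TC-1': 4}
```

Neither number says much about the perceived quality of the system, which is why changing the questions asked mattered more than changing the counting.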

In big organizations there are so many things going on, and making changes takes time. Nothing is really done just by doing this and that. So when you want to see change, you really need to be patient and appreciate the small things. After a while the small things add up to a turning of the tide.

Markus Gärtner: How have you crossed the path of context-driven testing?

Martin Jansson: Honestly, I cannot remember how it happened. I’d rather say that I crossed paths with different people who had ideas similar to my own. When I worked at Spotfire in the early 2000s, we were continuously investigating new test techniques and methods. I remember finding articles such as The Ongoing Revolution of Software Testing by Cem Kaner, which changed me and my team. Then we found a lot of material from James Bach and other great thinkers. We were not really doing scripted testing; rather, we followed one-liner test ideas and used exploratory testing around each area. I’ve described this as a form of Check-Driven Testing. When we started to read about Exploratory Testing, it was interesting to get a name for what we had partly been doing, even if we were not so structured.

When I joined the context-driven testing mailing list I found even more material and great minds. I have not been involved in the discussions there; we all contribute to a better test community in the ways that fit us best, and a mailing list is not optimal for me. Instead I blog and talk to people.

Markus Gärtner: How do you apply context-driven testing at your workplace?

Martin Jansson: As discipline driver for testing at this client, I influence how testing is done and try to influence where we should go with testing. I like the context-driven testing approach, and it fits well with what we are trying to achieve. In some cases I use the schools of testing to express where we come from and where we are heading. I try to introduce an approach to testing that fits their context, but it will always be affected by what I think and by what my experiences have taught me. For instance, I see the need to handle both testing and checking in an excellent and structured way.

Markus Gärtner: Let me put some context to context-driven testing. Consider a context where your test team consists of educated nurses. The team is applying Scrum with co-located testers on a C# project for the Windows platform. They work on a product for doctor’s offices. Which kinds of testing would you suggest? What are the factors favoring one approach over another?

Martin Jansson: I will express a few of my thoughts. I interpret the context as having a group of people in my team who have the domain knowledge and a good idea of what problem we are trying to solve with the product. I have little experience of the domain, but could bring my knowledge as a generalist tester. I would start by asking what our mission[s] were in this context. I would investigate the team’s immediate needs and what is prioritized most. Together with my testers I would plunge in and do some exploring/testing/learning of what we have right now. I would try to outline and create a model (one to start with) of the product to make it easier to discuss what we test. As soon as I am able to work more long term, I would give an introduction to testing, with some training and exercises to get the test team into the right mindset. I would investigate what other expectations there are on the team, such as information/documentation, that could affect how we work.

It is hard to say what kinds of testing I would suggest. I am not sure what the different kinds of testing are in this context. If you ask whether I would focus on requirements, I would say no, because that is only one of many coverage models. We would set up lists of things we need to check; some might be automatable and some might be used in checklists. We would probably try out some form of session-based testing if that works for the test team. I like to work that way and would see if it is possible and if it provides value to the team.
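A minimal sketch of such a list, assuming a plain Python representation: each item either carries an automated check or is left for a human to work through as part of a checklist. `CheckItem`, `run_checklist`, `max_patient_name_length` and the two sample items are hypothetical and are not part of the project described in the question.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class CheckItem:
    """One thing we need to check; `check` is None when it stays manual."""
    description: str
    check: Optional[Callable[[], bool]] = None


def run_checklist(items: List[CheckItem]) -> Tuple[Dict[str, bool], List[str]]:
    """Run the automatable items and collect the rest for a manual checklist."""
    automated: Dict[str, bool] = {}
    manual: List[str] = []
    for item in items:
        if item.check is None:
            manual.append(item.description)
        else:
            automated[item.description] = item.check()
    return automated, manual


# Hypothetical stand-in for the product, only so the sketch runs.
def max_patient_name_length() -> int:
    return 100


checklist = [
    CheckItem("Patient name field accepts 100 characters",
              lambda: max_patient_name_length() >= 100),
    CheckItem("Appointment overview is readable on the reception screen"),
]

automated, manual = run_checklist(checklist)
print(automated)  # {'Patient name field accepts 100 characters': True}
print(manual)     # ['Appointment overview is readable on the reception screen']
```

Keeping both kinds of item in one list is only one possible design; its main benefit is that it stays visible which checks still need a human.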

I have an exploratory approach to testing. I cannot change that approach or my perception of what testing is and what we could do. Still, if the client wants something that I do not believe in or that I cannot deliver, I will discuss the implications and the possible loss of value for the client. Another thing: I would see the nurses in my team as intelligent people, and I would do all I can to coach them and share my experiences and skills with the team. I know that context changes and that we need to adapt to reality, so I would be open to trying new things as well as using methods/techniques that have been proven to work in their context at some point in the past.

Markus Gärtner: Where do you see the biggest challenges as of today in the European testing community? How can we solve these problems?

Martin Jansson: I’ve blogged about one of the problems, which has to do with how testers are perceived. How do we get out of the rut? Well, as testers we need to aim to get better by learning and excelling in what we do and deliver, we need to work on how and what we communicate, and we need to look into other sciences to become better, to name a few.

There is still a focus on verifying the (written) requirements and just that. Anything outside of them should not be tested, some say. If testing focuses only on this small area, we miss so much. In the training I do, I usually talk about testing outside the map and, lately, about the Universe of behaviors based on Iain McCowatt’s reflections in Spec Checking and Bug Blindness. As a natural consequence you enter the discussion of testing vs. checking. As I see it, we need to do both, but testers should focus on testing, and we should try to automate as much of the checking as possible where that is valuable.

I have worked with a friend of mine who has been a developer for several years. During this time we have talked a lot about testing, and he has even taken the RST course with James Bach. His awareness of testing is great, and according to him he has tasted the sweetness of great collaboration between tester and developer as well as seen what great testing can do. Lately he has been working for a new client with testers from the traditional schools/approaches to testing. He notices a clear difference in what testing delivers to him and in the testers’ ambition about what they could and should do.

Developers should expect greatness from testers. We could be an excellent partner in making great products.

Markus Gärtner: Programmers should expect greatness from testers, you say. What are the benefits testers can bring to your development team? How can we train and coach testers, programmers, and managers about these values?

Martin Jansson: The exploratory approach promotes a tester who is interested in learning and wants to get better. It also implies that the tester can do all parts of testing, not just execute another person’s test instructions. By promoting this view of a tester and by showing (not just talking about) what a great tester can do in a cross-functional team, or in product development in general, we will be able to raise expectations, or perhaps alter them to something that requires and promotes great testing. This could also mean that teams and organizations no longer want low-value testing.

I try to show how you can quickly get started testing in various projects at my client. I do this by taking a tiny testing area and sitting down with a tester I am coaching/training. Based on this (initially) tiny area, I start asking questions, and we discuss and mind map different aspects. I use different heuristics and talk about different coverage models. After a short while we naturally end up with a much bigger area than we initially thought. We consider what would be most valuable to the team and where our focus should be. We then start testing, with the tester driving while I sit in the back coaching/documenting. I continue to identify new questions but let the tester lead the way. We take breaks and check our initial map, using it as an overall report. In this coaching session I try to spend 15 minutes on the initial map, 1 hour on a test session and 15 minutes on a debrief/roundup summarizing the result in the mind map.

Someone used to the traditional way of working is usually amazed at how much we accomplish in such a short time. It is like a demo of how testing could be done, perhaps differently from what some people and organizations are used to.

How do you sell this to the organization? I guess you need to be able to show your testing skills and back them up with theory, and vice versa.

Markus Gärtner: Imagine Let’s Test is over. What has happened that made it a success?

Martin Jansson: When I talk about the conference, I am most thrilled about the idea that we are all co-located at the same spot, and that after we have conferred with the speakers and attendees, many other events take place well into the night. So the conference will be almost round-the-clock. My focus will be on the test lab (or perhaps Let’s TestLab?) together with James Lyndsay. We aim to set up a test lab that will tickle your mind and where we can practice our skills together.

When the conference is over, I hope I’ve met lots of great testers whom I want to work with in the future at possible clients, that I’ve found new techniques for doing better testing, and that I am full of energy and new ideas for future test work.

Markus Gärtner: Tell us more about the Let’s TestLab. As a conference visitor why would I spend time in the lab and miss all those great talks?

Martin Jansson: Glad that you brought that up! The core idea for Let’s TestLab is that we should not compete with the other speakers. There will still be other events taking place during the evening, so attendees can choose what they are interested in. Let’s TestLab starts in the evening and continues into the night. This means that we will be able to have longer sessions, not just between breaks as before. This year James Lyndsay and I will run the test lab together.

We will not have as many laptops available as before, so it would be excellent if conference participants could bring their own laptops and use them in the test lab. We also suggest that speakers back up their claims or theories in the lab after their sessions, and that they coordinate this with either me or James.

We will not have as much focus on sponsors and their tools in Let’s TestLab. Instead there will be an even stronger focus on testing and conferring. Watch the Let’s Test Conference Blog for new information about the test lab.

Markus Gärtner: Imagine time travel were possible now. 30 years from now, what will be different?

Martin Jansson: I assume you mean that only I have the ability to time travel. I cannot predict the future. Still, I see that awareness of testing is growing, and in 30 years it will probably have grown a bit further. But I am still afraid that both futures of Software Testing painted by Rikard Edgren and Michael Bolton are possible. The majority of the test profession is heading for the dark future that Michael talks about. I just hope there are enough of us to promote a future in which the testing craft is seen as something that requires intelligence and skill.

Markus Gärtner: Thanks for your answers. Looking forward to meeting you at the TestLab at Let’s Test.


April 2, 2012

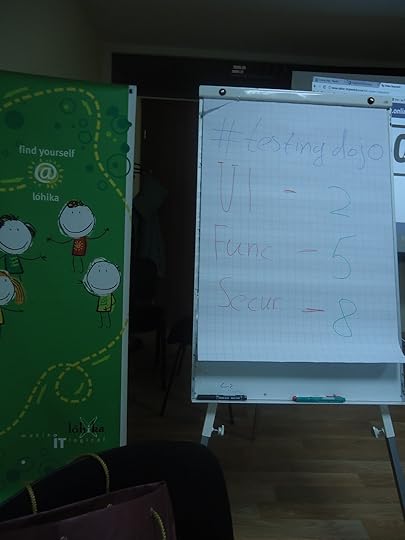

Testing Dojos in Kyiv, Ukraine

This is a guest blog entry from Andrii Dzynia. He contacted me a while ago about Testing Dojos and wanted to run a session in Kyiv, Ukraine, on his own. It seems to me they had a great time. This is a translation by Andrii from the Ukrainian original. You will find the original blog entry at the bottom of this post.

It was great, fun and… well, just cool! Yep, I will write here about the first Testing Dojo in Kyiv, Ukraine.

QA Skills held it, and we were hosted by one of Kyiv’s IT companies, Lohika Systems.

The dojo started with an introduction of the rules and our mission statement. The attendees were going to work with the touring heuristic FCC CUTS VIDS and complete as many of the tours as possible.

Testing Dojo Kyiv – Testing Heuristics

For each bug found, the attendees received points towards winning these prizes.

In 15-minute iterations the teams tested in tours and logged the issues they found. It was hard for everyone to fit testing and bug reporting into this timeframe while still getting the best results.

But everything went fine, and the winner was the Crashers team.

Do you want to know how they did it? These guys changed their tactics every 15 minutes. First they worked in chaos, then they found out that this was a bad deal and started to do a little test design using mind maps. They also experimented with roles and came up with the formula: 2 testers, one observer, one reporter.

Each of the attendees went home with a lot of practical experience of working in a test team. Some of them agreed that working as one team is better than just having a group of people testing separately. Exploratory Testing with a heuristics-based mission helped them focus and spend time only on the required tasks.

We are very thankful to Markus, who answered a lot of questions before we organized this event! And we will repeat this in the near future.

Stay tuned!

Materials

1. Original article

2. About Heuristics from Jonathan Kohl

April 1, 2012

Let’s Test prequel with Alan Richardson

As a prequel to the Let’s Test conference in May, I interviewed some of the European context-driven testers. Today we have Alan Richardson, the evil tester and author of Selenium Simplified.

Markus Gärtner: Hi Alan, could you please introduce yourself? Who are you? What do you do for work?

Alan Richardson: Hi Markus, thanks for the interview.

I have tested software for a long time now. I started as a programmer writing testing tools for a test consultancy, and given the natural evolution in an environment like that, I started testing and consulting through them. Then I went independent and have moved between independent status and permanent employment status a few times.

I have once again adopted independent status. I’ve worked as manager, head of testing, tester, performance tester, automation specialist, test lead, etc. I need to remain hands-on, so I have to keep my programming skills and my technical knowledge up to date, and, most importantly, my testing skills.

In a nutshell: I test stuff and I help people test stuff.

Markus Gärtner: You changed between employment and independent work quite a few times. What do you like about independent work? What do you like about an employment?

Alan Richardson: Employment usually comes with more opportunity for ownership. So I usually get the chance to build the process and the team and have more responsibility for the personal development of the people working there. Independence gives me more freedom and choices, and I gain more time to pursue the side projects on my to-do list.

Markus Gärtner: How have you crossed the path of context-driven testing?

Alan Richardson: I got frustrated with the whole waterfall/structured testing approaches during my early baby tester years and started trying different ways of testing. My experiments required that I balance my demands of personal effectiveness and waste avoidance, against the company demands of following their mandated standard process. I learned early on to “game” my test approach and tailor what I did so that I bent rather than broke the rules. I view these as my first tentative steps towards contextual testing. I didn’t know of the context-driven label at this time, so I thought of myself as some sort of surreptitious efficiency engineer.

And as I went from site to site and role to role, I learned to take more responsibility for my personal test approach within the context of another person’s mandated test process. When I eventually did learn about exploratory testing and context-driven testing, they seemed like natural, well-labeled ways for testers to approach their testing. Also, I had taken many of my personal testing process influences from psychotherapy, so many of the non-testing subjects I studied (cybernetics, systems theory, Virginia Satir, etc.) overlapped with the influences mentioned by the context-driven testing community.

As I progressed in my testing career and when I eventually had control over the test processes we used, I didn’t have to work around the test process anymore. I could tailor it for the context that I perceived around me and I tried to recruit and guide testers accordingly. As my career has moved into more Agile Software Development teams, a context-driven and exploratory test approach has become the expected norm.

Markus Gärtner: What did you learn from cybernetics and systems theory regarding testing? In a nutshell, what is the most useful (for you) concept you ran into?

Alan Richardson: Cybernetics affected me on a very wide and general level in terms of modeling systems and arming me with additional metaphors for approaching my modeling of context.

In terms of a single specific concept, I have taken a lot of value from the notion of Requisite Variety. You can find it in Ross Ashby’s Introduction to Cybernetics and extensively explored in Stafford Beer’s work.

Requisite Variety to me means that to survive in a system you have to have enough flexibility to deal with the inputs that the system you are working within will throw at you. There are pithier statements of Ashby’s Law available, e.g. “only variety can absorb variety”, but we can use normal language to describe this stuff as well, so I also think of it as “you have to be able to handle what life throws at you”.
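For readers who want the formal version, Ashby’s Law is usually stated in terms of variety, the number of distinguishable states. The notation below is the common textbook form rather than anything from the interview: V_D is the variety of disturbances, V_R the variety of responses available to the regulator, and V_O the variety of outcomes that remain.

```latex
V_O \;\ge\; \frac{V_D}{V_R}
\qquad\Longleftrightarrow\qquad
\log V_O \;\ge\; \log V_D - \log V_R
```

As a worked example: if a system can be hit by 12 distinguishable disturbances and the regulator has only 4 distinct responses, at best 12 / 4 = 3 different outcomes remain. The only way to push the outcome variety further down is to increase V_R, which is the “only variety can absorb variety” slogan in numbers.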

As a concept it helps me in a number of ways. Is my process flexible enough? Do I have enough ways of thinking through a problem? Have I modelled the system from enough angles? How else could I respond?

When testing, I use it to ask: have I provided the application with enough variety of input to expose the limits of its ability to respond? It also prompts me to think through the scope of how I deal with the system in different ways.

Coupled with other notions from Cybernetics, e.g. attenuation and amplification, I can expand my use of Requisite Variety and ask: has my process survived not because of Requisite Variety, but because I attenuated the input, i.e. I filtered the input and changed it rather than dealing with it raw? Did my process survive not because it deserved to, but because it cheated and ignored input?
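The attenuation question can be made concrete with a small sketch. It assumes a hypothetical process that only copes with non-negative integers and a filter placed in front of it; `attenuate`, `process`, `survives` and the sample inputs are illustrative names, not part of any real system. The process “survives” everything it is given, but only because the hard cases never reach it.

```python
def attenuate(inputs, accepts):
    """Split raw inputs into those passed on to the process and those filtered out."""
    passed = [x for x in inputs if accepts(x)]
    dropped = [x for x in inputs if not accepts(x)]
    return passed, dropped


def process(value):
    """Hypothetical process that only copes with non-negative integers."""
    if not isinstance(value, int) or value < 0:
        raise ValueError(f"cannot handle {value!r}")
    return value * 2


def survives(inputs):
    """True if the process handles every input without raising."""
    try:
        for x in inputs:
            process(x)
    except ValueError:
        return False
    return True


inputs = [3, 0, -1, "7", None, 12]
passed, dropped = attenuate(inputs, lambda x: isinstance(x, int) and x >= 0)

results = [process(x) for x in passed]
print(results)           # [6, 0, 24]
print(dropped)           # [-1, '7', None]
print(survives(passed))  # True: the attenuated input looks fine
print(survives(inputs))  # False: the raw input breaks the process
```

Reporting `dropped` alongside the results is one simple way to keep the attenuation visible instead of mistaking it for robustness.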

I also apply Requisite Variety to the context, as well as to my dealings with the context, to remind myself that the context can exhibit Requisite Variety in the face of my madness. For example, I could put together a very rigid process that doesn’t handle the inputs of the real world and doesn’t deal with the level of change in the environment, but the context changes to allow my bad process to survive.

I think I see this a lot in human systems. For example, a test process says “we can’t test under these circumstances, we need stable requirements”, and then the software process slows down and creates full requirements specifications that can only change every 6 months, and only with agreed change requests. Then we are seeing a context (the software development process) exhibit greater Requisite Variety than a subsystem (testing), when really the testing subsystem should have been declared non-viable until it exhibits the Requisite Variety required to survive in the context.

Requisite Variety shows up in Systems Thinking texts as well, but I like to get all retro and old-fashioned.

Fundamentally, Requisite Variety reminds me to continually up my game so that I don’t have just enough variety to survive but have more than enough to allow me to succeed.

Markus Gärtner: How do you apply context-driven testing at your workplace?

Alan Richardson: I don’t think I know how not to. I have to take context into account now. I’ve studied too much systems theory and cybernetics, so I tend to model everything as a System. Every role that I do requires me to gain an understanding of the people involved, the tools used, the technology in place, the goals sought, the skill sets and budgets available, the timescales etc. etc.

I assume that if I didn’t take context into account then when I come on to a new site I would whip out the templates I used before, and lay down all the rules that I had built up before or taken from an official standard or book. And despite blogging under the URL of eviltester.com, I’m not Evil enough to do that.

I try and start from nothing, and build up what we need. Clearly I bring in my beliefs and principles, but I make every effort to contextualise every decision that I take.

As an example, in my current role at the time of this interview, I recommended an automation tool that I had used in the past and hadn’t liked. But contextually it seemed like a good fit for my current client, given their goals and situation. Since it seems to fit the current context, I find I currently enjoy working with it (mostly). A side benefit, I think, of making a contextual decision.

Markus Gärtner: You say that with more and more agile projects, a context-driven testing approach has become the norm. Does that mean that I should test in a context-driven way especially on an agile project? Where do the world of agile development and the world of context-driven testing meet?

Alan Richardson: An Agile software process presents a context, like every other process. And I think we should approach software development processes contextually.

Agile provides the healthy challenge of a process under continual adaptation with the participants taking responsibility for the process, continually learning, improving and changing.

If I try to ignore those aspects of the context then I will fail very very quickly. Bringing me back to Requisite Variety, testing has to exhibit contextually appropriate variety.

Yes, I think you should approach an Agile project with a context-driven frame of mind.

Where do they meet? Well, I think of the Agile development world that I visit as a contextually driven world. And if I imagine the context-driven testing world as a separate celestial body, then I imagine that they engage in friendly and copious intergalactic trade with each other, and that the civilisations have probably interbred by now.

Markus Gärtner: Your blog is called “EvilTester”. Do you think testers need to be evil? How evil do you consider yourself personally? Why?

Alan Richardson: Ah ha! I think we should make sure we stick a capital E on the E word. It appears much more impressive that way.

Evil Tester is the cartoon character that I created as a release vent for my project and process frustrations early in my career. I used to scribble little cartoons – some of which I tidied up and put on the blog.

I found it entertaining to embrace the notion that if “system testing is a necessary Evil” then “Evil testing is a necessary system”, and I enjoyed creating a few catchy marketing slogans that empowered me to explore thinking differently.

For myself I think I’m using the E word in the sense of something that could earn the label ‘Evil’ from ‘others’ because of Heretical views, something that questions Dogma, and takes responsibility for its own actions and system of beliefs. But the H word doesn’t have the same impact as the E word.

I also consider it vital that I apply a sense of humour when I review and produce my work. The Evil Tester acts as a convenient Jester and Trickster figure to help me with that.

I don’t think too many testers wear badges on “Be Nice To Systems” day; it certainly isn’t a day that I celebrate. And I do think more testers need to expand their test approaches into areas that they might currently consider unthinkable. I will do what I can to tempt them into doing that.

Markus Gärtner: As you scribbled a few cartoons on your own, did you publish any testing-related cartoons? Maybe there is some competition for Andy Glover, the cartoon tester, coming up from your side?

Alan Richardson: I’ve gone down the self-published route, so currently my blog is my cartoon publishing medium.

For anyone in the test community that wants to communicate visually through cartoons, Andy fills the position of the exemplar. I love the way his work continues to improve. I don’t think I provide competition to Andy. Andy has actually made it easy for me to slack off on the cartoons because I know that someone else is actively serving the test community in that way.

Most of my talks are heavily illustrated with cartoons, but I haven’t published them separately.

Ideally I’d like to try a collaboration with Andy but we haven’t found the time.

Markus Gärtner: Imagine Let’s Test is over. It has been the most awesome conference you have been to – ever. What happened that made it the most awesome conference?

Alan Richardson: It was a whole bunch of things. The quality of the delegates: drawn to the notion of context, they all approached testing uniquely and talked and debated from experience rather than dogma and theory. Talking to people between sessions was an enlightening pleasure; we had to reluctantly stop talking when it came time to participate in a session. The speakers talked from the heart and didn’t trot out the usual nonsense. And what a great line-up! I was really excited to attend before the conference, and looking back I can see I wasn’t excited enough. My only regret was that I couldn’t hear everyone. But the social activities and evening events really helped bring us together, so I managed to catch up in the evening with people I couldn’t hear during the day. And then of course there was that thing, with that guy, where he had all that stuff, I still don’t know how he did that thing with the other thing, amazing, that was so funny.

Markus Gärtner: Imagine time travelling is possible now. How would you test your first time machine? Would you use it yourself? Would you travel to the future or the past?

Alan Richardson: First I’d set it to ‘now’ and see what happens.

If it still worked after doing that, then I’d set it to the past. I learned archery and fencing, and grew my beard and hair, for this very ‘time-travelling will be invented in my lifetime’ contingency. I’d set it to 1784 and see if I could help make Cagliostro’s attempted scam, to part Marie Antoinette from her diamond necklace, a success. At the very least I’d come back with a dashing new wardrobe, ready for my next conference talk.

Markus Gärtner: Thanks for your time. Looking forward to meeting you at Let’s Test in May.

March 27, 2012

18 challenge statements from Santhosh

Santhosh Tuppad sent around a little challenge for testers a few weeks ago. When I read through it, I couldn't resist the temptation to take the challenge, even though I don't intend to win it; I just want to sharpen my saw. Here we go.

What if you click on something (a hyperlink) and, to process it or navigate to that webpage, you need to be signed in? Currently, you are not signed in. Should you be taken to the Sign up form or the Sign in form? What is the better solution that you can provide?

Why would I need to sign in, anyway? Is it for the sake of getting my email address to send some more newsletters to my spam folder? Or is there some sort of cohort metrics running in the background? What other kind of information can I get from the webpage as a first-time visitor? If this takes me longer than 10 seconds to grasp, I am no longer interested.

A better alternative would be a preview, like the tiny things I do with the "Continue reading" link on my blog. If I get the appetizer and have become attracted by it, I might be more willing to join your sign-up service anyway. If I get two links, one to sign up and one to sign in, I will be more pleased later, when I already have an account.

Is using the "Close" naming convention to go back to the homepage good, should it be named "Cancel", or is it not really required because there is an accessible "Home" link? What are your thoughts?

I feel that I don't have enough contextual information to decide what is "good" or what "should" happen. Since I want to give you a satisficing answer to your question, here are some of the questions that I want to ask you:

Is the naming convention followed on the rest of the web site? If not, then my answer does not really help you at all. Maybe fix the inconsistency in your product first.

What kind of sub-dialog is closed when I hit it? If it is a preference screen, then "Close" could indicate saving the changes made to the preferences. Then I would prefer something like "Save" and "Cancel", if the changes are not applied directly (like on a Mac). If I cancel a purchase of a 30,000 USD item, I would like to know as soon as possible that I will actually not purchase the item.

Should Logout be placed on the top right-hand side? What if it is on the top left-hand side, or in the left-hand sidebar in a menu widget with items like "My Profile", "Change Password" etc.? Is it a problem, or what is your thought process?

I don't know. Let's schedule a usability testing session, or at least add some tracking to this function to find out whether people actually find it, or whether logout mostly happens implicitly, when the cookie is deleted after its lifetime expires.

The current "Forgot Password" design asks for the username and a security answer, and then sends a link to the e-mail inbox to set a new password. How does the "security answer" increase the cost of operations? Also, what questions would you frame as security questions?

What is our target audience? In case most of our users are expert IT users, we should come up with other lost-password security questions. If we have to deal with kindergarten kids, we should maybe come up with yet another set of questions that are easier to answer.

Regarding the cost of operations, we will have to deal with people who lost their passwords and also forgot their security answers. That will cause more trouble in our support department. On the other hand, we will eventually end up with less misuse of accounts. But the security question might also distract us from what we actually know: we could rely so heavily on our security question that we don't see how many accounts are actually abused through "security" questions that are too easy to answer. Thereby we may lose many customers, and our own reputation if the press gets hold of it.

If you had to design how "Forgot Password" works, how would you do it and why? You are free to propose several different functional designs.

I would prefer sending out an email to the account owner with a link to reset their password. To avoid abuse of the account, I would also require the old password plus the new password twice for password changes, and I would add extra security for changing your email address.
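A minimal sketch of the emailed reset link, assuming a random single-use token that expires after one hour (the TTL, the in-memory store and the function names are assumptions for illustration). Only a hash of the token is stored, and the new password must be entered twice. The old-password check from the answer belongs to the regular change-password form, since someone resetting a forgotten password does not know the old one.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets
import time

TOKEN_TTL_SECONDS = 3600  # assumption: reset links expire after one hour

# In-memory store for the sketch: username -> (token hash, expiry timestamp).
_pending: dict[str, tuple[str, float]] = {}


def _hash(token: str) -> str:
    # Store only a hash, so a leaked store does not leak usable links.
    return hashlib.sha256(token.encode()).hexdigest()


def start_reset(username: str, now: float | None = None) -> str:
    """Create a single-use token; the caller would email it as a link."""
    now = time.time() if now is None else now
    token = secrets.token_urlsafe(32)
    _pending[username] = (_hash(token), now + TOKEN_TTL_SECONDS)
    return token


def complete_reset(username: str, token: str, new_pw: str, repeat_pw: str,
                   now: float | None = None) -> bool:
    """Accept the new password only for a valid, unexpired, unused token."""
    now = time.time() if now is None else now
    entry = _pending.get(username)
    if entry is None or new_pw != repeat_pw:
        return False
    stored_hash, expires = entry
    # Constant-time comparison avoids leaking the hash through timing.
    if now > expires or not hmac.compare_digest(stored_hash, _hash(token)):
        return False
    del _pending[username]  # single use: the link cannot be replayed
    # A real system would now store a salted password hash for username.
    return True
```

Test ideas fall out of the sketch directly: a wrong token, mismatched passwords, an expired link, and clicking the same link twice should all be rejected.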

Why? As a user of multiple internet systems, I find this way of resetting my accounts' passwords the most comfortable. Sure, I will have to remember to change my email address when one becomes obsolete (e.g. when changing jobs), but in the past few years such changes have become rare for me.

There is neither account lockout policy nor captcha for the login or security answer forms; what kind of problems do you see with the current implementation and what do you propose?

As I am still not sure why we would need a login function at all, I will ask a lot of questions first. The answers might guide me to some of the following answers. If it's an online gaming community where you can chat with others, but nothing serious can get harmed (like stats earned in games), there might be confusion when someone else signs in as me. If it's an online banking system, then someone else might steal my money. If it's an online shopping platform, then someone might order a lot of stuff in my name that will never get shipped to me (though by then I might actually know where to look). If it's my own blog, then too bad, I will start another one. :)

Well, it is about context and there are no best practices in general. What are your thoughts on usage of captcha? Where should they be used and why?

Captchas should be used where useful. If I maintain a web site where visually impaired people exchange their thoughts, then captchas might turn out counterproductive. If my web page theme interferes with the proper display of the captcha, then I should change something: either change the theme, or leave out the captchas. If I want to prevent a flood of users from putting spam on my blog, I should put captchas up on my blog. If captchas interfere with my blog's mobile theme, then I should disable them. (That actually happened on my German blog (and also here); a hint to plug-in developers for WP Touch and Spam Free WordPress!)

If you are the solution architect for a retail website which has to be developed, what kind of questions would you ask about "Scalability" with respect to the "Technology" being used for the website?

How many customers do you expect in the first month after release? How many after one year?

How many items will the customers purchase on average? What about minimal and maximal numbers that could give us hints about the standard deviation?

What's your value and growth hypothesis for your product?

How reliable should the page be?

How much downtime can we tolerate?

What other kind of data do you have?

How do you think "Deactivate Account" should work functionally, keeping in mind the "Usability" and "Security" quality criteria?

"Deactivate Account" should inform me what will happen next and ask for my confirmation of this step. For a limited period I want to be able to re-activate the account in case I accidentally hit the confirm button, but after 30 days (or so) it will be my fault. (That actually happened to my YouTube account a while ago.) I might become frustrated about it, or I might search for another function, but that will be ok if I took the decision to deactivate my account. I know the risk. I can handle it. After my account is lost, I don't want other users to open an account using the same name, though. Otherwise an old link might refer to a porno video, or something like that.
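The three properties in this answer (explicit confirmation, a grace period for undoing an accidental deactivation, and names that stay reserved) can be sketched as a small state model. The class and function names are hypothetical; the 30-day grace period comes from the answer, everything else is an assumption for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

GRACE_PERIOD = timedelta(days=30)  # the "30 days (or so)" from the answer


@dataclass
class Account:
    name: str
    deactivated_at: Optional[datetime] = None

    def deactivate(self, confirmed: bool, now: datetime) -> bool:
        # Usability: nothing happens without an explicit confirmation step.
        if not confirmed:
            return False
        self.deactivated_at = now
        return True

    def reactivate(self, now: datetime) -> bool:
        # An accidental deactivation can be undone only within the grace period.
        if self.deactivated_at is None or now - self.deactivated_at > GRACE_PERIOD:
            return False
        self.deactivated_at = None
        return True


def register(name: str, registry: dict[str, Account]) -> Account:
    # Deactivated accounts are never removed from the registry, so their
    # names stay reserved and old links cannot be taken over by newcomers.
    if name in registry:
        raise ValueError(f"name {name!r} is not available")
    registry[name] = Account(name)
    return registry[name]
```

Keeping deactivated accounts in the registry is the simplest way to reserve their names; a real system might store only a tombstone instead of the whole account.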

For every registration, an e-mail with an activation link is sent. Once this activation link is used, the account is activated and a "Welcome E-mail" is sent to the end user's e-mail inbox. Now, list test ideas covering cases that could result in spamming if they are not tested.

For every registration? What about emails that are already in the system? What about constructed emails like abc+test+test+test@gmail.com, if abc@gmail.com is already in the system? What happens if already activated accounts don't get a new mail, but I use the same email twice for registration without activating it? What happens if I activate an already activated account accidentally by clicking the link twice? Are invalid email addresses also handled?
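Two of these test ideas translate directly into code that a test could probe: detecting constructed duplicates of an existing address, and making activation idempotent so a double click does not send a second welcome mail. This is a minimal sketch, not a recommended policy. It assumes Gmail-style rules (dots and anything after `+` in the local part are ignored) and a hypothetical `Registry` that records outgoing mails instead of sending them. Rejecting a repeated registration without resending the activation mail is one possible choice among several.

```python
from __future__ import annotations


def normalize_email(address: str) -> str:
    """Canonical key for duplicate detection.

    Assumption: Gmail ignores dots and anything after '+' in the local
    part; other providers are only lower-cased here, which is itself a
    simplification (local parts may be case-sensitive in general).
    """
    local, _, domain = address.strip().lower().rpartition("@")
    if domain in {"gmail.com", "googlemail.com"}:
        local = local.split("+", 1)[0].replace(".", "")
        domain = "gmail.com"
    return f"{local}@{domain}"


class Registry:
    """Hypothetical registration service that records mails it would send."""

    def __init__(self) -> None:
        self.activated: dict[str, bool] = {}  # canonical email -> activated?
        self.outbox: list[tuple[str, str]] = []  # (recipient, mail kind)

    def register(self, address: str) -> bool:
        if address.count("@") != 1:
            return False  # crude validity check, enough for the sketch
        key = normalize_email(address)
        if key in self.activated:
            return False  # duplicate: no second activation mail
        self.activated[key] = False
        self.outbox.append((address, "activation"))
        return True

    def activate(self, address: str) -> None:
        key = normalize_email(address)
        # Clicking the link twice must not send a second welcome mail.
        if self.activated.get(key) is False:
            self.activated[key] = True
            self.outbox.append((address, "welcome"))
```

Counting entries in `outbox` after each scenario is the kind of check that would reveal spamming.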

In what different ways can you use the "Tamper Data" add-on for the "Mozilla Firefox" web browser? If you have not used it to date, then how about exploring and using it; then you can share your experience here.

Looking at the plugin page, I saw that I can modify HTTP requests with it. I can use the plugin for analyzing vulnerabilities in web forms and HTTP/HTTPS pages in general. I can explore whether a particular responder also responds to different requests, thereby exposing critical functionality to URL hackers. I don't want to start Firefox right now to explore the plugin, though. :)

The application, a web application, launches a month from now, and management has decided not to test for "Usability", or there are no testers in the team who can perform it. What is your take on this?

I inform management about the risks I see in this decision. If there are none, I will probably not say a word. If I am deeply concerned, I will work hard on delivering my viewpoint, but avoid "educating" management too deeply about their decision. I will make my choice transparent, though, and refuse any "who tested this piece of crap?" questions later.

Share your experience of a case where the developer did not accept a security vulnerability and you did great bug advocacy to prove that it was a bug, and it was finally fixed. Even if it was not fixed, please let me know what the bug was and how you did bug advocacy, without revealing the application / company details.

I once worked on a use case where money was spread from one parent account to a bunch of other accounts. There were multiple business rules involved in this spread. We worked with test automation to overcome the simpler business rules. We had expressed our test data in a readable format. From this format we extracted the vulnerabilities for our bug reports.

One example had to do with two different account types. There was account type ABC and type DEF. DEF should get money first, but only get its special services activated if there was sufficient money left. We constructed a test case in FitNesse that could exemplify all the conditions easily. We noted the preconditions for the test (account of type DEF, account of type ABC, parent account with some money), then executed some function (spread money up to 1234 USD), and checked the postconditions (money in the parent account, money in ABC and DEF, states of the accounts, and the special services). We also documented our expectations in between the raw test data. With the examples provided, convincing our programmers and chief programmers to fix bugs as they occurred was very easy.
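The structure of such a test (preconditions, one action, expected postconditions, all in a readable table) can be mimicked in plain Python. This is a hedged analogue, not FitNesse's API: FitNesse keeps tables on wiki pages backed by fixture code. The post does not spell out the business rules, so the `spread` function, the `SERVICE_FEE` threshold and every number in the table are hypothetical. Only "DEF is funded first", "services need sufficient money left" and the 1234 USD limit come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass

SERVICE_FEE = 100  # hypothetical: money that must remain for DEF's services


@dataclass
class Result:
    parent: int
    abc: int
    def_: int  # trailing underscore because "def" is a Python keyword
    services_active: bool


def spread(parent: int, limit: int, def_need: int, abc_need: int) -> Result:
    """Hypothetical rule set: spread up to `limit`, fund DEF first, then ABC;
    DEF's special services switch on only if DEF was fully funded and at
    least SERVICE_FEE is left in the parent account afterwards."""
    budget = min(parent, limit)
    to_def = min(def_need, budget)
    to_abc = min(abc_need, budget - to_def)
    left = parent - to_def - to_abc
    services = to_def == def_need and left >= SERVICE_FEE
    return Result(left, to_abc, to_def, services)


# Table-driven examples: preconditions | action | expected postconditions.
EXAMPLES = [
    # parent, limit, def_need, abc_need -> parent_after, abc, def, services
    (2000, 1234, 500, 500, 1000, 500, 500, True),
    (1000, 1234, 500, 450, 50, 450, 500, False),
    (300, 1234, 500, 500, 0, 0, 300, False),
    (5000, 1234, 1000, 1000, 3766, 234, 1000, True),
]

for parent, limit, d, a, *expected in EXAMPLES:
    got = spread(parent, limit, d, a)
    assert got == Result(*expected), f"row {parent, limit, d, a}: {got}"
```

Each row reads like a small story a programmer can verify by hand, which is what made the bug reports easy to accept.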

What do you have in your tester's toolkit? Name at least 10 tools or utilities. Please do not list things like QTP, LoadRunner, SilkTest, and such, but rather something you discovered on your own or through a colleague (example: Process Explorer from SysInternals). If you can also share how you use them, that would be fantastic.

My brain – always on

My hands – always impatient to get on to the next test

My feet – always on the move to the programmer with my latest bug findings

My mouth – to provide timely and critical information about the project, and help others with their testing struggles

The Needle Eye Pattern – to help decouple my test automation approach from the actual product, so that I can unit test my own code

Google – when I am out of test ideas

Skype – when I am desperate about my testing strategy, I reach out to others who help me jiggle through it

Yammer – for communicating with colleagues how they solved a problem that seems like mine

Telephone – to reach out for more direct feedback from some of my peers

My running shoes – when things get really stuck, a good one hour run helps me overcome my thinking impediments

Let us say there is a commenting feature for a blog post, and there are currently 100 comments. How would you load and render the comments: one by one, or all 100 at once? Justify your answer.

I would asynchronously pre-fetch some of the data and load, say, 20 comments in the first batch. After that I would dynamically add the next comments in chunks. There could be a problem with threads while loading, but I will deal with that problem when we encounter it.

Have you ever done check automation using open-source tools? How did you identify the checks and what value did you add by automating them? Explain.

I have used FitNesse, Cucumber, Cuke4Duke, Robot Framework, JBehave, JUnit, and Concordion. I identified the checks before developing the story. Through the discussions I had with the programmers and the Product Owners, we transformed our absent tacit knowledge about the product and the domain into a more ubiquitous tacit knowledge; in the long run we at times even gained specialist tacit knowledge in our domain. See Harry Collins's Tacit and Explicit Knowledge for a more in-depth discussion of these terms.

At times, I also had to enrich the already existing examples. I used my background in software testing with decision tables, domain testing, and the like to come up with new risks and new test (sorry, check) ideas. That way I extended our check base.

What kind of information do you gather before starting to test a piece of software? (Example: the purpose of the application)

I work out my mission together with my stakeholder and try to find out what sort of information will be interesting. I also consult with the developer about potential risks. Then I usually start a brief exploration of the application, just to get an overview. After that, in less than half a day, I will have identified what I should spend my time testing and which problems I have already encountered. Even for this, I use session-based exploration.

How do you achieve data coverage (input coverage) for a specific form with text fields like mobile number, date of birth, etc.? There are so many character sets; how do you achieve coverage? You could share your past experience. If you have none, you can talk about how it could be done.

I identify the domain rules for that input field. Then I come up with a set of positive test cases. After that I try to break that behavior and see what happens. That worked reasonably well in the ParkCalc challenge a while ago: after I saw that I could enter numbers in scientific notation into the date and time fields, I could generate parking costs in the range of 5 billion or so. After that I might also be tempted to try things like SQL injection, and so on. When I run out of test ideas, I take a final look at the Test Heuristics Cheat Sheet from Elisabeth Hendrickson and see whether I left anything crucial out of my approach.

March 26, 2012

RESTful CI Integration with FitNesse

FitNesse offers RESTful command line services. In the past week I have worked on a solution to integrate these command line options into a Jenkins environment with the FitNesse plug-in. It turns out that this didn't work as smoothly as I expected. I needed some special shell-fu to get the plug-in and the most recent version of FitNesse working together. Here is how I did it, in the hope that someone else will find my solution useful.

The plug-in expects a test result from FitNesse in XML format, which should also contain the HTML portions of the tests. The reporting plug-in uses the HTML information to report on the failed tests, what went wrong, and what went right.

RESTful calls

The RESTful services in FitNesse can put out all the information the Jenkins plug-in needs. For the RESTful service, we need to append the option format=xml to print the test results in XML format.

There is also an undocumented switch to include the HTML information from the actual tests. The option is called includehtml; with it, FitNesse appends the HTML output from each test to the resulting XML file.

To execute the test suite ExampleTestSuite we can run java -jar fitnesse.jar -p 12345 -c "ExampleTestSuite?suite&format=xml&includehtml". Unfortunately this prints its output to standard out, and that output also contains FitNesse's startup messages and so on. So just putting this command into a shell-execute step in Jenkins will not suffice.

AWK to the rescue

Also, if we simply write the output of this command to a file and try to read it in with the plug-in, we will fail, since the file is not a valid XML file for the FitNesse Jenkins plug-in.

On a Linux box, you can use awk to strip out the necessary information from the FitNesse output. We instruct awk to print everything from the opening <?xml declaration through the closing </testResults> tag with the following snippet:

awk '/<\?xml/,/<\/testResults>/ {print}'
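To see what the awk range pattern does without a running FitNesse, the following sketch pipes a made-up stand-in for the console output through the filter. The bracketed noise lines are placeholders, not real FitNesse messages, and the XML is a trimmed illustration rather than the full report format.

```shell
#!/bin/sh
# Stand-in for FitNesse's console output. The bracketed lines are placeholders,
# not actual FitNesse messages; only the XML in the middle matters here.
fake_fitnesse_output() {
  cat <<'EOF'
[startup message placeholder]
[another status line placeholder]
<?xml version="1.0"?>
<testResults>
  <rootPath>ExampleTestSuite</rootPath>
</testResults>
[shutdown message placeholder]
EOF
}

# A range pattern prints every line from the first line matching the start
# regex up to and including the next line matching the end regex.
fake_fitnesse_output | awk '/<\?xml/,/<\/testResults>/ {print}' > fitnesse_results.xml
cat fitnesse_results.xml
```

Only the four XML lines end up in fitnesse_results.xml; everything before the declaration and after the closing tag is dropped.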

Putting it all together

With a pipe and output redirection we can create a file that the Jenkins plug-in can process. Using the example from above and redirecting the results to fitnesse_results.xml, we get the following command:

java -jar fitnesse.jar -p 12345 -c "ExampleTestSuite?suite&format=xml&includehtml" | awk '/<\?xml/,/<\/testResults>/ {print}' > fitnesse_results.xml
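One possible way to wrap this in a Jenkins "Execute shell" step is sketched below, under the assumption that you want the build to fail early when no usable report was written. The function names run_fitnesse_suite and results_look_complete are hypothetical; the port, suite name, and result file follow the example in this post.

```shell
#!/bin/sh
# Sketch of a Jenkins "Execute shell" step. Function names are hypothetical;
# port, suite name and result file follow the example in the post.
RESULTS=fitnesse_results.xml

run_fitnesse_suite() {
  java -jar fitnesse.jar -p 12345 \
    -c "ExampleTestSuite?suite&format=xml&includehtml" \
    | awk '/<\?xml/,/<\/testResults>/ {print}' > "$RESULTS"
}

# Without pipefail, a pipeline reports the exit status of its last command
# (awk here), so a run that never printed a report can still "succeed" and
# leave an empty file. Check that both ends of the report are present.
results_look_complete() {
  grep -q '<?xml' "$1" && grep -q '</testResults>' "$1"
}
```

In the Jenkins step you would then call run_fitnesse_suite followed by results_look_complete "$RESULTS" || exit 1, so that a missing report fails the build with a clear reason instead of surfacing later as a confusing plug-in error.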

This solution has the added advantage that you can add excludeFilters to the call of the FitNesse suite. That way you can organize your tests into a regression set with one particular tag and the tests for the current sprint with another tag. You can then switch easily between them by varying the excluded tag for each suite. You can also use inclusive filters and take advantage of the remaining filters available for the suite service. Please consult the FitNesse documentation on that.
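The tag switch could be scripted with a small helper like the one below. This is a sketch under assumptions: the helper name suite_query and the tags regression and currentSprint are hypothetical, and the parameter name excludeSuiteFilter is my recollection of FitNesse's suite filter option, so check it against the documentation of your FitNesse version.

```shell
#!/bin/sh
# Hypothetical helper: build the -c argument for one suite run that skips every
# test carrying the given tag. The parameter name excludeSuiteFilter is an
# assumption; verify it against your FitNesse version's suite documentation.
suite_query() {
  suite=$1
  excluded_tag=$2
  printf '%s?suite&format=xml&includehtml&excludeSuiteFilter=%s\n' "$suite" "$excluded_tag"
}

# Regression build: leave out the tests tagged for the current sprint.
suite_query ExampleTestSuite currentSprint
# Sprint build: leave out the regression tests.
suite_query ExampleTestSuite regression
```

The result can be passed straight to FitNesse, for example java -jar fitnesse.jar -p 12345 -c "$(suite_query ExampleTestSuite currentSprint)", followed by the same awk filter and redirection as before.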

Outlook

This approach does not work on all Windows boxes. I hope I will be able to provide a more convenient function in the next version of FitNesse, if I can find some time to work on a feature that provides this sort of behavior by default. Another way around this problem could be an adaptation of the Jenkins plug-in, but I don't know who wrote it or how to contact them. For the time being, I hope this description helps some folks.

March 25, 2012

Let’s Test prequel with Oliver Vilson

As a prequel to the Let’s Test conference in May, I interviewed some of the European context-driven testers. Today we have Oliver Vilson, a context-driven tester from Estonia.

Markus Gärtner: Hi Oliver, could you please introduce yourself? Who are you? What do you do for work?

Oliver Vilson: Hi Markus. Of course I can introduce myself. I’m an Estonian tester, team lead and learning coach (or coaching learner). For work I test as much as I can, lead a small context-driven testing team, coach my team and teach different people as much as I know. Besides more casual things I’m currently working on building up a local community of context-driven testers through tester meetups, peer conferences & Testers Tower. I am talking about testing to as many people as are willing to listen to me and discuss with me.

Markus Gärtner: You are setting up a testing community in Estonia. What do you do to grow that community, and what was the state of the art of software testing in Estonia before you started? What does it look like today?

Oliver Vilson: Since a community is formed by more than one person, I can’t take full credit for being the one setting it up. It was still a team effort. I see myself more as a catalyst connecting people with ideas and giving the additional positive push required to start the motion.

Even though there is currently also an official board of testing in Estonia, we are trying to form a community of people who argue, discuss, challenge each other, and share experiences in more informal ways. We (at least try to) grow the community through various events: we have just started our monthly meet-ups, we hold 3-4 Test Camps per year (similar to weekend testing, but we spend a full day testing real applications, with pizza & coke), peer conferences known as PESTs, and casual meetings whenever someone needs a little help, wants to discuss an idea, or just wants to share war stories over a beer or two.

Before we initiated this community I didn’t sense much collaboration or communication between testers. Testers were viewed as programmers who couldn’t write code, or as people who weren’t experienced enough to do analysis or project management, and sadly testers came to share that attitude as well. Being a tester wasn’t something to be proud of, so testers used to keep to themselves. Testers within one company hardly collaborated even amongst themselves. Even though the local official board of testers tried to bring them together, I sensed it wasn’t enough, for various reasons.

Now I feel that things are changing. Being a tester doesn’t mean that you are a failed developer; it means you provide a different kind of value to your projects. At least some companies have started to take testing seriously as a profession, and I see that attitude spreading. I also feel that the community is almost taking on a life of its own. People are showing initiative instead of waiting to be dragged along and told what to do. Another local CDT community speaker launched the first episode of a live broadcast series called Testers Tower live, which I think has great potential. I even feel that I don’t have to be as engaged as I was two years ago, when the current core members of the community first met. I just need to nudge the progress regularly to avoid overly large gaps between events. Some companies have even started forming their own internal communities of testers, which is great.

Markus Gärtner: How have you crossed the path of context-driven testing?

Oliver Vilson: I think our paths started to cross before I actually understood it. I was assigned to test a loan management solution built on the MS CRM 3.0 platform, and I started asking questions like why I should write test cases for every single validation that is done by the platform itself. Shouldn’t it be enough to verify once (or twice) that the platform validates the input correctly, and then spend more time on more important areas? "Why, why, why" were my main questions, even though at the beginning I wasn’t willing to voice them much, being a rookie in the industry. I wanted to understand why programmers shouldn’t test their own code, why project managers find it hard to understand testing, why some things work on some projects and fail on others, and so on. However, the breakthrough in my (at least partial) understanding came after meeting Michael Bolton in 2008. After those long talks I started understanding more of the different whys and hows, and I wanted to learn more.

Markus Gärtner: What do you do differently with all those “Why” questions since you met Michael in 2008? I assume you still challenge some underlying assumptions in your projects. Do you do that differently since then?

Oliver Vilson: After meeting Michael I understood that I’m not weird for asking all those “why” questions; that it’s normal and actually should be done. I started to focus more on the “why” questions themselves, for example trying to find out why I was asking some questions and not others, and how to explain the reasoning behind my questions. I was also more willing to speak out about my doubts and questions. The boost in self-confidence and motivation was really important.

Of course we still face loads and loads of assumptions in projects, but now I at least try to recognize them and make them visible. Even if the assumptions are considered as-designed and the questions irrelevant, then at least they are out in the open and I learn more about the project’s context – what is relevant to whom and why.

Markus Gärtner: How do you apply context-driven testing at your workplace?

Oliver Vilson: This is both the hardest and the easiest question so far. If I focus on the projects we do, most of our work is highly context-driven to meet each project’s specific needs. Since we offer testing as a service, our team is usually involved during the most critical phases of various projects for various customers, and thus we must be able to change our approach rapidly to provide the most relevant information about the project at any given time to anyone who is interested. If I focus on how I apply it to everything else, then it’s more of a team effort. We create challenges for each other and try to learn from them to be better tomorrow than we are today. We try to find new ways every day to push ourselves further and further.

Markus Gärtner: Do you have some examples of the challenges that you present each other?