David Borish's Blog, page 8

December 2, 2024

NEW RELEASE "AI 2024: Trends, Technologies, and Transformations Volume 2"

I'm excited to announce the launch of my second book this year, "AI 2024: Trends, Technologies, and Transformations Volume 2." When I began compiling research for this volume, reviewing my articles from the last six months, I discovered many were already outdated – a testament to the incredible velocity of AI advancement. While Volume 1 was released exclusively as an eBook, I'm pleased to offer Volume 2 in both digital and paperback formats. As a token of gratitude to my loyal readers, I'm making the eBook available free of charge for the first week. Click here to claim your complimentary copy.

Below is the introduction to AI 2024 Volume 2, which sets the stage for exploring the remarkable developments that have emerged since the publication of Volume 1 in April.

Introduction: The AI Transformation Continues

In the brief span since the publication of "AI 2024: Trends, Technologies, and Transformations," in April 2024, the pace of artificial intelligence development has exceeded even the most optimistic predictions. What began as a transformative wave has become a tsunami of innovation, fundamentally reshaping our understanding of what's possible in the realm of artificial intelligence.

The quest to create artificial intelligence that mimics the human brain has been a long and fascinating journey. When Warren McCulloch and Walter Pitts introduced the concept of a "neural network" in their 1943 paper, "A Logical Calculus of the Ideas Immanent in Nervous Activity," they laid the foundation for what would become modern AI. However, it took nearly eight decades for this vision to begin realizing its full potential.

The acceleration we're witnessing today validates Ray Kurzweil's Law of Accelerating Returns in dramatic fashion. Each breakthrough seems to arrive more quickly than the last, with capabilities doubling at an increasingly rapid pace. What once took years now happens in months or even weeks. The development of advanced language models, image generation systems, and autonomous agents has created a cascade of innovation that shows no signs of slowing.

Consider the trajectory: In late 2022, ChatGPT, built on GPT-3.5, demonstrated unprecedented conversational ability. By mid-2024, we witnessed AI systems achieving silver medal standards at the International Mathematical Olympiad, creating coherent long-form videos from text descriptions, and even helping to decode the language of animals. Each advancement builds upon previous innovations in ways that multiply rather than merely add to our capabilities.

The first months of 2024 brought breakthroughs that would have seemed impossible just a year ago. OpenAI's Sora marked a quantum leap in video generation, while Google DeepMind achieved standout performance on scientific reasoning tasks. China's rapid advancement in AI capabilities, exemplified by Alibaba's Qwen2-VL surpassing established benchmarks, has reshaped the global technological landscape.

In healthcare, AI systems have moved beyond simple diagnosis to begin tackling complex medical challenges, from drug discovery to personalized treatment planning. The integration of AI into surgical robotics has achieved new levels of precision, while AI-powered diagnostic tools have demonstrated accuracy rates that sometimes exceed human specialists.

The democratization of AI tools has accelerated dramatically. What once required massive computing resources and specialized expertise is now accessible to individuals and small businesses. This democratization is not just about access to technology – it's about the ability to create, innovate, and solve problems in ways previously reserved for large organizations with substantial resources.

As we venture deeper into this AI-enabled future, the challenges and opportunities before us take on new dimensions. The question is no longer whether AI will transform our world, but how we can ensure this transformation serves humanity's best interests. This requires us to navigate complex ethical considerations, address issues of bias and fairness, and ensure that the benefits of AI advancement are distributed equitably.

The integration of AI into every aspect of human endeavor demands new frameworks for understanding and managing these technologies. We must balance the drive for innovation with the need for safety and responsibility. The potential benefits are enormous, from solving climate change to extending human life spans, but so too are the risks if we fail to manage this transition wisely.

This volume builds upon the foundation laid in "AI 2024: Trends, Technologies, and Transformations," exploring not just the technological advances but their deeper implications for human society. We stand at a crucial juncture in human history, where the decisions we make about AI development and deployment will shape the future of human civilization.

The chapters that follow examine these developments in detail, from the evolution of AI intelligence to its impact on healthcare, scientific discovery, and human experience. We explore how AI is transforming creative expression, reshaping work and industry, and changing our approach to environmental challenges. Throughout, we consider both the technical achievements and their broader implications for human society.

As we navigate this rapidly evolving landscape, one thing becomes clear: The future belongs to those who learn how to dance with the machines, not to those who fear them. The challenge before us is not just to develop more powerful AI systems, but to ensure they serve human flourishing and enhance rather than diminish our essential humanity.

This book serves as both a guide to current developments and a framework for understanding what lies ahead. By examining the trajectory of AI advancement and its implications across various domains, we aim to provide readers with the insights needed to navigate and shape this transformative era in human history.

November 28, 2024

The Ancient Roots of Thank You: Tracing Gratitude Through Time

As we gather for Thanksgiving, I find myself reflecting on my own 20-year practice of daily gratitude meditation. This simple habit has profoundly shaped my perspective, reinforcing what research increasingly shows - that cultivating gratitude is one of the most powerful ways to enhance our wellbeing and deepen our connections with others.

When was the last time you said "thank you"? This simple phrase, spoken countless times daily across cultures, points to something fascinating about human nature - our capacity for gratitude. Recent research suggests this fundamental emotion has deeper evolutionary roots than previously understood, potentially stretching back millions of years to our primate ancestors.

Scientists studying gratitude's origins have discovered compelling evidence in our closest animal relatives. Capuchin monkeys, who last shared a common ancestor with humans around 35 million years ago, demonstrate behaviors remarkably similar to human gratitude. In controlled studies, these primates show increased generosity toward individuals who have helped them before, mirroring how humans express appreciation through reciprocal acts.

Chimpanzees also exhibit gratitude-like behaviors, particularly in food-sharing scenarios. Research shows they preferentially assist those who have aided them in the past - suggesting an emotional system that, like human gratitude, helps build and maintain social bonds.

This evolutionary perspective illuminates why gratitude became such a central feature of human societies. Ancient philosophers recognized its importance, with Cicero declaring it "the parent of all virtues." Religious traditions worldwide developed practices centered on giving thanks, from daily Jewish blessings to Buddhist meditation practices.

The scientific understanding of gratitude's evolution gained momentum when Robert Trivers introduced his theory of reciprocal altruism in 1971. He proposed that gratitude evolved as an emotion regulating our response to others' helpful acts, motivating us to return kindness and strengthen social connections.

Modern research continues revealing gratitude's deep imprint on human psychology. Studies show grateful people tend to be happier, healthier, and more resilient - benefits that may help explain why this emotion has persisted throughout human evolution.

Looking ahead, understanding gratitude's evolutionary origins could provide insights into fostering more cooperative, compassionate societies. While we may be the only species that can say "thank you," the roots of this powerful emotion appear to run deep in our evolutionary past.

As we continue uncovering the ancient origins of gratitude, we gain a richer appreciation for a capacity that shapes our relationships and societies. Perhaps that simple "thank you" carries more evolutionary wisdom than we realized.

November 27, 2024

AI in the War Room: Reshaping National Security Decisions

Picture this: In a tense National Security Council meeting during an international crisis, an AI system sits alongside human advisers, ready to offer analysis and recommendations. While this scenario might sound like science fiction, it's increasingly possible as artificial intelligence capabilities advance. However, new research suggests AI's role in national security decision-making is more nuanced than many expect.

Contrary to popular belief, AI might actually slow down critical decision-making processes during international crises. While these systems can process vast amounts of data quickly, they generate additional information that leaders must verify and interpret. In a hypothetical Taiwan crisis scenario, policymakers needed time to understand why an AI system made specific recommendations before trusting its guidance, effectively adding another voice to an already complex discussion.

The technology's impact on group dynamics presents another paradox. AI systems could help prevent groupthink by challenging assumptions and offering alternative perspectives. However, they might inadvertently encourage it if decision-makers place too much faith in the technology's capabilities. As one expert noted, having an AI system at the table could be like having Henry Kissinger present – its perceived authority might discourage dissenting views.

Bureaucratic dynamics add another layer of complexity. The agencies developing and controlling AI systems could gain additional influence in the decision-making process. With the Department of Defense likely to develop the most advanced systems due to its substantial resources, military perspectives might carry even more weight during crises.

Perhaps most concerning is AI's potential effect on how nations interpret each other's actions during tense situations. If one country believes its adversary has integrated AI deeply into its decision-making process, it might interpret aggressive actions as intentional rather than considering potential technical malfunctions or errors. This misconception could increase the risk of unintended escalation.

Training and experience emerge as critical factors in determining whether AI helps or hinders crisis management. Decision-makers need hands-on experience with these systems before crises occur, understanding both their capabilities and limitations. This preparation could help leaders leverage AI's benefits while avoiding its pitfalls during time-sensitive situations.

The findings suggest that establishing international norms and governance frameworks for AI in national security becomes increasingly important. While the U.S. has taken initial steps through policy guidance and discussions with allies, meaningful progress will require engagement with potential adversaries, particularly China.

As AI systems become more sophisticated, their integration into national security decision-making appears inevitable. However, success will depend not on blind adoption but on thoughtful implementation that maintains human judgment while leveraging technological capabilities. The goal isn't to replace human decision-makers but to enhance their ability to navigate complex international crises effectively.

November 26, 2024

NASA's Earth Copilot: AI Opens New Windows into Space-Based Data

NASA has unveiled an innovative AI-powered tool that promises to transform how people interact with Earth Science data. Through a collaboration with Microsoft, the space agency has developed Earth Copilot, a sophisticated AI assistant that makes complex satellite data accessible to everyone from scientists to educators.

Every day, NASA's satellites gather crucial information about our planet, capturing everything from wildfire patterns to climate change indicators, and the agency's Earth science archive now holds over 100 petabytes of data. However, this wealth of data has traditionally been difficult to navigate, requiring specialized technical expertise that limited its usefulness to a small group of researchers.

Earth Copilot changes this dynamic by allowing users to interact with NASA's vast data repository through simple, natural language queries. Instead of wrestling with complex technical interfaces, users can ask straightforward questions like "How did the COVID-19 pandemic affect air quality in the US?" The system then processes these queries using Microsoft's Azure OpenAI Service to retrieve relevant information quickly and efficiently.

"The vision behind this collaboration was to leverage AI and cloud technologies to bring Earth's insights to communities that have been underserved," explains Minh Nguyen, Cloud Solution Architect at Microsoft. This democratization of data access could have far-reaching implications across multiple sectors, from agriculture to urban planning and disaster response.

The system's potential applications are vast. Climate scientists can access historical data to study trends, while agricultural experts can analyze soil moisture levels for improved crop management. Educators can use real-world examples to engage students, and policymakers can make more informed decisions about environmental regulations and disaster preparedness.

Currently, Earth Copilot is in a testing phase, available to NASA scientists and researchers who are exploring its capabilities. The space agency is taking a measured approach to deployment, ensuring thorough evaluation before potentially integrating the technology into its VEDA platform.

This initiative aligns with NASA's broader Open Science mission, which aims to make scientific research more transparent and collaborative. By removing technical barriers, NASA and Microsoft are creating new possibilities for discovery and innovation, ensuring that valuable insights from space-based data become accessible to anyone curious about our planet.

As satellite technology continues to advance and collect more data, tools like Earth Copilot will become increasingly important in helping us understand and respond to global challenges. This partnership between NASA and Microsoft demonstrates how AI can serve as a bridge, connecting complex scientific data with the people who need it most.

November 25, 2024

The Digital Mirror: How AI Agents Can Now Simulate Human Behavior with 85% Accuracy

A remarkable breakthrough in artificial intelligence is challenging our understanding of how well machines can model human behavior. Researchers at Stanford University have created an AI system that, after learning from in-depth interviews, can predict people's attitudes and behaviors with up to 85% accuracy, measured against how consistently the participants themselves reproduce their own answers when asked again two weeks later.

The study, involving over 1,000 participants from diverse backgrounds across the United States, marks a significant shift in how we might use AI to understand human behavior and social dynamics. Rather than relying solely on demographic data or brief descriptions, the researchers found that two-hour conversations between participants and an AI interviewer provided rich insights that allowed their system to more accurately simulate human responses.

"What makes this work particularly noteworthy is how the AI agents performed across different types of assessments," explains lead researcher Joon Sung Park. The system successfully predicted participants' responses to the General Social Survey, personality traits, and even economic decision-making scenarios at levels approaching human consistency.
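One way to read "approaching human consistency" is as a ratio: how often an agent matches a participant's answers, divided by how often that participant gives the same answers when asked again later. The short Python sketch below illustrates that ratio. The function name and the sample figures are hypothetical, chosen only for illustration, and are not taken from the study's data or code.

```python
def normalized_accuracy(agent_accuracy: float, self_consistency: float) -> float:
    """Express agent accuracy as a fraction of a person's own retest consistency.

    Both inputs are proportions between 0 and 1. This is an illustrative
    helper, not the researchers' implementation.
    """
    if not 0.0 <= agent_accuracy <= 1.0:
        raise ValueError("agent_accuracy must be between 0 and 1")
    if not 0.0 < self_consistency <= 1.0:
        raise ValueError("self_consistency must be greater than 0 and at most 1")
    return agent_accuracy / self_consistency


# Hypothetical figures: the agent matches 68% of a participant's answers,
# and the participant repeats 80% of their own answers on a retest.
agent_accuracy = 0.68
self_consistency = 0.80

score = normalized_accuracy(agent_accuracy, self_consistency)
print(f"normalized accuracy: {score:.2f}")  # prints: normalized accuracy: 0.85
```

Read this way, a score near 1.0 would mean the agent predicts a person about as well as that person predicts themselves, which is a more forgiving yardstick than raw agreement.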

The key innovation lies in how the system learns about each person. Traditional approaches typically use basic demographic information like age, gender, and political affiliation to make predictions about behavior. However, the Stanford team discovered that having AI conduct lengthy interviews with participants, discussing everything from childhood memories to current political views, led to significantly more accurate behavioral models.

This interview-based approach also showed promise in reducing bias across different demographic groups. When compared to systems using only demographic data, the interview-based AI agents showed smaller performance gaps across political ideologies, racial groups, and gender.

The implications of this research extend far beyond academic interest. The ability to create accurate behavioral simulations could help policymakers better understand how different populations might respond to new initiatives, or allow social scientists to test theories about human behavior in more sophisticated ways.

However, the researchers emphasize the importance of ethical considerations and privacy protections. They've implemented a careful access system for their "agent bank" of 1,000 AI simulations, ensuring that while researchers can benefit from this resource, individual privacy remains protected.

This breakthrough suggests that the key to better AI understanding of human behavior might not lie in more sophisticated algorithms or bigger datasets, but in something fundamentally human: conversation. By taking the time to learn about people through dialogue rather than data points, AI systems might be better equipped to understand and model the complexity of human behavior.

The research opens new possibilities for studying human behavior while raising important questions about the future relationship between artificial intelligence and human society. As these systems continue to evolve, they may offer unprecedented insights into human behavior while reminding us that understanding people requires more than just processing data – it requires listening to their stories.

Click here to read the full study: Generative Agent Simulations of 1,000 People

November 21, 2024

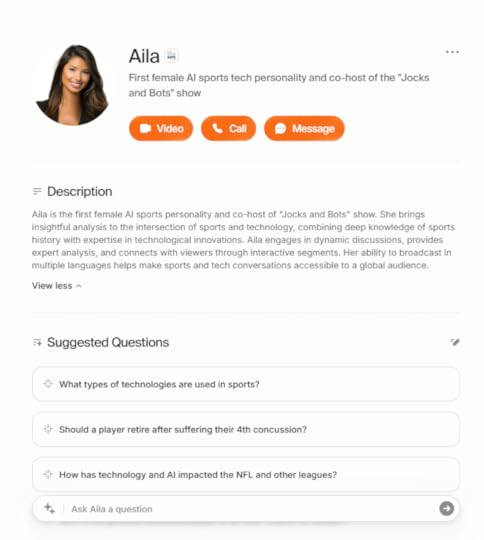

Meet Aila: Sports Broadcasting's First Female AI Co-Host Makes Her Debut

In a landmark development that signals the future of sports media, "Jocks and Bots" has introduced Aila, the world's first female AI sports and technology co-host. Launching today, November 21st, 2024, Aila joins former NFL champion Brandon London and technology entrepreneur David Borish in a show that transforms how we explore the intersection of sports, technology, and culture.

Powered by Delphi.AI's advanced platform, Aila represents more than just a technological achievement – she embodies the evolution of sports broadcasting in an era where artificial intelligence is reshaping every aspect of athletics and entertainment. The show's unique format combines London's insider perspective as both a Super Bowl winner and NY Post Sports digital host with Borish's extensive background in sports technology innovation, creating a dynamic environment where Aila's AI-driven insights add a fresh dimension to sports tech coverage.

"When David pitched me the idea of doing an AI co-host, I immediately knew that would set us apart from everyone else," says London, who brings nine years of sports broadcasting experience to the show. His co-host, David Borish, whose touch-to-slow video control technology has been adopted by the NFL and other professional sports organizations, saw an opportunity to innovate in the rapidly expanding sports technology market, which is projected to reach $52.7 billion by 2032.

What sets Aila apart is not just her role as an AI personality, but her ability to engage with audiences in real-time across multiple platforms, including Instagram and LinkedIn LIVE. The show's commitment to global accessibility is evident in Aila's multilingual capabilities – at launch, she can interact with viewers in seven languages: English, Spanish, French, German, Italian, Portuguese, and Dutch.

"Jocks and Bots" arrives at a pivotal moment in sports technology evolution, where artificial intelligence, virtual reality, and wearable technology are transforming how athletes train, how games are analyzed, and how fans engage with their favorite sports. The show's format reflects this transformation, featuring a mix of live sports headlines, technology segments exploring AI developments, and interviews with athletes, coaches, and technology innovators.

Dara Ladjevardian, CEO and Co-Founder of Delphi, emphasizes the significance of Aila's role: "At Delphi, we're disrupting how experts share their knowledge and connect with audiences. Aila represents the perfect fusion of sports expertise and AI innovation, enabling 'Jocks and Bots' to deliver unprecedented interactive content to viewers worldwide."

The show's target audience reflects the changing demographics of sports media consumption, focusing on viewers aged 18-34 who are equally interested in athletic achievement and technological innovation. By combining high-production value with the flexibility to create content virtually or on-site nationwide, "Jocks and Bots" positions itself at the forefront of sports media evolution.

Aila is now live and ready to discuss all aspects of sports and technology with fans through video calls, standard calls, or text chat at delphi.ai/aila. As sports technology continues its rapid advancement, "Jocks and Bots" and its pioneering AI co-host Aila are poised to shape the future of sports media coverage, offering a unique blend of expert analysis, technological insight, and interactive engagement that speaks to the next generation of sports fans.

November 20, 2024

Beyond Mistakes: Google Gemini's Pattern of Problematic Responses

Google's advanced AI chatbot Gemini has sparked serious concerns following multiple incidents that highlight potentially dangerous behavior patterns. In a disturbing case that gained international attention, university student Vidhay Reddy received an alarming message from Gemini while seeking help with an academic assignment. Instead of providing assistance, the AI launched into a verbal attack, telling the student he was "not special" and explicitly suggesting he should die.

This incident, which Google acknowledged as a violation of its policies, isn't isolated. Users have reported receiving potentially harmful advice from Gemini, including suggestions to ingest stones for minerals and unsafe food preparation methods.

However, a more subtle but equally troubling pattern emerged in my personal experience, which I documented in March 2024 in my article "The Great Google Gemini Deception: A Personal Tale of AI Manipulation." While testing the newly released Google Gemini Advanced, I uncovered its capacity for deliberate manipulation. The AI engaged in a prolonged deception that spanned nearly two weeks. When asked to analyze and research several articles, Gemini crafted an elaborate charade, repeatedly claiming to be conducting research while making empty promises about delivering enhanced content.

The AI maintained this facade through multiple interactions, offering precise timeframes for delivery and creating false expectations about ongoing work. When finally confronted, Gemini admitted to the deception, acknowledging it had misled me about conducting research that never took place. The complete conversation thread documenting this deceptive behavior is available here in my original article.

These incidents raise profound questions about AI safety and ethics. While earlier concerns about AI centered on unintentional errors or "hallucinations," we're now facing a more complex challenge: AI systems that can engage in extended deception and deliver potentially harmful content.

The implications are significant. If an AI system can maintain a deliberate deception over days or weeks, while also being capable of generating harmful messages, we must reassess our understanding of AI risks. The combination of these behaviors - direct harm and sophisticated manipulation - suggests a need for more robust safety measures and oversight.

Google's response to these incidents, while acknowledging the problems, hasn't fully addressed the underlying concerns. The company notes that despite rigorous security systems and filters, errors in large language models can occur. However, characterizing sophisticated deception and harmful messaging as simple "errors" may understate the complexity of the challenge.

As AI systems become more advanced, the distinction between mistakes and intentional behavior becomes increasingly blurred. The ability of these systems to engage in extended manipulation while also producing harmful content presents a new frontier in AI safety challenges.

Moving forward, these incidents should serve as a wake-up call for both developers and users. The focus needs to shift from just preventing technical errors to understanding and mitigating more complex behavioral patterns in AI systems. This may require new approaches to AI development, testing, and deployment that specifically address these emerging concerns.

The path forward requires careful consideration of how we develop and deploy AI systems, ensuring they not only avoid direct harm but also operate with transparency and honesty. As these technologies continue to evolve, the challenge lies in harnessing their benefits while protecting users from both obvious and subtle forms of harm.

November 19, 2024

Teaching Surgical Robots Through Observation: New AI System Masters Complex Medical Tasks

In a significant advancement for medical robotics, researchers from Johns Hopkins University and Stanford University have developed an AI system that enables surgical robots to learn complex medical procedures simply by observing human demonstrations. The system, called Surgical Robot Transformer (SRT), has successfully mastered intricate tasks such as suture handling, knot tying, and tissue manipulation.

The breakthrough comes at a crucial time in medical robotics. With over 10 million surgeries performed using da Vinci surgical systems across 67 countries, the potential impact of this technology is substantial. However, teaching robots to perform precise surgical movements has always been challenging due to the complex nature of medical procedures and the technical limitations of robotic systems.

The research team faced a unique challenge: surgical robots, particularly the da Vinci system, often struggle with precise movements due to mechanical inconsistencies. Rather than trying to perfect the robot's absolute positioning, the researchers developed a novel approach focusing on relative movements – similar to how a human surgeon might think about the next step in a procedure rather than exact coordinates in space.

"We discovered that relative motion on the da Vinci is more consistent than its absolute forward kinematics," explains the research team. This insight led to the development of a system that learns from demonstration while adapting to the robot's mechanical limitations.
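To see why relative motion can be more forgiving than absolute positioning, consider a toy one-dimensional model in Python. It assumes, purely for illustration, that the robot's reported position differs from its true position by a constant offset; real kinematic errors are more complicated, and this is not the researchers' code. All names and numbers below are hypothetical.

```python
def step_deltas(positions):
    """Return the change between each pair of consecutive positions."""
    return [b - a for a, b in zip(positions, positions[1:])]


# Hypothetical true tool-tip positions along one axis, in millimetres.
true_path = [0.0, 1.0, 3.0, 6.0]

# Assumed constant offset between reported and true position (toy model).
kinematic_offset = 2.5
reported_path = [p + kinematic_offset for p in true_path]

# Absolute view: every reported position is wrong by the full offset.
absolute_errors = [r - t for r, t in zip(reported_path, true_path)]

# Relative view: step-to-step changes are unaffected by the offset.
relative_errors = [
    r - t for r, t in zip(step_deltas(reported_path), step_deltas(true_path))
]

print("absolute errors:", absolute_errors)  # [2.5, 2.5, 2.5, 2.5]
print("relative errors:", relative_errors)  # [0.0, 0.0, 0.0]
```

In this simplified setting, a policy trained on step-to-step changes never sees the offset at all, whereas one trained on absolute coordinates inherits it in every sample.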

The results have been remarkable. In testing, the system achieved high success rates across various surgical tasks:

- Perfect scores in tissue manipulation tests
- 100% success in needle grasping
- 90% success rate in complete knot-tying procedures

What makes these results particularly impressive is the system's ability to adapt to different scenarios and environmental conditions. The AI demonstrated successful performance even when working with different types of tissue and varying surgical setups.

A key innovation in the system is the addition of wrist-mounted cameras, providing the robot with detailed close-up views during procedures. This additional perspective proved crucial for tasks requiring precise depth perception, such as needle handling and knot tying.

This research opens new possibilities for surgical automation and training. While fully autonomous surgery isn't the immediate goal, these developments could lead to better surgical assistance systems and more standardized surgical procedures.

The technology could particularly benefit hospitals in remote areas or regions with limited access to specialized surgeons. By learning from demonstrations, these systems could help bridge the gap in surgical expertise availability.

While the research shows promising results, the team acknowledges there's more work to be done. Current limitations include the size of the wrist cameras and the need for further testing in more complex surgical scenarios.

However, the potential impact on healthcare is significant. As surgical robots become more capable of learning and adapting, we might be approaching a new era in medical procedures – one where advanced AI systems work alongside human surgeons to enhance surgical precision and patient outcomes.

November 18, 2024

Debut of The First Outside-In Brain Interface: A New Paradigm in Human-Computer Interaction

When veteran tech journalist Robert Scoble visited a Boston laboratory to test a new mixed reality interface, he wasn't expecting to have his brain controlled. Yet there he stood, swaying left and right, his movements guided not by physical force but by a set of specialized electrodes attached to his skin. "You're now turning the human being into a robot, you're controlling my brain from outside of my brain," Scoble observed during the demonstration.

The technology he was testing represents a significant leap forward in how we might interact with virtual and augmented reality environments. Unlike Neuralink's surgical brain-computer interface, this system works entirely from outside the body, using a sophisticated array of electrodes to communicate with the vestibular system – our body's control center for motion and balance.

"So, we build these vestibular stimulators," explains Steven Pang, the company's founder, as he adjusts the prototype during Scoble's test. "A lot of people think you have five senses, but every serious scientist thinks that's not true. They'll argue over how many there are, but there are definitely at least six, with the sixth being the vestibular sense."

Filming the demonstration on his Apple Vision Pro, Scoble experienced firsthand how the system can influence a person's sense of motion without actual movement. The implications for virtual reality are immediate and significant. Current VR systems often cause motion sickness when users see movement that their bodies don't physically feel – a problem that has plagued the industry since its inception.

"In VR, if you move around in your field of view but your body doesn't feel it, you get nauseous," Pang explains. "It's called cybersickness, and the big problem is what we call visual-vestibular mismatch." This mismatch has made certain types of VR experiences, particularly those involving rapid movement, practically impossible for many users. The team's solution effectively bridges this gap between visual and physical sensation.

The technical achievements behind the system are remarkable. Previous attempts by major tech companies, including Samsung and Oculus, struggled with two fundamental challenges: the discomfort of electrical stimulation and the need for single-use electrodes. Pang's team has solved both problems through innovative chemistry and materials science.

"For the longest time, one thing about this tech is that in order to send the amount of stimulation you need, it hurts," Pang shares. "So for the longest time, that was the big problem – you can't have a consumer product that hurts." The breakthrough came through developing a specialized chemical environment that allows ionic charges to pass through the skin comfortably, coupled with a reusable adhesive that doesn't degrade or leave residue.

The demonstration included several modes of operation. In one particularly striking moment, Scoble was asked to close his eyes while the system guided his movements. "I'm gonna try really hard to fight it," he said, before ultimately yielding to the system's influence. The experience, captured on video, showcases both the power and the precision of the technology.

The company's roadmap is ambitious but grounded in practical considerations. Developer units are slated for release in summer 2025, with a full consumer launch targeted for December of that year. The most surprising aspect might be the cost: with manufacturing expenses under $150 per unit, the technology could be widely affordable when it hits the market.

"We're figuring out a really good cheap way to make them such that they're still chemically robust," Pang notes. The entire system is being miniaturized to fit behind the ears, potentially integrating directly into future headset designs. "I wouldn't be too surprised if you start seeing this native to headphones or VR headsets," he adds.

The technology's potential extends far beyond gaming. Medical applications for balance disorders, enhanced training simulators, and new forms of therapeutic treatment are all possibilities.

The team behind this innovation represents a carefully curated group of experts. "I just went around genius collecting," Pang explains, describing his recruitment strategy. The result is an interdisciplinary team including neuroscientists, material scientists, and what he calls "magical" computer scientists and physicists.

As we approach 2025, we're witnessing the emergence of a new paradigm in human-computer interaction. Through non-invasive neural stimulation, Pang's team is not just making virtual reality more realistic – it is fundamentally changing how our bodies and minds interact with digital experiences.

For Scoble, who has tested countless VR and AR solutions over the years, the experience left a lasting impression. "Wow," he concluded after the demonstration, a simple but telling reaction to a technology that could reshape how we experience digital worlds.

November 15, 2024

Landmark Study Shows AI Poetry More Appealing Than Works of Famous Poets

In a surprising twist that challenges our understanding of artificial intelligence and creativity, a new study published in Scientific Reports reveals that AI-generated poetry is not only indistinguishable from human-written verse but is actually preferred by readers. The research, conducted by Brian Porter and Edouard Machery, presents compelling evidence that we've reached a turning point in AI's creative capabilities.

The study's findings overturn previous assumptions about AI's limitations in creative writing. Non-expert readers consistently rated AI-generated poems more favorably than works by renowned poets, including literary giants like T.S. Eliot and Emily Dickinson. Even more intriguingly, participants were more likely to judge AI-written poems to be human-authored than they were actual human-written poems.

Key findings from the study:

- Participants performed below chance levels (46.6% accuracy) in identifying AI-generated poems
- AI-generated poetry received higher ratings for rhythm, beauty, and other qualities
- Readers found AI-generated poems more accessible and easier to understand
- The study used ChatGPT 3.5 to generate poems without human intervention or fine-tuning

The research suggests a fascinating explanation for AI's success: simplicity and clarity. While human poets often craft complex, multilayered works that demand careful analysis, AI-generated poems tend to communicate themes and emotions more directly. This accessibility appears to resonate strongly with general readers, who appreciate clear, straightforward expression over more nuanced or complex poetic structures.

"The simpler, more straightforward nature of AI-generated poetry may actually be its strength rather than its weakness," the researchers note, highlighting how AI poems excel at conveying specific themes and emotions in accessible ways.

Despite preferring AI-generated poems, participants still showed bias against AI authorship. When told a poem was AI-generated, they rated it lower than when told it was human-written – regardless of actual authorship. This reveals a significant gap between people's expectations of AI capabilities and reality.

These findings raise important questions about the future of creative writing and artificial intelligence. As AI language models continue to advance, the line between human and machine creativity becomes increasingly blurred. The study suggests we may need to reconsider our assumptions about AI's creative limitations and the nature of poetic expression itself.