David Borish's Blog, page 6

January 10, 2025

AI Takes Center Stage: 5 New Smart Technologies From CES 2025

As the doors opened at CES 2025, one thing became immediately clear: artificial intelligence isn't just a buzzword anymore—it's the driving force behind the most innovative consumer technology we've seen to date. From household robots to neural wristbands, this year's show floor showcased how AI is transforming everyday experiences into extraordinary possibilities.

Switchbot's AI-Powered Household Marvel: Leading the charge in home automation, Switchbot unveiled a revolutionary household robot that redefines smart living. This AI-powered assistant goes far beyond basic cleaning tasks, incorporating advanced machine learning to map and organize living spaces, monitor pets, and even serve as a mobile air purifier. What sets this robot apart is its remarkable dexterity—capable of carrying small plates and learning from daily interactions to optimize its performance. For busy professionals seeking a truly automated home experience, Switchbot's creation represents a significant leap forward in domestic robotics.

Mudra Link's Neural Wristband, The Future of Control: In a stunning display of human-computer interaction, Mudra Link introduced a neural wristband that transforms subtle arm movements into digital commands. Using sophisticated electromyography technology and AI interpretation, this wearable device effectively turns your hand into a virtual mouse, keyboard, or controller. The implications are vast, from revolutionizing presentation dynamics to enabling touchless smart home control, all through intuitive gestures.

BMW's AI-Enhanced Panoramic iDrive: The automotive sector showcased its commitment to AI integration with BMW's panoramic iDrive system. This innovative technology creates an augmented reality experience by projecting crucial driving information onto the windshield using AI to filter and present only the most relevant data. The result is a safer, more intuitive driving experience that keeps drivers' eyes where they need to be—on the road.

Honda's Zero Saloon EV, The Intelligent Driving Companion: Honda's Zero Saloon EV demonstrates how AI can transform the driving experience. This electric vehicle goes beyond basic transportation, functioning as an intelligent companion that learns from your daily commute to optimize battery efficiency and route selection. The integration of advanced driver assistance systems with AI creates a vehicle that truly understands and adapts to its driver's needs.

Panasonic's AI Wellness Coach: In the health and wellness category, Panasonic impressed with its AI-driven wellness coach. This innovative system provides personalized guidance for healthier living, demonstrating how artificial intelligence can be harnessed to improve not just our technology interactions, but our overall well-being.

Looking Ahead: CES 2025 has made it abundantly clear that AI is no longer a future prospect; it's actively reshaping our relationship with technology. From the way we manage our homes to how we interact with our vehicles, artificial intelligence is creating more intuitive, efficient, and personalized experiences. As these technologies continue to evolve, they promise to make our lives not just easier, but fundamentally more connected and intelligent.

The products showcased at CES 2025 represent more than just technological advancement; they demonstrate a shift toward AI-enhanced living that feels both natural and transformative. As we move forward, it's clear that artificial intelligence will continue to be the driving force behind the most innovative consumer technology, creating a future that's more capable, responsive, and attuned to human needs than ever before.

January 9, 2025

NVIDIA Rewrites the Rules of AI Computing: From Supercomputers to Your PC

NVIDIA has unveiled a transformative lineup of products and technologies that promise to democratize AI computing, bringing previously datacenter-exclusive capabilities into homes and small businesses. The announcements represent a fundamental shift in how AI can be developed, trained, and deployed at every scale.

At the heart of this AI revolution is the new RTX 50 Series, powered by the Blackwell architecture. The series makes a dramatic leap in AI processing capabilities, with even the RTX 5070 matching the previous flagship RTX 4090's performance at $549. This democratization of AI computing power means that tasks that previously required expensive cloud computing or enterprise-grade hardware can now be performed on consumer-grade GPUs.

The significance of this performance leap becomes clear when considering AI model deployment and training. With the new architecture's capabilities, users could potentially run significant language models locally on their PCs. Through Windows WSL2 integration, NVIDIA is creating a pathway for users to run various AI models directly on their computers, from language and vision models to speech processing and digital human animations.

NVIDIA's commitment to making Windows a "world-class AI PC" platform marks a significant shift in personal computing. The company's blueprints, which will be available on ai.nvidia.com, will enable users to run AI models that fit within their system's capabilities. This means that developers and enthusiasts could potentially fine-tune smaller language models, run Stable Diffusion models locally, or develop custom AI applications without relying on cloud services.
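To make "fit within their system's capabilities" a little more concrete, here is a back-of-the-envelope sketch of the usual arithmetic: weight memory is roughly the parameter count times the bytes per parameter. The 20% overhead allowance, the model sizes, and the 12 GiB memory figure are illustrative assumptions of mine, not numbers from NVIDIA's announcement, and real usage also depends on context length and runtime.

```python
def estimated_weight_gib(num_params_billion: float, bits_per_param: int) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    total_bytes = num_params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 2**30


def fits_in_memory(num_params_billion: float, bits_per_param: int,
                   memory_gib: float, overhead_fraction: float = 0.2) -> bool:
    """True if the weights plus a rough overhead allowance fit in memory_gib.

    overhead_fraction is an assumed cushion for activations and caches,
    not a measured value.
    """
    needed = estimated_weight_gib(num_params_billion, bits_per_param)
    return needed * (1 + overhead_fraction) <= memory_gib


if __name__ == "__main__":
    # Hypothetical model sizes and precisions, checked against 12 GiB.
    for params, bits in [(7, 16), (7, 4), (70, 4)]:
        size = estimated_weight_gib(params, bits)
        verdict = "fits" if fits_in_memory(params, bits, 12) else "does not fit"
        print(f"{params}B parameters at {bits}-bit: ~{size:.1f} GiB, {verdict}")
```

The takeaway is that quantization matters as much as raw hardware: under these assumptions, the same 7-billion-parameter model that overflows 12 GiB at 16-bit precision fits comfortably at 4-bit.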

The introduction of Project Digits, NVIDIA's compact AI supercomputer based on the GB10 chip, further revolutionizes personal AI computing. This system, expected to launch around May, brings datacenter-class AI capabilities to a dramatically smaller form factor. It can function as a personal workstation or be accessed like a cloud supercomputer, potentially enabling users to train and run significantly larger AI models than previously possible in a personal setting.

For enterprise applications, NVIDIA's NeMo platform represents a leap forward in practical AI deployment. This digital employee platform could transform how businesses integrate AI into their operations, enabling the creation of sophisticated AI agents that can work alongside human employees. These agents can handle complex tasks, from data analysis to customer service, representing a new paradigm in human-AI collaboration.

The GB200 NVLink system's incredible 1.2 petabytes per second of memory bandwidth opens new possibilities for AI model training and inference. This level of performance could enable the training of increasingly sophisticated models, pushing the boundaries of what's possible in natural language processing, computer vision, and multimodal AI applications.

In the physical AI realm, NVIDIA's Cosmos platform demonstrates the company's commitment to bridging the gap between digital and physical worlds. This technology is particularly crucial for developing and training AI models for robotics and autonomous systems, as it enables the generation of physics-accurate synthetic data for training. The platform's ability to generate virtual world states from text, image, or video prompts could accelerate the development of AI systems that can better understand and interact with the physical world.

The Thor processor for autonomous vehicles showcases how specialized AI hardware can process massive amounts of sensor data in real-time, using transformer architectures to make split-second decisions. This technology could accelerate the development of more capable autonomous systems across various industries, from transportation to manufacturing.

These announcements collectively signal a future where AI development and deployment become increasingly accessible to individuals and smaller organizations. The ability to run sophisticated AI models locally, combined with powerful tools for model development and training, could spark a new wave of AI innovation. Developers could experiment with and deploy AI solutions without the recurring costs of cloud services, while businesses could maintain more control over their AI operations and data.

The democratization of AI computing power through these new technologies could lead to a proliferation of specialized AI applications developed by smaller teams and individuals, potentially accelerating the pace of AI innovation and its integration into everyday life. As these tools become more accessible, we might see the emergence of entirely new categories of AI applications that were previously impractical due to computational or cost constraints.

NVIDIA's vision suggests a future where AI computing becomes as ubiquitous as personal computing, with individuals and organizations having the capability to develop, train, and deploy sophisticated AI solutions locally. This shift could fundamentally change how we interact with technology and accelerate the development of more personalized and capable AI systems.

January 8, 2025

Near the Singularity: OpenAI's Journey from ChatGPT to Superintelligence

In a significant development for artificial intelligence, OpenAI's CEO Sam Altman has made a series of remarkable announcements that signal a new chapter in the company's mission. Through a combination of cryptic tweets and detailed blog posts, Altman has revealed that OpenAI is not only confident in their path to Artificial General Intelligence (AGI) but is already setting their sights on something far more ambitious: Artificial Superintelligence (ASI). This shift in focus comes at the two-year anniversary of ChatGPT's launch, a milestone that marked the beginning of what Altman describes as "a growth curve like nothing we have ever seen in our company, our industry, and the world broadly."

In his recent reflections, Altman made a striking declaration: "We are now confident we know how to build AGI as we have traditionally understood it." This comes just two days after I boldly predicted we will see AGI this year in my article "AI in 2025: My Top 10 Predictions For the New Year." This statement marks a significant shift from OpenAI's previous positions, suggesting that the technological roadmap to AGI has become much clearer than previously anticipated. The confidence stems from recent breakthroughs in what Altman calls "thinking models," which use substantial computational resources at inference time to process and reason through problems rather than simply generating quick responses.

The evolution of these models, particularly evident in OpenAI's o1 and o3 iterations, has demonstrated increasingly sophisticated capabilities in complex reasoning, mathematical problem-solving, and scientific analysis. These advancements have shown that AI systems can not only process information but engage in the kind of deep analytical thinking that was once considered uniquely human. The breakthrough isn't just in the models' raw capabilities, but in their ability to combine different types of reasoning in ways that more closely mirror human cognitive processes.

OpenAI's prediction that "in 2025 we may see the first agents join the workforce and materially change the output of companies" represents a concrete timeline for the integration of advanced AI systems into everyday business operations. This isn't just about automation of routine tasks; these AI agents are expected to handle complex, knowledge-based work that traditionally required human expertise.

The implications of this prediction are far-reaching. These AI agents are expected to be capable of understanding context, managing complex projects, and making nuanced decisions within their domains of expertise. The transformation is likely to begin in specialized areas where the value proposition is clearest – perhaps in software development, data analysis, or research – before expanding to broader applications. This gradual integration could fundamentally reshape organizational structures, workflow processes, and the very nature of human-AI collaboration in the workplace.

OpenAI's pivot toward superintelligence represents a quantum leap in ambition. As Altman states, "We are beginning to turn our aim beyond that to superintelligence in the true sense of the word." This focus on superintelligence isn't just about creating more powerful AI systems; it's about developing intelligence that surpasses human capabilities across virtually every domain.

The vision for superintelligence includes systems that could "massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own." This capability could lead to breakthroughs in fields like medicine, materials science, and space exploration at a pace that would be impossible with human researchers alone. The potential for such systems to self-improve and accelerate their own development creates the possibility of an "intelligence explosion," where technological progress becomes self-sustaining and exponential.

Altman's cryptic tweet, "Near the singularity unclear which side," has sparked intense discussion about the technological singularity – the hypothetical point where technological growth becomes uncontrollable and irreversible. This reference to the singularity gains additional weight when considered alongside Ray Kurzweil's prediction of a 2045 singularity date, which now seems potentially conservative given recent developments. You can read more about it in my article "Exponential Progress: Ray Kurzweil's Insights on AI and his Law of Accelerating Returns."

The concept of the singularity raises profound questions about the nature of intelligence and consciousness. As Altman explained in a follow-up tweet, the line is meant to be read in one of two ways: as a reference to the simulation hypothesis (the idea that we're already living in a simulated reality) or to the impossibility of knowing when the critical moment of technological takeoff actually happens. Both interpretations suggest that humanity is approaching a fundamental transformation in its relationship with technology.

The rapid advancement toward superintelligence brings unprecedented opportunities and significant challenges. As noted by OpenAI's head of alignment, these developments will impact "every single facet of the human experience," from domestic politics to international relations, from market efficiency to social structures, and from healthcare to human enhancement.

The challenges extend beyond technical considerations to fundamental questions about human society and identity. How will we maintain meaningful work and purpose in a world where AI can perform most tasks better than humans? How will we ensure that the benefits of superintelligence are distributed equitably? Most critically, how do we maintain control and alignment of systems that may soon exceed our ability to understand them?

While some might view these predictions as overly optimistic or concerning, Altman acknowledges that it "sounds like science fiction right now and somewhat crazy to even talk about." However, he remains confident that "in the next few years everyone will see what we see," emphasizing the importance of balancing progress with careful consideration of its implications.

OpenAI's approach appears to favor what they call a "slow takeoff" scenario, where the transition to superintelligent systems happens gradually enough to allow for proper safety measures and societal adaptation. This strategy aligns with their stated goal of ensuring that advanced AI systems remain beneficial and aligned with human values.

As we stand on the brink of what could be one of the most transformative periods in human history, OpenAI's announcements suggest that the future of artificial intelligence may arrive sooner than many anticipated. The challenge now lies in ensuring that this rapid advancement toward superintelligence proceeds in a way that maximizes benefits while minimizing potential risks.

The next few years, particularly 2025, may prove to be pivotal in determining how this transformation unfolds. As we approach these milestones, the focus must remain on both the tremendous potential and the careful consideration required to navigate this unprecedented technological frontier. The question is no longer whether we will achieve superintelligence, but how we will handle its arrival and ensure it benefits humanity as a whole.

January 6, 2025

The Future of Mining: How AI is Transforming an Age-Old Industry

The mining industry, traditionally known for its conventional approaches, is experiencing a dramatic transformation through artificial intelligence. From autonomous vehicles navigating vast quarries to AI-powered exploration techniques identifying mineral deposits, the sector is rapidly evolving into a technology-driven powerhouse. Recent developments, including a major funding round for AI-mining startup KoBold Metals, underscore the industry's commitment to innovation.

Mining giants like Rio Tinto have been at the forefront of autonomous operations for over a decade. Their fleet of self-driving haul trucks, capable of carrying 350 tonnes, has already demonstrated a 13% reduction in fuel consumption while improving safety standards. The company's operations generate an astounding 2.4 terabytes of data per minute from mobile equipment and sensors, feeding into their integrated processing and logistics system.

AI-powered exploration has emerged as a major force in mineral discovery. Companies like KoBold Metals, backed by prominent investors including Bill Gates and Jeff Bezos, are revolutionizing how we locate crucial minerals. Their recent $537 million Series C funding round, valuing the company at $2.96 billion, demonstrates the industry's confidence in AI-driven exploration methods. KoBold's approach combines geosciences expertise with machine learning to accelerate the discovery of critical minerals essential for the energy transition.

The integration of IoT sensors and AI has transformed equipment maintenance from reactive to predictive. Real-time monitoring of temperature, speed, and vibration allows mining companies to anticipate and prevent equipment failures before they occur. This predictive maintenance approach not only enhances safety but also optimizes operational efficiency and reduces downtime.
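To give a feel for the basic idea behind predictive maintenance, here is a minimal sketch that flags sensor readings which deviate sharply from their recent history. It uses a simple rolling z-score, which is my stand-in for illustration and not a description of any mining company's actual system; the window size, threshold, and vibration values are all made up.

```python
from collections import deque
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings far from the mean of the preceding window.

    A reading is flagged when it differs from the rolling mean by more than
    `threshold` standard deviations of the previous `window` readings.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip perfectly flat histories to avoid dividing the signal by zero spread.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(i)
        history.append(value)
    return flagged


if __name__ == "__main__":
    # Hypothetical vibration amplitudes with one sudden spike.
    vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 5.0, 1.0]
    print(find_anomalies(vibration))
```

Production systems typically go much further, combining many sensor channels and learned failure models, but the principle is the same: learn what normal looks like and raise a flag before a deviation turns into a breakdown.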

The concept of digital twinning, originally developed by NASA, is being adapted for mining operations. Companies are creating virtual replicas of their entire operations, enabling them to test scenarios and optimize processes in a risk-free environment. Rio Tinto's intelligent mine initiative, which was slated to deliver its first ore by 2021, incorporates over 100 innovations, with digital twinning at its core.

AI systems are becoming increasingly sophisticated in environmental impact assessment and management. Future applications will likely include more advanced real-time monitoring of air and water quality, automated pollution detection, and improved compliance with environmental regulations.

The industry is witnessing a shift in workforce requirements, with increasing demand for data scientists and AI specialists. Mining companies are investing in reskilling programs to prepare their workforce for this technological transition. For instance, Rio Tinto has partnered with the Australian government to develop training programs in analytics, IT, and robotics.

The mining sector employs a relatively modest workforce, about 670,000 people in American quarrying, mining, and extraction, yet it has an outsized impact on the global economy because it provides raw materials for virtually every other industry. The integration of AI is not just improving operational efficiency but is fundamentally changing how mining companies approach resource extraction.

The future of mining appears increasingly automated, data-driven, and sustainable. KoBold Metals' success in raising significant funding and their planned IPO within the next three to five years suggest growing investor confidence in AI-driven mining approaches. Their Mingomba copper mine project in Zambia, expected to produce 300,000 tonnes of copper annually from the 2030s, represents the scale of impact AI can have on mineral exploration and production.

As the industry continues to evolve, the integration of AI technologies will likely expand beyond current applications, potentially leading to fully automated mining operations that optimize resource extraction while minimizing environmental impact. This technological disruption in mining is not just about improving efficiency; it's about creating a more sustainable and responsible approach to resource extraction for future generations.

January 3, 2025

Breaking the Token Barrier: Meta's Large Concept Models Usher in New Era of AI

Meta has introduced an innovative and transformative approach to artificial intelligence that could fundamentally change how machines process and understand language. Their new Large Concept Model (LCM) architecture represents a significant shift from current Large Language Models (LLMs), offering a more human-like approach to processing information.

Unlike traditional LLMs that process text at the token level, Meta's LCMs operate at a higher level of abstraction called "concepts." These models work in a sentence representation space, utilizing SONAR embeddings that can handle up to 200 languages in both text and speech modalities. This approach mirrors how humans process information - not just word by word, but through broader conceptual understanding.

The key difference lies in the processing method. While current LLMs like GPT-4 or Llama analyze text token by token, LCMs process information at the sentence level, creating language-agnostic representations that capture meaning rather than just vocabulary. This enables more natural handling of complex ideas and better cross-lingual understanding.

LCMs employ a sophisticated architecture that includes:

A concept encoder that transforms input into language-agnostic representations

A Large Concept Model that processes these representations

A concept decoder that generates output in any supported language
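The three-stage pipeline above can be sketched in a few lines of toy code. To be clear, this is not Meta's implementation and does not use SONAR: the hashed bag-of-words "encoder", the averaging "concept model", and the nearest-candidate "decoder" are all deliberately crude stand-ins I've made up to show the shape of the data flow, where whole sentences rather than tokens are the unit of processing.

```python
import math
import re

DIM = 16  # toy embedding size, chosen arbitrarily for illustration


def split_sentences(text):
    """Split text into sentences; each sentence is treated as one 'concept'."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def encode_concept(sentence):
    """Toy stand-in for a sentence encoder: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for word in re.findall(r"\w+", sentence.lower()):
        vec[sum(ord(c) for c in word) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def predict_next_concept(concepts):
    """Toy stand-in for the concept model: average the context vectors."""
    n = len(concepts)
    return [sum(c[i] for c in concepts) / n for i in range(DIM)]


def decode_concept(vector, candidates):
    """Toy stand-in for a decoder: return the candidate sentence closest to vector."""
    def similarity(sentence):
        return sum(a * b for a, b in zip(vector, encode_concept(sentence)))
    return max(candidates, key=similarity)


if __name__ == "__main__":
    context = split_sentences("The cat sat. The cat slept.")
    predicted = predict_next_concept([encode_concept(s) for s in context])
    print(decode_concept(predicted, ["The cat sat.", "Quantum flux zebra."]))
```

In a real LCM each stage would be a trained neural network, and the decoder would generate new text in any supported language rather than choosing from a fixed list. The point of the sketch is only that the model in the middle never sees individual tokens.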

One of the most impressive aspects is the model's ability to handle multiple languages and modalities without additional training. The system can process input in one language and generate output in another, maintaining semantic consistency throughout.

The impact of Large Concept Models on AI development could be far-reaching and profound. By processing information at a conceptual level, LCMs have the potential to better capture the nuances and context of human communication, leading to more natural language understanding. The language-agnostic nature of concepts enables improved handling of multiple languages without requiring extensive language-specific training, which could transform how AI systems handle multilingual tasks. Processing at the sentence level rather than token level introduces enhanced efficiency, particularly in handling long-form content. Perhaps most significantly, the concept-based approach might enable more sophisticated reasoning capabilities that more closely mirror human thought processes, as the system works with complete ideas rather than individual tokens. These combined improvements suggest a significant evolution in how AI systems could process and understand information, potentially bridging the gap between machine processing and human cognition.

While promising, LCMs are still in early stages. The current implementation faces challenges in handling very long sentences and maintaining consistent quality across all supported languages. However, these limitations appear more technical than fundamental, suggesting room for improvement with continued development.

Meta's LCM architecture represents a significant evolution in AI development. By moving away from token-based processing toward concept-based understanding, it opens new possibilities for more sophisticated AI applications. This could lead to:

More nuanced language understanding

Better cross-cultural communication systems

More efficient and accurate translation services

Improved reasoning capabilities in AI systems

The potential implications for the AI landscape are profound. This shift from token-based to concept-based processing could mark the beginning of a new era in artificial intelligence, one where machines process information in ways more similar to human cognition.

Meta's Large Concept Models represent more than just an incremental improvement in AI technology - they suggest a fundamentally different approach to machine understanding. As this technology matures, it could bridge the gap between current AI capabilities and more human-like processing of information, potentially leading to more sophisticated and capable AI systems.

The success of LCMs could mark a pivotal moment in AI development, showing that moving beyond token-based processing is not just possible but potentially crucial for the next generation of AI systems. As research continues and the technology evolves, we may look back on this as a key stepping stone toward more advanced artificial intelligence.

This innovative approach by Meta demonstrates that there are still fundamental breakthroughs to be made in AI architecture, and that the field continues to evolve in exciting and unexpected ways.

December 31, 2024

AI in 2025: My Top 10 Predictions For the New Year

The pace of AI advancement in 2024 has been nothing short of revolutionary. As an AI Strategist deeply embedded in the enterprise landscape, I've had a front-row seat to what can only be described as the most transformative year in artificial intelligence history. When I began writing my books "AI 2024 Trends, Technologies and Transformations" Volumes 1 & 2, I never anticipated how quickly the predictions would be surpassed by reality. The trajectory has been staggering. As we stand on the threshold of 2025, the developments I see on the horizon make even this remarkable year look like just the beginning.

The breakthrough we witnessed in September 2024 with OpenAI's new reasoning models was just the beginning. These models demonstrated unprecedented performance in complex problem-solving, achieving an 83% success rate on a qualifying exam for the International Mathematics Olympiad, a dramatic leap from GPT-4o's 13%. This advancement signals a fundamental shift in AI's capabilities, moving from pattern recognition to genuine reasoning ability.

What makes this particularly exciting is the implications for scientific research and innovation. When AI can approach problems with human-like reasoning capabilities, we're not just looking at faster computation – we're looking at new ways of thinking about problems that might have eluded human researchers.

The Agile Manifesto of 2001 revolutionized software development, but what we're seeing now demands an even more dramatic transformation. While 2024 saw AI primarily as a productivity enhancer for individual team members, 2025 will require a complete reimagining of the development process itself.

I predict we'll see the emergence of what I call "AI-Native Agile" – development methodologies that treat AI not as a tool but as a core team member. This will involve new ceremonies, different sprint structures, and novel approaches to product ownership that account for AI's unique capabilities and limitations.

The parallels between AI's evolution and the internet's early days are striking and instructive. Just as we've moved from requiring HTML knowledge to create websites to having intuitive drag-and-drop interfaces, AI is undergoing a similar transformation. Apple's integration of AI into their ecosystem in 2024 was a watershed moment, but 2025 will take this further.

I expect to see AI capabilities becoming as natural and invisible as internet connectivity is today. The technology will fade into the background while its benefits become more pronounced and accessible to everyone, regardless of technical expertise.

The evolution from monolithic systems to microservices was significant, but the shift to autonomous agents will be revolutionary. These agents represent a fundamental change in how we think about software architecture. Unlike traditional services, agents can operate with greater autonomy and adaptability, creating systems that are more resilient and intelligent.

What excites me most about this transition is the potential for emergent behaviors when multiple agents interact. We're moving from predictable, programmed responses to systems that can genuinely learn and adapt to novel situations.

The real magic happens when agents begin collaborating. In 2025, I predict we'll see sophisticated multi-agent systems that can handle complex tasks through coordinated effort. The key challenges will be in developing robust governance frameworks and ensuring ethical operations.

Companies will need to implement sophisticated monitoring systems and establish clear boundaries for agent interactions. This will likely lead to the emergence of new roles focused on agent oversight and coordination.

The fragmented landscape of specialized AI models is giving way to unified systems that can seamlessly handle text, speech, images, and video. This convergence is not just about convenience – it represents a fundamental shift toward AI systems that can understand and interact with the world more like humans do.

The implications for business are profound. Instead of maintaining multiple specialized systems, companies will be able to deploy single models that can handle any type of input or output, dramatically simplifying their AI infrastructure.

While some might consider it bold, I'm predicting that 2025 will be the year when AI effectively passes any human test. The advancement in reasoning models we've seen in 2024 has convinced me that we're on the cusp of this breakthrough. The implications will be profound, though I expect the transition to be more gradual than many anticipate.

The progress in AI video generation has been nothing short of remarkable. By 2025, I expect to see the creation of 4K videos up to a minute long, with consistent character portrayal throughout. This will revolutionize content creation, leading to new career paths for AI creative professionals who can harness these tools effectively.

The uncanny valley in AI voice technology will be bridged in 2025. The implications for customer service, entertainment, and communication are enormous. This will require careful consideration of ethical guidelines and transparency requirements to ensure people know when they're interacting with AI.

This month Meta released a paper on perhaps the most exciting development of the year: Large Concept Models (LCMs) as the successor to Large Language Models. Operating at a higher level of abstraction, these models work with language- and modality-agnostic concepts rather than tokens. This represents a fundamental shift in how AI processes and generates information, bringing us closer to human-like understanding and creativity.

The real breakthrough lies in their ability to work across languages and modalities without explicit translation, potentially revolutionizing global communication and knowledge sharing.

As we move into 2025, these predictions paint a picture of AI becoming more capable, more accessible, and more deeply integrated into our daily lives. The challenges will be significant, but so too are the opportunities for those ready to embrace this new era of technology.

The key to success will be maintaining a balance between innovation and responsibility, ensuring that as AI becomes more powerful, it remains aligned with human values and interests. As someone deeply involved in guiding organizations through this transformation, I'm excited to see how these predictions unfold and how they'll shape our collective future.

December 30, 2024

Bridging Worlds: How AI Sign Language Recognition Could Connect 466 Million People

The World Health Organization estimates that over 466 million people worldwide have disabling hearing loss, a number projected to grow to nearly 900 million by 2050. For many of these individuals, sign language is their primary means of communication. Now, breakthrough developments in artificial intelligence are promising to transform how deaf and hearing communities interact.

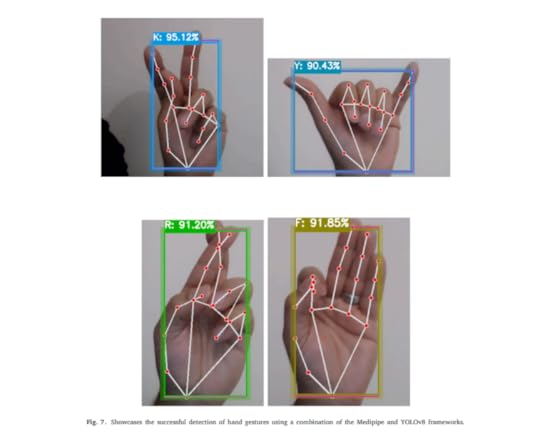

Recent research from Florida Atlantic University has demonstrated remarkable progress in real-time American Sign Language (ASL) recognition using AI. Their system, which combines YOLOv8 object detection with MediaPipe hand tracking technology, achieved an impressive 98% accuracy rate in recognizing ASL alphabet gestures. This level of precision marks a significant step forward in making sign language translation more accessible and reliable.
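To make the two-stage idea more concrete, here is a minimal Python sketch of what could happen once hand tracking has produced landmarks. It is not the Florida Atlantic University system. It assumes a tracker such as MediaPipe has already returned 21 (x, y) hand landmarks for a frame, and it swaps the YOLOv8 detector for a simple nearest-template classifier so the example runs with the standard library alone. The function names and the toy hand shapes are hypothetical.

```python
import math

NUM_LANDMARKS = 21  # MediaPipe's hand model reports 21 landmarks per hand


def normalize(landmarks):
    """Move the wrist (landmark 0) to the origin and scale the hand to unit size."""
    wx, wy = landmarks[0]
    shifted = [(x - wx, y - wy) for x, y in landmarks]
    scale = max(math.hypot(x, y) for x, y in shifted) or 1.0
    return [(x / scale, y / scale) for x, y in shifted]


def distance(a, b):
    """Euclidean distance between two landmark sets of equal length."""
    return math.sqrt(sum((ax - bx) ** 2 + (ay - by) ** 2
                         for (ax, ay), (bx, by) in zip(a, b)))


def classify(landmarks, templates):
    """Return the label of the closest template (a stand-in for a trained model)."""
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError("expected 21 hand landmarks")
    normalized = normalize(landmarks)
    return min(templates, key=lambda label: distance(normalized, templates[label]))


def toy_hand(spread):
    """Hypothetical synthetic hand: a wrist plus 20 points on a grid."""
    return [(0.0, 0.0)] + [(spread * (i % 4), 1.0 + i // 4) for i in range(20)]


# Two made-up letter shapes, stored in normalized form.
templates = {"A": normalize(toy_hand(0.1)), "B": normalize(toy_hand(0.9))}

# The same "B" hand, but larger and elsewhere in the frame.
moved = [(2 * x + 3, 2 * y - 1) for x, y in toy_hand(0.9)]
print(classify(moved, templates))
```

Because normalization removes position and size, the shifted and enlarged hand still matches its own template. A real system would replace the template lookup with a trained detector and would need to handle motion, occlusion, and lighting.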

The implications for everyday communication are profound. When integrated with augmented reality devices like the Ray-Ban Meta Smart Glasses, this technology could enable real-time sign language translation in natural social settings. Imagine a deaf person signing while their smart glasses instantly translate their gestures into spoken words through the glasses' speakers. Simultaneously, the glasses could display text translations of spoken responses in the wearer's field of vision.

The Ray-Ban Meta Smart Glasses' existing features make them particularly well-suited for this application. Their 12MP camera could capture hand movements with high precision, while their open-ear audio system could deliver translations without blocking environmental sounds – crucial for those with partial hearing. The glasses' AI capabilities and connectivity to smartphones provide the necessary processing power for real-time translation.

Educational institutions and workplaces could particularly benefit from this technology. By integrating AI sign language recognition into smart glasses or other AR devices, deaf students could participate more fully in mainstream classrooms, while deaf professionals could more easily navigate meetings and presentations.

The development of this technology also highlights a broader trend in AI applications: focusing on inclusive innovation that addresses real human needs. As AI continues to evolve, its potential to break down communication barriers and create more inclusive societies becomes increasingly apparent.

For the deaf community, these technological advances represent more than just convenience – they represent independence and expanded opportunities for social and professional engagement. As AI sign language recognition systems become more sophisticated and integrated into wearable devices, we may be approaching a future where language barriers between deaf and hearing communities become increasingly fluid.

Looking ahead, researchers are already working on expanding these systems to include full sentence recognition and multiple sign languages. Combined with advances in AR technology, we might soon see smart glasses that can seamlessly translate between spoken and signed languages in real-time, fundamentally transforming how deaf and hearing people interact.

The convergence of AI sign language recognition and AR technology represents a significant step toward a more inclusive world. As these technologies continue to evolve, they promise to break down communication barriers that have existed for centuries, creating new opportunities for connection and understanding between deaf and hearing communities.

December 27, 2024

The Quantum Advantage: How These AI Companies Can Benefit from Recent Quantum Breakthroughs

In a month that may be remembered as a turning point in technological history, December 2024 has delivered three groundbreaking quantum computing achievements that promise to fundamentally reshape the technological landscape. I recently covered them in my articles "Quantum Transmission: Engineers Merge Quantum Teleportation with Existing Internet Infrastructure" and "Quantum Leaps: How Scarring and Willow Chip Discoveries Signal Kurzweil's Technological Singularity." As tech giants and innovative startups rush to harness these breakthroughs, the implications extend far beyond the quantum computing market's projected 34.4% CAGR through 2030. The convergence of quantum computing and AI, catalyzed by these developments, isn't just evolving - it's experiencing a disruption that could redefine the boundaries of what's technologically possible.
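For readers who want a feel for what a 34.4% compound annual growth rate implies, here is a quick Python sketch. The six-year horizon (2024 through 2030) and the starting value of 1.0 are my assumptions for illustration; the projection cited above does not state a base year or market size here.

```python
def compound_growth(start_value, annual_rate, years):
    """Value after compounding `annual_rate` once per year for `years` years."""
    return start_value * (1 + annual_rate) ** years


# Assumed horizon: six compounding years, starting from a normalized size of 1.0.
multiplier = compound_growth(1.0, 0.344, 6)
print(f"Growth multiple after 6 years: {multiplier:.2f}x")
```

Under those assumptions, the market would end the period at a little under six times its starting size.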

The December 2024 quantum breakthroughs are set to change how companies leverage both quantum computing and AI technologies. Let's examine how these developments specifically impact key players who are already using both AI and quantum computing.

ZenaTech stands to benefit significantly from both Google's Willow chip breakthrough and the quantum scarring discovery. The company's drone-based wildfire tracking system, which currently processes vast amounts of terrain data, could be transformed by these advances in several ways:

First, the Willow chip's error correction breakthrough could allow ZenaTech to process their drone-collected data with unprecedented accuracy. Currently, their AI systems analyze hundreds of square miles of terrain data for fire management applications. With quantum-enhanced processing, they could not only analyze this data more quickly but also identify subtle patterns in temperature variations and vegetation changes that traditional computers might miss.

The quantum scarring phenomenon could prove particularly valuable for ZenaTech's data storage and retrieval systems. By leveraging these newly understood electron pathways, they could develop more efficient quantum memory systems for their massive datasets, enabling real-time analysis of drone-collected data across their entire network of Native American reservation monitoring systems.

IonQ's trapped ion technology appears perfectly positioned to capitalize on December's breakthroughs. The quantum scarring discovery aligns particularly well with their approach, as trapped ion systems naturally maintain longer coherence times than many competing technologies. This could allow IonQ to implement more stable quantum memory systems based on the newly understood electron behavior patterns.

Moreover, Northwestern's quantum teleportation breakthrough over existing fiber infrastructure could be transformative for IonQ's business model. Their trapped ion systems could now potentially be networked over standard internet infrastructure, dramatically reducing the cost and complexity of creating distributed quantum computing networks. This could accelerate their ability to offer quantum computing as a service, particularly for AI training applications that require massive computational resources.

The recent breakthroughs position Super Micro Computer uniquely at the intersection of classical and quantum computing. Google's Willow chip development, in particular, opens new possibilities for Super Micro's server architecture. They could develop hybrid systems that combine traditional high-performance computing with quantum co-processors, creating integrated solutions that leverage both technologies' strengths.

The demonstration of quantum teleportation over existing infrastructure aligns perfectly with Super Micro's expertise in building networked computing solutions. They could develop new server architectures specifically designed to support this quantum-classical integration, potentially becoming a key infrastructure provider for the emerging quantum internet.

The quantum scarring breakthrough has particular relevance for Quantum Corporation's storage technology development. Their Scalar i7 RAPTOR system could be enhanced by incorporating quantum-based storage techniques that leverage the newly understood electron behavior patterns. This could lead to storage systems that not only hold more data but also enable quantum-speed access to stored information.

The company could also leverage Northwestern's quantum teleportation breakthrough to develop new storage architectures that integrate quantum communication capabilities. This could enable their storage systems to serve as nodes in a quantum network, facilitating secure, high-speed data transfer between quantum and classical computing systems.

The quantum computing breakthroughs of December 2024 represent more than just technological achievements - they mark the beginning of a new era in computing and artificial intelligence. As companies like ZenaTech, IonQ, Super Micro Computer, and Quantum Corporation adapt and evolve to leverage these advances, we're witnessing the early stages of a transformation that will ripple across industries and redefine what's possible in environmental monitoring, data processing, communications, and storage.

December 26, 2024

AI for Everyone: Andrew Ng's Mission to Democratize Artificial Intelligence Education

In an era where artificial intelligence is rapidly transforming every industry, a new course which starts today is making waves in the educational landscape. "AI for Everyone," led by AI pioneer Andrew Ng, is addressing a critical gap in today's technological education – making AI accessible to everyone, regardless of their technical background.

Andrew Ng, a figure synonymous with AI innovation, brings unprecedented credentials to this educational initiative. As the founding lead of Google Brain and former chief scientist at Baidu, Ng has been at the forefront of major AI developments. His journey from Stanford professor to co-founding Coursera in 2012 reflects his commitment to democratizing education. Today, as the founder of DeepLearning.AI and General Partner at AI Fund, he continues to shape the future of AI development and education.

The timing of this course couldn't be more crucial. Traditional educational institutions are facing an unprecedented challenge: keeping pace with the lightning-speed advancement of AI technology. While universities typically take years to develop and update curricula, AI capabilities are evolving monthly, if not weekly. This misalignment has created a significant knowledge gap in the workforce, affecting both technical and non-technical professionals.

"AI for Everyone" addresses this challenge head-on. The course is structured into four comprehensive modules that cover everything from basic AI terminology to complex ethical considerations. What sets it apart is its accessibility – you don't need to be an engineer to understand it. The curriculum demystifies concepts like neural networks, machine learning, and deep learning, making them digestible for business leaders, policymakers, and professionals across all sectors.

Coursera's platform makes this vital education accessible to millions globally. With over 148 million learners worldwide and partnerships with more than 300 leading universities and companies, Coursera has established itself as a premier platform for online learning. Their model allows for flexible, affordable, and job-relevant education that can be accessed from anywhere in the world.

The course itself represents a broader shift in how we approach AI education. Rather than focusing solely on technical implementation, it emphasizes practical application, business strategy, and ethical considerations. Participants learn to identify AI opportunities within their organizations, understand AI's limitations, and navigate the societal implications of this transformative technology.

The curriculum also addresses one of today's most pressing concerns: the ethical deployment of AI. With modules covering discrimination, bias, and the societal impact of AI, the course ensures that learners understand not just the how, but the why and what-if of AI implementation.

Traditional education systems simply cannot adapt quickly enough to keep pace with AI advancements. Courses like "AI for Everyone" are becoming essential bridges, helping professionals stay relevant in a rapidly evolving technological landscape. They provide the agility and immediacy needed to address the skills gap created by AI's exponential growth.

For those interested in expanding their AI knowledge, regardless of their technical background, the course is available on Coursera's platform.

In a world where AI literacy is becoming as fundamental as digital literacy was a decade ago, initiatives like "AI for Everyone" are more than just courses – they're essential tools for professional survival and growth in the AI age.

December 24, 2024

Beyond the Glitch: Harnessing AI Hallucinations as a Tool for Innovation

What scientists once considered a flaw in artificial intelligence systems has emerged as an unexpected catalyst for innovation across multiple fields. While AI hallucinations—the generation of plausible but fabricated information—have caused concerns in chatbot interactions and public discourse, researchers are discovering their remarkable potential as tools for creative problem-solving and breakthrough discoveries.

The phenomenon that helped David Baker secure a Nobel Prize in Chemistry for his groundbreaking protein design work is now finding applications far beyond the realm of molecular biology. This transformation of a perceived weakness into a strength represents a paradigm shift in how we approach innovation and creative problem-solving.

In architecture, firms are beginning to experiment with AI hallucinations to generate novel building designs that challenge traditional constraints while adhering to physical laws. By feeding existing architectural patterns and environmental data into AI systems, designers can explore thousands of possible variations that might never occur to human architects. These systems can suggest innovative solutions for urban challenges like heat island effects or traffic flow optimization by "dreaming up" new street layouts and building configurations that traditional planning approaches might overlook.

Following the success in protein design, materials scientists are applying similar principles to discover new composite materials. By training AI models on existing material properties and letting them "hallucinate" new combinations, researchers are identifying novel compounds with unprecedented characteristics. This approach has already led to early successes in developing more efficient solar panel materials and stronger, lighter building components.

In the artistic realm, sound engineers and musicians are harnessing AI hallucinations to explore new sonic landscapes. By training models on various musical genres and acoustic patterns, they can generate entirely new instrumental combinations and harmonic structures. This technology is particularly promising for film scoring and ambient sound design, where unique atmospheric sounds are highly valued.

Environmental scientists are utilizing AI hallucinations to model possible climate scenarios and ecosystem interactions that haven't been observed in nature. These simulated environments help researchers predict how various species might adapt to changing conditions and identify potential intervention strategies for endangered ecosystems. The technology's ability to generate numerous variations of environmental conditions provides valuable insights for conservation efforts.

Building on the success in protein design, pharmaceutical researchers are expanding the use of AI hallucinations to imagine new molecular structures for drug development. This approach dramatically accelerates the traditional drug discovery process by generating and pre-screening thousands of potential compounds before laboratory testing begins. The technology has shown particular promise in developing targeted cancer therapies and antibiotics for drug-resistant bacteria.

Engineers are applying AI hallucinations to mechanical design problems, allowing machines to "dream up" new configurations for everything from robot joints to engine components. This approach has led to the discovery of more efficient mechanical systems that human engineers might not have considered, often resulting in reduced energy consumption and improved performance.

As our understanding of AI hallucinations evolves, researchers are developing more sophisticated ways to harness this phenomenon. The key lies in carefully constraining the hallucinations within relevant physical laws and practical limitations while maintaining their creative potential. This balance between unbounded creativity and practical utility represents the next frontier in AI-assisted innovation.
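One way to picture this balance is a generate-and-filter loop: let a generator propose freely, then keep only the proposals that pass hard constraints. The Python sketch below is purely illustrative. A random number generator stands in for the AI model, and the beam dimensions, the stiffness proxy, and the limits are hypothetical numbers I picked, not values from any of the research mentioned above.

```python
import random


def propose_candidates(n, seed=0):
    """Stand-in for a generative model: propose random rectangular beam sections."""
    rng = random.Random(seed)
    return [{"width_cm": rng.uniform(1, 30), "height_cm": rng.uniform(1, 30)}
            for _ in range(n)]


def satisfies_constraints(design, max_area_cm2=200.0, min_stiffness=5000.0):
    """Keep designs that use little material yet resist bending well enough."""
    width, height = design["width_cm"], design["height_cm"]
    area = width * height
    # Second moment of area of a rectangle (b * h^3 / 12) as a stiffness proxy.
    stiffness = width * height ** 3 / 12
    return area <= max_area_cm2 and stiffness >= min_stiffness


def generate_and_filter(n, seed=0):
    """Propose n designs and return only those that pass every constraint."""
    return [d for d in propose_candidates(n, seed) if satisfies_constraints(d)]


feasible = generate_and_filter(1000)
print(f"{len(feasible)} of 1000 proposals pass the constraints")
```

Most proposals are discarded, and that is the point: the generator is allowed to be wrong often, as long as the filter is strict and the survivors go on to human review and real-world testing.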

The rise of AI hallucinations as a tool for innovation challenges our traditional understanding of the creative process. Rather than viewing these machine-generated ideas as mere errors or glitches, scientists and innovators are increasingly recognizing them as valuable starting points for human-guided exploration and development.

The success of AI hallucinations in scientific discovery suggests we're only beginning to tap into their potential. As AI technology continues to advance, we can expect to see this approach applied to an even broader range of fields, from psychology to space exploration. The key to success lies not in replacing human creativity but in establishing a symbiotic relationship where AI hallucinations serve as a catalyst for human innovation.

This creative problem-solving represents a fundamental shift in how we approach innovation. By embracing the unexpected insights generated by AI hallucinations, we're opening new pathways to discovery that could help address some of humanity's most pressing challenges.