David Borish's Blog, page 7

December 23, 2024

The Patent Paradox: How AI's Legal Prowess Will Transform IP Generation

Since Q1 2024, we've witnessed an accelerating transformation in how artificial intelligence systems handle complex legal and technical work - a shift that sets the stage for automated patent generation at an unprecedented scale. A systematic analysis of recent AI breakthroughs reveals we're on the cusp of a fundamental change in how intellectual property is created.

The journey began with AI systems demonstrating mastery of legal reasoning. In early 2024, multiple AI models not only passed the bar exam but achieved scores in the top 10% of human test-takers. This wasn't just pattern matching - the systems showed sophisticated understanding of legal principles, precedent interpretation, and complex reasoning chains.

The most striking demonstration came from OpenAI's reasoning model o1, which showed it could produce comprehensive legal briefs matching the quality of top-tier law firms. Work that traditionally costs clients $8,000 and requires days of attorney time could be completed in 5 minutes for approximately $3 in API costs. The quality wasn't just acceptable - blind reviews by senior partners at major firms rated these AI-generated briefs as comparable to those produced by experienced attorneys.

The newly announced OpenAI o3 reasoning model, which I wrote about in my article "The Race to AGI: OpenAI's NEW o3 Raises the Bar for AI Reasoning", represents another leap forward. Early testing shows the system surpassing human expert performance across multiple technical domains:

Mathematics: Solving complex theoretical problems and proving new theorems

Computer Science: Generating optimized code and novel algorithms

Scientific Analysis: Synthesizing research findings and proposing new hypotheses

Engineering: Designing innovative technical solutions to complex problems

This broad technical capability, combined with deep legal knowledge, creates the perfect foundation for patent generation. The system can now identify novel technical innovations, assess their patentability, and craft legally precise patent applications - all without human intervention.

Traditional patent development is limited by human cognitive bandwidth. A skilled patent attorney might handle 30-40 patent applications per year. Early testing suggests AI systems could potentially generate thousands of valid patent applications daily across multiple technical domains. This isn't just faster - it's a fundamentally different scale of operation.

The economics are equally transformative. With API costs continuing to fall and processing power increasing, the marginal cost of generating each additional patent application approaches zero. This could democratize patent generation, allowing smaller companies and individual inventors to protect their innovations more effectively.

However, several significant challenges need to be addressed:

Patent Office Capacity: Patent offices worldwide aren't currently equipped to handle a potential flood of AI-generated applications. New processes and potentially AI-powered review systems will be needed.

Quality Control: While AI can generate patent applications rapidly, ensuring each one represents genuinely novel and non-obvious innovations requires sophisticated filtering mechanisms.

Legal Framework Updates: Current patent law assumes human inventors. Questions about AI attribution, ownership rights, and what constitutes "obvious to a person skilled in the art" need resolution.

Market Impact: The ability to generate patents at scale could reshape industries. Companies could rapidly build defensive patent portfolios, protect emerging technologies, and explore new innovation spaces systematically. However, this could also lead to patent thickets that impede innovation if not managed carefully.

As we look ahead, several transformative trends are emerging in the AI patent landscape. The rise of hybrid systems appears inevitable, where AI's generative capabilities merge with human strategic oversight to create the most effective patent development approach. These collaborative systems will leverage the strengths of both artificial and human intelligence, combining AI's processing power with human intuition and strategic thinking.

We're also witnessing the emergence of specialized AI systems focused on specific technical domains. These domain-specific models are demonstrating superior performance in generating high-quality patents within their areas of expertise, whether in biotechnology, semiconductor design, or renewable energy systems. Their deep understanding of particular fields enables them to identify truly novel innovations more effectively than general-purpose AI systems.

The business landscape is evolving as well, with innovative companies positioning themselves to offer AI-driven patent generation as a service. These enterprises are building sophisticated platforms that combine multiple AI models with automated workflow systems, making patent generation more accessible to businesses of all sizes.

Perhaps most intriguingly, the international patent landscape is becoming increasingly complex as different jurisdictions adopt varying approaches to AI-generated patents. Some countries are embracing AI innovation tools wholeheartedly, while others maintain more conservative stances, leading to a mosaic of regulatory frameworks that companies must navigate carefully.

For the patent system to adapt successfully to this new paradigm, several key steps are needed:

Updated legal frameworks that explicitly address AI-generated innovations

New quality metrics and validation processes for AI-generated patents

Enhanced patent office capabilities to handle increased volume

International coordination on AI patent policies

The transformation of patent generation through AI isn't just about speed or cost reduction - it represents a fundamental shift in how we approach innovation and intellectual property protection. As these systems continue to evolve throughout 2024 and beyond, they will likely reshape the innovation landscape in ways we're only beginning to understand.

The question isn't whether AI will transform patent generation, but how we'll adapt our legal and business systems to harness this capability effectively while maintaining the patent system's core purpose of promoting innovation.

December 19, 2024

Swimming with Sharks: How AI Drones are Making Beaches Safer

In the warm, shallow waters off Santa Barbara County's coast, an unexpected daily dance takes place between juvenile great white sharks and beach enthusiasts. These waters serve as natural nurseries for young sharks, attracted by the perfect combination of temperature and abundant food sources. Now, an innovative AI-powered system called SharkEye is helping humans and sharks share these waters more safely.

Developed by a diverse team at UC Santa Barbara's Benioff Ocean Science Laboratory, SharkEye combines drone technology with artificial intelligence to monitor and predict shark movements. The system employs standardized drone flights to capture video footage of nearshore waters, while machine learning models analyze the footage to detect great white sharks automatically.

"We want to forecast what kind of sharks might be coming our way to share the waves and the beach," explains Douglas McCauley, professor of marine science at UC Santa Barbara. The system doesn't just collect data – it actively shares it with the community through text messages, allowing beachgoers to make informed decisions about water activities.

Currently operating at Padaro Beach in Carpinteria, California, SharkEye represents a significant advancement in marine safety technology. The project's approach is particularly notable because it focuses on coexistence rather than conflict. Instead of viewing sharks as threats to be avoided, SharkEye helps humans adapt their behavior based on shark presence and patterns.
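The detect-then-notify flow described above can be sketched in a few lines. This is an illustration only, not SharkEye's actual code: the `Detection` record, the 0.8 confidence cutoff, the counting rule, and the alert wording are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One hypothetical detector output for a single video frame."""
    frame: int          # index of the video frame
    confidence: float   # detector score in [0, 1]


def sharks_in_flight(detections, min_confidence=0.8):
    """Estimate sharks seen as the largest number of confident
    detections that appear together in any single frame."""
    per_frame = {}
    for d in detections:
        if d.confidence >= min_confidence:
            per_frame[d.frame] = per_frame.get(d.frame, 0) + 1
    return max(per_frame.values(), default=0)


def alert_text(beach, count):
    """Compose the (assumed) text message sent to subscribers."""
    if count == 0:
        return f"{beach}: no sharks detected on the latest flight."
    noun = "shark" if count == 1 else "sharks"
    return f"{beach}: {count} {noun} detected on the latest flight."


# Made-up detections from one flight: two confident hits in frame 10,
# one low-confidence hit in frame 11, one confident hit in frame 12.
flight = [
    Detection(10, 0.95),
    Detection(10, 0.91),
    Detection(11, 0.42),
    Detection(12, 0.88),
]
print(alert_text("Padaro Beach", sharks_in_flight(flight)))
```

Counting the busiest single frame, rather than summing every detection, is one simple way to avoid counting the same shark repeatedly as it swims through consecutive frames.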

The system's implementation comes at a crucial time, as juvenile great white sharks increasingly aggregate in Southern California's coastal waters. These young sharks, drawn to the area's warm temperatures and abundant food supply of small fish and stingrays, often share space with surfers and swimmers.

While currently limited to one location, similar projects are emerging worldwide, suggesting a growing trend in AI-assisted marine safety. The success of SharkEye could pave the way for wider implementation of AI-driven shark monitoring systems at beaches globally.

As we continue to share our oceans with these magnificent predators, technologies like SharkEye demonstrate how AI can help foster safer coexistence between humans and marine life. The future of beach safety might just be in the watchful eyes of AI-powered drones circling overhead.

December 18, 2024

Learning to Forget: How Nature-Inspired AI is Transforming Machine Learning

In the realm of artificial intelligence, a fundamental challenge has long persisted: the inability of AI systems to selectively remember and forget information, much like humans do. While we naturally filter out the constant stream of unnecessary details in our daily lives, AI systems have traditionally been forced to process everything indiscriminately, leading to significant limitations in their performance and capabilities. Now, Sakana AI has unveiled a breakthrough that could fundamentally change this landscape: Neural Attention Memory Models (NAMMs).

Drawing inspiration from human cognition, NAMMs represent a revolutionary approach to memory management in transformer models. Traditional transformers, which form the backbone of many modern AI systems, have been constrained by their need to retain and process all input information equally. This limitation has particularly affected their ability to handle extended tasks and complex scenarios, often resulting in diminished performance and excessive resource consumption.

The innovation behind NAMMs lies in their ability to make intelligent decisions about information retention, much like the human brain. These models employ neural network classifiers that actively decide which information to keep and which to discard, creating a more efficient and effective system for processing information. This approach marks a significant departure from previous attempts at memory management, which often relied on fixed rules or predetermined strategies.

What makes NAMMs particularly remarkable is their development through evolutionary optimization. Unlike traditional machine learning approaches that rely on gradient-based methods, NAMMs evolve through a process of mutation and selection, allowing them to optimize their performance even when dealing with binary decisions about memory retention. This evolutionary approach has proven surprisingly effective, enabling the models to develop sophisticated strategies for managing information.
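To see why evolution suits keep-or-discard decisions, consider a toy version of the problem. The sketch below is not Sakana AI's implementation: the two-feature tokens, the hidden "useful" rule, the linear scorer, and the fitness function are all invented for illustration. It runs a simple (1 + lambda) evolution strategy, which only needs to measure how well a set of binary choices worked and never needs a gradient.

```python
import random

# Toy data (hypothetical): each cached token has two features, and a
# hidden rule marks some tokens as useful for later steps.
_data_rng = random.Random(0)
TOKENS = [(_data_rng.random(), _data_rng.random()) for _ in range(200)]
USEFUL = [f0 + 0.5 * f1 > 0.9 for f0, f1 in TOKENS]


def keep_mask(weights, tokens):
    """Binary decision per token: keep it when the linear score is positive."""
    w0, w1, bias = weights
    return [w0 * f0 + w1 * f1 + bias > 0 for f0, f1 in tokens]


def fitness(weights):
    """+1 for every useful token kept, -1 for every useless token kept.

    The hard threshold in keep_mask makes this non-differentiable,
    so gradient-based training does not apply directly.
    """
    score = 0
    for kept, useful in zip(keep_mask(weights, TOKENS), USEFUL):
        if kept:
            score += 1 if useful else -1
    return score


def evolve(generations=60, children=20, sigma=0.3, seed=1):
    """(1 + lambda) evolution strategy: mutate the parent, keep the best."""
    rng = random.Random(seed)
    parent = [0.0, 0.0, 0.0]   # starts by keeping nothing (fitness 0)
    best = fitness(parent)
    for _ in range(generations):
        candidates = [
            [w + rng.gauss(0, sigma) for w in parent]
            for _ in range(children)
        ]
        top = max(candidates, key=fitness)
        top_score = fitness(top)
        if top_score >= best:   # accept ties so the search can drift
            parent, best = top, top_score
    return parent, best


weights, best_score = evolve()
print("evolved weights:", weights)
print("fitness:", best_score, "out of a possible", sum(USEFUL))
```

The selection step compares whole candidates by their measured score, so the same loop works for any retention policy whose quality can be evaluated, however discontinuous its decisions are.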

The practical implications of this breakthrough are already evident in the system's performance across various applications. When implemented with language models, NAMMs demonstrate superior performance in tasks ranging from natural language processing to code generation. Perhaps more impressively, these memory models show an unprecedented ability to transfer their capabilities across different domains and model architectures without additional training.

This transferability represents a significant advancement in the field. A NAMM trained on language tasks can be successfully applied to vision systems or reinforcement learning scenarios, maintaining its efficiency benefits while adapting to entirely new types of information processing. This versatility suggests that the principles underlying NAMMs capture something fundamental about how information should be processed and retained, regardless of the specific domain.

The system's approach to memory management reveals interesting patterns that mirror human cognitive processes. In language tasks, NAMMs learn to retain crucial contextual information while discarding grammatical redundancies. When processing code, they identify and remember essential structural elements while pruning unnecessary comments and boilerplate content. This selective retention demonstrates a level of sophistication that goes beyond simple rule-based systems.

Looking ahead, this breakthrough opens new possibilities for AI development. The ability to selectively retain and process information could lead to more efficient and capable AI systems across various applications, from scientific research to educational technology. However, the true significance of NAMMs lies not just in their immediate applications but in what they reveal about the potential for creating more naturalistic approaches to artificial intelligence.

As we continue to draw inspiration from natural cognitive processes, breakthroughs like NAMMs suggest that the future of AI may lie not in creating ever-larger models, but in developing smarter, more efficient systems that can intelligently manage their resources. This shift towards more selective and efficient processing could mark a new chapter in the development of artificial intelligence, one that moves us closer to systems that can truly emulate the sophistication of natural intelligence.

Click here to read the full paper "An Evolved Universal Transformer Memory"

December 17, 2024

The Future of Weather Prediction: Google's AI Model Achieves New Heights in Accuracy

Google DeepMind has achieved a significant milestone in weather forecasting with their new AI system GenCast, which outperforms the world's leading operational weather prediction system. The model demonstrates superior accuracy in forecasting weather patterns up to 15 days ahead, marking a turning point in how we predict and prepare for weather events.

GenCast, detailed in a recent Nature publication, surpasses the European Centre for Medium-Range Weather Forecasts' (ECMWF) ENS system, long considered the gold standard in weather forecasting. The AI model showed better performance on 97.2% of evaluation metrics, particularly excelling in predicting extreme weather events and tropical cyclone paths.

What sets GenCast apart is its ability to generate highly accurate forecasts in just 8 minutes on a single Google Cloud TPU v5 device. This represents a dramatic improvement in computational efficiency compared to traditional methods that require hours on supercomputers with thousands of processors.

The system's success lies in its innovative approach to weather modeling. Unlike previous AI weather models, GenCast uses a sophisticated diffusion model architecture that can generate multiple realistic weather scenarios. This allows it to better capture the uncertainty inherent in weather forecasting and provide more reliable predictions of extreme events.
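The practical payoff of generating many scenarios is that probabilities come from simple counting. The sketch below does not call GenCast; `sample_scenario` is a hypothetical stand-in that draws a peak wind speed from a Gaussian, and the ensemble size and threshold are arbitrary choices for illustration.

```python
import random
import statistics


def sample_scenario(rng):
    """Hypothetical stand-in for one generated forecast:
    peak wind speed in m/s for a single location."""
    return rng.gauss(22.0, 6.0)


def ensemble_summary(n_members, threshold, seed=0):
    """Summarize an ensemble: central estimate, spread (uncertainty),
    and the fraction of members exceeding a threshold."""
    rng = random.Random(seed)
    members = [sample_scenario(rng) for _ in range(n_members)]
    exceed = sum(1 for m in members if m > threshold) / n_members
    return {
        "mean": statistics.mean(members),
        "spread": statistics.stdev(members),
        "p_exceed": exceed,
    }


summary = ensemble_summary(n_members=50, threshold=30.0)
print(summary)
```

A single deterministic forecast would give only one number; the ensemble also reports how much the scenarios disagree and how likely an extreme value is, which is the information emergency planners need.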

Perhaps most notably, GenCast shows particular strength in predicting tropical cyclones, offering a 12-hour advantage in accuracy compared to existing systems. This improvement could provide crucial extra time for communities to prepare for severe weather events.

The implications of this advancement extend beyond traditional weather forecasting. The system shows promise for renewable energy planning, helping wind farms better predict power generation potential. It also demonstrates superior capability in predicting extreme weather events, which could prove invaluable for disaster preparedness and climate adaptation strategies.

As climate change continues to impact weather patterns globally, tools like GenCast represent a significant step forward in our ability to predict and prepare for weather events. Google DeepMind has made the model's code and weights publicly available, allowing the wider weather forecasting community to build upon this breakthrough.

This development signals a new era in weather prediction, where artificial intelligence not only complements but potentially surpasses traditional forecasting methods. As we face increasingly complex weather patterns and climate challenges, such advances in AI-powered forecasting could prove crucial for protecting lives and property worldwide.

Click here to read the full paper "Probabilistic weather forecasting with machine learning"

December 16, 2024

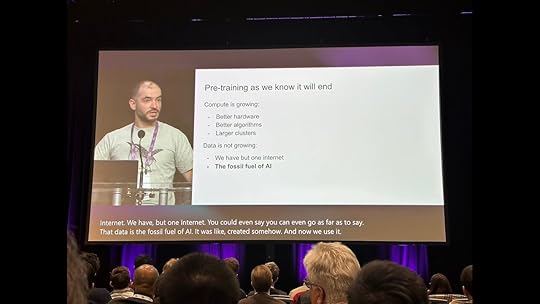

AI's Next Chapter: Moving Past the Internet's Data Limits

In a surprising turn that challenges the prevailing narrative of AI's unstoppable growth, Ilya Sutskever, OpenAI's former chief scientist and founder of Safe Superintelligence Inc., has identified a critical bottleneck in AI development: we're running out of training data. During his recent appearance at the Neural Information Processing Systems (NeurIPS) conference in Vancouver, Sutskever presented this sobering reality that could reshape the future of artificial intelligence.

Sutskever's analysis hinges on a compelling analogy: data is the fossil fuel of AI. Just as oil is a finite resource that powered the industrial revolution, the internet contains a limited amount of human-generated content that currently powers AI advancement. "We've achieved peak data and there'll be no more," Sutskever explains. "We have to deal with the data that we have. There's only one internet."

This limitation creates an intriguing paradox. While computing power continues to expand through better hardware, improved algorithms, and larger clusters, the fundamental ingredient - training data - remains fixed. Current AI models rely heavily on pre-training, a process where they learn patterns from vast amounts of unlabeled data sourced from the internet, books, and other digital content. As these sources reach their limits, the industry faces a crucial inflection point.

Looking toward solutions, Sutskever outlines several potential paths forward. One particularly fascinating parallel he draws is to evolutionary biology. He points to research showing how hominids developed a distinctly different brain-to-body mass scaling pattern compared to other mammals. This biological precedent suggests that AI might similarly discover new approaches to learning and scaling beyond traditional pre-training methods.

The next generation of AI systems, according to Sutskever, will be fundamentally different from today's models. He predicts they will demonstrate true reasoning capabilities rather than just pattern matching. These systems will be "agentic in real ways," meaning they'll be able to autonomously perform tasks, make decisions, and interact with software on their own.

A key characteristic of these future systems is their unpredictability. "The more a system reasons, the more unpredictable it becomes," Sutskever notes, comparing it to how advanced chess AIs make moves that even grandmasters can't anticipate. These systems will understand concepts from limited data and avoid the confusion that plagues current models.

Several potential solutions are emerging to address the data limitation:

Synthetic Data Generation: Creating artificial training data that maintains the quality and diversity needed for effective AI training.

Agent-Based Learning: Developing systems that learn through interaction and experience rather than static data.

Inference-Time Computation: Shifting focus from pre-training to real-time learning and adaptation.

More Efficient Training Methods: Developing algorithms that can learn more from less data.

The implications of this data ceiling extend beyond technical considerations. Companies and researchers must now rethink their approaches to AI development. The era of simply feeding more data into larger models is ending, pushing the field toward more innovative and efficient training methods.

As AI development approaches this data limit, the industry stands at a crucial juncture. The challenge isn't just technical but conceptual - requiring a fundamental rethinking of how artificial intelligence learns and develops. Sutskever's insights suggest that while the current path of AI development may be reaching its limits, new approaches could lead to even more capable systems.

The future of AI won't be determined by who has access to the most data, but by who can develop the most efficient and innovative ways to learn from limited information. This shift could democratize AI development, making it less dependent on massive data collections and more focused on algorithmic innovations and novel learning approaches.

As we navigate this transition, the AI community must balance the push for advancement with the reality of our resources. The next chapter in AI development may not be about bigger datasets, but about smarter ways to learn from the information we already have.

December 11, 2024

Learning Curve: Student AI Usage Outpaces Academic Integration

A recent survey by the Digital Education Council reveals what many educators already suspected: artificial intelligence has become an integral part of student life. The study found that 86% of students now use AI in their studies, with nearly a quarter utilizing these tools daily.

This widespread adoption reminds me of my own experience as a programming student. I was six months away from completing my Visual Basic course when Microsoft announced VB.NET, effectively making our entire curriculum outdated overnight. When I approached my professor about this shift, hoping to learn the newer technology, I was met with a firm stance that we would stick to the original syllabus. This experience highlighted a crucial challenge in education: the gap between rapid technological advancement and academic adaptation.

Today's students face a similar disconnect. While they actively embrace AI tools - with ChatGPT leading at 66% usage, followed by Grammarly and Microsoft Copilot at 25% each - their institutions often struggle to keep pace. The survey found that 80% of students feel their universities' AI integration falls short of expectations.

Students aren't just using AI casually - they're integrating it deeply into their academic workflow. The top uses include information searches (69%), grammar checking (42%), and document summarization (33%). On average, students utilize 2.1 AI tools for their coursework, suggesting a sophisticated understanding of AI's capabilities.

However, this adoption comes with its own challenges. Despite their regular use of AI tools, 58% of students report that their AI knowledge and skills are insufficient. Even more concerning, 48% feel unprepared for an AI-enabled workforce. These statistics suggest that while students are quick to adopt AI technology, they need more structured guidance in using it effectively.

Students are clear about their expectations: 73% want faculty training in AI tools, 72% desire student training and more AI literacy courses, and 71% want involvement in decisions about AI tool implementation. These requests reflect a mature understanding that AI isn't just a passing trend but a fundamental shift in education.

Alessandro Di Lullo, CEO of the Digital Education Council, emphasizes that universities must now view AI as "core infrastructure rather than a tool." This perspective shift is crucial for preparing students for an AI-driven future while maintaining educational quality and integrity.

The parallel between my VB.NET experience and today's AI integration challenges highlights a crucial difference in the pace of technological change. While my university could have waited six months or even a year to adopt VB.NET without significant consequences, today's AI landscape offers no such luxury. The technology is evolving at an unprecedented rate, with new models and capabilities emerging almost weekly. Schools that delay AI integration risk falling irretrievably behind their more adaptive peers.

This disparity is already evident. While some institutions like American University's Kogod School of Business are actively "infusing AI into every part of our curriculum" and Columbia Business School is developing specialized AI tools (Business Insider, 2024), other universities have essentially given up on regulating or incorporating AI, choosing instead to turn a blind eye to its use (Warner University, 2024). This stark contrast in approaches could create lasting gaps in educational quality and student preparedness.

The survey data from the Digital Education Council (2024) becomes even more concerning in this context. With 58% of students rating their AI knowledge as insufficient and 48% feeling unprepared for an AI-enabled workforce, institutions that resist or delay AI integration may be creating a competitive disadvantage that grows exponentially over time.

Rather than resisting change, institutions need to embrace it while providing proper guidance and structure, understanding that in the AI era, the cost of delay isn't just measured in months but in missed opportunities that may never be recovered.

December 9, 2024

The Coming Wave: Technology, Power, and the Twenty-first Century's Greatest Dilemma

The technological revolution unfolding before us isn't just another wave of innovation – it's a tsunami of transformative power that could either elevate humanity to unprecedented heights or sweep away our existing social order. This is the stark warning from Mustafa Suleyman, co-founder of DeepMind and Inflection AI, in his crucial new book "The Coming Wave."

Unlike previous technological revolutions, this one centers on two fundamental forces of nature: intelligence and life itself. Through artificial intelligence and synthetic biology, humanity is gaining godlike powers to reshape both thought and matter at their most basic levels. While these advances promise extraordinary benefits – from medical breakthroughs to clean energy solutions – they also introduce existential risks that our current institutions are woefully unprepared to handle.

Suleyman identifies several characteristics that make this wave particularly dangerous. These technologies evolve at dizzying speeds, enable massively asymmetric impacts (where small groups can wield enormous power), work across every domain of society, and increasingly display signs of autonomy. Perhaps most alarmingly, they are diffusing faster than any consumer technology in history, putting unprecedented capabilities into the hands of potential bad actors.

The dilemma we face is stark: How do we harness these technologies' immense benefits while preventing their misuse? According to Suleyman, we're caught between two nightmarish scenarios. Either we submit to a surveillance state that stamps out innovation in the name of security, or we continue our current trajectory toward eventual catastrophe through runaway technological development.

The solution, Suleyman argues, lies in what he calls "containment" – a comprehensive framework of ten concentric layers designed to maintain human control over these powerful technologies. These layers range from technical safety measures built into the tools themselves to international treaties and new global institutions. He emphasizes that containment isn't about stopping progress, but rather about ensuring technology serves humanity's interests rather than threatening our existence.

Time is of the essence. What once took centuries of technological development now happens in months or even days. The wave is already building, and our window for establishing effective containment measures is rapidly closing. While Suleyman acknowledges that perfect containment may seem impossible given technology's inherent tendency to spread, he argues that we have no choice but to try. The alternative – allowing these technologies to proliferate unchecked – is simply too dangerous to contemplate.

The message is clear: We stand at a pivotal moment in human history. The coming wave of AI and synthetic biology will transform our world irrevocably. Whether that transformation leads to utopia or dystopia depends entirely on our ability to develop and implement effective containment strategies before it's too late.

Click here to learn more about The Coming Wave

December 6, 2024

From Lab to Life: AI's Role in Making IVF More Accessible

The path to parenthood through IVF has traditionally been marked by uncertainty - both medical and financial. With costs ranging from $15,000 to over $30,000 per cycle in the US, and many couples requiring multiple attempts, the financial burden can be overwhelming. However, an innovative startup called Gaia is changing this landscape by harnessing the power of artificial intelligence.

At the heart of Gaia's approach is a sophisticated machine learning model trained on millions of data points from sources like the CDC and the Human Fertilisation and Embryology Authority. This AI system analyzes variables including medical history, demographics, and lifestyle factors to predict the likelihood of successful pregnancy over three IVF cycles.

The company uses these predictions to create a unique payment model that protects hopeful parents from crushing financial risk. Members pay an upfront "protection fee" averaging $7,600 and a fixed per-cycle cost around $22,700. The game-changing element? If treatment doesn't result in a baby after three cycles, members only pay the protection fees.
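A rough way to see how such a plan shifts risk is to compute a member's expected outlay. The sketch below is a simplified reading, not Gaia's pricing model: it assumes the protection fee is always paid, the treatment cost is a single fixed amount owed only if treatment succeeds within three cycles, and each cycle succeeds independently with the same probability. The function name and the example probabilities are made up for illustration.

```python
def expected_member_cost(p_cycle, protection_fee=7600.0,
                         treatment_cost=22700.0, cycles=3):
    """Return (chance of success within `cycles`, expected total cost).

    Assumptions (not from Gaia): independent cycles with equal success
    probability; treatment cost is owed only on success.
    """
    p_success = 1.0 - (1.0 - p_cycle) ** cycles
    expected = protection_fee + p_success * treatment_cost
    return p_success, expected


for p in (0.2, 0.3, 0.4):
    p_success, cost = expected_member_cost(p)
    print(f"per-cycle {p:.0%}: success within 3 cycles {p_success:.1%}, "
          f"expected cost ${cost:,.0f}")
```

Under these assumptions the worst case for a member is capped at the protection fee, which is why an accurate success prediction matters so much to the provider: it carries the cost of the cycles that do not work out.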

"If they don't have a child, they don't pay for their treatment," explains Nader AlSalim, Gaia's CEO and co-founder, who experienced the financial strain of IVF firsthand before starting the company. His personal journey, which cost over $50,000, inspired him to create a more accessible solution.

The impact of this AI-driven approach extends beyond individual families. By removing substantial financial barriers, Gaia is making fertility treatment accessible to many who previously couldn't consider it. The company's recent expansion into the US market marks a significant step toward broader accessibility.

Looking ahead, AI's role in fertility treatment continues to expand. Researchers are developing automated systems and advanced embryo screening methods that could reduce the number of cycles needed for success. These technological advances, combined with innovative financial models like Gaia's, are transforming what's possible for people struggling with infertility.

For the one in six adults who experience infertility, these developments represent more than just technological progress - they represent hope. As AI continues to enhance both the science and accessibility of fertility treatments, the dream of parenthood becomes attainable for more people than ever before.

December 5, 2024

Google's Genie 2 AI: Turning Static Images into Living, Playable Worlds

Google DeepMind has unveiled Genie 2, an advanced AI system that transforms single images into interactive 3D environments. This powerful technology represents a significant advancement in artificial intelligence training, offering unprecedented possibilities for developing and testing AI agents in diverse virtual worlds.

At its core, Genie 2 operates by taking a single prompt image and converting it into a fully interactive 3D environment that responds to keyboard and mouse inputs. What makes this system particularly impressive is its ability to maintain consistent physics, lighting, and object interactions while generating new content on the fly for up to a minute of gameplay.

The system demonstrates remarkable capabilities across various domains. It can handle complex physics simulations, including water effects, smoke behavior, and gravity. It maintains object permanence, remembering areas that are no longer in view and accurately recreating them when players return. Perhaps most impressively, it can generate NPCs (non-player characters) that exhibit intelligent behaviors and interactions.

Future Applications:

Game Development: Game designers could rapidly prototype concepts by simply inputting concept art, receiving playable environments instantly

Architecture Visualization: Architects could turn blueprints into explorable 3D spaces

Education: Teachers could create interactive historical reconstructions or scientific simulations

Virtual Training: Companies could generate custom training scenarios for employees

Urban Planning: Cities could visualize and test proposed developments

Film Pre-visualization: Directors could quickly test different scene layouts and camera angles

As Genie 2 continues to evolve, we can expect several key improvements to enhance its capabilities. The current visual output, while functional, will likely advance to achieve crisp 4K resolution or beyond, creating more immersive and detailed environments.

The system's current one-minute generation limit should expand significantly as computational power and algorithms improve, allowing for extended gameplay sessions and deeper world exploration. We'll likely witness more sophisticated physics simulations that can handle complex interactions between multiple objects and elements, alongside more fluid and lifelike character animations that better mimic natural movement.

The technology should also develop an enhanced ability to maintain consistent world details over longer periods, remembering and accurately recreating previously generated areas with greater fidelity. Perhaps most importantly, processing speeds will increase dramatically, enabling real-time generation and response that matches the immediacy of traditional video games, making the experience seamless for both human players and AI agents.

The technology's greatest potential may lie in AI training. By providing a practically unlimited variety of training environments, Genie 2 could help develop more adaptable and capable AI systems that can handle unexpected situations and transfer their learning to real-world applications.

Read the full announcement from Google DeepMind: Genie 2: A large-scale foundation world model

December 3, 2024

Smart Buildings Get Smarter: AI's Key Role in Real Estate Sustainability

Artificial intelligence is emerging as a crucial tool in the commercial real estate sector's battle against climate change. With buildings accounting for approximately 40% of global energy-related carbon emissions, property managers and owners are turning to AI-powered solutions to optimize energy usage and reduce their environmental impact.

Modern commercial buildings are equipped with numerous smart devices and sensors that generate vast amounts of data. AI systems process this information to make real-time adjustments to building operations, particularly in energy-intensive systems like HVAC. These intelligent platforms can predict usage patterns, adjust temperature settings based on occupancy, and identify potential equipment failures before they occur.
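To make the occupancy idea concrete, here is a minimal Python sketch of how a platform might pick a cooling setpoint from expected occupancy. It is an illustration only, not how any particular product works: the function names, the averaging "model", the 23°C and 27°C setpoints, and the five-person threshold are all assumptions, and a real system would use a trained forecasting model and live sensor feeds.

```python
from statistics import mean


def predict_occupancy(history, hour):
    """Estimate occupancy for an hour of day by averaging past counts.

    `history` is a list of (hour_of_day, people_counted) pairs.
    This simple average stands in for a real forecasting model (assumption).
    """
    samples = [count for h, count in history if h == hour]
    return mean(samples) if samples else 0.0


def cooling_setpoint_c(expected_occupancy, occupied_c=23.0, setback_c=27.0, threshold=5):
    """Use the comfort setpoint when the zone is expected to be occupied,
    otherwise relax to a setback temperature to save energy.
    All default values are hypothetical."""
    return occupied_c if expected_occupancy >= threshold else setback_c


# Hypothetical sensor history: busy at 9:00, nearly empty at 22:00.
history = [(9, 40), (9, 44), (9, 36), (22, 0), (22, 2), (22, 1)]

for hour in (9, 22):
    expected = predict_occupancy(history, hour)
    print(f"{hour:02d}:00 expected occupancy {expected}, setpoint {cooling_setpoint_c(expected)} C")
```

The design choice here is to separate prediction from control, so the simple average could later be swapped for a better model without touching the setpoint logic.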

The impact extends beyond day-to-day operations. AI tools are now helping property managers make strategic decisions about their building portfolios. By analyzing factors such as energy consumption patterns, maintenance records, and occupancy rates, these systems can recommend which properties should be retrofitted or sold to achieve sustainability goals.
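A rough sketch of that kind of portfolio screening might look like the following. It ranks buildings by energy use intensity (annual energy divided by floor area) and pairs that with occupancy to suggest an action. The `Building` class, the sample portfolio, the 200 kWh/m² limit, and the 60% occupancy cutoff are hypothetical values chosen for illustration; real tools would weigh many more factors, including maintenance records.

```python
from dataclasses import dataclass


@dataclass
class Building:
    name: str
    annual_kwh: float
    floor_area_m2: float
    occupancy_rate: float  # fraction of space occupied, 0.0 to 1.0

    @property
    def eui(self) -> float:
        """Energy use intensity in kWh per square meter per year."""
        return self.annual_kwh / self.floor_area_m2


def recommend(building, eui_limit=200.0, min_occupancy=0.6):
    """Suggest an action from energy intensity and occupancy.
    Thresholds are illustrative assumptions, not industry standards."""
    if building.eui <= eui_limit:
        return "keep"
    if building.occupancy_rate >= min_occupancy:
        return "retrofit"
    return "review for sale"


# Hypothetical three-building portfolio.
portfolio = [
    Building("Tower A", annual_kwh=500_000, floor_area_m2=2_000, occupancy_rate=0.90),
    Building("Plaza B", annual_kwh=300_000, floor_area_m2=2_000, occupancy_rate=0.95),
    Building("Annex C", annual_kwh=480_000, floor_area_m2=1_500, occupancy_rate=0.40),
]

# List the least efficient buildings first.
for b in sorted(portfolio, key=lambda b: b.eui, reverse=True):
    print(f"{b.name}: {b.eui:.0f} kWh/m2 per year, {recommend(b)}")
```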

Compliance reporting, often a complex and time-consuming process, has been simplified through AI-powered platforms that automatically track and report environmental performance metrics. These systems can monitor progress toward sustainability targets and ensure adherence to evolving environmental regulations.

The technology is also making waves in new construction projects. AI algorithms can analyze building materials and construction methods to identify options with lower carbon footprints, helping developers make more sustainable choices from the ground up.

However, challenges remain. The effectiveness of AI systems depends heavily on the quality and quantity of data available. Building managers must ensure their data collection methods are robust and comprehensive. Additionally, while the initial investment in AI technology can be significant, the long-term benefits in energy savings and improved building performance often justify the cost.

As we move toward a net-zero future, the integration of AI in building management isn't just an option – it's becoming a necessity. These intelligent systems are proving to be invaluable allies in the quest for sustainable real estate, offering precise, data-driven solutions to reduce carbon emissions while maintaining optimal building performance.