Jeremy Keith's Blog, page 82

March 15, 2017

Progressive Web App questions

I got a nice email recently from Colin van Eenige. He wrote:

For my graduation project I���m researching the development of Progressive Web Apps and found your offline book called resilient web design. I was very impressed by the implementation of the website and it really was a nice experience.

I���m very interested in your vision on progressive web apps and what capabilities are waiting for us regarding offline content. Would it be fine if I���d send you some questions?

I said that would be fine, although I couldn’t promise a swift response. He sent me four questions. I finally got ‘round to sending my answers…

1. https://resilientwebdesign.com/ is an offline web book (progressive web app). What was the primary reason make it available like this (besides the other formats)?

Well, given the subject matter, it felt right that the canonical version of the book should be not just online, but made with the building blocks of the web. The other formats are all nice to have, but the HTML version feels (to me) like the “real” book.

Interestingly, it wasn’t too much trouble for people to generate other formats from the HTML (ePub, MOBI, PDF), whereas I think trying to go in the other direction would be trickier.

As for the offline part, that felt like a natural fit. I had already done that with a previous book of mine, HTML5 For Web Designers, which I put online a year or two after its print publication. In that case, I used AppCache for the offline functionality. AppCache is horrible, but this use case might be one of the few where it works well: a static book that’s never going to change. Cache invalidation is one of the worst parts of using AppCache so by not having any kinds of updates at all, I dodged that bullet.

But when it came time for Resilient Web Design, a service worker was definitely the right technology. Still, I’ve got AppCache in there as well for the browsers that don’t yet support service workers.

2. What effect you you think Progressive Web Apps will have on content consuming and do you think these will take over the purpose of some Native Apps?

The biggest effect that service workers could have is to change the expectations that people have about using the web, especially on mobile devices. Right now, people associate the web on mobile with long waits and horrible spammy overlays. Service workers can help solve that first part.

If people then start adding sites to their home screen, that will be a great sign that the web is really holding its own. But I don’t think we should get too optimistic about that: for a user, there’s no difference between a prompt on their screen saying “add to home screen” and a prompt on their screen saying “download our app”���they’re equally likely to be dismissed because we’ve trained people to dismiss anything that covers up the content they actually came for.

It’s entirely possible that websites could start taking over much of the functionality that previously was only possible in a native app. But I think that inertia and habit will keep people using native apps for quite some time.

The big exception is in markets where storage space on devices is in short supply. That’s where the decision to install a native app isn’t taken likely (given the choice between your family photos and an app, most people will reject the app). The web can truly shine here if we build lightweight, performant services.

Even in that situation, I’m still not sure how many people will end up adding those sites to their home screen (it might feel so similar to installing a native app that there may be some residual worry about storage space) but I don’t think that’s too much of a problem: if people get to a site via search or typing, that’s fine.

I worry that the messaging around “progressive web apps” is perhaps over-fetishising the home screen. I don’t think that’s the real battleground. The real battleground is in people’s heads; how they perceive the web and how they perceive native.

After all, if the average number of native apps installed in a month is zero, then that’s not exactly a hard target to match. :-)

3. What is your vision regarding Progressive Web Apps?

For me, progressive web apps don’t feel like a separate thing from making websites. I worry that the marketing of them might inflate expectations or confuse people. I like the idea that they’re simply websites that have taken their vitamins.

So my vision for progressive web apps is the same as my vision for the web: something that people use every day for all sorts of tasks.

I find it really discouraging that progressive web apps are becoming conflated with single page apps and the app shell model. Those architectural decisions have nothing to do with service workers, HTTPS, and manifest files. Yet I keep seeing the concepts used interchangeably. It would be a real shame if people chose not to use these great technologies just because they don’t classify what they’re building as an “app.”

If anything, it’s good ol’ fashioned content sites (newspapers, wikipedia, blogs, and yes, books) that can really benefit from the turbo boost of service worker+HTTPS+manifest.

I was at a conference recently where someone was given a talk encouraging people to build progressive web apps but discouraging people from doing it for their own personal sites. That’s a horrible, elitist attitude. I worry that this attitude is being codified in the term “progressive web app”.

4. What is the biggest learning you���ve had since working on Progressive Web Apps?

Well, like I said, I think that some people are focusing a bit too much on the home screen and not enough on the benefits that service workers can provide to just about any website.

My biggest learning is that these technologies aren’t for a specific subset of services, but can benefit just about anything that’s on the web. I mean, just using a service worker to explicitly cache static assets like CSS, JS, and some images is a no-brainer for almost any project.

So there you go���I’m very excited about the capabilities of these technologies, but very worried about how they’re being “sold”. I’m particularly nervous that in the rush to emulate native apps, we end up losing the very thing that makes the web so powerful: URLs.

March 13, 2017

In AMP we trust

AMP Conf was one of those deep dive events, with two days dedicated to one single technology: AMP.

Except AMP isn’t really one technology, is it? And therein lies the confusion. This was at the heart of the panel I was on. When we talk about AMP, we could be talking about one of three things:

The AMP format. A bunch of web components. For instance, instead of using an img element on an AMP page, you use an amp-img element instead.

The AMP rules. There’s one JavaScript file, hosted on Google’s servers, that turns those web components from spans into working elements. No other JavaScript is allowed. All your styles must be in a style element instead of an external file, and there’s a limit on what you can do with those styles.

The AMP cache. The source of most confusion���and even downright enmity���this is what’s behind the fact that when you launch an AMP result from Google search, you don’t go to another website. You see Google’s cached copy of the page instead of the original.

The first piece of AMP���the format���is kind of like a collection of marginal gains. Where the img element might have some performance issues, the amp-img element optimises for perceived performance. But if you just used the AMP web components, it wouldn’t be enough to make your site blazingly fast.

The second part of AMP���the rules���is where the speed gains start to really show. You can’t have an external style sheet, and crucially, you can’t have any third-party scripts other than the AMP script itself. This is key to making AMP pages super fast. It’s not so much about what AMP does; it’s more about what it doesn’t allow. If you never used a single AMP component, but stuck to AMP’s rules disallowing external styles and scripts, you could easily make a page that’s even faster than what AMP can do.

At AMP Conf, Natalia pointed out that The Guardian’s non-AMP pages beat out the AMP pages for performance. So why even have AMP pages? Well, that’s down to the third, most contentious, part of the AMP puzzle.

The AMP cache turns the user experience of visiting an AMP page from fast to instant. While you’re still on the search results page, Google will pre-render an AMP page in the background. Not pre-fetch, pre-render. That’s why it opens so damn fast. It’s also what causes the most confusion for end users.

From my unscientific polling, the behaviour of AMP results confuses the hell out of people. The fact that the page opens instantly isn’t the problem���far from it. It’s the fact that you don’t actually go to an another page. Technically, you’re still on Google. An analogous mental model would be an RSS reader, or an email client: you don’t go to an item or an email; you view it in situ.

Well, that mental model would be fine if it were consistent. But in Google search, only some results will behave that way (the AMP pages) and others will behave just like regular links to other websites. No wonder people are confused! Some search results take them away and some search results keep them on Google …even though the page looks like a different website.

The price that we pay for the instantly-opening AMP pages from the Google cache is the URL. Because we’re looking at Google’s pre-rendered copy instead of the original URL, the address bar is not pointing to the site the browser claims to be showing. Everything in the body of the browser looks like an article from The Guardian, but if I look at the URL (which is what security people have been telling us for years is important to avoid being phished), then I’ll see a domain that is not The Guardian’s.

But wait! Couldn’t Google pre-render the page at its original URL?

Yes, they could. But they won’t.

This was a point that Paul kept coming back to: trust. There’s no way that Google can trust that someone else’s URL will play by the AMP rules (no external scripts, only loading embedded content via web components, limited styles, etc.). They can only trust the copies that they themselves are serving up from their cache.

By the way, there was a joint AMP/search panel at AMP Conf with representatives from both teams. As you can imagine, there were many questions for the search team, most of which were Glomar’d. But one thing that the search people said time and again was that Google was not hosting our AMP pages. Now I don’t don’t know if they were trying to make some fine-grained semantic distinction there, but that’s an outright falsehood. If I click on a link, and the URL I get taken to is a Google property, then I am looking at a page hosted by Google. Yes, it might be a copy of a document that started life somewhere else, but if Google are serving something from their cache, they are hosting it.

This is one of the reasons why AMP feels like such a bait’n’switch to me. When it first came along, it felt like a direct competitor to Facebook’s Instant Articles and Apple News. But the big difference, we were told, was that you get to host your own content. That appealed to me much more than having Facebook or Apple host the articles. But now it turns out that Google do host the articles.

This will be the point at which Googlers will say no, no, no, you can totally host your own AMP pages …but you won’t get the benefits of pre-rendering. But without the pre-rendering, what’s the point of even having AMP pages?

Well, there is one non-cache reason to use AMP and it’s a political reason. Beleaguered developers working for publishers of big bloated web pages have a hard time arguing with their boss when they’re told to add another crappy JavaScript tracking script or bloated library to their pages. But when they’re making AMP pages, they can easily refuse, pointing out that the AMP rules don’t allow it. Google plays the bad cop for us, and it’s a very valuable role. Sarah pointed this out on the panel we were on, and she was spot on.

Alright, but what about The Guardian? They’ve already got fast pages, but they still have to create separate AMP pages if they want to get the pre-rendering benefits when they show up in Google search results. Sorry, says Google, but it’s the only way we can trust that the pre-rendered page will be truly fast.

So here’s the impasse we’re at. Google have provided a list of best practices for making fast web pages, but the only way they can truly verify that a page is sticking to those best practices is by hosting their own copy, URLs be damned.

This was the crux of Paul’s argument when he was on the Shop Talk Show podcast (it’s a really good episode���I was genuinely reassured to hear that Paul is not gung-ho about drinking the AMP Kool Aid; he has genuine concerns about the potential downsides for the web).

Initially, I accepted this argument that Google just can’t trust the rest of the web. But the more I talked to people at AMP Conf���and I had some really, really good discussions with people away from the stage���the more I began to question it.

Here’s the thing: the regular Google search can’t guarantee that any web page is actually 100% the right result to return for a search. Instead there’s a lot of fuzziness involved: based on the content, the markup, and the number of trusted sources linking to this, it looks like it should be a good result. In other words, Google search trusts websites to���by and large���do the right thing. Sometimes websites abuse that trust and try to game the system with sneaky tricks. Google responds with penalties when that happens.

Why can’t it be the same for AMP pages? Let me host my own AMP pages (maybe even host my own AMP script) and then when the Googlebot crawls those pages���the same as it crawls any other pages���that’s when it can verify that the AMP page is abiding by the rules. If I do something sneaky and trick Google into flagging a page as fast when it actually isn’t, then take my pre-rendering reward away from me.

To be fair, Google has very, very strict rules about what and how to pre-render the AMP results it’s caching. I can see how allowing even the potential for a false positive would have a negative impact on the user experience of Google search. But c’mon, there are already false positives in regular search results���fake news, spam blogs. Googlers are smart people. They can solve���or at least mitigate���these problems.

Google says it can’t trust our self-hosted AMP pages enough to pre-render them. But they ask for a lot of trust from us. We’re supposed to trust Google to cache and host copies of our pages. We’re supposed to trust Google to provide some mechanism to users to get at the original canonical URL. I’d like to see trust work both ways.

March 6, 2017

Empire State

I’m in New York. Again. This time it’s for Google’s AMP Conf, where I’ll be giving ‘em a piece of my mind on a panel.

The conference starts tomorrow so I’ve had a day or two to acclimatise and explore. Seeing as Google are footing the bill for travel and accommodation, I’m staying at a rather nice hotel close to the conference venue in Tribeca. There’s live jazz in the lounge most evenings, a cinema downstairs, and should I request it, I can even have a goldfish in my room.

Today I realised that my hotel sits in the apex of a triangle of interesting buildings: carrier hotels.

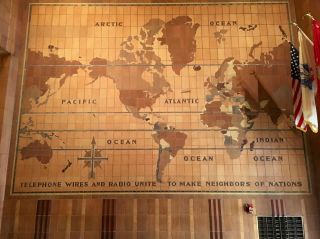

Looming above my hotel is 32 Avenue of the Americas. On the outside the building looks like your classic Gozer the Gozerian style of New York building. Inside, the lobby features a mosaic on the ceiling, and another on the wall extolling the connective power of radio and telephone.

The same architects also designed 60 Hudson Street, which has a similar Art Deco feel to it. Inside, there’s a cavernous hallway running through the ground floor but I can’t show you a picture of it. A security guard told me I couldn’t take any photos inside …which is a little strange seeing as it’s splashed across the website of the building.

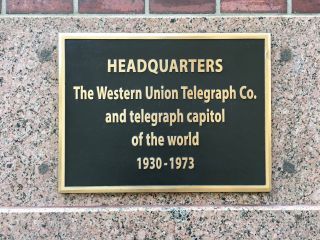

I walked around the outside of 60 Hudson, taking more pictures. Another security guard asked me what I was doing. I told her I was interested in the history of the building, which is true; it was the headquarters of Western Union. For much of the twentieth century, it was a world hub of telegraphic communication, in much the same way that a beach hut in Porthcurno was the nexus of the nineteenth century.

For a 21st century hub, there’s the third and final corner of the triangle at 33 Thomas Street. It’s a breathtaking building. It looks like a spaceship from a Chris Foss painting. It was probably designed more like a spacecraft than a traditional building���it’s primary purpose was to withstand an atomic blast. Gone are niceties like windows. Instead there’s an impenetrable monolith that looks like something straight out of a dystopian sci-fi film.

Brutalist on the outside, its interior is host to even more brutal acts of invasive surveillance. The Snowden papers revealed this AT&T building to be a centrepiece of the Titanpointe programme:

They called it Project X. It was an unusually audacious, highly sensitive assignment: to build a massive skyscraper, capable of withstanding an atomic blast, in the middle of New York City. It would have no windows, 29 floors with three basement levels, and enough food to last 1,500 people two weeks in the event of a catastrophe.

But the building���s primary purpose would not be to protect humans from toxic radiation amid nuclear war. Rather, the fortified skyscraper would safeguard powerful computers, cables, and switchboards. It would house one of the most important telecommunications hubs in the United States…

Looking at the building, it requires very little imagination to picture it as the lair of villainous activity. Laura Poitras’s short film Project X basically consists of a voiceover of someone reading an NSA manual, some ominous background music, and shots of 33 Thomas Street looming in its oh-so-loomy way.

A top-secret handbook takes viewers on an undercover journey to Titanpointe, the site of a hidden partnership. Narrated by Rami Malek and Michelle Williams, and based on classified NSA documents, Project X reveals the inner workings of a windowless skyscraper in downtown Manhattan.

March 3, 2017

Small steps

The new Clearleft website is live! Huzzah!

Many people have been working very hard on it and it’s all looking rather nice. But, as I said before, the site launch isn’t the end���it’s just the beginning.

There are some obvious next steps: fixing bugs, adding content, tweaking copy, and, oh yeah, that whole “testing with real users” thing. But there’s also an opportunity to have some fun on the front end. Now that the site is out there in the wild, there’s a real incentive to improve its performance.

Off the top of my head, these are some areas where I think we can play around:

Font loading. Right now the site is just using @font-face. A smart font-loading strategy���at least for the body copy���could really help improve the perceived performance.

Responsive images. A long-term solution will require some wrangling on the back end, but I reckon we can come up with some way of generating different sized images to reference in srcset.

Service worker. It’s a no-brainer. Now that the Clearleft site is (finally!) running on HTTPS, having a simple service worker to cache static assets like CSS, JavaScript and some images seems like the obvious next step. The question is: what other offline shenanigans could we get up to?

I’m looking forward to tinkering with some of those technologies. Each one should make an incremental improvement to the site’s performance. There are already some steps on the back-end that are making a big difference: upgrading to PHP7 and using HTTP2.

Now the real fun begins.

February 22, 2017

Long betting

It has been exactly six years to the day since I instantiated this prediction:

The original URL for this prediction (www.longbets.org/601) will no longer be available in eleven years.

It is exactly five years to the day until the prediction condition resolves to a Boolean true or false.

If it resolves to true, The Bletchly Park Trust will receive $1000.

If it resolves to false, The Internet Archive will receive $1000.

Much as I would like Bletchley Park to get the cash, I’m hoping to lose this bet. I don’t want my pessimism about URL longevity to be rewarded.

So, to recap, the bet was placed on

02011-02-22

It is currently

02017-02-22

And the bet times out on

02022-02-22.

February 21, 2017

Variable fonts

We have a tradition here at Clearleft of having the occasional lunchtime braindump. They’re somewhat sporadic, but it’s always a good day when there’s a “brown bag” gathering.

When Google’s AMP format came out and I had done some investigating, I led a brown bag playback on that. Recently Mark did one on Fractal so that everyone knew how work on that was progressing.

Today Richard gave us a quick brown bag talk on variable web fonts. He talked us through how these will work on the web and in operating systems. We got a good explanation of how these fonts would get designed���the type designer designs the “extreme” edges of size, weight, or whatever, and then the file format itself can extrapolate all the in-between stages. So, in theory, one single font file can hold hundreds, thousands, or hundreds of thousands of potential variations. It feels like switching from bitmap images to SVG���there’s suddenly much greater flexibility.

A variable font is a single font file that behaves like multiple fonts.

There were a couple of interesting tidbits that Rich pointed out…

While this is a new file format, there isn’t going to be a new file extension. These will be .ttf files, and so by extension, they can be .woff and .woff2 files too.

This isn’t some proposed theoretical standard: an unprecedented amount of co-operation has gone into the creation of this format. Adobe, Apple, Google, and Microsoft have all contributed. Agreement is the hardest part of any standards process. Once that’s taken care of, the technical solution follows quickly. So you can expect this to land very quickly and widely.

This technology is landing in web browsers before it lands in operating systems. It’s already available in the Safari Technology Preview. That means that for a while, the very best on-screen typography will be delivered not in eBook readers, but in web browsers. So if you want to deliver the absolute best reading experience, look to the web.

And here’s the part that I found fascinating…

We can currently use numbers for the font-weight property in CSS. Those number values increment in hundreds: 100, 200, 300, etc. Now with variable fonts, we can start using integers: 321, 417, 183, etc. How fortuitous that we have 99 free slots between our current set of values!

Well, that’s no accident. The reason why the numbers were originally specced in increments of 100 back in 1996 was precisely so that some future sci-fi technology could make use of the ranges in between. That’s some future-friendly thinking! And as H��kon wrote:

One of the reasons we chose to use three-digit numbers was to support intermediate values in the future. And the future is now :)

Needless to say, variable fonts will be covered in Richard’s forthcoming book.

February 20, 2017

Amber

I really enjoyed teaching in Porto last week. It was like having a week-long series of CodeBar sessions.

Whenever I’m teaching at CodeBar, I like to be paired up with people who are just starting out. There’s something about explaining the web and HTML from first principles that I really like. And people often have lots and lots of questions that I enjoy answering (if I can). At CodeBar���and at The New Digital School���I found myself saying “Great question!” multiple times. The really great questions are the ones that I respond to with “I don’t know …let’s find out!”

CodeBar is always a very rewarding experience for me. It has given me the opportunity to try teaching. And having tried it, I can now safely say that I like it. It’s also a great chance to meet people from all walks of life. It gets me out of my bubble.

I can’t remember when I was first paired up with Amber at CodeBar. It must have been sometime last year. I do remember that she had lots of great questions���at some point I found myself explaining how hexadecimal colours work.

I was impressed with Amber’s eagerness to learn. I also liked that she was making her own website. I told her about Homebrew Website Club and she started coming along to that (along with other CodeBar people like Cassie and Alice).

I’ve mentioned to multiple CodeBar students that there’s pretty much an open-door policy at Clearleft when it comes to shadowing: feel free to come along and sit with a front-end developer while they’re working on client projects. A few people have taken up the offer and enjoyed observing myself or Charlotte at work. Amber was one of those people. Again, I was very impressed with her drive. She’s got a full-time job (with sometimes-crazy hours) but she’s so determined to get into the world of web design and development that she’s willing to spend her free time visiting Clearleft to soak up the atmosphere of a design studio.

We’ve decided to turn this into something more structured. Amber and I will get together for a couple of hours once a week. She’s given me a list of some of the areas she wants to explore, and I think it’s a fine-looking list:

I want to gather base, structural knowledge about the web and all related aspects. Things seem to float around in a big cloud at the moment.

I want to adhere to best practices.

I want to learn more about what direction I want to go in, find a niche.

I’d love to opportunity to chat with the brilliant people who work at Clearleft and gain a broad range of knowledge from them.

My plan right now is to take a two-track approach: one track about the theory, and another track about the practicalities. The practicalities will be HTML, CSS, JavaScript, and related technologies. The theory will be about understanding the history of the web and its strengths and weaknesses as a medium. And I want to make sure there’s plenty of UX, research, information architecture and content strategy covered too.

Seeing as we’ll only have a couple of hours every week, this won’t be quite like the masterclass I just finished up in Porto. Instead I imagine I’ll be laying some groundwork and then pointing to topics to research. I guess it’s a kind of homework. For example, after we talked today, I set Amber this little bit of research for the next time we meet: “What is the difference between the internet and the World Wide Web?”

I’m excited to see where this will lead. I find Amber’s drive and enthusiasm very inspiring. I also feel a certain weight of responsibility���I don’t want to enter into this lightly.

I’m not really sure what to call this though. Is it mentorship? Or is it coaching? Or training? All of the above?

Whatever it is, I’m looking forward to documenting the journey. Amber will be writing about it too. She is already demonstrating a way with words.

February 17, 2017

Teaching in Porto, day five

For the final day of the week-long masterclass, I had no agenda. This was a time for the students to work on their own projects, but I was there to answer any remaining questions they might have.

As I suspected, the people with the most interest and experience in development were the ones with plenty of questions. I was more than happy to answer them. With no specific schedule for the day, we were free to merrily go chasing down rabbit holes.

SVG? Sure, I’d be happy to talk about that. More JavaScript? My pleasure! Databases? Not really my area of expertise, but I’m more than willing to share what I know.

It was a fun day. The centrepiece was a most excellent lunch across the river at a really traditional seafood place.

At the very end of the day, after everyone else had gone, I sat down with Tiago to discuss how the week went. Overall, I was happy. I was nervous going into this masterclass���I had never done a whole week of teaching���but based on the feedback I got, I think I did okay. There were times when I got impatient, and I wish I could turn back the clock and erase those moments. I noticed that those moments tended to occur when it was time for hands-on-keyboards coding: “no, not like that���like this!” I need to get better at handling those situations. But when we working on paper, or having stand-up discussions, or when I was just geeking out on a particular topic, everything felt quite positive.

All in all, this week has been a great experience. I know it sounds like a clich��, but I felt it was a real honour and a privilege to be involved with the New Digital School. I’ve enjoyed doing hands-on teaching, and I’d like to do more of it.

February 16, 2017

Teaching in Porto, day four

Day one covered HTML (amongst other things), day two covered CSS, and day three covered JavaScript. Each one of those days involved a certain amount of hands-on coding, with the students getting their hands dirty with angle brackets, curly braces, and semi-colons.

Day four was a deliberate step away from all that. No more laptops, just paper. Whereas the previous days had focused on collaboratively working on a single document, today I wanted everyone to work on a separate site.

The sites were generated randomly. I made five cards with types of sites on them: news, social network, shopping, travel, and learning. Another five cards had subjects: books, music, food, pets, and cars. And another five cards had audiences: students, parents, the elderly, commuters, and teachers. Everyone was dealt a random card from each deck, resulting in briefs like “a travel site about food for the elderly” or “a social network about music for commuters.”

For a bit of fun, the first brainstorming exercise (run as a 6-up) was to come with potential names for this service���4 minutes for 6 ideas. Then we went around the table, shared the ideas, got feedback, and settled on the names.

Now I asked everyone to come up with a one-sentence mission statement for their newly-named service. This was a good way of teasing out the most important verbs and nouns, which led nicely into the next task: answering the question “what is the core functionality?”

If that sounds familiar, it’s because it’s the first part of the three-step process I outlined in Resilient Web Design:

Identify core functionality.

Make that functionality available using the simplest possible technology.

Enhance!

We did some URL design, figuring out what structures would make sense for straightforward GET requests, like:

/things

/things/ID

Then, once it was clear what the primary “thing” was (a car, a book, etc.), I asked them to write down all the pieces that might appear on such a page; one post-it note per item e.g. “title”, “description”, “img”, “rating”, etc.

The next step involved prioritisation. They took those post-it notes and put them on the wall, but they had to put them in a vertical line from top to bottom in decreasing order of importance. This can be a challenge, but it’s better to solve these problems now rather than later.

Okay. I know asked them to “mark up” those vertical lists of post-it notes: writing HTML tag names by each one. By doing this before doing any visual design, it meant they were thinking about the meaning of the content first.

After that, we did a good ol’ fashioned classic 6-up sketching exercise, followed by critique (including a “designated dissenter” for each round). At this point, I was encouraging them to go crazy with ideas���they already had the core functionality figured out (with plain ol’ client/server requests and responses) so they could all the bells and whistles they wanted on top of that.

We finished up with a discussion of some of those bells and whistles, and how they could be used to improve the user experience: Ajax, geolocation, service workers, notifications, background sync …the sky’s the limit.

It was a whirlwind tour for just one day but I think it helped emphasise the importance of thinking about the fundamentals before adding enhancements.

This marked the end of the structured masterclass lessons. Tomorrow I’m around to answer any miscellaneous questions (if I can) and chat to the students individually while they work on their term projects.

February 15, 2017

Teaching in Porto, day three

Day two ended with a bit of a cliffhanger as I had the students mark up a document, but not yet style it. In the morning of day three, the styling began.

Rather than just treat “styling” as one big monolithic task, I broke it down into typography, colour, negative space, and so on. We time-boxed each one of those parts of the visual design. So everyone got, say, fifteen minutes to write styles relating to font families and sizes, then another fifteen minutes to write styles for colours and background colours. Bit by bit, the styles were layered on.

When it came to layout, we closed the laptops and returned to paper. Everyone did a quick round of 6-up sketching so that there was plenty of fast iteration on layout ideas. That was followed by some critique and dot-voting of the sketches.

Rather than diving into the CSS for layout���which can get quite complex���I instead walked through the approach for layout; namely putting all your layout styles inside media queries. To explain media queries, I first explained media types and then introduced the query part.

I felt pretty confident that I could skip over the nitty-gritty of media queries and cross-device layout because the next masterclass that will be taught at the New Digital School will be a week of responsive design, taught by Vitaly. I just gave them a taster���Vitaly can dive deeper.

By lunch time, I felt that we had covered CSS pretty well. After lunch it was time for the really challenging part: JavaScript.

The reason why I think JavaScript is challenging is that it’s inherently more complex than HTML or CSS. Those are declarative languages with fairly basic concepts at heart (elements, attributes, selectors, etc.), whereas an imperative language like JavaScript means entering the territory of logic, loops, variables, arrays, objects, and so on. I really didn’t want to get stuck in the weeds with that stuff.

I focused on the combination of JavaScript and the Document Object Model as a way of manipulating the HTML and CSS that’s already inside a browser. A lot of that boils down to this pattern:

When (some event happens), then (take this action).

We brainstormed some examples of this e.g. “When the user submits a form, then show a modal dialogue with an acknowledgement.” I then encouraged them to write a script …but I don’t mean a script in the JavaScript sense; I mean a script in the screenwriting or theatre sense. Line by line, write out each step that you want to accomplish. Once you’ve done that, translate each line of your English (or Portuguese) script into JavaScript.

I did quick demo as a proof of concept (which, much to my surprise, actually worked first time), but I was at pains to point out that they didn’t need to remember the syntax or vocabulary of the script; it was much more important to have a clear understanding of the thinking behind it.

With the remaining time left in the day, we ran through the many browser APIs available to JavaScript, from the relatively simple���like querySelector and Ajax���right up to the latest device APIs. I think I got the message across that, using JavaScript, there’s practically no limit to what you can do on the web these days …but the trick is to use that power responsibly.

At this point, we’ve had three days and we’ve covered three layers of web technologies: HTML, CSS, and JavaScript. Tomorrow we’ll take a step back from the nitty-gritty of the code. It’s going to be all about how to plan and think about building for the web before a single line of code gets written.

Jeremy Keith's Blog

- Jeremy Keith's profile

- 55 followers