Jeremy Keith's Blog, page 60

August 1, 2019

Navigation preloads in service workers

There’s a feature in service workers called navigation preloads. It’s relatively recent, so it isn’t supported in every browser, but it’s still well worth using.

Here’s the problem it solves…

If someone makes a return visit to your site, and the service worker you installed on their machine isn’t active yet, the service worker boots up, and then executes its instructions. If those instructions say “fetch the page from the network”, then you’re basically telling the browser to do what it would’ve done anyway if there were no service worker installed. The only difference is that there’s been a slight delay because the service worker had to boot up first.

The service worker activates.

The service worker fetches the file.

The service worker does something with the response.

It’s not a massive performance hit, but it’s still a bit annoying. It would be better if the service worker could boot up and still be requesting the page at the same time, like it would do if no service worker were present. That’s where navigation preloads come in.

The service worker activates while simultaneously requesting the file.

The service worker does something with the response.

Navigation preloads, like the name suggests, are only initiated when someone navigates to a URL on your site, either by following a link, or a bookmark, or by typing a URL directly into a browser. Navigation preloads don’t apply to requests made by a web page for things like images, style sheets, and scripts. By the time a request is made for one of those, the service worker is already up and running.

To enable navigation preloads, call the enable() method on registration.navigationPreload during the activate event in your service worker script. But first do a little feature detection to make sure registration.navigationPreload exists in this browser:

if (registration.navigationPreload) {
  addEventListener('activate', activateEvent => {
    activateEvent.waitUntil(
      registration.navigationPreload.enable()
    );
  });
}

If you’ve already got event listeners on the activate event, that’s absolutely fine: addEventListener isn’t exclusive, so you can use it to assign multiple tasks to the same event.

Now you need to make use of navigation preloads when you’re responding to fetch events. So if your strategy is to look in the cache first, there’s probably no point enabling navigation preloads. But if your default strategy is to fetch a page from the network, this will help.

Let’s say your current strategy for handling requests looks like this:

addEventListener('fetch', fetchEvent => {
  const request = fetchEvent.request;
  fetchEvent.respondWith(
    fetch(request)
    .then( responseFromFetch => {
      // maybe cache this response for later here.
      return responseFromFetch;
    })
    .catch( fetchError => {
      return caches.match(request)
      .then( responseFromCache => {
        return responseFromCache || caches.match('/offline');
      });
    })
  );
});

That’s a fairly standard strategy: try the network first; if that doesn’t work, try the cache; as a last resort, show an offline page.

It’s that first step (“try the network first”) that can benefit from navigation preloads. If a preload request is already in flight, you’ll want to use that instead of firing off a new fetch request. Otherwise you’re making two requests for the same file.

To find out if a preload request is underway, you can check for the existence of the preloadResponse promise, which will be made available as a property of the fetch event you’re handling:

fetchEvent.preloadResponse

If that exists, you’ll want to use it instead of fetch(request).

if (fetchEvent.preloadResponse) {
  // do something with fetchEvent.preloadResponse
} else {
  // do something with fetch(request)
}

You could structure your code like this:

addEventListener('fetch', fetchEvent => {
  const request = fetchEvent.request;
  if (fetchEvent.preloadResponse) {
    fetchEvent.respondWith(
      fetchEvent.preloadResponse
      .then( responseFromPreload => {
        // maybe cache this response for later here.
        return responseFromPreload;
      })
      .catch( preloadError => {
        return caches.match(request)
        .then( responseFromCache => {
          return responseFromCache || caches.match('/offline');
        });
      })
    );
  } else {
    fetchEvent.respondWith(
      fetch(request)
      .then( responseFromFetch => {
        // maybe cache this response for later here.
        return responseFromFetch;
      })
      .catch( fetchError => {
        return caches.match(request)
        .then( responseFromCache => {
          return responseFromCache || caches.match('/offline');
        });
      })
    );
  }
});

But that’s not very DRY. Your logic is identical, regardless of whether the response is coming from fetch(request) or from fetchEvent.preloadResponse. It would be better if you could minimise the amount of duplication.

One way of doing that is to abstract away the promise you’re going to use into a variable. Let’s call it retrieve. If a preload is underway, we’ll assign it to that variable:

// Declared with let, outside the if block, so it's still visible afterwards.
let retrieve;
if (fetchEvent.preloadResponse) {
  retrieve = fetchEvent.preloadResponse;
}

If there is no preload happening (or this browser doesn’t support it), assign a regular fetch request to the retrieve variable:

// Declared with let, outside the if block, so it's still visible afterwards.
let retrieve;
if (fetchEvent.preloadResponse) {
  retrieve = fetchEvent.preloadResponse;
} else {
  retrieve = fetch(request);
}

If you like, you can squash that into a ternary operator:

const retrieve = fetchEvent.preloadResponse ? fetchEvent.preloadResponse : fetch(request);

Use whichever syntax you find more readable.

Now you can apply the same logic, regardless of whether retrieve is a navigation preload or a fetch request:

addEventListener('fetch', fetchEvent => {
  const request = fetchEvent.request;
  const retrieve = fetchEvent.preloadResponse ? fetchEvent.preloadResponse : fetch(request);
  fetchEvent.respondWith(
    retrieve
    .then( responseFromRetrieve => {
      // maybe cache this response for later here.
      return responseFromRetrieve;
    })
    .catch( fetchError => {
      return caches.match(request)
      .then( responseFromCache => {
        return responseFromCache || caches.match('/offline');
      });
    })
  );
});

I think that’s the least invasive way to update your existing service worker script to take advantage of navigation preloads.
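One caveat worth checking before relying on this: in browsers that support navigation preloads, fetchEvent.preloadResponse is a promise on every fetch event, and it resolves to undefined when no preload actually happened (for a non-navigation request such as an image, say). Here’s a minimal sketch of a helper that falls back to a regular fetch in that case. The name retrieveResponse and the fetchFn parameter are made up for illustration; they aren’t part of the service worker API.

```javascript
// Hypothetical helper: resolve to the preloaded response if there is one,
// otherwise fall back to a regular network request.
// fetchFn defaults to the global fetch; being able to pass it in is an
// assumption made here so the helper can be tried outside a service worker.
function retrieveResponse(fetchEvent, fetchFn = fetch) {
  // Promise.resolve() also copes with browsers where preloadResponse
  // doesn't exist at all (it wraps undefined in a resolved promise).
  return Promise.resolve(fetchEvent.preloadResponse)
    .then( responseFromPreload => {
      // undefined means there was no preload for this request.
      return responseFromPreload || fetchFn(fetchEvent.request);
    });
}
```

Inside a fetch handler, you’d pass retrieveResponse(fetchEvent) to fetchEvent.respondWith() and chain the same then and catch logic as before.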

Like I said, navigation preloads can give a bit of a performance boost if you’re using a network-first strategy. That’s what I’m doing here on adactio.com and on thesession.org so I’ve updated their service workers to take advantage of navigation preloads. But on Resilient Web Design, which uses a cache-first strategy, there wouldn’t be much point enabling navigation preloads.

Jeff Posnick made this point in his write-up of bringing service workers to Google search:

Adding a service worker to your web app means inserting an additional piece of JavaScript that needs to be loaded and executed before your web app gets responses to its requests. If those responses end up coming from a local cache rather than from the network, then the overhead of running the service worker is usually negligible in comparison to the performance win from going cache-first. But if you know that your service worker always has to consult the network when handling navigation requests, using navigation preload is a crucial performance win.

Oh, and those browsers that don’t yet support navigation preloads? No problem. It’s a progressive enhancement. Everything still works just like it did before. And having a service worker on your site in the first place is itself a progressive enhancement. So enabling navigation preloads is like a progressive enhancement within a progressive enhancement. It’s progressive enhancements all the way down!

By the way, if all of this service worker stuff sounds like gibberish, but you wish you understood it, I think my book, Going Offline, will prove quite valuable.

July 25, 2019

Principle

I like good design principles. I collect design principles (of varying quality) at principles.adactio.com. Ben Brignell also has a (much larger) collection at principles.design.

You can spot the less useful design principles after a while. They tend to be wishy-washy; more like empty aspirational exhortations than genuinely useful guidelines for alignment. I’ve written about what makes for good design principles before. Matthew Ström also asked, and answered, What makes a good design principle?

Good design principles are memorable.

Good design principles help you say no.

Good design principles aren’t truisms.

Good design principles are applicable.

I like those. They’re like design principles for design principles.

One set of design principles that I’ve included in my collection is from gov.uk: government design principles. I think they’re very well thought-through (although I’m always suspicious when I see a nice even number like 10 for the number of items in the list). There’s a great line in design principle number two, Do less:

Government should only do what only government can do.

This wasn’t a theoretical issue. The multiple departmental websites that preceded gov.uk were notorious for having too much irrelevant content: content that was readily available elsewhere. It was downright wasteful to duplicate that content on a government site. It wasn’t appropriate.

Appropriateness is something I keep coming back to when it comes to evaluating web technologies. I don’t think there are good tools and bad tools; just tools that are appropriate or inappropriate for the task at hand. Whether it’s task runners or JavaScript frameworks, appropriateness feels like it should be the deciding factor.

I think that the design principle from GDS could be abstracted into a general technology principle:

Any particular technology should only do what only that particular technology can do.

Take JavaScript, for example. It feels “wrong” when a powerful client-side JavaScript framework is applied to something that could be accomplished using HTML. Making a blog that’s a single page app is over-engineering. It violates this principle:

JavaScript should only do what only JavaScript can do.

Need to manage state or immediately update the interface in response to user action? Only JavaScript can do that. But if you need to present the user with some static content, JavaScript can do that …but it’s not the only technology that can do that. HTML would be more appropriate.

I realise that this is basically a reformulation of one of my favourite design principles, the rule of least power:

Choose the least powerful language suitable for a given purpose.

Or, as Derek put it:

In the web front-end stack (HTML, CSS, JS, and ARIA), if you can solve a problem with a simpler solution lower in the stack, you should. It’s less fragile, more foolproof, and just works.

ARIA should only do what only ARIA can do.

JavaScript should only do what only JavaScript can do.

CSS should only do what only CSS can do.

HTML should only do what only HTML can do.

July 23, 2019

Patterns Day video and audio

If you missed out on Patterns Day this year, you can still get a pale imitation of the experience of being there by watching videos of the talks.

Here are the videos, and if you’re not that into visuals, here’s a podcast of the talks (you can subscribe to this RSS feed in your podcasting app of choice).

On Twitter, it was pointed out that “It would be nice if the talks had their topic listed,” which is a fair point. So here goes:

Yaili’s talk is about design systems,

Amy’s talk is about design systems,

Danielle’s talk is about design systems,

Varya’s talk is about design systems,

Emil’s talk is about design systems, and

Heydon’s talk is about a large seabird.

It’s fascinating to see emergent themes (other than, y’know, the obvious theme of design systems) in different talks. In comparison to the first Patterns Day, it felt like there was a healthy degree of questioning and scepticism: there were plenty of reminders that design systems aren’t a silver bullet. And I very much appreciated Yaili’s point that when you see beautifully polished design systems that have been made public, it’s like seeing the edited Instagram version of someone’s life. That reminded me of Responsive Day Out when Sarah Parmenter, the first speaker at the very first event, opened everything by saying “most of us are winging it.”

I can see the value in coming to a conference to hear stories from people who solved hard problems, but I think there’s equal value in coming to a conference to hear stories from people who are still grappling with hard problems. It’s reassuring. I definitely got the vibe from people at Patterns Day that it was a real relief to hear that nobody’s got this figured out.

There was also a great appreciation for the “big picture” perspective on offer at Patterns Day. For myself, I know that I’ll be cogitating upon Danielle’s talk and Emil’s talk for some time to come. Both are packed full of interesting ideas.

Good thing we’ve got the videos and the podcast to revisit whenever we want.

And if you’re itching for another event dedicated to design systems, I highly recommend snagging a ticket for the Clarity conference in San Francisco next month.

July 16, 2019

Trad time

Fifteen years ago, I went to the Willie Clancy Summer School in Miltown Malbay:

I’m back from the west of Ireland. I was sorry to leave. I had a wonderful, music-filled time.

I’m not sure why it took me a decade and a half to go back, but that’s what I did last week. Myself and Jessica once again immersed ourselves in Irish traditional music. I’ve written up a trip report over on The Session.

On the face of it, fifteen years is a long time. Last time I made the trip to county Clare, I was taking pictures on a point-and-shoot camera. I had a phone with me, but it had a T9 keyboard that I could use for texting and not much else. Also, my hair wasn’t grey.

But in some ways, fifteen years feels like the blink of an eye.

I spent my mornings at the Willie Clancy Summer School immersed in the history of Irish traditional music, with Paddy Glackin as a guide. We were discussing tradition and change in generational timescales. There was plenty of talk about technology, but we were as likely to discuss the influence of the phonograph as the influence of the internet.

Outside of the classes, there was a real feeling of lengthy timescales too. On any given day, I would find myself listening to pre-teen musicians at one point, and septuagenarian masters at another.

Now that I’m back in the Clearleft studio, I’m finding it weird to adjust back in to the shorter timescales of working on the web. Progress is measured in weeks and months. Technologies are deemed outdated after just a year or two.

The one bridging point I have between these two worlds is The Session. It’s been going in one form or another for over twenty years. And while it’s very much on and of the web, it also taps into a longer tradition. Over time it has become an enormous repository of tunes, for which I feel a great sense of responsibility …but in a good way. It’s not something I take lightly. It’s also something that gives me great satisfaction, in a way that’s hard to achieve in the rapidly moving world of the web. It’s somewhat comparable to the feelings I have for my own website, where I’ve been writing for eighteen years. But whereas adactio.com is very much focused on me, thesession.org is much more of a community endeavour.

I question sometimes whether The Session is helping or hindering the Irish music tradition. “It all helps”, Paddy Glackin told me. And I have to admit, it was very gratifying to meet other musicians during Willie Clancy week who told me how much the site benefits them.

I think I benefit from The Session more than anyone though. It keeps me grounded. It gives me a perspective that I don’t think I’d otherwise get. And in a time when it feels entirely right to question whether the internet is even providing a net gain to our world, I take comfort in being part of a project that I think uses the very best attributes of the World Wide Web.

July 4, 2019

Summer of Apollo

It’s July, 2019. You know what that means? The 50th anniversary of the Apollo 11 mission is this month.

I’ve already got serious moon fever, and if you’d like to join me, I have some recommendations…

Watch the Apollo 11 documentary in a cinema. The 70mm footage is stunning, the sound design is immersive, the music is superb, and there’s some neat data visualisation too. Watching a preview screening in the Duke of York’s last week was pure joy from start to finish.

Listen to 13 Minutes To The Moon, the terrific ongoing BBC podcast by Kevin Fong. It’s got all my favourite titans of NASA: Michael Collins, Margaret Hamilton, and Charlie Duke, amongst others. And it’s got music by Hans Zimmer.

Experience the website Apollo 11 In Real Time on the biggest monitor you can. It’s absolutely wonderful! From July 16th, you can experience the mission timeshifted by exactly 50 years, but if you don’t want to wait, you can dive in right now. It genuinely feels like being in Mission Control!

Movie Knight

I mentioned how much I enjoyed Mike Hill’s talk at Beyond Tellerrand in Düsseldorf:

Mike gave a talk called The Power of Metaphor and it’s absolutely brilliant. It covers the monomyth (the hero’s journey) and Jungian archetypes, illustrated with the examples Star Wars, The Dark Knight, and Jurassic Park.

At Clearleft, I’m planning to reprise the workshop I did a few years ago about narrative structure, which is very handy for anyone preparing a conference talk, blog post, case study, or anything really:

Ellen and I have been enjoying some great philosophical discussions about exactly what a story is, and how does it differ from a narrative structure, or a plot. I really love Ellen’s working definition: Narrative. In Space. Over Time.

This led me to think that there’s a lot that we can borrow from the world of storytelling (films, novels, fairy tales), not necessarily about the stories themselves, but the kind of narrative structures we could use to tell those stories. After all, the story itself is often the same one that’s been told time and time again: The Hero’s Journey, or some variation thereof.

I realised that Mike’s monomyth talk aligns nicely with my workshop. So I decided to prep my fellow Clearlefties for the workshop with a movie night.

Popcorn was popped, pizza was ordered, and comfy chairs were suitably arranged. Then we watched Mike’s talk. Everyone loved it. Then it was decision time. Which of three films covered in the talk would we watch? We put it to a vote.

It came out as an equal tie between Jurassic Park and The Dark Knight. How would we resolve this? A coin toss!

The toss went to The Dark Knight. In retrospect, a coin toss was a supremely fitting way to decide to watch that film.

It was fun to watch it again, particularly through the lens of Mike’s analysis of its Jungian archetypes.

But I still think the film is about game theory.

July 2, 2019

The trimCache function in Going Offline …again

It seems that some code that I wrote in Going Offline is haunted. It’s the trimCache function.

First, there was the issue of a typo. Or maybe it’s more of a brainfart than a typo, but either way, there’s a mistake in the syntax that was published in the book.

Now it turns out that there’s also a problem with my logic.

To recap, this is a function that takes two arguments: the name of a cache, and the maximum number of items that cache should hold.

function trimCache(cacheName, maxItems) {

First, we open up the cache:

caches.open(cacheName)

.then( cache => {

Then, we get the items (keys) in that cache:

cache.keys()

.then(keys => {

Now we compare the number of items (keys.length) to the maximum number of items allowed:

if (keys.length > maxItems) {

If there are too many items, delete the first item in the cache, which should be the oldest item:

cache.delete(keys[0])

And then run the function again:

.then(

trimCache(cacheName, maxItems)

);

A-ha! See the problem?

Neither did I.

It turns out that, even though I’m using then, the function will be invoked immediately, instead of waiting until the first item has been deleted.

Trys helped me understand what was going on by making a useful analogy. You know when you use setTimeout, you can’t put a function, complete with parentheses, as the first argument?

window.setTimeout(doSomething(someValue), 1000);

In that example, doSomething(someValue) will be invoked immediately, not after 1000 milliseconds. Instead, you need to create an anonymous function like this:

window.setTimeout( function() {
  doSomething(someValue);
}, 1000);

Well, it’s the same in my trimCache function. Instead of this:

cache.delete(keys[0])
.then(
  trimCache(cacheName, maxItems)
);

I need to do this:

cache.delete(keys[0])
.then( function() {
  trimCache(cacheName, maxItems);
});

Or, if you prefer the more modern arrow function syntax:

cache.delete(keys[0])
.then( () => {
  trimCache(cacheName, maxItems);
});

Either way, I have to wrap the recursive function call in an anonymous function.
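The difference is easy to see with plain promises, outside of any service worker. In this sketch, fakeDelete is a hypothetical stand-in for cache.delete() that resolves after a short delay, and step simply records the order in which things run.

```javascript
// Records the order in which things happen.
const log = [];

function step(label) {
  log.push(label);
}

// Hypothetical stand-in for cache.delete(): resolves a little later.
function fakeDelete() {
  return new Promise( resolve => {
    setTimeout( () => {
      step('deleted');
      resolve(true);
    }, 10);
  });
}

// Wrong: step('eager') runs straight away, and its return value
// (undefined) is what actually gets passed to then().
const eagerDone = fakeDelete().then( step('eager') );

// Right: the arrow function itself is passed to then(), so
// step('deferred') only runs once the promise has resolved.
const deferredDone = fakeDelete().then( () => step('deferred') );

Promise.all([eagerDone, deferredDone]).then( () => {
  console.log(log.join(', '));
});
```

Running it logs eager, deleted, deleted, deferred: the eager call has already happened before either deletion finishes.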

Here’s a gist with the updated trimCache function.
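Pieced together from the fragments above, the whole function might look something like this. Treat it as a sketch rather than the contents of the gist: the return statements and the optional third parameter, cacheStorage, are additions made here so that the function can be tried out with a stand-in for the caches object.

```javascript
// cacheStorage is a hypothetical extra parameter; in a service worker
// you'd leave it out and the global caches object is used.
function trimCache(cacheName, maxItems, cacheStorage = caches) {
  return cacheStorage.open(cacheName)
  .then( cache => {
    return cache.keys()
    .then( keys => {
      if (keys.length > maxItems) {
        // Delete the first (oldest) item, then check again,
        // but only once the deletion has finished.
        return cache.delete(keys[0])
        .then( () => {
          return trimCache(cacheName, maxItems, cacheStorage);
        });
      }
    });
  });
}
```

Returning each promise means that whatever calls trimCache can wait for the trimming to finish, which is optional but handy for testing.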

What’s annoying is that this mistake wasn’t throwing an error. Instead, it was causing a performance problem. I’m using this pattern right here on my own site, and whenever my cache of pages or images gets too big, the trimCache function would get called …and then wouldn’t stop running.

I’m very glad that, with the help of Trys at last week’s Homebrew Website Club Brighton, I was finally able to get to the bottom of this. If you’re using the trimCache function in your service worker, please update the code accordingly.

Management regrets the error.

July 1, 2019

Patterns Day Two

Who says the sequels can’t be even better than the original? The second Patterns Day was The Empire Strikes Back, The Godfather Part II, and The Wrath of Khan all rolled into one …but, y’know, with design systems.

If you were there, then you know how good it was. If you weren’t, sorry. Audio of the talks should be available soon though, with video following on.

The talks were superb! I know I’m biased because I put the line-up together, but even so, I was blown away by the quality of the talks. There were some big-picture questioning talks, a sequence of nitty-gritty code talks in the middle, and galaxy-brain philosophical thoughts at the end. A perfect mix, in my opinion.

Words cannot express how grateful I am to Alla, Yaili, Amy, Danielle, Heydon, Varya, Una, and Emil. They really gave it their all! Some of them are seasoned speakers, and some of them are new to speaking on stage, but all of them delivered the goods above and beyond what I expected.

Big thanks to my Clearleft compadres for making everything run smoothly: Jason, Amy, Cassie, Chris, Trys, Hana, and especially Sophia for doing all the hard work behind the scenes. Trys took some remarkable photos too. He posted some on Twitter, and some on his site, but there are more to come.

And if you came to Patterns Day 2, thank you very, very much. I really appreciate you being there. I hope you enjoyed it even half as much as I did, because I had a ball!

Once again, thanks to buildit @ wipro digital for sponsoring the pastries and coffee, as well as running a fun giveaway on the day. Many thanks to Bulb for sponsoring the forthcoming videos. Thanks again to Drew for recording the audio. And big thanks to Brighton’s own Holler Brewery for very kindly offering every attendee a free drink. The weather (and the beer) was perfect for post-conference discussion!

It was incredibly heartwarming to hear how much people enjoyed the event. I was especially pleased that people were enjoying one another’s company as much as the conference itself. I knew that quite a few people were coming in groups from work, while other people were coming by themselves. I hoped there’d be lots of interaction between attendees, and I’m so, so glad there was!

You’ve all made me very happy.

Thank you to @adactio and @clearleft for an excellent #PatternsDay.

- dhuntrods (@dhuntrods) June 29, 2019

Had so many great conversations! Thanks as well to everyone that came and listened to us, you’re all the best.

Well done for yet another fantastic event. The calibre of speakers was so high, and it was reassuring to hear they have the same trials, questions and toil with their libraries. So insightful, so entertaining.

- Barry Bloye (@barrybloye) June 29, 2019

Had the most amazing time at the #PatternsDay, catching up with old friends over slightly mad conversations. Huge thanks to @adactio and @clearleft for putting together such warm and welcoming event, and to all the attendees and speakers for making it so special

- Alla Kholmatova (@craftui) June 29, 2019

Had such an amazing time at yesterday’s #PatternsDay. So many notes and thoughts to process. Thank you to all the speakers and the folk at @clearleft for organising it.

- Charlie Don’t Surf (@sonniesedge) June 29, 2019

Had an awesome time at #PatternsDay yesterday! Some amazing speakers and got to meet some awesome folk along the way! Big thanks to @adactio and @clearleft for organising such a great event!

- Alice Boyd-Leslie (@aboydleslie) June 29, 2019

Absolutely amazing day at #PatternsDay. Well done @adactio and @clearleft. The speakers were great, attendees great and it was fantastic to finally meet some peers face to face.

- Dave (@daveymackintosh) June 28, 2019

Had a blast at #PatternsDay !!! Met so many cool ppl

- trash bandicoot (@freezydorito) June 28, 2019

I’ve had a hell of a good time at #PatternsDay. It’s been nice to finally meet so many folks that I only get to speak to on here.

- Andy Bell (@andybelldesign) June 28, 2019

As expected, the @clearleft folks all did a stellar job of running a great event for us.

Patterns Day is an excellent single-day conference packed full of valuable content about design systems and pattern libraries from experienced practicioners. Way to go @clearleft! #PatternsDay

- Kimberly Blessing (@obiwankimberly) June 28, 2019

Round of applause to @adactio and @clearleft for a great #patternsday today

- Dan Donald (@hereinthehive) June 28, 2019

Big thanks to @adactio and @clearleft for a fantastic #PatternsDay. Left with tons of ideas to take back to the shop. pic.twitter.com/GwEtWrxbK8

- Alex (@alexandtheweb) June 28, 2019

@adactio thanks Jeremy for organising this fabulous day of inspiring talks in a such a humane format. I enjoyed every minute of it #patternsday

- David Roessli (@roessli) June 28, 2019

An amazing day was had at #PatternsDay. Caught up with friends I hadn’t seen for a while, made some new ones, and had my brain expand by an excellent set of talks. Big hugs to @adactio and the @clearleft team. Blog post to follow next week, once I’ve got my notes in order.

- Garrett Coakley (@garrettc) June 28, 2019

June 24, 2019

Am I cached or not?

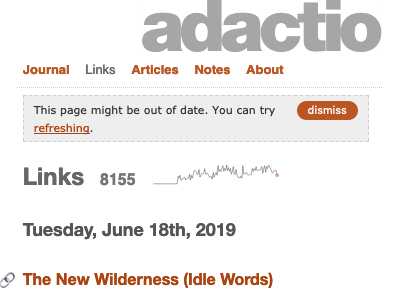

When I was writing about the lie-fi strategy I’ve added to adactio.com, I finished with this thought:

What I’d really like is some way to know (on the client side) whether or not the currently-loaded page came from a cache or from a network. Then I could add some kind of interface element that says, “Hey, this page might be stale; click here if you want to check for a fresher version.”

Trys heard my plea, and came up with a very clever technique to alter the HTML of a page when it’s put into a cache.

It’s a function that reads the response body stream in, returning a new stream. Whilst reading the stream, it searches for the character codes that make up: . If it finds them, it tacks on a data-cached attribute.

Nice!

But then I was discussing this issue with Tantek and Aaron late one night after Indie Web Camp Düsseldorf. I realised that I might have another potential solution that doesn’t involve the service worker at all.

Caveat: this will only work for pages that have some kind of server-side generation. This won’t work for static sites.

In my case, pages are generated by PHP. I’m not doing a database lookup every time you request a page (I’ve got a server-side cache of posts, for example), but there is a little bit of assembly done for every request: get the header from here; get the main content from over there; get the footer; put them all together into a single page and serve that up.

This means I can add a timestamp to the page (using PHP). I can mark the moment that it was served up. Then I can use JavaScript on the client side to compare that timestamp to the current time.

I’ve published the code as a gist.

In a script element on each page, I have this bit of coducken:

var serverTimestamp = <?php echo time(); ?>;

Now the JavaScript variable serverTimestamp holds the timestamp that the page was generated. When the page is put in the cache, this won’t change. This number should be the number of seconds since January 1st, 1970 in the UTC timezone (that’s what my server’s timezone is set to).

Starting with JavaScript’s Date object, I use a caravan of methods like toUTCString() and getTime() to end up with a variable called clientTimestamp. This will give the current number of seconds since January 1st, 1970, regardless of whether the page is coming from the server or from the cache.

var localDate = new Date();
var localUTCString = localDate.toUTCString();
var UTCDate = new Date(localUTCString);
var clientTimestamp = UTCDate.getTime() / 1000;

Then I compare the two and see if there’s a discrepancy greater than five minutes:

if (clientTimestamp - serverTimestamp > (60 * 5))

If there is, then I inject some markup into the page, telling the reader that this page might be stale:

document.querySelector('main').insertAdjacentHTML('afterbegin',`

dismiss

This page might be out of date. You can try refreshing.

`);

The reader has the option to refresh the page or dismiss the message.

It’s not foolproof by any means. If the visitor’s computer has their clock set weirdly, then the comparison might return a false positive every time. Still, I thought that using UTC might be a safer bet.

All in all, I think this is a pretty good method for detecting if a page is being served from a cache. Remember, the goal here is not to determine if the user is offline; for that, there’s navigator.onLine.
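The comparison could also be wrapped up in a small function. This is only a sketch: the name mightBeStale and its parameters are made up here, and it takes Date.now() as a shortcut, on the assumption that whole seconds are precise enough (Date.now() and getTime() both count milliseconds from the start of 1970 in UTC, whatever the local timezone is).

```javascript
// Hypothetical helper: has more than maxAgeInSeconds passed since the
// server generated the page?
// serverTimestamp: seconds since January 1st, 1970 (UTC), as written
// into the page by the server.
// nowInMilliseconds: defaults to the current time; being able to pass
// it in is only there to make the function easy to test.
function mightBeStale(serverTimestamp, maxAgeInSeconds = 60 * 5, nowInMilliseconds = Date.now()) {
  const clientTimestamp = Math.floor(nowInMilliseconds / 1000);
  return clientTimestamp - serverTimestamp > maxAgeInSeconds;
}
```

With that in place, the check before injecting the message would read if (mightBeStale(serverTimestamp)).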

The upshot is this: if you visit my site with a crappy internet connection (lie-fi), then after three seconds you may be served with a cached version of the page you’re requesting (if you visited that page previously). If that happens, you’ll now also be presented with a little message telling you that the page isn’t fresh. Then it’s up to you whether you want to have another go.

I like the way that this puts control back into the hands of the user.

June 19, 2019

Toast

Shockwaves rippled across the web standards community recently when it appeared that Google Chrome was unilaterally implementing a new element called toast. It turns out that���s not the case, but the confusion is understandable.

First off, this all kicked off with the announcement of ���intent to implement���. That makes it sounds like Google are intending to, well, ���implement this. In fact ���intent to implement��� really means ���intend to mess around with this behind a flag���. The language is definitely confusing and this is something that will hopefully be addressed.

Secondly, Chrome isn���t going to ship a toast element. Instead, this is a proposal for a custom element currently called std-toast. I���m assuming that should the experiment prove successful, it���s not a foregone conclusion that the final element name will be called toast (minus the sexually-transmitted-disease prefix). If this turns out to be a useful feature, there will surely be a discussion between implementators about the naming of the finished element.

This is the ideal candidate for a web component. It makes total sense to create a custom element along the lines of std-toast. At first I was confused about why this was happening inside of a browser instead of first being created as a standalone web component, but it turns out that there’s been a fair bit of research looking at existing implementations in libraries and web components. So this actually looks like a good example of paving an existing cowpath.

But it didn’t come across that way. The timing of announcements felt like this was something that was happening without prior discussion. Terence Eden writes:

It feels like a Google-designed, Google-approved, Google-benefiting idea which has been dumped onto the Web without any consideration for others.

I know that isn’t the case. And I know how many dedicated people have worked hard on this proposal.

Adrian Roselli also remarks on the optics of this situation:

To be clear, while I think there is value in minting a native HTML element to fill a defined gap, I am wary of the approach Google has taken. A repo from a new-to-the-industry Googler getting a lot of promotion from Googlers, with Googlers on social media doing damage control for the blowback, WHATWG Googlers handling questions on the repo, and Google AMP strongly supporting it (to reduce its own footprint), all add up to raise alarm bells with those who advocated for a community-driven, needs-based, accessible web.

Dave Cramer made a similar point:

But my concern wasn’t so much about the nature of the new elements, but of how we learned about them and what that says about how web standardization works.

So there’s a general feeling (outside of Google) that there’s something screwy here about the order of events. A lot of discussion and research seems to have happened in isolation before announcing the intent to implement:

It does not appear that any discussions happened with other browser vendors or standards bodies before the intent to implement.

Why is this a problem? Google is seeking feedback on a solution, not on how to solve the problem.

Going back to my early confusion about putting a web component directly into a browser, this question on Discourse echoes my initial reaction:

Why not release std-toast (and other elements in development) as libraries first?

It turns out that std-toast and other in-browser web components are part of an idea called layered APIs. In theory this is an initiative in the spirit of the extensible web manifesto.

The extensible web movement focused on exposing low-level APIs to developers: the fetch API, the cache API, custom elements, Houdini, and all of those other building blocks. Layered APIs, on the other hand, focuses on high-level features like, say, an HTML element for displaying “toast” notifications.

Layered APIs is an interesting idea, but I’m worried that it could be used to circumvent discussion between implementers. It’s a route to unilaterally creating new browser features first and standardising after the fact. I know that’s how many features already end up in browsers, but I think that the sooner that authors, implementers, and standards bodies get a say, the better.

I certainly don’t think this is a good look for Google given the debacle of AMP’s “my way or the highway” rollout. I know that’s a completely different team, but the external perception of Google amongst developers has been damaged by the AMP project’s anti-competitive abuse of Google’s power in search.

Right now, a lot of people are jumpy about Microsoft’s move to Chromium for Edge. My friends at Microsoft have been reassuring me that while it’s always a shame to reduce browser engine diversity, this could actually be a good thing for the standards process: Microsoft could theoretically keep Google in check when it comes to what features are introduced to the Chromium engine.

But that only works if there is some kind of standards process. Layered APIs in general, and std-toast in particular, hint at a future where a single browser vendor can plough ahead on their own. I sincerely hope that’s a misreading of the situation and that this has all been an exercise in miscommunication and misunderstanding.

Like Dave Cramer says:

I hear a lot about how anyone can contribute to the web platform. We’ve all heard the preaching about incubation, the Extensible Web, working in public, paving the cowpaths, and so on. But to an outside observer this feels like Google making all the decisions, in private, and then asking for public comment after the feature has been designed.
