Paul Gilster's Blog

October 11, 2018

OSIRIS-REx: Long Approach to Bennu

With a robotic presence at Ryugu, JAXA’s Hayabusa2 mission is showing what can be done as we subject near-Earth asteroids to scrutiny. We’ll doubtless learn a lot about asteroid composition, all of which can factor into, among other things, the question of how we would approach changing the trajectory of any object that looked like it might come too close to Earth. The case for studying near-Earth asteroids likewise extends to learning more about the evolution of the Solar System.

NASA’s first asteroid sample return mission reaches its target on December 3, when OSIRIS-REx arrives at asteroid Bennu with a suite of instruments including the OCAMS camera suite (PolyCam, MapCam, and SamCam), the OTES thermal spectrometer, the OVIRS visible and infrared spectrometer, the OLA laser altimeter, and the REXIS X-ray spectrometer. Like Hayabusa2, this mission is designed to collect a surface sample and return it to Earth.

And while Hayabusa2 has commanded the asteroid headlines in recent days, OSIRIS-REx has been busy adjusting its course for the December arrival. The first of four asteroid approach maneuvers (AAM-1) took place on October 1, with the main engine thrusters braking the craft relative to Bennu and slowing its approach speed by 351.298 meters per second. The burn consumed on the order of 240 kilograms of fuel and left the spacecraft approaching Bennu at 140 m/s.
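How do roughly 240 kilograms of propellant relate to a 351 m/s change in speed? The Tsiolkovsky rocket equation links the two through the engines' specific impulse, which this post does not give. The sketch below assumes a specific impulse of about 230 seconds, a typical figure for hydrazine monopropellant thrusters (an assumption, not a published mission number), and solves for the spacecraft mass before the burn that would make the reported figures consistent. It also recovers the pre-burn approach speed by adding the speed change back to the post-burn 140 m/s.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2

def implied_initial_mass(delta_v: float, propellant_kg: float, isp_s: float) -> float:
    """Spacecraft mass before a burn, from the Tsiolkovsky rocket equation.

    delta_v = isp * g0 * ln(m0 / m1), with m1 = m0 - propellant.
    Writing R = m0 / m1 and solving for m0 gives m0 = propellant * R / (R - 1).
    """
    mass_ratio = math.exp(delta_v / (isp_s * G0))
    return propellant_kg * mass_ratio / (mass_ratio - 1.0)

# Figures for the AAM-1 burn as reported in the post.
DELTA_V = 351.298     # m/s removed from the approach speed
PROPELLANT = 240.0    # kg ("on the order of")
ISP_ASSUMED = 230.0   # s; ASSUMPTION: typical hydrazine monopropellant value

pre_burn_speed = 140.0 + DELTA_V  # approach speed before AAM-1, m/s
m0 = implied_initial_mass(DELTA_V, PROPELLANT, ISP_ASSUMED)

print(f"approach speed before AAM-1: {pre_burn_speed:.0f} m/s")
print(f"implied mass before the burn: {m0:.0f} kg")
```

Under these assumptions the implied pre-burn mass comes out at roughly 1,660 kg. The result depends strongly on the assumed specific impulse, so treat it as a consistency check rather than a mission value.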

Science operations began on August 17, with PolyCam taking optical navigation images of Bennu on a Monday, Wednesday and Friday cadence until the AAM-1 burn and daily ‘OpNavs’ after it. The MapCam camera is also taking images that measure changes in the light reflected from Bennu’s surface as sunlight strikes it at a variety of angles, which helps to determine the asteroid’s albedo.

The AAM-1 maneuver is, as I mentioned above, the first in a series of four designed to slow the spacecraft to match Bennu’s orbit. Asteroid Approach Maneuver-2 is to occur on October 15. As the approach phase continues, OSIRIS-REx has three other high-priority tasks:

To observe the area near the asteroid for dust plumes or natural satellites and continue the study of Bennu’s light and spectral properties

To jettison the protective cover of the craft’s sampling arm and extend the arm in mid-October

To use OCAMS to show the shape of the asteroid by late October. By mid-November, OSIRIS-REx should begin to detect surface features.

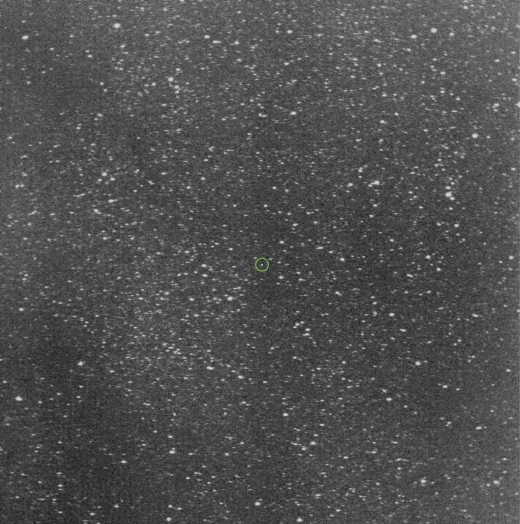

It was in August that the PolyCam camera obtained the first image from 2.2 million kilometers out. Subsequently, the MapCam image below was obtained.

Image: This MapCam image of the space surrounding asteroid Bennu was taken on Sept. 12, 2018, during the OSIRIS-REx mission’s Dust Plume Search observation campaign. Bennu, circled in green, is approximately 1 million km from the spacecraft. The image was created by co-adding 64 ten-second exposures. Credit: NASA/Goddard/University of Arizona.

Bennu shows up here as little more than a dot in an image that is part of OSIRIS-REx’s search for dust and gas plumes on Bennu’s surface. These could present problems during close operations around the object, and could also provide clues about possible cometary activity. The search did not turn up any dust plumes from Bennu, but a second search is planned once the spacecraft arrives.

The first month at the asteroid will be taken up with flybys of Bennu’s north pole, equator and south pole at distances between 7 and 19 kilometers, allowing for direct measurements of its mass as well as close observation of the surface. The surface surveys will allow controllers to identify two possible landing sites for the sample collection, which is scheduled for early July 2020. OSIRIS-REx will then return to Earth, ejecting the Sample Return Capsule for landing in Utah in September 2023.

The latest image offered up by the OSIRIS-REx team is an animation showing Bennu brightening during the approach from mid-August to the beginning of October.

Image: This processed and cropped set of images shows Bennu (in the center of the frame) from the perspective of the OSIRIS-REx spacecraft as it approaches the asteroid. During the period between August 17 and October 1, the spacecraft’s PolyCam imager obtained this series of 20 four-second exposures every Monday, Wednesday, and Friday as part of the mission’s optical navigation campaign. From the first to the last image, the spacecraft’s range to Bennu decreased from 2.2 million km to 192,000 km, and Bennu brightened from approximately magnitude 13 to magnitude 8.8 from the spacecraft’s perspective. Date Taken: Aug. 17 – Oct. 1, 2018. Credit: NASA/Goddard/University of Arizona.
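Bennu's brightening can be roughly checked against the inverse-square law. If only the spacecraft-to-Bennu distance changed, a drop from 2.2 million km to 192,000 km would brighten the asteroid by 5 log10(r_start / r_end) magnitudes. This minimal sketch deliberately ignores phase angle and Bennu's changing distance from the Sun, so it should not be expected to reproduce the observed change from magnitude 13 to 8.8 exactly.

```python
import math

def magnitude_change_from_range(r_start_km: float, r_end_km: float) -> float:
    """Brightening in magnitudes expected from the observer-range change alone.

    Flux scales as 1/r^2 and m = -2.5 * log10(flux) + const, so
    delta_m = 5 * log10(r_start / r_end). Phase angle and the asteroid's
    distance from the Sun are ignored.
    """
    return 5.0 * math.log10(r_start_km / r_end_km)

expected = magnitude_change_from_range(2.2e6, 192_000.0)
observed = 13.0 - 8.8  # magnitudes, from the caption above

print(f"expected from range alone: {expected:.1f} mag; observed: {observed:.1f} mag")
```

The range change alone predicts about 5.3 magnitudes against the 4.2 observed; the gap is a reminder of the factors this simple model leaves out.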

Of all the OSIRIS-REx images I’ve seen so far, I think the one below is the prize. But then, I always did like taking the long view.

Image: On July 16, 2018, NASA’s OSIRIS-REx spacecraft obtained this image of the Milky Way near the star Gamma2 Sagittarii during a routine spacecraft systems check. The image is a 10-second exposure acquired using the panchromatic filter of the spacecraft’s MapCam camera. The bright star in the lower center of the image is Gamma2 Sagittarii, which marks the tip of the spout of Sagittarius’ teapot near the center of the galaxy. The image is roughly centered on Baade’s Window, one of the brightest patches of the Milky Way, which fills approximately one fourth of the field of view. Relatively low amounts of interstellar dust in this region make it possible to view a part of the galaxy that is usually obscured. By contrast, the dark region near the top of the image, the Ink Spot Nebula, is a dense cloud made up of small dust grains that block the light of stars in the background. MapCam is part of the OSIRIS-REx Camera Suite (OCAMS) operated by the University of Arizona. Date Taken: July 16, 2018. Credit: NASA/GSFC/University of Arizona.

We’re 53 days from arrival at Bennu. You can follow news of OSIRIS-REx at its NASA page or via Twitter at @OSIRISREx. Dante Lauretta (Lunar and Planetary Laboratory) is principal investigator on the mission; the University of Arizona OSIRIS-REx page is here. And be sure to check Emily Lakdawalla’s excellent overview of operations at Bennu in the runup to arrival.

October 10, 2018

MASCOT Operations on Asteroid Ryugu

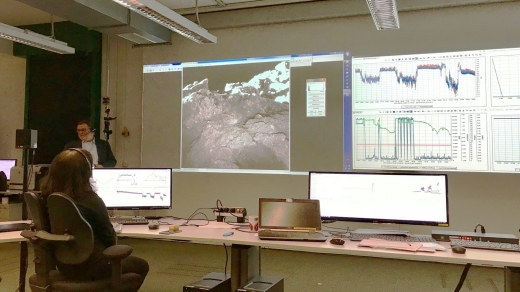

To me, the image below is emblematic of space exploration. We look out at vistas that have never before been seen by human eye, contextualized by the banks of equipment that connect us to our probes on distant worlds. The fact that we can then sling these images globally through the Internet, opening them up to anyone with a computer at hand, gives them additional weight. Through such technologies we may eventually recover what we used to take for granted in the days of the Moon race, a sense of global participation and engagement.

We’re looking at the MASCOT Control Centre at the German Aerospace Center (Deutsches Zentrum für Luft- und Raumfahrt; DLR) in Cologne, where the MASCOT lander was followed through its separation from the Japanese Hayabusa2 probe on October 3, its landing on asteroid Ryugu, and the end of the mission, some 17 hours later.

Image: In the foreground is MASCOT project manager Tra-Mi Ho from the DLR Institute of Space Systems in Bremen at the MASCOT Control Centre of the DLR Microgravity User Support Centre in Cologne. In the background is Ralf Jaumann, scientific director of MASCOT, presenting some of the 120 images taken with the DLR camera MASCAM. Credit: DLR.

As scientists from Japan, Germany and France looked on, MASCOT (Mobile Asteroid Surface Scout) successfully acquired data about the surface of the asteroid at several locations and safely returned its data to Hayabusa2 before its battery became depleted. A full 17 hours of battery life allowed an extra hour of operations, data collection, image acquisition and movement to various surface locations.

MASCOT is a mobile device, capable of using its swing-arm to reposition itself as needed. Attitude changes can keep the top antenna directed upward while the spectroscopic microscope faces downwards, a fact controllers put to good use. Says MASCOT operations manager Christian Krause (DLR):

“After a first automated reorientation hop, it ended up in an unfavourable position. With another manually commanded hopping manoeuvre, we were able to place MASCOT in another favourable position thanks to the very precisely controlled swing arm.”

MASCOT moved several meters to its early measuring points, with a longer move at the end as controllers took advantage of the remaining battery life. The lander’s operations spanned three asteroid days and two asteroid nights, with a day-night cycle lasting 7 hours and 36 minutes.
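The 17-hour mission and Ryugu's 7-hour-36-minute day can be reconciled with a one-line calculation. The sketch simply divides one by the other, assuming the 17 hours run continuously from separation to the last returned data.

```python
OPERATIONS_HOURS = 17.0          # MASCOT's battery-limited lifetime
ROTATION_HOURS = 7 + 36 / 60     # Ryugu's day-night cycle: 7 h 36 min = 7.6 h

rotations = OPERATIONS_HOURS / ROTATION_HOURS
print(f"{rotations:.2f} Ryugu rotations during MASCOT's operations")
```

About 2.2 rotations is enough for two full nights bracketed by portions of three days, which matches the description of MASCOT's time on the surface.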

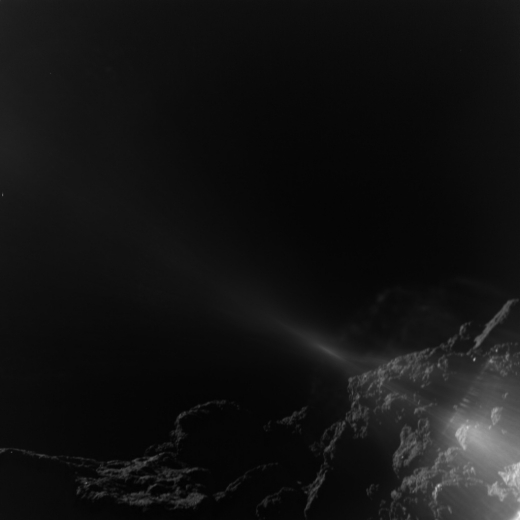

Image: The images acquired with the MASCAM camera on the MASCOT lander during the descent show an extremely rugged surface covered with numerous angular rocks. Ryugu, a four-and-a-half-billion-year-old C-type asteroid, has shown the scientists something they had not expected, even though more than a dozen asteroids have been explored up close by space probes. In this close-up, there are no areas covered with dust, that is, with the regolith that results from the fragmentation of rocks due to exposure to micrometeorite impacts and high-energy cosmic particles over billions of years. The image from the rotating MASCOT lander was taken at a height of about 10 to 20 meters. Credit: MASCOT/DLR/JAXA.

The dark surface of Ryugu reflects about 2.5 percent of incoming sunlight, so the area shown in the image below is as dark as asphalt. According to DLR, the details of the terrain can still be captured because the photosensitive semiconductor elements of the 1,000 by 1,000 pixel CMOS (complementary metal-oxide semiconductor) camera sensor can enhance low-light signals and produce usable image data.

Image: DLR’s MASCAM camera took 20 images during MASCOT’s 20-minute fall to Ryugu, following its separation from Hayabusa2 at 51 meters above the asteroid’s surface. This image shows the landscape near the first touchdown location on Ryugu from a height of about 25 to 10 meters. Because the scene was backlit by the Sun shining on Ryugu, light reflected off the frame structure of the camera body scatters into the MASCAM’s field of view (bottom right). Credit: MASCOT/DLR/JAXA.

We now collect data and go about evaluating the results of MASCOT’s foray. The small lander had a short life but it seems to have delivered on every expectation. As Hayabusa2 operations continue at Ryugu, we’ll learn a great deal about the early history of the Solar System and the composition of near-Earth asteroids like these, all of which we’ll be able to weigh against what we find at asteroid Bennu when the OSIRIS-REx mission reaches its target in December.

Image: MASCOT as photographed by the ONC-W2 immediately after separation. MASCOT was captured on three consecutively shot images, with image capture times between 10:57:54 JST – 10:58:14 JST on October 3. Since separation time itself was at 10:57:20 JST, this image was captured immediately after separation. The ONC-W2 is a camera attached to the side of the spacecraft and is shooting diagonally downward from Hayabusa2. This gives an image showing MASCOT descending with the surface of Ryugu in the background. Credit: JAXA, University of Tokyo, Kochi University, Rikkyo University, Nagoya University, Chiba Institute of Technology, Meiji University, University of Aizu, AIST.

Bear in mind that the 50th annual meeting of the Division for Planetary Sciences (DPS) of the American Astronomical Society (AAS) is coming up in Knoxville in late October. Among the press conferences scheduled are one covering Hayabusa2 developments and another the latest from New Horizons. The coming weeks will be a busy time for Solar System exploration.

October 9, 2018

Voyager 2’s Path to Interstellar Space

I want to talk about the Voyagers this morning and their continuing interstellar mission, but first, a quick correction. Yesterday in writing about New Horizons’ flyby of MU69, I made an inexplicable gaffe, referring to the event as occurring on the 19th rather than the 1st of January (without my morning coffee, I had evidently fixated on the ‘19’ of 2019). Several readers quickly spotted this in the article’s penultimate paragraph and I fixed it, but unfortunately the email subscribers received the uncorrected version. So for the record, we can look forward to the New Horizons flyby of MU69 on January 1, 2019 at 0533 UTC. Sorry about the error.

Let’s turn now to the Voyagers, and the question of how long they will stay alive. I often see 2025 cited as a possible terminus, with each spacecraft capable of communication with Earth and the operation of at least one instrument until then. If we make it to 2025, Voyager 1 would be 160 AU out, and Voyager 2 would have reached 135 AU or thereabouts. In his book The Interstellar Age, Jim Bell, who worked as an intern on the Voyager science support team at JPL starting in 1980 (with Voyager at Saturn), notes that cycling off some of the remaining instruments after 2020 could push the date further, maybe to the late 2020s.

After that? With steadily decreasing power levels, some heaters and engineering subsystems will have to be shut down, and with them the science instruments, starting with the most power-hungry. Low-power instruments like the magnetometer could likely stay on longer.

And then there’s this possibility. Stretching out their lifetimes might demand reducing the Voyagers’ output to an engineering signal and nothing else. Bell quotes Voyager project scientist Ed Stone: “As long as we have a few watts left, we’ll try to measure something.”

Working with nothing more than this faint signal, some science could be done simply by monitoring it over the years as it recedes. Keep Voyager doing science until 2027 and we will have achieved fifty years of science returns. Reduced to that single engineering signal, the Voyagers might stay in radio contact until the 2030s.

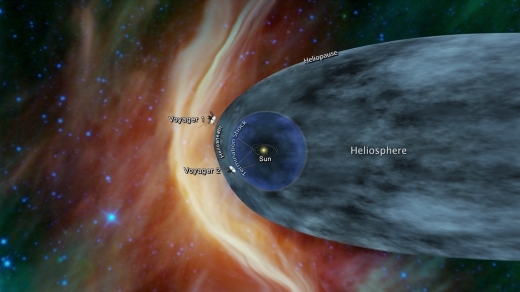

Image: This graphic shows the position of the Voyager 1 and Voyager 2 probes, relative to the heliosphere, a protective bubble created by the Sun that extends well past the orbit of Pluto. Voyager 1 crossed the heliopause, or the edge of the heliosphere, in 2012. Voyager 2 is still in the heliosheath, or the outermost part of the heliosphere. Credit: NASA/JPL-Caltech.

Bear in mind the conditions the Voyagers have to deal with even now. With more than 25 percent of their plutonium having decayed, power limitations are a real factor. If either Voyager passed by an interesting inner Oort object, its cameras could not be turned on, because the power they would draw would force heaters to be shut down. Continuing power demands from radio communications and the heaters create thorny problems for controllers.
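The "more than 25 percent" figure follows from the half-life of plutonium-238, about 87.7 years. This sketch computes the fraction of the isotope that has decayed between the 1977 launches and 2018. It counts isotope decay only; it is not a model of the generators' electrical output.

```python
PU238_HALF_LIFE_YEARS = 87.7  # half-life of plutonium-238

def fraction_decayed(elapsed_years: float,
                     half_life_years: float = PU238_HALF_LIFE_YEARS) -> float:
    """Fraction of an isotope that has decayed after elapsed_years."""
    remaining = 0.5 ** (elapsed_years / half_life_years)
    return 1.0 - remaining

# Both Voyagers launched in 1977; this post dates from 2018.
decayed = fraction_decayed(2018 - 1977)
print(f"Pu-238 decayed since launch: {decayed:.0%}")
```

The result, about 28 percent, is consistent with the figure quoted above.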

Even so, we’re still doing good science. Today the focus is on a still-active Voyager 2, which may be nearing interstellar space. Voyager 2 is now 17.7 billion kilometers from Earth, about 118 AU, and has been traveling through the outermost layers of the heliosphere since 2007. The solar wind dominates this malleable region, which changes over the Sun’s 11-year activity cycle. Solar flares and coronal mass ejections also factor into its size and shape. Ahead is the heliosphere’s outer boundary, the heliopause, beyond which lies interstellar space.
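A quick conversion ties the two distance figures together and shows how long Voyager 2's signals take to reach us. The sketch uses the IAU value of the astronomical unit and the speed of light; the light-time figure is derived here rather than quoted from NASA.

```python
AU_KM = 149_597_870.7            # one astronomical unit, km (IAU 2012)
LIGHT_SPEED_KM_S = 299_792.458   # speed of light, km/s

distance_km = 17.7e9  # Voyager 2's distance from Earth as quoted in the post

distance_au = distance_km / AU_KM
one_way_light_hours = distance_km / LIGHT_SPEED_KM_S / 3600

print(f"{distance_au:.0f} AU, one-way light time about {one_way_light_hours:.1f} h")
```

17.7 billion km works out to about 118 AU, with a one-way light time of roughly 16.4 hours.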

The Cosmic Ray Subsystem instrument on Voyager 2 is now picking up a 5 percent increase in the rate of cosmic rays hitting the spacecraft as compared with what we saw in early August. Moreover, the Low-Energy Charged Particle instrument is picking up an increase in higher-energy cosmic rays. The increases parallel what Voyager 1 found beginning in May 2012, about three months before it crossed the heliopause.

So we may be about to get another interstellar spacecraft. Says Ed Stone:

“We’re seeing a change in the environment around Voyager 2, there’s no doubt about that. We’re going to learn a lot in the coming months, but we still don’t know when we’ll reach the heliopause. We’re not there yet — that’s one thing I can say with confidence.”

We haven’t crossed the outer regions of the heliosphere with a functioning spacecraft more than once, so there is a lot to learn. Voyager 2 is moving through a different part of the heliosheath, the outermost layer of the heliosphere, than Voyager 1 did, so we can’t project Voyager 1’s timeline onto it with much confidence. We’ll simply have to keep monitoring the craft to see what happens.

What an extraordinary ride it has been, and here’s hoping we can keep both Voyagers alive as long as possible. Even when their power is definitively gone, they’ll still be inspiring our imaginations. The Voyagers are some 300 years from the Inner Oort Cloud, and a whopping 30,000 years from the Oort Cloud’s outer edge. In 40,000 years, Voyager 2 will pass about 110,000 AU from the red dwarf Ross 248, which will at that time be the closest star to the Sun. Both spacecraft will eventually follow 250-million-year orbits around the center of the Milky Way.
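The 300- and 30,000-year figures are essentially distance divided by speed. The speeds below (about 17 km/s for Voyager 1 and 15.4 km/s for Voyager 2, relative to the Sun) are approximate values assumed for this sketch rather than figures from the post, and the calculation ignores the slight continued deceleration of the probes by the Sun's gravity.

```python
AU_KM = 149_597_870.7
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year

def au_per_year(speed_km_s: float) -> float:
    """Convert a heliocentric speed in km/s to AU per year."""
    return speed_km_s * SECONDS_PER_YEAR / AU_KM

# ASSUMPTION: approximate heliocentric cruise speeds, not quoted in the post.
speeds_km_s = {"Voyager 1": 17.0, "Voyager 2": 15.4}

for name, v in speeds_km_s.items():
    rate = au_per_year(v)
    print(f"{name}: {rate:.2f} AU/yr, ~{300 * rate:,.0f} AU per 300 yr, "
          f"~{30_000 * rate:,.0f} AU per 30,000 yr")
```

At roughly 3.2 to 3.6 AU per year, 300 years adds on the order of 1,000 AU and 30,000 years on the order of 100,000 AU, which gives a sense of the Oort Cloud distances those travel times imply.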

I return to Jim Bell, who waxes poetic at the thought. He envisions a far future when our remote descendants may be able to see the Voyagers again. Here is a breathtaking vision indeed:

Over time — enormous spans of time, as the gravity of passing stars and interacting galaxies jostles them as well as the stars in our galaxy — I imagine that the Voyagers will slowly rise out of the plane of our Milky Way, rising, rising ever higher above the surrounding disk of stars and gas and dust, as they once rose above the plane of their home stellar system. If our far-distant descendants remember them, then our patience, perseverance, and persistence could be rewarded with perspective when our species — whatever it has become — does, ultimately, follow them. The Voyagers will be long dormant when we catch them, but they will once again make our spirits soar as we gaze upon these most ancient of human artifacts, and then turn around and look back. I have no idea if they’ll still call it a selfie then, but regardless of what it’s called, the view of our home galaxy, from the outside, will be glorious to behold.

October 8, 2018

Fine-Tuning New Horizons’ Trajectory

I love the timing of New Horizons’ next encounter, just as we begin a new year in 2019. On the one hand, we’ll be able to look back to a mission that has proven successful in some ways beyond the dreams of its creators. On the other hand, we’ll have the first close-up brush past a Kuiper Belt Object, 2014 MU69 or, as it’s now nicknamed, Ultima Thule. This farthest Solar System object ever visited by a spacecraft may, in turn, be followed by yet another still farther, if all goes well and the mission is extended. This assumes, of course, another target in range.

We can’t rule out a healthy future for this spacecraft after Ultima Thule. Bear in mind that New Horizons seems to be approaching its current target along its rotational axis. That could reduce the need for additional maneuvers to improve visibility for the New Horizons cameras, saving fuel for later trajectory changes if indeed another target can be found. The current mission extension ends in 2021, but another extension would get a powerful boost if new facilities like the Large Synoptic Survey Telescope become available, offering more capability at tracking down an appropriate KBO. Hubble and New Horizons itself will also keep looking.

But even lacking such a secondary target, an operational New Horizons could return useful data about conditions in the outer Solar System and the heliosphere, with the spacecraft’s radioisotope thermoelectric generator still producing sufficient power for some years. I’ve seen a worst-case 2026 as the cutoff point, but Alan Stern is on record as saying that the craft has enough hydrazine fuel and power from its plutonium generator to stay functional until 2035.

By way of comparison with Voyager, which we need to revisit tomorrow, New Horizons won’t reach 100 AU until 2038, nicely placed to explore the heliosphere if still operational.

But back to Ultima Thule, a destination now within 112 million kilometers of the spacecraft. New Horizons is closing at a rate of 14.4 kilometers per second en route to a flyby that, according to the New Horizons team, must pass through a 120 by 320 kilometer ‘box’, with the flyby time predicted to within 140 seconds. Based on what we saw at Pluto/Charon, these demands can be met.
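The 140-second requirement makes more sense when converted to distance. At a closing rate of 14.4 km/s, every second of timing error corresponds to 14.4 km of travel along the flight path; this sketch does only that multiplication and makes no assumption about how the team apportions the error budget.

```python
CLOSING_SPEED_KM_S = 14.4   # New Horizons' closing rate on Ultima Thule
TIMING_TOLERANCE_S = 140    # required accuracy of the predicted flyby time

along_track_km = CLOSING_SPEED_KM_S * TIMING_TOLERANCE_S
print(f"{TIMING_TOLERANCE_S} s of timing error = about {along_track_km:,.0f} km along the path")
```

A 140-second tolerance therefore corresponds to about 2,000 km along the path, which is why the timing of the flyby has to be pinned down alongside the aim point.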

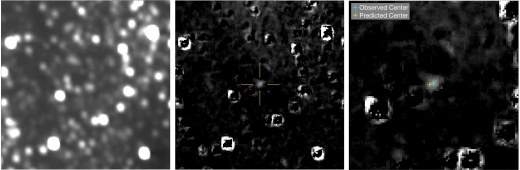

Image: At left, a composite optical navigation image, produced by combining 20 images from the New Horizons Long Range Reconnaissance Imager (LORRI) acquired on Sept. 24. The center photo is a composite optical navigation image of Ultima Thule after subtracting the background star field; star field subtraction is an important component of optical navigation image processing since it isolates Ultima from nearby stars. At right is a magnified view of the star-subtracted image, showing the close proximity and relative agreement between the observed and predicted locations of Ultima. Credit: NASA/JHUAPL/SwRI/KinetX.

Above are the latest navigation images from New Horizons’ Long Range Reconnaissance Imager (LORRI). An engine burn on October 3, a 3½-minute maneuver that adjusted the spacecraft’s trajectory and increased its speed by 2.1 meters per second, further tightened location and timing information for the New Year’s flyby. Records fall every time New Horizons does this, with the October 3 correction marking the farthest course correction ever performed.

It’s interesting to learn, too, that this is the first time New Horizons has made a targeting maneuver for the Ultima Thule flyby that used pictures taken by New Horizons itself. The ‘aim point’ is 3,500 kilometers from Ultima at closest approach, and we’ve just learned that these navigation images confirm that Ultima is within 500 kilometers of its expected position.

“Since we are flying very fast and close to the surface of Ultima, approximately four times closer than the Pluto flyby in July 2015, the timing of the flyby must be very accurate,” said Derek Nelson, of KinetX Aerospace, Inc., New Horizons optical navigation lead. “The images help to determine the position and timing of the flyby, but we must also trust the prior estimate of Ultima’s position and velocity to ensure a successful flyby. These first images give us confidence that Ultima is where we expected it to be, and the timing of the flyby will be accurate.”

I’m already imagining a New Year’s Eve with Ultima Thule to look forward to. You can adjust your own plans depending on your time zone, but the projected flyby time is 0533 UTC on the 1st. As with Pluto/Charon, the excitement of the encounter continues to build. In the broader picture, the more good science we do in space, the more drama we produce as we open up new terrain. This week alone, we need to look at the Hayabusa2 operations at Ryugu, the upcoming OSIRIS-REx exploration of asteroid Bennu, and the continuing saga of Voyager 2.

But New Horizons also reminds us of an uncomfortable fact. When it comes to the outer system, this is the only spacecraft making studies of the Kuiper Belt from within it, and there is no other currently planned. Data from this mission will need to carry us for quite some time.

October 5, 2018

DE-STAR and Breakthrough Starshot: A Short History

Last Monday’s article on the Trillion Planet Survey led to an email conversation with Phil Lubin, its founder, in which the topic of Breakthrough Starshot inevitably came up. When I’ve spoken to Dr. Lubin before, it’s been at meetings related to Starshot or presentations on his DE-STAR concept. Standing for Directed Energy System for Targeting of Asteroids and exploRation, DE-STAR is a phased laser array that could drive a small payload to high velocities. We’ve often looked in these pages at the rich history of beamed propulsion, but how did the DE-STAR concept evolve in Lubin’s work for NASA’s Innovative Advanced Concepts office, and what was the path that led it to the Breakthrough Starshot team?

The timeline below gives the answer, and it’s timely because a number of readers have asked me about this connection. Dr. Lubin is a professor of physics at UC Santa Barbara whose primary research beyond DE-STAR has involved studying the early universe at millimeter wavelengths. He is also a co-investigator on the Planck mission and has more than 400 papers to his credit. He is co-recipient of the 2006 Gruber Prize in Cosmology along with the COBE science team for their groundbreaking work in cosmology. Below, he tells us how DE-STAR emerged.

By Philip Lubin

June 2009: Philip Lubin begins work on large scale directed energy systems at UC Santa Barbara. Baseline developed is laser phased array using MOPA [master oscillator power amplifier, a configuration consisting of a master laser (or seed laser) and an optical amplifier to boost the output power] topology. The DE system using this topology is named DE-STAR (Directed Energy System for Targeting Asteroids and exploRation). Initial focus is on planetary defense and relativistic propulsion. Development program begins. More than 250 students involved in DE R&D at UCSB since.

February 14, 2013: UCSB group has press release about DE-STAR program to generate public discussion about applications of DE to planetary defense in anticipation of the February 15 flyby of asteroid 2012 DA14, which was to pass within geosynchronous orbit. On February 15 the Chelyabinsk meteor/asteroid hit. This singular coincidence of a press release and an impact the next day generated a significant increase in interest in possible use of large scale DE for space applications. This “pushed the DE ball over the hill”.

August 2013: Philip Lubin and group begin publication of detailed technical papers in multiple journals. DE-STAR program is introduced at invited SPIE [Society of Photo-optical Instrumentation Engineers] plenary talk in San Diego at Annual Photonics meeting. More than 50 technical papers and nearly 100 colloquia from his group have emerged since then.

August 2013: 1st Interstellar Congress held in Dallas, Texas by Icarus Interstellar. Eric Malroy introduces concepts for the use of nanomaterials in sails.

August 2013: First proposal submitted to NASA for DE-STAR system from UC Santa Barbara.

January 2014: Work begins on extending previous UCSB paper to much longer “roadmap” paper which becomes “A Roadmap to Interstellar Flight” (see below).

February 11, 2014: Lubin gives colloquium on DE-STAR at the SETI Institute in Mountain View, CA. Summarizes UCSB DE program for planetary defense, relativistic propulsion and implications for SETI. SETI Institute researchers suggest Lubin speak with NASA Ames director Pete Worden as he was not at the talk. Worden eventually leaves Ames a year later on March 31, 2015 to go to the Breakthrough Foundation. Lubin and Worden do not meet until 18 months later at the Santa Clara 100YSS meeting (see below).

August 2014: Second proposal submitted to NASA for DE-STAR driven relativistic spacecraft. Known as DEEP-IN (Directed Energy Propulsion for Interstellar Exploration). Accepted and funded by NASA NIAC program as Phase I program. Program includes directed energy phased array driving wafer scale spacecraft as one option [Phase 1 report “A Roadmap to Interstellar Flight” available here].

April 2015: Lubin submits the “roadmap” paper to the Journal of the British Interplanetary Society.

June 2015: Lubin presents DE driven relativistic flight at Caltech Keck Institute meeting. Meets with Emmett and Glady W Technology Fund.

August 31, 2015: Lubin and Pete Worden attend 100YSS (100 Year Star Ship) conference in Santa Clara, CA [Worden is now executive director, Breakthrough Starshot, and former director of NASA Ames Research Center]. Lubin is invited by Mae Jemison (director of 100YSS) to give a talk about the UCSB NASA DE program as a viable path to interstellar flight. Worden has to leave before Lubin’s talk, but in a hallway meeting Lubin informs Worden of the UCSB NASA Phase I program for DE driven relativistic flight. This meeting takes place because Lubin recalls the Feb 2014 SETI colloquium, where a discussion with Worden had been suggested. Worden asks for further information about the NASA program and Lubin sends Worden the paper “A Roadmap to Interstellar Flight” summarizing the NASA DEEP-IN program. Worden subsequently forwards paper to Yuri Milner.

December 16, 2015: Lubin, Worden and Pete Klupar [chief engineer at Breakthrough Prize Foundation] meet at NASA Ames to discuss DEEP-IN program and “roadmap” paper.

December 2015: Milner calls for meeting with Lubin to discuss DEEP-IN program, “roadmap” paper and the prospects for relativistic flight.

January 2016: Private sector funding of UCSB DE for relativistic flight effort by Emmett and Glady W Technology Fund begins. Unknown to public – anonymous investor greatly enhances UCSB DE effort.

January 2016: First meeting with Milner in Palo Alto. Present are Lubin, Milner, Avi Loeb (Harvard University), Worden and Klupar. Milner sends “roadmap” paper to be reviewed by other physicists. A long series of calls and meetings ensue. This begins the birth of Breakthrough Starshot program.

March 2016: NASA Phase II proposal for DEEP-IN submitted. Renamed Starlight subsequently. Accepted and funded by NASA.

March 2016: After multiple reviews of Lubin “roadmap” paper by independent scientists, Breakthrough Initiatives endorses idea of DE driven relativistic flight.

April 12, 2016: Public release of Breakthrough Starshot. Hawking endorses idea at NY public announcement.

October 4, 2018

2015 TG387: A New Inner Oort Object & Its Implications

Whether or not there is an undiscovered planet lurking in the farthest reaches of the Solar System, the search for unknown dwarf planets and other objects continues. Extreme Trans-Neptunian objects (ETNOs) are of particular interest. The closest they come to the Sun is well beyond the orbit of Neptune, with the result that they have little gravitational interaction with the giant planets. Consider them as gravitational probes of what lies beyond the Kuiper Belt.

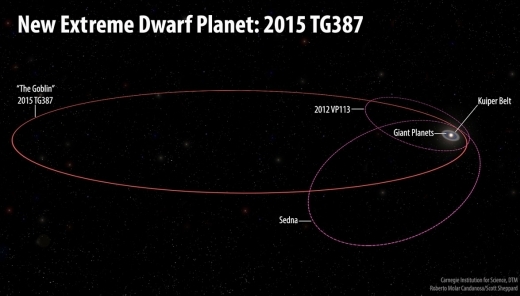

Among the population of ETNOs is the most distant subclass, known as Inner Oort Cloud objects (IOCs), of which we now have three. Added to Sedna and 2012 VP113 comes 2015 TG387, discovered by Scott Sheppard (Carnegie Institution for Science), Chad Trujillo (Northern Arizona University) and David Tholen (University of Hawaiʻi). The object was first observed in 2015, leading to several years of follow-up observations necessary to obtain a good orbital fit.

2015 TG387 is a challenging catch: it was discovered at about 80 AU from the Sun but normally lies at far greater distances:

“We think there could be thousands of small bodies like 2015 TG387 out on the Solar System’s fringes, but their distance makes finding them very difficult,” Tholen said. “Currently we would only detect 2015 TG387 when it is near its closest approach to the sun. For some 99 percent of its 40,000-year orbit, it would be too faint to see, even with today’s largest telescopes.”

Image: The orbits of the new extreme dwarf planet 2015 TG387 and its fellow Inner Oort Cloud objects 2012 VP113 and Sedna, as compared with the rest of the Solar System. 2015 TG387 was nicknamed “The Goblin” by its discoverers, since its provisional designation contains “TG”, and the object was first seen around Halloween. Its orbit has a larger semi-major axis than both 2012 VP113 and Sedna, so it travels much farther from the Sun, out to 2300 AU. Credit: Roberto Molar Candanosa and Scott Sheppard / Carnegie Institution for Science.

Perihelion, the closest this object gets to the Sun, is now calculated at roughly 65 AU. 2015 TG387 thus has the third most distant perihelion known, after 2012 VP113 and Sedna (80 and 76 AU respectively), but it has a larger orbital semi-major axis, so its orbit carries it much farther from the Sun than either, out to about 2,300 AU. With perihelion at 65 AU and aphelion near 2,300 AU, we are dealing with an extremely elongated orbit. At these distances, Inner Oort Cloud objects are all but isolated from the bulk of the Solar System’s mass.
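The orbital figures for 2015 TG387 hang together through Kepler's laws. Given perihelion q ≈ 65 AU and aphelion Q ≈ 2,300 AU, the semi-major axis is (q + Q)/2, and for a body orbiting the Sun the period in years is a^1.5 with a in AU. The sketch also uses Kepler's equation to estimate how much of each orbit the object spends inside 80 AU; treating its 80 AU discovery distance as a rough detectability limit is an assumption made for illustration, not the discoverers' survey limit.

```python
import math

def orbit_from_extremes(q_au: float, Q_au: float) -> tuple[float, float, float]:
    """Semi-major axis (AU), eccentricity, and period (years) from perihelion q and aphelion Q.

    a = (q + Q) / 2 and e = (Q - q) / (Q + q); for a small body orbiting the
    Sun, Kepler's third law gives P [yr] = a [AU] ** 1.5.
    """
    a = (q_au + Q_au) / 2.0
    e = (Q_au - q_au) / (Q_au + q_au)
    return a, e, a ** 1.5

def fraction_of_orbit_inside(r_au: float, a: float, e: float) -> float:
    """Fraction of each orbit spent at heliocentric distance <= r_au.

    Solves r = a(1 - e cos E) for the eccentric anomaly E at which the body
    crosses r_au, then uses Kepler's equation M = E - e sin E. The body is
    inside r_au for mean anomalies in [-M, M], i.e. 2M out of 2*pi.
    """
    if r_au <= a * (1.0 - e):   # never gets that close
        return 0.0
    if r_au >= a * (1.0 + e):   # always inside
        return 1.0
    E = math.acos((1.0 - r_au / a) / e)
    M = E - e * math.sin(E)
    return M / math.pi

a, e, period = orbit_from_extremes(65.0, 2300.0)
# ASSUMPTION: 80 AU used as a rough detectability limit, for illustration only.
inside = fraction_of_orbit_inside(80.0, a, e)

print(f"a = {a:.0f} AU, e = {e:.3f}, P = {period:,.0f} yr")
print(f"time within 80 AU: {inside:.1%} of each orbit")
```

The period comes out near 40,000 years, matching Tholen's figure, and the object spends well under 1 percent of each orbit inside 80 AU, in keeping with his estimate that it is too faint to see for some 99 percent of its orbit.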

You may recall that it was Sheppard and Trujillo who discovered 2012 VP113 as well, triggering a flurry of investigation into the orbits of such worlds. The gravitational story is made clear by the fact that Sedna, 2012 VP113 and 2015 TG387 all approach perihelion in the same part of the sky, as do most known Extreme Trans-Neptunian objects, an indication that their orbits are being shaped by something in the outer system. Thus the continuing interest in so-called Planet X, a hypothetical world whose possible orbits were recently modeled by Trujillo and Nathan Kaib (University of Oklahoma).

The simulations show the effect of different Planet X orbits on Extreme Trans-Neptunian objects. In 2016, drawing on previous work from Sheppard and Trujillo, Konstantin Batygin and Michael Brown examined the orbital constraints for a super-Earth on an elliptical orbit several hundred AU from the Sun. Using those constraints in their simulations, Trujillo and Kaib were able to show that several possible planetary orbits would keep the other Extreme Trans-Neptunian objects stable. Let’s go to the paper to see how 2015 TG387 fits into the picture:

…Trujillo (2018) ran thousands of simulations of a possible distant planet using the orbital constraints put on this planet by Batygin and Brown (2016a). The simulations varied the orbital parameters of the planet to identify orbits where known ETNOs were most stable. Trujillo (2018) found several planet orbits that would keep most of the ETNOs stable for the age of the solar system.

So the simulated planet orbits had already been tested against Sedna, 2012 VP113 and the other ETNOs. The next step was obvious:

To see if 2015 TG387 would also be stable to a distant planet when the other ETNOs are stable, we used several of the best planet parameters found by Trujillo (2018). In most simulations involving a distant planet, we found 2015 TG387 is stable for the age of the solar system when the other ETNOs are stable. This is further evidence the planet exists, as 2015 TG387 was not used in the original Trujillo (2018) analysis, but appears to behave similarly as the other ETNOs to a possible very distant massive planet on an eccentric orbit.

Image: Movie of the discovery images of 2015 TG387. Two images were taken about 3 hours apart on October 13, 2015 at the Subaru Telescope on Maunakea, Hawaiʻi. 2015 TG387 can be seen moving between the images near the center, while the more distant background stars and galaxies remain stationary. Credits: Dave Tholen, Chad Trujillo, Scott Sheppard.

We know very little about 2015 TG387 itself, though the paper, assuming a moderate albedo, finds a likely diameter of about 300 kilometers. The stability of this small object’s orbit, which stays aligned and stable in relation to the eccentric orbit of the hypothesized Planet X, supports the existence of the planet, especially since the orbit of 2015 TG387 was determined after the Planet X orbital simulations had been run. Even though the object played no part in setting up those simulations, notes the paper in conclusion, “…2015 TG387 reacts with the planet very similarly to the other known IOCs and ETNOs.”
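The diameter estimate depends on the assumed albedo. It comes from the standard relation between absolute magnitude H, geometric albedo p and diameter: D = 1329 km / sqrt(p) × 10^(-H/5). The post gives neither H nor a numeric albedo. The sketch below therefore assumes p = 0.15 as a “moderate” value, backs out the H that would yield 300 km, and shows how the diameter shifts for other albedos. All inputs besides the 300 km figure are hypothetical.

```python
import math

def diameter_km(H: float, albedo: float) -> float:
    """Standard small-body relation: D = 1329 km / sqrt(p) * 10**(-H/5)."""
    return 1329.0 / math.sqrt(albedo) * 10 ** (-H / 5.0)

def absolute_magnitude(diameter: float, albedo: float) -> float:
    """Inverse of diameter_km: H = 5 * log10(1329 / (D * sqrt(p)))."""
    return 5.0 * math.log10(1329.0 / (diameter * math.sqrt(albedo)))

# Hypothetical anchor: 300 km at an assumed "moderate" albedo of 0.15.
H = absolute_magnitude(300.0, 0.15)
print(f"implied H ~ {H:.1f}")

# Same brightness (same H), different assumed surface reflectivity.
for p in (0.05, 0.10, 0.15, 0.25):
    print(f"albedo {p:.2f}: D ~ {diameter_km(H, p):.0f} km")
```

Under this assumption the implied H is about 5.3. Because D scales as 1/sqrt(p), a darker surface (p = 0.05) would push the same brightness to roughly 520 km, and a brighter one (p = 0.25) down to about 230 km.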

Another interesting bit: There is a suggestion that ETNOs in retrograde orbit are stable. Given this, the authors do not rule out the idea that the planet itself might be on a retrograde orbit.

The paper is Sheppard et al., “A New High Perihelion Inner Oort Cloud Object,” submitted to The Astronomical Journal (preprint).

October 3, 2018

Kepler 1625b: Orbited by an Exomoon?

8,000 light years from Earth in the constellation Cygnus, the star designated Kepler-1625 may be harboring a planet with a moon. The planet, Kepler-1625b, is a gas giant several times the mass of Jupiter. What David Kipping (Columbia University) and graduate student Alex Teachey have found is compelling though not definitive evidence of a moon orbiting the confirmed planet.

If we do indeed have a moon here, and upcoming work should be able to resolve the question, we are dealing, at least in part, with the intriguing scenario many scientists (and science fiction writers) have speculated about. Although a gas giant, Kepler-1625b orbits close to or within the habitable zone of its star. A large, rocky moon around it could be a venue for life, but the moon posited for this planet doesn’t qualify. It’s quite large (roughly the size of Neptune) and, like its putative parent, a gaseous body. If we can confirm the first exomoon, we’ll have made a major advance, but the quest for habitable exomoons does not begin around Kepler-1625b.

Image: Columbia’s Alex Teachey, lead author of the paper on the detection of a potential exomoon. Credit: Columbia University.

None of this should take away from the importance of the detection, for exploring moons around exoplanets will doubtless teach us a great deal about how such moons form. Unlike the Earth-Moon system, or the Pluto/Charon binary in our own Solar System, Kepler-1625b’s candidate moon would not have formed through a collision between two rocky bodies early in the history of planetary development. We’d like to learn how it got there, if indeed it is there. Far larger than any Solar System moon, it is estimated to be but 1.5% of its companion’s mass.

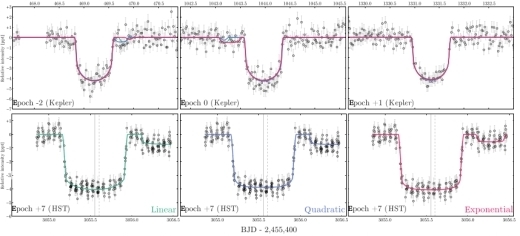

The methods David Kipping has long espoused through the Hunt for Exomoons with Kepler (HEK) project have come to fruition here. Working with data on 284 planets identified through the Kepler mission, each of them with orbital periods greater than 30 days, Kipping and Teachey found interesting anomalies at Kepler-1625b, where Kepler had recorded three transits. The lightcurves produced by these transits across the face of the star as seen from the spacecraft showed deviations that demanded explanation.

Image: Exomoon hunter David Kipping. Credit: Columbia University.

Their interest heightened, the researchers requested and were awarded 40 hours of time on the Hubble Space Telescope, whose larger aperture could produce data four times more precise than that available from Kepler. On October 28 and 29 of 2017, the scientists took data through 26 Hubble orbits. Examining the lightcurve of a 19-hour-long transit of Kepler-1625b, they noted a second, much smaller decrease in the star’s light some 3.5 hours later.

Kipping refers to it as being consistent with “…a moon trailing the planet like a dog following its owner on a leash,” but adds that the Hubble observation window closed before the complete transit of the candidate moon could be measured. The paper addresses this second dip:

The most compelling piece of evidence for an exomoon would be an exomoon transit, in addition to the observed TTV [transit timing variation]. If Kepler-1625b’s early transit were indeed due to an exomoon, then we should expect the moon to transit late on the opposite side of the barycenter. The previously mentioned existence of an apparent flux decrease toward the end of our observations is therefore where we would expect it to be under this hypothesis. Although we have established that this dip is most likely astrophysical, we have yet to discuss its significance or its compatibility with a self-consistent moon model.

In and of itself, this is exciting information, but as noted above, we also learn in this morning’s paper in Science Advances that transit timing variations are apparent here. The planet itself began its transit 77.8 minutes earlier than predicted. One way to account for this is by the pull of a moon on the planet, resulting in their both orbiting a common center of gravity and thus throwing the transit calculation (based on an unaccompanied planet) off. What Kipping and Teachey will need to eliminate is the possibility that a second planet, yet undetected, could have caused the timing variation. There is thus far no evidence from Kepler of such a planet.
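The timing argument can be made concrete with a deliberately simplified model. This is a minimal sketch. It assumes the moon’s whole separation lies along the planet’s direction of motion at transit, and it ignores the moon’s own motion during the event. Under those assumptions the planet sits a distance d·m_moon/(m_planet + m_moon) from the barycenter, and that offset divided by the planet’s orbital speed gives the timing shift. Only the 77.8-minute shift and the ~1.5% mass ratio come from the text. The 30 km/s orbital speed is a hypothetical placeholder.

```python
def planet_offset_km(moon_sep_km: float, mass_ratio: float) -> float:
    """Planet's distance from the planet-moon barycenter; mass_ratio = m_moon / m_planet."""
    return moon_sep_km * mass_ratio / (1.0 + mass_ratio)

def moon_sep_for_offset_km(offset_km: float, mass_ratio: float) -> float:
    """Inverse of planet_offset_km: moon separation needed for a given planet offset."""
    return offset_km * (1.0 + mass_ratio) / mass_ratio

def timing_shift_min(offset_km: float, planet_speed_kms: float) -> float:
    """Transit timing shift if the whole offset lies along the direction of motion."""
    return offset_km / planet_speed_kms / 60.0

TTV_MIN = 77.8            # early transit reported in the post
MASS_RATIO = 0.015        # moon ~1.5% of the planet's mass (from the post)
PLANET_SPEED_KMS = 30.0   # hypothetical orbital speed of the barycenter

offset = TTV_MIN * 60.0 * PLANET_SPEED_KMS
sep = moon_sep_for_offset_km(offset, MASS_RATIO)
print(f"planet offset from barycenter ~ {offset:,.0f} km")
print(f"implied moon separation ~ {sep:,.0f} km")
```

Under these assumptions a 77.8-minute shift corresponds to the planet sitting about 140,000 km from the barycenter, which in turn requires a moon separation of roughly 9.5 million km. Real TTV modeling fits the full orbital geometry, so treat these numbers only as a feel for scale.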

“A companion moon is the simplest and most natural explanation for the second dip in the light curve and the orbit-timing deviation,” said lead author Teachey. “It was a shocking moment to see that light curve, my heart started beating a little faster and I just kept looking at that signature. But we knew our job was to keep a level head testing every conceivable way in which the data could be tricking us until we were left with no other explanation.”

Image: This is Figure 4 from the paper. Caption: Moon solutions. The three transits in Kepler (top) and the October 2017 transit observed with HST (bottom) for the three trend model solutions. The three colored lines show the corresponding trend model solutions for model M, our favored transit model. The shape of the HST transit differs from that of the Kepler transits owing to limb darkening differences between the bandpasses. Credit: David Kipping, Alex Teachey.

One problem with exomoon hunting is that the ideal candidate planets are those in wide orbits, but this makes for long periods between transits. Even so, the number of large planets in orbits farther from their star than 1 AU is growing, and such worlds should be useful targets for the upcoming James Webb Space Telescope. Although we still have to confirm Kepler-1625b’s moon, such a confirmation could prove only the beginning of a growing exomoon census.
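Wide orbits mean few transits because of Kepler’s third law, P = sqrt(a³/M★) in years, with a in AU and M★ in solar masses. This is a minimal sketch, assuming a Sun-like star and a continuous observing baseline of about four years. That is roughly the span of Kepler’s primary mission, but treat the exact value as an assumption. The sketch counts the most transits that could fall inside that window.

```python
import math

def period_days(a_au: float, stellar_mass_msun: float = 1.0) -> float:
    """Kepler's third law: P[yr] = sqrt(a[AU]**3 / M[Msun]), converted to days."""
    return 365.25 * math.sqrt(a_au ** 3 / stellar_mass_msun)

def max_transits(a_au: float, baseline_days: float, stellar_mass_msun: float = 1.0) -> int:
    """Most transits a continuous baseline can contain (first one at the window's start)."""
    p = period_days(a_au, stellar_mass_msun)
    return int(baseline_days // p) + 1

BASELINE_DAYS = 1460.0  # assumed ~4-year baseline
for a in (0.5, 1.0, 2.0, 3.0):
    print(f"a = {a} AU: P = {period_days(a):.0f} d, at most {max_transits(a, BASELINE_DAYS)} transits")
```

A planet at 1 AU around a solar-mass star gets at most four transits in 1,460 days, and one at 3 AU gets at most one. This is why long-period candidates accumulate data so slowly.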

We’ll know more as we make more detections, but for now, I think Kipping and Teachey’s caution is commendable. Noting that confirmation will involve long scrutiny, observations and skepticism from within the community, they point out:

…it is difficult to assign a precise probability to the reality of Kepler-1625b-i. Formally, the preference for the moon model over the planet-only model is very high, with a Bayes factor exceeding 400,000. On the other hand, this is a complicated and involved analysis where a minor effect unaccounted for, or an anomalous artifact, could potentially change our interpretation. In short, it is the unknown unknowns that we cannot quantify. These reservations exist because this would be a first-of-its-kind detection — the first exomoon.
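A Bayes factor K measures how much better one model explains the data than another. Turning it into a probability also requires prior odds. This is a minimal sketch, assuming the moon and planet-only models are the only two candidates, which is exactly the assumption the “unknown unknowns” caveat warns about. It shows how K = 400,000 translates into a posterior probability under different, purely illustrative, prior odds.

```python
import math

def posterior_probability(bayes_factor: float, prior_odds: float = 1.0) -> float:
    """P(model 1 | data) from K = p(D|M1)/p(D|M0) and prior odds P(M1)/P(M0).

    Valid only if M1 and M0 are the only models under consideration.
    """
    posterior_odds = bayes_factor * prior_odds
    return posterior_odds / (1.0 + posterior_odds)

K = 400_000
print(f"ln K = {math.log(K):.1f}")
for prior_odds in (1.0, 1e-3, 1e-6):  # illustrative priors, not from the paper
    p = posterior_probability(K, prior_odds)
    print(f"prior odds {prior_odds:g}: posterior P(moon) = {p:.7f}")
```

With even prior odds the moon model’s formal posterior probability is about 0.9999975. A skeptic who starts at one-in-a-million odds would still end up near 0.29. Neither number accounts for an effect left out of both models, which is why the authors decline to quote a precise probability.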

A final thought: The paper points out that the original Kepler data that flagged Kepler-1625b as interesting in exomoon terms actually showed a stronger signal than the Kepler data Kipping and Teachey used for this work. They are now working with the most recent data release, which had to be re-examined for every factor that could affect the analysis, and it turns out that this release “only modestly favors that hypothesis when treated in isolation.” It is the HST data that do the most to strengthen the case for an exomoon. The authors believe this shows the need to pursue similar Kepler planets for exomoons with HST and other facilities, even in cases where the Kepler data themselves do not show a large exomoon-like signature.

The paper is Teachey & Kipping, “Evidence for a large exomoon orbiting Kepler-1625b,” Science Advances 3 October 2018 (complete citation when I have it).

October 2, 2018

Into the Cosmic Haystack

A new paper from Jason Wright (Penn State) and colleagues Shubham Kanodia and Emily Lubar deals with SETI and the ‘parameter space’ within which we search, with interesting implications. For the researchers show that despite searching for decades through a variety of projects and surveys, SETI is in early days indeed. Those who would draw conclusions about its lack of success to this point fail to understand the true dimensions of the challenge.

But before getting into the meat of the paper, let’s talk about a few items in its introduction. For Wright and team contextualize SETI in relation to broader statements about our place in the cosmos. We can ask questions about what we see and what we don’t see, but we have to avoid being too facile in our interpretation of what some consider to be an ‘eerie silence’ (the reference is to a wonderful book by Paul Davies of the same name).

Image: Penn State’s Jason Wright. Credit: PSU.

Back in the 1970s, Michael Hart argued that even with very slow interstellar travel, the Milky Way should have been well settled by now. If, that is, there were civilizations out there to settle it. Frank Tipler made the same point, deducing from the lack of evidence that SETI itself was pointless, because if other civilizations existed, they would have already shown up.
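Hart’s argument rests on simple timescale arithmetic. This is a minimal sketch, and every input is hypothetical: a 100,000 light-year galaxy, ships at 1% of light speed, 10 light-year hops, and a 1,000-year pause to build up each new colony before it launches the next. It estimates how long a settlement wave would take to cross the Milky Way.

```python
def settlement_time_yr(galaxy_diameter_ly: float, speed_c: float,
                       hop_ly: float, pause_yr: float) -> float:
    """Time for a settlement front to cross the galaxy, hop by hop.

    Travel time in years is distance in light years divided by speed as a
    fraction of c; each hop adds a pause while the new colony matures.
    """
    n_hops = galaxy_diameter_ly / hop_ly
    travel_yr = galaxy_diameter_ly / speed_c
    return travel_yr + n_hops * pause_yr

# All parameters are illustrative assumptions.
t = settlement_time_yr(100_000, 0.01, 10, 1_000)
print(f"settlement crossing time ~ {t:.1e} years")
```

Those assumptions give about 20 million years, a small fraction of a galactic age measured in billions of years. This is why Hart and Tipler found the absence of visitors so telling.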

In their new paper, Wright and team take a different tack, looking at the same argument as applied to more terrestrial concerns. Travel widely (Google Earth will do) and you’ll notice that most of the places you select at random show no obvious signs of humans or, in a great many cases, our technology. Why is this? After all, it takes but a small amount of time to fly across the globe when compared to the age of the technology that makes this possible. Shouldn’t we, then, expect that by now, most parts of the Earth’s surface should bear signs of our presence?

It’s a canny argument, in particular because we are the only technological species we know of, and the Hart-style argument fails for us. If we accept that the Earth is home to a technological civilization even though huge swaths of its surface show no evidence of us, then perhaps the same can be said for the galaxy. Or, for that matter, the Solar System, so much of which we have yet to explore. Could there be, for example, a billion-year-old Bracewell probe awaiting activation among the Trans-Neptunian objects?

Maybe, then, there is no such thing as an ‘eerie silence,’ or at least not one whose existence has been shown to be plausible. The matter seems theoretical until you realize it impacts practical concerns like SETI funding. If we assume that extraterrestrial civilizations do not exist because they have not visited us, then SETI is a wasteful exercise, its money better spent elsewhere.

By the same token, some argue that because we have not yet had a SETI detection of an alien culture, we can rule out their existence, at least anywhere near us in the galaxy. What Wright wants to do is show that the conclusion is false, because given the size of the search space, SETI has barely begun. We need, then, to examine just how much of a search we have actually been able to mount. What interstellar beacons, for example, might we have missed because we lacked the resources to keep a constant eye on the same patch of sky?

The Wright paper is about the parameter space within which we hope to find so-called ‘technosignatures.’ Jill Tarter has described a ‘cosmic haystack’ existing in three spatial dimensions, one temporal dimension, two polarization dimensions, central frequency, sensitivity and modulation — a haystack, then, of nine dimensions. Wright’s team likes this approach:

This “needle in a haystack” metaphor is especially appropriate in a SETI context because it emphasizes the vastness of the space to be searched, and it nicely captures how we seek an obvious product of intelligence and technology amidst a much larger set of purely natural products. SETI optimists hope that there are many alien needles to be found, presumably reducing the time to find the first one. Note that in this metaphor the needles are the detectable signatures of alien technology, meaning that a single alien species might be represented by many needles.

Image: Coming to terms with the search space as SETI proceeds, in this case at Green Bank, WV. Credit: Walter Bibikow/JAI/Corbis/Green Bank Observatory.

The Wright paper shows how our search haystacks can be defined even as we calculate the fraction of them already examined for our hypothetical needles. A quantitative, eight-dimensional model is developed to make the calculation, with a number of differences between the model haystack and the one developed by Tarter, and factoring in recent SETI searches like Breakthrough Listen’s ongoing work. The assumption here, necessary for the calculation, is that SETI surveys have similar search strategies and sensitivities.
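One way to picture a haystack fraction is as the volume of a box in a normalized, multi-dimensional search space. This is a simplified sketch, not the paper’s actual formulation. Each survey is treated as an axis-aligned box on eight axes scaled to [0, 1]. Its volume is the product of its per-axis coverage, and non-overlapping surveys simply add. The axis scalings and the two surveys below are hypothetical.

```python
from math import prod

def box_volume(box: list[tuple[float, float]]) -> float:
    """Volume of a survey box: product of its (hi - lo) coverage on each normalized axis."""
    return prod(hi - lo for lo, hi in box)

def haystack_fraction(surveys: list[list[tuple[float, float]]]) -> float:
    """Summed volume of the surveys; assumes they do not overlap (overlaps would be double-counted)."""
    return sum(box_volume(b) for b in surveys)

# Two hypothetical, disjoint surveys on eight normalized axes.
survey_a = [(0.0, 0.01)] * 8
survey_b = [(0.5, 0.6)] + [(0.0, 0.001)] * 7
print(f"haystack fraction ~ {haystack_fraction([survey_a, survey_b]):.3e}")
```

The product is what makes the haystack so hard to dent. Covering 1% of the range on each of eight axes fills only 10⁻¹⁶ of the space.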

This assumption allows the calculation to proceed, and it is given support when we learn that its results align fairly well with the previous calculation Jill Tarter made in a 2010 paper. Thus Wright: “…our current search completeness is extremely low, akin to having searched something like a large hot tub or small swimming pool’s worth of water out of all of Earth’s oceans.”

And then Tarter, whose result for the size of our search is a bit smaller. Let me just quote her (from an NPR interview in 2012) on the point:

“We’ve hardly begun to search… The space that we’re looking through is nine-dimensional. If you build a mathematical model, the amount of searching that we’ve done in 50 years is equivalent to scooping one 8-ounce glass out of the Earth’s ocean, looking and seeing if you caught a fish. No, no fish in that glass? Well, I don’t think you’re going to conclude that there are no fish in the ocean. You just haven’t searched very well yet. That’s where we are.”
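The two metaphors can be turned into rough fractions. This is a back-of-the-envelope sketch, assuming an ocean volume of about 1.335 billion cubic kilometers and illustrative volumes for a hot tub (1.5 m³) and a small pool (50 m³). Only the 8-ounce glass comes straight from Tarter’s quote.

```python
OCEAN_VOLUME_M3 = 1.335e9 * 1e9   # ~1.335 billion km^3; 1 km^3 = 1e9 m^3
GLASS_M3 = 8 * 29.5735e-6         # 8 US fluid ounces (1 fl oz ~ 29.5735 mL)
HOT_TUB_M3 = 1.5                  # hypothetical hot-tub volume
SMALL_POOL_M3 = 50.0              # hypothetical small-pool volume

fractions = {
    "glass": GLASS_M3 / OCEAN_VOLUME_M3,
    "hot tub": HOT_TUB_M3 / OCEAN_VOLUME_M3,
    "small pool": SMALL_POOL_M3 / OCEAN_VOLUME_M3,
}
for name, frac in fractions.items():
    print(f"{name}: {frac:.1e} of the ocean")
```

On these assumptions Tarter’s glass is about 2 × 10⁻²² of the ocean, while the hot tub and small pool land near 10⁻¹⁸ and 4 × 10⁻¹⁷. The spread is a reminder of how strongly such comparisons depend on the volumes chosen.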

This being the case, the idea that a lack of success for SETI to date is a compelling reason to abandon the search is shown for what it is, a misreading of the enormity of the search space. SETI cannot be said to have failed. But this leads to a different challenge. Wright again:

We should be careful, however, not to let this result swing the pendulum of public perceptions of SETI too far the other way by suggesting that the SETI haystack is so large that we can never hope to find a needle. The whole haystack need only be searched if one needs to prove that there are zero needles—because technological life might spread through the Galaxy, or because technological species might arise independently in many places, we might expect there to be a great number of needles to be found.

The paper also points out that its haystack model includes regions of interstellar space between the stars, where there is no particular reason to assume transmitters. Transmissions from nearby stars are only a subset of the haystack, and searches aimed at them would rank higher in any calculation of detection likelihood.

So we keep looking, wary of drawing conclusions too swiftly when we have searched such a small part of the available parameter space, and we look toward the kind of searches that can accelerate the process. These would include “…surveys with large bandwidth, wide fields of view, long exposures, repeat visits, and good sensitivity,” according to the paper. The ultimate survey? All sky, all the time, the kind of all-out stare that would flag repeating signals that today could only register as one-off phenomena, and who knows what other data of interest not just to SETI but to the entire community of deep-sky astronomers and astrophysicists.

The paper is Wright et al., “How Much SETI Has Been Done? Finding Needles in the n-Dimensional Cosmic Haystack,” accepted at The Astronomical Journal (preprint).

October 1, 2018

Trillion Planet Survey Targets M-31

Can rapidly advancing laser technology and optics augment the way we do SETI? At the University of California, Santa Barbara, Phil Lubin believes they can, and he’s behind a project called the Trillion Planet Survey to put the idea into practice for the benefit of students. As an incentive for looking into a career in physics, an entire galaxy may be just the ticket.

For the target is the nearest galaxy to our own. The Trillion Planet Survey will use a suite of meter-class telescopes to search for continuous wave (CW) laser beacons from M31, the Andromeda galaxy. But TPS is more than a student exercise. The work builds on Lubin’s 2016 paper called “The Search for Directed Intelligence,” which makes the case that laser technology foreseen today could be seen across the universe. And that issue deserves further comment.

Centauri Dreams readers are familiar with Lubin’s work with DE-STAR (Directed Energy Solar Targeting of Asteroids and exploRation), a scalable technology that involves phased arrays of lasers. DE-STAR installations could be used for purposes ranging from asteroid deflection (DE-STAR 2-3) to propelling an interstellar spacecraft to a substantial fraction of the speed of light (DE-STAR 3-4). The work led to NIAC funding (NASA Starlight) in 2015 examining beamed energy systems for propulsion in the context of miniature probes using wafer-scale photonics, and it is also the basis for Breakthrough Starshot.

Image: UC-Santa Barbara physicist Philip Lubin. Credit: Paul Wellman/Santa Barbara Independent.

A bit more background here: Lubin’s Phase I study “A Roadmap to Interstellar Flight” is available online. It was followed by Phase II work titled “Directed Energy Propulsion for Interstellar Exploration (DEEP-IN).” Lubin’s discussions with Pete Worden on these ideas led to talks with Yuri Milner in late 2015. The Breakthrough Starshot program draws on the DE-STAR work, particularly in its reliance on miniaturized payloads and, of course, a laser array for beamed propulsion, the latter an idea that had largely been associated with large sails rather than chip-sized payloads. Mason Peck and team’s work on ‘sprites’ is also a huge factor.

But let’s get back to the Trillion Planet Survey; if I start talking about the history of beamed propulsion concepts, I could spend days, and anyway, Jim Benford has already undertaken the task in these pages in his A Photon Beam Propulsion Timeline. What occupies us this morning is the range of ideas that play around the edges of beamed propulsion, one of them being the beam itself, and how it might be detected at substantial distances. Lubin’s DE-STAR 4, capable of hitting an asteroid with 1.4 megatons of energy per day, would stand out in many a sky.
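The figure of 1.4 megatons per day is easier to compare with laser specifications when expressed as a continuous power. This is a quick conversion, assuming the conventional 4.184 × 10¹⁵ J per megaton of TNT and steady output over the whole day.

```python
MEGATON_TNT_J = 4.184e15   # conventional energy of 1 megaton of TNT, in joules
SECONDS_PER_DAY = 86_400

def megatons_per_day_to_watts(mt_per_day: float) -> float:
    """Average power (W) for a given energy delivery rate in megatons of TNT per day."""
    return mt_per_day * MEGATON_TNT_J / SECONDS_PER_DAY

watts = megatons_per_day_to_watts(1.4)
print(f"{watts / 1e9:.0f} GW")
```

That works out to roughly 68 gigawatts of delivered power.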

In fact, according to Lubin’s calculations, such a system — if directed at another star — would be seen in systems as distant as 1000 light years as, briefly, the brightest star in the sky. Suddenly we’re talking SETI, because if we can build such systems in the foreseeable future, so can the kind of advanced civilizations we may one day discover among the stars. Indeed, directed energy systems might announce themselves with remarkable intensity.

Image: M31, the Andromeda Galaxy, the target of the largely student-led Trillion Planet Survey. Credit & Copyright: Robert Gendler.

Lubin makes this point in his 2016 paper, in which he states “… even modest directed energy systems can be ‘seen’ as the brightest objects in the universe within a narrow laser linewidth.” Amplifying on this from the paper, he shows that stellar light in a narrow bandwidth would be very small in comparison to the beamed energy source:

In case 1) we treat the Sun as a prototype for a distant star, one that is unresolved in our telescope (due to seeing or diffraction limits) but one where the stellar light ends up in ~ one pixel of our detector. Clearly the laser is vastly brighter in this sense. Indeed for the narrower linewidth the laser is much brighter than an entire galaxy in this sense. For very narrow linewidth lasers (~ 1 Hz) the laser can be nearly as bright as the sum of all stars in the universe within the linewidth. Even modest directed energy systems can stand out as the brightest objects in the universe within the laser linewidth.
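The narrow-linewidth argument can be checked to order of magnitude. This is a rough sketch, and all inputs are assumed rather than taken from Lubin’s paper:

- about 70 GW of laser power, close to the 1.4 megatons per day figure;
- a 10 km diffraction-limited array at 1.06 μm;
- a 1 Hz linewidth;
- the Sun’s luminosity spread flat over a bandwidth of about c/λ.

The beam’s gain, (πD/λ)², converts its power into the equivalent isotropic power that a distant observer inside the beam would infer.

```python
import math

C = 2.998e8          # speed of light, m/s
L_SUN_W = 3.828e26   # solar luminosity, W

def beam_gain(aperture_m: float, wavelength_m: float) -> float:
    """Gain of a uniformly illuminated, diffraction-limited circular aperture: (pi D / lambda)^2."""
    return (math.pi * aperture_m / wavelength_m) ** 2

def laser_to_star_spectral_ratio(power_w: float, aperture_m: float, wavelength_m: float,
                                 linewidth_hz: float, star_lum_w: float = L_SUN_W) -> float:
    """Laser equivalent isotropic power per Hz divided by a star's power per Hz.

    The star's output is crudely assumed to be spread evenly over ~c/lambda Hz.
    """
    laser_per_hz = power_w * beam_gain(aperture_m, wavelength_m) / linewidth_hz
    star_per_hz = star_lum_w / (C / wavelength_m)
    return laser_per_hz / star_per_hz

# Hypothetical DE-STAR-4-like parameters.
ratio = laser_to_star_spectral_ratio(7e10, 1.0e4, 1.06e-6, 1.0)
print(f"laser / Sun per unit bandwidth ~ {ratio:.1e}")
```

With these numbers the beam outshines the Sun per unit bandwidth by a factor of a few times 10¹⁹. That holds only for observers the beam is actually pointed at.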

And again (and note here that the reference to ‘class 4’ is not to an extended Kardashev scale, but rather to a civilization transmitting at DE-STAR 4 levels, as defined in the paper):

As can be seen at the distance of the typical Kepler planets (~ 1 kly distant) a class 4 civilization… appears as the equivalent of a mag~0 star (ie the brightest star in the Earth’s nighttime sky), at 10 kly it would appear as about mag ~ 5, while the same civilization at the distance of the nearest large galaxy (Andromeda) would appear as the equivalent of a m~17 star. The former is easily seen with the naked eye (assuming the wavelength is in our detection band) while the latter is easily seen in a modest consumer level telescope.
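The magnitudes in the quote follow from the inverse-square law. A source of fixed power dims by 5 log₁₀(d₂/d₁) magnitudes as its distance grows from d₁ to d₂. This is a minimal sketch that takes the quoted mag 0 at 1,000 light years as the reference, assumes about 2.5 million light years to Andromeda, and ignores interstellar extinction.

```python
import math

def apparent_mag(ref_mag: float, ref_dist: float, dist: float) -> float:
    """Apparent magnitude of a fixed-power source moved from ref_dist to dist (same units)."""
    return ref_mag + 5.0 * math.log10(dist / ref_dist)

# Reference: mag ~0 at ~1 kly (from the quote). Andromeda at ~2,500 kly is an assumption.
for d_kly in (1, 10, 2_500):
    print(f"{d_kly:>5} kly: mag ~ {apparent_mag(0.0, 1.0, d_kly):.1f}")
```

This reproduces the quoted values: mag 5 at 10,000 light years and about mag 17 at Andromeda’s distance.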

Out of this emerges the idea that a powerful civilization could be detected with modest ground-based telescopes if it happened to be transmitting in our direction when we were observing. Hence the Trillion Planet Survey, which looks at using small telescopes such as those in the Las Cumbres Observatory’s robotic global network to make such a detection.

With M31 as the target, the students in the Trillion Planet Survey are conducting a survey of the galaxy as TPS gets its software pipeline into gear. Developed by Emory University student Andrew Stewart, the pipeline processes images under a set of assumptions. Says Stewart:

“First and foremost, we are assuming there is a civilization out there of similar or higher class than ours trying to broadcast their presence using an optical beam, perhaps of the ‘directed energy’ arrayed-type currently being developed here on Earth. Second, we assume the transmission wavelength of this beam to be one that we can detect. Lastly, we assume that this beacon has been left on long enough for the light to be detected by us. If these requirements are met and the extraterrestrial intelligence’s beam power and diameter are consistent with an Earth-type civilization class, our system will detect this signal.”

Screening transient signals from its M31 images, the team will then submit them to further processing in the software pipeline to eliminate false positives. The TPS website offers links to background information, including Lubin’s 2016 paper, but as of yet has little about the actual image processing, so I’ll simply quote from a UCSB news release on the matter:

“We’re in the process of surveying (Andromeda) right now and getting what’s called ‘the pipeline’ up and running,” said researcher Alex Polanski, a UC Santa Barbara undergraduate in Lubin’s group. A set of photos taken by the telescopes, each of which takes a 1/30th slice of Andromeda, will be knit together to create a single image, he explained. That one photograph will then be compared to a more pristine image in which there are no known transient signals — interfering signals from, say, satellites or spacecraft — in addition to the optical signals emanating from the stellar systems themselves. The survey photo would be expected to have the same signal values as the pristine “control” photo, leading to a difference of zero. But a difference greater than zero could indicate a transient signal source, Polanski explained. Those transient signals would then be further processed in the software pipeline developed by Stewart to kick out false positives. In the future the team plans to use simultaneous multiple color imaging to help remove false positives as well.
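The differencing step Polanski describes can be sketched in a few lines. This is a minimal illustration, not the TPS pipeline. It assumes the survey and reference images are already aligned, matched in resolution and flux-scaled. It subtracts them, estimates the noise robustly from the median absolute deviation, and flags pixels that brighten by more than k times that noise. The threshold k = 5 and the toy images are hypothetical.

```python
import statistics

def difference_image(survey, reference):
    """Pixel-by-pixel survey minus reference (assumes aligned images of the same shape)."""
    return [[s - r for s, r in zip(srow, rrow)] for srow, rrow in zip(survey, reference)]

def flag_transients(survey, reference, k=5.0):
    """Return (row, col) of pixels brighter than the reference by more than k robust sigma."""
    diff = difference_image(survey, reference)
    values = [v for row in diff for v in row]
    med = statistics.median(values)
    # Median absolute deviation, scaled to approximate a Gaussian standard deviation.
    mad = statistics.median(abs(v - med) for v in values)
    threshold = med + k * 1.4826 * mad
    # Only positive excursions: a new or brightening source, not a fading one.
    return [(i, j) for i, row in enumerate(diff) for j, v in enumerate(row) if v > threshold]

# Toy 5x5 frames: a flat "pristine" reference, and a survey frame with small
# deterministic noise (-2..+2) plus one brightening source at row 2, column 3.
reference = [[100.0] * 5 for _ in range(5)]
survey = [[100.0 + ((2 * i + 3 * j) % 5) - 2 for j in range(5)] for i in range(5)]
survey[2][3] += 50.0

print(flag_transients(survey, reference))
```

Real difference imaging also has to register the frames and match their point-spread functions before subtracting. The later multi-color checks the team mentions would then help weed out surviving false positives.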

Why Andromeda? The Trillion Planet Survey website notes that the galaxy is home to at least one trillion stars, a stellar density higher than the Milky Way’s, and thus represents “…an unprecedented number of targets relative to other past SETI searches.” The project gets the students who largely run it into the SETI business, juggling the variables as we consider strategies for detecting other civilizations and upgrading existing search techniques, particularly as we take into account the progress of exponentially accelerating photonic technologies.

Projects like these can exert a powerful incentive for students anxious to make a career out of physics. Thus Caitlin Gainey, now a freshman in physics at UC Santa Barbara:

“In the Trillion Planet Survey especially, we experience something very inspiring: We have the opportunity to look out of our earthly bubble at entire galaxies, which could potentially have other beings looking right back at us. The mere possibility of extraterrestrial intelligence is something very new and incredibly intriguing, so I’m excited to really delve into the search this coming year.”

And considering that any signal arriving from M31 would have been en route for well over 2 million years, the TPS also offers the chance to involve students in the concept of SETI as a form of archaeology. We could discover evidence of a civilization long dead through signals sent well before civilization arose on Earth. A ‘funeral beacon’ announcing the demise of a once-great civilization is a possibility. In terms of artifacts, the search for Dyson Spheres or other megastructures is another. The larger picture is that evidence of extraterrestrial intelligence can come in various forms, including optical or radio signals as well as artifacts detectable through astronomy. It’s a field we continue to examine here, because that search has just begun.

Phil Lubin’s 2016 paper is “The Search for Directed Intelligence,” REACH – Reviews in Human Space Exploration, Vol. 1 (March 2016), pp. 20-45. (Preprint / full text).

September 28, 2018

Small Provocative Workshop on Propellantless Propulsion

In what spirit do we pursue experimentation, and with what criteria do we judge the results? Marc Millis has been thinking and writing about such questions in the context of new propulsion concepts for a long time. As head of NASA’s Breakthrough Propulsion Physics program, he looked for methodologies by which to push the propulsion envelope in productive ways. As founding architect of the Tau Zero Foundation, he continues the effort through books like Frontiers of Propulsion Science, travel and conferences, and new work for NASA through TZF. Today he reports on a recent event that gathered people who build equipment and test for exotic effects. A key issue: Ways forward that retain scientific rigor and a skeptical but open mind. A quote from Galileo seems appropriate: “I deem it of more value to find out a truth about however light a matter than to engage in long disputes about the greatest questions without achieving any truth.”

by Marc G Millis

A workshop on propellantless propulsion was held at a sprawling YMCA campus of classy rusticity in Estes Park, Colorado, from Sept 10 to 14. These are becoming annual events, with the prior ones held in LA in Nov 2017 and in Estes Park in Sep 2016. This is a fairly small event of only about 30 people.

It was at the 2016 event that three other labs reported the same thrust that Jim Woodward and his team had been reporting for some time with the “Mach Effect Thruster” (which also goes by the name “Mach Effect Gravity Assist” device). Backed by those independent replications, NASA awarded Woodward’s team NIAC grants. Updates on this work and several other concepts were discussed at this workshop. Proceedings will be published after all the individual reports are rounded up and edited.

Before I go on to describe these updates, I feel it would be helpful to share a technique that I regularly use when trying to assess potential breakthrough concepts. I began using this technique when I ran NASA’s Breakthrough Propulsion Physics project to help decide which concepts to watch and which to skip.

When faced with research that delves into potential breakthroughs, one faces the challenge of distinguishing which of those crazy ideas might be the seeds of breakthroughs and which are the more generally crazy ideas. In retrospect, it is easy to tell the difference. After years of continued work, the genuine breakthroughs survive, along with infamous quotes from their naysayers. Meanwhile the more numerous crazy ideas are largely forgotten. Making that distinction before the fact, however, is difficult.

So how do I tell that difference? Frankly, I can’t. I’m neither clairvoyant nor brilliant enough to tell which idea is right (though it is easy to spot flagrantly wrong ideas). What I can judge and what needs to be judged is the reliability of the research. Regardless of whether the research is reporting supportive or dismissive evidence of a new concept, those findings mean nothing unless they are trustworthy. The most trustworthy results come from competent, rigorous researchers who are impartial – meaning they are equally open to positive or negative findings. Therefore, I first look for the impartiality of the source – where I will ignore “believers” or pedantic pundits. Next, I look to see if their efforts are focused on the integrity of the findings. If experimenters are systematically checking for false positives, then I have more trust in their findings. If theoreticians go beyond just their theory to consider conflicting viewpoints, then I pay more attention. And lastly, I look to see if they are testing a critical make-break issue or just some less revealing detail. If they won’t focus on a critical issue, then the work is less relevant.

Consider the consequences of that tactic: If a reliable researcher is testing a bad idea, you will end up with a trustworthy refutation of that idea. Null results are progress – knowing which ideas to set aside. Reciprocally, if a sloppy or biased researcher is testing a genuine breakthrough, then you won’t get the information you need to take that idea forward. Sloppy or biased work is useless (even if from otherwise reputable organizations). The ideal situation is to have impartial and reliable researchers studying a span of possibilities, where any latent breakthrough in that suite will eventually reveal itself (the “pony in the pile”).
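The screening tactic and its consequences amount to a simple decision procedure. The sketch below encodes them as a hypothetical checklist; the `Study` fields, the category labels, and the strict ordering of the filters are illustrative simplifications of the prose (in practice each criterion raises or lowers trust by degrees rather than acting as a hard switch).

```python
from dataclasses import dataclass


@dataclass
class Study:
    kind: str                       # "experiment" or "theory" (hypothetical field)
    impartial: bool                 # equally open to positive or negative findings
    checks_false_positives: bool    # experimenters: systematic hunt for artifacts
    weighs_conflicting_views: bool  # theorists: engages viewpoints beyond their own
    tests_make_break_issue: bool    # addresses a critical make-or-break question


def screen(study: Study) -> str:
    """Apply the filters in order: impartiality, then rigor, then relevance."""
    if not study.impartial:
        return "ignore"  # "believers" and pedantic pundits are set aside first
    # Rigor looks different for experimenters and for theoreticians.
    rigorous = (study.checks_false_positives if study.kind == "experiment"
                else study.weighs_conflicting_views)
    if not rigorous:
        return "low trust"
    return "watch" if study.tests_make_break_issue else "less relevant"


def outcome(reliable: bool, idea_is_genuine: bool) -> str:
    """What testing an idea yields, given the reliability of the researcher."""
    if not reliable:
        return "no usable information"  # sloppy or biased work is useless either way
    if idea_is_genuine:
        return "trustworthy confirmation"
    return "trustworthy refutation"  # a null result is still progress


if __name__ == "__main__":
    print(screen(Study("experiment", True, True, False, True)))  # watch
    print(outcome(reliable=True, idea_is_genuine=False))        # trustworthy refutation
```

The asymmetry the paragraph stresses shows up directly in `outcome`: reliability, not the merit of the idea, decides whether a test produces anything worth acting on.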

Now, back to the workshop. I’ll start with the easiest topic, the infamous EmDrive. I use the term “infamous” to remind you that (1) I have a negative bias that can skew my impartiality, and (2) there are a large number of “believers” whose experiments never passed muster (which led to my negative bias and overt frustration).

Three different tests of the EmDrive were reported, of varying degrees of rigor. All of the tests indicated that the claimed thrust is probably attributable to false positives. The most thorough tests were from the Technical University of Dresden, Germany, led by Martin Tajmar, where his student Marcel Weikert presented the EmDrive tests and Matthias Kößling presented the details of their thrust stand. They are testing more than one version of the EmDrive, under multiple conditions, and all with alertness for false positives. Their interim results show that thrusts are measured when the device is not in a thrusting mode, meaning that something else is creating the appearance of a thrust. They are not yet fully satisfied with the reliability of their findings, and tests continue. They want to trace the apparent thrust to its specific cause.

The next big topic was Woodward’s Mach Effect Thruster: determining whether the previous positive results are indeed genuine, and then whether they are scalable to practical levels. In short, it is still not certain whether the Mach Effect Thruster is demonstrating a genuine new phenomenon or is a case of a common experimental false positive. In addition to the work of Woodward’s team, led by Heidi Fearn, the Dresden team also had substantial progress to report: Maxime Monette covered the Mach Effect Thruster details, and Matthias Kößling covered the thrust stand details. There was also an analytical assessment based on conventional harmonic oscillators, plus more than one presentation related to the underlying theory.

One of the complications that developed over the years is that the original traceability between Woodward’s theory and the current thruster hardware has thinned. The thruster has become a “black box” where the emphasis is now on the empirical evidence and less on the theory.

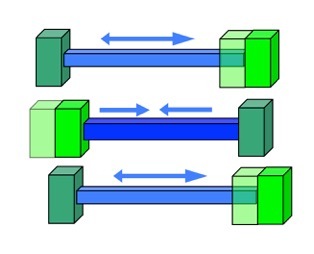

Originally, the thruster hardware closely followed the 1994 patent which itself was a direct application of Woodward’s 1990 hypothesized fluctuating inertia. It involved two capacitors at opposite ends of a piezoelectric separator, where the capacitors experience the inertial fluctuations (during charging and discharging cycles) and where the piezoelectric separator cyclically changes length between these capacitors.

Its basic operation is as follows: While the rear capacitor’s inertia is higher and the forward capacitor lower, the piezoelectric separator is extended. The front capacitor moves forward more than the rear one moves rearward. Then, while the rear capacitor’s inertia is lower and the forward capacitor higher, the piezoelectric separator is contracted. The front capacitor moves backward less than the rear one moves forward. Repeating this cycle shifts the center of mass of the system forward – apparently violating conservation of momentum.
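The cycle just described can be checked with simple bookkeeping. The sketch below is an idealization under stated assumptions, not Woodward’s analysis: each capacitor is a point mass, the hypothesized inertia fluctuation is modeled as an instantaneous, antiphase swap between `m0 + dm` and `m0 - dm` at the ends of each stroke, and during each stroke only internal forces act, so the center of mass computed with the current masses stays put. The function names and all parameter values are illustrative.

```python
def stroke(x_rear, x_front, m_rear, m_front, delta):
    """Change the rear-to-front separation by `delta` using internal forces only.

    With no external force, the center of mass computed from the current masses
    stays fixed, so m_rear * dx_rear + m_front * dx_front = 0 and
    dx_front - dx_rear = delta.
    """
    total = m_rear + m_front
    dx_front = delta * m_rear / total
    dx_rear = -delta * m_front / total
    return x_rear + dx_rear, x_front + dx_front


def run_cycles(n_cycles, m0=1.0, dm=0.1, delta=1.0):
    """Repeat the extend/contract cycle with assumed antiphase mass fluctuations."""
    x_rear, x_front = 0.0, 1.0
    for _ in range(n_cycles):
        # Extension: rear capacitor heavier, front lighter, so the front
        # moves forward more than the rear moves rearward.
        x_rear, x_front = stroke(x_rear, x_front, m0 + dm, m0 - dm, delta)
        # Contraction: masses swapped, so the front moves backward less
        # than the rear moves forward.
        x_rear, x_front = stroke(x_rear, x_front, m0 - dm, m0 + dm, -delta)
    return x_rear, x_front


if __name__ == "__main__":
    print(run_cycles(10))          # both positions advanced by about 1.0
    print(run_cycles(10, dm=0.0))  # no fluctuation: no net motion
```

With `dm = 0.1` and `delta = 1.0`, both capacitors (and hence the system’s center of mass at matching phases of the cycle) advance by `delta * dm / m0 = 0.1` per cycle; with `dm = 0` they return to where they started. The net shift enters only through the assumed mass swap between strokes, which is exactly where the open question about momentum conservation lies.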

The actual conservation of momentum is more difficult to assess. The original conservation laws are anchored to the idea of an immutable connection between inertia and an inertial frame. The theory behind this device deals with open questions in physics about the origins and properties of inertial frames, specifically evoking “Mach’s Principle.” In short, that principle is ‘inertia here because of all the matter out there.’ Another related physics term is “Inertial Induction.” Skipping through all the open issues, the upshot is that variations in inertia would require revisions to the conservation laws. It’s an open question.

Back to the tale of the evolved hardware. Over the years, the hardware configuration changed. While Woodward and his team tried different ways to increase the observed thrust, the ‘fluctuating inertia’ components and the ‘motion’ components were merged. Both the motions and the mass fluctuations now occur in a stack of piezoelectric disks. Thereafter, the emphasis shifted to the empirical observations, and there were no analyses showing how to connect the original theory to this new device. The Dresden team did develop a model to link the theory to the current hardware, but determining its viability is part of the tests that are still unfinished [Tajmar, M. (2017). Mach-Effect thruster model. Acta Astronautica, 141, 8-16.].

Even with the disconnect between the original theory and the hardware now under test, there were a couple of presentations about the theory, one by Lance Williams and the other by Jose’ Rodal. Lance, reporting on discussions he had at the April 2018 meeting of the American Physical Society, Division of Gravitational Physics, suggested ways to engage the broader physics community about this theory, such as using the more common term “Inertial Induction” instead of “Mach’s Principle.” Lance elaborated on the prevailing views (such as the absence of Maxwellian gravitation) that would need to be brought into the discussion, confronting constructive skepticism in order to make further advances. Jose’ Rodal elaborated on the possible applicability of “dilatons” from the Kaluza–Klein theory of compactified dimensions. Amid these and other presentations, there was lively discussion involving multiple interpretations of well-established physics.

An additional provocative model for the Mach Effect Thruster came from an interested software engineer, Jamie Ciomperlik, who dabbles in these topics for recreation. In addition to his null tests of the EmDrive, he created a numerical simulation for the Mach Effect using conventional harmonic oscillators. The resulting complex simulations showed that, with the right parameters, a false positive thrust could result from vibrational effects. After lengthy discussions, it was agreed to examine this more closely, both experimentally and analytically. Though the experimentalists already knew of possible false positives from vibration, they did not previously have an analytical model to help hunt for these effects. One of the next steps is to check how closely the analysis parameters match the actual hardware.
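Ciomperlik’s simulation itself is not reproduced here. As a generic illustration of how vibration alone can mimic a steady thrust, the sketch below integrates a damped, sinusoidally driven oscillator standing in for a vibrating device on a thrust stand. The drive averages to zero, so any steady offset is not thrust. The quadratic stiffness term `alpha` is a hypothetical nonlinearity chosen for illustration (for example, a stand slightly stiffer in one direction than the other); the function name and all parameter values are assumptions, not figures from the workshop.

```python
import math


def mean_deflection(alpha, zeta=0.1, omega0=1.0, force=0.5, omega=1.0,
                    steps_per_period=200, periods=60, avg_periods=20):
    """RK4-integrate x'' + 2*zeta*omega0*x' + omega0**2*x + alpha*x**2 = force*cos(omega*t)
    from rest and return the mean of x over the last `avg_periods` drive periods."""
    dt = (2 * math.pi / omega) / steps_per_period

    def accel(t, x, v):
        # Zero-mean drive, linear damping, linear stiffness, hypothetical quadratic term.
        return (force * math.cos(omega * t)
                - 2 * zeta * omega0 * v
                - omega0 ** 2 * x
                - alpha * x ** 2)

    x, v = 0.0, 0.0
    total = steps_per_period * periods
    start_avg = total - steps_per_period * avg_periods  # skip the start-up transient
    running = 0.0
    for n in range(total):
        t = n * dt
        if n >= start_avg:
            running += x  # evenly spaced samples over whole drive periods
        k1x, k1v = v, accel(t, x, v)
        k2x, k2v = v + 0.5 * dt * k1v, accel(t + 0.5 * dt, x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
        k3x, k3v = v + 0.5 * dt * k2v, accel(t + 0.5 * dt, x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
        k4x, k4v = v + dt * k3v, accel(t + dt, x + dt * k3x, v + dt * k3v)
        x += dt / 6 * (k1x + 2 * k2x + 2 * k3x + k4x)
        v += dt / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
    return running / (steps_per_period * avg_periods)


if __name__ == "__main__":
    print(mean_deflection(alpha=0.0))  # linear stand: essentially zero
    print(mean_deflection(alpha=0.1))  # nonlinear stand: steady negative offset
```

Averaging the equation of motion over one steady-state period gives `omega0**2 * <x> = -alpha * <x**2>`, so the linear stand (`alpha = 0`) shows no mean deflection, while any nonzero `alpha` produces a steady offset that a naive reading could mistake for thrust. Whether anything like this applies to real hardware depends on how well the model parameters match it.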

Quantum approaches were also briefly covered, where Raymond Chiao discussed the negative energy densities of Casimir cavities and Jonathan Thompson (a prior student of Chiao’s) gave an update on experiments to demonstrate the “Dynamical Casimir effect” – a method to create a photon rocket using photons extracted from the quantum vacuum.

There were several other presentations too, spanning topics of varying relevance and fidelity. Some were very speculative works, whose usefulness can be compared to the thought-provoking effect of good science fiction: they don’t have to be right to be enlightening. One was from retired physicist and science fiction writer John Cramer, who described the assumptions needed to induce a wormhole using the Large Hadron Collider (LHC) that could cover 1200 light-years in 59 days.
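For scale, the figures quoted imply an apparent speed far beyond that of light. The arithmetic below only divides the two numbers given (1200 light-years, 59 days); it makes no claim about the physics or the assumptions of Cramer’s scenario. Since a light-year is the distance light travels in one Julian year of 365.25 days, distance in light-years divided by elapsed years gives the apparent speed as a multiple of c.

```python
DAYS_PER_JULIAN_YEAR = 365.25  # the light-year is defined using the Julian year


def apparent_speed_in_c(distance_ly, trip_days):
    """Light-years divided by elapsed years gives the apparent speed in units of c."""
    return distance_ly / (trip_days / DAYS_PER_JULIAN_YEAR)


if __name__ == "__main__":
    print(round(apparent_speed_in_c(1200, 59)))  # 7429
```

In other words, the trip would correspond to roughly 7,400 times the speed of light as seen from the endpoints.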

Representing NASA’s Innovative Advanced Concepts (NIAC), Ron Turner gave an overview of the program’s scope and of how to propose for NIAC awards.

A closing thought about consequences. By this time next year, we will have definitive results on the Mach Effect Thruster, and the EmDrive findings will likely arrive sooner. Depending on whether the results are positive or negative, here are my recommendations on how to proceed in a sane and productive manner. These recommendations are based on history repeating itself, drawing on both its good and bad lessons:

If It Does Work:

Let the critical reviews and deeper scrutiny run their course. If this is real, a lot of people will need to repeat it for themselves to discover what it’s about. This takes time, and not all of it will be useful or pleasant. Pay more attention to those who are attempting to be impartial, rather than those trying to “prove” or “disprove.” Because divisiveness sells stories, expect press stories focusing on the controversy or hype, rather than reporting the blander facts.

Don’t fall for the hype of exaggerated expectations that are sure to follow. If you’ve never heard of the “Gartner Hype Cycle,” then now’s the time to look it up. Be patient, and track the real test results more than the news stories. The next progress will still be slow. It will take a while and a few more iterations before the effects start to get unambiguously interesting.

Conversely, don’t fall for the pedantic disdain (typically from those whose ideas are more conventional and less exciting). You’ll likely hear dismissals like, “Ok, so it works, but it’s not useful.” or “We don’t need it to do the mission.” Such dismissals contain only a kernel of truth, and only in a very narrow, near-sighted sense.

Look out for the sharks and those riding the coattails of the bandwagon. Sorry to mix metaphors, but it seemed expedient. There will be a lot of people coming out of the woodwork in search of their own piece of the action. Some will be making outrageous claims (hype) and selling how their version is better than the original. Again, let the test results, not the sales pitches, help you decide.

If It Does Not Work:

Expect some to dismiss the entire goal of “spacedrives” based on the failure of one or two approaches. This is a “generalization error” which might make some feel better, but serves no useful purpose.

Expect others to chime in with their alternative new ideas to fill the void, the weakest of which will be evident by their hyped sales pitches.

Follow the advice given earlier: when trying to figure out which idea to listen to, check the impartiality and rigor of those presenting it. Listen to those who are trying neither to sell nor to dismiss, but rather to honestly investigate and report. When you find those service providers, stay tuned in to them.

To seek new approaches toward the breakthrough goals, look for the intersections between open questions in physics and the critical make-break issues of those desired breakthroughs. Those intersections are listed in our book Frontiers of Propulsion Science.
