Stan Garfield's Blog, page 19

December 7, 2022

Gary A. Klein: Profiles in Knowledge

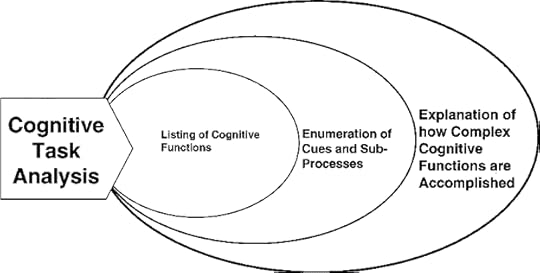

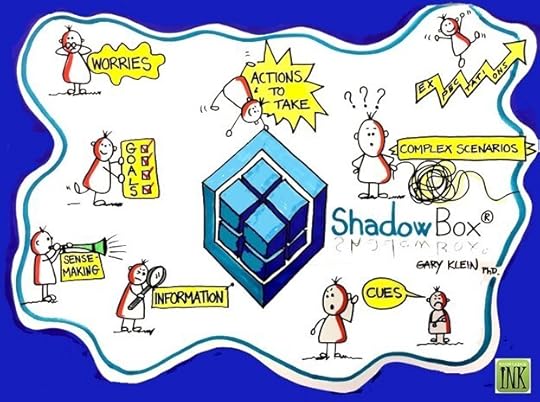

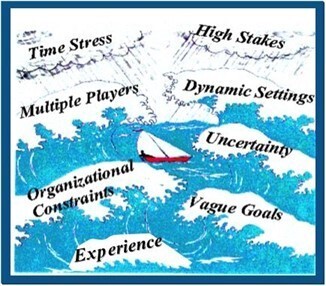

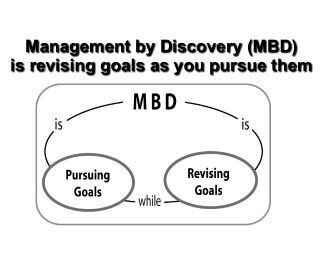

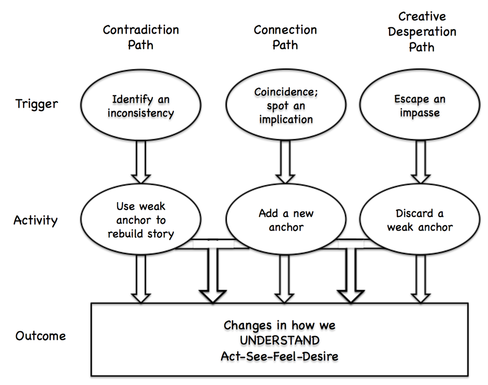

This is the 86th article in the Profiles in Knowledge series featuring thought leaders in knowledge management. Gary A. Klein has written five books, co-written one, and co-edited three. He is known for the cognitive methods and models he developed, including the Data/Frame Theory of sensemaking, the Management by Discovery model of planning in complex settings, and the Triple Path Model of insight. He developed the Pre-Mortem method of risk assessment, Cognitive Task Analysis for uncovering the tacit knowledge that goes into decision making, and the ShadowBox Training approach for cognitive skills. Gary pioneered the Naturalistic Decision Making (NDM) movement in 1989, which has grown to hundreds of international researchers and practitioners. And he has helped to initiate the new discipline of macrocognition.

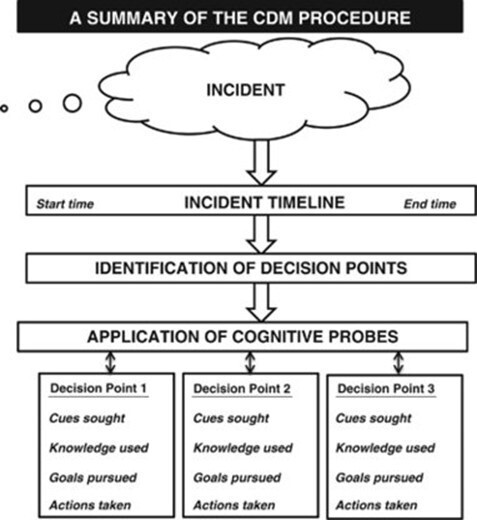

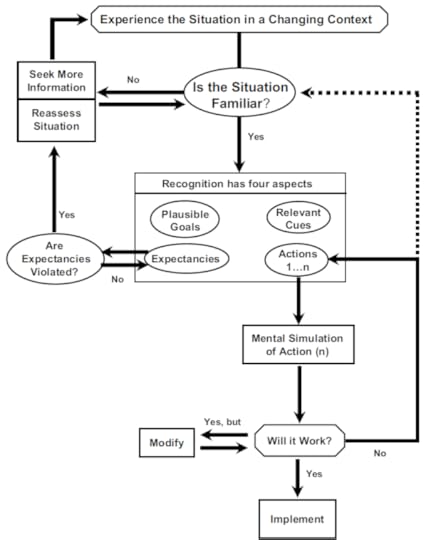

Gary developed the Recognition-Primed Decision (RPD) model to describe how people actually make decisions in natural settings. It has been incorporated into Army doctrine for command and control. He has investigated sensemaking, replanning, and anticipatory thinking. Gary devised methods for on-the-job training to help organizations recycle their expertise and their tacit knowledge to newer workers. And he developed the Knowledge Audit for doing cognitive task analysis, the Critical Decision Method (CDM), and the Artificial Intelligence Quotient (AIQ).

Gary A. Klein should not be confused with Gary L. Klein (PhD in Cognitive Social Psychology and BA in Experimental Psychology), who specializes in Artificial Intelligence and Cognitive Psychology and recorded a Naturalistic Decision Making Association podcast, or with Gary Klein (PhD, MS, and BS), who wrote several articles about knowledge management.

Gary and I have been at three of the same KMWorld Conferences: 2022, 2015, and 2012.

Background

Gary received his Ph.D. in experimental psychology from the University of Pittsburgh in 1969. He spent the first phase of his career in academia as an Assistant Professor of Psychology at Oakland University (1970–1974). The second phase was spent working for the government as a research psychologist for the U.S. Air Force (1974–1978). The third phase began in 1978 when he founded his own R&D company, Klein Associates, which grew to 37 people by the time it was acquired by Applied Research Associates (ARA) in 2005.

He was selected as a Fellow of Division 19 of the American Psychological Association in 2006. In 2008 Gary received the Jack A. Kraft Innovator Award from the Human Factors and Ergonomics Society.

Education

- Ph.D., Experimental Psychology, University of Pittsburgh, 1969
- M.S., Physiological Psychology, University of Pittsburgh, 1967
- B.A., Psychology, City College of New York, 1964

Experience

- President and Chief Executive Officer, ShadowBox LLC, 2014–Present
- Senior Scientist, Macrocognition LLC, 2009–Present
- Senior Scientist, Cognitive Solutions Division of Applied Research Associates, 2005–2010
- Chairman and Chief Scientist, Klein Associates, Inc, 1978–2005
- Research Psychologist, U.S. Air Force Human Resources Laboratory, WPAFB, 1974–1978
- Assistant Professor of Psychology, Oakland University in Michigan, 1970–1974
- Associate Professor of Psychology, Wilberforce University in Ohio, 1969–1970

Profiles

- LinkedIn
- ShadowBox Training
- MacroCognition
- Résumé
- Wikipedia
- Twitter
- Research.com

Content

- Gary Klein
- MacroCognition
- ShadowBox Training: Accelerate Expertise
- LinkedIn Articles
- LinkedIn Posts
- Psychology Today Blog: Seeing What Others Don’t: The remarkable ways we gain insights
- ResearchGate
- Google Scholar
- Semantic Scholar

Publications

- Expertise Management: Challenges for adopting Naturalistic Decision Making as a knowledge management paradigm with Brian Moon and Holly Baxter
- Conditions for intuitive expertise: A failure to disagree with Daniel Kahneman
- When to Consult Your Intuition
- Business as Unusual: How to train when expertise becomes outdated
- Expertise in judgment and decision making: A case for training intuitive decision skills with Jenny Phillips and Winston Sieck
- Guest blog posts for Dave Snowden
- Automated Partners
- Stupor Crunchers

Naturalistic Decision Making Tools

- Knowledge elicitation tools, primarily methods for doing Cognitive Task Analysis such as the Critical Decision Method, the Situation Awareness Record, Applied Cognitive Task Analysis (ACTA), the Knowledge Audit, the Cognitive Audit, and Concept Maps.
- Cognitive specifications and representations, such as the Cognitive Requirements Table, the Critical Cue Inventory, the Cognimeter, Integrated Cognitive Analyses for Human-Machine Teaming, Contextual Activity Templates, and Diagrams of Work Organization Possibilities.
- Training approaches, including the ShadowBox technique, Tactical Decision Games, Artificial Intelligence Quotient, On-the-Job Training, and Cognitive After-Action Review Guide for Observers.
- Design methods, e.g., Decision-Centered Design, Principles for Collaborative Automation, and Principles of Human-Centered Computing.
- Evaluation techniques such as Sero!, Concept Maps, Decision Making Record, Work-Centered Evaluation.
- Teamwork aids, e.g., the Situation Awareness Calibration questions, the Cultural Lens model.
- Risk assessment methods: the Pre-Mortem.
- Measurement techniques such as Macrocognitive measures, Hoffman’s “performance assessment by order statistics” and four scales for explainable Artificial Intelligence.
- Conceptual descriptions: these are models like the Recognition-Primed Decision model that have been used in a variety of ways.

Getting Smarter

How can we strengthen our tacit knowledge? Here are nine ideas we can put into practice.

1. Seek feedback.
2. Consult with experts.
3. Vicarious experiences.
4. Curiosity.
5. A growth mindset.
6. Overcoming a procedural mindset.
7. Harvesting mistakes.
8. Adapt and discover.
9. Don’t let evaluation interfere with training.

How Can We Identify the Experts?

There are soft criteria, indicators we can pay attention to. I have identified seven so far. Even though none of these criteria are fool-proof, all of them seem useful and relevant:

1. Successful performance: measurable track record of making good decisions in the past.
2. Peer respect.
3. Career: number of years performing the task.
4. Quality of tacit knowledge such as mental models.
5. Reliability.
6. Credentials: licensing or certification of achieving professional standards.
7. Reflection.

Re-Thinking Expertise: The Skill Portfolio Account

We can distinguish five general types of skills that experts may have:

1. Perceptual-motor skills
2. Conceptual skills
3. Management skills
4. Communication skills
5. Adaptation skills

These are not components of expertise. Some skills may be relevant in one domain but not another. And they are reasonably independent.

These five general skills are identified on several criteria: First, they are acquired through experience and feedback, as opposed to being natural talents. Second, they are relevant to the tasks people perform, and therefore the set of skills will vary by task and domain. Third, superior performance on these skills should differentiate experts from journeymen.

The skills will vary for different domains and tasks. Our focus should be on the most important sub-skills for that domain. Otherwise, it is too easy to have an ever-expanding set of skills to contend with. In some domains, one or more of these general skills may not apply at all. And people may be experts in some aspects of a task but not others.

Methods, Movement, and Models

1. Artificial Intelligence Quotient (AIQ)

- Metrics for Explainable AI: Challenges and Prospects
- Scorecard for Self-Explaining Capabilities of AI Systems

2. Cognitive Task Analysis

3. Critical Decision Method (CDM)


4. Knowledge Audit

How Professionals Make Decisions edited by Henry Montgomery, Raanan Lipshitz, and Berndt Brehmer, Chapter 23 with Laura Militello:

The Knowledge Audit as a Method for Cognitive Task Analysis

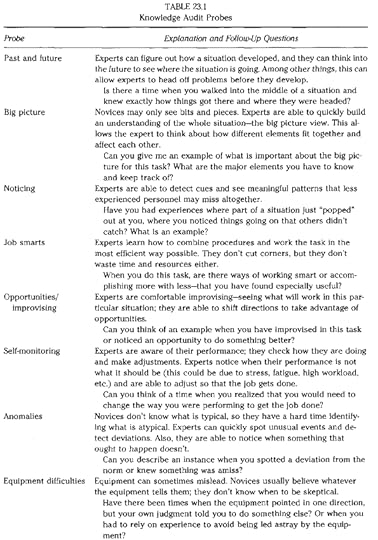

The Knowledge Audit was designed to survey the different aspects of expertise required to perform a task skillfully (Crandall, Klein, Militello, & Wolf, 1994). It was developed for a project sponsored by the Naval Personnel Research & Development Center. The specific probes used in the Knowledge Audit were drawn from the literature on expertise (Chi, Glaser, & Farr, 1988; Glaser, 1989; Klein, 1989; Klein & Hoffman, 1993; Shanteau, 1989). By examining a variety of accounts of expertise, it was possible to identify a small set of themes that appeared to differentiate experts from novices. These themes served as the core of the probes used in the Knowledge Audit.

The Knowledge Audit was part of a larger project to develop a streamlined method for Cognitive Task Analysis that could be used by people who did not have an opportunity for intensive training. This project, described by Militello, Hutton, Pliske, Knight, and Klein (1997), resulted in the Applied Cognitive Task Analysis (ACTA) program, which includes a software tutorial. The Knowledge Audit is one of the three components of ACTA.

The original version of the Knowledge Audit is described by Crandall et al. (1994) in a report on the strategy that was being used to develop ACTA. That version of the Knowledge Audit probed a variety of knowledge types: perceptual skills, mental models, metacognition, declarative knowledge, analogues, and typicality/anomalies. Perceptual skills referred to the types of perceptual discriminations that skilled personnel had learned to make. Mental models referred to the causal understanding people develop about how to make things happen. Metacognition referred to the ability to take one’s own thinking skills and limitations into account. Declarative knowledge referred to the body of factual information people accumulate in performing a task. Analogues referred to the ability to draw on specific previous experiences in making decisions. Typicality/anomalies referred to the associative reasoning that permits people to recognize a situation as familiar, or, conversely, to notice the unexpected.

Some other probes were deleted because they were found to be more difficult concepts for a person just learning to conduct a Cognitive Task Analysis to understand and explore; others were deleted because they elicited redundant information from the subject-matter experts being interviewed. To streamline the method for inclusion in the ACTA package, eight probes were identified that seemed most likely to elicit key types of cognitive information across a broad range of domains.

CURRENT VERSION

The Knowledge Audit has been formalized to include a small set of probes, a suggested wording for presenting these probes, and a method for recording and representing the information. This is the form presented in the ACTA software tutorial. The value of this formalization is to provide sufficient structure for people who want to follow steps and be reasonably confident that they will be able to gather useful material.

Table 23.1 presents the set of Knowledge Audit probes in the current version. These are listed in the column on the left. Table 23.1 also shows the types of follow-up questions that would be used to obtain more information. At the conclusion of the interview, this format becomes a knowledge representation. By conducting several interviews, it is possible to combine the data into a larger scale table to present what has been learned.

However, formalization is not always helpful, particularly if it creates a barrier for conducting effective knowledge elicitation sessions. We do not recommend that all the probes be used in a given interview. Some of the probes will be irrelevant, given the domain, and some will be more pertinent than others. Furthermore, the follow-up questions for any probe can and should vary, depending on the answers received.

In addition, the wording of the probes is important. Militello et al. (1997) conducted an extensive evaluation of wording and developed a format that seemed effective. For example, they found that the term tricks of the trade generated problems because it seemed to call for quasi-legal procedures. Rules of thumb was rejected because the term tended to elicit high-level, general platitudes rather than important practices learned via experience on the job. In the end, the somewhat awkward but neutral term job smarts was used to ask about the techniques people picked up with experience.

Turning to another category, the concept of perceptual skills made sense to the research community but was too academic to be useful in the field. After some trial and error, the term noticing was adopted to help people get the sense that experience confers an ability to notice things that novices tend to miss. These examples illustrate how important language can be and how essential the usability testing was for the Knowledge Audit.

The wording shown in Table 23.1 is not intended to be used every time. As people gain experience with the Knowledge Audit, they will undoubtedly develop their own wording. They may even choose their own wording from the beginning. The intent in providing suggested wording is to help people who might just be learning how to do Cognitive Task Analysis interviews and need a way to get started. In structured experimentation, researchers often have to use the exact same wording with each participant. The Knowledge Audit, however, is not intended as a tool for basic research in which exact wording is required. It is a tool for eliciting information, and it is more important to maintain rapport and follow up on curiosity than to maximize objectivity.

CONDUCTING A KNOWLEDGE AUDIT

We have learned that the Knowledge Audit is too unfocused to be used as a primary interviewing tool without an understanding of the major components of the task to be investigated. It can be too easy for subject-matter experts to just give speeches about their pet theories on what separates the skilled from the less skilled. That is why the suggested probes try to focus the interview on events and examples. Even so, it can be hard to generate a useful answer to general questions about the different aspects of expertise. A prior step seems useful whereby the interviewer determines the key steps in the task and then identifies those steps that require the most expertise. The Knowledge Audit is then focused on these steps, or even on substeps, rather than on the task as a whole. This type of framing makes the Knowledge Audit interview go more smoothly.

We have also found that the Knowledge Audit works better when the subject-matter experts are asked to elaborate on their answers. In our workshops, we encourage interviewers to work with the subject-matter experts to fill in a table, with columns for deepening on each category. One column is about why it is difficult (to see the big picture, notice subtle changes, etc.) and what types of errors people make. Another column gets at the cues and strategies used by experts in carrying out the process in question. These follow-up questions seem important for deriving useful information from a Knowledge Audit interview. In this way, the interview moves from just gathering opinions about what goes into expertise and gets at more details. For a skilled interviewer, once these incidents are identified, it is easy to turn to a more in-depth type of approach, such as the Critical Decision Method (Hoffman, Crandall, & Shadbolt, 1998). Once the subject-matter expert is describing a challenging incident, the Knowledge Audit probes can be used to deepen on the events that took place during that incident.

For example, Pliske, Hutton, and Chrenka (2000) used the Knowledge Audit to examine expert-novice differences in business jet pilots using weather data to fly. Additional cognitive probes were applied to explore critical incidents and experiences in which weather issues challenged the pilot’s decision-making skills. As a result of these interviews, a set of cognitive demands associated with planning, taxi/takeoff, climb, cruise, descent/approach, and land/taxi were identified, as well as cues, information sources, and strategies experienced pilots rely on to make these difficult decisions and judgments.
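The table-filling approach described in this section can be sketched as a small data structure. This is only an illustrative sketch, not part of ACTA or its software tutorial: the class name `AuditRow`, the function `combine_interviews`, the field names, and all sample data are hypothetical. It assumes each interview yields rows with a probe, an example, a "why is it difficult" column, and a "cues and strategies" column, and that rows from several interviews are grouped by probe to form the larger table.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditRow:
    """One row of a Knowledge Audit table from a single interview (hypothetical layout)."""
    probe: str            # aspect of expertise being probed, e.g. "Noticing"
    example: str          # concrete incident or example the expert described
    why_difficult: str    # why this is hard and what errors people make
    cues_strategies: str  # cues and strategies the expert relies on


def combine_interviews(interviews):
    """Group rows from several interviews by probe.

    `interviews` maps an interviewee label to that person's list of rows.
    Returns {probe: [(interviewee, row), ...]} in first-seen order.
    """
    combined = defaultdict(list)
    for interviewee, rows in interviews.items():
        for row in rows:
            combined[row.probe].append((interviewee, row))
    return dict(combined)


# Sample data invented purely for illustration; it is not from any study.
interviews = {
    "pilot_a": [
        AuditRow("Noticing", "Cloud build-up ahead of the forecast",
                 "Novices trust the briefing over what they see",
                 "Compare the view outside with the forecast"),
        AuditRow("Job smarts", "Choosing alternates before departure",
                 "Easy to skip under time pressure",
                 "Pick alternates during planning"),
    ],
    "pilot_b": [
        AuditRow("Noticing", "Unexpected headwind in cruise",
                 "Fuel margins erode gradually",
                 "Track ground speed against the plan"),
    ],
}

table = combine_interviews(interviews)
for probe, entries in table.items():
    print(f"{probe}: {len(entries)} example(s)")
```

Keying the combined table by probe keeps each interviewee's wording intact while making it easy to see which aspects of expertise drew examples from more than one expert.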

FUTURE VERSION

One of the weaknesses of the Knowledge Audit is that the different probes are unconnected. They are aspects of expertise, but there is no larger framework for integrating them. Accordingly, it may be useful to consider a revision to the Knowledge Audit that does attempt to situate the probes within a larger scheme.

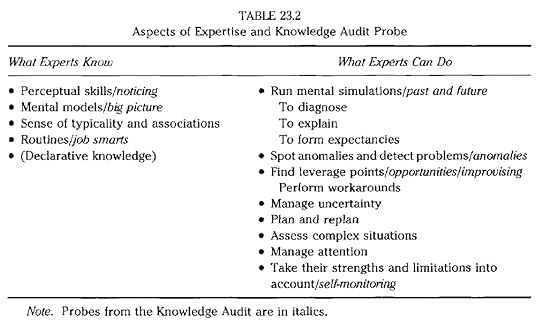

In considering the probes presented in Table 23.1, they seem to fall into two categories. One category is types of knowledge that experts have or “what experts know,” and the second category is ways that experts use these types of knowledge or “what experts can do.” Table 23.2 shows a breakdown that follows these categories. Probes from the current version of the Knowledge Audit are included in italics next to the aspect of expertise each addresses.

The left-hand column in Table 23.2 shows different types of knowledge that experts have. They have perceptual skills, enabling them to make fine discriminations. They have mental models of how the primary causes in the domain operate and interact. They have associative knowledge, a rich set of connections between objects, events, memories, and other entities. Thus, they have a sense of typicality allowing them to recognize familiar and typical situations. They know a large set of routines, which are action plans, well-compiled tactics for getting things done.

Experts also have a lot of declarative knowledge, but this is put in parentheses in Table 23.2 because Cognitive Task Analysis does not need to be used to find out about declarative knowledge.

The right-hand column in Table 23.2 is a partial list of how experts can use the different types of knowledge they possess. Thus, experts can use their mental models to diagnose faults, and also to project future states. They can run mental simulations (Klein & Crandall, 1995). Mental simulation is not a form of knowledge but rather an operation that can be run on mental models to form expectancies, explanations, and diagnoses. Experts can use their ability to detect familiarity and typicality as a basis for spotting anomalies. This lets them detect problems quickly. Experts can use their mental models and knowledge of routines to find leverage points and use these to figure out how to improvise. Experts can draw on their mental models to manage uncertainty. These are the types of activities that distinguish experts and novices. They are based on the way experts apply the types of knowledge they have. One can think of the processes listed in the right-hand column as examples of macrocognition (Cacciabue & Hollnagel, 1995; Klein, Klein, & Klein, 2000).

Also note that the current version of the Knowledge Audit does not contain probes for all the items in the right column.

Table 23.2 is intended as a more organized framework for the Knowledge Audit. It is also designed to encourage practitioners to devise their own frameworks. Thus, R. R. Hoffman (personal communication) has adapted the Knowledge Audit. His concern is not as much with contrasting experts and novices as with capturing categories of cognition relevant to challenging tasks, such as forecasting the weather. Hoffman’s categories are noticing patterns, forming hypotheses, seeking information (in the service of hypothesis testing), sensemaking (interpreting situations to assign meaning to them), tapping into mental models, reasoning by using domain-specific rules (e.g., meteorological rules), and metacognition.

One of the advantages of the representation of the Knowledge Audit shown in Table 23.2 is that the categories are more coherent than in Table 23.1. Although there is considerable overlap between the Knowledge Audit probes in Table 23.1 and the aspects of expertise in Table 23.2, we have not developed wording for all of the probes taking into account the new focus on macrocognition. Moreover, we have not yet determined the conditions under which we might want to construct a Knowledge Audit incorporating more of the items from the right-hand column of Table 23.2, the macrocognitive processes seen in operational settings.

CONCLUSIONS

The Knowledge Audit embodies an account of expertise. We contend that any Cognitive Task Analysis project makes assumptions about the nature of expertise. The types of questions asked, the topics that are followed up, and the areas that are probed more deeply all reflect the researchers’ concepts about expertise. These concepts may result in a deeper and more insightful Cognitive Task Analysis project, or they may result in a distorted view of the cognitive aspects of proficiency. One of the strengths of the Knowledge Audit is that it makes these assumptions explicit.

Because it distinguishes between types of knowledge and applications of the knowledge, the proposed future version of the Knowledge Audit is more differentiated than the original version. This distinction has both theoretical and practical implications. There are also aspects of expertise that are not reflected in the Knowledge Audit, such as emotional reactivity (e.g., Damasio, 1998), memory skills, and so forth. We are not claiming that the Knowledge Audit is a comprehensive tool for surveying the facets of expertise. Its intent was to provide interviewers with an easy-to-use approach to capture some important cognitive aspects of task performance.

There can be interplay between the laboratory and the field. Cognitive Task Analysis tries to put concepts of expertise into practice. It can refine and drive our views on expertise just as laboratory studies do. The Knowledge Audit is a tool for studying expertise and a tool for reflecting about expertise.

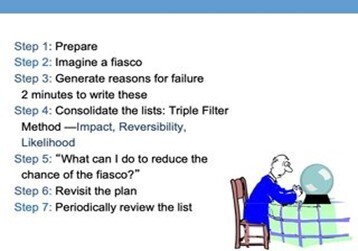

5. Pre-Mortem Method

- The Pre-Mortem Method
- Performing a Project Pre-Mortem
- Evaluating the Effectiveness of the Pre-Mortem Technique on Plan Confidence with Beth Veinott and Sterling Wiggins

6. ShadowBox Training

- ShadowBox Training for Making Better Decisions


7. Naturalistic Decision Making (NDM)

- The Naturalistic Decision Making Approach
- Naturalistic Decision Making

8. Data/Frame Theory

- A data-frame theory of sensemaking

9. Recognition-Primed Decision (RPD) Model

- The RPD Model: Criticisms and Confusions

10. Management by Discovery (MBD) Model

11. Triple Path Model


Articles by Others

- Press
- Asian Development Bank (ADB) Use of Pre-Mortem by Susann Roth
- A great story of tacit knowledge, and how to make it explicit by Nick Milton
- Shawn Callahan
- Patrick Lambe
- Matt Moore
- Dave Snowden
- A brief conversation with Gary Klein
- On Experts, Expertise and Power
- ASK Talks with Dr. Gary Klein (APPEL Knowledge Services, NASA)
- The five types of triggers for insights – and how you can spur innovation in your own organization by Madanmohan Rao
- A Conversation with Gary Klein by Daniel Kahneman
- Gary Klein: An interview by Bob Morris
- Analyzing Failure Beforehand by Paul Brown
- How to Develop Your Intuitive Decision Making by Winston Sieck
- Farnam Street
- Gary Klein’s Triple Path Model of Insight
- Improving Performance

Conferences

- I/ITSEC 2022 (Interservice/Industry Training, Simulation and Education Conference), Tutorial: Principles for Designing Effective, Efficient, and Engaging Training to Accelerate Expertise
- Naturalistic Decision Making
- KMWorld
- 2022 Keynote: Artificial Intelligence Quotient – Tools for Building Appropriate Trust in Technology
- Slides
- Video Recording
- XAI Discovery Platform: Video
- XAI Discovery Platform: Tool
- 2015 Closing Keynote: Insights, Ideas, & Innovation
- 2012 Keynote: KM Saves Lives

Podcasts

- ShadowBox Training
- People and Projects
- How Your Setting Affects Your Decision-Making Ability
- Conducting Pre-Mortem Analysis
- The Science of Insights
- Seeing What Others Don’t
- Decision Making in Crisis
- The Expert vs. the Algorithm: Gary Klein on Decision-Making in Healthcare
- Naturalistic Decision Making Association

Videos

- Decision Making in Crisis (The Cynefin Co)
- Gary Klein and Dave Snowden on KM and Singapore’s Risk Assessment and Horizon Scanning System
- The Lightbulb Moment (Webinar): https://medium.com/media/b92bec9fde41f18b4189734050b083a4/href
- Lightbulb Moment (TEDxDayton): https://medium.com/media/ae6e357e3544a1e2dbe6f03041056fc7/href
- How do Decisions Really Work?: https://medium.com/media/fea5de43a5690d8bcce96a911b0e8f4e/href
- Deconstructing How Experts Make Decisions: https://medium.com/media/babbb11c419e2dff731d0e69550256f5/href
- Introduction to the World of Naturalistic Decision Making: https://medium.com/media/77a36045c5472599c9cbe187c01d2aa9/href
- Connecting Medical Simulations to Cognition: https://medium.com/media/74f712d2789ce8ae5713f0f608013e9d/href
- Insights: https://medium.com/media/1e8d40a3218a1082ac2f71d67c6b6972/href
- How can leaders make good decisions under a crisis?: https://medium.com/media/9ea65ff84da1f455a92301e918976635/href
- Crisis Management Team in the Golden Hour: https://medium.com/media/54676445f497411c1d1483fb88fec176/href
- Uncertainty Metaphors: https://medium.com/media/e2912a501de7eba5507646eeb2ea96a4/href

Books

- Snapshots of the Mind
- Sources of Power: How People Make Decisions

- Seeing What Others Don’t: The Remarkable Ways We Gain Insights
- Streetlights and Shadows: Searching for the Keys to Adaptive Decision Making
- The Power of Intuition: How to Use Your Gut Feelings to Make Better Decisions at Work
- Working Minds: A Practitioner’s Guide to Cognitive Task Analysis with Beth Crandall and Robert R. Hoffman
- Decision Making in Action: Models and Methods edited with Judith Orasanu and Roberta Calderwood
- Naturalistic Decision Making edited with Caroline Zsambok
- Linking Expertise and Naturalistic Decision Making edited with Eduardo Salas

December 2, 2022

Knowledge Management Thought Leader 29: Heather Hedden

Heather Hedden designs, creates, and edits taxonomies, thesauri, metadata, and ontologies for indexing and tagging content to support content retrieval, search, and findability.

She trains others to create taxonomies and wrote the book The Accidental Taxonomist, now in its third edition. Heather is a frequent presenter at many of the leading conferences on taxonomy, search, and information architecture. She is based in the Boston area.

Current Positions

Data & Knowledge Engineer, Semantic Web Company (SWC), 2020–Present

Taxonomy Consultant and Instructor, Hedden Information Management, 2004–Present

Education

Princeton University, M.A., Near Eastern Studies, 1987–1990

The American University in Cairo, Center for Arabic Study Abroad, 1988–1989

Cornell University, B.A., Government (comparative politics and international relations), 1983–1987

Profiles

LinkedIn

Hedden Information Management

Twitter

Facebook

Profiles in Knowledge

Books

The Accidental Taxonomist, 3rd edition

Indexing Specialties: Web Sites

Videos

Understanding Attributes and Taxonomies

Other YouTube Videos

Conferences

The Knowledge Graph Conference

IAC (The information architecture conference)

Taxonomy Boot Camp

Bite-sized Taxonomy Boot Camp London

Enterprise Search & Discovery

Enterprise Search Summit

Other Content

LinkedIn Posts

LinkedIn Articles

The Accidental Taxonomist Blog

Hedden Information Management

Articles

SlideShare

Metadata and Taxonomies

Metadata and taxonomies are distinct but related, and both are very important for making information, data, and content easier to manage and find.

Metadata is standardized data about anything with shared attributes that needs to be organized and retrieved. These could be documents, spreadsheets, presentation files, images, multimedia files, or specialized files, such as engineering drawings. The documents and digital content tend to be content within an information system or file system. This is not limited, however, to database management systems, but includes content management systems, document management systems, digital asset management systems, authoring and publishing systems, collaboration systems, intranet systems, and workflow and project management systems, in addition to those that are forms of database management systems, such as customer relationship management systems and product information management systems.

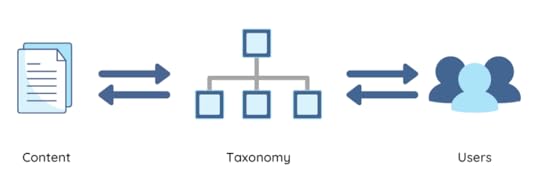

Taxonomies are organized arrangements of controlled terms/concepts that are associated with content to make it easier to find and retrieve the desired content. People use taxonomies to find the concepts they want and not just have to rely on keyword matches, which sometimes are inadequate or inaccurate. Taxonomies are often thought of as classification systems, such as those used in libraries, research collections, government statistics, or manufacturing specifications. While taxonomies have their origins in classification schemes, they have been adapted and thus go beyond the limited uses and formalities of such schemes. Taxonomies can be customized to a set of content, the needs of its users, and the requirements of a system and its user interface. We are all familiar with taxonomies for browsing for products in ecommerce websites, but each online store has a different set of products and thus a different taxonomy.
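To make the contrast with plain keyword matching concrete, here is a minimal sketch of concept-based retrieval. Everything in it is an assumption made for illustration: the `Concept` class, the tiny footwear taxonomy, the document ids, and the function names are hypothetical and do not come from any particular taxonomy tool. It assumes each concept has a preferred label, optional synonyms, and an optional broader term, and that content items are tagged with concept labels.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Concept:
    """A controlled term with optional synonyms and a broader (parent) term."""
    label: str
    synonyms: frozenset = frozenset()
    broader: Optional[str] = None


# Hypothetical taxonomy for an online store, invented for this example.
TAXONOMY = {
    "Footwear": Concept("Footwear"),
    "Sneakers": Concept("Sneakers", frozenset({"trainers", "running shoes"}), "Footwear"),
    "Boots": Concept("Boots", frozenset({"hiking boots"}), "Footwear"),
}

# Hypothetical content items, each tagged with taxonomy labels.
CONTENT_TAGS = {
    "doc-1": {"Sneakers"},
    "doc-2": {"Boots"},
    "doc-3": {"Sneakers", "Boots"},
}


def resolve(query):
    """Map a user's word or phrase to a taxonomy label, or None if unknown."""
    q = query.strip().lower()
    for concept in TAXONOMY.values():
        if q == concept.label.lower() or q in concept.synonyms:
            return concept.label
    return None


def with_narrower(label):
    """Return the label together with every label beneath it in the hierarchy."""
    found = {label}
    changed = True
    while changed:
        changed = False
        for concept in TAXONOMY.values():
            if concept.broader in found and concept.label not in found:
                found.add(concept.label)
                changed = True
    return found


def retrieve(query):
    """Return sorted ids of content tagged with the concept or its narrower terms."""
    label = resolve(query)
    if label is None:
        return []
    wanted = with_narrower(label)
    return sorted(doc for doc, tags in CONTENT_TAGS.items() if tags & wanted)


print(retrieve("trainers"))
print(retrieve("Footwear"))
```

In this sketch a search for "trainers" finds content tagged "Sneakers" even though that word never appears in the tags, and a search on the broader term "Footwear" also returns content tagged with its narrower terms.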

Taxonomies: Connecting Users to Content

Taxonomy Uses

Functional Uses of Taxonomies

- Browsing: If taxonomies are presented as displayed hierarchies, users can browse to find and select a desired concept and then retrieve the content tagged with it.
- Searching: Users can enter words or phrases into a search box, and those words are matched against taxonomy terms that are tagged to the content. The matched taxonomy terms might display to the user in a drop-down list that comprises type-ahead or search-suggest matches to the search string.
- Discovery: Users may find content that they did not expect or did not know to look for, either by following links to related terms or following the link of a taxonomy term tagged to selected content.
- Filtering: If taxonomies are presented as facets for different aspects of content, users can limit their search results by selecting taxonomy terms from each of several facets and thus refine their search.
- Sorting: If taxonomies are organized into metadata property types, users can sort a list of results by matching criteria, which include being about topics of tagged taxonomy terms.
- Visualizing data: Taxonomy terms may be visualized in tag clouds where relative size of the term label font indicates frequency of occurrence, hierarchical topic trees, or networks of concepts and relationship links, which can provide an understanding of the subject domain.
- Personalizing information: Content can be delivered that meets a user’s profile or custom alerts which are based on pre-selected taxonomy terms.
- Recommendation of content: Content similar to what a user had selected can be recommended, based on shared taxonomy terms.
- Content management: Taxonomies can provide controlled metadata values of different types to manage content rights and workflow management.
- Content and data analysis: If taxonomies are linked to ontologies, which contain specific attributes and relations, search and analysis can be for data attributes and not just content.

November 27, 2022

Knowledge Management Infographics

Communities Manifesto: 10 Principles for Successful Communities

November 19, 2022

Johel Brown-Grant: Profiles in Knowledge

This is the 85th article in the Profiles in Knowledge series featuring thought leaders in knowledge management. Johel Brown-Grant is a strategist in using storytelling to create job stories, user journeys, and personas. He has expertise in implementing knowledge strategies to map knowledge flows, uncover tacit knowledge, and foster an agile environment. Johel specializes in the use of User Experience (UX) and design thinking methodologies to support product development, applying service design to support innovation.

Johel is a storytelling strategist, UX architect, design thinking specialist, and knowledge management professional with extensive experience in enterprise learning, change management, and business storytelling. He speaks and facilitates workshops on the power of storytelling to teach, engage, empower, motivate, and connect learners, leaders, groups, and professional communities across diverse industries and professions. Johel has led important knowledge management and storytelling projects in government, corporate, and academic settings. In his current role at the U.S. State Department, he coordinates enterprise learning efforts across multiple departments.

A former Fulbright scholar, Johel holds master’s degrees in sociolinguistics, literature, and information and knowledge strategy from Universidad de Costa Rica, University of Pittsburgh, and Columbia University in the City of New York, respectively, and a doctorate in communication and rhetoric from Rensselaer Polytechnic Institute. He is based in Washington, DC.

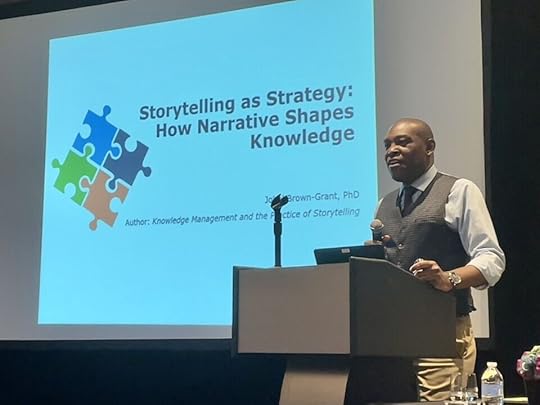

I enjoyed his session at KMWorld last week. I am in the lower right corner of this photo:

Background

Johel is originally from Costa Rica. His journey through storytelling and design began when, as a grad student in English literature, he read Karen Schriver’s Dynamics in Document Design. With his prior training in sociolinguistics, he started to make the connections between culture, stories, language, and design. That experience came full circle when he worked in the software industry researching the design and development of style guides for graphical user interfaces. His career since then has been a continual process of exploring, discovering, testing, learning, and training others on the story-design-experience lifecycle.

Johel’s career is a collection of eclectic stories and experiences as a usability engineer, college professor and administrator, head of corporate communication, UX researcher/designer, knowledge management leader, strategist, and author. However, his most prized role is as a storytelling aficionado who enjoys joining story slams and occasionally making appearances in the DC Moth storytelling circles.

Education

Columbia University — MS, Information and Knowledge Strategy, 2011–2012

Rensselaer Polytechnic Institute — PhD, Communication and Rhetoric, 1998–2002

University of Pittsburgh — MA, English, 1996–1998

Universidad de Costa Rica — M.L., Linguistics, 1994–1996

Grinnell College

Profiles

StoryDNX

LinkedIn

Twitter

Content

StoryDNX

Publications

Articles

ResearchGate

ResearchGate (search)

Semantic Scholar

De la Difusión a la Generación del Conocimiento: Elementos para lograr un Salto Cualitativo en el Uso de la Plataformas Virtuales (From Diffusion to Knowledge Generation: Elements to achieve a Qualitative Leap in the Use of Virtual Platforms)

Incorporación de las tecnologías de información y comunicación en la docencia universitaria estatal costarricense: Problemas y soluciones (Incorporation of information and communication technologies in Costa Rican state university teaching: Problems and solutions)

This article analyzes the issues that currently hinder the efforts to integrate Information and Communication Technologies (ICT) into instruction in the Costa Rican state-run higher education system. To address these issues, the article offers a set of solutions that may be used as a basis to improve ongoing ICT integration efforts or as a springboard for ICT integration initiatives. As a conclusion the article poses that the ultimate solution for ICT integration into college teaching involves a change of attitude among university authorities and the opening of wider and more effective discursive spaces to flesh out these issues.

Blogs

StoryDNX Blog

LinkedIn Posts

Storytelling Chronicle 1: The Experience is the Story

Storytelling Chronicle 2: The Storyverse

Storytelling Chronicle 3: Data…the story

Storytelling Chronicle 4: NASA’s My Best Mistake

Storytelling Chronicle 5: Interview Question # 1

Storytelling Chronicle 6: What Makes a Good Story?

Storytelling Chronicle 7: Organizational EQ

Storytelling Chronicle 8: What can storytelling do for project management?

Articles by Others

Knowledge Services and Storytelling by Guy St. Clair

Building KM Skills: World Vision, U.S. Department of State, and NASWA Discuss Traits for Success by APQC

Employing storytelling as a vehicle for knowledge sharing at KMWorld 2022 by Sydney Blanchard

Stories are the best way to get to the experience; experience is the story, and the story is the experience. Putting together a story is the act of putting together knowledge.

Presentations

Engagement Strategies to Strengthen Communities of Practice

Discursive Strategies to Build a Community of e-Portfolio Users in a Portfolio-Centric Institution with Rachid Eladlouni

Designing an Online Program for Executive Education

KMWorld Conference

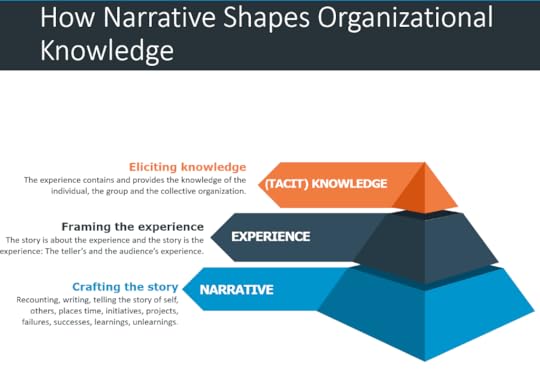

2022 A102: Storytelling as Strategy: How Narrative Shapes Knowledge

Organizations often approach storytelling as a positive and important resource that may be implemented to address complex operational challenges. This perspective usually focuses on the possible benefits narratives may yield and the need to develop resources to support storytelling initiatives. Even though there is an increasing understanding of the power of narratives to capture and share knowledge, there is a wide gap between what we perceive storytelling can do for organizations and the actual mechanisms and practices needed to implement it.

Really effective storytelling in organizations must be conceived, developed, and deployed as a strategy that encompasses multiple actors, so resources are coordinated in a framework supporting an institutional vision for knowledge sharing. Johel explained why storytelling is a strategy, what its basic elements are, and what organizations need to do to develop and implement one that supports organizational goals. Drawing on practical examples, case studies and work by various authors, he took attendees on a practical and interactive journey to get ideas to develop their own storytelling strategies.

Slides

Podcasts

Small Steps, Giant Leaps — Episode 40: Technical Storytelling, Part One

Small Steps, Giant Leaps — Episode 41: Technical Storytelling, Part Two

Videos

From Storytelling Strategist to Storyteller

Designing Knowledge: Capitalizing on the Connections between KM and Design Thinking

https://medium.com/media/2f7b04d2bb01b6b98d96c84a5811232c/href

Books

Knowledge Management and the Practice of Storytelling: The Competencies and Skills Needed for a Successful Implementation

Excerpt

Table of Contents

Introduction

Section I: Conceptual Review

1. Understanding the Concept of Storytelling

2. The Practice of Storytelling as Knowledge Management

3. Literacy, Competencies, and Skills

Section II: Competencies and Skills

4. Rhetorical Competencies and Skills

5. Performative Competencies and Skills

6. Ethnographic Competencies and Skills

Section III: Assessment and Evaluation

7. Assessing Storytelling Competencies and Skills

8. Evaluating the Effectiveness of Storytelling

Section IV: Lessons and Takeaways

9. Lessons Learned

References

Assessment Strategies for Knowledge Organizations with Dean Testa and Denise Bedford

Excerpt

Table of Contents

Section I: Assessment Fundamentals

1. Assessment for Organizations

2. Assessment as a Management Tool

3. Assessment Models and Methods

Section II: Knowledge Management Assessments — Moving Theory to Practice

4. Assessment Models and Methods for Knowledge Organizations

5. Assessing Knowledge Management Capabilities

6. Assessing Knowledge Capital Assets

7. Assessing Knowledge Capacity of the Business

Section III: Knowledge Management Assessments — Taking Action

8. Designing a Knowledge Assessment Strategy

9. Communicating Assessment Results

Section IV: Sustaining Knowledge Management Assessments

10. Getting Ready for Governance — Sustaining an Assessment Strategy

11. Assessment Cultures

Appendix A. Developing a Knowledge Assessment Strategy — A Project Plan

Book Chapters

A Maturity Model for Storytelling Development in Knowledge Organizations

ECKM 2020 — Proceedings of the 21st European Conference on Knowledge Management

ECKM 2020 21st European Conference on Knowledge Management — page 107

Preview

Knowledge Management Education Standards: Developing Practical Guidance — with Denise Bedford, Alexeis Garcia-Perez, and Marion Georgieff

ECKM 2018 — Proceedings of the 19th European Conference on Knowledge Management

ECKM 2018 19th European Conference on Knowledge Management — page 74

Preview

Lifewide, lifelong comprehensive approach to knowledge management education: emerging standards — with Denise Bedford and Marion Georgieff

ECKM 2016 Proceedings — page 62

VINE Journal of Information and Knowledge Management Systems

Preview

November 18, 2022

Knowledge Management Thought Leader 28: Jane Hart

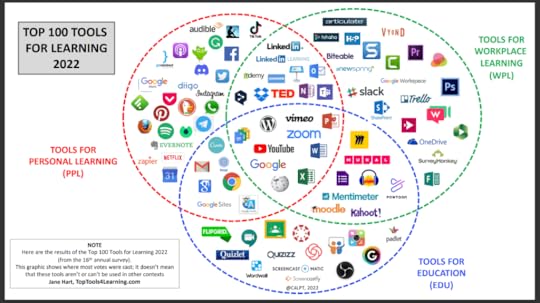

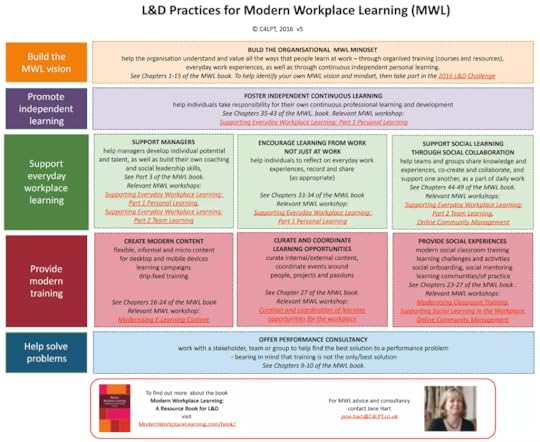

Jane Hart is an independent advisor and consultant based in Hythe, England, who has been helping organizations for over 30 years. She currently focuses on helping to modernize the Learning & Development (L&D) function in order to support learning more broadly and in more relevant ways in the workplace. Jane is the Founder of the Centre for Learning & Performance Technologies and produces the popular annual Top Tools for Learning list. She specializes in modern workplace learning, learning and performance technologies, social media tools, knowledge sharing, and workplace collaboration.

In February 2013, the UK-based Learning & Performance Institute (LPI) presented Jane with the Colin Corder Award for Outstanding Contribution to Learning. In May 2018, the US-based ATD (Association for Talent Development) presented Jane with the Distinguished Contribution to Talent Development award.

Experience

Modern Workplace Learning: Speaker, Writer, and Adviser, 2000 — Present

Centre for Learning & Performance Technologies: Founder, 1997 — Present

Education

City, University of London — MSc, Information Systems & Technology, 1990

King’s College London, U. of London — BA, German & Portuguese, 197

Profiles

LinkedIn

Modern Workplace Learning (MWL)

Centre for Learning & Performance Technologies (C4LPT)

Twitter

Facebook

Profiles in Knowledge

Books

Social Learning Handbook 2014: A practical guide to using social media to work and learn smarter

Modern Workplace Learning 2023: Continuous Learning and Development — What it means for individuals, their manager, and L&D

Videos

YouTube Channel

Other YouTube Videos

Other Content

LinkedIn Posts

Learning in the Modern Workplace Blog

Muck Rack Articles

How to build a culture for knowledge sharing at work

Help the team see the value of sharing — and what it will bring to each one of them individually (the “What’s In it For Me”), as well as what it will bring to the group as a whole (e.g., the ability to capture team knowledge, improve communications, productivity, teamwork and ultimately business performance).

Help the team establish sharing as part of their daily routine — if it is seen as an extra to the daily work it won’t become a habit, so taking the time daily to share will need to become ingrained into everyday work. This might mean, at first, setting some time aside each day to establish the practice.

Help to encourage those who feel concerned about sharing for whatever reasons they might have — This might be due to a lack of confidence or competence, or fear that it will mean loss of power. In some cases, you may need to work one-to-one with an individual to address their personal concerns.

Help the team to “add value” to what they share — one of the easiest ways for individuals to start sharing is to provide links to resources they have come across. However, they need to be aware that they also need to provide some additional context or commentary too, so that others can decide whether it is worth their while clicking through the links.

Help the team to “work out loud” — another key way to help a team share is to encourage them to “work out loud” and talk about their work openly, the experiences they are having, as well as their successes and failures. This is part of the collective reflection process discussed in the previous section. The advantage of doing this is that others in the team have full visibility on what is happening in the team and can easily spot others with expertise or experience in areas of work they might be about to embark on.

Help the team to avoid “over-sharing” — this is sharing for the sake of it and can often happen if too much pressure is put on a team to prove they are “social”. In that case, it may be useful to reassure the group that: (a) they are not being forced to be social and share everything, and (b) they will not be penalized if they don’t contribute all the time.

Finally, when it comes to measuring the success of social sharing, it is all too easy to think that measuring social activity is the way to do this — that is by counting the number of posts, or comments, or likes, and so on — and then reward those who talk the most! But it will be much more important to measure how sharing has brought about improvements in job or team productivity or performance. This means focusing on the value that has been generated rather than the activity itself. Your role will be to help managers identify how they can measure these performance improvements — rather than try to manage the whole process for them!

TJ: Training Journal — October 2019 — Workplace learning expert Jane Hart discusses her life in L&D

PLAYING TO WIN

Jane’s top tips for success:

Stand out in a crowd. Don’t be a lemming and just follow the crowd, make your own mark.

Question the status quo. Don’t just do what’s always been done, think about how you might do things differently.

Try new things. If you fail, learn from your mistakes and try something else; don’t give up.

Be a role model. Don’t just talk about new stuff, show what’s possible.

Give your best. Don’t just get by.

Show enthusiasm. It rubs off on people and is worth an awful lot.

Keep ahead of the game. Don’t just keep up.

Futureproof your own career. No one else is going to do it for you, and the world is moving so fast that you will need to be prepared for your next step.

Learn something new every day. Not just from a course, but something from your daily work or from the web.

Share your knowledge and experiences, don’t hoard them. Knowledge isn’t power; sharing knowledge is power.

Top 100 Tools for Learning 2022 — Results of the 16th Annual Survey

L&D Practices for Modern Workplace Learning

November 11, 2022

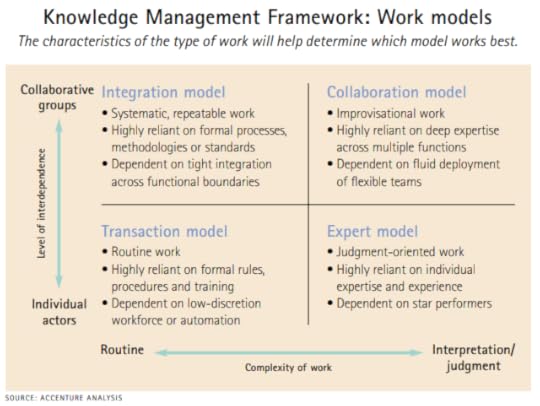

Knowledge Management Thought Leader 27: Jeanne Harris

Until her retirement in 2014, Jeanne led Accenture’s business intelligence, analytics, performance management, knowledge management, and data warehousing consulting practices.

Her research interests include data monetization strategies, cognitive computing, business implications of technological innovation, and the evolving role of data scientists and other analytical talent. Jeanne’s specialties include analytics, cloud computing, decision making, information technology, managing knowledge and attention, and talent management.

Jeanne has written over 100 articles on analytics, big data, cognitive computing, business intelligence, knowledge management, and IT strategy in major business and technology publications, such as Harvard Business Review, MIT Sloan Management Review, and the Financial Times.

She and I have both lectured at Columbia University. We both have undergraduate degrees from Washington University and overlapped there from 1972–1974.

Experience

Columbia University, 2011–Present: Teaches a virtual, graduate-level course “Leading Business Analytics in the Enterprise”

International Institute for Analytics, 2017–2020

Accenture, 1977–2014; last position: Global Managing Director, Information Technology Research at the Accenture Institute for High Performance in Chicago

Education

University of Illinois Urbana-Champaign: MS, Information Science, 1974–1975

Washington University in St. Louis: BA, Art History, English Literature, 1971–1974

Profiles

Columbia University

Wikipedia

Site

LinkedIn

Twitter

Facebook

Profiles in Knowledge

Books

Competing on Analytics: The New Science of Winning with Tom Davenport

Analytics at Work: Smarter Decisions, Better Results with Tom Davenport and Robert Morrison

Videos

Big Results from Big Data

Competing on Analytics

Other Content

LinkedIn Posts

Google Scholar

ResearchGate

HBR

Publications

Innovation at Work: The Relative Advantage of Using Consumer IT in the Workplace

Critical Evaluation: Put Your Analytics into Action

Turning Cognitive Computing into Business Value Today

Getting Value from Your Data Scientists

Starting a Corporate Information Business? Consider These Six Tips

It Takes Teams to Solve the Data Scientist Shortage

Competing on Talent Analytics

Getting Serious About Analytics: Better Insights, Better Decisions, Better Outcomes

Lifetime Achievement Award — The Women Leaders in Consulting, 2009 — Consulting Magazine

Thought leadership plays an increasingly critical role in the ability of a consulting firm to differentiate itself in the marketplace.

Interview with Ajay Ohri

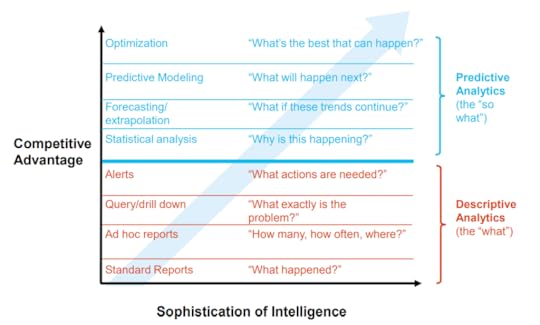

Analytics are an important tool for cutting costs and improving efficiency. Optimization techniques and predictive models can anticipate market shifts and enable companies to move quickly to slash costs and eliminate waste. But there are other reasons analytics are more important than ever:

Manage risk. More precise metrics and risk management models will enable managers to make better decisions, reduce risk and monitor changing business conditions more effectively.

Know what is really working. Rigorous testing and monitoring of metrics can establish whether your actions are really making desired changes in your business or not.

Leverage existing investments (in IT and information) to get more insight, faster execution, and more business value in business processes.

Invest to emerge stronger as business conditions improve. High performers take advantage of downturns to retool, gain insights into new market dynamics and invest so that they are prepared for the upturn. Analytics give executives insight into the dynamics of their business and how shifts influence business performance.

Identify and seize new opportunities for competitive advantage and differentiation.

Analytics at Work: Secrets of Data-Charged Organizations

Knowledge management strategies that create value: A profile of major knowledge management strategies — with Leigh P. Donoghue and Bruce A. Weitzman


November 8, 2022

Improving Gender Balance in KM Thought Leadership Recognition

I have reached the half-way point in my Lucidea’s Lens: Knowledge Management Thought Leaders series. 26 profiles have already been published, and there are 26 more to go. The question came up about why all 26 profiles have featured women.

Why is that, and what about the men? This post answers those questions and provides statistics about gender balance in my KM lists and blog posts.

In my very first blog post in 2006 I included a list of 32 knowledge management thought leaders. Of those in the list, 25 (78%) were men and just 7 (22%) were women, but I didn’t pay any attention to this at the time. When my first book was published in 2007, I expanded this list to 52 and included it in the appendix. It had 44 men (85%) and 8 women (15%), which was even worse, but once again, it went unnoticed.

In 2016 I published a blog post, 100 KM Thought Leaders and 50 KM Consultants, which was later updated to 200 KM Thought Leaders and 100 KM Consultants. These lists have continued to grow over time.

In 2018 Bruce Boyes posted an article in RealKM Magazine, Improving gender equality in knowledge management, in which he wrote:

Today March 8 is International Women’s Day, offering an opportunity to reflect on gender equality in the knowledge management (KM) discipline. Is there a gender imbalance in KM, and if there is, what should we do about it?

While I haven’t been able to locate any robust statistics about the proportion of women in various KM roles, there are some strong indicators that the discipline has a significant gender equality problem. For example, in Stan Garfield’s comprehensive list of KM Thought Leaders, just 16% are women.

Barbara Fillip followed that up with a post in her Insight Mapping blog, Women in Knowledge Management (or any male-dominated field), in which she wrote:

Stan Garfield posted a list of KM Thought Leaders. I don’t think the list is new, but it circulated recently on LinkedIn, which is where I saw it. The great majority of the thought leaders on that list are men. I am re-posting here the names of women who were on that list. The idea is 1) to give the women more visibility with a separate list; 2) to point out that perhaps there are many more women thought leaders in KM who need to be added to this list.

There was also a recent article in RealKM by Bruce Boyes about the issue of gender equity in KM. The article seemed to point to the fact that the field of KM isn’t immune to broader societal inequalities. There is nothing surprising about that and I can’t disagree. Having experienced some gender inequality frustrations of my own. As a woman, I have a good sense of how it has affected my career. I can’t say I have good answers or solutions other than to become more vocal about it AND take responsibility for some of it as well.

At the end of 2017 I started a new series of LinkedIn articles, Profiles in Knowledge: Insights from KM Thought Leaders. At first, I did not consider gender and geographic balance in the people I profiled, but after reading what Bruce and Barbara wrote, I began to think about how I could address that in the series. I replied to Barbara with the following comment:

The issue of possible cultural/geographic bias in the list was raised when I first published it. I encouraged suggested additions, and as a result, the list grew from 50 to 100 to 200. Suggested additions are still welcome.

I became more sensitive to the larger percentage of male thought leaders I had profiled and made a conscious effort to provide better gender balance. Starting in late 2018, one year into the series, I included more women. I also tried to alternate between different regions of the world in which the thought leaders resided.

When I started the Lucidea’s Lens: KM Thought Leaders series earlier this year, I decided to feature 52 women, one each week for a year. I continue to write my Profiles in Knowledge series in which I now feature men each month. By writing about a different woman each week and a different man each month, I am attempting to achieve better balance over time.

Here are details on the current gender breakdowns for the KM Thought Leaders List and the two series. Some of the Lucidea’s Lens series are updated versions of previous Profiles in Knowledge. In the list below, “first-time individual” refers to new profiles of people who were either previously featured in multi-person profiles or who are being featured for the first time.

KM Thought Leaders List: 205 men (78%), 57 women (22%)

Individual Profiles in Knowledge: 46 men (58%), 33 women (42%)

Multi-person Profiles in Knowledge: 48 men (70%), 21 women (30%)

Totals of individual and multi-person Profiles in Knowledge: 94 men (64%), 54 women (36%)

Lucidea’s Lens Thought Leaders Series: 26 women (11 first-time individual) (100%)

Totals of Individual Profiles in Knowledge and Lucidea’s Lens first-time individuals: 46 men (51%), 44 women (49%)

There are many outstanding women who deserve recognition but who are sometimes overshadowed by men, so this is my effort at correcting that. If you know of thought leaders in knowledge management you believe I should include in either series, please write to me at stangarfield@gmail.com so I can consider them.
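For readers who want to recheck the breakdown above, the percentages and the two combined totals can be reproduced with a few lines of Python. This is only a sketch: the counts are copied from the list, while the names `COUNTS`, `gender_split`, and `combine` are illustrative and not from the original post.

```python
# (men, women) counts copied from the breakdown above.
COUNTS = {
    "KM Thought Leaders List": (205, 57),
    "Individual Profiles in Knowledge": (46, 33),
    "Multi-person Profiles in Knowledge": (48, 21),
    "Lucidea's Lens first-time individuals": (0, 11),
}


def gender_split(men, women):
    """Return (men %, women %) rounded to whole percentages."""
    total = men + women
    return round(100 * men / total), round(100 * women / total)


def combine(*pairs):
    """Add several (men, women) pairs together."""
    return tuple(sum(values) for values in zip(*pairs))


# Individual plus multi-person Profiles in Knowledge.
profiles_total = combine(
    COUNTS["Individual Profiles in Knowledge"],
    COUNTS["Multi-person Profiles in Knowledge"],
)
# Individual Profiles in Knowledge plus Lucidea's Lens first-time individuals.
first_time_total = combine(
    COUNTS["Individual Profiles in Knowledge"],
    COUNTS["Lucidea's Lens first-time individuals"],
)

print(profiles_total, gender_split(*profiles_total))      # (94, 54) (64, 36)
print(first_time_total, gender_split(*first_time_total))  # (46, 44) (51, 49)
```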

See also:

Profiles in Knowledge in honor of International Women’s Day

SIKM Leaders Community threads on gender

KM thought leaders — are they REALLY all from the USA? by Nick Milton

Where are the non-US/UK/Australia thought leaders in KM? by Nick Milton

October 28, 2022

Knowledge Management Thought Leader 26: Kirsimarja Blomqvist

Kirsimarja Blomqvist is Professor for Knowledge Management at Lappeenranta University of Technology (LUT) in Lappeenranta, Finland. Her professional interests include trust, knowledge management, collaborative innovation, digitalization, new forms of organizing, and digital platforms. She has published over 180 research articles in international academic journals, books, and conferences.

Kirsimarja serves as Associate Editor for the Journal of Trust Research and on the editorial boards for Industrial Marketing Management and Journal of Organization Design. She is a founding member and board member of the First International Network of Trust Researchers (FINT), a network comprising over 700 trust researchers around the world. She serves on the Academy of Finland Research Council for Culture and Society, the Finnish Cultural Foundation, and Fulbright Finland.

Experience

Lappeenranta University of Technology — Professor for Knowledge Management, 2002 — Present

Stanford University — Visiting Scholar, 2013–2015 and 2011–2012

Telia Sonera — Director, 1999–2007

Education

Turku School of Economics, MSc, International Marketing

LUT University, PhD, Economics & Business Administration

Profiles

LUT University

CV

LinkedIn

Facebook

Twitter

Books

All

Book Chapters

Google Books

Knowledge Management, Arts, and Humanities: Interdisciplinary Approaches and the Benefits of Collaboration edited by Meliha Handzic and Daniela Carlucci — Chapter 2 (with Anna-Maija Nisula): Understanding and Fostering Collective Ideation: An Improvisation-Based Method

Managing Innovation: Understanding and Motivating Crowds edited by Alexander Brem, Joe Tidd, and Tugrul Daim — Chapter 2 (with Miia Kosonen, Chunmei Gan, and Mika Vanhala): User Motivation and Knowledge Sharing in Idea Crowdsourcing

Understanding Trust in Organizations edited by Nicole Gillespie, Ashley Fulmer, and Roy Lewicki — Chapter 13 (with Lisa van der Werff and Sirpa Koskinen): Trust Cues in Artificial Intelligence: A Multi-Level Case Study in a Service Organization

Videos

What is trust in B2B relationships?

Generalized and specific trust

Changing nature of trust in a global environment

How is trust in Finland specific compared to other countries?

Is there an optimal trust?

Challenges of Remote Working #2 — Trust, Surveillance and AI

Other Content

Google Scholar

Semantic Scholar

ResearchGate

Academia

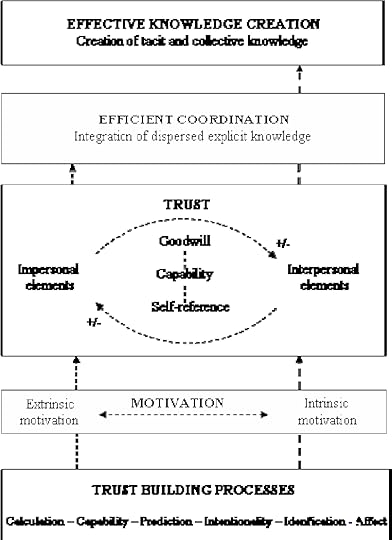

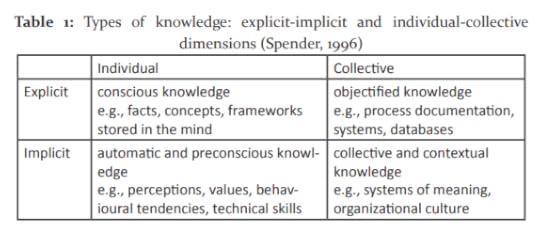

Trust in Knowledge-Based Organizations

Knowledge Transfer in Service-Business Acquisitions with Miia Kosonen

October 22, 2022

Mark Britz: Profiles in Knowledge

This is the 84th article in the Profiles in Knowledge series featuring thought leaders in knowledge management. Mark Britz is a performance strategist. He helps people and organizations do better by first being better connected, building on their strengths, and identifying opportunities to improve workflow. He specializes in social learning, culture, organizational design, collaboration, social business, and Personal Knowledge Management (PKM).

Mark is Director of Event Programming at The Learning Guild and Workplace Performance Advisor at Social By Design Solutions, his own consultancy. He is the co-author of Social by Design: How to create and scale a collaborative company. Mark is a frequent writer, speaker, and commenter. He is based in Syracuse, New York.

Background

Mark solves organizational performance problems. Using an array of formal and social strategies, his efforts have altered conventional beliefs about organizational development and have helped companies improve their execution, agility, and collaborative technology use.

He has led the design and development of solutions involving formal, informal, and social learning. Mark promotes the use of collaborative technologies and user-generated content to extend training, enhance peer-to-peer knowledge sharing, and encourage informal learning channels.

Education

Syracuse University — Master of Science, Inclusive Education

State University of New York College at Oswego — Bachelor of Science, Secondary Education

Profiles

LinkedIn

About

Twitter

Facebook

Content

The Simple Shift: Navigating complexity, simply

Social By Design Solutions: Improve Engagement, Increase Collaboration, Create Continuous Learning

The Learning Guild

LinkedIn Articles

LinkedIn Posts

LinkedIn Documents

Medium

The Learning Guild’s TWIST

Learning Solutions Magazine

Articles

Flippancy: The Biggest Threat to Enterprise Social Media

L&D’s Business Is Not In Driving Social Business

L&D Needs to Get In The Time Saving Business

A Tale of Two Socials

Social Learning Q&A

Open Up!

True social is about openness, authenticity, and honesty.

My take? Social in organizations, like real life relationship building, should take work and using social tech should involve some rigor to build meaningful networks not just get work done. In the long run these deeper activities build stronger, necessary skills. If you already have closed groups now in your ESN, I’d argue that the act of removing them would be a catalytic mechanism that can have far reaching, unforeseen and positive impacts.

Yes, it may be uncomfortable but if we want the openness that we crave and achieve the innovation and creativity we need then just say no and better support people in finding their way in wall-less garden.

Conversations Over Clicks

Let’s be honest, everyone gets moved a little when someone likes or shares their content online, but this is only for a moment and then the emotion is gone at the speed of the Internet. Ask yourself, what have you “liked” or shared of someone else’s content that you actually remember? Or more importantly led you to think or behave differently? I’d argue that when you put fingers to the keyboard and type a response to engage in additional online dialog it is memorable. It’s memorable and closer to behavior change because it’s done often with careful thought and a more sustained emotional connection to the individual(s) and the content.

So rather than count vanity metrics, we aim to share to start conversations. Drawing on a mantra of mine that “knowledge doesn’t exist within us but between us, in our conversations,” we look to meaningful dialog as being much closer to behavior change than the simple, fleeting click of an icon. We know full well too that we will have far fewer conversations than likes but this is about quality and not quantity.

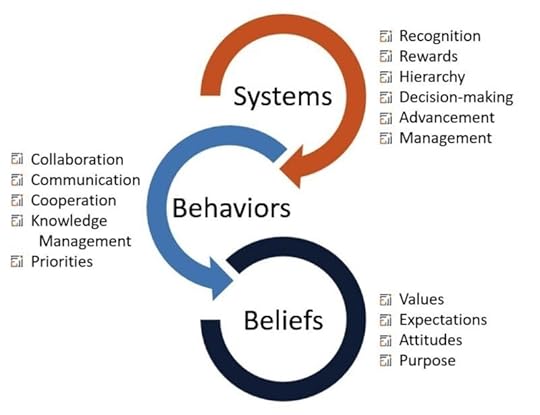

The Epitome of Social By Design

Context is critical for social.

The goal of social is to reduce friction.

Social starts with people not technology.

Conversation creates movement.

Strong social cohesion is built in layers.

Increasing conversation that is the key indicator of progress.

Social Learning at Work: Quick Start Guide

Practice Transparency and Openness: Show Your Work/ Work Out Loud. Begin by ensuring that all important but not sensitive work is accessible and encourage observation and input by all levels of the organization.

Reward & Recognize: Be visible in thanking, acknowledging and rewarding sharing and cooperative behaviors. Be diligent in encouraging open sharing of ideas, resources and collaboration. Recognize and praise group inputs and outcomes. Model exactly what you want to see. Encourage leaders to model exactly what you want to see.

Encourage Curation over Creation: Share resources with the added “why” of its personal value to you — add context. Ensure access to outside information and to internal channels to share. Identify models of proper vetting and context adding to aid in the movement of best of kind resources and information quickly through the organization.

Formalize Social Technology: Use traditional communication channels (email, meetings) to move people to increased participation on social platforms. Create a simple structure for groups and keep them open vs. closed or private. Have employees complete profiles with an emphasis on skills and interests over titles. Nudge large group emails to be posted on social platforms. Ask sincere questions, to which the answers will help and inform your work.

Systems, Behaviors, Beliefs

Let’s focus a little LESS on working to change a leader’s own social behaviors and MORE on helping leaders to change the systems that hinder organization-wide social behaviors.

A healthy organizational social structure

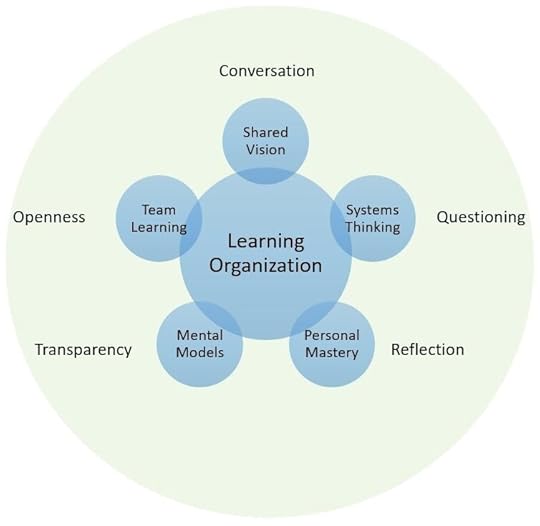

At its most basic element, learning is about communication. So to be truly realized, (Senge’s 5 Disciplines of) a Learning Organization requires a healthy organizational social structure.

The Problem with Collaboration

Harold Jarche succinctly stated that “Collaboration is working together for a common objective”. This can be solving a problem, building something new or different and it is very, very different than cooperating which he goes on to state is “sharing freely without any expectation of reciprocation or reward.” When we stop and consider how much of our interactions at work are really cooperative rather than collaborative, we might want to better call it what it is. And when someone talks of a trusting relationship or being part of a community, scratch that surface a bit — all that glitters isn’t gold.

Comments

Competency Models — HR & Understanding Work in the Network Era by Jon Husband

Mark’s comment: Jon, don’t you think this will always be fluctuating? Social being about the dynamic condition of being human and networks being driven by technology (always changing). Competency Models are typically explicit — not that it’s a bad thing but it seems that in a world of (borrowing from Harold Jarche) “perpetual beta” the cycle of explicit-tacit-explicit is rapid, the strength for individuals then doesn’t lie in aligning to a model but the ability to learn-reflect-adjust-learn. Then again, you do note the term “generic” when describing these models and that does allow for some flexibility. You stated too that “I believe it is still too early, in 2013, to deeply understand what effective and successful performance in a networked environment looks like” — I would guess that the real measure is not about performance IN the network but more the performance because of it? Excuse my ramble — you’ve given me much to ponder (as usual) and for that — thanks!

Jon’s reply: “I would guess that the real measure is not about performance IN the network but more the performance because of it?” I think this is a very good point. Thanks for expressing it so clearly, Mark!

Why writing matters by Euan Semple

Mark’s comment: Until I started writing (blogs, micro-blogging) not as often as I’d like of course, I hadn’t truly experienced the immediacy of this public reflective exercise and how powerful it is. The thoughts once written invite global commentary which may or may not be valuable. The real value is in how, once written, the ideas, beliefs, and practices noted reverberate internally.

A simpler approach to KM by Harold Jarche

Mark’s comment: “Is KM about collecting data or is it really about sense-making” — An excellent question to start any executive dialog on this topic. Sadly technical systems vs. human systems have dominated this conversation for far too long so it is quite cemented for many. Your 3 points are excellent and success ultimately, and once again, rests on trust, doesn’t it?

Harold’s reply: That’s what I firmly believe, based on several decades of experience, Mark, but nobody is beating a path to my door to do this.

Articles by Others

Introducing The eLearning Guild’s Newest Team Member by David Kelly

MR Digital & Learning Interview by Myles Runham

Regulating surprises by Dennis Pearce

Social By Design: A Book Review And Interview by Mitch Mitchell

The ITA Jay Cross Memorial Award for 2018 by Clark Quinn

6 Highly Important Benefits Of Knowledge Management You Need to Know by Dragoș Bulugean

The Internet Time Alliance Jay Cross Memorial Award for 2018 by Harold Jarche

The third annual Internet Time Alliance Jay Cross Memorial Award for 2018 was presented to Mark Britz. Mark has experience both inside and outside organizations and has focused on improving workplace performance. He questions conventional beliefs about organizational development and has championed better ways to work and learn in the emerging networked workplace. Mark is currently at the Learning Guild as well as a Service Partner with the 70:20:10 Institute. He was an early adopter of using social media for onboarding and has long been active on social media, contributing to the global conversation on improving workplace learning.

Culture, the most powerful presence in your organization, is only learned socially & informally. Social Media spreads your culture quickly — for better or worse.

Mark wrote about learning at work:

If we want real learning in organizations we must get back to the core of how and where people learn, and what moves us most. Simply, much learning happens in our work and with others. Organizations/leadership would do well then to have more strategic conversations about how to create more space, more opportunity, and more connection rather than more courses, classes and content.

Conferences

DevLearn Conference & Expo

Learning 2022

Learning Solutions Conference & Expo 2016

Presentations

SlideShare

Re-image Organizational Learning

Why blogging still matters

Podcasts

A new era of learning and development — People at Work

Social Learning at Work: A Quick Start Guide — Learning Uncut

Where can we find inspiration for L&D? — The Mind Tools L&D Podcast

Organizational design: Should L&D fight the system? — The Mind Tools L&D Podcast

Social by Design vs Social by Chance — The Mind Tools L&D Podcast

Social by Design: Revisited — The Mind Tools L&D Podcast

Videos

YouTube

Conversation with Jane Hart

https://medium.com/media/2621fe6c4a3859be6c646e9c13d4d513/href

Interviewed by Guy Wallace

https://medium.com/media/1ccc2558ad433fa3710df5739afb08de/href

Social practices, principles and platforms

https://medium.com/media/1e35ef702eccd5c6298c5c5c034557fa/href

Books

Social by Design: How to create and scale a collaborative company with James Tyer

Revolutionize Learning & Development: Performance and Innovation Strategy for the Information Age by Clark Quinn — Case Study in Chapter 8: Systems Made Simple


October 21, 2022

Knowledge Management Thought Leader 25 — Mary Ellen Bates

Mary Ellen Bates is the founder and principal of Bates Information Services Inc. After 15 years managing corporate information centers and specialized libraries, she started her business in 1991 with the intent of providing high-end research and analysis services to strategic decision-makers.

She also writes, teaches and speaks frequently about issues in the information industry. She has written seven books on various aspects of the information industry, as well as a number of white papers and ebooks sponsored by information companies for lead generation.

Since 2006, she has offered coaching services for both new and long-time solopreneurs, after having served as an informal mentor for many fellow infopreneurs. Mary Ellen is a self-described Info-entrepreneur, enabler of better business decisions, and strategic coach for solopreneurs.

Mary Ellen believes in the value of community and the importance of giving back to the people and organizations that have supported her over the years. She twice served as president of the Association of Independent Information Professionals and has been on the board of directors of the Special Libraries Association. Her awards include AIIP’s Sue Rugge Memorial Award for mentoring (twice) and the President’s Award, the John Jacob Astor Award in Library and Information Science, and the Special Libraries Association’s Professional Award. She is a Fellow of SLA.

Mary Ellen earned a Master’s in Library and Information Science from the University of California at Berkeley and a Bachelor of Arts in Philosophy from the University of California at Santa Barbara. She lives in the foothills of the Rocky Mountains near Boulder, Colorado.

Experience

Principal, Bates Information Services, 1991 — Present

Columnist, Online Searcher Magazine, 2001 — Present

Quote: