Daniel Miessler's Blog, page 107

December 22, 2017

A List of Machine Learning (ab)Use Cases

To benefit from the work I put into my typography, read natively at: A List of Machine Learning (ab)Use Cases.

—

I keep thinking of interesting use cases for Machine Learning at various stages of its development (near and more distant future), and I figured I’d capture them.

I’ll also include common ones and interesting ones I’ve heard elsewhere.

Extract faces from video, and tell you 1) if they’re known criminals, 2) if they’re agitated in some way that could indicate a future problem, 3) what their current mood is, 4) if they’re gay or straight, 5) how smart they’re likely to be. All these things will be compiled into various predictions of behavior.

In addition to facial recognition, analysis will be done on the entire presentation of a human as they move through public places. This means their clothing, their body language, their gait, their facial expressions, any voice data that can be consumed and parsed for emotion, etc. All these will further enhance the prediction algorithms.

Realtime translation of what’s being said by someone in another culture or language (or even your own), with the option to adjust how much it interprets other signals like body language, tone, facial expression, etc. to augment the translation (Saša Zdjelar).

Public conversations will be parsed in realtime in public places, and sensitive content will be extracted and tied to individuals for the purposes of preventing crime. This will start out as terrorism prevention only, but will eventually make it down to regular crime as well. Not only will this be simple parsing of conversations via NLP and voice recognition, but analysis of the voice and tone will be combined with the content (and the person) to identify possible threat actors.

Analyze a large population of people in a popular public place and determine what the current fashion is at any given moment, and then based on how it’s changing predict what it will be soon (Benedict Evans).

Many of these might be disturbing for whatever reason. I’m capturing what’s inevitably going to be possible and implemented, not the moral implications thereof.

Analyze an industrial process that involves humans (or not) and identify opportunities for improvement (Benedict Evans).

Listen to the sounds in a household and recommend actions based on hidden problems. If there is tension in the air between two people (they’re stressed because of work, or they’ve had a fight), recommend a de-escalation technique to relax both sides.

Notice when someone is getting tired while reading or listening to something (based on observing their attention) and make adjustments to the content they’re being shown, e.g., make the text larger, add color to key points, slow the audio down or make it louder, or add pitch variation or an additional narrator voice, etc.

Read everything produced on the internet and surface great content that would otherwise have gone unnoticed. Basically, Crawlers + ML -> AI Reddit. You train the algorithms on content that surfaced organically and that everyone loved, and then find content with the same je ne sais quoi on random blogs and podcasts with no followers. The result is the meritocratization of content.

Listen to speech patterns and identify early signs of dementia and other cognitive diseases.

Observe students while they read, write, play, and take exams, and then build them a custom curriculum based on the best ways for them to learn.

Watch a farm’s crop from above using drone footage and custom-build a fertilization and pesticide treatment plan.

Using 360-degree sensors mounted on every person, monitor one’s surroundings 24/7, and alert the wearer of dangers around them, e.g., someone following with the intent to mug or attack them.

Using 360-degree sensors mounted on every person, monitor one’s surroundings 24/7, and alert the wearer when there is an opportunity for serendipity nearby, e.g., meeting a new lover, or making a business contact, based on shared interests or mutual acquaintances.

Parse all your activity for a given year, including what you read, watched, enjoyed, etc., and then build a customized Serendipity Calendar that incorporates the types of events you’d love, restaurants you should try, people you should meet, shows to watch, etc. They’re all automatically placed on your calendar, and you just trust the system, do the events, and marvel at how much delight you get from it.

Using 360-degree sensors mounted on every person, monitor all interactions with people and tell the wearer when the people around them are being deceitful, shy, flirtatious, or careful. When combined with A/MR this will allow for skins showing these various attributes in the regular field of vision.

Play the perfect song at the perfect moment, based on the history of everyone present and on the key pauses and moments where it would have the most impact.

Optimize recommended route choices based on whether you’re in a hurry or have time to enjoy yourself (Saša Zdjelar).

Monitor the world’s telescope arrays (including amateurs’) for asteroids that could pose a threat to Earth. It’ll be like SETI, but for asteroid detection.

Review accounting records and detect fraud and abuse.

Monitor web traffic logs and detect which connections are bots, which are users attempting to do something fraudulent or malicious, and which are legitimate users.

Watch a workplace and find the correlations between healthy, productive employees and their behaviors vs. lethargic, underperforming employees and their behaviors. Then recommend policy changes to make the company more productive.

Monitor a company’s constantly updated data lake and detect malicious insiders, malicious external attackers, insecure configurations, dangerous business workflows, improper security controls around sensitive data, etc., and then recommend the controls that (based on up-to-date data for your type of organization) would reduce the most risk. Essentially, CISO as an Algorithm.

I wrote about many of these in my book titled The Real Internet of Things.

The unifying concept is the ability to use trillions of eyes and ears to constantly observe our universe in a way that humans never can, and then to find the patterns in that data and use it to improve human lives.

The primary themes here are ultimately oriented around human flourishing, and they are:

Detecting threats before they carry out their attacks.

Finding opportunities to improve or optimize a process.

Identifying what makes humans happy, and finding ways to nudge us in that direction through recommendations.

As many of the examples show, however, the technology can and will be abused. The tech itself will help achieve goals, but it’s up to us to make sure we’re trying to achieve the right ones.

—

I spend 5-20 hours a week collecting and curating content for the site. If you're the generous type and can afford fancy coffee whenever you want, please consider becoming a member at just $10/month.

Stay curious,

Daniel


December 20, 2017

./getawspublicips.sh: Know the Public AWS IPs You Have Facing the Internet


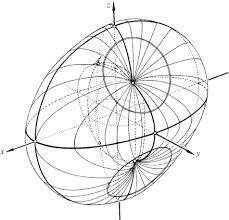

US MongoDB servers via Shodan

The most important challenge facing the companies I work with is knowing what they have facing the internet.

There are lots of other ways to be insecure, of course. Bad endpoint security and security hygiene will get a company hacked by phishing. And bad AppSec will get you hacked through the apps that you need to keep online.

The biggest problem I see however—by a wide margin—is companies not having any idea what ports, protocols, and applications they are presenting to the world.

Their networks are too large, they change too often, and their security teams are too busy filling out security questionnaires and deploying new security solutions to watch their perimeter in a reliable way. Moving to the cloud has made this much worse because developers are standing up boxes constantly, and hardly anyone is actually tracking what’s live at any given time.

AWS is by far the biggest cloud provider for my customers, but I have similar commands for the other providers.

That’s why I created getawspublicips.sh, which simply tells you—at any given moment—what the public IPs are for a given AWS account. It sounds like a small thing, but it isn’t. And if you’ve read this far you probably agree. Here’s the code and how to set it up.

1. Install the aws command

AWS has a cli command called aws, which you can install like so:

pip install awscli

Learn about the aws command syntax here.

2. Configure the aws command

Then you have to set it up with your information.

aws configure

You’ll need to enter your access key ID and secret access key, your default region (e.g., us-west-1), and your preferred output format (e.g., json).

3. getawspublicips.sh

Now that the aws command will work for you, you can do the following:

The pipeline below parses the JSON output line by line, so it asks for that format explicitly:

aws ec2 describe-instances --output json | grep -i publicipaddress | awk '{ print $2 }' | cut -d '"' -f2

The output will be a simple list of the public IPs of your EC2 instances in the configured region.

54.8x.49.101

54.81.50.x02

54.x2.51.103

54.83.52.1x4
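If you want to run that parsing step on saved output, or try it without touching an AWS account, it can be wrapped in a shell function. This is a minimal sketch rather than the original script: the function name `extract_public_ips` is hypothetical, it assumes the CLI's default pretty-printed JSON (one key per line), and it only sees instances in the region you query, since EC2 resources are regional.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the grep/awk/cut pipeline above.
# Reads `aws ec2 describe-instances --output json` output on stdin and prints
# one public IP per line. Assumes pretty-printed JSON, where the matching
# lines look like:   "PublicIpAddress": "54.1.2.3",
extract_public_ips() {
  grep -i '"publicipaddress"' \
    | awk '{ print $2 }' \
    | cut -d '"' -f2 \
    | sort -u   # sorted and de-duplicated, which makes run-to-run diffs easy
}

# Live usage (requires credentials set up with `aws configure`):
#   aws ec2 describe-instances --output json | extract_public_ips
#
# Alternative that skips text parsing, using the CLI's JMESPath --query option:
#   aws ec2 describe-instances \
#     --query 'Reservations[].Instances[].PublicIpAddress' --output text
```

Because the output is sorted, two runs can be compared directly with standard tools.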

Of course, knowing what those IPs are is just the beginning. You’ll then need a process for ensuring that they’re not presenting a port, protocol, or application that you didn’t know about and/or haven’t secured.

You can do that with lots of tools, e.g., masscan, nmap (with NSE functionality), arachni, et al.

But the most important bit is at least knowing what your attack surface is, and keeping that list updated on a regular basis. I recommend running this perhaps hourly, and feeding that into your monitoring and alert/response framework for when something nasty pops up.
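Keeping the list updated mostly comes down to noticing what changed between runs. One way to sketch that, assuming each hourly run saves its IPs to a file, is to compare the new list with the previous one and alert only on additions. The function `new_public_ips` and the `/var/tmp` file names below are hypothetical; the comparison itself is just `sort` and `comm`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print IPs present in the current list but not in the
# previous one, i.e. newly exposed addresses worth investigating.
new_public_ips() {
  local previous="$1" current="$2"
  # First run: no previous list yet, so treat it as empty (everything is new)
  [[ -f "$previous" ]] || previous=/dev/null
  # comm needs sorted input; -13 suppresses lines unique to the previous list
  # and lines common to both, leaving only lines unique to the current list
  comm -13 <(sort -u "$previous") <(sort -u "$current")
}

# Example hourly wiring (commented out so the sketch runs without AWS access):
#   aws ec2 describe-instances --output json | grep -i publicipaddress \
#     | awk '{ print $2 }' | cut -d '"' -f2 > /var/tmp/public-ips.new
#   new_public_ips /var/tmp/public-ips.old /var/tmp/public-ips.new   # pipe into your alerting
#   mv /var/tmp/public-ips.new /var/tmp/public-ips.old
```

The new addresses are a natural input for the scanning step, e.g. nmap's `-iL` option reads its targets from a file.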

Hope this helps someone.


December 18, 2017

Unsupervised Learning: No. 105


This is episode No. 105 of Unsupervised Learning, a weekly show where I curate 5-20 hours of reading in infosec, technology, and humans into a 30-minute summary. The goal is to catch you up on current events, tell you about the best content from the week, and hopefully give you something to think about as well…

This week’s topics: TRITON, 1.4 billion credentials, HP keyloggers, iTunes Bitcoin laundering, removing credit card signatures, technology news, human news, discovery, notes, recommendations, and the aphorism of the week…


Security news

FireEye researchers have identified a nasty new ICS attack framework called TRITON. It provides easy-to-use APIs for sending control commands to Triconex SIS controllers over the proprietary TriStation protocol. The researchers believe it was developed by a state actor but didn't have enough information to say which one. Read the full analysis here.

1.4 billion email addresses and clear-text credentials were discovered on a dark web forum. The data appears to be a recently updated compilation of 252 previous breaches.

HP seems to have keylogger issues. This is the second time one has been discovered in their products this year.

Criminals are laundering Bitcoins by uploading and buying their own music on iTunes, which gives them a legitimate check from Apple.

American Express, Mastercard, and Discover are eliminating the signature requirement for purchases in April of 2018. We seem to be missing a company that rhymes with Visa, but I imagine they'll come along too.

Patching: Microsoft

Technology news

The FCC has repealed Obama's 2015 Net Neutrality rules, which most people in the tech world seem to think is the same thing as repealing Net Neutrality. I see it a bit differently, which I captured in my piece titled, Disambiguation of Net Neutrality.

Microsoft is putting an official SSH client into Windows 10.

You can do SSO with AWS now.

Apple bought Shazam.

Human News

A number of former Facebook employees are publicly saying that Facebook is bad for society, specifically when people overuse it.

22% of students with student loan debt are in default, and the rate is double what it was just four years ago.

Google used AI to find two new exoplanets. This is an example of why I think AI doesn't need to be better than humans at finding things to be useful. Machine Learning's main advantage is the ability to look, unblinkingly, with a trillion eyes that never get tired.

A Navy pilot describes an encounter with an aircraft that “had no plumes, wings, or rotors, and it outran our F-18s.” His take? “I want to fly one.” These are the types of stories that led the Pentagon to start a secretive UFO investigation program.

AI is coming for many types of lawyer jobs sooner rather than later. It's all about having too much data for humans to review, whereas AI never gets tired.

MeerKAT is an array of 64 dishes spread across one kilometer in Africa that will be orders of magnitude more sensitive than our most powerful radio telescopes. It goes live in 2018.

Solo Karaoke is getting super popular in Japan.

Ideas

The Biggest Advantage in Machine Learning Will Come From Superior Coverage, Not Superior Analysis — My essay on how it doesn't matter if humans are better than algorithms for doing analysis if they can't possibly look at the data that needs to be analyzed.

Disambiguation of Net Neutrality — Why I believe most people are misguided on the Net Neutrality issue because it's a lot more complex than it appears. If your mind is 100% made up on this issue—or if it's not—I recommend you read this one.

I wonder if the future of malls is to become physical instantiations of things that are primarily online. Education, healthcare, and trying out products that are ultimately bought and paid for online. So 95% of everything is done online, but the one component that can't be reproduced—physical interaction—is done at the mall. Health clinics. Watching free lectures from top universities. Group video gaming. Trying on clothes. Returning clothes. Etc.

IoT Benefits and Personal Privacy Are Inversely Correlated — Pick one. The more information you withhold the worse your experience will be. And the more you give, and the better your experience, the less privacy you will have.

Aristotle said there were three types of friendship: those based on utility, those based on pleasure, and those based on mutual appreciation. He said the third kind is the best.

The Amazon Machine — Amazon is a company that makes other Amazons, and that's the thing that makes it so formidable.

Discovery

Ten Year Futures, by Benedict Evans

A Visualization of The World's Most Common and Contagious MythConceptions, by Information is Beautiful

Lincoln's Lyceum Address

How to Get Notified When Your Ubuntu Boxes Need Security Updates — I finally wrote this tutorial.

A visually compelling presentation on how Millennials are both screwed and being blamed for their situation.

The New York Times is the last major newspaper to still have a books section. The next time you're looking for something to read, consider browsing their best-seller list. And here's Amazon's Best Books of 2017 list as well.

How to break a CAPTCHA in 15 minutes using Machine Learning

A visualization of the movies with the biggest gap between critic reviews and fan reviews. The Last Jedi is way up there, with a 37% gap.

REST is the new SOAP

Math as Code — A cheat sheet for people good at code but bad at math.

Basic Network Pivoting Techniques — Ncat, socat, ssh, socks, Metasploit, etc.

PasteHunter — Analyzing paste data using ELK.

Notes

I wrote a review of The Last Jedi. It's full of spoilers and bad language, so be warned on both counts. This is me being young and emotional, so if you don't like that look on me you might want to pass on this one.

I've read around 10 books since my last book update, and I'm currently finishing What to Think About Machines That Think, and just started Principles, by Ray Dalio. I also finished Player Piano, by Kurt Vonnegut.

Recommendations

December 17, 2017

How to Get Notified When Your Ubuntu Box Needs Security Updates


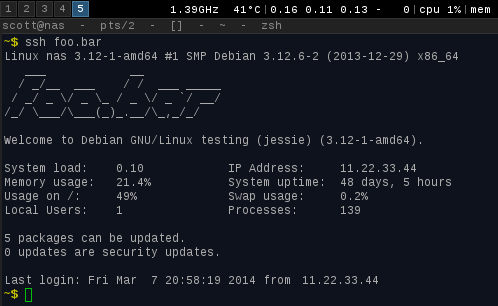

If you’ve been messing with Linux for a while you’ve no doubt seen screens like the above a million times. But for me it’s never been enough. If you have lots of boxes it’s possible to forget about them, so finding out that you have security updates when you log in isn’t proactive enough.

So let me save you 37 Googles. Here’s how to find out if your Ubuntu box needs security updates applied.

# Install update-notifier to get the apt-check script used below

apt install update-notifier

# Check to see how many security updates you have

/usr/lib/update-notifier/apt-check 2>&1 | cut -d ';' -f 2

# Install ssmtp so you can send email from the command line (configure your mail relay in /etc/ssmtp/ssmtp.conf)

apt install ssmtp

# Then create a script in /etc/cron.hourly to check if there are updates available

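Don’t forget to make the script executable (chmod +x). Also give it a name without a dot, such as secupdates rather than secupdates.sh, because run-parts, which cron uses to run /etc/cron.hourly, skips file names containing dots.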

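#!/bin/bash
# apt-check prints "<updates>;<security updates>" to stderr, so redirect it and keep field 2
SECUPDATES=$(/usr/lib/update-notifier/apt-check 2>&1 | cut -d ';' -f 2)
HOST=$(cat /etc/hostname)
if (( SECUPDATES > 0 )); then
  # ssmtp reads the whole message, headers included, from stdin
  printf 'To: you@email.domain\nSubject: Updates on %s\n\nThere are %s security updates on %s.\n' "$HOST" "$SECUPDATES" "$HOST" | ssmtp you@email.domain
fi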

Now you will receive an email whenever your Ubuntu box has security updates available!
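One weakness of a check like this is that if apt-check fails, the command substitution captures the error text instead of a number (cut passes through lines that contain no `;`), so the arithmetic test errors out rather than sending anything. A slightly more defensive sketch validates the count first. It assumes apt-check reports `<updates>;<security updates>`, which is what the `cut` above relies on; the helper names `security_count` and `build_message` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the security-update count from apt-check's
# "<updates>;<security updates>" output. Returns non-zero for anything that is
# not a plain number, such as an error message from a failed apt-check.
security_count() {
  local raw="$1"
  local count="${raw#*;}"   # drop everything up to and including the first ';'
  if [[ "$raw" == *";"* && "$count" =~ ^[0-9]+$ ]]; then
    printf '%s\n' "$count"
  else
    return 1
  fi
}

# Hypothetical helper: build the message ssmtp expects on stdin
# (headers, a blank line, then the body). Nothing here sends mail.
build_message() {
  local to="$1" host="$2" count="$3"
  printf 'To: %s\nSubject: Updates on %s\n\nThere are %s security updates on %s.\n' \
    "$to" "$host" "$count" "$host"
}

# Example wiring inside the cron script (commented out so the sketch runs anywhere):
#   raw=$(/usr/lib/update-notifier/apt-check 2>&1)
#   if count=$(security_count "$raw") && (( count > 0 )); then
#     build_message you@email.domain "$(cat /etc/hostname)" "$count" | ssmtp you@email.domain
#   fi
```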


December 16, 2017

My Review of The Last Jedi


Leia Man of Steel

I’m going to try my hand at doing a film review. It’ll be like the other ones you’ve read from professionals, except mine will have lots of cussing. I was going to be hyperbolic about my reaction, but I decided to control my emotions and be logical.

The Last Jedi was the worst Star Wars movie ever made. It’s worse than the prequels. And it’s the second worst thing to happen to planet Earth behind Trump being elected.

See? I’m completely capable of restraint.

Spoilers below.

The prequels were bad because they crossed the line a number of times with foolishness and humor. They had characters that were designed to be funny, but weren’t. They had too much of a good thing at times, just to pander, and in general they lacked the seriousness that Star Wars needs and deserves.

Rian Johnson didn’t just cross that line. He skull fucked the line.

The first scene opens with a comedy skit exactly like you’d see on Saturday Night Live. It’s kind of funny, actually. But then you realize it’s in Star Wars, and you throw up in your mouth.

If you’re in the middle of a big scene and someone starts cracking jokes, that person needs to die. Or at least get scolded like when the light guy walked through Christian Bale’s Terminator set. But no, it was a complete joke.

Ha ha—funny—Star Wars is funny.

Snoke gets this giant buildup and is killed as an afterthought. Luke dies as an afterthought.

And in the middle we have Leia flying through space (which she somehow survives without any sort of suit) like fucking Superman.

We have the most powerful weapon in the universe turn out to be an engine with mass attached to it. Who knew that you could just point a giant ship at an entire enemy fleet and jump to light speed in order to win. Oh, and it turns out you could have just done it before you watched all your friends die.

So I guess we can just build giant empty ships now full of rocks, and put a hyperdrive on them and point them at enemy planets. Done and done. Fuck me.

Oh, and you know what’s super fun? Dropping bombs in space—where there’s no gravity.

Rian Johnson will do absolutely fucking anything to get an “ooh” and an “ah”, apparently not giving a shit about the fact that he just cracked the integrity of the universe (and our childhoods).

The casino scene was flippant jack-off-ery. It was worse than a Jar Jar Binks porno filmed using only Lego.

Then you have this perfectly timed pseudo-stressful climax of being able to remove the tracking device just in time…except after seeing this happen once, twice, three times in all his other films, it just looks trite and shallow. Like the fourth time you see a used car salesman smile.

I’d keep going with examples, but I’ve already thrown up on myself.

The bottom line is that Star Wars is serious. It’s a fucking opera, not a comedy. Fuck him, and fuck Star Wars, since he’s doing the next one as well.

It’s dead to me until we get a remake that has the gravity it deserves.

I guess I’m waiting for Game of Thrones in 2027 or whatever.

(kicks something nearby and hurts foot)


December 14, 2017

Disambiguation of Net Neutrality


People mean many different things when they use the term Net Neutrality, but I think what they generally reduce to is, “Maintaining an internet that is fair and useful for all users.”

That’s hard to disagree with, and I certainly don’t. If that’s what it means, then I’m 100% for it.

But some people think Net Neutrality means that the internet shouldn’t be different for people who have little money and people who have lots of money. Or that internet providers shouldn’t be able to make partnerships with other companies to provide bundled services. I have never seen any other business that follows these rules, so I’m not sure why we’d expect it on the internet.

When you buy a phone plan, you can buy a pre-paid plan, a medium plan, a family plan, or, if you have lots of money, an all-you-can-eat plan. Those are offering tiers, and they’re products that the provider sells.

Coming up with new ones is what they’re supposed to do as a business. And if they decide to partner with Volvo or Spotify to help make their (and their partner’s) service better, we don’t see that as some kind of attack on our freedom.

And it’s the same for lots of businesses. You can buy yearly plans for gym memberships, or lifetime plans. You can buy VIP tickets at concerts. You can get the base model of your car or you can get a car with all the options.

The reason this is all ok is that we have the option to buy something else instead.

To me there are two core issues with Net Neutrality that need to be protected:

Basic access rights.

Fostering competition.

For Basic Access Rights, being able to Google things and get instant (unfiltered) answers is arguably a human right at this point. Same with using Wikipedia, or any of the many other core internet infrastructure sites. Having all citizens have this level of access to the internet should be the goal of any country, and if any private enterprise (or government regulation) interferes with that goal it should be opposed.

And that brings us to Fostering Competition. The reason it’s ok for businesses to offer lots of tiers and have random partnerships with different companies is because they’re doing it to be innovative, to make more money by offering value to their customers, and if their customers don’t like it they can just move to a competitor.

That’s a beautiful thing. Both that they are free to try lots of different things, and that you can punish them for it by leaving.

The problem is when you have monopolies, or monopoly-like services, that 1) are essential for human flourishing, 2) don’t have (m)any competitors to enable choice, and 3) attempt to force people to use certain sub-optimal options that benefit them and not the user.

That is the combination we need to avoid, and the fulcrum of the entire thing is consumer choice.

I think business offerings and tiers are red herrings that take our eyes off the fact that big companies are using monopolistic practices to squash smaller competitors, and overzealous government regulation is keeping the bar too high for new entrants.

The other issue is that government regulation can actually cause harm when done incorrectly. There are often unwanted externalities that do more harm than good. The 2008 crisis was fundamentally enabled by a positive desire to get more low-income families to become homeowners, for example. But when the government told the financial industry that they needed to start making sub-prime loans, the unscrupulous stepped in, and we saw what happened. And it all started with a government play that probably shouldn’t have happened.

I’m not an expert in small business regulation, but I think we probably have the same situation with enabling people to quickly go into business doing x, y, or z. If you have to fill out 741 forms, carry 23 kinds of insurance, get inspected constantly by 17 different organizations, etc.—you’re basically setting bars that only rich people can reach. So, just like the housing crisis, you’re trying to do a good thing by protecting people from harm, but you’re actually stopping everyday people from becoming business owners and competing with the big companies.

And here’s the kicker—the big companies spend TONS of money lobbying for “safety” regulations that keep the new companies out of their markets. So there are thousands of regulations that hurt potential new business owners—and ultimately the people—all under the guise of doing the right thing.

Bottom line here is that it’s possible for regulation to go wrong—both in an evil way controlled by lobbyists and corrupt government, and by well-meaning legislation that creates unanticipated externalities.

Summary

So what’s the point here?

Net Neutrality shouldn’t mean the same internet for everyone. That’s not how anything works, and it’s not how the internet should work either.

Net Neutrality should absolutely mean fair and useful internet for everyone, meaning we must guarantee affordable access to core internet services. And every government should be protecting that right for its citizens.

Government regulation can both help and harm, and there’s no single regulation that “is Net Neutrality”. Net Neutrality is a concept, not a specific law.

Companies should be free to innovate, bundle, and offer all types of services to different types of customers. It’s everywhere in the non-internet world, and there’s no reason it should be different for internet companies.

The key thing that makes it ok for companies to innovate and offer exclusive deals and tiers for different customers and partners is that you can always just use someone else. When you offer inferior service to customers that don’t have the option to leave, you’ve broken the entire model.

Many people are fiercely engaged in this debate without realizing the multiple (and often seemingly conflicted) realities that are in play. It’s a lot more complex than many would have you believe.

Take the time to think through the concepts yourself.

—

I spend 5-20 hours a week collecting and curating content for the site. If you're the generous type and can afford fancy coffee whenever you want, please consider becoming a member at just $10/month.

Stay curious,

Daniel

The Biggest Advantage in Machine Learning Will Come From Superior Coverage, Not Superior Analysis

I think there’s confusion around two distinct and practical benefits of Machine Learning (which is somewhat distinct from Artificial Intelligence).

Superior Analysis — A Machine Learning solution is being compared to a human with the best training in the world, e.g., a recognized oncologist, and they’re going head to head at doing analysis on a particular topic in a situation where the attention of the human is not a factor. In this situation the human is at near-maximum potential. They’re awake, alert, and their full attention is focused on the problem for as long as necessary to do the best job they can.

Superior Coverage — A Machine Learning solution is being compared to a non-existent human and/or a crude automated analysis of some sort, simply because there aren’t enough humans to look at the content. In this case, it doesn’t matter how well a human could do compared to the algorithm, because it would require hundreds, thousands, millions, or billions of additional humans to perform that job.

There’s also a third case where algorithms are better than junior humans in the field but not humans with the best training and experience.

Many people in tech are conflating these two use cases when criticizing AI/ML.

In my field of Information Security, for example, the popular argument—just as in many fields—is that humans are far superior to algorithms at doing the work, and that AI is useless because it can’t beat human analysts.

Humans being better than machines at a particular task is irrelevant when there aren’t enough humans to do the work.

If there are, say, 100 lines of data produced by a company, and you have a team of 10 analysts spread across L1, L2, and L3 who are looking at that data over the course of a day, then you’re getting 100% coverage of the content. And compared to an algorithm, the humans are simply going to win. At that volume even the L1 analysts will beat it, and the L2s and L3s definitely will. That’s a case where the machine’s Superior Analysis just isn’t true.

But I would argue that most companies don’t have anywhere near enough analysts to look at the data that their companies are producing. Just taking a stab at the numbers, I’d guess that the top 5% of companies might have a ratio that has them looking at 50-85% of the data they should be, and that’s in a pre-data-lake world where existing IT tools aren’t producing anywhere near the data they could if there were enough eyes to analyze it.

For most companies, however (say the rest of the top 90%), human security analyst ratios probably only allow 5-25% coverage of what they wish they were seeing and evaluating. And for the bottom 10% of companies, I’d say they’re looking at less than 1% of the data they should be, likely because they don’t have any security analysts at all.
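The coverage argument is simple arithmetic: the share of data that gets human eyes is the team’s review capacity divided by the data volume, capped at 100%. A minimal Python sketch, assuming a hypothetical review rate of 1,000 events per analyst per day (a made-up number for illustration, not a measured figure); `coverage` and `analysts_for_full_coverage` are hypothetical helper names.

```python
import math


def coverage(analysts: int, reviews_per_analyst_per_day: int, events_per_day: int) -> float:
    """Fraction of a day's events the analyst team can actually look at (capped at 100%)."""
    if events_per_day <= 0:
        raise ValueError("events_per_day must be positive")
    capacity = analysts * reviews_per_analyst_per_day
    return min(1.0, capacity / events_per_day)


def analysts_for_full_coverage(reviews_per_analyst_per_day: int, events_per_day: int) -> int:
    """Headcount needed so that every event gets human eyes on it."""
    return math.ceil(events_per_day / reviews_per_analyst_per_day)


# Hypothetical throughput: each analyst reviews 1,000 events per day.
print(coverage(10, 1_000, 100))                      # 1.0  (the 100-lines example: full coverage)
print(coverage(10, 1_000, 1_000_000))                # 0.01 (same team, a million events a day)
print(analysts_for_full_coverage(1_000, 1_000_000))  # 1000
```

Holding the team fixed while volume grows is what pushes coverage toward zero, and the headcount needed to restore full coverage grows linearly with the data.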

This visibility problem is exacerbated by a few realities:

Because we’re constantly adding new IT systems and doing more business, the amount of data that needs to be analyzed is growing exponentially, with many small organizations producing terabytes per day and large organizations producing petabytes per day.

We’ve only just started to properly capture all the IT data exhaust from companies into data lakes for analysis, so we’re only seeing a tiny fraction of what’s there to see. Once data lakes become common, the amount of data for each company will hockey-stick, which is a separate problem from creating more data because there is more business and more IT systems. In other words—producing more data from existing tools and business vs. having more tools and doing more business.

Machines operate 24/7 with no deviation in quality over time, because they don’t get tired. If one just worked for 2 weeks straight looking at 700 images a minute, it’ll do just as well on the next 700 as it did on the first.
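To put the 700-images-a-minute example in scale, here is the arithmetic as a short Python sketch. The human rate of 2 images per minute over an 8-hour workday is a hypothetical assumption, chosen only for comparison.

```python
IMAGES_PER_MINUTE = 700       # from the example above
MINUTES_PER_DAY = 24 * 60     # machines don't take shifts off
DAYS = 14                     # "2 weeks straight"

machine_total = IMAGES_PER_MINUTE * MINUTES_PER_DAY * DAYS

# Hypothetical human reviewer: 2 images per minute, 8-hour workday.
HUMAN_IMAGES_PER_DAY = 2 * 8 * 60

# Whole reviewer-days needed to match the machine's two weeks of output.
reviewer_days = machine_total // HUMAN_IMAGES_PER_DAY

print(f"{machine_total:,}")   # 14,112,000
print(f"{reviewer_days:,}")   # 14,700
```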

It’s much easier to train algorithms than humans, and then roll out that change to the entire environment and/or planet where applicable.

Even if you could train enough humans to do this work, the training would be highly inconsistent due to different life experience and constant churn in the workforce.

I’m reminded of the open source “many eyes” problem, where the source code being open doesn’t matter if nobody is looking at it. That’s exactly the type of problem that I’m talking about here with the Superior Coverage advantage.

When you combine these factors, you finally see the full advantage of algorithmic analysis: there’s just no way for humans to provide the value that machines can, not because humans are worse at analysis, but because we can’t scale our knowledge to match the problem.

If you take all the things in the world that need evaluation by human experts—things like everyone’s heart rate, everyone’s IT data, the efficiency of global energy distribution, evaluation of visual sensor data for possible threats, finding bugs in the world’s source code, finding asteroids that might hit Earth, etc.—I don’t think it’s realistic to expect humankind to ever be able to look at even a tiny fraction of 1% of this content.

When you hear someone dismissing Machine Learning approaches to data analysis with the argument that humans are better, consider the question of how much data there is to look at, and what percentage a potential human workforce can realistically evaluate.

And this is assuming the human would be better at it if they did the analysis.

Once the algorithms become better than human specialists (see Chess, Go, Cancer Detection, Radiology Film Analysis, etc.) the Superior Analysis factor becomes an exponent to the Superior Coverage factor—especially when you consider the fact that you can roll that update out via copy and paste as opposed to retraining billions of humans.

Exciting times indeed.


December 12, 2017

Unsupervised Learning: No. 104

This is episode No. 104 of Unsupervised Learning, a weekly show where I curate 3-5 hours of reading in infosec, technology, and humans into a 30-minute summary. The goal is to catch you up on current events, tell you about the best content from the week, and hopefully give you something to think about as well…

This week’s topics: NiceHash hacked, Apple bugs, Stealing Cars via Relay, Crypto Collusion, technology news, human news, discovery, notes, recommendations, and the aphorism of the week…

Read below for this episode’s show notes & newsletter, and get previous editions…

Security news

Someone just stole $70 million from NiceHash, a crypto mining company. Know this: if you have cryptocurrency, you need to take its protection very seriously. And the more visible you are about having it, the higher your risk.

Apple fixed two major bugs recently—one that allowed you to log in as root to Macs without a password, and an undisclosed bug in HomeKit devices.

Relay systems are being used to steal high-end cars. You basically get the key fob to activate (for example, with a device held outside the owner's house), and then a second device near the car rebroadcasts that signal so the car thinks the key is present.
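Relaying works because the fob's cryptographic answer is valid no matter how far it traveled. The toy Python model below, with hypothetical function names and idealized timing, shows a car that only checks an HMAC response accepting a relayed answer, and a car that also bounds the radio round-trip time (the idea behind distance bounding) rejecting it. It is a sketch, not how any real keyless-entry system is built, and it ignores the fob's own processing delay, which in real hardware is the hard part of making such a check work.

```python
import hashlib
import hmac
import secrets
from typing import Optional

SPEED_OF_LIGHT_M_S = 299_792_458.0


def fob_response(key: bytes, nonce: bytes) -> bytes:
    """Hypothetical fob: proves it holds the shared key by MACing the car's challenge."""
    return hmac.new(key, nonce, hashlib.sha256).digest()


def round_trip_s(distance_m: float, relay_delay_s: float = 0.0) -> float:
    """Idealized radio round trip over distance_m, plus any extra relay hardware latency."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_S + relay_delay_s


def car_unlocks(key: bytes, nonce: bytes, response: bytes, rtt_s: float,
                max_range_m: Optional[float] = None) -> bool:
    """Hypothetical car check: valid MAC and, optionally, a round trip short
    enough that the fob must be within max_range_m."""
    if not hmac.compare_digest(response, fob_response(key, nonce)):
        return False
    if max_range_m is not None and rtt_s > round_trip_s(max_range_m):
        return False
    return True


key = secrets.token_bytes(32)     # shared between car and fob at pairing time
nonce = secrets.token_bytes(16)   # fresh challenge from the car
resp = fob_response(key, nonce)   # a relay just forwards this, unmodified

nearby = round_trip_s(1.5)                        # owner standing at the car door
relayed = round_trip_s(60.0, relay_delay_s=1e-6)  # fob inside the house, signal relayed

print(car_unlocks(key, nonce, resp, relayed))                   # True: the MAC alone can't see the relay
print(car_unlocks(key, nonce, resp, relayed, max_range_m=3.0))  # False: round trip too long
print(car_unlocks(key, nonce, resp, nearby, max_range_m=3.0))   # True
```

The relay never breaks the crypto; it only adds distance and delay, which is why a timing bound, not a stronger MAC, is the defense this model points at.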

When small numbers of people control significant amounts of a cryptocurrency, there's significant risk of manipulation.

Technology news

Amazon announced tons of stuff at their re:Invent conference, including EKS, which is basically Amazon's managed Kubernetes service. Cloud9 is a new web-based IDE. Rekognition is an AI service that identifies distinct people in images and video. Translate is a language translation service that uses machine and deep learning at scale and for low cost. GuardDuty is a managed threat detection service that continuously monitors for unauthorized activity in your environment. Comprehend is a service that pulls insights from text. Fargate allows you to launch containers without managing the servers that host them. They also released 6 new products around IoT security, including many focused on edge devices. There were many more announcements, but these are the ones that stood out to me.

Steam has dropped Bitcoin as a payment option because it's too volatile.

Silicon Valley is paying models to show up to tech parties and talk to the men. Sigh.

Human news

Farmers are committing suicide at over twice the rate of veterans.

After 37 years, Voyager 1 fired up its trajectory correction thrusters at the command of the Voyager team on Earth. It took the commands almost 20 hours to reach Voyager 1 because it's around 21 billion kilometers away (actually in interstellar space). I am blown away that this works, yet I can't get my phone to play songs correctly in my car over Bluetooth.
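The 20-hour delay follows from the distance alone: radio signals travel at the speed of light, so the one-way delay is distance divided by c. A quick Python check, using the rounded 21 billion km figure from above (so the result is approximate):

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact, by definition of the metre


def one_way_light_time_hours(distance_km: float) -> float:
    """Time for a radio signal to cover distance_km, in hours."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 3600


print(round(one_way_light_time_hours(21e9), 1))  # 19.5
```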

A Chinese paleontologist found a small dinosaur tail trapped in amber in Myanmar. The tail was extremely well preserved and shows intact feathers, adding to the evidence that many dinosaurs had feathers like modern birds.

Google has released an AI tool that looks at your genome and recommends customized therapies.

CVS agreed to buy Aetna, which I hope will bring an improvement in the availability of decent healthcare. I'm quite happy to see Amazon, Walmart, Walgreens, and CVS compete to have the best and most available healthcare in the country by offering it both online and in multiple places around town. Cheap drugs too. Once again, Amazon is forcing good things to happen.

Ideas

Technical Professions Progress From Magical to Boring. And InfoSec is in the middle of the transition right now.

Responsible Disclosure? How About Responsible Behavior? My essay on how to simplify the disclosure debate by stepping out of the security industry.

Facebook is the opposite of mindfulness. I should write an essay about it, but the sentence by itself pretty much covers it.

I'm going to write another essay about this at some point, but I've sensed a lot of unhealthy groupthink on the net neutrality issue. Ben Thompson made a good argument for why he supports the FCC's decision. Basically, the existing law and net neutrality are not the same thing, so it's possible to be for net neutrality and for the repeal. That's one confusion. The other one is around the harm that can be caused by imprecise and overreaching regulations. Not many people know that the financial crisis in 2008 was largely caused by regulation. Not just removing controls on shady practices, but actually forcing banks to make bad deals to help poor people. That legislation, from Clinton and Bush, started the entire mess. It's another example of good ideas turning into bad legislation, where the negative externality might not manifest for quite some time, and might not be easy to link to the regulation. Short version: the net neutrality issue is not as simple as most think it is.

Measuring Happiness

Discovery

I made a graphic that shows the differences and relationship between Artificial Intelligence and the different types of Machine Learning.

NIST has released a new draft of their Cybersecurity Framework.

What I Learned From Doing 1,000 Code Reviews

Attributes of the best interviewers, from interviewing.io.

In Safari, you can type Shift-⌘-\ to search your open tabs.

How to send email like a CEO.

The 2017 Information Is Beautiful Data Visualization Winners

A campaign cybersecurity playbook (any party).

The Thrive Questionnaire

PacketTotal — Free, high-quality .pcap analysis. Note: You're sending your network traffic to the internet.

DNSLeakTest tests your DNS for leaks (good name)

Ten Year Futures, by Benedict Evans.

A History of Big Data in Security, by Raffael Marty.

Wired's Guide to Digital Security. This one is cool because it has you pick your threat profile.

Notes

If you can, do me a favor and rate the show on iTunes. It's technically for the podcast, but it's the same content since this newsletter is the show notes. Thank you!

Recommendations

Update your Macs, and make sure everyone around you does as well.

If you have Bitcoin, or any other type of cryptocurrency, make sure you have it secured. There are groups of thieves going around breaking into people's digital lives just to steal the stuff, and they're quite good at it.

Aphorism

“If you have a bowl of apples and you eat the best ones, you only have the best ones left.” ~ Shelly Horton


December 9, 2017

Technical Professions Progress from Magical to Boring

There was probably a time when accounting was the most magical thing in the world. The ability to manipulate numbers and derive truths about the state of things.

Constructing a tall building used to be done by what was called a Master Builder, someone who could do every piece of the build themselves: the architecture, the foundation, the support structure, the walls and floors, everything. But then buildings became too advanced, each step in the process had to be broken into sub-steps, and specialists emerged for each of those skills.

I highly recommend The Checklist Manifesto.

Once the complexity became too great, the system of connecting all those different disciplines, at the exact right time, had to be done by a series of complex checklists. And at that point it’s just a matter of following an exact procedure (defined in the checklist) according to a standard.

Kind of boring, I imagine. But not so back when there weren’t any checklists—back then it was something special to divine something into being.

Surgeons are basically L4 tech support for the human body.

The pattern I’m seeing here is that in the early maturity of a profession there are no checklists and there are no standards. And because of this we’re all genuinely surprised if anything is created at all. It’s magic every time.

An OG InfoSec Practitioner at Work

But then, as the profession matures, it becomes more and more about repetition and precision, and the magic gives way to structure. Even more importantly, the goal of the profession is to become more defined, more structured, and—necessarily—more boring.

I find it ironic that I’m talking about checklists becoming important in security’s future, when we’ve spent so long fighting the “checkbox” assessment.

I think we’re getting close to an initial inflection point in the field of information security. It started as magic. Anyone who knew anything about it was—by definition—a wizard. And now it’s increasingly obvious that good security looks a lot like good health, fitness, and hygiene. Like disaster preparedness. And building good software perhaps looks a lot like building a skyscraper with the checklist coordination of dozens of teams.

I think the natural endpoint in all this is that information security will eventually be as exciting as accounting. It’ll be a discipline of nested disciplines, checklists, and easily verifiable states of existence within an organization that can be assessed by other people. Much like a building inspection or a medical exam.

There are key differences between infosec and other fields that will stress the limits of the metaphor.

My key takeaway is that it seems the goal of technical professions should be to become boring. Because boring means mature, and mature means that it can consistently deliver the value it promises. Skyscraper construction does that. Software construction does not.

This also raises a similar point about deep learning: can it truly be dependable and high-quality if you can’t see the variables?

It’s disturbing to me that we basically need to choose between magic and quality. Consistency requires knowing the variables, at least as long as humans are required to perform the steps. The delight produced by magic comes from the mystery, and that’s precisely the piece that we need to give up.

It’s a strange choice to have to make within a profession you love.

