Jeff Atwood's Blog, page 4

February 3, 2016

The Scooter Computer

When we initially deployed our handbuilt colocated servers for Discourse in 2013, I needed a way to provide an isolated VPN channel into the rack for secure remote access and troubleshooting. Rather than dedicate a whole server to this task, I purchased an inexpensive Asus RT-N16 router (a model friendly to open source firmware), flashed it with the popular TomatoUSB open source firmware, removed the antennas, turned off the WiFi, and dropped it off in our colocated rack to act as a dedicated VPN access point.

And that box, which was $100 then and is around $70 now, worked well enough until now. Although the version of OpenSSL in the 2012-era Tomato firmware we used is not vulnerable to Heartbleed, it's still getting out of date in terms of the encryption it supports and allows. And Tomato itself is updated sporadically and chaotically, at best.

Let's face it: this is just a little box that runs a chopped up version of Linux, with a bit of specialized wireless hardware and multiple antennas tacked on … that we're not even using. So when it came time to upgrade, we wondered:

Why not just go with a small box that can run a real, full Linux distro? Wouldn't that be simpler and easier to keep up to date?

After doing some research and asking on Twitter, I discovered there are a ton of amazing little Broadwell "mini-PC" boxes available on AliExpress.

The specs are kind of amazing for the price. I paid ~$350 each for the ones I selected:

i5-5200U Broadwell 2-core / 4-thread CPU at 2.2 GHz to 2.7 GHz

8GB DDR3 × 2 = 16GB RAM

128GB M.2 SSD

Dual gigabit Realtek 8168 ethernet

front 4 USB 3.0 ports / rear 4 USB 2.0 ports

Dual HDMI out

(There are also optical and analog audio connectors on the front, as well as an SD card reader, which I covered with a sticker since we had no need for audio. I also stripped out the WiFi since we didn't need it, but it was included for the price, too.)

Selecting the i5-4258U, 4GB RAM, and 64GB SSD pushes the price down to $270. That's still a solid CPU, only a single generation behind Intel's latest and greatest Skylake, and carrying the midrange i5 moniker; it's no pushover. There are also many, many variants of this box from other AliExpress sellers that have slightly older, cheaper CPUs that are still plenty powerful. You can easily spec a box similar to this one for $200.

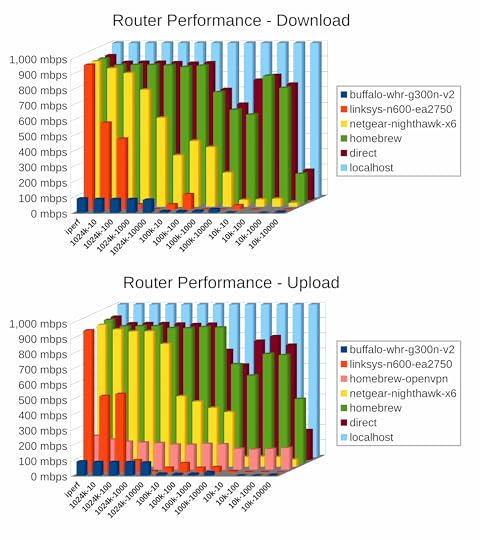

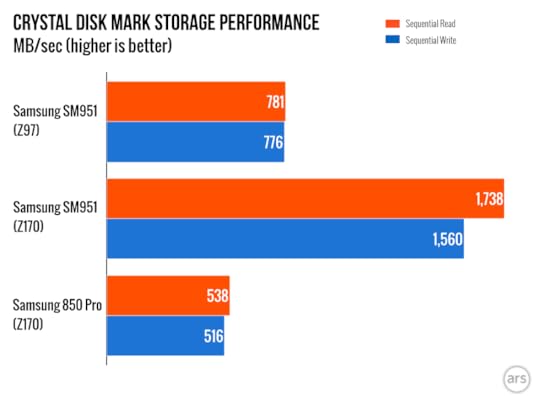

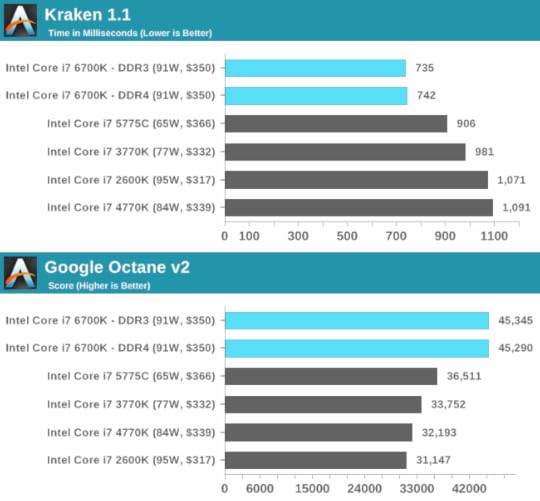

That's not a whole lot more than the $200 you'd pay for a high end router these days, and as Ars Technica notes, the average x86 box is radically faster.

Note that in the above graphs, "homebrew" means an old 1.8 GHz Ivy Bridge dual-core chip, three generations behind current CPUs, that doesn't even merit the i3 or i5 designation and has no hyperthreading. Do bear that in mind as you keep reading.

Meet The Scooter Computer

This box may be small, and only 15 watt TDP, but it is mighty. I spun up a new Digital Ocean droplet and ran a quick benchmark:

```shell
sudo apt-get install sysbench
sysbench --test=cpu --cpu-max-prime=20000 run
```

| | Tie Shuttle 6 | Digital Ocean droplet |
|---|---|---|
| total time | 28.0707 s | 35.9541 s |
| total num events | 10000 | 10000 |
| total time taken | 28.0629 s | 35.9492 s |
| per-request min | 2.77 ms | 3.50 ms |
| per-request avg | 2.81 ms | 3.59 ms |
| per-request max | 3.99 ms | 13.31 ms |
| per-request ~95th percentile | 3.00 ms | 3.79 ms |

Results will of course vary by cloud provider, but rest assured this box is just as fast as and possibly even faster than the average cloud box you could spin up right now. Of course it is "only" 2 cores / 4 threads, but the more cores you need, the slower they tend to go because of the overall TDP limits of the core package.
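To put the benchmark in context: as I understand it, sysbench's CPU test checks numbers up to `--cpu-max-prime` for primality by trial division and repeats that work once per event, so it is a pure integer-arithmetic load with almost no memory traffic. On the numbers above, the droplet took 35.95 s against the Shuttle's 28.07 s, a ratio of about 1.28. Below is a rough Python re-creation of that kind of workload, a sketch rather than sysbench's actual C code; `count_primes` and `time_events` are hypothetical names, and the absolute timings will be far slower than sysbench's.

```python
import math
import time


def count_primes(max_prime: int) -> int:
    """Count the primes in [2, max_prime] by naive trial division.

    A rough stand-in for the work sysbench's CPU test repeats per event;
    not sysbench's actual source.
    """
    count = 0
    for candidate in range(2, max_prime + 1):
        limit = math.isqrt(candidate)
        # Try every divisor from 2 up to the integer square root.
        if all(candidate % divisor != 0 for divisor in range(2, limit + 1)):
            count += 1
    return count


def time_events(events: int, max_prime: int) -> float:
    """Repeat the prime count `events` times and return elapsed wall-clock seconds."""
    start = time.perf_counter()
    for _ in range(events):
        count_primes(max_prime)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Only a few events: interpreted Python is much slower than sysbench's C loop.
    events = 3
    elapsed = time_events(events, max_prime=20000)
    print(f"{events} events: {elapsed:.2f} s, {elapsed / events * 1000:.1f} ms per event")
```

Comparing machines only makes sense with the same tool and settings on each, which is why the original comparison ran identical sysbench commands on both boxes.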

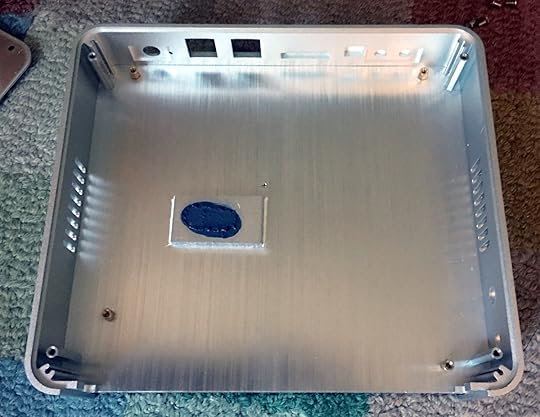

One thing that's not immediately obvious in photos is that this thing is indeed small but hefty, like holding a solid chunk of aluminum in your hand. That's because the box is passively cooled — the whole case is the heatsink, as the CPU on the bottom of the motherboard mates with the finned top of the case.

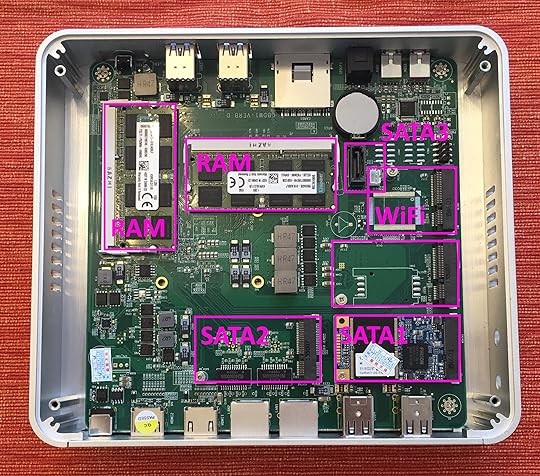

Opening this box you realize just how simple things are inside it; it's barely more than a highly integrated motherboard strapped to an aluminum block. This isn't a Steve Jobs truck, a Mac Mini car, or even a motorcycle. This is a scooter.

Scooters are very primitive machines; it is both their greatest strength and their greatest weakness. A scooter is arguably the simplest personal wheeled vehicle there is. For short trips, scooters tend to win over, say, bicycles because there's less setup and teardown necessary: you don't have to lock up a scooter, nor do you have to wear a helmet. Just hop on and go! You get almost all the benefits of gravity and wheeled efficiency with a minimum of fuss and maintenance. And yes, it's fun, too!

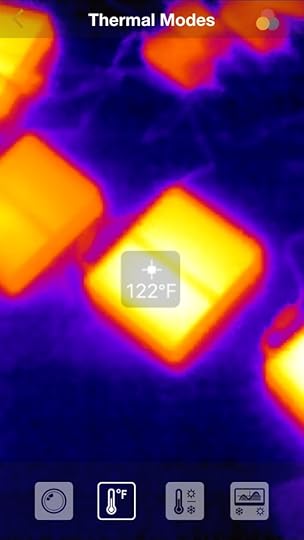

Passively cooled computers are paragons of simplicity and reliability in consumer electronics, but passively cooling a "real" x86 PC is the holy grail. To get serious performance you usually need to feed the CPU at least 10 to 20 watts, and dissipating that kind of energy with zero fans and ambient airflow alone is not trivial. Let's see how our scooter does running mprime, the Mersenne prime search, overnight; it's about the heaviest CPU load there is.

You can place your hand on the top of the box during this, but it's uncomfortable. And the whole box radiates heat, not just the top. Overall it was completely stable for me during overnight mprime torture testing with the 15w TDP CPU I chose, and I am comfortable with these boxes sitting in our rack in the datacenter, even under extended full load. However, I would be very careful putting a 28w TDP CPU in this box unless you are absolutely sure it won't be at full load very often. Passive cooling is hard.

Power consumption, as measured by my Kill-a-Watt, ranged from 7 watts at the Ubuntu Server 14.04 text login screen, to 8-10 watts at an idle Ubuntu 15.10 GUI login screen (the default OS it arrived with), to 14-18 watts in memory testing, to 26 watts in mprime.

I should also mention that even under extreme mprime load, both cores stayed at 2.5 GHz indefinitely, which is unusual in my experience. To reach 2.7 GHz you need a single-threaded load. Considering the base clock of the i5-5200U is 2.2 GHz, that's quite good! Many 4-, 6-, and 8-core CPUs drop all the way down to their base clock pretty fast once they have significant load, which makes the "turbo" moniker a bit of a lie.

(By the way, don't bother using burnP6; it generates far too little heat compared to mprime, which is an absolute monster. If your CPU can survive an overnight run of mprime, I can assure you it's ready for just about anything the real world can throw at it, ever.)

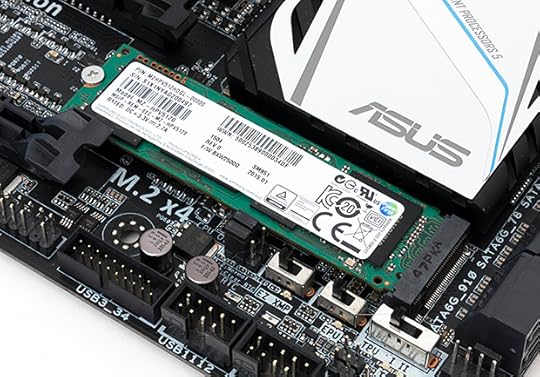

Disk

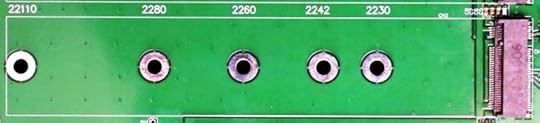

The machine has M.2 slots for two drives, as well as a SATA port and power cable (not pictured, but was included in the box) if you want to mate a 2.5" drive with the drive mounting holes on the bottom of the case. So if you prefer a mirrored two drive RAID array here for reliability, or a giant honking 2TB 2.5" HDD slapped in there for media storage, all of that is possible!

Be careful, as the internal M.2 slots are 2242, meaning 42mm length. There seem to be mostly (only?) lower cost SSD drives available in this size for whatever reason.
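M.2 size codes pack width and length in millimetres into one number: 2242 is 22 mm wide and 42 mm long, while 2280 is 22 mm by 80 mm. A small sketch of decoding them and doing a rough length check; `parse_m2_size` and `fits_slot` are hypothetical helpers, and the check deliberately ignores screw positions, keying, and whether the slot supports SATA or PCIe drives.

```python
from typing import Tuple


def parse_m2_size(code: str) -> Tuple[int, int]:
    """Split an M.2 size code such as "2242" or "22110" into (width_mm, length_mm)."""
    if not code.isdigit() or len(code) not in (4, 5):
        raise ValueError(f"unexpected M.2 size code: {code!r}")
    # The first two digits are the width; the rest is the length, both in mm.
    return int(code[:2]), int(code[2:])


def fits_slot(drive: str, slot: str) -> bool:
    """Rough check: the drive is no wider and no longer than the slot.

    Ignores mounting-screw positions, keying, and SATA vs PCIe support.
    """
    drive_w, drive_l = parse_m2_size(drive)
    slot_w, slot_l = parse_m2_size(slot)
    return drive_w <= slot_w and drive_l <= slot_l


for drive in ("2242", "2280"):
    print(drive, parse_m2_size(drive), "fits a 2242 slot:", fits_slot(drive, "2242"))
```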

Don't worry, though, the bundled 128GB Phison S9 M.2 SSD has decent performance, roughly equal to a good SSD from a few years ago:

```shell
dd bs=1M count=512 if=/dev/zero of=test conv=fdatasync
hdparm -Tt /dev/sda
```

```
536870912 bytes (537 MB) copied, 1.52775 s, 351 MB/s
Timing cached reads: 11434 MB in 2.00 seconds = 5720.61 MB/sec
Timing buffered disk reads: 760 MB in 3.00 seconds = 253.09 MB/sec
```

That's respectable SSD performance and won't hold you back in most use cases, but it's not a barn-burning disk subsystem, either. I'm not entirely sure retrofitting, say, the state-of-the-art Samsung 950 Pro M.2 2280 drive is possible, since that 80 mm drive is nearly twice the length of these 42 mm slots.

Of course the Samsung 850 Pro would fit fine as a traditional 2.5" SATA drive mounted to the case cover, and would perform like this:

```
536870912 bytes (537 MB) copied, 1.20895 s, 444 MB/s
Timing cached reads: 38608 MB in 2.00 seconds = 19330.61 MB/sec
Timing buffered disk reads: 1584 MB in 3.00 seconds = 527.92 MB/sec
```
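The dd figures are easy to sanity-check by hand. In GNU dd, `bs=1M` means one mebibyte (1,048,576 bytes), so `count=512` writes 536,870,912 bytes, while the rate it prints uses decimal megabytes. `conv=fdatasync` makes dd flush the data to disk before it reports, so the timing covers the actual write rather than just the page cache. A small sketch of that arithmetic (`dd_rate_mb_per_s` is a hypothetical helper):

```python
MIB = 1024 * 1024


def dd_rate_mb_per_s(bytes_copied: int, seconds: float) -> float:
    """Decimal megabytes per second (1 MB = 1,000,000 bytes), the unit GNU dd prints."""
    return bytes_copied / seconds / 1_000_000


# bs=1M is one mebibyte in GNU dd, so count=512 writes 512 MiB.
total_bytes = 512 * MIB  # 536,870,912 bytes, matching dd's output

timings = {
    "bundled Phison S9 M.2": 1.52775,
    "Samsung 850 Pro (2.5-inch SATA)": 1.20895,
}
for drive, seconds in timings.items():
    print(f"{drive}: {dd_rate_mb_per_s(total_bytes, seconds):.0f} MB/s")
```

This reproduces the 351 MB/s and 444 MB/s that dd reported for the two drives.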

RAM

Intel limits these Broadwell U class CPUs to 16GB RAM total, so maxing the box out is only going to set you back around $70. Still, that's a significant percentage of the ~$350 total cost, and you may not need that much RAM for what you have in mind.

However, do be careful that you get dual-channel RAM for lower RAM configurations; you don't want a single 4GB DIMM, you want two 2GB DIMMs. They ship from the vendor with a single DIMM, so beware. It may not matter depending on the task, as noted by AnandTech, but our boxes will be used for OpenSSL, and memory is cheap, so why not?
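The reason two DIMMs beat one is that each memory channel is a 64-bit (8-byte) bus, so populating both channels doubles theoretical peak bandwidth. A sketch of that arithmetic, assuming DDR3-1600 modules (the text doesn't state the module speed, so treat that number as an assumption); `peak_bandwidth_gb_s` is a hypothetical helper, and real-world throughput will be lower than these peaks.

```python
def peak_bandwidth_gb_s(mt_per_s: int, channels: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak in GB/s: transfers per second x 8-byte channel width x channels."""
    return mt_per_s * 1_000_000 * bytes_per_transfer * channels / 1_000_000_000


SPEED = 1600  # assumed DDR3-1600; not stated in the text
for label, channels in (("one 4GB DIMM", 1), ("two 2GB DIMMs", 2)):
    print(f"{label}: {peak_bandwidth_gb_s(SPEED, channels):.1f} GB/s peak")
```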

The Versatile Scooter

When I began looking at this, I was shocked to discover just how low-end the x86 CPUs are in a lot of "dedicated" devices, such as the official pfSense hardware:

Sure, 2.4 GHz and 8 cores on that C2758 sounds reasonable – until you realize those are old Intel Bay Trail Atom cores. Even the current Cherry Trail Atom cores aren't so hot. Furthermore, those are probably the maximum "turbo" frequencies being quoted, which are unlikely to be sustained under any kind of real multi-core load. Also, did I mention this is being sold as a $1,400 device? Except for the lack of more than 2 dedicated gigabit ethernet ports, I'd put our scooter computer up against that C2758 any day of the week. And you know what? It'd win.

I think this logic applies to a lot of dedicated hardware these days — routers, switches, firewalls, and so on. You're often better off building up a modern high power, low TDP x86 box and slapping a regular Linux distro on there.

You can even kinda-sorta fit six of them in a 1U rack space.

(Well, except for the power bricks and cables. Vertical mounting on a 1U shelf works out a bit better, and each conveniently came with a stand for vertical operation.)

Now that I've worked with these boxes, I've become rather enamored of the Scooter Computer concept. Wherever we were thinking that we had to run either:

A virtual machine on big iron for some small but important utility function in our rack.

Dedicated, purpose built hardware for networking, firewall, or switching with a custom OS.

… we can now take advantage of cheap, reliable, flexible, totally solid state commodity x86 hardware that's spread across many machines and running standard Linux distributions, like all the rest of our 1U servers.


January 2, 2016

Zopfli Optimization: Literally Free Bandwidth

In 2007 I wrote about using PNGout to produce amazingly small PNG images. I still refer to this topic frequently, as more than eight years later, the average PNG I encounter on the Internet is very unlikely to be optimized.

For example, consider this recent Perry Bible Fellowship cartoon.

Saved directly from the PBF website, this comic is an 800 × 1412, 32-bit color PNG image of 671,012 bytes. Let's save it in a few different formats to get an idea of how much space this image could take up:

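| Format | Settings | Size (bytes) |
|---|---|---|
| BMP | 24-bit | 3,388,854 |
| BMP | 8-bit | 1,130,678 |
| GIF | 8-bit, no dithering | 147,290 |
| GIF | 8-bit, max dithering | 283,162 |
| PNG | 32-bit | 671,012 |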

PNG is a win because like GIF, it has built-in compression, but unlike GIF, you aren't limited to cruddy 8-bit, 256 color images. Now what happens when we apply PNGout to this image?

| Method | Size (bytes) | Savings |
|---|---|---|
| Default PNG | 671,012 | |
| PNGout | 623,859 | 7% smaller |

Take any random PNG of unknown provenance, apply PNGout, and you're likely to see around a 10% file size savings, possibly a lot more. Remember, this is lossless compression. The output is identical. It's a smaller file to send over the wire, and the smaller the file, the faster the decompression. This is free bandwidth, people! It doesn't get much better than this!

Except when it does.

In 2013 Google introduced a new, fully backwards compatible method of compression they call Zopfli.

The output generated by Zopfli is typically 3–8% smaller compared to zlib at maximum compression, and we believe that Zopfli represents the state of the art in Deflate-compatible compression. Zopfli is written in C for portability. It is a compression-only library; existing software can decompress the data. Zopfli is bit-stream compatible with compression used in gzip, Zip, PNG, HTTP requests, and others.
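The key property in that description is that an encoder can search as hard as it likes while the decoder stays exactly the same. Zopfli itself isn't part of Python's standard library, so this sketch uses the stdlib `zlib` and `gzip` modules at different effort levels as a stand-in to show the principle: every setting produces a stream the one standard inflater reads back losslessly. The sample payload is made up for illustration.

```python
import gzip
import zlib

payload = b"Deflate output from any encoder can be read by any inflater. " * 500

# Encoders can spend very different effort, but they all emit the same format.
streams = {level: zlib.compress(payload, level) for level in (1, 6, 9)}
for level, blob in streams.items():
    assert zlib.decompress(blob) == payload  # one decoder for every encoder setting
    print(f"zlib level {level}: {len(blob)} bytes")

# gzip files wrap the same kind of Deflate data with a different header and trailer.
gz_blob = gzip.compress(payload, compresslevel=9)
assert gzip.decompress(gz_blob) == payload
print(f"gzip, compresslevel=9: {len(gz_blob)} bytes")
```

Zopfli slots into that picture as a much slower, more thorough encoder; the decompression side doesn't change at all.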

I apologize for being super late to this party, but let's test this bold claim. What happens to our PBF comic?

| Method | Size (bytes) | Savings |
|---|---|---|
| Default PNG | 671,012 | |
| PNGout | 623,859 | 7% smaller |
| ZopfliPNG | 585,117 | 13% smaller |

Looking good. But that's just one image. We're big fans of Emoji at Discourse, so let's try it on the original first release of the Emoji One emoji set: a complete set of 842 64×64 PNG files in 32-bit color.

| Method | Size (bytes) | Savings |
|---|---|---|
| Default PNG | 2,328,243 | |
| PNGout | 1,969,973 | 15% smaller |
| ZopfliPNG | 1,698,322 | 27% smaller |
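The "percent smaller" column is just the bytes saved divided by the original size. A quick check of the PBF and Emoji One figures above (`percent_smaller` is a hypothetical helper; the byte counts are the ones measured in the text):

```python
def percent_smaller(original: int, optimized: int) -> float:
    """Savings as a percentage of the original size."""
    return 100 * (original - optimized) / original


results = {
    "PBF comic": (671_012, {"PNGout": 623_859, "ZopfliPNG": 585_117}),
    "Emoji One set": (2_328_243, {"PNGout": 1_969_973, "ZopfliPNG": 1_698_322}),
}
for name, (original, optimized) in results.items():
    for tool, size in optimized.items():
        print(f"{name}, {tool}: {percent_smaller(original, size):.0f}% smaller")
```

The rounded results match the 7%, 13%, 15%, and 27% reported above.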

Wow. Sign me up for some of that.

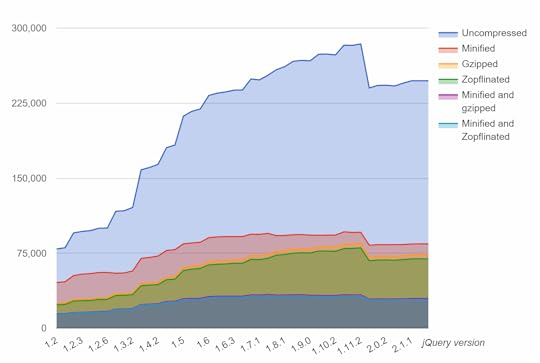

In my testing, Zopfli reliably produces 3 to 8 percent smaller PNG images than even the mighty PNGout, which is an incredible feat. Furthermore, any standard gzip compressed resource can benefit from Zopfli's improved deflate, such as jQuery:

Or the standard compression corpus tests:

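| Corpus | Size | gzip -9 | kzip | Zopfli |
|---|---|---|---|---|
| Alexa top 10k | 693 MB | 128 MB | 125 MB | 124 MB |
| Calgary | 3.1 MB | 1017 KB | 979 KB | 975 KB |
| Canterbury | 2.8 MB | 731 KB | 674 KB | 670 KB |
| enwik8 | 100 MB | 36 MB | 35 MB | 35 MB |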

(Oddly enough, I had not heard of kzip – turns out that's our old friend Ken Silverman popping up again, probably using the same compression bag of tricks from his PNGout utility.)

But there is a catch, because there's always a catch – it's also 80 times slower. No, that's not a typo. Yes, you read that right.

| Compressor | Time |
|---|---|
| gzip -9 | 5.6 s |
| 7zip mm=Deflate mx=9 | 128 s |
| kzip | 336 s |
| Zopfli | 454 s |

There's a little caveat here in that gzip compression is faster than it looks in the above comparison, because level 9 is a bit slow for what it does:

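| Level | Time | Size (% of original) |
|---|---|---|
| gzip -1 | 11.5 s | 40.6% |
| gzip -2 | 12.0 s | 39.9% |
| gzip -3 | 13.7 s | 39.3% |
| gzip -4 | 15.1 s | 38.2% |
| gzip -5 | 18.4 s | 37.5% |
| gzip -6 | 24.5 s | 37.2% |
| gzip -7 | 29.4 s | 37.1% |
| gzip -8 | 45.5 s | 37.1% |
| gzip -9 | 66.9 s | 37.0% |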

It's up to you to decide if that whopping 0.1% compression ratio difference between gzip -7 and gzip -9 is worth more than doubling the CPU time. In related news, this is why pretty much every compression tool's so-called "Ultra" compression level or mode is generally a bad idea. You fall off an algorithmic cliff pretty fast, so stick with the middle or the optimal part of the curve, which tends to be the default compression level. They do pick those defaults for a reason.
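The shape of that curve is easy to measure on your own data. Here is a sketch that sweeps Python's `zlib` (the same Deflate algorithm gzip uses, though not the gzip binary itself) across levels 1 to 9 and records time and output size; the synthetic word-salad corpus is an assumption, so substitute real files to get numbers that mean anything for you.

```python
import random
import time
import zlib


def level_sweep(data: bytes):
    """Compress `data` at zlib levels 1-9; return (level, seconds, size as % of input)."""
    rows = []
    for level in range(1, 10):
        start = time.perf_counter()
        blob = zlib.compress(data, level)
        elapsed = time.perf_counter() - start
        assert zlib.decompress(blob) == data  # lossless at every level
        rows.append((level, elapsed, 100 * len(blob) / len(data)))
    return rows


# Synthetic, mildly repetitive text; substitute your own files for real numbers.
rng = random.Random(42)
words = ["scooter", "deflate", "avatar", "emoji", "router", "png", "gzip", "zopfli"]
sample = " ".join(rng.choice(words) for _ in range(200_000)).encode()

for level, seconds, pct in level_sweep(sample):
    print(f"level {level}: {seconds * 1000:7.1f} ms, {pct:5.1f}% of original")
```

Timings vary from run to run and machine to machine, so look at the trend rather than any single number.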

PNGout was not exactly fast to begin with, so imagining something that's 80 times slower (at best!) to compress an image or a file is definite cause for concern. You may not notice on small images, but try running either on a larger PNG and it's basically time to go get a sandwich. Or if you have a multi-core CPU, 4 to 16 sandwiches. This is why applying Zopfli to user-uploaded images might not be the greatest idea, because the first server to try Zopfli-ing a 10k × 10k PNG image is in for a hell of a surprise.

However, remember that decompression is still the same speed, and totally safe. This means you probably only want to use Zopfli on pre-compiled resources, which are designed to be compressed once and downloaded millions of times – rather than a bunch of PNG images your users uploaded which may only be viewed a few hundred or thousand times at best, regardless of how optimized the images happen to be.
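One way to act on "compress once, download millions of times" is a build step that writes a precompressed `.gz` file next to each static asset, so the web server never compresses on the fly; nginx, for example, can serve such files with `gzip_static on`. This is a sketch using the stdlib `gzip` module as the encoder: a Zopfli encoder could be substituted because its output is an ordinary gzip stream, but that swap isn't shown. The function name and the suffix list are hypothetical choices for illustration.

```python
import gzip
from pathlib import Path
from typing import List

# Text-like assets that compress well; already-compressed formats (PNG, JPG) are skipped.
COMPRESSIBLE_SUFFIXES = {".css", ".js", ".svg", ".html", ".json"}


def precompress_assets(root: Path, level: int = 9) -> List[Path]:
    """Write a .gz copy next to every compressible file under `root`.

    Meant to run once at build time, where a slow, thorough encoder is affordable.
    """
    written = []
    # sorted() snapshots the file list before any .gz files are created.
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in COMPRESSIBLE_SUFFIXES:
            target = path.with_name(path.name + ".gz")
            target.write_bytes(gzip.compress(path.read_bytes(), compresslevel=level))
            written.append(target)
    return written
```

Running it over a build output directory leaves `app.js` alongside `app.js.gz`, and so on; user-uploaded images never pass through this path.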

For example, at Discourse we have a default avatar renderer which produces nice looking PNG avatars for users based on the first letter of their username, plus a color scheme selected via the hash of their username. Oh yes, and the very nice Roboto open source font from Google.

We spent a lot of time optimizing the output avatar images, because these avatars can be served millions of times, and pre-rendering the whole lot of them, given the constraints of …

10 numbers

26 letters

~250 color schemes

~5 sizes

… isn't unreasonable at around 45,000 unique files. We also have a centralized https CDN we set up to serve avatars (if desired) across all Discourse instances, to further reduce load and increase cache hits.
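The 45,000 figure is just the product of the constraints: 36 characters × 250 color schemes × 5 sizes. Here's a sketch of enumerating that space plus a stable username-to-color mapping. The SHA-256 choice, the function names, and the key layout are all hypothetical, not Discourse's actual code; the one deliberate design point is using `hashlib` rather than Python's built-in `hash()`, which is randomized per process for strings and would hand users a different color after every restart.

```python
import hashlib
import string
from itertools import product

CHARACTERS = string.digits + string.ascii_lowercase  # 10 numbers + 26 letters
COLOR_SCHEMES = 250  # "~250" per the text
SIZES = 5            # "~5"; the actual pixel sizes are not listed


def color_scheme_for(username: str) -> int:
    """Hypothetical: pick a color scheme index from a stable hash of the username."""
    digest = hashlib.sha256(username.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % COLOR_SCHEMES


def avatar_key(username: str, size_index: int):
    """Hypothetical cache key: (first character, color scheme, size index)."""
    return (username[0].lower(), color_scheme_for(username), size_index)


all_keys = list(product(CHARACTERS, range(COLOR_SCHEMES), range(SIZES)))
print(len(all_keys))  # 36 * 250 * 5 = 45000 pre-renderable files
print(avatar_key("codinghorror", 0))
```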

Because these images stick to shades of one color, I reduced the color palette to 8-bit to save space, and of course we run PNGout on the resulting files. They're about as tiny as you can get.

When I ran Zopfli on the above avatars, I was super excited to see my expected 3 to 8 percent free file size reduction. After the console commands ran, I saw that it had saved … 1 byte, 5 bytes, and 2 bytes, respectively. Cue sad trombone.

(Yes, it is technically possible to produce strange "lossy" PNG images, but I think that's counter to the spirit of PNG which is designed for lossless images. If you want lossy images, go with JPG or another lossy format.)

The great thing about Zopfli is that, assuming you are OK with the extreme up front CPU demands, it is a "set it and forget it" optimization step that can apply anywhere and will never hurt you. Well, other than possibly burning a lot of spare CPU cycles.

If you work on a project that serves compressed assets, take a close look at Zopfli. It's not a silver bullet – as with all advice, run the tests on your files and see – but it's about as close as it gets to literally free bandwidth in our line of work.


December 4, 2015

The Hugging Will Continue Until Morale Improves

I saw in today's news that Apple open sourced their Swift language. One of the most influential companies in the world explicitly adopting an open source model – that's great! I'm a believer. One of the big reasons we founded Discourse was to build an open source solution that anyone, anywhere could use and safely build upon.

It's not that Unix won -- just that closed source lost. Big time.

— Jeff Atwood (@codinghorror) July 1, 2015

People were also encouraged that Apple was so refreshingly open about the whole process and about involving the larger community in it. They even hired from the community, which is something I always urge companies to do.

Also, not many people were, shall we say … fans … of Objective C as a language. There was a lot of community interest in having another viable modern language to write iOS apps in, and to Apple's credit, they produced Swift, and even promised to open source it by the end of the year. And they delivered, in a deliberate, thoughtful way. (Did I mention that they use CommonMark? That's kind of awesome, too.)

One of my heroes, Miguel de Icaza, happens to have lots of life experience in open sourcing things that were not exactly open source to start with. He applauded the move, and even made a small change to his Mono project in tribute:

When Swift was open sourced today, I saw they had a Code of Conduct. We had to follow suit, Mono has adopted it: https://t.co/hVO3KL1Dn5

— Miguel de Icaza (@migueldeicaza) December 4, 2015

Which I also thought was kinda cool.

It surprises me that anyone could ever object to the mere presence of a code of conduct. But some people do.

A weak Code of Conduct is a placebo label saying a conference is safe, without actually ensuring it’s safe.

Absence of a Code of Conduct does not mean that the organizers will provide an unsafe conference.

Creating safety is not the same as creating a feeling of safety.

Things organizers can do to make events safer: Restructure parties to reduce unsafe intoxication-induced behavior; work with speakers in advance to minimize potentially offensive material; and provide very attentive, mindful customer service consistently through the attendee experience.

Creating a safe conference is more expensive than just publishing a Code of Conduct to the event, but has a better chance of making the event safe.

Safe conferences are the outcome of a deliberate design effort.

I have to say, I don't understand this at all. Even if you do believe these things, why would you say them out loud? What possible constructive outcome could result from you saying them? It's a textbook case of honesty not always being the best policy. If this is all you've got, just say nothing, or wave people off with platitudes, like politicians do. And if you're Jared Spool, notable and famous within your field, it's even worse – what does this say to everyone else working in your field?

Mr. Spool's central premise is this:

Creating safety is not the same as creating a feeling of safety.

Which, actually … isn't true, and runs counter to everything I know about empathy. If you've ever watched It's Not About the Nail, you'll understand that a feeling of safety is, in fact, what many people are looking for. It's not the whole story by any means, but it's a very important starting point. An anchor.

People understand you cannot possibly protect them from every single possible negative outcome at a conference. That's absurd. But they also want to hear you stand up for them, and say out loud that, yes, these are the things we believe in. This is what we know to be true. Here is how we will look out for each other.

I also had a direct flashback to Deborah Tannen's groundbreaking You Just Don't Understand, in which you learn that men are all about fixing the problem, so much so that they rush headlong into any remotely plausible solution, without stopping along the way to actually listen and appreciate the depth of the problem, which maybe … can't really even be fixed?

If women are often frustrated because men do not respond to their troubles by offering matching troubles, men are often frustrated because women do … he feels she is trying to take something away from him by denying the uniqueness of his experience … if women resent men's tendency to offer solutions to problems, men complain about women's refusal to take action to solve the problems they complain about.

Since many men see themselves as problem solvers, a complaint or a trouble is a challenge … Trying to solve a problem or fix a trouble focuses on the message level. But for most women who habitually report problems at work or in friendships, the message is not the main point … trouble talk is intended to reinforce rapport by sending the metamessage "We're the same; you're not alone."

Women are frustrated when they not only don’t get this reinforcement but, quite the opposite, feel distanced by the advice, which seems to send the metamessage "We’re not the same. You have the problems; I have the solutions."

Having children really underscored this point for me. The quickest way to turn a child's frustration into a screaming, explosive tantrum is to try to fix their problem for them. This is such a hard thing for engineers to wrap their heads around, particularly male engineers, because we are all about fixing the problems. That's what we do, right? That's why we exist? We fix problems?

I once wrote this in reply to an Imgur discussion topic about navigating an "emotionally charged situation":

Oh, you want a master class in dealing with emotionally charged situations? Well, why didn't you just say so?

Have kids. Within a few years you will learn to be an expert in dealing with this kind of stuff, because what nobody tells you about having kids is that for the first ~5 years, they are constantly. freaking. the. f**k. out.

46 Reasons My Three Year Old Might Be Freaking Out

If this seems weird to you, or like some kind of made up exaggerated hilarious absurd brand of humor, oh trust me. It's not. Real talk. This is actually how it is.

In their defense, it's not their fault: they've never felt fear, anger, hunger, jealousy, love, or any of the dozen other incredibly complex emotions you and I deal with on a daily basis. So they learn. But along the way, there will be many many many manymanymanymany freakouts. And guess who's there to help them navigate said freakouts?

You are.

What works is surprisingly simple:

Be there.

Listen.

Empathize, hug, and echo back to them. Don't try to solve their problems! DO NOT DO IT! Paradoxically, this only makes it way worse if you do. Let them work through the problem on their own. They always will – and knowing someone trusts you enough to figure out your own problems is a major psychological boost.

You gotta lick your rats, man.

(protip: this works identically on adults and kids. Turns out most so-called adults aren't fully grown up. Who knew?)

I guess my point is that rats aren't so different from people. We all want the same thing. Comfort from someone who can tell us that the world is safe, the world is not out to get you, that bad things can (and might) happen to you but you'll still be OK because we will help you. We're all in this thing together, you're a human being much like myself and we love you.

That's why a visible, public code of conduct is a good idea, not only at an in-person conference, but also on a software project like Swift, or Mono. But programmers being programmers – because they spend all day every day mired in the crazy world of infinitely recursive rules from their OS, from their programming language, from their APIs, from their tools – are rules lawyers par excellence. Nobody on planet Earth is better at arguing to the death over a set of completely arbitrary, made up rules than the average programmer.

I knew in my heart of hearts that someone – and by someone I mean a programmer – would inevitably complain about the fact that Mono had added a code of conduct, another "unnecessary" ruleset. So I made a programmer joke.

@migueldeicaza I find these rules offensive and will be fining a complaint

— Jeff Atwood (@codinghorror) December 4, 2015

This is the second time in as many days that I made what I thought was an obvious joke on Twitter that was interpreted seriously.

When someone starts at Discourse, I have the talk with them. "You remember your family? Forget them. Look at me. *We* are your family now."

— Jeff Atwood (@codinghorror) December 2, 2015

OK, maybe sometimes my Twitter jokes aren't very good. Well, you know, that's just, like … your opinion, man. I should probably switch from Twitter to Myspace or Ello or Google Plus or Snapchat or something.

But it bothered me that people, any people, would think I actually asked new hires to put the company above their family.* Or that I didn't believe in a code of conduct. I guess some of that comes from having ~200k followers; once your audience gets big enough, Poe's Law becomes inevitable?

Anyway, I wanted to say I'm sorry. And I'm particularly sorry that eevee, who wrote that awesome PHP is a Fractal of Bad Design article that I once riffed on, thought I was serious, or even worse, that my joke was in bad taste. Even though the negative article about Discourse eevee wrote did kinda hurt my feelings.

@samsaffron @JakubJirutka programmers should not have feelings that is a liability

— Jeff Atwood (@codinghorror) October 2, 2015

I know we have our differences, but if we as programmers can't come together through our collective shared horror over PHP, the Nickelback of programming languages, then clearly I have failed.

To show that I absolutely do believe in the value of a code of conduct, even as public statements of intent that we may not completely live up to, even if we've never had any incidents or problems that would require formal statements – I'm also adding a code of conduct as defined by contributor-covenant.org to the Discourse project. We're all in this open source thing together, you're a human being very much like us, and we vow to treat you with the same respect we'd want you to treat us. This should not be controversial. It should be common. And saying so matters.

If you maintain an open source project, I strongly urge you to consider formally adopting a code of conduct, too.

@codinghorror hugs!

— Miguel de Icaza (@migueldeicaza) December 4, 2015

The hugging will continue until morale improves.

* That's only required of co-founders


November 29, 2015

The 2016 HTPC Build

I've loved many computers in my life, but the HTPC has always had a special place in my heart. It's the only always-on workhorse computer in our house, it is utterly silent, totally reliable, sips power, and it's at the center of our home entertainment, networking, storage, and gaming. This handy box does it all, 24/7.

I love this little machine to death; it's always been there for me and my family. The steady march of improvements in my HTPC build over the years lets me look back and see how far the old beige box PC has come in the decade I've been blogging:

| Year | Cost | Specs | Idle power |
|---|---|---|---|
| 2005 | ~$1000 | 512 MB RAM, single core CPU | 80 watts |
| 2008 | ~$520 | 2 GB RAM, dual core CPU | 45 watts |
| 2011 | ~$420 | 4 GB RAM, dual core CPU + GPU | 22 watts |
| 2013 | ~$300 | 8 GB RAM, dual core CPU + GPU×2 | 15 watts |
| 2016 | ~$320 | 8 GB RAM, dual core CPU + GPU×4 | 10 watts |
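For an always-on box, idle power is the number that matters most. A small sketch that computes the generation-over-generation drop in idle watts from the table, plus yearly energy at idle; it assumes the machine idles 24/7, which understates real use since playback and gaming draw more.

```python
IDLE_WATTS = {2005: 80, 2008: 45, 2011: 22, 2013: 15, 2016: 10}
HOURS_PER_YEAR = 24 * 365


def percent_reduction(before: float, after: float) -> float:
    """Drop from `before` to `after`, as a percentage of `before`."""
    return 100 * (before - after) / before


years = sorted(IDLE_WATTS)
for prev, curr in zip(years, years[1:]):
    drop = percent_reduction(IDLE_WATTS[prev], IDLE_WATTS[curr])
    print(f"{prev} -> {curr}: {drop:.0f}% less idle power")

# Assumes the box idles around the clock; real usage adds load on top.
for year in (years[0], years[-1]):
    kwh = IDLE_WATTS[year] * HOURS_PER_YEAR / 1000
    print(f"{year} build idling 24/7: {kwh:.1f} kWh per year")
```

The 2013 to 2016 step works out to one third less idle power, and over the whole decade idle draw fell from roughly 700 kWh a year to under 90.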

As expected, the per-thread performance increase from 2013's Haswell CPU to 2016's Skylake CPU is modest – 20 percent at best, and that might be rounding up. About all you can do is slap more cores in there, to very limited benefit in most applications. The 6100T I chose is dual-core plus hyperthreading, which I consider the sweet spot, but there are some other Skylake 6000 series variants at the same 35w power envelope which offer true quad-core, or quad-core plus hyperthreading – and, inevitably, a slightly lower base clock rate. So it goes.

The real story is how idle power consumption dropped another 33 percent, from 15 watts to 10 watts. Here's what I measured with my trusty Kill-a-Watt:

10w idle with display off

11w idle with display on

13w active standard Netflix (720p?) movie playback

14w multiple torrents, display off

15w 1080p video playback in MPC-HC x64

40w Lego Batman 3 high detail 720p gameplay

56w Prime95 full CPU load + Rthdribl full GPU load

These are impressive numbers, much better than I expected. Maybe part of it is the latest Windows 10 update which supports the new Speed Shift technology in Skylake. Speed Shift hands over CPU clockspeed control to the CPU itself, so it can ramp its internal clock up and down dramatically faster than the OS could. A Skylake CPU, with the right OS support, gets up to speed and back to idle faster, resulting in better performance and less overall power draw.

Skylake's on-board HD 530 graphics is about twice as fast as the HD 4400 that it replaces. Haswell offered the first reasonable big screen gaming GPU on an Intel CPU, but only just. 720p was mostly attainable in older games with the HD 4400, but I sometimes had to drop to medium detail settings, or lower. Two generations on, with the HD 530, even recent games like GRID Autosport, Lego Jurassic Park and so on can now be played at 720p with high detail settings at consistently high framerates. It depends on the game, but a few can even be played at 1080p now with medium settings. I did have at least one saved benchmark result on the disk to compare with:

GRID 2, 1280×720, high detail defaults (frames per second):

| CPU / GPU | Max | Min | Avg |
|---|---|---|---|
| i3-4130T, Intel HD 4400 GPU | 32 | 21 | 27 |
| i3-6100T, Intel HD 530 GPU | 50 | 32 | 39 |

Skylake is a legitimate gaming system on a chip, provided you are OK with 720p. It's tremendous fun to play Lego Batman 3 with my son.

At 720p using high detail settings, where there used to be many instances of notable slowdown, particularly in co-op, it now feels very smooth throughout. And since games are much cheaper on PC than consoles, particularly through Steam, we have access to a complete range of gaming options from new to old, from indie to mainstream – and an enormous, inexpensive back catalog.

Of course, this is still far from the performance you'd get out of a $300 video card or a $300 console. You'll never be able to play a cutting edge, high end game like GTA V or Witcher 3 on this HTPC box. But you may not need to. Steam in-home streaming has truly come into its own in the last year. I tried streaming Batman: Arkham Knight from my beefy home office computer to the HTPC at 1080p, and I was surprised to discover just how effortless it was; I couldn't detect any visual artifacts or input latency.

It's super easy to set up – just have the Steam client running on both machines at a logged in Windows desktop (can't be on the lock screen), and press the Stream button on any game that you don't have installed locally. Be careful with WiFi when streaming high resolutions, obviously, but if you're on a wired network, I found the experience is nearly identical to playing the game locally. As long as the game has native console / controller support, like Arkham Knight and Fallout 4, streaming to the big screen works great. Try it! That's how Henry and I are going to play through Just Cause 3 this Tuesday and I can't wait.

As before in 2013, I only upgraded the guts of the system, so the incremental cost is low.

GA-H170N-WIFI H170 motherboard — $120

8GB DDR4 RAM — $46

Intel i3-6100T 35w, 3.2 GHz dual core CPU — $155

That's a total of $321 for this upgrade cycle, about the cost of a new Xbox One or PS4. The i3-6100T should be a bit cheaper; according to Intel it has the same list price as the i3-6100, but suffers from weak availability. The motherboard I chose is a little more expensive, too, perhaps because it includes extras like built in WiFi and M.2 support, although I'm not using either quite yet. You might be able to source a cheaper H170 motherboard than mine.

The rest of the system has not changed much since 2013:

PicoPSU 90 — $50

Antec ISK 300-150 — $68

512GB SSD boot drive — $150

2TB 2.5" HDD × 2 — $200

Populate these items to taste, pick whatever drives and mini-ITX case you prefer, but definitely stick with the PicoPSU, because removing the large, traditional case power supply makes the setup both a) much more power efficient at low wattage, and b) much roomier inside the case and easier to install, upgrade, and maintain.

I also switched to Xbox One controllers, for no really good reason other than the Xbox 360 is getting more obsolete every month, and now that my beloved Rock Band 4 is available on next-gen systems, I'm trying to slowly evict the 360s from my house.

The Windows 10 wireless Xbox One adapter does have some perks. In addition to working with the newer and slightly nicer gamepads from the Xbox One, it supports an audio stream over each controller via the controller's headset connector. But really, for the purposes of Steam gaming, any USB controller will do.

While I've been over the moon in love with my HTPC for years, and I liked the Xbox 360, I have been thoroughly unimpressed with my newly purchased Xbox One. Both the new and old UIs are hard to use, it's quite slow relative to my very snappy HTPC, and it has a ton of useless features that I don't care about, like broadcast TV support. About all the Xbox One lets you do is sometimes play next gen games at 1080p without paying $200 or $300 for a fancy video card, and let's face it – the PS4 does that slightly better. If those same games are available on PC, you'll have a better experience streaming them from a gaming PC to either a cheap Steam streaming box, or a generalist HTPC like this one.

The Xbox One and PS4 are effectively plain old PCs, built on:

AMD 8-core x86 CPU, roughly Intel Atom class (aka slow)

8 GB RAM

AMD Radeon 77xx / 78xx GPUs

cheap commodity 512GB or 1TB hard drives (not SSDs)

The golden age of x86 gaming is well upon us. That's why the future of PC gaming is looking brighter every day. We can see it coming true in the solid GPU and idle power improvements in Skylake, riding the inevitable wave of x86 becoming the dominant kind of (non mobile, anyway) gaming for the foreseeable future.


November 19, 2015

To ECC or Not To ECC

On one of my visits to the Computer History Museum – and by the way this is an absolute must-visit place if you are ever in the San Francisco bay area – I saw an early Google server rack circa 1999 in the exhibits.

Not too fancy, right? Maybe even … a little janky? This is building a computer the Google way:

Instead of buying whatever pre-built rack-mount servers Dell, Compaq, and IBM were selling at the time, Google opted to hand-build their server infrastructure themselves. The sagging motherboards and hard drives are literally propped in place on handmade plywood platforms. The power switches are crudely mounted in front, the network cables draped along each side. The poorly routed power connectors snake their way back to generic PC power supplies in the rear.

Some people might look at these early Google servers and see an amateurish fire hazard. Not me. I see a prescient understanding of how inexpensive commodity hardware would shape today's internet. I felt right at home when I saw this server; it's exactly what I would have done in the same circumstances. This rack is a perfect example of the commodity x86 market D.I.Y. ethic at work: if you want it done right, and done inexpensively, you build it yourself.

This rack is now immortalized in the National Museum of American History. Urs Hölzle posted lots more juicy behind the scenes details, including the exact specifications:

Supermicro P6SMB motherboard

256MB PC100 memory

Pentium II 400 CPU

IBM Deskstar 22GB hard drives (×2)

Intel 10/100 network card

When I left Stack Exchange (sorry, ) one of the things that excited me most was embarking on a new project using 100% open source tools. That project is, of course, Discourse.

Inspired by Google and their use of cheap, commodity x86 hardware to scale on top of the open source Linux OS, I also built our own servers. When I get stressed out, when I feel the world weighing heavy on my shoulders and I don't know where to turn … I build servers. It's therapeutic.

I like to give servers a little pep talk while I build them. "Who's the best server! Who's the fastest server!"

— Jeff Atwood (@codinghorror) November 16, 2015

Don't judge me, man.

But more seriously, with the release of Intel's latest Skylake architecture, it's finally time to upgrade our 2013 era Discourse servers to the latest and greatest, something reflective of 2016 – which means building even more servers.

Discourse runs on a Ruby stack and one thing we learned early on is that Ruby demands exceptional single threaded performance, aka, a CPU running as fast as possible. Throwing umptazillion CPU cores at Ruby doesn't buy you a whole lot other than being able to handle more requests at the same time. Which is nice, but doesn't get you speed per se. Someone made a helpful technical video to illustrate exactly how this all works:

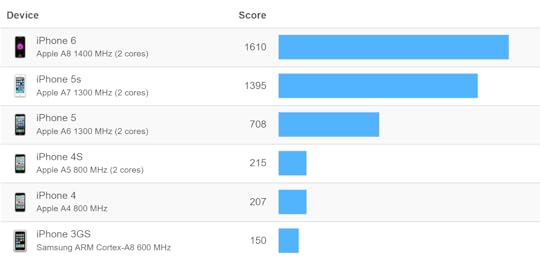

This is by no means exclusive to Ruby; other languages like JavaScript and Python also share this trait. And Discourse itself is a JavaScript application delivered through the browser, which exercises the mobile / laptop / desktop client CPU. Mobile devices reaching near-parity with desktops in single threaded performance is something we're betting on in a big way with Discourse.

So, good news! Although PC performance has been incremental at best in the last 5 years, between Haswell and Skylake, Intel managed to deliver a respectable per-thread performance bump. Since we are upgrading our servers from Ivy Bridge (very similar to the i7-3770k), the generation before Haswell, I'd expect a solid 33% performance improvement at minimum.

Even worse, the more cores they pack on a chip, the slower they all go. From Intel's current Xeon E5 lineup:

E5-1680 → 8 cores, 3.2 GHz

E5-1650 → 6 cores, 3.5 GHz

E5-1630 → 4 cores, 3.7 GHz
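The trade-off can be sketched with a deliberately naive Python model (an assumption, not a benchmark): a single Ruby request runs on one thread, so its time scales with clock speed, while total throughput scales with cores times clock. By that model the 8-core part can serve roughly 1.7× as many requests in parallel as the 4-core part, but each individual request takes about 16% longer.

```python
# Core counts and clocks (GHz) from the Xeon E5 list above.
XEONS = {
    "E5-1680": (8, 3.2),
    "E5-1650": (6, 3.5),
    "E5-1630": (4, 3.7),
}


def relative_to(chips, baseline):
    """Naive model of each chip relative to `baseline`.

    Returns {name: (time_per_request, total_throughput)} where:
      - time per request scales with 1 / clock (one request = one thread)
      - throughput scales with cores * clock (many requests in parallel)
    Assumes equal work per clock on every chip and ignores turbo, cache size,
    and memory bandwidth, so the numbers are illustrative only.
    """
    base_cores, base_ghz = chips[baseline]
    result = {}
    for name, (cores, ghz) in chips.items():
        time_per_request = base_ghz / ghz
        throughput = (cores * ghz) / (base_cores * base_ghz)
        result[name] = (time_per_request, throughput)
    return result


for name, (t, thr) in relative_to(XEONS, "E5-1630").items():
    print(f"{name}: {t:.2f}x time per request, {thr:.2f}x throughput")
```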

Which brings me to the following build for our core web tiers, which optimizes for "lots of inexpensive, fast boxes":

2013 build:

Xeon E3-1280 V2 Ivy Bridge 3.6 GHz / 4.0 GHz quad-core ($640)

SuperMicro X9SCM-F-O mobo ($190)

32 GB DDR3-1600 ECC ($292)

SC111LT-330CB 1U chassis ($200)

Samsung 830 512GB SSD ×2 ($1080)

1U Heatsink ($25)

Total: $2,427

Power: 31w idle, 87w BurnP6 load

2016 build:

i7-6700k Skylake 4.0 GHz / 4.2 GHz quad-core ($370)

SuperMicro X11SSZ-QF-O mobo ($230)

64 GB DDR4-2133 ($520)

CSE-111LT-330CB 1U chassis ($215)

Samsung 850 Pro 1TB SSD ×2 ($886)

1U Heatsink ($20)

Total: $2,241

Power: 14w idle, 81w BurnP6 load

So, about 8% cheaper than what we spent in 2013, with 2× the memory, 2× the storage (probably 50-100% faster too), and at least ~33% faster CPU. With lower power draw, to boot! Pretty good. Pretty, pretty, pretty, pretty good.
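The totals and the savings are easy to double-check from the component prices listed above. This short Python sketch shows the 2016 build comes in a little under 8% cheaper than the 2013 one.

```python
# Component prices in USD, from the 2013 and 2016 build lists above.
build_2013 = {"cpu": 640, "motherboard": 190, "ram": 292,
              "chassis": 200, "ssd_x2": 1080, "heatsink": 25}
build_2016 = {"cpu": 370, "motherboard": 230, "ram": 520,
              "chassis": 215, "ssd_x2": 886, "heatsink": 20}

total_2013 = sum(build_2013.values())
total_2016 = sum(build_2016.values())
savings = 1 - total_2016 / total_2013

print(f"2013: ${total_2013:,}  2016: ${total_2016:,}  savings: {savings:.1%}")
# 2013: $2,427  2016: $2,241  savings: 7.7%
```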

(Note that the memory bump is only possible thanks to Intel finally relaxing their iron fist of maximum allowed RAM at the low end; that's new to the Skylake generation.)

One thing is conspicuously missing in our 2016 build: Xeons, and ECC RAM. In my defense, this isn't intentional – we wanted the fastest per-thread performance and no Intel Xeon, either currently available or announced, goes to 4.0 GHz with Skylake. Paying half the price for a CPU with better per-thread performance than any Xeon, well, I'm not going to kid you, that's kind of a nice perk too.

Error-correcting code memory (ECC memory) is a type of computer data storage that can detect and correct the most common kinds of internal data corruption. ECC memory is used in most computers where data corruption cannot be tolerated under any circumstances, such as for scientific or financial computing.

Typically, ECC memory maintains a memory system immune to single-bit errors: the data that is read from each word is always the same as the data that had been written to it, even if one or more bits actually stored have been flipped to the wrong state. Most non-ECC memory cannot detect errors although some non-ECC memory with parity support allows detection but not correction.
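To make "detect and correct" concrete, here is a minimal Python sketch of a Hamming(7,4) code, the textbook single-error-correcting code. It is an illustration only: ECC DIMMs typically use a wider SECDED code (8 check bits per 64 data bits) that also detects, but cannot correct, double-bit errors, whereas this small code would silently "correct" a double flip to the wrong value. A plain parity bit, by contrast, can tell you that a single bit flipped but not which one.

```python
def even_parity_bit(bits):
    """Parity bit that makes the total count of 1s even.

    A single flipped bit changes the parity, so it can be detected,
    but parity alone cannot say which bit flipped.
    """
    return sum(bits) % 2


def hamming74_encode(data):
    """Encode 4 data bits as a 7-bit Hamming codeword.

    Positions 1..7 hold p1 p2 d1 p3 d2 d3 d4; each parity bit covers the
    positions whose index has that power of two set.
    """
    d1, d2, d3, d4 = data
    p1 = (d1 + d2 + d4) % 2  # positions 1, 3, 5, 7
    p2 = (d1 + d3 + d4) % 2  # positions 2, 3, 6, 7
    p3 = (d2 + d3 + d4) % 2  # positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit.

    Returns (data_bits, syndrome): syndrome is 0 if no error was seen,
    otherwise the 1-based position of the bit that was flipped back.
    """
    c = list(codeword)
    s1 = (c[0] + c[2] + c[4] + c[6]) % 2
    s2 = (c[1] + c[2] + c[5] + c[6]) % 2
    s3 = (c[3] + c[4] + c[5] + c[6]) % 2
    syndrome = s1 + 2 * s2 + 4 * s3  # binary position of the bad bit
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], syndrome


stored = hamming74_encode([1, 0, 1, 1])
stored[4] ^= 1  # simulate one bit flipping in memory (position 5)
print(hamming74_decode(stored))  # ([1, 0, 1, 1], 5)
```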

It's received wisdom in the sysadmin community that you always build servers with ECC RAM because, well, you build servers to be reliable, right? Why would anyone intentionally build a server that isn't reliable? Are you crazy, man? Well, looking at that cobbled together Google 1999 server rack, which also utterly lacked any form of ECC RAM, I'm inclined to think that reliability measured by "lots of redundant boxes" is more worthwhile and easier to achieve than the platonic ideal of making every individual server bulletproof.
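The "lots of redundant boxes" argument is easy to quantify under one big assumption: that servers fail independently. This minimal Python sketch uses a hypothetical 1% failure probability; each extra box multiplies the chance of a total outage by that 1%. Correlated failures (a bad batch of parts, shared power or networking) break the independence assumption, and redundancy only helps when a failing box fails loudly, not when it silently returns corrupted data.

```python
def prob_all_down(p_fail, n):
    """Chance that all n servers are down at once, assuming independent failures."""
    return p_fail ** n


def prob_service_up(p_fail, n):
    """Chance that at least one of n interchangeable servers is still up."""
    return 1 - prob_all_down(p_fail, n)


P_FAIL = 0.01  # hypothetical per-server failure probability, not measured data
for n in (1, 2, 3):
    print(f"{n} server(s): P(all down) = {prob_all_down(P_FAIL, n):.0e}, "
          f"P(service up) = {prob_service_up(P_FAIL, n):.6f}")
```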

Being the type of guy who likes to question stuff… I began to question. Why is it that ECC is so essential anyway? If ECC was so important, so critical to the reliable function of computers, why isn't it built into every desktop, laptop, and smartphone in the world by now? Why is it optional? This smells awfully… enterprisey to me.

Now, before everyone stops reading and I get permanently branded as "that crazy guy who hates ECC", I think ECC RAM is fine:

The cost difference between ECC and not-ECC is minimal these days.

The performance difference between ECC and not-ECC is minimal these days.

Even if ECC only protects you from rare 1% hardware error cases that you may never hit until you literally build hundreds or thousands of servers, it's cheap insurance.

I am not anti-insurance, nor am I anti-ECC. But I do seriously question whether ECC is as operationally critical as we have been led to believe, and I think the data shows modern, non-ECC RAM is already extremely reliable.

First, let's look at the Puget Systems reliability stats. These guys build lots of commodity x86 gamer PCs, burn them in, and ship them. They helpfully track statistics on how many parts fail either from burn-in or later in customer use. Go ahead and read through the stats.

For the last two years, CPU reliability has dramatically improved. What is interesting is that this lines up with the launch of the Intel Haswell CPUs which was when the CPU voltage regulation was moved from the motherboard to the CPU itself. At the time we theorized that this should raise CPU failure rates (since there are more components on the CPU to break) but the data shows that it has actually increased reliability instead.

Even though DDR4 is very new, reliability so far has been excellent. Where DDR3 desktop RAM had an overall failure rate in 2014 of ~0.6%, DDR4 desktop RAM had absolutely no failures.

SSD reliability has dramatically improved recently. This year Samsung and Intel SSDs only had a 0.2% overall failure rate compared to 0.8% in 2013.

Modern commodity computer parts from reputable vendors are amazingly reliable. And their trends show from 2012 onward essential PC parts have gotten more reliable, not less. (I can also vouch for the improvement in SSD reliability as we have had zero server SSD failures in 3 years across our 12 servers with 24+ drives, whereas in 2011 I was writing about the Hot/Crazy SSD Scale.) And doesn't this make sense from a financial standpoint? How does it benefit you as a company to ship unreliable parts? That's money right out of your pocket and the reseller's pocket, plus time spent dealing with returns.

We had a, uh, "spirited" discussion about this internally on our private Discourse instance.

This is not a new debate by any means, but I was frustrated by the lack of data out there. In particular, I'm really questioning the difference between "soft" and "hard" memory errors:

But what is the nature of those errors? Are they soft errors – as is commonly believed – where a stray alpha particle flips a bit? Or are they hard errors, where a bit gets stuck?

I absolutely believe that hard errors are reasonably common. RAM DIMMs can have bugs, or the chips on the DIMM can fail, or there's a design flaw in circuitry on the DIMM that only manifests in certain corner cases or under extreme loads. I've seen it plenty. But a soft error where a bit of memory randomly flips?

There are two types of soft errors, chip-level soft error and system-level soft error. Chip-level soft errors occur when the radioactive atoms in the chip's material decay and release alpha particles into the chip. Because an alpha particle contains a positive charge and kinetic energy, the particle can hit a memory cell and cause the cell to change state to a different value. The atomic reaction is so tiny that it does not damage the actual structure of the chip.

Outside of airplanes and spacecraft, I have a difficult time believing that soft errors happen with any frequency; otherwise most of the computing devices on the planet would be crashing left and right. I deeply distrust the anecdotal voodoo behind "but one of your computer's memory bits could flip, you'd never know, and corrupted data would be written!" It'd be one thing if we observed this regularly, but I've been unhealthily obsessed with computers since birth and I have never found random memory corruption to be a real, actual problem on any computers I have either owned or had access to.

But who gives a damn what I think. What does the data say?

A 2007 study found that the observed soft error rate in live servers was two orders of magnitude lower than previously predicted:

Our preliminary result suggests that the memory soft error rate in two real production systems (a rack-mounted server environment and a desktop PC environment) is much lower than what the previous studies concluded. Particularly in the server environment, with high probability, the soft error rate is at least two orders of magnitude lower than those reported previously. We discuss several potential causes for this result.

A 2009 study on Google's server farm notes that soft errors were difficult to find:

We provide strong evidence that memory errors are dominated by hard errors, rather than soft errors, which previous work suspects to be the dominant error mode.

Yet another large scale study from 2012 discovered that RAM errors were dominated by permanent failure modes typical of hard errors:

Our study has several main findings. First, we find that approximately 70% of DRAM faults are recurring (e.g., permanent) faults, while only 30% are transient faults. Second, we find that large multi-bit faults, such as faults that affects an entire row, column, or bank, constitute over 40% of all DRAM faults. Third, we find that almost 5% of DRAM failures affect board-level circuitry such as data (DQ) or strobe (DQS) wires. Finally, we find that chipkill functionality reduced the system failure rate from DRAM faults by 36x.

In the end, we decided the non-ECC RAM risk was acceptable for every tier of service except our databases. Which is kind of a bummer since higher end Skylake Xeons got pushed back to the extra-fancy Purley platform upgrade in 2017. Regardless, we burn in every server we build with a complete run of memtest86 and overnight prime95/mprime, and you should too. There's one whirring away through endless memory tests right behind me as I write this.
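Pattern-based memory testing is how a burn-in catches hard errors like the stuck bits described above. Tools such as memtest86 boot on bare metal and run many test patterns across physical RAM; the Python sketch below only illustrates the core write-then-verify idea on an in-process buffer and cannot find real DRAM faults. `StuckBitBuffer` is a hypothetical stand-in for a DIMM with one bit stuck at 1, and the example shows why several patterns are needed: an all-ones pattern never notices it.

```python
class StuckBitBuffer(bytearray):
    """Hypothetical buffer simulating a hard error: one bit stuck at 1."""

    def __init__(self, size, bad_offset, bad_bit):
        super().__init__(size)
        self.bad_offset = bad_offset
        self.stuck_mask = 1 << bad_bit

    def __setitem__(self, index, value):
        if index == self.bad_offset:
            value |= self.stuck_mask  # the stuck bit ignores writes of 0
        super().__setitem__(index, value)


def pattern_test(buf, patterns=(0x00, 0xFF, 0x55, 0xAA)):
    """Write each pattern to every byte, read it back, and report bad offsets."""
    bad = set()
    for pattern in patterns:
        for i in range(len(buf)):
            buf[i] = pattern
        for i in range(len(buf)):
            if buf[i] != pattern:
                bad.add(i)
    return sorted(bad)


print(pattern_test(bytearray(64)))              # healthy buffer -> []
print(pattern_test(StuckBitBuffer(64, 17, 3)))  # stuck bit at offset 17 -> [17]
```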

I find it very, very suspicious that ECC – if it is so critical to preventing these random, memory corrupting bit flips – has not already been built into every type of RAM that we ship in the ubiquitous computing devices all around the world as a cost of doing business. But I am by no means opposed to paying a small insurance premium for server farms, either. You'll have to look at the data and decide for yourself. Mostly I wanted to collect all this information in one place so people who are also evaluating the cost/benefit of ECC RAM for themselves can read the studies and decide what they want to do.

Please feel free to leave comments if you have other studies to cite, or significant measured data to share.


September 17, 2015

Building a PC, Part VIII: Iterating

The last time I seriously upgraded my PC was in 2011, because the PC is over. And in some ways, it truly is – they can slap a ton more CPU cores on a die, for sure, but the overall single core performance increase from a 2011 high end Intel CPU to today's high end Intel CPU is … really quite modest, on the order of maybe 30% to 40%.

In that same timespan, mobile and tablet CPU performance has continued to just about double every year. Which means the forthcoming iPhone 6s will be almost 10 times faster than the iPhone 4 was.

Remember, that's only single core CPU performance – I'm not even factoring in the move from single, to dual, to triple core as well as generally faster memory and storage. This stuff is old hat on desktop, where we've had mainstream dual cores for a decade now, but they are huge improvements for mobile.

When your mobile devices get 10 times faster in the span of four years, it's hard to muster much enthusiasm for a modest 1.3× or 1.4× iterative improvement in your PC's performance over the same time.
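Those two figures compare more directly as annual rates. Assuming smooth compounding over the four years, this quick Python check turns the roughly 10× mobile gain into about 1.78× per year, against about 1.09× per year for the desktop's 1.4×. (Strict doubling every year would compound to 16× over the same span.)

```python
def annual_growth(total_speedup, years):
    """Per-year factor that compounds to `total_speedup` over `years` years."""
    return total_speedup ** (1 / years)


mobile = annual_growth(10, 4)    # ~10x over four years, from the text
desktop = annual_growth(1.4, 4)  # ~1.4x over the same four years
print(f"mobile ~{mobile:.2f}x per year, desktop ~{desktop:.2f}x per year")
print(f"doubling every year for four years would be {2 ** 4}x")
```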

I've been slogging away at this for a while; my current PC build series spans 7 years:

Building a PC, Part VII: Rebooting

Building a PC, Part VI: Rebuilding

Building a PC, Part V: Upgrading

Building a PC, Part IV: Now It's Your Turn

Building a PC, Part III: Overclocking

Building a PC, Part II: Burn in

Building a PC, Part I: Minimal boot

The fun part of building a PC is that it's relatively easy to swap out the guts when something compelling comes along. CPU performance improvements may be modest these days, but there are still bright spots where performance is increasing more dramatically. Mainly in graphics hardware and, in this case, storage.

The current latest-and-greatest Intel CPU is Skylake. Like Sandy Bridge in 2011, which brought us much faster 6 Gbps SSD-friendly drive connectors (although only two of them), the Skylake platform brings us another key storage improvement – the ability to connect SSDs directly to the PCI Express lanes. Which looks like this:

… and performs like this:

Now there's the 3× performance increase we've been itching for! To be fair, a raw increase of 3× in drive performance doesn't necessarily equate to a computer that boots in one third the time. But here's why disk speed matters:

If the CPU registers are how long it takes you to fetch data from your brain, then going to disk is the equivalent of fetching data from Pluto.

What I've always loved about SSDs is that they attack the PC's worst-case performance scenario, when information has to come off the slowest device inside your computer – the hard drive. SSDs massively reduced the variability of requests for data. Let's compare L1 cache access time to minimum disk access time:

Traditional hard drive

0.9 ns → 10 ms (variability of 11,111,111×)

SSD

0.9 ns → 150 µs (variability of 166,667×)

SSDs provide a reduction in overall performance variability of 66×! And when comparing latency:

7200rpm HDD — 1800ms

SATA SSD — 4ms

PCIe SSD — 0.34ms

Even going from a fast SATA SSD to a PCI Express SSD, you're looking at more than a 10× reduction in drive latency.
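The variability and latency ratios above are simple divisions. This quick Python check uses only the figures quoted in the text and reproduces them: the HDD-to-SSD reduction comes out at about 66.7×, and SATA to PCIe at about 11.8×.

```python
# Access times from the text, converted to seconds.
L1_CACHE = 0.9e-9   # 0.9 ns
HDD_MIN = 10e-3     # 10 ms
SSD_MIN = 150e-6    # 150 µs

hdd_spread = HDD_MIN / L1_CACHE
ssd_spread = SSD_MIN / L1_CACHE
print(f"HDD spread {hdd_spread:,.0f}x, SSD spread {ssd_spread:,.0f}x, "
      f"reduction {hdd_spread / ssd_spread:.1f}x")

# Drive latency figures from the list above, in milliseconds.
latency_ms = {"7200rpm HDD": 1800, "SATA SSD": 4, "PCIe SSD": 0.34}
sata_to_pcie = latency_ms["SATA SSD"] / latency_ms["PCIe SSD"]
print(f"SATA SSD to PCIe SSD latency reduction: {sata_to_pcie:.1f}x")
```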

Here's what you need:

256GB Samsung SM951 M.2 drive $213

Asus Z170-A motherboard $165

Intel i5-6600k Skylake CPU $270

16GB DDR4 memory $134

These are the basics. It's best to use the M.2 connection as a fast boot / system drive, so I scaled it back to the smaller 256 GB version. I also had a lot of trouble getting my hands on the faster i7-6700k CPU, which appears supply constrained and is currently overpriced as a result.

Even though the days of doubling (or even 1.5×-ing) CPU performance are long gone for PCs, there are still some key iterative performance milestones to hit. Like mainstream 4k displays, I believe mainstream PCI express SSDs are another important step in the overall evolution of desktop computing. Or its corpse, anyway.


August 18, 2015

Our Brave New World of 4K Displays

It's been three years since I last upgraded monitors. Those inexpensive Korean 27" IPS panels, with a resolution of 2560×1440 – also known as 1440p – have served me well. You have no idea how many people I've witnessed being Wrong On The Internet on these babies.

I recently got the upgrade itch real bad:

4K monitors have stabilized as a category, moving from super bleeding edge "I'm probably going to regret buying this" early adopter stuff toward mainstream maturity.

Windows 10, with its promise of better high DPI handling, was released. I know, I know, we've been promised reasonable DPI handling in Windows for the last five years, but hope springs eternal. This time will be different!™

I needed a reason to buy a new high end video card, which I was also itching to upgrade, and simplify from a dual card config back to a (very powerful) single card config.

I wanted to rid myself of the monitor power bricks and USB powered DVI to DisplayPort converters that those Korean monitors required. I covet simple, modern DisplayPort connectors. I was beginning to feel like a bad person because I had never even owned a display that had a DisplayPort connector. First world problems, man.

1440p at 27" is decent, but it's also … sort of an awkward no-man's land. Nowhere near high enough resolution to be retina, but it is high enough that you probably want to scale things a bit. After living with this for a few years, I think it's better to just suck it up and deal with giant pixels (34" at 1440p, say), or go with something much more high resolution and trust that everyone is getting their collective act together by now on software support for high DPI.

Given my great experiences with modern high DPI smartphone and tablet displays (are there any other kind these days?), I want those same beautiful high resolution displays on my desktop, too. I'm good enough, I'm smart enough, and doggone it, people like me.

I was excited, then, to discover some strong recommendations for the Asus PB279Q.

The Asus PB279Q is a 27" panel, same size as my previous cheap Korean IPS monitors, but it is more premium in every regard:

3840×2160

"professional grade" color reproduction

thinner bezel

lighter weight

semi-matte (not super glossy)

integrated power (no external power brick)

DisplayPort 1.2 and HDMI 1.4 support built in

It is also a more premium monitor in price, at around $700, whereas I got my super-cheap no-frills Korean IPS 1440p monitors for roughly half that price. But when I say no-frills, I mean it – these Korean monitors didn't even have on-screen controls!

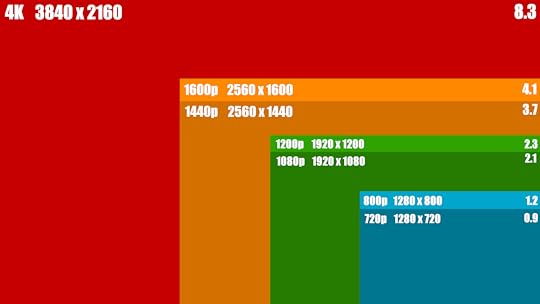

4K is a surprisingly big bump in resolution over 1440p — we go from 3.7 to 8.3 megapixels.

But, is it … retina?

It depends how you define that term, and from what distance you're viewing the screen. Per Is This Retina:

27" 3840×2160

'retina' at a viewing distance of 21"

27" 2560×1440

'retina' at a viewing distance of 32"

With proper computer desk ergonomics you should be sitting with the top of your monitor at eye level, at about an arm's length in front of you. I just measured my arm and, fully extended, it's about 26". Sitting at my desk, I'm probably about that distance from my monitor or a bit closer, but certainly beyond the 21" necessary to call this monitor 'retina' despite being 163 PPI. It definitely looks that way to my eye.
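The Is This Retina figures can be reproduced assuming the common rule of thumb that a display looks "retina" once a single pixel subtends less than one arcminute of your vision. This Python sketch (the function names are illustrative) computes megapixels, pixels per inch, and that threshold distance for both 27" panels; under that assumption it lands on the 3.7 vs 8.3 megapixels, 163 PPI, 21", and 32" figures quoted above.

```python
import math


def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch along the diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


def retina_distance_in(ppi_value, arcminutes=1.0):
    """Viewing distance (inches) beyond which one pixel subtends < `arcminutes`.

    Uses the common 1-arcminute visual acuity rule of thumb.
    """
    pixel_in = 1 / ppi_value
    return pixel_in / math.tan(math.radians(arcminutes / 60))


for w, h in ((3840, 2160), (2560, 1440)):
    p = ppi(w, h, 27)
    print(f'27" {w}x{h}: {w * h / 1e6:.1f} MP, {p:.0f} PPI, '
          f'retina beyond {retina_distance_in(p):.0f}"')
```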

I have more words to write here, but let's cut to the chase for the impatient and the TL;DR crowd. This 4K monitor is totally amazing and you should buy one. It feels exactly like going from the non-retina iPad 2 to the retina iPad 3 did, except on the desktop. It makes all the text on your screen look beautiful. There is almost no downside.

There are a few caveats, though:

You will need a beefy video card to drive a 4K monitor. I personally went all out for the GeForce 980 Ti, because I might want to actually game at this native resolution, and the 980 Ti is the undisputed fastest single video card in the world at the moment. If you're not a gamer, any midrange video card should do fine.

Display scaling is definitely still a problem at times with a 4K monitor. You will run into apps that don't respect DPI settings and end up magnifying-glass tiny. Scott Hanselman provided many examples in January 2014, and although stuff has improved since then with Windows 10, it's far from perfect.

Browsers scale great, and the OS does too, but if you use any desktop apps built by careless developers, you'll run into this. The only good long term solution is to spread the gospel of 4K and shame them into submission with me. Preach it, brothers and sisters!

Enable DisplayPort 1.2 in the monitor settings so you can turn on 60Hz. Trust me, you do not want to experience a 30Hz LCD display. It is unspeakably bad, enough to put one off computer screens forever. For people who tell you they can't see the difference between 30fps and 60fps, just switch their monitors to 30Hz and watch them squirm in pain.

Watching this, I begin to understand why gamers want 90Hz, 120Hz or even 144Hz monitors. 60fps / 60 Hz should be the absolute minimum, no matter what resolution you're running. Luckily DisplayPort 1.2 enables 60 Hz at 4K, but barely. You'll need DisplayPort 1.3+ to do better than that.

Disable the crappy built in monitor speakers. Headphones or bust, baby!

Turn down the brightness from the standard factory default of retina scorching 100% to something saner like 50%. Why do manufacturers do this? Is it because they hate eyeballs? While you're there, you might mess around with some basic display calibration, too.
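The 30 Hz vs 60 Hz behavior comes down to link bandwidth. As a rough Python sketch, ignoring blanking overhead and assuming 24-bit color, this compares the raw 4K pixel rate to the usable bandwidth of a four-lane DisplayPort link at each generation's per-lane rate. It suggests why a monitor left in DisplayPort 1.1 mode tops out at 30 Hz at 4K; because blanking adds overhead on a real link, it also overstates the headroom.

```python
# Per-lane link rates in Gbit/s; DisplayPort 1.1 through 1.3 use 4 lanes
# and 8b/10b encoding, so only 80% of the raw rate carries data.
LINK_RATES = {"DP 1.1 (HBR)": 2.7, "DP 1.2 (HBR2)": 5.4, "DP 1.3 (HBR3)": 8.1}
LANES = 4
ENCODING_EFFICIENCY = 8 / 10


def effective_gbps(lane_rate):
    """Usable data rate of a 4-lane link after 8b/10b encoding overhead."""
    return LANES * lane_rate * ENCODING_EFFICIENCY


def pixel_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Active pixel data rate only; blanking intervals add more on a real link."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


for hz in (30, 60, 120):
    need = pixel_gbps(3840, 2160, hz)
    fits = [name for name, rate in LINK_RATES.items() if effective_gbps(rate) >= need]
    print(f"4K at {hz} Hz: {need:.2f} Gbit/s, fits in: {', '.join(fits) or 'none'}")
```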

This Asus PB279Q 4K monitor is the best thing I've upgraded on my computer in years. Well, actually, thing(s) I've upgraded, because I am not f**ing around over here.

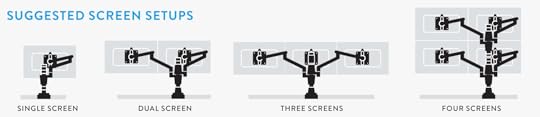

I'm a long time proponent of the triple monitor lifestyle, and the only thing better than a 4K display is three 4K displays! That's 11,520×2,160 pixels to you, or 6,480×3,840 if rotated.

(Good luck attempting to game on this configuration with all three monitors active, though. You're gonna need it. Some newer games are too demanding to run on "High" settings on a single 4K monitor, even with the mighty Nvidia 980 Ti.)

I've also been experimenting with better LCD monitor arms that properly support my preferred triple monitor configurations. Here's a picture from the back, where all the action is:

These are the Flo Monitor Supports, and they free up a ton of desk space in a triple monitor configuration while also looking quite snazzy. I'm fond of putting my keyboard just under the center monitor, which isn't possible with any monitor stand.

With these Flo arms you can "scale up" your configuration from dual to triple or even quad (!) monitor later.

4K monitors are here, they're not that expensive, the desktop operating systems and video hardware are in place to properly support them, and in the appropriate size (27") we can finally have an amazing retina display experience at typical desktop viewing distances. Choose the Asus PB279Q 4K monitor, or whatever 4K monitor you prefer, but take the plunge.

In 2007, I asked Where Are The High Resolution Displays, and now, 8 years later, they've finally, finally arrived on my desktop. Praise the lord and pass the pixels!

Oh, and gird your loins for 8K one day. It, too, is coming.


August 8, 2015

Welcome to The Internet of Compromised Things

This post is a bit of a public service announcement, so I'll get right to the point:

Every time you use WiFi, ask yourself: could I be connecting to the Internet through a compromised router with malware?

It's becoming more and more common to see malware installed not at the server, desktop, laptop, or smartphone level, but at the router level. Routers have become quite capable, powerful little computers in their own right over the last 5 years, and that means they can, unfortunately, be harnessed to work against you.

I write about this because it recently happened to two people I know.

.@jchris A friend got hit by this on newly paved win8.1 computer. Downloaded Chrome, instantly infected with malware. Very spooky.

— not THE Damien Katz (@damienkatz) May 20, 2015

@codinghorror *no* idea and there’s almost ZERO info out there. Essentially malicious JS adware embedded in every in-app browser

— John O'Nolan (@JohnONolan) August 7, 2015

In both cases, they eventually determined the source of the problem was that the router they were connecting to the Internet through had been compromised.

This is way more evil genius than infecting a mere computer. If you can manage to systematically infect common home and business routers, you can potentially compromise every computer connected to them.

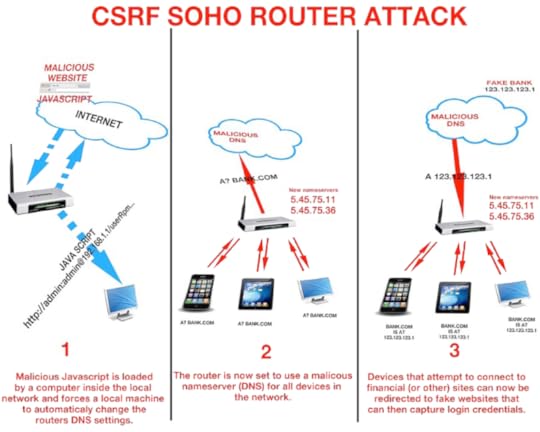

Hilarious meme images I am contractually obligated to add to each blog post aside, this is scary stuff and you should be scared.

Router malware is the ultimate man-in-the-middle attack. For all meaningful traffic sent through a compromised router that isn't HTTPS encrypted, it is 100% game over. The attacker will certainly be sending all that traffic somewhere they can sniff it for anything important: logins, passwords, credit card info, other personal or financial information. And they can direct you to phishing websites at will – if you think you're on the "real" login page for the banking site you use, think again.

Heck, even if you completely trust the person whose router you are using, they could technically be doing this to you. But they probably aren't.

Probably.

In John's case, the attackers inserted annoying ads in all unencrypted web traffic, which is an obvious tell to a sophisticated user. But how exactly would the average user figure out where this junk is coming from (or worse, assume the regular web is just full of ad junk all the time), when even a technical guy like John – founder of the open source Ghost blogging software used on this very blog – was flummoxed?

But that's OK, we're smart users who would only access public WiFi using HTTPS websites, right? Sadly, even if the traffic is HTTPS encrypted, it can still be subverted! There's an extremely technical blow-by-blow analysis at Cryptostorm, but the TL;DR is this:

Compromised router answers DNS req for *.google.com to 3rd party with faked HTTPS cert, you download malware Chrome. Game over.

HTTPS certificate shenanigans. DNS and BGP manipulation. Very hairy stuff.
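The forged-certificate trick only works if the victim's software accepts a certificate it shouldn't. As a rough illustration, here is a minimal Python sketch (assuming Python 3.7+ and only the standard `ssl` and `socket` modules; `strict_tls_context` and `fetch_peer_cert` are hypothetical helper names, not anything from the Cryptostorm analysis) of a client that insists on a valid certificate chain and a matching hostname. Sent to an attacker's server by a compromised router's DNS, it fails with `ssl.SSLCertVerificationError` instead of connecting. It cannot help if a trusted certificate authority was somehow tricked into issuing the fake certificate.

```python
import socket
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Build a TLS context that rejects forged or mismatched certificates."""
    # create_default_context() loads the system's trusted CA certificates
    # and already enables both checks below; setting them explicitly just
    # documents the intent.
    context = ssl.create_default_context()
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED
    return context


def fetch_peer_cert(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Connect to host over TLS and return its verified certificate.

    If DNS points us at a server whose certificate does not chain to a
    trusted CA, or does not match `host`, the handshake raises
    ssl.SSLCertVerificationError before any data is exchanged.
    """
    context = strict_tls_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        # server_hostname is used both for SNI and for the hostname check.
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

Calling `fetch_peer_cert("www.google.com")` on a healthy network returns the certificate as a dict; behind a router handing out a faked certificate, the same call raises, and `err.verify_message` on the caught exception says why.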

How is this possible? Let's start with the weakest link, your router. Or more specifically, the programmers responsible for coding the admin interface to your router.

They must be terribly incompetent coders to let your router get compromised over the Internet, since one of the major selling points of a router is to act as a basic firewall layer between the Internet and you… right?

In their defense, that part of a router generally works as advertised. More commonly, you aren't being attacked from the hardened outside. You're being attacked from the soft, creamy inside.

That's right, the calls are coming from inside your house!

By that I mean you'll visit a malicious website that scripts your own browser to access your router's web-based admin pages, log in with the default admin password (or reset it), and reconfigure the router.

Nasty, isn't it? They attack from the inside using your own browser. But that's not the only way.

Maybe you accidentally turned on remote administration, so your router can be modified from the outside.

Maybe you left your router's admin passwords at default.

Maybe there is a genuine external exploit for your router and you're running a very old version of firmware.

Maybe your ISP provided your router and made a security error in the configuration of the device.

In addition to being kind of terrifying, this does not bode well for the Internet of Things.

Internet of Compromised Things, more like.

OK, so what can we do about this? There's no perfect answer; I think it has to be a defense in depth strategy.

Inside Your Home

Buy a new, quality router. You don't want a router that's years old and hasn't been updated. But on the other hand you also don't want something too new that hasn't been vetted for firmware and/or security issues in the real world.

Also, any router your ISP provides is going to be about as crappy and "recent" as the awful stereo system you get in a new car. So I say stick with well-known consumer brands. There are some hardcore folks who think all consumer routers are trash, so YMMV.

I can recommend the Asus RT-AC87U – it did very well in the SmallNetBuilder tests, Asus is a respectable brand, it's been out a year, and for most people, this is probably an upgrade over what you currently have without being totally bleeding edge overkill. I know it is an upgrade for me.

(I am also eagerly awaiting Eero as a domestic best of breed device with amazing custom firmware, and have one pre-ordered, but it hasn't shipped yet.)

Download and install the latest firmware. Ideally, do this before connecting the device to the Internet. But if you connect and then immediately use the firmware auto-update feature, who am I to judge you?

Change the default admin passwords. Don't leave them at the documented defaults, because then any script that knows those defaults can log in to your router.

Turn off WPS. Turns out the Wi-Fi Protected Setup feature intended to make it "easy" to connect to a router by pressing a button or entering a PIN made it … a bit too easy. This is always on by default, so be sure to disable it.
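Why "a bit too easy"? A WPS PIN is 8 digits, but the last digit is a checksum of the first 7, and (as was widely publicized in late 2011) the protocol confirms whether the first half of the PIN is correct before checking the second half. So instead of 10^7 guesses, an attacker needs at most 10^4 + 10^3 = 11,000. The Python sketch below works through that arithmetic and computes the checksum digit; `wps_checksum` is a hypothetical helper name, and the weighting (alternating 3 and 1 from the rightmost digit) is the commonly documented WPS checksum, so treat it as illustrative.

```python
def wps_checksum(first7: int) -> int:
    """Return the 8th (checksum) digit for a 7-digit WPS PIN prefix.

    Digits are weighted 3, 1, 3, 1, ... starting from the rightmost digit.
    """
    accum = 0
    while first7:
        accum += 3 * (first7 % 10)  # digit in a "weight 3" position
        first7 //= 10
        accum += first7 % 10        # digit in a "weight 1" position
        first7 //= 10
    return (10 - accum % 10) % 10


# 8 digits, but the last one is derived from the other 7.
NAIVE_GUESSES = 10 ** 7
# The first 4 digits are confirmed on their own; the second half then
# has only 3 free digits plus the checksum.
SPLIT_GUESSES = 10 ** 4 + 10 ** 3

print(f"12345670 is valid: {wps_checksum(1234567) == 0}")
print(f"worst case: {SPLIT_GUESSES:,} guesses instead of {NAIVE_GUESSES:,}")
```

At one guess every few seconds, 11,000 guesses is hours of work rather than the better part of a year, unless the router rate-limits PIN attempts.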

Make sure remote administration is turned off. I've never owned a router that ever had this on by default, but I suppose it can't hurt to check.

For WiFi, turn on WPA2+AES and use a long, strong password. Again, I feel most modern routers get the defaults right these days, but just check. The password is your responsibility, and password strength matters tremendously for wireless security, so be sure to make it a long one – at least 20 characters with all the variability you can muster.
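To put numbers on "long": a password drawn uniformly at random from the 94 printable, non-space ASCII characters carries log2(94) ≈ 6.55 bits per character, so 20 characters give about 131 bits. The Python sketch below generates such a passphrase with the standard `secrets` module and enforces the WPA2 passphrase limit of 8 to 63 characters on top of the 20-character minimum suggested here. The function names are hypothetical, and using the full punctuation set is an assumption; some devices make certain symbols awkward to type.

```python
import math
import secrets
import string

# Printable ASCII minus space: 52 letters + 10 digits + 32 punctuation = 94.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def make_wifi_passphrase(length: int = 20) -> str:
    """Return a random passphrase usable as a WPA2 pre-shared key.

    WPA2 passphrases must be 8 to 63 printable ASCII characters; this
    sketch also enforces a minimum of 20.
    """
    if not 20 <= length <= 63:
        raise ValueError("length must be between 20 and 63")
    # secrets.choice draws from the OS CSPRNG, unlike random.choice.
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def entropy_bits(length: int, alphabet_size: int = len(ALPHABET)) -> float:
    """Bits of entropy in a uniformly random string of this length."""
    return length * math.log2(alphabet_size)
```

`make_wifi_passphrase()` returns a fresh 20-character passphrase each call, and `entropy_bits(20)` comes out to roughly 131.1 bits.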

Pick a unique SSID. Default SSIDs just scream "hack me, for I have all defaults and a clueless owner." And no, don't bother "hiding" your SSID, it's a waste of time.

Experts only: install an open source firmware. I discussed this a fair bit in Everyone Needs a Router, but you have to be very careful which router model you buy, and you'll probably need to stick with older models. There are several which are specifically sold to be friendly to open source firmware.

Outside Your Home

Well, this one is simple. Assume everything you do outside your home, on a remote network or over WiFi, is being monitored by IBGs: Internet Bad Guys.

I know, kind of an oppressive way to voyage out into the world, but it's better to start out with a defensive mindset, because you could be connecting to anyone's compromised router or network out there.

But, good news. There are only two key things you need to remember once you're outside, facing down that fiery ball of hell in the sky and armies of IBGs.

Never access anything but HTTPS websites.

If it isn't available over HTTPS, don't go there!

You might be OK with HTTP if you are not logging in to the website, just browsing it, but even then IBGs could inject malware in the page and potentially compromise your device. And never, ever enter anything over HTTP you aren't 100% comfortable with bad guys seeing and using against you somehow.

We've made tremendous progress in HTTPS Everywhere over the last 5 years, and these days most major websites offer (or even better, force) HTTPS access. So if you just want to quickly check your Gmail or Facebook or Twitter, you will be fine, because those services all force HTTPS.
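If you write scripts or tools that fetch things while you're on someone else's network, the same rule can be enforced in code. Here is a minimal Python sketch, assuming you simply want to refuse any URL that isn't `https://` before a request goes out; `require_https` and `InsecureURLError` are hypothetical names, not part of any library.

```python
from urllib.parse import urlsplit


class InsecureURLError(ValueError):
    """Raised for URLs that would send traffic without TLS."""


def require_https(url: str) -> str:
    """Return url unchanged if it uses HTTPS, else raise InsecureURLError."""
    parts = urlsplit(url)
    # urlsplit lowercases the scheme, so "HTTPS://..." passes too.
    if parts.scheme != "https":
        raise InsecureURLError(f"refusing non-HTTPS URL: {url!r}")
    if not parts.hostname:
        raise InsecureURLError(f"URL has no host: {url!r}")
    return url
```

`require_https("https://mail.google.com/")` hands the URL back, while `require_https("http://example.com/")` raises. This only checks the scheme; rejecting a forged certificate is still the job of the TLS library that makes the actual connection.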

If you must access non-HTTPS websites, or you are not sure, always use a VPN.

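A VPN encrypts all your traffic between your device and the VPN provider, so the router or network you're connected through can't read or tamper with it, even when the website itself isn't HTTPS. You do have to worry about whether or not you trust your VPN provider, but that's a much longer discussion than I want to get into right now.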

It's a good idea to pick a go-to VPN provider so you have one ready and get used to how it works over time. Initially it will feel like a bunch of extra work, and it kinda is, but if you care about your security, an encrypt-everything VPN is bedrock. And if you don't care about your security, well, why are you even reading this?

If it feels like these are both variants of the same rule, always strongly encrypt everything, you aren't wrong. That's the way things are headed. The math is as sound as it ever was – but unfortunately the people and devices, less so.

Be Safe Out There

Until I heard Damien's story and John's story, I had no idea router hardware could be such a huge point of compromise. I didn't realize that you could be innocently visiting a friend's house, and because he happens to be the parent of three teenage boys and the owner of an old, unsecured router that you connect to via WiFi … your life will suddenly get a lot more complicated.

As the amount of stuff we connect to the Internet grows, we have to understand that the Internet of Things is a bunch of tiny, powerful computers, too – and they need the same strong attention to security that our smartphones, laptops, and servers already enjoy.


August 5, 2015

I Tried VR and It Was Just OK

It's been about a year and a half since I wrote The Road to VR, and a … few … things have happened since then.

Facebook bought Oculus for a skadillion dollars

I have to continually read thinkpieces describing how the mere act of strapping a VR headset on your face is such a transformative, disruptive, rapturous experience that you'll never look at the world the same way again.

I am somewhat OK with the former, although the idea of my heroes John Carmack and Michael Abrash as Facebook employees still raises my hackles. But the latter is more difficult to stomach. And it just doesn't stop.

For example, this recent WSJ piece. (I can't link directly to it; you have to click through from Google search results to get past the paywall.)

I’ll spare you the rapturous account of the time I sculpted in three dimensions with light, fire, leaves and rainbows inside what felt like a real-life version of a holodeck from “Star Trek.” Writing about VR is like fiction about sex—seldom believable and never up to the task.

If you really want to understand how compelling VR is, you just have to try it. And I guarantee you will. At some point in the next couple of years, one of your already-converted friends will insist you experience it, the same way someone gave you your first turn at a keyboard or with a touch screen. And it will be no less a transformative experience.

I don't mean to call out the author here. There are a dozen other similarly breathless VR articles I could cite, where an amazing VR wonderland is looming right around the corner for all of us, any day now. The hype levels are off the charts. And if you haven't tried it, boy, you just don't know! It can't be explained, it must be experienced! There are people who honestly believe that in 5 years nobody will make non-VR games any more.

Well, I have experienced modern VR. A lot. I've tried the Oculus DK1, the Oculus DK2, and a 360° backpack-and-controllers Survios rig, which looks something like this:

Based on those experiences, I can't reconcile these hype levels with what I felt. At all. Right now, VR is not something I'd unconditionally recommend to a fellow avid gamer, much less a casual gamer.

To be honest, when I tried the DK1 and DK2, after a few hours of demos and exploration, I couldn't wait to get the headset off. Not because I was motion sick – I don't get motion sick, and never have – but because I was bored. And a little frustrated by control limitations. Not exactly the stuff transformative world-changing disruption is made of.

Here's what that experience looks like, by the way. You can practically feel the gaming excitement dripping off me.

And if you don't find watching me experience my virtual world fascinating (although I can't imagine why) I suppose you can enjoy what's on my screen:

Chroma-shifted, stereographic, fisheye VR gibberish.

I've always been the first kid on my block to recommend an awesome, transformative gaming experience, from the Atari 2600 to the Kinect. I mean, that's kind of who I am, isn't it? The alpha geek, the guy who owned a Vectrex and thought vector graphics were the cat's pajamas, the guy who bought one of the first copies of Guitar Hero in 2005 and would not shut up about it. For that matter I dragged my buddies to a VR storefront in Boulder, Colorado circa 1993 so we could play Dactyl Nightmare. And I have to say, in my alpha geek opinion, modern VR has a long way to go before it'll be ready for the rapturous smartphone levels of adoption that media pundits imply is just around the corner.

I apologize if this comes off as negative, and no, I haven't tried the magical new VR headset models that are Just Around The Corner and Will Change Everything. I'll absolutely try them when they are available. Let me be clear that I think the technical challenges around VR are deep, hard, and fascinating, and I could not be happier that some of the best programmers of our generation are working on this stuff. But from what I've seen and experienced to date, there is just no way that VR is going to be remotely mainstream in 5 years. I'm doubtful that can happen in a decade or even two decades, to be honest, but a smart person always hedges their bets when trying to predict the future.

I think the current state of VR, or at least the "strap a nice smartphone or two on your face" version of it, has quite a few fundamental physical problems to deal with before it has any chance of being mainstream.

It should be as convenient as a pair of glasses

Nobody "enjoys" strapping two pounds of stuff on their face unless they are in a hazardous materials situation. We can barely get people to wear bicycle helmets, and yet they are going to be lining up around the block to slap this awkward, gangly VR contraption on their head? Existing VR headsets get awfully sweaty after 30 minutes of use, and they're also difficult to fit over glasses. The idea of gaming with a heavy, sweaty, uncomfortable headset on for hours at a time isn't too appealing – and that's coming from a guy who thinks nothing of spending 6 hours in a gaming jag with headphones on.

For VR to be quick and easy and pervasive, the headset would need to be so miniaturized as to be basically invisible – akin to putting on a cool pair of sunglasses.

Maybe current VR headsets are like the old brick cellphones from the 90s. The question is, how quickly can they get from 1990 to 2007?

It should be wireless

The world has been inexorably moving towards wireless everything, but in this regard VR headsets are a glorious throwback to science fiction movies from the 1970s. Your VR headset and everything else on it will be physically wired, in multiple ways, to a powerful computer. Wires, wires, everywhere, as far as your eyes … can't see.