Jeff Atwood's Blog, page 3

November 2, 2016

Your Digital Pinball Machine

I've had something of an obsession with digital pinball for years now. That recently culminated in me buying a Virtuapin Mini.

OK, yes, it's an extravagance. There's no question. But in my defense, it is a minor extravagance relative to a real pinball machine.

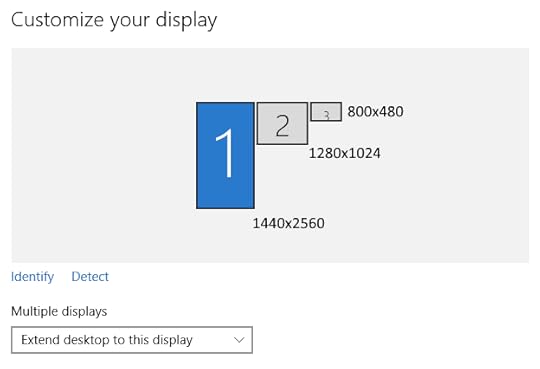

The mini is much smaller than a normal pinball machine, so it's easier to move around, takes up less space, and is less expensive. Plus you can emulate every pinball machine, ever! The Virtuapin Mini is a custom $3k build centered around three screens:

27" main playfield (HDMI)

23" backglass (DVI)

8" digital matrix (USB LCD)

Most of the magic is in those screens, and in whether the pinball sim in question lets you arrange all three of them in its advanced settings, usually by enabling a "cabinet" mode.

Let me give you an internal tour. Open the front coin door and detach the two internal nuts for the front bolts, which are finger tight. Then remove the metal lockdown bar and slide the tempered glass out.

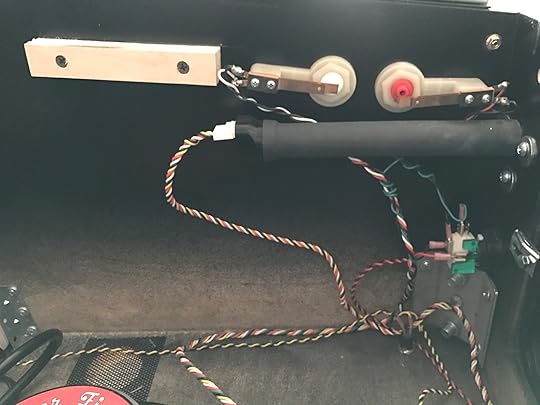

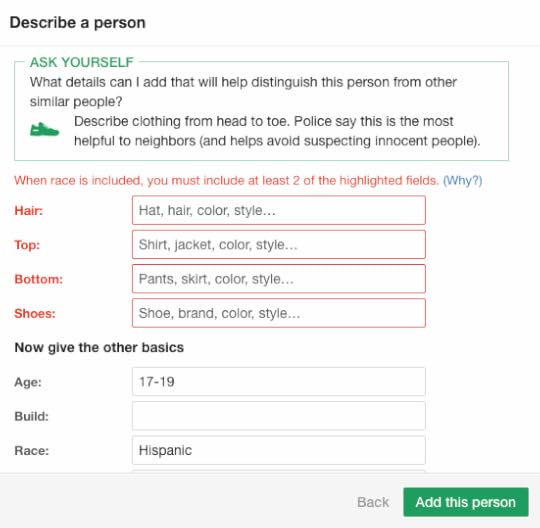

The most uniquely pinball item in the case is right at the front. This Digital Plunger Kit connects the 8 buttons (2 on each side, 3 on the front, 1 on the bottom) and includes an analog tilt sensor and analog plunger sensor. All of which shows up as a standard game controller in Windows.

On the left front side, the audio amplifier and left buttons.

On the right front side, the digital plunger and right buttons.

The 27" playfield monitor is mounted using a clever rod assembly to the standard VESA mount on the back, so we can easily rotate it up to work on the inside as needed.

To remove the playfield, disconnect the power cord and the HDMI connector. Then lift it up and out, and you now have complete access to the interior.

Notice the large down-firing subwoofer mounted in the middle of the body, as well as the ventilation holes. The PC "case" is just a back panel, and the power strip is the Smart Strip kind where it auto-powers everything based on the PC being powered on or off. The actual power switch is on the bottom front right of the case.

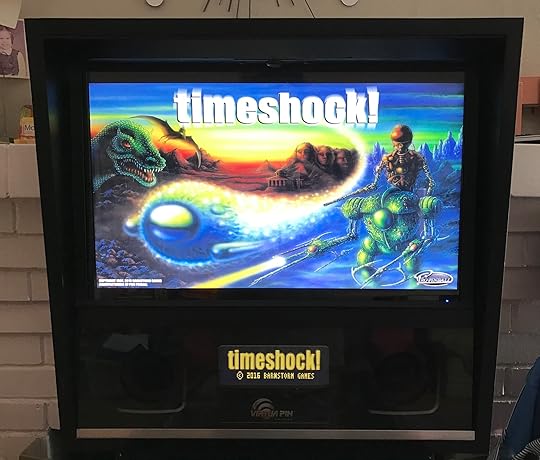

Powering it up and getting all three screens configured in the pinball sim of your choice results in … magic.

It is a thoroughly professional build, as you'd expect from a company that has been building these pinball rigs for the last decade. It uses real wood (not MDF), tempered glass, and authentic metal pinball parts throughout.

I was truly impressed by the build quality of this machine. Paul of Virtuapin said they're on roughly version four of the machine and it shows. It's over 100 pounds fully assembled and arrives on a shipping pallet. I can only imagine how heavy the full size version would be!

That said, I do have some tweaks I recommend:

Make absolutely sure you get an IPS panel as your 27" playfield monitor. As arrived, mine had a TN panel and while it was playable if you stood directly in front of the machine, playfield visibility was pretty dire outside that narrow range. I dropped in the BenQ GW2765HT to replace the GL2760H that was in there, and I was golden. If you plan to order, I would definitely talk to Paul at VirtuaPin and specify that you want this IPS display even if it costs a little more. The 23" backglass monitor appears to be IPS already so no need to change there.

The improved display has a 1440p resolution compared to the 1080p originally shipped, so you might want to upgrade from the GeForce 750 Ti video card to the just-released 1050 Ti. This is not strictly required, as I found the 750 Ti an excellent performer even at the higher resolution, but I plan to play only fully 3D pinball sims and the 1050 Ti gets excellent reviews for $140, so I went for it.

Internally everything is exceptionally well laid out; the only very minor improvement I'd recommend is connecting the rear exhaust fan to the motherboard header so its fan speed can be dynamically controlled by the computer rather than being at full power all the time.

On the Virtuapin website order form the PC they provide sounds quite outdated, but don't sweat it: I picked the lowest options thinking I would have to replace it all, and they shipped me a Haswell based quad-core PC with 8GB RAM and a 256GB SSD, even though those options weren't even on the order form.

I realize $3k (plus palletized shipping) is a lot of money, but I estimate it would cost you at least $1500 in parts to build this machine, plus a month of personal labor. Provided you get the IPS playfield monitor, this is a solidly constructed "real" pinball machine, and if you're into digital pinball like I am, it's an absolute joy to play and a good deal for what you actually get. As Ferris Bueller once said:

If you'd like to experiment with this and don't have three grand burning a hole in your pocket, 90% of digital pinball simulation is a widescreen display in portrait mode. Rotate one of your monitors, add another monitor if you're feeling extra fancy, and give it a go.

As for software, most people talk about Visual Pinball for these machines, and it works. But the combination of janky hacked-together 2D bitmap technology used in the gameplay, and the fact that all those designs are ripoffs that pay nothing in licensing back to the original pinball manufacturers really bothers me.

I prefer Pinball Arcade in DirectX 11 mode, which is downright beautiful, easily (and legally!) obtainable via Steam and offers a stable of 60+ incredible officially licensed classic pinball tables to choose from, all meticulously recreated in high resolution 3D with excellent physics.

As for getting pinball simulations running on your three monitor setup, if you're lucky the game will have a cabinet mode you can turn on. Unfortunately, this can be weird due to … licensing issues. Apparently building a pinball sim on the computer requires entirely different licensing than placing it inside a full-blown pinball cabinet.

Pinball Arcade has a nifty camera hack someone built that lets you position three cameras as needed to get the three displays. You will also need the excellent x360ce program to dynamically map joystick events and buttons to a simulated Xbox 360 controller.

Pinball FX2 added a cabinet mode about a year ago, but turning it on requires a special code and you have to send them a picture of your cabinet (!) to get that code. I did, and the cabinet mode works great; just enter your code, specify the coordinates of each screen in the settings and you are good to go. While these tables definitely have arcadey physics, I find them great fun and there are a ton to choose from.
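If you're wondering what "the coordinates of each screen" look like in practice, here is a minimal sketch of the arithmetic, assuming the three displays sit side by side on one virtual desktop with their top edges aligned. The resolutions and the `Screen` and `layout_left_to_right` names are my own placeholders, not anything Pinball FX2 defines; your real numbers depend on how your operating system has the monitors arranged and rotated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Screen:
    name: str
    width: int   # pixels
    height: int  # pixels


def layout_left_to_right(screens):
    """Return {name: (x, y, width, height)} for screens placed side by side.

    Assumes every screen's top edge sits at y = 0 on the virtual desktop.
    """
    coords = {}
    x = 0
    for s in screens:
        coords[s.name] = (x, 0, s.width, s.height)
        x += s.width  # the next screen starts where this one ends
    return coords


# Assumed resolutions for illustration only; check your own display settings.
screens = [
    Screen("playfield", 2560, 1440),
    Screen("backglass", 1920, 1080),
    Screen("dmd", 800, 480),
]

layout = layout_left_to_right(screens)
for name, rect in layout.items():
    print(name, rect)
```

The point is only that each screen's origin is the running sum of the widths to its left, which is the kind of number a cabinet mode settings page tends to ask for.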

Pro Pinball Timeshock Ultra is unique because it's originally from 1997 and was one of the first "simulation" level pinball games. The current rebooted version is still pre-rendered graphics rather than 3D, but the client downloads the necessary gigabytes of pre-rendered content at your exact screen resolution and it looks amazing.

Timeshock has explicit cabinet support in the settings and via command line tweaks. Position each window as necessary, then enable fullscreen for each one and it'll snap to the monitor you placed it on. It's "only" one table, but arguably the most classic of all pinball sims. I sincerely hope they continue to reboot the rest of the Pro Pinball series, including Big Race USA which is my favorite.

I've always loved pinball machines, even though they struggled to keep up with digital arcade games. In some ways I view my current project, Discourse, as a similarly analog experience attempting to bridge the gap to the modern digital world:

The fantastic 60 minute documentary Tilt: The Battle to Save Pinball has so many parallels with what we're trying to do for forum software.

Pinball is threatened by Video Games, in the same way that Forums are threatened by Facebook and Twitter and Tumblr and Snapchat. They're considered old and archaic technology. They've stopped being sexy and interesting relative to what else is available.

Pinball was forced to reinvent itself several times throughout the years, from mechanical, to solid state, to computerized. And the defining characteristic of each "era" of pinball is that the new tables, once you played them, made all the previous pinball games seem immediately obsolete because of all the new technology.

The Pinball 2000 project was an attempt to invent the next generation of pinball machines:

It wasn't a new feature, a new hardware set, it was everything new. We have to get everything right. We thought that we had reinvented the wheel. And in many respects, we had.

This is exactly what we want to do with Discourse – build a forum experience so advanced that playing will make all previous forum software seem immediately obsolete.

Discourse aims to save forums and make them relevant and useful to a whole new generation.

So if I seem a little more nostalgic than most about pinball, perhaps a little too nostalgic at times, maybe that's why.


August 25, 2016

Can Software Make You Less Racist?

I don't think we computer geeks appreciate how profoundly the rise of the smartphone, and Facebook, has changed the Internet audience. It's something that really only happened in the last five years, as smartphones and data plans dropped radically in price and became accessible – and addictive – to huge segments of the population.

People may have regularly used computers in 2007, sure, but that is a very different thing than having your computer in your pocket, 24/7, with you every step of every day, integrated into your life. As Jerry Seinfeld noted in 2014:

But I know you got your phone. Everybody here's got their phone. There's not one person here who doesn't have it. You better have it … you gotta have it. Because there is no safety, there is no comfort, there is no security for you in this life any more … unless when you're walking down the street you can feel a hard rectangle in your pants.

It's an addiction that is new to millions – but eerily familiar to us.

From "only nerds will use the Internet" to "everyone stares at their smartphones all day long!" in 20 years. Not bad, team :-).

— Marc Andreessen (@pmarca) January 16, 2015

The good news is that, at this moment, every human being is far more connected to their fellow humans than any human has ever been in the entirety of recorded history.

Spoiler alert: that's also the bad news.

Nextdoor is a Facebook-alike focused on specific neighborhoods. The idea is that you and everyone else on your block would join, and you can privately discuss local events, block parties, and generally hang out like neighbors do. It's a good idea, and my wife started using it a fair amount in the last few years. We feel more connected to our neighbors. But one unfortunate thing you'll find out when using Nextdoor is that your neighbors are probably a little bit racist.

I don't use Nextdoor myself, but I remember Betsy specifically complaining about the casual racism she saw there, and I've also seen it mentioned several times on Twitter by people I follow. They're not the only ones. It became so epidemic that Nextdoor got a reputation for being a racial profiling hub. Which is … not good.

Social networking historically trends young, with the early adopters. Facebook launched as a site for college students. But as those networks grow, they inevitably age. They begin to include older people. And those older people will, statistically speaking, be more racist. I apologize if this sounds ageist, but let me ask you something: do you consider your parents a little racist? I will personally admit that one of my parents is definitely someone I would label a little bit racist. It's … not awesome.

The older the person, the more likely they are to have these "old fashioned" notions that the presence of black people on your block is inherently suspicious, and marriage should probably be defined as between a man and a woman.

In one meta-analysis by Jeffrey Lax and Justin Phillips of Columbia University, a majority of 18–29 year old Americans in 38 states support same sex marriage while in only 6 states do less than 45% of 18–29 year olds support same-sex marriage. At the same time not a single state shows support for same-sex marriage greater than 35% amongst those 64 and older

The idea that regressive social opinions correlate with age isn't an opinion; it's a statistical fact.

Support for same-sex marriage in the U.S.

18 - 29 years old 65%

30 - 49 years old 54%

50 - 64 years old 45%

65+ years old 39%

Are there progressive septuagenarians? Sure there are. But not many.

To me, failure to support same-sex marriage is as inconceivable as failing to support interracial marriage. Which was not that long ago, to the tune of the late 60s and early 70s. If you want some truly hair-raising reading, try Loving v. Virginia on for size. Because Virginia is for lovers. Just not those kind of lovers, 49 years ago. In the interests of full disclosure, I am 45 years old, and I graduated from the University of Virginia.

You're more connected with your neighbors than ever before. But through that connection you may also find out some regressive things about your neighbors that you'd never have discovered in years of the traditional daily routine of polite waves, hellos from the driveway, and casual sidewalk conversations.

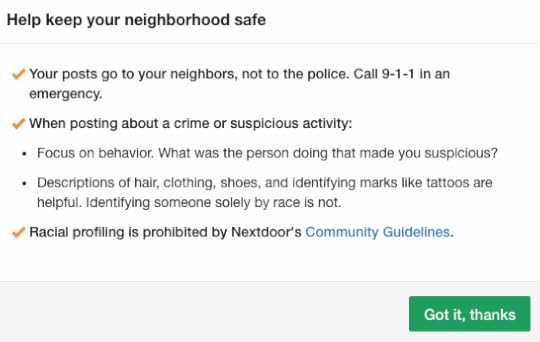

To their immense credit, rather than accepting this status quo, Nextdoor did what any self-respecting computer geek would do: they changed their software. Now, when you attempt to post about a crime or suspicious behavior …

… you get smart, just in time nudges to think less about race, and more about behavior.

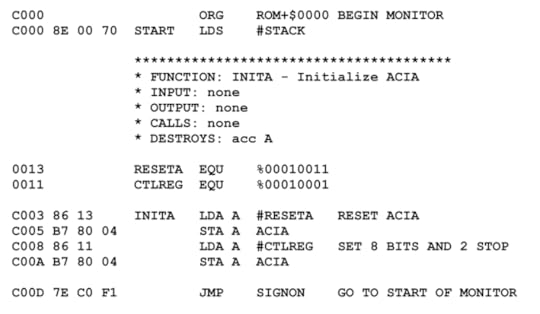

The results were striking:

Nextdoor claims this new multi-step system has, so far, reduced instances of racial profiling by 75%. It’s also decreased considerably the number of notes about crime and safety. During testing, the number of crime and safety issue reports abandoned before being published rose by 50%. “It’s a fairly significant dropoff,” said Tolia, “but we believe that, for Nextdoor, quality is more important than quantity.”

I'm a huge fan of designing software to help nudge people, at exactly the right time, to be their better selves. And this is a textbook example of doing it right.

Would using Nextdoor and encountering these dialogs make my aforementioned parent a little bit less racist? Probably not. But I like to think they would make that parent stop for at least a moment and consider the importance of focusing on the behavior that is problematic, rather than the individual person. This is a philosophy I promoted on Stack Overflow, I continue to promote with Discourse, and I reinforce daily with our three kids. You never, ever judge someone by what they look like. Look at what they do.

If you were getting excited about the prospect of validating Betteridge's Law yet again, I'm sorry to disappoint you. I truly do believe software, properly designed software, can not only help people be more civil to each other, but can also help people – maybe even people you love – behave a bit less like racists online.


July 24, 2016

The Raspberry Pi Has Revolutionized Emulation

Every geek goes through a phase where they discover emulation. It's practically a rite of passage.

I think I spent most of my childhood – and a large part of my life as a young adult – desperately wishing I was in a video game arcade. When I finally obtained my driver's license, my first thought wasn't about the girls I would take on dates, or the road trips I'd take with my friends. Sadly, no. I was thrilled that I could drive myself to the arcade any time I wanted.

My two arcade emulator builds in 2005 satisfied my itch thoroughly. I recently took my son Henry to the California Extreme expo, which features almost every significant pinball and arcade game ever made, live and in person and real. He enjoyed it so much that I found myself again yearning to share that part of our history with my kids – in a suitably emulated, arcade form factor.

Down, down the rabbit hole I went again:

I discovered that emulation builds are so much cheaper and easier now than they were when I last attempted this a decade ago. Here's why:

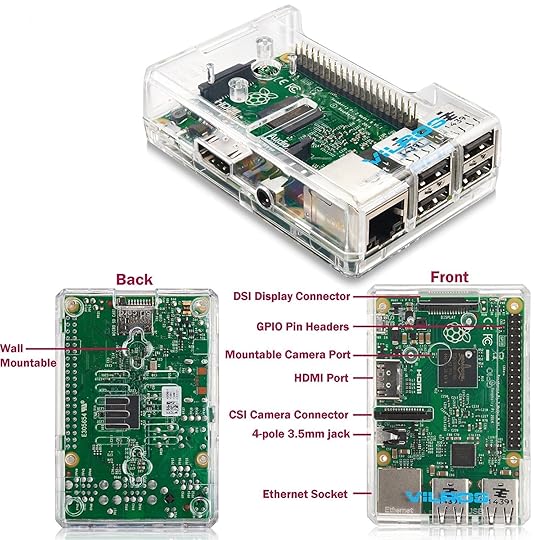

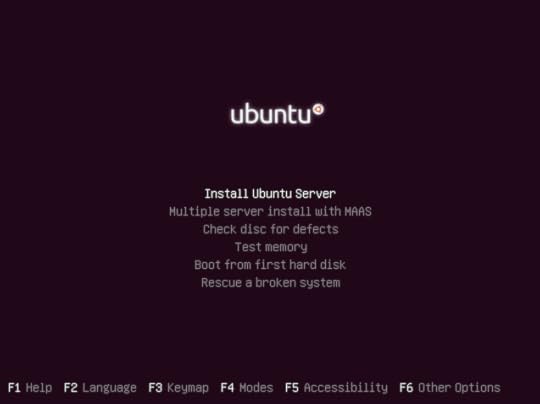

The ascendance of Raspberry Pi has single-handedly revolutionized the emulation scene. The Pi is now on version 3, which adds critical WiFi and Bluetooth functionality on top of additional speed. It's fast enough to emulate N64 and PSX and Dreamcast reasonably, all for a whopping $35. Just download the RetroPie bootable OS on a $10 32GB SD card, slot it into your Pi, and … well, basically you're done. The distribution comes with some free games on it. Add additional ROMs and game images to taste.

Chinese all-in-one JAMMA cards are available everywhere for about $90. Pandora's Box is one "brand". These things are an entire 60-in-1 to 600-in-1 arcade on a board, with an ARM CPU and built-in ROMs and everything … probably completely illegal and unlicensed, of course. You could buy some old broken down husk of an arcade game cabinet, anything at all as long as it's a JAMMA compatible arcade game – a standard introduced in 1985 – with working monitor and controls. Plug this replacement JAMMA box in, and bam: you now have your own virtual arcade. Or you could build or buy a new JAMMA compatible cabinet; there are hundreds out there to choose from.

Cheap, quality arcade size IPS LCDs of 18-23". The CRTs I used in 2005 may have been truer to old arcade games, but they were a giant pain to work with. They're enormous, heavy, and require a lot of power. Viewing angle and speed of refresh are rather critical for arcade machines, and both are largely solved problems for LCDs at this point, which are light, easy to work with, and sip power for $100 or less.

Add all that up – it's not like the price of MDF or arcade buttons and joysticks has changed substantially in the last decade – and what we have today is a console and arcade emulation wonderland! If you'd like to go down this rabbit hole with me, bear in mind that I've just started, but I do have some specific recommendations.

Get a Raspberry Pi starter kit. I recommend this particular starter kit, which includes the essentials: a clear case, heatsinks – you definitely want small heatsinks on your 3, as it dissipates almost 4 watts under full load – and a suitable power adapter. That's $50.

Get a quality SD card. The primary "drive" on your Pi will be the SD card, so make it a quality one. Based on these excellent benchmarks, I recommend the Sandisk Extreme 32GB or Samsung Evo+ 32GB models for best price to performance ratio. That'll be $15, tops.

Download and install the bootable RetroPie image on your SD card. It's amazing how far this project has come since 2013; it is now about as close to plug and play as it gets for free, open source software. The install is, dare I say … "easy"?

Decide how much you want to build. At this point you have a fully functioning emulation brain for well under $100 which is capable of playing literally every significant console and arcade game created prior to 1997. Your 1985 self is probably drunk with power. It is kinda awesome. Stop doing the Safety Dance for a moment and ask yourself these questions:

What controls do you plan to plug in via the USB ports? This will depend heavily on which games you want to play. Beyond the absolute basics of joystick and two buttons, there are Nintendo 64 games (think analog stick(s) required), driving games, spinner and trackball games, multiplayer games, yoke control games (think Star Wars), virtual gun games, and so on.

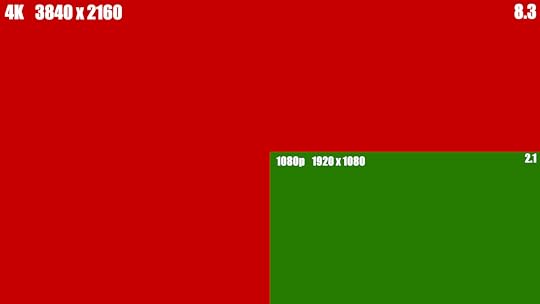

What display do you plan to plug in via the HDMI port? You could go with a tiny screen and build a handheld emulator; the Pi is certainly small enough. Or you could have no display at all, and jack in via HDMI to any nearby display for whatever gaming jamboree might befall you and your friends. I will say that, for whatever size you build, more display is better. Absolutely go as big as you can in the allowed form factor, though the Pi won't effectively use much more than a 1080p display maximum.

How much space do you want to dedicate to the box? Will it be portable? You could go anywhere from ultra-minimalist – a control box you can plug into any HDMI screen with a wireless controller – to a giant 40" widescreen stand up arcade machine with room for four players.

What's your budget? We've only spent under $100 at this point, and great screens and new controllers aren't a whole lot more, but sometimes you want to build from spare parts you have lying around, if you can.

Do you have the time and inclination to build this from parts? Or do you prefer to buy it pre-built?

These are all your calls to make. You can get some ideas from the pictures I posted at the top of this blog post, or search the web for "Raspberry Pi Arcade" for lots of other ideas.

As a reasonable all-purpose starting point, I recommend the Build-Your-Own-Arcade kits from Retro Built Games. They run from $330 for the full kit down to $90 for just the wood case.

You could also buy the arcade controls alone for $75, and build out (or buy) a case to put them in.

My "mainstream" recommendation is a bartop arcade. It uses a common LCD panel size in the typical horizontal orientation, it's reasonably space efficient and somewhat portable while still being comfortably large enough for a nice big screen and large speakers, and it supports two players if that's what you want. That'll be about $100 to $300 depending on options.

I remember spending well over $1,500 to build my old arcade cabinets. I'm excited that it's no longer necessary to invest that much time, effort or money to successfully revisit our arcade past.

Thanks largely to the Raspberry Pi 3 and the RetroPie project, this is now a simple Maker project you can (and should!) take on in a weekend with a friend or family. For a budget of $100 to $300 – maybe $500 if you want to get extra fancy – you can have a pretty great classic arcade and classic console emulation experience. That's way better than I was doing in 2005, even adjusting for inflation.


May 20, 2016

The Golden Age of x86 Gaming

I've been happy with my 2016 HTPC, but things have been changing fast, largely because of something I mentioned in passing back in November:

The Xbox One and PS4 are effectively plain old PCs, built on:

Intel Atom class (aka slow) AMD 8-core x86 CPU

8 GB RAM

AMD Radeon 77xx / 78xx GPUs

cheap commodity 512GB or 1TB hard drives (not SSDs)

The golden age of x86 gaming is well upon us. That's why the future of PC gaming is looking brighter every day. We can see it coming true in the solid GPU and idle power improvements in Skylake, riding the inevitable wave of x86 becoming the dominant kind of (non mobile, anyway) gaming for the foreseeable future.

And then, the bombshell. It is all but announced that Sony will be upgrading the PS4 this year, no more than three years after it was first introduced … just like you would upgrade a PC.

Sony may be tight-lipped for now, but it's looking increasingly likely that the company will release an updated version of the PlayStation 4 later this year. So far, the rumoured console has gone under the moniker PS4K or PS4.5, but a new report from gaming site GiantBomb suggests that the codename for the console is "NEO," and it even provides hardware specs for the PlayStation 4's improved CPU, GPU, and higher bandwidth memory.

CPU: 1.6 → 2.1 GHz

GPU: 18 CUs @ 800 MHz → 36 CUs @ 911 MHz

RAM: 8GB GDDR5, 176 GB/s → 218 GB/s

In PC enthusiast parlance, you might say Sony just slotted in a new video card, a faster CPU, and slightly higher speed RAM.
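To put rough numbers on that upgrade, here is a small sketch that turns the rumoured specs into ratios. Treating compute units times clock speed as a stand-in for GPU throughput is my own simplification, and a crude one, since it ignores architecture and memory effects; the variable and function names are illustrative only.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


# Figures taken from the rumoured spec list above.
cpu_clock_gain = percent_increase(1.6, 2.1)       # GHz
bandwidth_gain = percent_increase(176.0, 218.0)   # GB/s

# Crude GPU proxy (an assumption): compute units x clock in MHz.
gpu_before = 18 * 800
gpu_after = 36 * 911
gpu_ratio = gpu_after / gpu_before

print(f"CPU clock:        +{cpu_clock_gain:.0f}%")
print(f"Memory bandwidth: +{bandwidth_gain:.0f}%")
print(f"GPU (CUs x MHz):  {gpu_ratio:.2f}x")
```

By this back-of-the-envelope measure the CPU clock rises by about 31%, memory bandwidth by about 24%, and the GPU proxy by roughly 2.3x, which is why the GPU is the headline change.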

This is old hat for PCs, but to release a new, faster model that is perfectly backwards compatible is almost unprecedented in the console world. I have to wonder if this is partially due to the intense performance pressure of VR, but whatever the reason, I applaud Sony for taking this step. It's a giant leap towards consoles being more like PCs, and another sign that the golden age of x86 is really and truly here.

I hate to break this to PS4 enthusiasts, but as big of an upgrade as that is – and it really is – it's still nowhere near enough power to drive modern games at 4k. Nvidia's latest and greatest GTX 1080 can only sometimes manage 30fps at 4k. The increase in required GPU power when going from 1080p to 4k is so vast that even the PC "cost is no object" folks who will happily pay $600 for a video card and $1000 for the rest of their box have some difficulty getting there today. Stuffing all that into a $299 box for the masses is going to take quite a few more years.
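The raw pixel arithmetic shows why the jump is so steep. This sketch assumes "4k" means 3840×2160 and "1080p" means 1920×1080, and it uses pixels drawn per second as a rough proxy for GPU work, which ignores everything else a renderer does.

```python
def pixels(width: int, height: int) -> int:
    """Number of pixels in a single frame."""
    return width * height


full_hd = pixels(1920, 1080)
uhd_4k = pixels(3840, 2160)  # assumption: "4k" here means UHD

pixel_ratio = uhd_4k / full_hd

# Pixels pushed per second at two common frame rate targets.
uhd_at_30fps = uhd_4k * 30
full_hd_at_60fps = full_hd * 60
throughput_ratio = uhd_at_30fps / full_hd_at_60fps

print(f"4k has {pixel_ratio:.0f}x the pixels of 1080p per frame")
print(f"4k at 30fps pushes {throughput_ratio:.0f}x the pixels of 1080p at 60fps")
```

So even a modest 30fps at 4k means drawing twice as many pixels every second as a smooth 60fps at 1080p.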

Still, I like the idea of the PS4 Neo so much that I'm considering buying it myself. I strongly support this sea change in console upgradeability, even though I swore I'd stick with the Xbox One this generation. To be honest, my Xbox One has been a disappointment to me. I bought the "Elite" edition because it had a hybrid 1TB drive, and then added a 512GB USB 3.0 SSD to the thing and painstakingly moved all my games over to that, and it is still appallingly slow to boot, to log in, to page through the UI, to load games. It's also noisy under load and sounds like a broken down air conditioner even when in low power, background mode. The Xbox One experience is way too often drudgery and random errors instead of the gaming fun it's supposed to be. Although I do unabashedly love the new controller, I feel like the Xbox One is, overall, a worse gaming experience than the Xbox 360 was. And that's sad.

Or maybe I'm just spoiled by PC performance, and the relatively crippled flavor of PC you get in these $399 console boxes. If all evidence points to the golden age of x86 being upon us, why not double down on x86 in the living room? Heck, while I'm at it … why not triple down?

This, my friends, is what tripling down on x86 in the living room looks like.

It's Intel's latest Skull Canyon NUC. What does that acronym stand for? Too embarrassing to explain. Let's just pretend it means "tiny awesome x86 PC". What's significant about this box is it contains the first on-die GPU Intel has ever shipped that can legitimately be considered console class.

It's not cheap at $699, but this tiny box bristles with cutting edge x86 tech:

Quad-core i7-6770HQ CPU (2.6 GHz / 3.5 GHz)

Iris Pro Graphics 580 GPU with 128MB eDRAM

Up to 32GB DDR4-2666 RAM

Dual M.2 PCIe x4 SSD slots

802.11ac WiFi / Bluetooth / Gigabit Ethernet

Thunderbolt 3 / USB 3.1 gen 2 Type-C port

Four USB 3.0 ports

HDMI 2.0, mini-DP 1.2 video out

SDXC (UHS-I) card reader

Infrared sensor

3.5mm combo digital / optical out port

3.5mm headphone jack

All impressive, but the most remarkable items are the GPU and the Thunderbolt 3 port. Putting together a HTPC that can kick an Xbox One's butt as a gaming box is now as simple as adding these three items together:

Intel NUC kit NUC6i7KYK $699

16GB DDR4-2400 $64

Samsung 950 Pro NVMe M.2 (512GB) $317

Ok, fine, it's a cool $1,080 plus tax compared to $399 for one of those console x86 boxes. But did I mention it has skulls on it? Skulls!

The CPU and disk performance on offer here are hilariously far beyond what's available on current consoles:

Disk performance of the two internal PCIe 3.0 4x M.2 slots, assuming you choose a proper NVMe drive as you should, is measured in not megabytes per second but gigabytes per second. Meanwhile consoles lumber on with, at best, hybrid drives.

The Jaguar class AMD x86 cores in the Xbox One and PS4 are about the same as the AMD A4-5000 reviewed here; those benchmarks indicate a modern Core i7 will be about four times faster.

But most importantly, its GPU performance is on par with current consoles. NUC blog measured 41fps average in Battlefield 4 at 1080p and medium settings. Digging through old benchmarks I find plenty of pages where a Radeon 78xx or 77xx series video card, the closest analog to what's in the Xbox One and PS4, achieves a similar result in Battlefield 4:

I personally benchmarked GRID 2 at 720p (high detail) on all three of the last HTPC models I owned:

| HTPC | Max (fps) | Min (fps) | Avg (fps) |
|---|---|---|---|
| i3-4130T, HD 4400 | 32 | 21 | 27 |
| i3-6100T, HD 530 | 50 | 32 | 39 |
| i7-6770HQ, Iris Pro 580 | 96 | 59 | 78 |

When I up the resolution to 1080p, I get 59fps average, 38 min, 71 max. Checking with Notebookcheck's exhaustive benchmark database, that is closest to the AMD R7 250, a rebranded Radeon 7770.
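For a sense of scale, here is a quick sketch comparing those GRID 2 averages. It reads the three figures per machine above as max, min, and average frames per second, and assumes 720p and 1080p mean 1280×720 and 1920×1080; the variable names are just for illustration.

```python
# Average fps from the 720p (high detail) GRID 2 runs listed above.
avg_fps_720p = {
    "i3-4130T, HD 4400": 27,
    "i3-6100T, HD 530": 39,
    "i7-6770HQ, Iris Pro 580": 78,
}

baseline = avg_fps_720p["i3-4130T, HD 4400"]
speedup = {name: fps / baseline for name, fps in avg_fps_720p.items()}

for name, factor in speedup.items():
    print(f"{name}: {factor:.2f}x the HD 4400 average")

# Going from 720p to 1080p on the Iris Pro 580 (59fps average at 1080p).
pixel_growth = (1920 * 1080) / (1280 * 720)
fps_retained = 59 / avg_fps_720p["i7-6770HQ, Iris Pro 580"]

print(f"1080p draws {pixel_growth:.2f}x the pixels of 720p")
print(f"while keeping {fps_retained:.0%} of the 720p average frame rate")
```

The Iris Pro 580 averages close to three times the HD 4400 and exactly twice the HD 530, and it holds on to about three quarters of its frame rate while drawing 2.25x the pixels.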

What we have here is legitimately the first on-die GPU that can compete with a low-end discrete video card from AMD or Nvidia. Granted, an older one, one you could buy for about $80 today, but one that is certainly equivalent to what's in the Xbox One and PS4 right now. This is a real first for Intel, and it probably won't be the last time, considering that on-die GPU performance increases have massively outpaced CPU performance increases for the last 5 years.

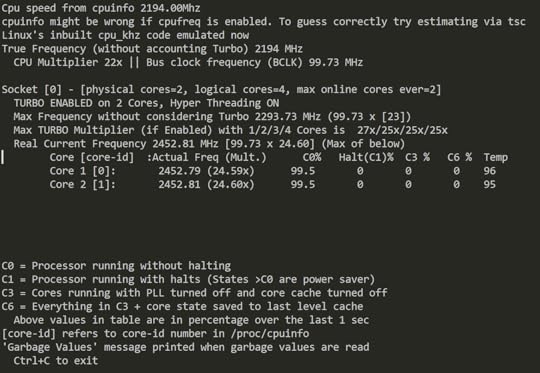

As for power usage, I was pleasantly surprised to measure that this box idles at 15w at the Windows Desktop doing nothing, and drops to 13w when the display sleeps. Considering the best idle numbers I've measured are from the Scooter Computer at 7w and my previous HTPC build at 10w, that's not bad at all! Under full game load, it's more like 70 to 80 watts, and in typical light use, 20 to 30 watts. It's the idle number that matters the most, as that represents the typical state of the box. And compared to the 75 watts a console uses even when idling at the dashboard, it's no contest.
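Here is what that idle gap adds up to over a year, as a rough sketch. It assumes both boxes sit idle around the clock, which overstates real use, and the electricity price is a made-up placeholder you should swap for your own rate.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used in kilowatt-hours for a constant draw over the given hours."""
    return watts * hours / 1000.0


nuc_idle_kwh = annual_kwh(15)      # measured idle draw of the NUC
console_idle_kwh = annual_kwh(75)  # console idling at the dashboard
saved_kwh = console_idle_kwh - nuc_idle_kwh

PRICE_PER_KWH = 0.15  # hypothetical rate in dollars, not from any real bill

print(f"NUC idle:     {nuc_idle_kwh:.1f} kWh/year")
print(f"Console idle: {console_idle_kwh:.1f} kWh/year")
print(f"Difference:   {saved_kwh:.1f} kWh/year, "
      f"about ${saved_kwh * PRICE_PER_KWH:.0f} at the assumed rate")
```

Under those assumptions the console burns five times the energy of the NUC while doing nothing, a gap of a bit over 500 kWh a year.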

Of course, 4k video playback is no problem, though 10-bit 4K video may be a stretch. If that's not enough — if you dream bigger than medium detail 1080p gameplay — the presence of a Thunderbolt 3 port on this little box means you can, at considerable expense, use any external GPU of your choice.

That's the Razer Core external graphics dock, and it's $499 all by itself, but it opens up an entire world of upgrading your GPU to whatever the heck you want, as long as your x86 computer has a Thunderbolt 3 port. And it really works! In fact, here's a video of it working live with this exact configuration:

Zero games are meaningfully CPU limited today; the disk and CPU performance of this Skull Canyon NUC is already so vastly far ahead of current x86 consoles, even the PS4 Neo that's about to be introduced. So being able to replace the one piece that needs to be the most replaceable is huge. Down the road you can add the latest, greatest GPU model whenever you want, just by plugging it in!

The only downside of using such a small box as my HTPC is that my two 2.5" 2TB media drives become external USB 3.0 enclosures, and I am limited by the 4 USB ports. So it's a little … cable-y in there. But I've come to terms with that, and its tiny size is an acceptable tradeoff for all the cable and dongle overhead.

I still remember how shocked I was when Apple switched to x86 back in 2005. I was also surprised to discover just how thoroughly both the PS4 and Xbox One embraced x86 in 2013. Add in the current furor over VR, plus the PS4 Neo opening new console upgrade paths, and the future of x86 as a gaming platform is rapidly approaching supernova.

If you want to experience what console gaming will be like in 10 years, invest in a Skull Canyon NUC and an external Thunderbolt 3 graphics dock today. If we are in a golden age of x86 gaming, this configuration is its logical endpoint.

May 6, 2016

Your Own Personal WiFi Storage

Our kids have reached the age – at ages 4, 4, and 7 respectively – that taking longer trips with them is now possible without everyone losing what's left of their sanity in the process. But we still have the same problem on multiple hour trips, whether it's in a car, or on a plane – how do we bring enough stuff to keep the kids entertained without carting 5 pounds of books and equipment along, per person? And if we agree, like most parents, that the iPad is the general answer to this question, how do I get enough local media downloaded and installed on each of their iPads before the trip starts? And do I need 128GB iPads, because those are kind of expensive?

We clearly have a media sharing problem. I asked on Twitter and quite a number of people recommended the HooToo HT-TM05 TripMate Titan at $40. I took their advice, and they were right – this little device is amazing!

10400mAh External Battery

WiFi USB 3.0 media sharing device

Wired-to-WiFi converter

WiFi-to-WiFi bridge to share a single paid connection

The value of the last two points is debatable depending on your situation, but the utility of the first two is huge! Plus the large built in battery means it can act as a self-powered WiFi hotspot for 10+ hours. All this for only forty bucks!

It's a very simple device. It has exactly one button on the top:

Hold the button down for 5+ seconds to power on or off.

Tap the button to see the current battery level, represented as 1-4 white LEDs.

The blue LED will change to green if connected to another WiFi or wired network.

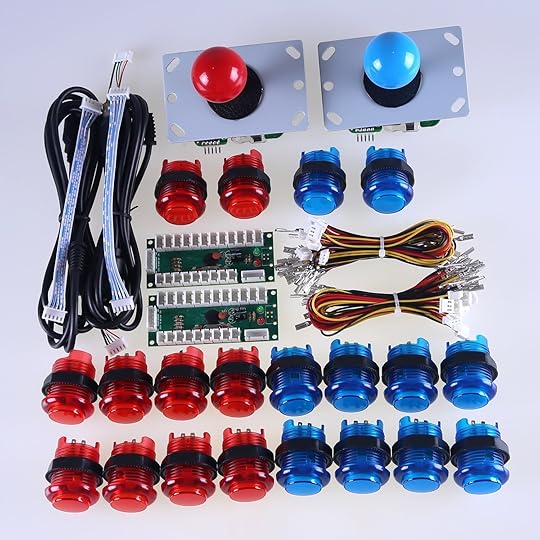

Once you get yours, just hold down the button to power it on, let it fully boot, and connect to the new TripMateSith WiFi network. As to why it's called that, I suspect it has to do with the color scheme of the device and this guy.

I am guessing licensing issues forced them to pick the 'real' name of TripMate Titan, but wirelessly, it's known as TripMateSith-XXXX. Connect to that. The default password is 11111111 (that's eight ones).

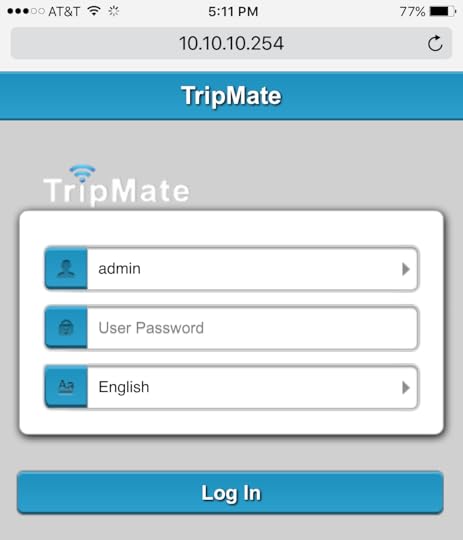

Once connected, navigate to 10.10.10.254 in your browser. Username is admin, no password.

This interface is totally smartphone compatible, for the record, but I recommend you do this from a desktop or laptop since we need to upgrade the firmware immediately. As received, the device has firmware 2.000.022 and you'll definitely want to upgrade to the latest firmware right away:

Make sure a small USB storage device is attached – it needs local scratch disk space to upgrade.

You'd think putting the firmware on a USB storage device and inserting said USB storage device into the HooToo would work, and I agree that's logical, but … you'd be wrong.

Connect from a laptop or desktop, then visit the Settings, Firmware page and upload the firmware file from there. (I couldn't figure out any way to upgrade firmware from a phone, at least not on iOS.)

Storage

For this particular use, so we can attach the storage, leave it attached forever, and kinda-sorta pretend it is all one device, I recommend a tiny $32 128GB USB 3.0 drive. It's not a barn-burner, but it's fast enough for its diminutive size.

In the past, I've recommended very fast USB 3.0 drives, but I think that time is coming to an end. If you need something larger than 128GB, you could carry a USB 3.0 enclosure with a 2.5" HDD, but the combination of travel and spinning hard drives makes me nervous. Not to mention the extra power consumption. Instead, I recommend one of the new, budget compact M.2 SSDs in a USB 3.0 enclosure:

480GB M.2 2280 SATA SSD ($130)

M.2 SATA to USB 3.0 Enclosure ($23)

I discovered this brand of Phison controller based budget M.2 SSDs when I bought the Scooter Computers and they are surprisingly great performers for the price, particularly if you stick with the newest Phison S10 controller. And they run absolute circles around large USB flash drives in performance! The larger the drive, believe me, the more you need to care about this. Say, when you need to quickly copy a bunch of reasonably new media for the kids to enjoy before you go catch that plane.

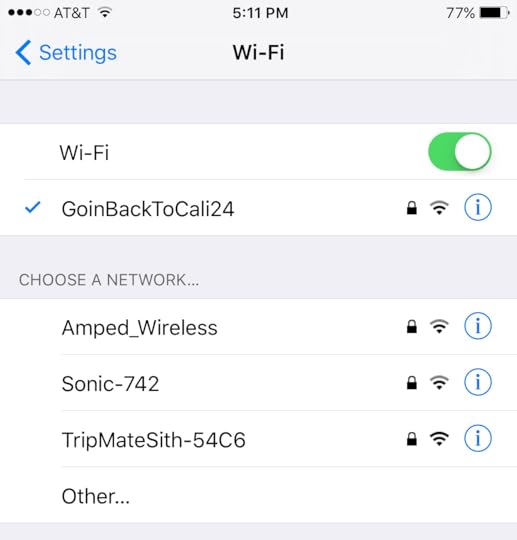

Settings and WiFi

Let's continue setting up our HooToo Tripmate Titan. In the web interface, under Settings, Network Settings, these are the essentials:

In Host Name, first set the device name to something short and friendly. You will be typing this later on every device you attach to it. I used mully and sully for mine.

In Wi-Fi and LAN:

pick a strong, long WiFi password, because there's very little security on the device beyond the WiFi gate.

set the WiFi channel to either 1, 6, or 11 so you are not crowding around other channels.

set security to WPA2-PSK only. No need to support old, insecure connection types.

There's more here, if you want to bridge wired or wirelessly, but this will get you started.
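If you're wondering why 1, 6, and 11 are the magic channel numbers, here's a small sketch of the arithmetic. It assumes the usual 2.4 GHz channel plan, where channel n is centered at 2407 + 5n MHz, and the classic 22 MHz channel width; the function names are mine, not anything the HooToo exposes.

```python
def center_mhz(channel: int) -> int:
    """Center frequency of a 2.4 GHz WiFi channel (valid for channels 1-13)."""
    if not 1 <= channel <= 13:
        raise ValueError("expected a 2.4 GHz channel between 1 and 13")
    return 2407 + 5 * channel


def overlaps(a: int, b: int, width_mhz: int = 22) -> bool:
    """True if two channels of the given width share any spectrum."""
    return abs(center_mhz(a) - center_mhz(b)) < width_mhz


print([center_mhz(c) for c in (1, 6, 11)])  # [2412, 2437, 2462]
print(overlaps(1, 6), overlaps(6, 11))      # False False
print(overlaps(1, 3))                       # True
```

Channels 1, 6, and 11 sit 25 MHz apart, so under these assumptions they never step on each other, while anything in between bleeds into two of them at once.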

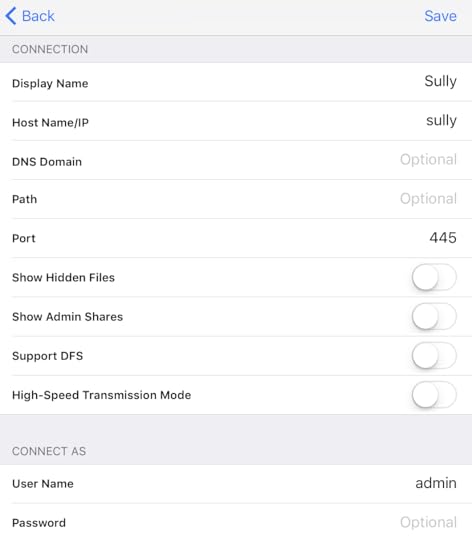

Windows

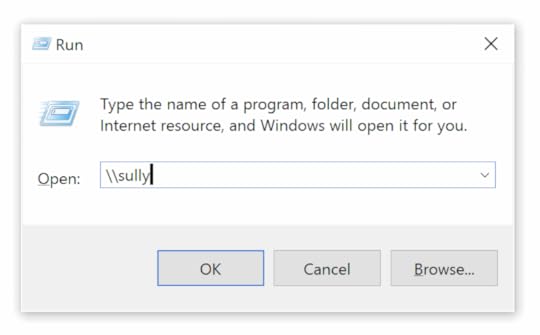

Connect to the HooToo's WiFi network, then type in the name of the device (mine's called sully) in Explorer or the File Run dialog, prefixed by \\.

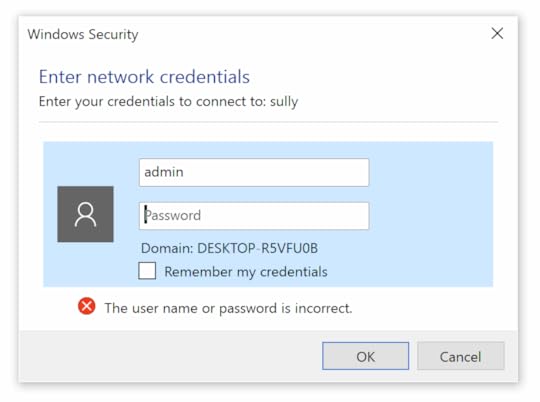

The default user accounts are admin and guest with no passwords, unless you set one up. Admin lets you write files; guest does not.

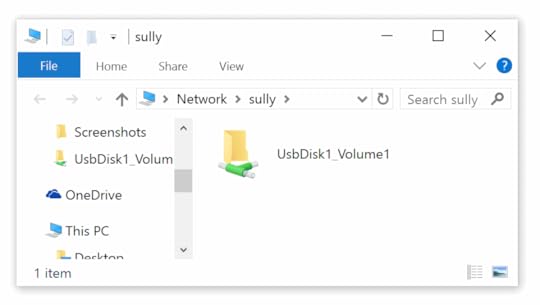

Once you connect you'll see the default file share for the USB device and can begin browsing the files at UsbDisk1_Volume1.
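If you'd rather script against the share than click through Explorer, the same \\name\share address works as an ordinary path. A minimal sketch, assuming the device is named sully as above; the Movies folder and file name are hypothetical placeholders.

```python
from pathlib import PureWindowsPath

DEFAULT_SHARE = "UsbDisk1_Volume1"  # the default share name shown above


def share_path(host: str, share: str = DEFAULT_SHARE) -> PureWindowsPath:
    """Build the UNC path (\\\\host\\share) for the device's file share."""
    return PureWindowsPath(f"\\\\{host}\\{share}")


# "Movies" and "example.mp4" are made-up names for illustration.
movie = share_path("sully") / "Movies" / "example.mp4"
print(movie)  # \\sully\UsbDisk1_Volume1\Movies\example.mp4
```

On a Windows machine connected to the HooToo's WiFi network, a path like this can be handed to the usual file functions; PureWindowsPath is used here only so the sketch runs anywhere.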

iOS

I use the File Explorer app for iOS, though I am sure there are plenty of other alternatives. It's $5, and I have it installed on all my iOS devices.

Connect to the HooToo's WiFi network, then add a new Windows type share via the menu on the left. (I'm not sure if other share types work, they might, but that one definitely does.) Enter the name of the device here and the account admin with no password. If you forget to enter account info, you'll get prompted on connect.

Once set up, this connection will be automatically saved for future use. And once you connect, you can browse the single available file share at UsbDisk1_Volume1 and play back any files.

Be careful, though, as media files you open here will use the default iOS player – you may need a third party media player if the file has complex audio streams (DTS, for example) or unusual video encoders.

Caveats

For some reason, with a USB 3.0 flash drive attached, the battery slowly drains even when powered off. So you'll want to remove any flash drive when the HooToo is powered off for extended periods. I have no idea why this happens, but I was definitely able to reproduce the behavior. Kind of annoying since my whole goal was to have "one" device, but oh well.

This isn't a fancy, glitzy Plex based system, it's a basic filesystem browser. Devices that have previously connected to this WiFi network will definitely connect to it when no other WiFi networks are available, like say, when you're in a van driving to Legoland, or on a plane flying to visit your grandparents. You will still have to train people to visit the File Explorer app, and the right device name to look for, or create a desktop link to the proper share.

But in my book, simple is good. The HooToo HT-TM05 TripMate plus a small 128GB flash drive is an easy, flexible way to wirelessly share large media files across a ton of devices for less than 75 bucks total, and it comes with a large, convenient rechargeable battery.

I think one of these will live, with its charger cable and a flash drive chock full of awesome media, permanently inside our van for the kids. Remember, no matter where you go, there your … files … are.

April 29, 2016

They Have To Be Monsters

Since I started working on Discourse, I spend a lot of time thinking about how software can encourage and nudge people to be more empathetic online. That's why it's troubling to read articles like this one:

My brother’s 32nd birthday is today. It’s an especially emotional day for his family because he’s not alive for it.

He died of a heroin overdose last February.

This year is even harder than the last. I started weeping at midnight and eventually cried myself to sleep. Today’s symptoms include explosions of sporadic sobbing and an insurmountable feeling of emptiness. My mom posted a gut-wrenching comment on my brother’s Facebook page about the unfairness of it all. Her baby should be here, not gone. “Where is the God that is making us all so sad?” she asked.

In response, someone — a stranger/(I assume) another human being — commented with one word: “Junkie.”

The interaction may seem a bit strange and out of context until you realize that this is the Facebook page of a person who was somewhat famous, who produced the excellent show Parks and Recreation. Not that this forgives the behavior in any way, of course, but it does explain why strangers would wander by and make observations.

There is deep truth in the old idea that people are able to say these things because they are looking at a screen full of words, not directly at the face of the person they're about to say a terrible thing to. That one level of abstraction the Internet allows, typing, which is so immensely powerful in so many other contexts …

“falling in love, breaking into a bank, bringing down the govt…they all look the same right now: they look like typing” @PennyRed #TtW16 #k3

— whitney erin boesel (@weboesel) April 16, 2016

… has some crippling emotional consequences.

As an exercise in empathy, try to imagine saying some of the terrible things people typed to each other online to a real person sitting directly in front of you. Or don't imagine, and just watch this video.

I challenge you to watch the entirety of that video. I couldn't do it. This is the second time I've tried, and I had to turn it off not even 2 minutes in because I couldn't take it any more.

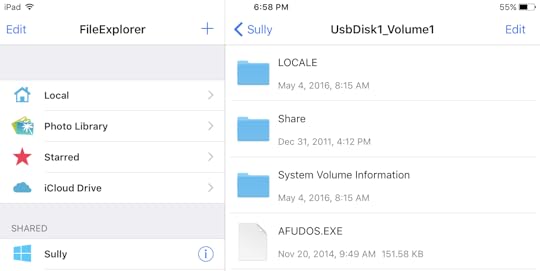

It's no coincidence that these are comments directed at women. Over the last few years I have come to understand how, as a straight white man, I have the privilege of being immune from most of this kind of treatment. But others are not so fortunate. The Guardian analyzed 70 million comments and found that online abuse is heaped disproportionately on women, people of color, and people of different sexual orientation.

And avalanches happen easily online. Anonymity disinhibits people, making some of them more likely to be abusive. Mobs can form quickly: once one abusive comment is posted, others will often pile in, competing to see who can be the most cruel. This abuse can move across platforms at great speed – from Twitter, to Facebook, to blogposts – and it can be viewed on multiple devices – the desktop at work, the mobile phone at home. To the person targeted, it can feel like the perpetrator is everywhere: at home, in the office, on the bus, in the street.

I've only had a little taste of this treatment, once. The sense of being "under siege" – a constant barrage of vitriol and judgment pouring your way every day, every hour – was palpable. It was not pleasant. It absolutely affected my state of mind. Someone remarked in the comments that ultimately it did not matter, because as a white man I could walk away from the whole situation any time. And they were right. I began to appreciate what it would feel like when you can't walk away, when this harassment follows you around everywhere you go online, and you never really know when the next incident will occur, or exactly what shape it will take.

Imagine the feeling of being constantly on edge like that, every day. What happens to your state of mind when walking away isn't an option? It gave me great pause.

I admired the way Stephanie Wittels Wachs actually engaged with the person who left that awful comment. This is a man who has two children of his own, and should be no stranger to the kind of pain involved in a child's death. And yet he felt the need to post the word "Junkie" in reply to a mother's anguish over losing her child to drug addiction.

Isn’t this what empathy is? Putting myself in someone else’s shoes with the knowledge and awareness that I, too, am human and, therefore, susceptible to this tragedy or any number of tragedies along the way?

Most would simply delete the comment, block the user, and walk away. Totally defensible. But she didn't. She takes the time and effort to attempt to understand this person who is abusing her mother, to reach them, to connect, to demonstrate the very empathy this man appears incapable of.

Consider the related story of Lenny Pozner, who lost a child at Sandy Hook and became the target of groups who believe the event was a hoax. He similarly, and selflessly, devotes much of his time to refuting and countering these bizarre claims.

Tracy’s alleged harassment was hardly the first, Pozner said. There’s a whole network of people who believe the media reported a mass shooting that never happened, he said, that the tragedy was an elaborate hoax designed to increase support for gun control. Pozner said he gets ugly comments often on social media, such as, “Eventually you’ll be tried for your crimes of treason against the people,” “… I won’t be satisfied until the caksets are opened…” and “How much money did you get for faking all of this?”

It's easy to practice empathy when you limit it to people that are easy to empathize with – the downtrodden, the undeserving victims. But it is another matter entirely to empathize with those that hate, harangue, and intentionally make other people's lives miserable. If you can do this, you are a far better person than me. I struggle with it. But my hat is off to you. There's no better way to teach empathy than to practice it, in the most difficult situations.

In individual cases, reaching out and really trying to empathize with people you disagree with or dislike can work, even people who happen to be lifelong members of hate organizations, as in the remarkable story of Megan Phelps-Roper:

As a member of the Westboro Baptist Church, in Topeka, Kansas, Phelps-Roper believed that AIDS was a curse sent by God. She believed that all manner of other tragedies—war, natural disaster, mass shootings—were warnings from God to a doomed nation, and that it was her duty to spread the news of His righteous judgments. To protest the increasing acceptance of homosexuality in America, the Westboro Baptist Church picketed the funerals of gay men who died of AIDS and of soldiers killed in Iraq and Afghanistan. Members held signs with slogans like “GOD HATES FAGS” and “THANK GOD FOR DEAD SOLDIERS,” and the outrage that their efforts attracted had turned the small church, which had fewer than a hundred members, into a global symbol of hatred.

Perhaps one of the greatest failings of the Internet is the breakdown in cost of emotional labor.

First we’ll reframe the problem: the real issue is not Problem Child’s opinions – he can have whatever opinions he wants. The issue is that he’s doing zero emotional labor – he’s not thinking about his audience or his effect on people at all. (Possibly, he’s just really bad at modeling other people’s responses – the outcome is the same whether he lacks the will or lacks the skill.) But to be a good community member, he needs to consider his audience.

True empathy means reaching out and engaging in a loving way with everyone, even those that are hurtful, hateful, or spiteful. But on the Internet, can you do it every day, multiple times a day, across hundreds of people? Is this a reasonable thing to ask of someone? Is it even possible, short of sainthood?

The question remains: why would people post such hateful things in the first place? Why reply "Junkie" to a mother's anguish? Why ask the father of a murdered child to publicly prove his child's death was not a hoax? Why tweet "Thank God for AIDS!"

Unfortunately, I think I know the answer to this question, and you're not going to like it.

I don't like it. I don't want it. But I know.

I have laid some heavy stuff on you in this post, and for that, I apologize. I think the weight of what I'm trying to communicate here requires it. I have to warn you that the next article I'm about to link is far heavier than anything I have posted above, maybe the heaviest thing I've ever posted. It's about the legal quandary presented in the tragic cases of children who died because their parents accidentally left them strapped into carseats, and it won a much deserved Pulitzer. It is also one of the most harrowing things I have ever read.

Ed Hickling believes he knows why. Hickling is a clinical psychologist from Albany, N.Y., who has studied the effects of fatal auto accidents on the drivers who survive them. He says these people are often judged with disproportionate harshness by the public, even when it was clearly an accident, and even when it was indisputably not their fault.

Humans, Hickling said, have a fundamental need to create and maintain a narrative for their lives in which the universe is not implacable and heartless, that terrible things do not happen at random, and that catastrophe can be avoided if you are vigilant and responsible.

In hyperthermia cases, he believes, the parents are demonized for much the same reasons. “We are vulnerable, but we don’t want to be reminded of that. We want to believe that the world is understandable and controllable and unthreatening, that if we follow the rules, we’ll be okay. So, when this kind of thing happens to other people, we need to put them in a different category from us. We don’t want to resemble them, and the fact that we might is too terrifying to deal with. So, they have to be monsters.”

This man left the junkie comment because he is afraid. He is afraid his own children could become drug addicts. He is afraid his children, through no fault of his, through no fault of anyone at all, could die at 30. When presented with real, tangible evidence of the pain and grief a mother feels at the drug related death of her own child, and the reality that it could happen to anyone, it became so overwhelming that it was too much for him to bear.

Those "Sandy Hook Truthers" harass the father of a victim because they are afraid. They are afraid their own children could be viciously gunned down in cold blood any day of the week, bullets tearing their way through the bodies of the teachers standing in front of them, desperately trying to protect them from being murdered. They can't do anything to protect their children from this, and in fact there's nothing any of us can do to protect our children from being murdered at random, at school any day of the week, at the whim of any mentally unstable individual with access to an assault rifle. That's the harsh reality.

When faced with the abyss of pain and grief that parents feel over the loss of their children, due to utter random chance in a world they can't control, they could never control, maybe none of us can ever control, the overwhelming sense of existential dread is simply too much to bear. So they have to be monsters. They must be.

And we will fight these monsters, tooth and nail, raging in our hatred, so we can forget our pain, at least for a while.

After Lyn Balfour’s acquittal, this comment appeared on the Charlottesville News Web site:

“If she had too many things on her mind then she should have kept her legs closed and not had any kids. They should lock her in a car during a hot day and see what happens.”

I imagine the suffering that these parents are already going through, reading these words that another human being typed to them, just typed, and something breaks inside me. I can't process it. But rather than pitting ourselves against each other out of fear, recognize that the monster who posted this terrible thing is me. It's you. It's all of us.

The weight of seeing through the fear and beyond the monster to simply discover yourself is often too terrible for many people to bear. In a world of hard things, it's the hardest there is.

April 15, 2016

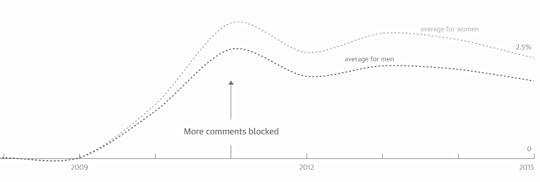

Here's The Programming Game You Never Asked For

You know what's universally regarded as un-fun by most programmers? Writing assembly language code.

As Steve McConnell said back in 2004:

Programmers working with high-level languages achieve better productivity and quality than those working with lower-level languages. Languages such as C++, Java, Smalltalk, and Visual Basic have been credited with improving productivity, reliability, simplicity, and comprehensibility by factors of 5 to 15 over low-level languages such as assembly and C (Brooks 1987, Jones 1998, Boehm 2000). You save time when you don't need to have an awards ceremony every time a C statement does what it's supposed to.

Assembly is a language where, for performance reasons, every individual command is communicated in excruciating low level detail directly to the CPU. As we've gone from fast CPUs, to faster CPUs, to multiple absurdly fast CPU cores on the same die, to "gee, we kinda stopped caring about CPU performance altogether five years ago", there hasn't been much need for the kind of hand-tuned performance you get from assembly in mainstream programming. Sure, there are the occasional heroics, and they are amazing, but in terms of Getting Stuff Done, assembly has been well off the radar of mainstream programming for probably twenty years now, and for good reason.

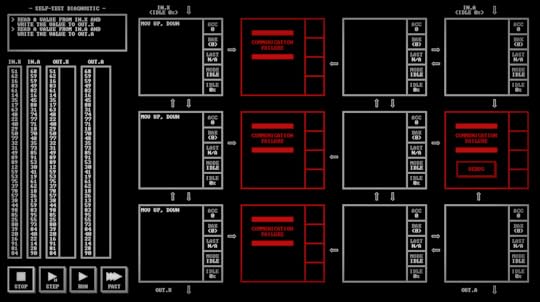

So who in their right mind would take up tedious assembly programming today? Yeah, nobody. But wait! What if I told you your Uncle Randy had just died and left behind this mysterious old computer, the TIS-100?

And what if I also told you the only way to figure out what that TIS-100 computer was used for – and what good old Uncle Randy was up to – is to fix its corrupted boot sequence … using assembly language? From reading a (blessedly short 14 page) photocopied reference manual?

Well now, by God, it's time to learn us some assembly and get to the bottom of this mystery, isn't it? As its creator notes, this is the assembly language programming game you never asked for!

I was surprised to discover my co-founder Robin Ward liked TIS-100 so much that he not only played the game (presumably to completion) but wrote a TIS-100 emulator in C. This is apparently the kind of thing he does for fun, in his free time, when he's not already working full time with us programming Discourse. Programmers gotta … program.

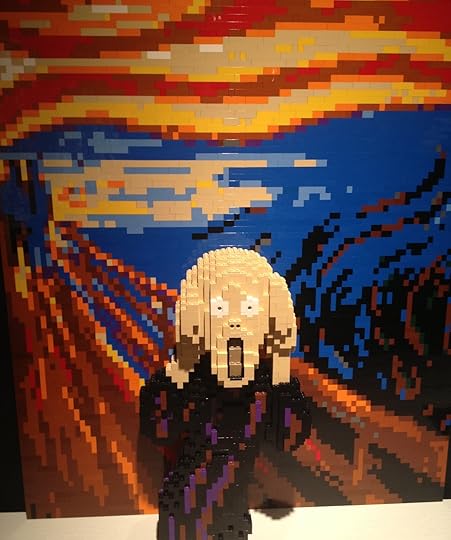

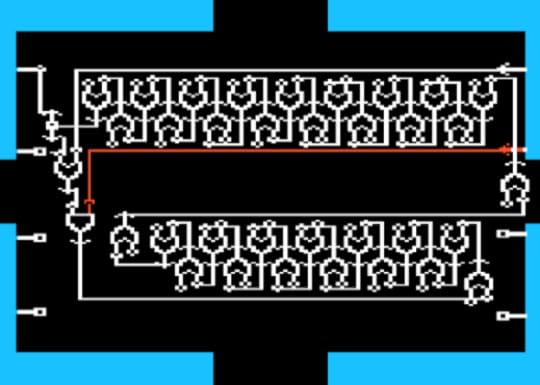

Of course there's a long history of programming games. What makes TIS-100 unique is the way it fetishizes assembly programming, while most programming games take it a bit easier on you by easing you in with general concepts and simpler abstractions. But even "simple" programming games can be quite difficult. Consider one of my favorites on the Apple II, Rocky's Boots, and its sequel, Robot Odyssey. I loved this game, but in true programming fashion it was so difficult that finishing it in any meaningful sense was basically impossible:

Let me say: Any kid who completes this game while still a kid (I know only one, who also is one of the smartest programmers I’ve ever met) is guaranteed a career as a software engineer. Hell, any adult who can complete this game should go into engineering. Robot Odyssey is the hardest damn “educational” game ever made. It is also a stunning technical achievement, and one of the most innovative games of the Apple IIe era.

Visionary, absurdly difficult games such as this gain cult followings. It is the game I remember most from my childhood. It is the game I love (and despise) the most, because it was the hardest, the most complex, the most challenging. The world it presented was like being exposed to Plato’s forms, a secret, nonphysical realm of pure ideas and logic. The challenge of the game—and it was one serious challenge—was to understand that other world. Programmer Thomas Foote had just started college when he picked up the game: “I swore to myself,” he told me, “that as God is my witness, I would finish this game before I finished college. I managed to do it, but just barely.”

I was happy dinking around with a few robots that did a few things, got stuck, and moved on to other things. To be honest, I got a little turned off by the way it treated programming as electrical engineering, and messing around with a ton of AND, OR, and NOT gates was just not my jam. I was already cutting my teeth on BASIC by that point and I sensed a level of mastery was necessary here that I didn't have and probably didn't want. I mean seriously, look at this:

I'll take a COBOL code listing over that monstrosity any day of the week. Perhaps Robot Odyssey was so hard because, in the end, it was a bare metal CPU programming simulation.

A more gentle example of a modern programming game is Tomorrow Corporation's excellent Human Resource Machine.

It has exactly the irreverent sense of humor you'd expect from the studio that built World of Goo and Little Inferno, both excellent and highly recommendable games in their own right. It starts with only 2 instructions and slowly widens to include 11. If you've ever wanted to find out if someone is interested in programming, recommend this game to them and find out. Corporate drudgery has never been so … er, fun?

I'm thinking about this because I believe there's a strong connection between these kinds of programming games and being a talented software engineer. It's that essential sense of play, the idea that you're experimenting with this stuff because you enjoy it, and you bend it to your will out of the sheer joy of creation more than anything else. As I once said:

Joel implied that good programmers love programming so much they'd do it for no pay at all. I won't go quite that far, but I will note that the best programmers I've known have all had a lifelong passion for what they do. There's no way a minor economic blip would ever convince them they should do anything else. No way. No how.

Here's where I am going with this: I'd rather sit a potential hire in front of Human Resource Machine and time how long it takes them to work through a few levels than have them solve FizzBuzz for me on a whiteboard. Is this merely about attaining competency in a certain technical skill that's worth a certain amount of money, or are you improvising and having fun?
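For anyone who hasn't run into it, FizzBuzz asks you to count upward, replacing multiples of 3 with "Fizz", multiples of 5 with "Buzz", and multiples of both with "FizzBuzz". This is just one common way to write it, not the one true answer:

```python
def fizzbuzz(n: int) -> str:
    """Return the FizzBuzz word for n, or n itself as a string."""
    if n % 15 == 0:  # divisible by both 3 and 5
        return "FizzBuzz"
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)


print(" ".join(fizzbuzz(i) for i in range(1, 16)))
# 1 2 Fizz 4 Buzz Fizz 7 8 Fizz Buzz 11 Fizz 13 14 FizzBuzz
```

It tells you whether someone can write a loop and a conditional. It tells you nothing about whether they enjoy doing it.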

That's why I was so excited when Patrick, Thomas, and Erin founded Starfighter.

If you want to know how good a programmer is, give them a real-ish simulation of a real-ish system to hack against and experiment with – and see how far they get. In security parlance, this is known as a CTF, as popularized by Defcon. But it's rarely extended to programming until now. Their first simulation is StockFighter.

Participants are given:

An interactive trading blotter interface

A real, functioning set of limit-order-book venues

A carefully documented JSON HTTP API, with an API explorer

A series of programming missions.

Participants are asked to:

Implement programmatic trading against a real exchange in a thickly traded market.

Execute block-shopping trading strategies.

Implement electronic market makers.

Pull off an elaborate HFT trading heist.
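If "limit-order-book venue" sounds intimidating, the core idea is small enough to sketch. What follows is a toy matching engine with price-time priority for a single stock, with prices as integer cents. To be clear, this is my own illustration of the concept: the class, method names, and order format are assumptions and have nothing to do with StockFighter's actual API or implementation.

```python
import heapq
from itertools import count


class OrderBook:
    """Toy limit order book with price-time priority (illustrative only)."""

    def __init__(self):
        self._bids = []      # max-heap via negated price: (-price, seq, qty)
        self._asks = []      # min-heap: (price, seq, qty)
        self._seq = count()  # arrival order breaks ties at the same price

    def submit(self, side: str, price: int, qty: int):
        """Match a limit order against the book and rest any remainder.

        Returns a list of (price, qty) fills, at the resting order's price.
        """
        if side not in ("buy", "sell"):
            raise ValueError("side must be 'buy' or 'sell'")
        buying = side == "buy"
        opposite = self._asks if buying else self._bids
        fills = []
        while qty > 0 and opposite:
            key, seq, resting_qty = opposite[0]
            resting_price = key if buying else -key
            crosses = price >= resting_price if buying else price <= resting_price
            if not crosses:
                break
            traded = min(qty, resting_qty)
            fills.append((resting_price, traded))
            qty -= traded
            if traded == resting_qty:
                heapq.heappop(opposite)
            else:
                # Partially filled: keep its place in the queue, shrink its size.
                heapq.heapreplace(opposite, (key, seq, resting_qty - traded))
        if qty > 0:
            own = self._bids if buying else self._asks
            heapq.heappush(own, (-price if buying else price, next(self._seq), qty))
        return fills


book = OrderBook()
book.submit("sell", 101, 50)
book.submit("sell", 100, 30)
print(book.submit("buy", 101, 60))  # [(100, 30), (101, 30)]
```

The buyer gets the cheapest shares first, then walks up the book until the order is filled or the limit price is hit. A real exchange adds order types, cancellation, and a great deal of paranoia, which is presumably where the missions get interesting.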

This is a seriously next level hiring strategy, far beyond anything else I've seen out there. It's so next level that to be honest, I got really jealous reading about it, because I've felt for a long time that Stack Overflow should be doing yearly programming game events exactly like this, with special one-time badges obtainable only by completing certain levels on that particular year. As I've said many times, Stack Overflow is already a sort of game, but people would go nuts for a yearly programming game event. Absolutely bonkers.

I know we've talked about giving lip service to the idea of hiring the best, but if that's really what you want to do, the best programmers I've ever known have excelled at exactly the situation that Starfighter simulates — live troubleshooting and reverse engineering an existing system, even to the point of finding rare exploits.

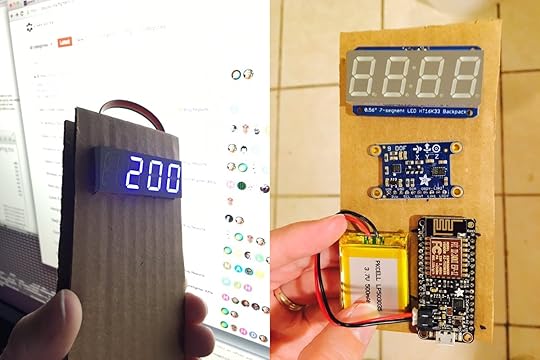

Consider the dedication of this participant who built a complete wireless trading device for StockFighter. Was it necessary? Was it practical? No. It's the programming game we never asked for.

An arbitrary programming game is neither practical nor necessary, but it is a wonderful expression of the inherent joy in playing and experimenting with code. If I could find them, I'd gladly hire a dozen people just like that any day, and set them loose on our very real programming project.

March 25, 2016

Thanks For Ruining Another Game Forever, Computers

In 2006, after visiting the Computer History Museum's exhibit on Chess, I opined:

We may have reached an inflection point. The problem space of chess is so astonishingly large that incremental increases in hardware speed and algorithms are unlikely to result in meaningful gains from here on out.

So. About that. Turns out I was kinda … totally completely wrong. The number of possible moves, or "problem space", of Chess is indeed astonishingly large, estimated to be 10^50:

100,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000

Deep Blue was interesting because it forecast a particular kind of future, a future where specialized hardware enabled brute force attack of the enormous chess problem space, as its purpose built chess hardware outperformed general purpose CPUs of the day by many orders of magnitude. How many orders of magnitude? In the heady days of 1997, Deep Blue could evaluate 200 million chess positions per second. And that was enough to defeat Kasparov, the highest ever ranked human player – until 2014 at least. Even though one of its best moves was the result of a bug.

200,000,000

In 2006, about ten years later, according to the Fritz Chess benchmark, my PC could evaluate only 4.5 million chess positions per second.

4,500,000

Today, about twenty years later, that very same benchmark says my PC can evaluate a mere 17.2 million chess positions per second.

17,200,000

Ten years, four times faster. Not bad! Part of that is I went from dual to quad core, and these chess calculations scale almost linearly with the number of cores. An eight core CPU, no longer particularly exotic, could probably achieve ~28 million on this benchmark today.

28,000,000

I am not sure the scaling is exactly linear, but it's fair to say that even now, twenty years later, a modern 8 core CPU is still about an order of magnitude slower at the brute force task of evaluating chess positions than what Deep Blue's specialized chess hardware achieved in 1997.
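Here's the arithmetic behind those comparisons, using only the positions-per-second figures quoted above. The variable names are mine, and the eight core number is the rough estimate from above, not a measurement.

```python
deep_blue_1997 = 200_000_000    # Deep Blue's specialized chess hardware
my_pc_2006 = 4_500_000          # Fritz Chess benchmark, 2006
my_pc_today = 17_200_000        # same benchmark, today
eight_core_guess = 28_000_000   # rough estimate, not a measured result


def speedup(fast: int, slow: int) -> float:
    """How many times more positions per second `fast` evaluates than `slow`."""
    return fast / slow


print(f"{speedup(my_pc_today, my_pc_2006):.1f}x")           # 3.8x
print(f"{speedup(deep_blue_1997, my_pc_today):.1f}x")       # 11.6x
print(f"{speedup(deep_blue_1997, eight_core_guess):.1f}x")  # 7.1x
```

So "four times faster" is really 3.8x, and the 1997 machine still leads an eight core desktop by roughly 7x, which is close enough to an order of magnitude for blog purposes.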

But here's the thing: none of that speedy brute forcing matters today. Greatly improved chess programs running on mere handheld devices can perform beyond grandmaster level.

In 2009 a chess engine running on slower hardware, a 528 MHz HTC Touch HD mobile phone running Pocket Fritz 4 reached the grandmaster level – it won a category 6 tournament with a performance rating of 2898. Pocket Fritz 4 searches fewer than 20,000 positions per second. This is in contrast to supercomputers such as Deep Blue that searched 200 million positions per second.

As far as chess goes, despite what I so optimistically thought in 2006, it's been game over for humans for quite a few years now. The best computer chess programs, vastly more efficient than Deep Blue, combined with modern CPUs which are now finally within an order of magnitude of what Deep Blue's specialized chess hardware could deliver, play at levels way beyond what humans can achieve.

Chess: ruined forever. Thanks, computers. You jerks.

Despite this resounding defeat, there was still hope for humans in the game of Go. The number of possible moves, or "problem space", of Go is estimated to be 10^170:

1,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000

Remember that Chess had a mere fifty zeroes there? Go has more possible moves than there are atoms in the universe.

Wrap your face around that one.

Deep Blue was a statement about the inevitability of eventually being able to brute force your way around a difficult problem with the constant wind of Moore's Law at your back. If Chess is the quintessential European game, Go is the quintessential Asian game. Go requires a completely different strategy. Go means wrestling with a problem that is essentially impossible for computers to solve in any traditional way.

A simple material evaluation for chess works well – each type of piece is given a value, and each player receives a score depending on his/her remaining pieces. The player with the higher score is deemed to be 'winning' at that stage of the game.

However, Chess programmers innocently asking Go players for an evaluation function would be met with disbelief! No such simple evaluation exists. Since there is only a single type of piece, only the number each player has on the board could be used for a simple material heuristic, and there is almost no discernible correlation between the number of stones on the board and what the end result of the game will be.
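To show how little code a material heuristic for chess needs, here is a minimal sketch. It assumes the conventional 1/3/3/5/9 piece values, which the quote above does not specify, and reads the piece-placement field of a FEN string. The function name is hypothetical.

```python
# Conventional piece values (an assumption; the quote names no specific values).
# Kings score zero because both sides always have exactly one.
PIECE_VALUES = {"p": 1, "n": 3, "b": 3, "r": 5, "q": 9, "k": 0}


def material_score(fen_placement):
    """Return white material minus black material.

    `fen_placement` is the first field of a FEN string:
    uppercase letters are white pieces, lowercase are black.
    """
    score = 0
    for ch in fen_placement:
        value = PIECE_VALUES.get(ch.lower())
        if value is None:
            continue  # digits (empty squares) and '/' (rank separators)
        score += value if ch.isupper() else -value
    return score


START = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"
NO_BLACK_QUEEN = "rnb1kbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"

print(material_score(START))           # 0: material is even
print(material_score(NO_BLACK_QUEEN))  # 9: white is a queen up
```

The Go equivalent would amount to counting stones of each color, which, as the quote points out, tells you almost nothing about who is winning.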

Analysis of a problem this hard, with brute force completely off the table, is colloquially called "AI", though that term is a bit of a stretch to me. I prefer to think of it as building systems that can learn from experience, aka machine learning. Here's a talk which covers DeepMind learning to play classic Atari 2600 videogames. (Jump to the 10 minute mark to see what I mean.)

As impressive as this is – and it truly is – bear in mind that games as simple as Pac-Man still remain far beyond the grasp of DeepMind. But what happens when you point a system like that at the game of Go?

DeepMind built a system, AlphaGo, designed to see how far they could get with those approaches in the game of Go. AlphaGo recently played one of the best Go players in the world, Lee Sedol, and defeated him in a stunning 4-1 display. Being the optimist that I am, I guessed that DeepMind would win one or two games, but a near total rout like this? Incredible. In the space of just 20 years, computers went from barely beating the best humans at Chess, with a problem space of 10^50, to definitively beating the best humans at Go, with a problem space of 10^170. How did this happen?

Well, a few things happened, but one unsung hero in this transformation is the humble video card, or GPU.

Consider this breakdown of the cost of floating point operations over time, measured in dollars per gigaflop:

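| Year | Cost per gigaflop |
|------|-------------------|
| 1961 | $8,300,000,000 |
| 1984 | $42,780,000 |
| 1997 | $42,000 |
| 2000 | $1,300 |
| 2003 | $100 |
| 2007 | $52 |
| 2011 | $1.80 |
| 2012 | $0.73 |
| 2013 | $0.22 |
| 2015 | $0.08 |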

What's not clear in this table is that after 2007, all the big advances in FLOPS came from gaming video cards designed for high speed real time 3D rendering, and as an incredibly beneficial side effect, they also turn out to be crazily fast at machine learning tasks.

The Google Brain project had just achieved amazing results — it learned to recognize cats and people by watching movies on YouTube. But it required 2,000 CPUs in servers powered and cooled in one of Google’s giant data centers. Few have computers of this scale. Enter NVIDIA and the GPU. Bryan Catanzaro in NVIDIA Research teamed with Andrew Ng’s team at Stanford to use GPUs for deep learning. As it turned out, 12 NVIDIA GPUs could deliver the deep-learning performance of 2,000 CPUs.

Let's consider a related case of highly parallel computation. How much faster is a GPU at password hashing?

| Hardware | Hash rate |
|----------|-----------|
| Radeon 7970 | 8213.6 M c/s |
| 6-core AMD CPU | 52.9 M c/s |

Only 155 times faster right out of the gate. No big deal. On top of that, CPU performance has largely stalled in the last decade. While more and more cores are placed on each die, which is great when the problems are parallelizable – as they definitely are in this case – the actual performance improvement of any individual core over the last 5 to 10 years is rather modest.

But GPUs are still doubling in performance every few years. Consider password hash cracking expressed in the rate of hashes per second:

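| Video card | Year | Hash rate |
|------------|------|-----------|
| GTX 295 | 2009 | 25k |
| GTX 690 | 2012 | 54k |
| GTX 780 Ti | 2013 | 100k |
| GTX 980 Ti | 2015 | 240k |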

The latter video card is the one in my machine right now. It's likely the next major revision from Nvidia, due later this year, will double these rates again.
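How fast is "doubling every few years" in practice? A rough sketch, using only the first and last cards listed above and assuming smooth exponential growth between them (real hardware arrives in steps, so treat the result as a ballpark figure):

```python
import math

# First and last data points from the list above (same units for both rates).
first_year, first_rate = 2009, 25   # GTX 295
last_year, last_rate = 2015, 240    # GTX 980 Ti


def doubling_time_years(year_a, rate_a, year_b, rate_b):
    """Years per doubling, assuming steady exponential growth between two points."""
    doublings = math.log2(rate_b / rate_a)
    return (year_b - year_a) / doublings


years = doubling_time_years(first_year, first_rate, last_year, last_rate)
print(f"Hash rate doubled roughly every {years:.1f} years")
```

Because only the ratio of the two rates matters, the units drop out of the calculation.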

(While I'm at it, I'd like to emphasize how much it sucks to be an 8 character password in today's world. If your password is only 8 characters, that's perilously close to no password at all. That's also why your password is (probably) too damn short. In fact, we just raised the minimum allowed password length on Discourse to 10 characters, because annoying password complexity rules are much less effective in reality than simply requiring longer passwords.)
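To see why two extra characters matter so much, here is a rough sketch. It assumes passwords drawn uniformly from the 95 printable ASCII characters, an exhaustive search, and the Radeon 7970 rate quoted earlier. Real attacks use dictionaries and real sites should use slow hashes, so these numbers are illustrative rather than a prediction.

```python
PRINTABLE_ASCII = 95            # assumption: any printable ASCII character allowed
GUESSES_PER_SECOND = 8213.6e6   # the Radeon 7970 figure quoted earlier (M c/s)
SECONDS_PER_DAY = 86_400


def keyspace(length, alphabet=PRINTABLE_ASCII):
    """Number of distinct passwords of exactly `length` characters."""
    return alphabet ** length


def days_to_exhaust(length, rate=GUESSES_PER_SECOND):
    """Worst-case days to try every password of `length` at `rate` guesses/sec."""
    return keyspace(length) / rate / SECONDS_PER_DAY


for length in (8, 10, 12):
    print(f"{length} chars: {keyspace(length):.2e} candidates, "
          f"{days_to_exhaust(length):,.0f} days worst case on one card")
```

Each added character multiplies the work by 95, so going from 8 to 10 characters makes the search 9,025 times longer.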

Distributed AlphaGo used 1202 CPUs and 176 GPUs. While that doesn't sound like much, consider that as we've seen, each GPU can be up to 150 times faster at processing these kinds of highly parallel datasets — so those 176 GPUs were the equivalent of adding ~26,400 CPUs to the task. Or more!
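The arithmetic behind that estimate, as a small sketch. The hash rates are the ones quoted earlier, and the rounded 150× multiplier is the one used above. Whether a hashing speedup carries over to AlphaGo's workloads is an assumption, not something measured here.

```python
# Hash rates quoted earlier, in millions of candidates per second.
RADEON_7970 = 8213.6
SIX_CORE_CPU = 52.9

# Distributed AlphaGo hardware, as stated above.
ALPHAGO_CPUS = 1202
ALPHAGO_GPUS = 176

measured_speedup = RADEON_7970 / SIX_CORE_CPU  # GPU vs CPU on password hashing


def cpu_equivalents(gpu_count, speedup_per_gpu):
    """How many CPUs the GPUs stand in for, if the per-GPU speedup holds."""
    return gpu_count * speedup_per_gpu


print(f"Measured hashing speedup: {measured_speedup:.0f}x")
print(f"176 GPUs at 150x each: {cpu_equivalents(ALPHAGO_GPUS, 150):,} CPU equivalents")
print(f"Plus the real CPUs: {ALPHAGO_CPUS + cpu_equivalents(ALPHAGO_GPUS, 150):,}")
```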

Even if you don't care about video games, they happen to have a profound accidental impact on machine learning improvements. Every time you see a new video card release, don't think "slightly nicer looking games"; think "wow, hash cracking and AI just got 2× faster … again!"

I'm certainly not making the same mistake I did when looking at Chess in 2006. (And in my defense, I totally did not see the era of GPUs as essential machine learning aids coming, even though I am a gamer.) If AlphaGo was intimidating today, having soundly beaten the best human Go player in the world, it'll be no contest after a few more years of GPUs doubling and redoubling their speeds again.

AlphaGo, broadly speaking, is the culmination of two very important trends in computing:

Huge increases in parallel processing power driven by consumer GPUs and videogames, which started in 2007. So if you're a gamer, congratulations! You're part of the problem-slash-solution.

We're beginning to build sophisticated (and combined) algorithmic approaches for entirely new problem spaces that are far too vast to even begin being solved by brute force methods alone. And these approaches clearly work, insofar as they mastered one of the hardest games in the world, one that many thought humans would never be defeated in.

Great. Another game ruined forever by computers. Jerks.

Based on our experience with Chess, and now Go, we know that computers will continue to beat us at virtually every game we play, in the same way that dolphins will always swim faster than we do. But what if that very same human mind was capable of not only building the dolphin, but continually refining it until they arrived at the world's fastest minnow? Where Deep Blue was the more or less inevitable end result of brute force computation, AlphaGo is the beginning of a whole new era of sophisticated problem solving against far more enormous problems. AlphaGo's victory is not a defeat of the human mind, but its greatest triumph.

(If you'd like to learn more about the powerful intersection of sophisticated machine learning algorithms and your GPU, read this excellent summary of AlphaGo and then download the DeepMind Atari learner and try it yourself.)

March 4, 2016

We Hire the Best, Just Like Everyone Else

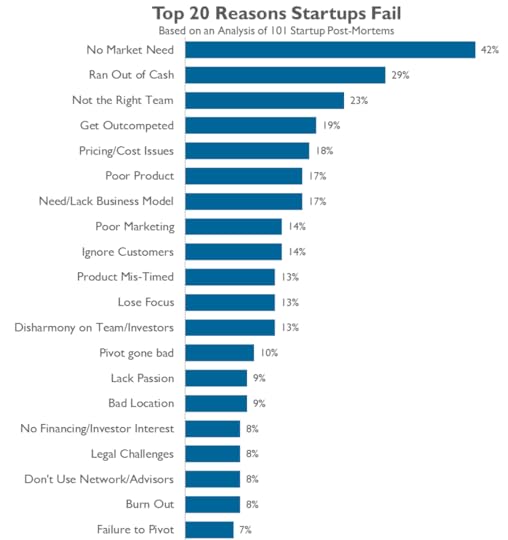

One of the most common pieces of advice you'll get as a startup is this:

Only hire the best. The quality of the people that work at your company will be one of the biggest factors in your success – or failure.

I've heard this advice over and over and over at startup events, to the point that I got a little sick of hearing it. It's not wrong. Putting aside the fact that every single other startup in the world who heard this same advice before you is already out there frantically doing everything they can to hire all the best people out from under you and everyone else, it is superficially true. A company staffed by a bunch of people who don't care about their work and aren't good at their jobs isn't exactly poised for success. But in a room full of people giving advice to startups, nobody wants to talk about the elephant in that room:

It doesn't matter how good the people are at your company when you happen to be working on the wrong problem, at the wrong time, using the wrong approach.

Most startups, statistically speaking, are going to fail.

And they will fail regardless of whether they hired "the best" due to circumstances largely beyond their control. So in that context does maximizing for the best possible hires really make sense?

Given the risks, I think maybe "hire the nuttiest risk junkie adrenaline addicted has-ideas-so-crazy-they-will-never-work people you can find" might actually be more practical startup advice. (Actually, now that I think about it, if that describes you, and you have serious Linux, Ruby, and JavaScript chops, perhaps you should email me.)

I told that person the same thing I tell all prospective job candidates: "come with me if you want to live"

— Jeff Atwood (@codinghorror) May 24, 2015

Okay, the goal is to increase your chance of success, however small it may be, therefore you should strive to hire the best. Seems reasonable, even noble in its way. But this pursuit of the best unfortunately comes with a serious dark side. Can anyone even tell me what "best" is? By what metrics? Judged by which results? How do we measure this? Who among us is suitable to judge others as the best at … what, exactly? Best is an extreme. Not pretty good, not very good, not excellent, but aiming for the crème de la crème, the top 1% in the industry.

The real trouble with using a lot of mediocre programmers instead of a couple of good ones is that no matter how long they work, they never produce something as good as what the great programmers can produce.

Pursuit of this extreme means hiring anyone less than the best becomes unacceptable, even harmful:

In the Macintosh Division, we had a saying, “A players hire A players; B players hire C players” – meaning that great people hire great people. On the other hand, mediocre people hire candidates who are not as good as they are, so they can feel superior to them. (If you start down this slippery slope, you’ll soon end up with Z players; this is called The Bozo Explosion. It is followed by The Layoff.) — Guy Kawasaki

There is an opportunity cost to keeping someone when you could do better. At a startup, that opportunity cost may be the difference between success and failure. Do you give less than full effort to make your enterprise a success? As an entrepreneur, you sweat blood to succeed. Shouldn’t you have a team that performs like you do? Every person you hire who is not a top player is like having a leak in the hull. Eventually you will sink. — Jon Soberg

Why am I so hardnosed about this? It’s because it is much, much better to reject a good candidate than to accept a bad candidate. A bad candidate will cost a lot of money and effort and waste other people’s time fixing all their bugs. Firing someone you hired by mistake can take months and be nightmarishly difficult, especially if they decide to be litigious about it. In some situations it may be completely impossible to fire anyone. Bad employees demoralize the good employees. And they might be bad programmers but really nice people or maybe they really need this job, so you can’t bear to fire them, or you can’t fire them without pissing everybody off, or whatever. It’s just a bad scene.

On the other hand, if you reject a good candidate, I mean, I guess in some existential sense an injustice has been done, but, hey, if they’re so smart, don’t worry, they’ll get lots of good job offers. Don’t be afraid that you’re going to reject too many people and you won’t be able to find anyone to hire. During the interview, it’s not your problem. Of course, it’s important to seek out good candidates. But once you’re actually interviewing someone, pretend that you’ve got 900 more people lined up outside the door. Don’t lower your standards no matter how hard it seems to find those great candidates. — Joel Spolsky

I don't mean to be critical of anyone I've quoted. I love Joel, we founded Stack Overflow together, and his advice about interviewing and hiring remains some of the best in the industry. It's hardly unique to express these sorts of opinions in the software and startup field. I could have cited two dozen different articles and treatises about hiring that say the exact same thing: aim high and set out to hire the best, or don't bother.

This risk of hiring not-the-best is so severe, so existential a crisis to the very survival of your company or startup, the hiring process has to become highly selective, even arduous. It is better to reject a good applicant every single time than accidentally accept one single mediocre applicant. If the interview process produces literally anything other than unequivocal "Oh my God, this person is unbelievably talented, we have to hire them", from every single person they interviewed with, right down the line, then it's an automatic NO HIRE. Every time.

This level of strictness always made me uncomfortable. I'm not going to lie, it starts with my own selfishness. I'm pretty sure I wouldn't get hired at big, famous companies with legendarily difficult technical interview processes because, you know, they only hire the best. I don't think I am one of the best. More like cranky, tenacious, and outspoken, to the point that I wake up most days not even wanting to work with myself.