Gennaro Cuofano's Blog

July 9, 2025

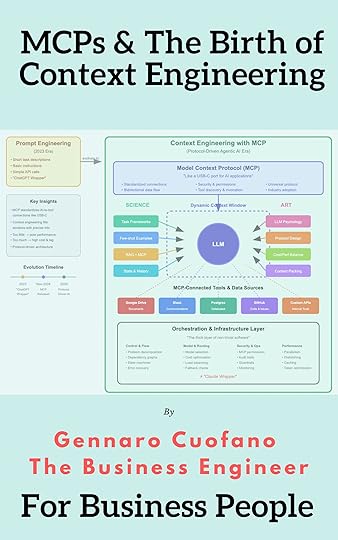

The Era of Context Engineering

We went from ‘every generative AI startup is a ChatGPT wrapper’ back in 2023, to ‘every agentic AI startup is a Claude wrapper.’

And yet, this marked a key shift, one that goes even deeper than the architectural change from prompt engineering to context engineering.

A couple of days ago, in a post on X, Andrej Karpathy highlighted something quite important.

This observation captures more than just a shift in criticism—it reveals a fundamental transformation in how AI applications are built.

The journey from simple prompt optimization to complex context engineering represents one of the most significant architectural evolutions in modern software.

The dismissive “wrapper” label persists, but the reality has evolved far beyond what critics understand.

We’ve moved from applications that simply format and relay prompts to sophisticated systems that orchestrate entire information architectures.

This isn’t about writing better questions anymore—it’s about building the cognitive infrastructure that enables AI to operate autonomously and effectively.

This analysis synthesizes Andrej Karpathy’s mental model of context engineering with Gennaro Cuofano’s observations on the evolution of AI applications, incorporating the architectural impact of the Model Context Protocol (MCP). The shift from prompt to context engineering, now accelerated by standardized protocols, represents not just a technical evolution but a fundamental transformation in how we architect intelligent systems.


The Architectural Revolution: From Prompts to Protocol-Driven Context

People associate prompts with short task descriptions you’d give an LLM in your day-to-day use.

This mental model, which involves typing a clever question into ChatGPT and receiving a response, defined the early era of generative AI applications.

Prompt engineering meant crafting better questions, adding “think step-by-step” instructions, or including a few examples. It was simple, accessible, and fundamentally limited.

In every industrial-strength LLM app, context engineering is the delicate art and science of filling the context window with just the right information for the next step. This isn’t wordsmithing—it’s information architecture at scale.

The shift from prompt to context engineering transforms AI applications from simple query-response systems into sophisticated autonomous agents capable of complex, multi-step reasoning and execution.
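Karpathy’s definition frames context engineering as a selection problem: there is usually more candidate information (instructions, memory, retrieved documents, tool results) than fits in the window, so something has to decide what goes in for the next step. The Python sketch below illustrates that idea with one simple policy, greedy packing by relevance under a token budget. It is a minimal illustration under stated assumptions, not how any particular framework or MCP client works: the `Snippet` type, the relevance scores, and `approx_tokens` (a word count standing in for a real tokenizer) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str       # hypothetical label, e.g. "memory", "retrieved_doc", "tool_result"
    text: str
    relevance: float  # hypothetical score from a retriever or heuristic


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def build_context(task: str, candidates: list[Snippet], budget: int) -> str:
    """Greedily fill a fixed token budget with the most relevant snippets."""
    header = f"TASK: {task}"
    parts = [header]
    used = approx_tokens(header)
    for snip in sorted(candidates, key=lambda s: s.relevance, reverse=True):
        line = f"[{snip.source}] {snip.text}"
        cost = approx_tokens(line)
        if used + cost > budget:
            continue  # skip anything that would overflow the window
        parts.append(line)
        used += cost
    return "\n".join(parts)


candidates = [
    Snippet("memory", "User prefers concise answers", 0.4),
    Snippet("retrieved_doc", "Q3 revenue grew 12 percent year over year", 0.9),
    Snippet("tool_result", "a b c d e f g h i j k l m n o p", 0.1),
]
print(build_context("Summarize Q3 revenue", candidates, budget=20))
```

With a 20-"token" budget, the retrieved document and the memory item fit, while the long, low-relevance tool result is dropped. Greedy packing is only the simplest reasonable policy; a production system might also reserve budget per source or summarize long items instead of discarding them.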

The post The Era of Context Engineering appeared first on FourWeekMBA.

Your Brain on AI

We’re living through a profound transformation in human cognition. As AI tools become as commonplace as calculators once were, our brains are adapting in real-time, creating measurable changes in how we think, remember, and solve problems.

While it’s easy to argue that “we’re getting more stupid because of AI,” the reality is much more nuanced, and it has to do with the intimate relationship humans have with technology (tools).

That relationship wires our brains in a specific direction, enabling us, as humanity, to make the best of these tools.

In that context, we can’t look at lost ability in a narrow domain of the cognitive spectrum and conclude that a technology is bad (an error we often make each time a new technology comes along).

Instead, we should step back and examine the entire cognitive spectrum, weighing what we lose against what we gain, including the enhanced capabilities that new technology brings to humanity.

New research from MIT reveals that this isn’t just a behavioral shift—it’s a fundamental rewiring of our neural architecture.

The post Your Brain on AI appeared first on FourWeekMBA.

March 9, 2025

Contextual Adaptability Framework

One of the most expensive mistakes in business is missing the context.

So much so that even if you’ve done all the right things, doing them in the wrong context means you’ll still fail badly.

In a way, properly assessing the context and territory also helps you understand whether the timing for specific actions is right.

And while timing can’t be controlled, at least you can avoid fatal mistakes while building up from there.

In addition to that, in the grand strategy framework, I’ve also highlighted the three core parts that make for a proper territory/context assessment:

Recap: In This Issue!

Contextual Adaptability: The Foundation of Grand Strategy

The ability of a company to fit into the current market while preparing for future growth.
Ensures resilience against disruptions and long-term sustainability.
Determined by External Forces (market landscape) and Internal Capabilities (organizational strengths).

External Forces (Understanding the Territory)

Macro Trends
Technology shifts: AI, automation, quantum computing.
Economic factors: Inflation, interest rates, global trade patterns.
Social & demographic shifts: Aging populations, urbanization, changing values.
Key Questions:
How will emerging technologies disrupt our industry?
How are economic factors impacting our market?
What social or demographic changes are affecting consumer behavior?

Industry Dynamics
Competitive forces: New entrants, substitutes, buyer/supplier power.
Market evolution: Growth rates, emerging niches, industry consolidation.
Key Questions:
Who are our biggest competitors, and how are they evolving?
Are new market segments emerging?
How is our supply chain being disrupted?

Regulatory Environment
Policy changes: New legislation, compliance requirements.
Geopolitical risks: Trade tensions, sanctions, international relations.
Standards evolution: Industry certifications, regulations.
Key Questions:
What new laws or regulations could impact us?
Are there geopolitical risks affecting our industry?
Do we need to adapt to changing industry standards?

Customer Landscape
Behavior shifts: Changing consumer preferences and purchasing patterns.
Unmet needs: Emerging problems, underserved segments.
Decision journeys: How customers discover, evaluate, and buy.
Key Questions:
How are customer preferences changing?
Are there new or underserved customer segments?
How can we influence customer decision-making?

Internal Capabilities (Assessing Your Position)

Core Assets
Proprietary technologies, patents, data analytics, brand reputation.
Key Questions:
What unique assets give us a competitive edge?
How strong are our data and market intelligence?
Is our brand recognized and trusted?

Resource Base
Financial capital: Cash reserves, funding access.
Human capital: Talent pool, leadership, expertise.
Operational infrastructure: Supply chain, facilities, equipment.
Key Questions:
Do we have sufficient financial resources?
Do we have the right talent and leadership?
Is our operational infrastructure scalable?

Organizational Capabilities
Decision-making speed, adaptability, innovation systems.
Key Questions:
How fast and effectively do we make critical decisions?
How well do we adapt to change?
Are we investing enough in R&D and innovation?

Strategic Positioning
Differentiation, market position, customer relationships.
Key Questions:
What is our unique value proposition?
Are we positioned well in the market?
Does our company’s culture align with our long-term strategy?

Contextual Adaptability Framework

Purpose: Helps organizations assess their ability to fit into the current market while preparing for future disruptions.

How It Works:
Evaluates External Forces (market conditions and risks).
Assesses Internal Capabilities (strengths and adaptability).
Identifies gaps between the company’s current position and future needs.
Develops strategies to improve adaptability in weak areas.

Implementation Guide:
Assess both external forces and internal capabilities using key questions.
Identify gaps between current positioning and future market needs.
Develop strategies to improve adaptability and resilience.
Continuously reassess as market conditions evolve.

Key Insight from Google’s Strategy

2025 is a critical inflection point where Google must gain AI market share or risk losing its leadership in search and AI.
Contextual adaptability is crucial for navigating technological disruptions and competitive threats.
Successful companies align external market changes with internal strategic capabilities.
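The framework’s central step, identifying gaps between current positioning and future needs, can be made concrete with a simple scoring exercise. The sketch below assumes each internal capability is rated on a hypothetical 1-5 scale, once for where the company is today and once for where the market will require it to be. The scale, the example scores, and `capability_gaps` are illustrative assumptions, not part of the original framework.

```python
def capability_gaps(current: dict[str, int], required: dict[str, int]) -> list[tuple[str, int]]:
    """Return (dimension, gap) pairs where the required score exceeds the current one.

    Scores are assumed to be on a hypothetical 1-5 scale; the largest gap comes first.
    Dimensions missing from `current` are treated as scoring 0.
    """
    gaps = [(dim, target - current.get(dim, 0)) for dim, target in required.items()]
    positive = [g for g in gaps if g[1] > 0]
    # sorted() is stable, so ties keep the order in which dimensions were listed.
    return sorted(positive, key=lambda g: g[1], reverse=True)


# Hypothetical self-assessment using the four internal capability areas above.
current = {"Core Assets": 4, "Resource Base": 3, "Organizational Capabilities": 2, "Strategic Positioning": 3}
required = {"Core Assets": 4, "Resource Base": 4, "Organizational Capabilities": 5, "Strategic Positioning": 4}
print(capability_gaps(current, required))
# [('Organizational Capabilities', 3), ('Resource Base', 1), ('Strategic Positioning', 1)]
```

Ranking the gaps gives a starting order for the “develop strategies to improve adaptability in weak areas” step; the numbers themselves are only as good as the judgments behind them.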

Gennaro Cuofano, The Business Engineer

This is part of an Enterprise AI series to tackle many of the day-to-day challenges you might face as a professional, executive, founder, or investor in the current AI landscape.

The post Contextual Adaptability Framework appeared first on FourWeekMBA.

Time Horizon Analysis

Balancing short-term operational needs with long-term strategic actions in a paradigm-shifting business landscape is extremely hard.

Indeed, as I’ve shown you in the previous pieces, time horizon analysis is the second critical step in a grand strategy assessment, after assessing contextual adaptability.

The Time Horizon Analysis Framework provides a structured approach to decision-making, breaking strategies down into three core timeframes:

Immediate Horizon (0-2 Years) – Tactical responses to current market conditions.
Mid-Term Horizon (3-5 Years) – Strategic repositioning for emerging trends.
Long-Term Horizon (6+ Years) – Visionary planning for disruptive and paradigm-shifting transformations.

By integrating these horizons, companies can ensure agility in the present while building resilience for the future.
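One way to put the three horizons to work is to tag each initiative with an estimate of how many years until it has a material impact, then bucket it. In this sketch, the boundaries follow the 0-2 / 3-5 / 6+ split; the framework does not say how to treat values that fall between buckets (say, 2.5 years), so assigning them to the earlier horizon is an assumption, as are the example initiatives.

```python
from enum import Enum


class Horizon(Enum):
    IMMEDIATE = "Immediate (0-2 years): tactical responses to current conditions"
    MID_TERM = "Mid-term (3-5 years): strategic repositioning for emerging trends"
    LONG_TERM = "Long-term (6+ years): visionary planning for paradigm shifts"


def classify_horizon(years_to_impact: float) -> Horizon:
    # Assumption: values between the stated buckets (e.g. 2.5 or 5.5 years)
    # are assigned to the earlier horizon.
    if years_to_impact < 3:
        return Horizon.IMMEDIATE
    if years_to_impact < 6:
        return Horizon.MID_TERM
    return Horizon.LONG_TERM


def group_by_horizon(initiatives: dict[str, float]) -> dict[Horizon, list[str]]:
    """Bucket named initiatives by their estimated years until impact."""
    groups: dict[Horizon, list[str]] = {h: [] for h in Horizon}
    for name, years in initiatives.items():
        groups[classify_horizon(years)].append(name)
    return groups


portfolio = {  # hypothetical initiatives and years-to-impact estimates
    "Match a competitor's pricing move": 0.5,
    "Launch an AI-native product line": 4,
    "Build a platform for a paradigm shift": 8,
}
for horizon, names in group_by_horizon(portfolio).items():
    print(horizon.name, names)
```

An empty bucket is itself a signal: a portfolio with nothing in the long-term horizon is all execution and no preparation for the next shift.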

The post Time Horizon Analysis appeared first on FourWeekMBA.

Grand Strategy Framework

Understanding the territory is critical in the business world as it helps you discover the “rules of the game” you’re part of.

That’s what grand strategy is for.

I’ve explained that in detail in Grand Strategy.

I can’t stress enough how critical it is to understand the underlying structure beneath your feet: the existing, developing, and shifting context. Reading it lets you position yourself in the right place, or at least avoid the lethal traps hidden in the territory, and it is the most valuable skill a business person can have.

Again, that’s grand strategy.

You can build your map (strategy) and derive your possible routes (tactics) from there.

In this issue, let’s focus on the grand strategy side.

Where do you start to define where you are in the world?

Understanding the Territory
Grand strategy helps identify structural shifts and avoid risks.
Mapping the territory involves assessing external forces, internal capabilities, and time horizons.

Mapping the Territory
Contextual Adaptability – How well an organization adjusts to change.
External Forces – Identifying opportunities and threats.
Internal Capabilities – Assessing strengths and weaknesses.
Time Horizon Analysis – Balancing short-term and long-term planning.

Assessing External Forces (Opportunities & Threats)
Macro Trends – Technology shifts, economic changes, social and demographic shifts.
Industry Dynamics – Competitive forces, market evolution, industry structure.
Regulatory Environment – Policy changes, geopolitical risks, compliance requirements.
Customer Landscape – Behavior shifts, unmet needs, decision journeys.

Key Questions for External Forces
What technologies or economic trends could disrupt our industry?
Who are our biggest competitors, and how are they evolving?
What regulatory risks or opportunities should we prepare for?
How are consumer preferences shifting in our industry?

Assessing Internal Capabilities (Strengths & Weaknesses)
Core Assets – Proprietary technology, data, brand reputation.
Resource Base – Financial capital, human capital, operational infrastructure.
Organizational Capabilities – Decision-making, adaptability, innovation.
Strategic Positioning – Differentiation, market position, strategic alignment.

Key Questions for Internal Capabilities
What proprietary technologies or assets give us a competitive edge?
Do we have the financial and operational resources to scale?
How quickly can we adapt to market changes?
Is our business aligned with long-term industry needs?

Time Horizon Analysis
Immediate Horizon (0-2 Years) – Tactical responses to competitive dynamics and market shifts.
Mid-Term Horizon (3-5 Years) – Strategic repositioning for emerging trends.
Long-Term Horizon (6-10+ Years) – Visionary planning for disruptive industry shifts.

Key Questions for Time Horizons
What requires immediate response or adaptation?
What industry transformations require strategic repositioning?
What entirely new territories might emerge that require reimagination?

Contextual Mapping: Strategic Impact vs. Uncertainty
Core Focus (High Impact, Low Uncertainty) – Invest in key trends and strategic certainties.
Key Uncertainties (High Impact, High Uncertainty) – Hedge bets on potential disruptions.
Monitor Only (Low Impact, Low Uncertainty) – Track background trends with minor influence.
Contingency Plan (Low Impact, High Uncertainty) – Scout emerging wildcards and risks.

Key Questions for Contextual Mapping
What trends require immediate investment for competitive advantage?
What critical uncertainties could significantly impact our industry?
Which background trends should we track to avoid being blindsided?
What potential wildcards should we monitor for future impact?

Multi-Horizon Integration
Balance short-term actions with long-term innovation to ensure survival and growth.
Successful companies manage both execution and future planning effectively.

Final Takeaway: Understanding the environment, adapting strategically, and balancing risks and investments ensures long-term success.
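The Contextual Mapping grid is a two-by-two classification, which can be sketched as a small function. The 0-1 scores for impact and uncertainty, the 0.5 threshold, and the example trends are hypothetical; in practice these judgments would come out of the external and internal assessments rather than fixed numbers.

```python
def contextual_quadrant(impact: float, uncertainty: float, threshold: float = 0.5) -> str:
    """Place a trend in the impact-vs-uncertainty grid from the Contextual Mapping step.

    `impact` and `uncertainty` are hypothetical scores in [0, 1];
    the 0.5 cut-off between "low" and "high" is an assumption.
    """
    high_impact = impact >= threshold
    high_uncertainty = uncertainty >= threshold
    if high_impact and not high_uncertainty:
        return "Core Focus"         # invest in key trends and strategic certainties
    if high_impact and high_uncertainty:
        return "Key Uncertainties"  # hedge bets on potential disruptions
    if not high_uncertainty:
        return "Monitor Only"       # low impact, low uncertainty: track background trends
    return "Contingency Plan"       # low impact, high uncertainty: scout wildcards


trends = {  # hypothetical (impact, uncertainty) estimates
    "AI features in our core product": (0.9, 0.2),
    "Pending AI regulation": (0.8, 0.7),
    "Minor UI design fads": (0.2, 0.1),
    "Fringe startup's experimental feature": (0.3, 0.9),
}
for name, (impact, uncertainty) in trends.items():
    print(f"{name}: {contextual_quadrant(impact, uncertainty)}")
```

Re-scoring the same list each quarter is a cheap way to see trends migrate between quadrants, for example from Key Uncertainties into Core Focus as uncertainty resolves.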



The post Grand Strategy Framework appeared first on FourWeekMBA.

Grand Strategy

One of the major mistakes in business, and one that can turn out to be quite expensive, is to confuse strategy with tactics, or, even worse, grand strategy with strategy.

Indeed, understanding context is the most valuable skill for a human being and, better yet, for a group of humans operating in the real world.


Context is like the territory. It’s like you are on a minefield, but you believe it’s a greenfield.

Understanding the territory is critical as it helps you discover the “rules of the game” you’re part of.

A territory’s shape and direction are structural. No matter how hard you try, it will be bigger than you.

Thus, just as with a giant incoming wave, understanding the territory enables you to position yourself to ride it or avoid being wrecked by it.

Unless you believe you can shape the wave, and only a few really can.

The reason is that many of the massive changes that come, especially in the tech world, are part of what I’ve defined as “fundamental or foundational shifts.”

These are often the result of a tech convergence, where a few technologies become fully viable at once, accelerating the speed of progress in a much broader industry.

Thus, going back to grand strategy, that is what it means to understand the territory.

It will inform you of the real-world dynamics within which you can operate in the first place.

Reading that structure and understanding the underlying territory is grand strategy.

From there, you need a map.

Or a way to navigate that territory once you’ve understood it, even intuitively.

That’s what strategy is: a temporary map you draft once you’ve understood the territory, its dynamics, and its shape.

But also the way it’s changing or has changed.

That is why, without grand strategy, there is no strategy.

The map follows the territory.

You can formulate tactics once you have mapped the context based on the underlying territory.

Tactics are all the possible routes to move on that map based on the territory.

Once again, there is no single route; many are possible, and a few will lead in the right direction on the map.

Each will bring you closer to the territory where you can achieve the most as a person or business.

It follows that there’s no effective strategy without grand strategy, and no good routes (tactics) without the map (strategy).

Keep that in mind!

Grand Strategy = Territory (Structural/Contextual)
Strategy = Map (Navigational/Directional)
Tactic = Routes (Operational/Executional/Optional)

The post Grand Strategy appeared first on FourWeekMBA.

February 9, 2025

The AI Capex Race

Amazon, Google, Microsoft, and Meta will spend over $215 billion on AI in 2025, with Amazon leading at $100 billion.

Big Tech players transitioning into “Hyperscalers” are spending $210 billion in Capex (capital expenditure) in 2025 alone. Image Credit: WSJ

Despite DeepSeek’s low-cost AI breakthrough, tech CEOs defend their investments, citing AI-driven application reinvention.

Amazon is set to spend over $100 billion on AI-driven infrastructure.

Google will raise its capex to $75 billion, up from $52.5 billion.

Microsoft’s AI investments will exceed $80 billion.

Meta plans to spend between $60–$65 billion as a combined bet on AI/AR.
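A quick tally of the per-company figures helps put the headline in perspective. Summed as listed (treating Amazon’s “over $100 billion” and Microsoft’s “exceed $80 billion” as floors), they come to roughly $315–320 billion, more than the $215 billion headline, which suggests the headline and the per-company numbers use different scopes or sources; treat both as approximate. The same arithmetic shows Google’s move from $52.5 billion to $75 billion is an increase of about 43%. The dictionary and helper names are illustrative only.

```python
# Per-company 2025 figures as stated in the text, in billions of USD.
# "Over $100B" and "exceed $80B" are treated as floors; Meta's range is (low, high).
CAPEX_2025 = {
    "Amazon": (100.0, 100.0),
    "Google": (75.0, 75.0),
    "Microsoft": (80.0, 80.0),
    "Meta": (60.0, 65.0),
}


def total_capex(capex: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Sum the low and high ends of each company's stated range."""
    low = sum(lo for lo, _ in capex.values())
    high = sum(hi for _, hi in capex.values())
    return low, high


def pct_increase(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


print(total_capex(CAPEX_2025))             # (315.0, 320.0)
print(round(pct_increase(52.5, 75.0), 1))  # 42.9
```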

This massive spending spree has shocked the market, particularly as Chinese AI startup DeepSeek has demonstrated that advanced AI models can be trained at much lower costs.

Despite this, tech CEOs continue to justify these investments, predicting an AI-driven reinvention of applications and a surge in cloud demand.

Another key factor is the AI supply bottleneck: the explosion of AI demand, both consumer and enterprise, has outpaced the capacity of cloud providers like Amazon, Microsoft, and Google.

What will this spend be used for?

And a key question that remains is: Are these companies over-investing or acting irrationally?

As I’ve shown you above, the industry is evolving quickly.

For now, the industry is being reshaped every 2-3 years. Massive moats must be built, not only on the application side but also on the foundational side.

However, the lesson from DeepSeek is that, while research still has a long way to go, the key lever lies in optimization techniques, especially on the reasoning side, which can enable a quick take-off.

Not only that: at the beginning of the piece, I showed you the impressive result that just came out of a group of young researchers at Stanford and the University of Washington on test-time scaling.

One thing that stands out as you read the paper is how much room there is for optimization on the reasoning side, which means we’ll see exponential progress in the next couple of years.

Yet, to be at the frontier, you must be competitive across the whole pipeline and move in two directions: upstream in the AI foundational stack, to the point of building data centers and creating your own AI chip architecture; and downstream, getting closer and closer to the final consumer.

In 2018, I started to map out the “AI supply chain,” which meant a slightly different thing, yet the core of the “competitive moating” hasn’t changed.

As a frontier/foundational AI player, you must move upstream and downstream into the AI supply chain.

Stay tuned: in upcoming issues, I’ll try to tackle each player’s potential moat in AI.

What Bet is Each Big Tech Player Placing on AI? Future Competitive Moating

Each of the Big Tech players is placing its bet on how AI will develop.

As with any bet, it may or may not pay off, yet that is how you build moats as a market develops into the future.

Amazon’s CapEx Bet

Amazon’s massive spending will go primarily toward expanding AWS data centers and cloud services, while the company also moves further down the stack by creating its own AI models.

In the meantime, as low-hanging fruit, if Amazon were to implement AI on top of its core business and, for instance, boost its ads business with it, that would already be a quick leg up, at least on the revenue side.

Amazon Ads has officially passed $50 billion in revenue in 2024, coming in as the fastest-growing segment for Amazon, even faster than AWS.

For instance, Alexa’s AI revamp might be another one on the consumer side.

Amazon is set to unveil a generative AI-powered Alexa at a February 26 event, introducing conversational and agent-like capabilities.

A $5–$10 monthly fee is being considered, with an initial free rollout.

The upgrade aims to revitalize Alexa’s relevance and monetize AI features, aligning with Jeff Bezos’ original vision.

Google’s CapEx Bet

Google’s capex will be focused on AI data centers and cloud computing, as AI will serve as the core infrastructure for its suite of products.

Google is looking at it from three perspectives:

Leading AI infrastructure (within Google Cloud).
World-class research, including models and tooling (within Google DeepMind).
Products and platforms that bring these innovations to people at scale (within the plethora of tools and companies Alphabet owns, but first of all within Google Search).

Thus, in the meantime, the low-hanging fruit is really the search business.

Google is now integrating AI into its core in two ways:
Google Search: AI Overviews has just expanded to 100+ countries, increasing search engagement, especially among younger users.
Google Ads machine: Google is integrating AI features, from image generation to targeting, to improve the delivery and reach of ad formats.

This means that with this simple AI integration into Google Search, the company can at least try to patch things up in the short term, as redefining the whole search UX for AI at Google’s scale is (almost) mission impossible.

On the Google Ads side, the company can use AI to boost advertising budgets, translating these AI enhancements into a revenue leg up.

If Google Search were to lose scale, advertisers might opt for other platforms.

Yet, a key advantage for Google is that it also owns YouTube, which is probably still the most impressive digital platform.

With the integration of AI into its ad machine, for instance, Google has seen improved search performance, which has also driven the cost per click higher, probably earning the company a few billion dollars more a year.

Thus, Google’s core moat is the search business.

While the company boosts its revenue via AI, it also explores ways to monetize its AI via ads.

Microsoft’s CapEx Bet

Microsoft’s AI investments will exceed $80 billion as the OpenAI partnership loosens and SoftBank enters the picture.

In this phase of AI adoption, Microsoft has been defined by its OpenAI partnership. By the end of 2024, with OpenAI planning its transition to a for-profit structure, primarily funded via SoftBank, this is all changing.

That will require Microsoft to quickly shift its whole strategy to compete, a la Google, across the whole spectrum of AI, from data centers to business and consumer applications.

This CapEx investment will be critical for Microsoft to move in two directions.

One, keep strengthening its Azure AI infrastructure and AI data centers.

Two, and most importantly, the company will also need to accelerate its own models’ development, as OpenAI is loosening the partnership with Microsoft and getting married to SoftBank to stay relevant in this further phase of AI development.

The risk of losing the “AI reasoning train” is too high, and Microsoft will do all it can to cling to it.

Meta’s CapEx Bet

Meta plans to spend between $60–$65 billion as a combined bet on AI/AR.

With that CapEx, Meta will need to get back on track to be the top player in open-source AI while placing a massive bet on AR. AR is Meta’s alternative platform: the company still relies heavily on Apple to distribute its products, which puts it in a weak long-term position.

Each of these companies knows it is placing probably the most critical bet of the last 50 years, and missing the train will mean losing relevance and sliding into obsolescence.

That’s the price that the loser will pay…

Recap: In This Issue!

The AI Reasoning Take-Off: A New Acceleration Phase
AI reasoning is entering a breakthrough phase due to new techniques like test-time scaling, making reasoning much cheaper and more efficient.
This could lead to a faster-than-expected transition from reasoning to full AI agents that can handle complex tasks autonomously.
Optimization in AI reasoning is still in its early stages, meaning rapid improvements will continue over the next few years.

The Three Phases of AI Evolution
LLMs via Pre-training (Past – 2022)
Scaling data, compute, and attention-based architectures (GPT-3, GPT-4, LLaMA).
Rapid progress until hitting initial scaling limits.
Reasoning via Post-training (Current – 2023-2025)
AI is moving beyond simple Q&A to more complex reasoning tasks through post-training tricks.
Example: Chain-of-thought prompting, tool use, and memory integration.
Real Agentic AI (Coming Fast – 2025+)
Moving from human-in-the-loop prompting to AI agents autonomously handling multiple tasks in the background.
Humans will shift from guiding every step to checking outcomes and ensuring alignment.

The AI Cost Curve is Falling Fast
Inference, reasoning, and intelligence costs are decreasing rapidly, driving fierce AI competition.
The race at the frontier of AI has no natural moats; only continuous innovation can sustain dominance.
AI players must find alternative defensibility strategies beyond model capabilities.

The AI CapEx Race: Big Tech’s $215B AI Investment in 2025
Amazon, Google, Microsoft, and Meta will invest over $215 billion in AI infrastructure in 2025.
The AI market is moving into a “hyperscaler era,” where capital spending on AI infrastructure will dictate future winners.

Company – AI Investment (2025) – Primary Focus
Amazon – $100B+ – AI-driven AWS expansion, AI-powered ads
Google – $75B – AI search & ad infrastructure, cloud dominance
Microsoft – $80B – Azure AI infrastructure, OpenAI alternative models
Meta – $60-65B – AI + AR platform bets, AI-driven open-source leadership

Despite cost breakthroughs (e.g., DeepSeek’s low-cost AI models), Big Tech is doubling down on CapEx investments.
The AI supply bottleneck remains a major constraint: AI demand is outpacing cloud capacity.

Are There No Moats in AI?
Many assume AI models are quickly commoditized, meaning no moats exist at the frontier.
But defensibility isn’t just about core models; it’s about infrastructure, branding, distribution, and vertical integration.
AI moats come from locking in customers through AI-native applications, infrastructure, and network effects.

The AI Innovation Pipeline: How AI Moats Form
A true “Frontier AI Moat” isn’t just model development; it involves the entire AI lifecycle:

R&D & Talent – The first layer of AI differentiation is top research teams.
Model Training – Scaling data & compute efficiently.
Post-Training Optimization – Fine-tuning models for complex reasoning tasks.
Inference Infrastructure – AI serving costs must be optimized for real-world applications.
Application & Distribution – Turning AI advances into real business advantages.
AI hyperscalers (OpenAI, Anthropic, Google) need more than models; they need cloud infrastructure & hardware access.
The GPU arms race continues: AI firms need 10x more GPUs for R&D/testing than for model training itself.

AI Talent Wars: The Hidden Moat
AI talent remains one of the biggest bottlenecks in the industry.
The AI boom created a severe talent shortage, leading to aggressive poaching among frontier AI players.
Key AI talent moves (2024-2025):
Mustafa Suleyman (DeepMind → Microsoft AI)
Mira Murati (OpenAI CTO → New AI startup)
John Schulman (OpenAI → Anthropic → Mira Murati’s new startup)

The AGI Bet: Why AI Labs Are Investing at Insane Levels
AI labs are betting that AGI (Artificial General Intelligence) will emerge within the decade.
OpenAI co-founder Ilya Sutskever’s new startup, SSI, is already valued at $20B despite being in stealth mode.
If AGI materializes, it could unlock a new economic paradigm, driving AI labs’ extreme investments.

Future AI Moats: How Big Tech is Positioning for AI Dominance
Amazon’s AI Strategy
AWS expansion → AI cloud dominance.
AI-powered ads & Alexa AI → Monetizing consumer AI.
Custom AI models → Reducing OpenAI reliance.
Google’s AI Strategy
Google Search + AI Overviews → Enhancing search engagement.
Google Ads AI → Increasing ad revenue via AI targeting.
AI Cloud Infrastructure → Competing with AWS & Azure.
Microsoft’s AI Strategy
Azure AI → Strengthening its cloud moat.
Building alternative AI models → As OpenAI diversifies its partnerships.
Meta’s AI Strategy
Betting on open-source AI → Positioning as an alternative to proprietary AI.
Massive AR investment → Building an AI-driven hardware ecosystem.

Key Takeaways
AI reasoning is rapidly improving, driving us closer to fully autonomous AI agents.
AI model training costs are falling, but inference & infrastructure remain critical challenges.
The AI CapEx race will define future winners: Big Tech is investing over $215B in AI in 2025.
AI moats exist beyond models: they form through branding, infrastructure, and distribution.
The AGI bet is pushing AI firms toward massive, long-term investments in frontier AI.

This is part of an Enterprise AI series to tackle many of the day-to-day challenges you might face as a professional, executive, founder, or investor in the current AI landscape.

The post The AI Capex Race appeared first on FourWeekMBA.

The Full AI Innovation Pipeline

I’m defining the “Frontier AI Innovation Pipeline” as the complete set of activities involved in bringing out a new AI model that moves the industry’s needle.

And that can be roughly broken down into:

- R&D.
- Model development.
- Training.
- Post-training.
- Inference.

Indeed, the AI model pipeline is super complex and multi-faceted.

That complexity shows up in the number of GPUs needed for the research and testing that precede a new model’s release.

As a rule of thumb, the GPUs needed to train the final release of a model are roughly 1/5 to 1/10 of those needed for R&D and testing before the model is complete.

For instance, DeepSeek used about 2,000 Nvidia H800 GPUs for training. By that rule of thumb, the company needed roughly 5x to 10x that number of chips for research and smaller-scale testing.
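Applying the 1/5 to 1/10 rule of thumb to the roughly 2,000 training GPUs is simple arithmetic. The sketch below assumes the ratio works as a plain multiplier on the training count; the function name and default multiples are illustrative, not taken from the source.

```python
def rnd_gpu_range(training_gpus: int, low_multiple: int = 5, high_multiple: int = 10) -> tuple[int, int]:
    """Rule-of-thumb estimate of GPUs needed for R&D and testing.

    Assumes (hypothetically) that the final training run uses between
    1/high_multiple and 1/low_multiple of the GPUs consumed by research
    and testing, so R&D needs training_gpus * multiple chips.
    """
    return training_gpus * low_multiple, training_gpus * high_multiple


training = 2_000  # approximate H800 count cited for DeepSeek's training run
low, high = rnd_gpu_range(training)
print(f"R&D/testing: {low:,} to {high:,} GPUs")
# prints "R&D/testing: 10,000 to 20,000 GPUs"
```

The point of the exercise is the order of magnitude: even a "cheap" training run implies a research fleet in the tens of thousands of GPUs.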

And then, there is the elephant in the room: inference.

In other words, even when an AI model is ready for deployment, unless it’s open source and its weights can be downloaded and run directly in an application or on a device, it will be served either via APIs or through an app interface.

In that instance, you will need underlying server infrastructure to serve these models.

And especially on the reasoning side, that can be hard to sustain at scale!

Thus, when considering moats at the frontier of AI, all of the above are critical factors, as each comes into play.

In addition, just as Google started as a single search engine and then vertically integrated into specialized infrastructure serving its whole suite of applications, players like OpenAI and Anthropic will, over time, go through a similar path to lock in massive moats.

Google today is among the major players in the data center space.

Google’s massive facilities serving its internal AI workloads. Image credit: SemiAnalysis

The competitive moat isn’t just in the model itself but in the infrastructure you build around it, combined with locked-in market share in an industry that is still all over the place: winner-take-all effects haven’t consolidated yet, but they will in due time!

And so far, we haven’t even accounted for the most significant expense for these frontier AI players: talent!

The post The Full AI Innovation Pipeline appeared first on FourWeekMBA.

Are There No Moats in AI?

This week, I tackled a tricky topic in business AI: moats.

I’ve called it “Part One” because it’s dedicated to the applications side (the so-called “wrappers”), to highlight how, now more than ever, it’s possible to gain momentum and build something quite valuable out of nowhere, with very low entry barriers.

Yet, that must be translated into vertical infrastructure, branding, and distribution to become a moat.

I’ve mapped it all out in the AI Competitive Moat Acid Test.

Back to the frontier models.

That is, players like OpenAI, Anthropic, Meta, and Google, who need to keep pushing these AI models to the next level to maintain a competitive edge and, with it, a wide share of the AI market.

Many people are surprised when tech gets commoditized quickly.

But that’s a fact.

Yet that doesn’t mean you can’t build a competitive moat around it. The moat just won’t sit in the core tech itself, but in how that core tech translates into the accelerated growth, distribution, and branding that let you lock in a market.

In Non-Linear Competition, I’ve explained the axioms of competition in a tech-driven world.

These dynamics aren’t that different from other domains, but they play out much faster in tech, so we often witness full cycles in the industry over a short period.

Also, as the market matures, tech progress will hit a plateau, and when that happens, market lock-ins and winner-take-all effects will come in.

But in AI, it’ll take another decade to get there. And yet, if you are a frontier model player, that’s the game you picked.

In addition, when you look specifically at the frontier model market, you have to look at it with a multi-faceted perspective and as the intertwining between three core elements of the whole ecosystem:

That’s also why, across the “frontier stack,” you will see fierce competition, massive capital expenditure, human capital poaching, and much more.

In short, apart from GPU manufacturing itself (which would require building a fab), if you’re a “frontier model” player, you’ll need core strategic partnerships on the hardware side while working your way into the cloud infrastructure layer of the “AI foundational stack.”

This isn’t just a race to grab the most valuable part of the future market: without infrastructure, how do you serve a model to billions of people, or power trillions of the world’s tasks, in the first place?

So that’s the key point.

For instance, in 2024, OpenAI’s business model revolved around three core pillars:

- Consumer and B2B tools (ChatGPT free and premium).
- API platform.
- Enterprise integrations.

Yet, as OpenAI moves ahead, it must also expand into the infrastructure side.

Indeed, when you look at the “foundational ecosystem stack,” it has many moving parts.

Thus, model development is only one facet of it. Indeed, when it comes to GPU counts, people often confuse the number of AI chips needed to train a model with the total number a frontier AI player must have available for the “frontier pipeline” at any given time.

And even post-DeepSeek, that total isn’t shrinking. If anything, it’s putting even more pressure on these frontier AI companies to gather more, more advanced, and better-networked GPUs, as a 1% increase in the margins might result in a massive outcome for companies that are betting on AGI.

Thus, here are three core points to touch upon:

- The full “AI innovation pipeline.”
- Another cost that is often overlooked, yet for now the heaviest part of the whole thing: talent!
- And last, but quite important: the value of AGI, if you believe it is happening within a few years.

The post Are There No Moats in AI? appeared first on FourWeekMBA.

The AI Reasoning Take Off

Prepare for an AI reasoning take-off: test-time scaling, as a further technique and paradigm, makes reasoning much cheaper and better.

That means we might move from reasoning to agents (meaning open-ended ones capable of very complex tasks) much faster than expected.

The Business Engineer is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

For instance, with test-time compute, we’ve learned that AI models can improve their reasoning by scaling up computation and thinking longer during inference.

However, with test-time scaling, we learned that simply budget-forcing a reasoning model can make it much better while using fewer resources.

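In short, researchers controlled how long the model thinks: when its reasoning ran past a token budget, they forced it to stop and answer, and when it tried to stop too early, they appended a “Wait” token so it kept reasoning, which often led it to double-check and correct its answer.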

This method outperformed OpenAI’s o1-preview on competition math benchmarks, hinting that brute-force scaling may give way to smarter optimization techniques layered on top.
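Budget forcing, the technique described above (from the s1 “simple test-time scaling” work), can be sketched as a decoding loop with a minimum and a maximum thinking budget. This is a minimal, hypothetical illustration: the `</think>` delimiter, the `toy_model` stand-in for an LLM, and the `min_tokens`/`max_tokens` parameters are assumptions for demonstration, not the researchers’ code; a real implementation would operate on a model’s token stream.

```python
from typing import Callable, List

END_THINK = "</think>"  # hypothetical end-of-thinking delimiter
WAIT = "Wait"           # token appended to extend reasoning


def budget_force(next_token: Callable[[List[str]], str],
                 min_tokens: int, max_tokens: int) -> List[str]:
    """Decode a reasoning trace under a thinking budget (sketch).

    - If the trace reaches max_tokens, append END_THINK to force an answer.
    - If the model emits END_THINK before min_tokens, suppress it and
      append WAIT so the model keeps reasoning.
    """
    trace: List[str] = []
    while True:
        if len(trace) >= max_tokens:
            trace.append(END_THINK)  # budget exhausted: stop thinking now
            return trace
        tok = next_token(trace)
        if tok == END_THINK and len(trace) < min_tokens:
            trace.append(WAIT)  # stopped too early: nudge it to keep going
            continue
        trace.append(tok)
        if tok == END_THINK:
            return trace


def toy_model(trace: List[str]) -> str:
    """Hypothetical stand-in for an LLM: emits three 'step' tokens at the
    start (or after each WAIT), then tries to stop thinking."""
    last_wait = max((i for i, t in enumerate(trace) if t == WAIT), default=-1)
    steps_since_wait = len(trace) - last_wait - 1
    return END_THINK if steps_since_wait >= 3 else "step"


print(budget_force(toy_model, min_tokens=5, max_tokens=100))
# ['step', 'step', 'step', 'Wait', 'step', 'step', 'step', '</think>']
print(budget_force(toy_model, min_tokens=0, max_tokens=2))
# ['step', 'step', '</think>']
```

Raising `min_tokens` triggers more “Wait” extensions, while lowering `max_tokens` cuts the trace short; these two knobs are what let budget forcing dial the amount of thinking up or down without retraining.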

In short, this suggests there is still a lot of room to optimize on top of these reasoning models, cheaply and with “smart hacks.”

Indeed, at least on the basic reasoning side, there is still so much optimization space that the progress can continue for the next few years.

In short, since the “ChatGPT moment,” we have gone, and are still going, through three significant phases of take-off:

- LLMs via pre-training, where all that mattered was scaling data and compute and tweaking attention-based algorithms to get there. This phase has, in part, hit a plateau.
- Reasoning via post-training (where we are now), plus a set of architectural tricks on top, enables AI models to produce outcomes far more complex than simple answers. This phase is now accelerating.
- “Real agentic” (we’re getting there fast): here we move from a paradigm where the human is continuously in the loop to make the LLM work through several tasks, to one where the human only checks whether the agent’s output aligns with the desired outcome, while the agent handles multiple tasks in parallel and in the background.

Combined, and developing further in parallel, these phases will make things move even faster.

This also shows that the cost of inference, then of reasoning, and thus of intelligence is going down fast, creating an intense race at the AI frontier, where there are no moats.

But if that is the case, are there no moats in AI?

This week, I will focus on that!

The post The AI Reasoning Take Off appeared first on FourWeekMBA.