The reproducibility of psychological science

The results of the Reproducibility Project have just been published in Science. The project is a massive, collaborative, ‘Open Science’ attempt to replicate 100 psychology experiments published in leading psychology journals. The results are sure to be widely debated, the biggest being that many published results were not replicated. There’s an article about the study in the New York Times: Many Psychology Findings Not as Strong as Claimed, Study Says

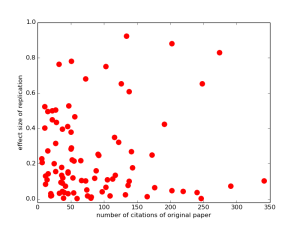

This is a landmark in meta-science: researchers collaborating to inspect how psychological science is carried out, how reliable it is, and what that means for how we should change what we do in the future. But it is also an illustration of the process of Open Science. All the materials from the project, including the raw data and analysis code, can be downloaded from the OSF webpage. That means that if you have a question about the results, you can check it for yourself. So, by way of example, here’s a quick analysis I ran this morning: does the number of citations of a paper predict how large the effect size of its replication in the Reproducibility Project will be? Answer: not so much.

That horizontal string of dots along the bottom is the replications with close-to-zero effect sizes and high citation counts for the original papers (nearly all of which reported non-zero and statistically significant effects). Draw your own conclusions!
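If you want to try this kind of check yourself, here is a minimal sketch of one way to do it. It is not the code linked below, and it makes some assumptions: the column names `citations` and `replication_effect_size` are hypothetical (the real OSF data files use their own names), the toy rows are made up purely to show the mechanics, and the choice of a Spearman rank correlation is mine, on the grounds that citation counts tend to be heavily skewed.

```python
import csv
import io


def ranks(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation of two equal-length numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def load_pairs(csv_file, x_col, y_col):
    """Read two numeric columns, skipping rows where either value is not a number."""
    xs, ys = [], []
    for row in csv.DictReader(csv_file):
        try:
            x, y = float(row[x_col]), float(row[y_col])
        except (ValueError, TypeError):
            continue  # e.g. "NA" or an empty cell
        xs.append(x)
        ys.append(y)
    return xs, ys


if __name__ == "__main__":
    # Made-up rows, NOT taken from the Reproducibility Project data.
    toy_csv = io.StringIO(
        "citations,replication_effect_size\n"
        "12,0.31\n"
        "250,0.02\n"
        "40,NA\n"
        "85,0.15\n"
        "400,0.05\n"
    )
    citations, effects = load_pairs(toy_csv, "citations", "replication_effect_size")
    print("rows used:", len(citations))
    print("Spearman correlation:", round(spearman(citations, effects), 3))
```

To run it on the real thing, you would swap the `io.StringIO` object for an opened CSV file downloaded from the OSF page and pass in whatever the two columns are actually called there.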

Link: Reproducibility OSF project page

Link: my code for making this graph (in python)

Tom Stafford's Blog
