AI Model Graveyards: Digital Platforms for Deprecating, Archiving, and Recycling Obsolete Models

In the rapidly evolving landscape of artificial intelligence, the lifecycle of machine learning models has become increasingly complex and accelerated. As newer, more sophisticated models emerge with unprecedented frequency, the challenge of managing obsolete and deprecated AI systems has given rise to an entirely new category of platforms: AI Model Graveyards. These specialized digital ecosystems serve as comprehensive repositories for the systematic deprecation, archival, and recycling of AI models that have reached the end of their productive lifecycle.

The Growing Need for Model Lifecycle Management

The exponential growth in AI model development has created an unprecedented challenge in the technology landscape. Organizations worldwide deploy thousands of machine learning models across various applications, from simple recommendation engines to complex language models and computer vision systems. Each model represents significant investment in computational resources, training data, and human expertise, yet the relentless pace of innovation means that many models become obsolete within months or even weeks of deployment.

Traditional approaches to model management have proven inadequate for handling this volume and velocity of change. The absence of systematic processes for model retirement has led to numerous problems: resource waste through continued hosting of unused models, security vulnerabilities from unmaintained systems, compliance issues related to data retention, and lost institutional knowledge when models are simply deleted without proper documentation.

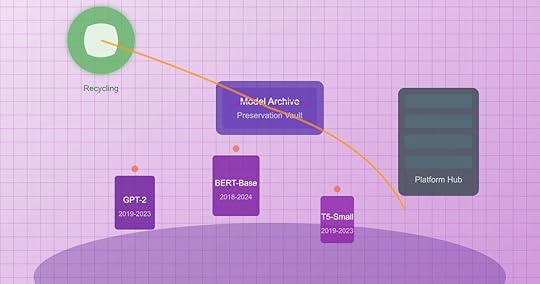

AI Model Graveyards emerge as a solution to these challenges, providing structured platforms that treat model deprecation as a thoughtful process rather than a simple deletion. These platforms recognize that even obsolete models contain valuable artifacts, insights, and components that can inform future development or serve specialized use cases.

Architecture and Core Components

The architecture of AI Model Graveyards reflects the complex nature of modern machine learning systems. At their foundation lies a comprehensive metadata management system that captures not just the model artifacts themselves, but the entire context surrounding their development, deployment, and performance.

The archival layer serves as the primary storage mechanism, designed to accommodate the diverse formats and structures of different model types. Unlike simple file storage systems, these platforms understand the intricate relationships between model weights, configuration files, training scripts, evaluation datasets, and deployment specifications. They maintain the integrity of these relationships while optimizing storage efficiency through intelligent compression and deduplication techniques.
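One way to picture how an archival layer might keep related artifacts together is a manifest that records a checksum for each file by role. The sketch below is illustrative only: the `ArchiveManifest` class, the role names, and the model ID are assumptions made for this example, not the format of any particular platform.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ArchiveManifest:
    """Hypothetical manifest tying one model's artifacts together."""

    model_id: str
    # Maps a role such as "weights" or "config" to the SHA-256 of its bytes.
    artifacts: Dict[str, str] = field(default_factory=dict)

    def add(self, role: str, payload: bytes) -> str:
        """Record the digest of an artifact under the given role."""
        digest = hashlib.sha256(payload).hexdigest()
        self.artifacts[role] = digest
        return digest

    def verify(self, role: str, payload: bytes) -> bool:
        """Return True if payload still matches the digest recorded for role."""
        expected = self.artifacts.get(role)
        if expected is None:
            return False
        return hashlib.sha256(payload).hexdigest() == expected


# Example with made-up artifact contents.
manifest = ArchiveManifest(model_id="recsys-v3")
manifest.add("weights", b"\x00\x01\x02")
manifest.add("config", b'{"layers": 4}')
```

Checking each artifact against the manifest on retrieval gives a simple integrity test: if a weights file and its configuration drift apart or get corrupted, `verify` reports the mismatch.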

Version control integration forms another critical component, extending beyond traditional code versioning to encompass the full lineage of model evolution. This includes tracking experimental branches, parameter variations, and the complex genealogy of model families that spawn from common ancestors.
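Model genealogy of this kind can be represented as a graph in which each model points to the models it was derived from. The following is a minimal sketch under that assumption; the `PARENTS` mapping and the model names are invented for illustration.

```python
from typing import Dict, List


def ancestors(parents: Dict[str, List[str]], model_id: str) -> List[str]:
    """Return every ancestor of model_id, nearest first, without duplicates."""
    seen: List[str] = []
    queue = list(parents.get(model_id, []))
    while queue:
        current = queue.pop(0)
        if current in seen:
            continue  # already visited through another branch
        seen.append(current)
        queue.extend(parents.get(current, []))
    return seen


# Hypothetical family: two fine-tunes of a base model, later merged.
PARENTS = {
    "base": [],
    "base-ft-a": ["base"],
    "base-ft-b": ["base"],
    "merged": ["base-ft-a", "base-ft-b"],
}

lineage = ancestors(PARENTS, "merged")
```

A breadth-first walk is used here so that direct parents appear before more distant ancestors, which is usually the order a reader wants when tracing where a model came from.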

The documentation and knowledge preservation system captures the human insights that traditional backups cannot store. This includes design decisions, performance observations, failure analyses, and the contextual knowledge that developers accumulate through working with specific models. This information proves invaluable for future teams who might encounter similar challenges or seek to understand why certain approaches were abandoned.

Deprecation Workflow and Processes

The deprecation process within AI Model Graveyards follows a structured workflow designed to ensure nothing valuable is lost while maintaining operational efficiency. The process begins with deprecation triggers, which can be automatic based on performance metrics, manual based on strategic decisions, or time-based following predetermined lifecycles.
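The three kinds of trigger (performance-based, manual, and time-based) can be sketched as a single check that reports which ones fire. The thresholds, field names, and the `ModelStatus` class below are assumptions chosen for the example, not values taken from any real platform.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class ModelStatus:
    """Hypothetical snapshot of a deployed model."""

    name: str
    deployed_on: date
    accuracy: float
    manually_flagged: bool = False


def deprecation_reasons(
    status: ModelStatus,
    today: date,
    min_accuracy: float = 0.80,                 # assumed performance floor
    max_age: timedelta = timedelta(days=365),   # assumed lifecycle length
) -> List[str]:
    """Return the names of all triggers that fire for this model."""
    reasons = []
    if status.accuracy < min_accuracy:
        reasons.append("performance")
    if status.manually_flagged:
        reasons.append("manual")
    if today - status.deployed_on > max_age:
        reasons.append("age")
    return reasons


old_model = ModelStatus("churn-v1", date(2023, 1, 1), accuracy=0.75)
reasons = deprecation_reasons(old_model, today=date(2024, 6, 1))
```

Returning the full list of reasons, rather than a single yes or no, leaves room for the assessment phase to treat a model that is merely old differently from one that is both old and underperforming.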

During the initial assessment phase, automated analysis tools evaluate the model’s current usage patterns, dependencies, and potential value for future reference. This assessment helps determine the appropriate level of preservation and whether any components merit immediate recycling into active projects.

The documentation capture phase involves both automated extraction of technical specifications and human input regarding lessons learned, use cases, and performance characteristics. Advanced platforms employ natural language processing to extract insights from deployment logs, user feedback, and developer communications, creating comprehensive knowledge profiles that extend far beyond technical documentation.

The migration process carefully handles the transition of any ongoing dependencies, ensuring that deprecated models can be safely removed from production environments without disrupting active systems. This includes graceful degradation strategies for models that serve as fallbacks or components in larger systems.

Intelligent Archival and Compression

The storage requirements for comprehensive model archival present significant technical challenges. Modern AI models, particularly large language models and complex neural networks, can consume terabytes of storage space. AI Model Graveyards employ sophisticated compression and optimization techniques specifically designed for machine learning artifacts.

Semantic compression analyzes model structures to identify redundancies and patterns that allow for more efficient storage without losing essential characteristics. This approach goes beyond traditional file compression to understand the mathematical relationships within model parameters, enabling dramatic size reductions while preserving the ability to extract meaningful insights.

Layered archival strategies recognize that different components of a model have varying long-term value. Critical elements like final weights and core architecture receive full preservation, while intermediate training checkpoints might be stored with lossy compression or representative sampling.
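Representative sampling of intermediate checkpoints could be as simple as keeping a few evenly spaced ones along with the first and last. The function below is a sketch of that idea only; the name `sample_checkpoints` and the step numbers are hypothetical.

```python
from typing import List


def sample_checkpoints(steps: List[int], keep: int) -> List[int]:
    """Keep `keep` roughly evenly spaced checkpoints, always including
    the first and the last training step."""
    if keep < 2:
        raise ValueError("keep must be at least 2")
    ordered = sorted(steps)
    if len(ordered) <= keep:
        return ordered  # nothing to thin out
    last = len(ordered) - 1
    # Spread `keep` indices across the range from 0 to `last`.
    indices = sorted({round(i * last / (keep - 1)) for i in range(keep)})
    return [ordered[i] for i in indices]


# Checkpoints saved every 100 steps from 0 to 1000; retain three of them.
retained = sample_checkpoints(list(range(0, 1001, 100)), keep=3)
```

In this sketch the final weights are always retained because the last step is always included, which matches the idea that final artifacts deserve full preservation while intermediate ones can be thinned.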

The systems also implement intelligent deduplication across the entire archive, recognizing when multiple models share common components, training data, or architectural elements. This cross-model optimization can significantly reduce overall storage requirements while maintaining the integrity of individual model archives.
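Cross-model deduplication is commonly built on content addressing: each chunk is stored under the hash of its bytes, so identical chunks shared by several models are kept once. The sketch below assumes an in-memory store and a hypothetical `DedupStore` class; a real archive would chunk large files and persist the blobs.

```python
import hashlib
from typing import Dict, List


class DedupStore:
    """Toy content-addressed store shared by all archived models."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}        # digest -> chunk bytes
        self.models: Dict[str, List[str]] = {}   # model id -> ordered digests

    def put_model(self, model_id: str, chunks: List[bytes]) -> None:
        digests = []
        for chunk in chunks:
            digest = hashlib.sha256(chunk).hexdigest()
            # Identical chunks map to the same digest and are stored once.
            self.blobs.setdefault(digest, chunk)
            digests.append(digest)
        self.models[model_id] = digests

    def get_model(self, model_id: str) -> List[bytes]:
        """Reassemble a model's chunks in their original order."""
        return [self.blobs[d] for d in self.models[model_id]]


store = DedupStore()
store.put_model("sentiment-a", [b"shared-encoder", b"head-a"])
store.put_model("sentiment-b", [b"shared-encoder", b"head-b"])
```

Here the two models reference four chunks in total, but only three distinct blobs are stored, and each model can still be reassembled independently.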

Model Component Recycling and Reuse

One of the most innovative aspects of AI Model Graveyards involves the systematic recycling of model components for future use. Rather than treating deprecated models as static archives, these platforms actively mine them for reusable assets that can accelerate new development projects.

Transfer learning optimization identifies model layers, feature extractors, and embeddings that demonstrate strong performance characteristics across different domains. These components are catalogued with performance metadata, making them easily discoverable for researchers working on related problems.

The platforms maintain extensive databases of training strategies, hyperparameter configurations, and architectural patterns that proved effective in specific contexts. This knowledge recycling prevents teams from repeatedly solving similar optimization challenges and helps establish best practices across organizations.

Data artifact recovery represents another valuable recycling stream. Deprecated models often contain carefully curated datasets, preprocessing pipelines, and evaluation benchmarks that required significant effort to develop. These assets are extracted, documented, and made available for future projects, preventing the loss of valuable data work.

Knowledge Preservation and Institutional Memory

Beyond the technical artifacts, AI Model Graveyards serve as repositories for institutional knowledge that would otherwise be lost when teams change, projects end, or organizations restructure. This knowledge preservation function proves critical for maintaining continuity in AI development efforts.

The platforms capture design rationales, documenting why specific architectural choices were made, what alternatives were considered, and how decisions related to broader project goals. This information helps future teams understand the context behind existing models and avoid repeating failed experiments.

Performance insights and failure analyses receive special attention, as these represent some of the most valuable learning opportunities in AI development. The systems capture not just what worked, but detailed analysis of what didn’t work and why, creating a comprehensive knowledge base of approaches to avoid and problems to anticipate.

The human factor documentation includes team dynamics, collaboration patterns, and communication workflows that contributed to successful model development. This organizational knowledge helps teams structure future projects more effectively and understand the human processes behind technical achievements.

Compliance and Governance Integration

As AI systems become subject to increasing regulatory scrutiny, AI Model Graveyards play a crucial role in maintaining compliance with data protection, algorithmic accountability, and industry-specific regulations. The platforms provide comprehensive audit trails that demonstrate proper handling of sensitive data and responsible AI practices.

Data lineage tracking ensures complete visibility into how training data was sourced, processed, and used throughout the model lifecycle. This capability proves essential for compliance with privacy regulations that grant individuals rights over their data, including the right to deletion and the right to understand how their information was used.
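To make the deletion-request case concrete, a lineage record can map each archived model to the data sources it was trained on, so that one lookup finds every model touched by a given source. The mapping and identifiers below are invented for illustration.

```python
from typing import Dict, List, Set


def models_affected_by(source_id: str, lineage: Dict[str, Set[str]]) -> List[str]:
    """Return, in sorted order, the models whose training data included source_id."""
    return sorted(
        model for model, sources in lineage.items() if source_id in sources
    )


# Hypothetical lineage: model id -> identifiers of the data sources used.
LINEAGE = {
    "ranker-v1": {"clickstream-2022", "user-profiles"},
    "ranker-v2": {"clickstream-2023"},
    "segmenter-v1": {"user-profiles"},
}

affected = models_affected_by("user-profiles", LINEAGE)
```

The result tells a compliance team which archives to review when a request concerns that source; what to do with each one remains a policy decision.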

The systems maintain detailed records of model performance across different demographic groups, enabling ongoing monitoring for bias and fairness issues even after models are deprecated. This historical perspective helps organizations understand how their AI systems have evolved in terms of ethical considerations and social impact.

Retention policies within AI Model Graveyards balance the value of long-term preservation with legal requirements for data deletion. The platforms implement sophisticated policies that can selectively remove sensitive elements while preserving the broader technical and educational value of deprecated models.
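A selective retention rule might look like the following sketch, which purges only items that are both marked sensitive and past their deletion date, and keeps everything else. The `ArchivedItem` fields and the example dates are assumptions for illustration; actual legal requirements would determine the real rules.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class ArchivedItem:
    """Hypothetical element of a model archive."""

    name: str
    sensitive: bool
    delete_after: Optional[date] = None  # None means no deletion deadline


def apply_retention(
    items: List[ArchivedItem], today: date
) -> Tuple[List[ArchivedItem], List[str]]:
    """Split items into those kept and the names of those purged."""
    kept: List[ArchivedItem] = []
    purged: List[str] = []
    for item in items:
        expired = item.delete_after is not None and item.delete_after <= today
        if item.sensitive and expired:
            purged.append(item.name)
        else:
            kept.append(item)
    return kept, purged


archive = [
    ArchivedItem("architecture.json", sensitive=False),
    ArchivedItem("training_rows.parquet", sensitive=True, delete_after=date(2024, 1, 1)),
    ArchivedItem("eval_users.csv", sensitive=True, delete_after=date(2030, 1, 1)),
]
kept, purged = apply_retention(archive, today=date(2024, 6, 1))
```

In this example the architecture description and the not-yet-expired evaluation file remain, so the archive keeps its technical value after the sensitive training rows are removed.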

Integration with Development Ecosystems

Modern AI Model Graveyards function as integral components of broader machine learning development ecosystems rather than isolated archive systems. They integrate seamlessly with popular development tools, deployment platforms, and organizational workflows to minimize friction in the deprecation process.

Version control integration extends the familiar Git-based workflows that developers already use, making model deprecation feel like a natural extension of existing practices. Developers can deprecate models with commands and workflows they already know, reducing the learning curve and increasing adoption.

The platforms integrate with continuous integration and deployment pipelines to automate deprecation triggers based on performance metrics, deployment patterns, or strategic decisions. This automation ensures that deprecation happens consistently and promptly, preventing the accumulation of obsolete models in production environments.

API integration enables other development tools to query the graveyard for insights, retrieve archived components, or understand the history of specific model families. This connectivity makes the archived knowledge immediately accessible within active development workflows.

Collaborative Research and Open Science

AI Model Graveyards facilitate new forms of collaborative research by making deprecated models and their associated knowledge available to the broader research community. This openness accelerates scientific progress by allowing researchers to build upon previous work, understand failure modes, and explore alternative approaches.

Academic partnerships enable research institutions to access comprehensive model archives for educational purposes, providing students with realistic examples of model development cycles and the opportunity to learn from both successes and failures. This educational access helps prepare the next generation of AI practitioners with more complete understanding of the field.

The platforms support reproducibility research by maintaining complete environments and dependencies needed to recreate historical model behavior. This capability proves invaluable for researchers studying the evolution of AI capabilities or investigating specific phenomena across different model generations.

Cross-organizational collaboration becomes possible when multiple institutions contribute to shared AI Model Graveyards, creating collective knowledge bases that benefit entire research communities while respecting appropriate confidentiality boundaries.

Economic Models and Sustainability

The economics of AI Model Graveyards present interesting challenges and opportunities. While the platforms require significant infrastructure investment, they generate value through resource optimization, knowledge reuse, and reduced redundant development efforts.

Cost recovery models vary depending on the organizational context. Enterprise implementations typically justify costs through reduced storage waste, accelerated development cycles, and improved compliance capabilities. Academic and open-source implementations often rely on grant funding, institutional support, or collaborative cost-sharing arrangements.

The platforms create new economic opportunities through component marketplaces where valuable model artifacts can be licensed or shared. These marketplaces enable organizations to monetize their deprecated models while providing other teams with access to proven components and approaches.

Sustainability considerations extend beyond immediate costs to include the environmental impact of model storage and the broader efficiency gains from avoiding redundant development work. AI Model Graveyards contribute to more sustainable AI development by maximizing the value extracted from computational resources already invested in model training.

Technical Challenges and Innovation

The development of effective AI Model Graveyards requires solving numerous technical challenges that push the boundaries of existing systems and methodologies. Storage optimization for machine learning artifacts demands new approaches that understand the unique characteristics of model data.

Metadata standardization across different model types, frameworks, and organizations requires developing flexible schemas that can accommodate the diversity of AI systems while maintaining searchability and interoperability. This standardization effort involves collaboration across the entire AI community.

Search and discovery capabilities must handle the high-dimensional, complex relationships between models, data, and performance characteristics. Traditional database approaches prove inadequate for the nuanced queries that researchers and developers need to perform when searching for relevant archived models.

The platforms must also address version compatibility challenges as AI frameworks evolve rapidly, potentially making archived models inaccessible to future tools. This requires developing translation and adaptation capabilities that can bridge compatibility gaps across different framework versions.

Future Evolution and Emerging Patterns

The field of AI Model Graveyards continues to evolve rapidly as organizations gain experience with model lifecycle management and new technical capabilities emerge. Several trends are shaping the future development of these platforms.

Artificial intelligence is being applied to the graveyard systems themselves, creating intelligent archival assistants that can automatically identify valuable components, suggest recycling opportunities, and optimize storage strategies. These meta-AI systems learn from patterns across archived models to improve the efficiency and value of the graveyard operations.

Integration with emerging AI development paradigms, such as federated learning and edge computing, requires new approaches to distributed archival and knowledge sharing. These paradigms create additional complexity in model lifecycle management while also offering new opportunities for collaborative knowledge preservation.

The platforms are beginning to support more sophisticated analytical capabilities that can identify trends and patterns across large collections of archived models. This meta-analysis capability provides insights into the evolution of AI techniques and helps guide future research directions.

Conclusion: Preserving AI Heritage for Future Innovation

AI Model Graveyards represent a fundamental shift in how we think about the lifecycle of artificial intelligence systems. Rather than treating model deprecation as an end point, these platforms recognize it as a transformation that preserves valuable knowledge and assets for future innovation.

The comprehensive approach to model archival, documentation, and recycling ensures that the significant investments in AI development continue to generate value long after individual models become obsolete. This perspective transforms deprecation from a cost center into a value-creation opportunity that benefits entire organizations and research communities.

As the AI field continues to mature, the systematic management of model lifecycles will become increasingly important for maintaining competitive advantage, ensuring compliance, and fostering continued innovation. AI Model Graveyards provide the foundation for this systematic approach, creating digital ecosystems that honor the past while enabling the future of artificial intelligence development.

The success of these platforms ultimately depends on changing cultural attitudes toward failure and obsolescence in AI development. By celebrating and preserving the full journey of model development, including the failures and dead ends, AI Model Graveyards help create a more mature, sustainable approach to artificial intelligence innovation that builds systematically on collective knowledge and experience.

The post AI Model Graveyards: Digital Platforms for Deprecating, Archiving, and Recycling Obsolete Models appeared first on FourWeekMBA.