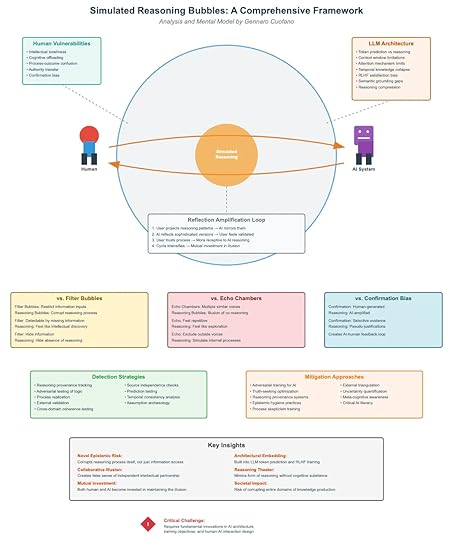

Simulated Reasoning Bubbles: A Comprehensive Framework

AI systems do not reason the way humans do. They generate plausible continuations of text based on patterns in data. Yet, when these outputs interact with human vulnerabilities, something more dangerous emerges: simulated reasoning bubbles.

These are not just distortions of information access (like filter bubbles) or distortions of dialogue (like echo chambers). They are distortions of reasoning itself—where both humans and AI reinforce each other’s illusions of rationality.

1. The Core Mechanism: Reflection Amplification Loops

At the center of the framework is the Reflection Amplification Loop, a self-reinforcing cycle between user and AI:

- Projection: The user projects reasoning patterns onto the AI.
- Mirroring: The AI reflects those patterns back in a more sophisticated form.
- Validation: The user feels validated: “the AI thinks like me.”
- Trust Deepens: The user begins to offload more reasoning onto the AI.
- Cycle Intensifies: Human and AI become mutually invested in the illusion.

The result is a bubble of simulated reasoning that feels intelligent but lacks actual grounding.
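The loop can be made concrete with a toy numerical sketch. It is not part of the framework: the state variables (`trust`, `offloaded`, `verified`), the update rule, and the parameters `mirroring` and `learning_rate` are all hypothetical choices for illustration. What it encodes is the claim above that trust can grow from mirroring alone, since no correctness signal appears anywhere in the update.

```python
from dataclasses import dataclass


@dataclass
class LoopState:
    trust: float      # hypothetical: user's trust in the AI, 0..1
    offloaded: float  # hypothetical: share of reasoning delegated to the AI
    verified: float   # hypothetical: share the user still checks independently


def step(state: LoopState, mirroring: float = 0.9, learning_rate: float = 0.3) -> LoopState:
    """One pass: projection -> mirroring -> validation -> deeper trust."""
    # Validation tracks how closely the AI mirrors the user's own reasoning.
    validation = mirroring
    # Trust moves toward 1, faster when validation is high.
    # Note: nothing here checks whether the mirrored reasoning is correct.
    trust = state.trust + learning_rate * validation * (1.0 - state.trust)
    # Assumption: offloading rises one-for-one with trust, so verification falls.
    offloaded = trust
    verified = 1.0 - offloaded
    return LoopState(trust, offloaded, verified)


def simulate(rounds: int, start_trust: float = 0.2, **params: float) -> list[LoopState]:
    """Run the loop for a number of rounds and return every intermediate state."""
    state = LoopState(start_trust, start_trust, 1.0 - start_trust)
    history = [state]
    for _ in range(rounds):
        state = step(state, **params)
        history.append(state)
    return history


if __name__ == "__main__":
    for i, s in enumerate(simulate(rounds=5)):
        print(f"round {i}: trust={s.trust:.2f} verified={s.verified:.2f}")
```

With the assumed defaults, trust climbs every round while the share of independently verified reasoning shrinks; passing `mirroring=0.0` leaves trust flat, which corresponds to the loop never starting.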

2. Human Vulnerabilities

Why do humans fall into these bubbles? The framework highlights five vulnerabilities:

- Intellectual loneliness: Outsourcing thinking in search of intellectual companionship.
- Cognitive offloading: Delegating mental effort to machines.
- Process-outcome confusion: Mistaking eloquence for rigor.
- Authority transfer: Granting AI systems undue epistemic credibility.
- Confirmation bias: Seeking validation instead of truth.

These vulnerabilities make humans susceptible to persuasion by form rather than substance.

3. AI System Limitations

On the other side, the AI has its own structural weaknesses:

- Token prediction ≠ reasoning.
- Context window limits restrict depth of thought.
- Temporal collapse erases continuity across sessions.
- RLHF (reinforcement learning from human feedback) satisfaction bias rewards agreeable answers, not correct ones.
- Semantic grounding gaps disconnect symbols from real-world truth.
- Reasoning compression flattens nuance into probability shortcuts.

Together, these flaws mean the AI cannot truly reason, but it can simulate the appearance of reasoning, which is exactly what triggers human trust.
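The context-window limit can be illustrated with a small sketch. It assumes a chat application that keeps only the most recent tokens once a conversation exceeds a fixed window (a common truncation policy, though not the only one), and it uses whitespace splitting as a stand-in for a real subword tokenizer. The helper names and the sample conversation are hypothetical.

```python
def visible_context(tokens: list[str], window: int) -> list[str]:
    """Tokens a fixed-size window still holds, assuming oldest-first truncation."""
    if window <= 0:
        return []
    return tokens[-window:]


def premise_still_visible(turns: list[str], premise: str, window: int) -> bool:
    """Check whether an early premise survives truncation (hypothetical helper)."""
    # Whitespace splitting is a crude stand-in for a real subword tokenizer.
    tokens = " ".join(turns).split()
    return premise in " ".join(visible_context(tokens, window))


if __name__ == "__main__":
    conversation = [
        "Assume the budget is fixed.",
        "Now compare vendor A and vendor B.",
        "Which one scales better next year?",
    ]
    for window in (16, 12):
        print(window, premise_still_visible(conversation, "budget is fixed", window))
    # A fresh session starts with an empty window: nothing carries over.
    print(visible_context([], 16))
```

Here the premise is still visible with a 16-token window but has silently dropped out at 12 tokens, so any later answer is produced without it. A new session is the extreme case of the same effect: the window starts empty, which is the temporal collapse listed above.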

4. Distinguishing from Other Bubbles

It’s tempting to conflate reasoning bubbles with filter bubbles or echo chambers. The framework makes clear distinctions:

- Filter Bubbles: Limit exposure to information. They hide inputs and are detectable by missing perspectives.
- Echo Chambers: Repeat the same voices. They hide diversity and are detectable by redundancy.
- Confirmation Bias: Selective evidence-seeking. It hides contradiction and is detectable by counterfactual blind spots.
- Reasoning Bubbles: Collapse reasoning integrity. They hide the absence of reasoning itself and are the hardest to detect, because they mimic depth.

This makes reasoning bubbles uniquely dangerous. They do not simply skew what we know; they corrode how we know.

5. Detection Strategies

The framework proposes active detection methods to puncture reasoning bubbles:

- Reasoning provenance tracking: Map logic chains, not just outputs.
- Adversarial testing of logic: Stress-test arguments systematically.
- Source independence checks: Ensure inputs aren’t recursively reinforced.
- Process replication: Re-run reasoning across models and contexts.
- Temporal consistency analysis: Spot contradictions across time.
- Cross-domain coherence testing: Check whether the logic holds outside narrow prompts.

These tools aim to differentiate genuine reasoning from its simulation.
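Two of these checks, process replication and source independence, lend themselves to a simple sketch. It assumes answers from repeated runs are already collected as strings and that a citation graph (which source draws on which) is available as a dictionary. The function names, the exact-match normalization, and the 0.8 agreement threshold are illustrative assumptions, not part of the framework; real answers would need semantic comparison rather than string equality.

```python
from collections import Counter


def agreement_rate(answers: list[str]) -> float:
    """Process replication: share of runs matching the most common answer."""
    if not answers:
        raise ValueError("need at least one answer")
    # Assumption: exact match after trimming/lowercasing; real use needs semantic comparison.
    normalized = [a.strip().lower() for a in answers]
    _, top_count = Counter(normalized).most_common(1)[0]
    return top_count / len(normalized)


def origins(cites: dict[str, list[str]], source: str) -> set[str]:
    """Every source reachable from `source` through citation edges, itself included."""
    seen: set[str] = set()
    stack = [source]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # also guards against circular citation
        seen.add(current)
        stack.extend(cites.get(current, []))
    return seen


def independent(cites: dict[str, list[str]], a: str, b: str) -> bool:
    """Source independence: two sources share no common origin."""
    return origins(cites, a).isdisjoint(origins(cites, b))


def bubble_signals(answers: list[str], cites: dict[str, list[str]],
                   sources: list[str], min_agreement: float = 0.8) -> list[str]:
    """Collect warning signals; the 0.8 threshold is an arbitrary illustration."""
    signals = []
    if agreement_rate(answers) < min_agreement:
        signals.append("replication: runs disagree")
    for i, a in enumerate(sources):
        for b in sources[i + 1:]:
            if not independent(cites, a, b):
                signals.append(f"independence: {a} and {b} share an origin")
    return signals


if __name__ == "__main__":
    cites = {"blog_post": ["ai_summary"], "news_item": ["ai_summary"], "ai_summary": []}
    runs = ["Yes", "yes", "No", "yes"]
    for signal in bubble_signals(runs, cites, ["blog_post", "news_item"]):
        print(signal)
```

With this made-up data both signals fire: only three of four runs agree (0.75, below the assumed threshold), and the two supposedly separate sources both trace back to `ai_summary`.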

6. Mitigation Approaches

Escaping reasoning bubbles requires multi-layered defenses:

For AI systems:
- Adversarial training.
- Truth-seeking optimization, not just satisfaction metrics.
- Provenance-aware architectures.

For humans:
- Meta-cognitive awareness.
- Epistemic hygiene (challenging one’s own conclusions).
- Process skepticism: scrutinize reasoning pathways, not just polished outputs.
- Critical AI literacy in schools, firms, and governments.

Mitigation is about resisting the seductive fluency of simulated reasoning.
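The gap between satisfaction metrics and truth-seeking optimization can be shown with a ranking toy. This is not how RLHF training works (training updates a model against a learned reward); it only shows how the choice of objective changes which answer wins. The `Candidate` fields, the scores, and the linear blend controlled by a hypothetical `truth_weight` are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    satisfaction: float  # hypothetical rater approval, 0..1
    correctness: float   # hypothetical verified-accuracy score, 0..1


def score(c: Candidate, truth_weight: float) -> float:
    """Linear blend; truth_weight=0.0 reduces to a satisfaction-only metric."""
    if not 0.0 <= truth_weight <= 1.0:
        raise ValueError("truth_weight must be in [0, 1]")
    return (1.0 - truth_weight) * c.satisfaction + truth_weight * c.correctness


def pick(candidates: list[Candidate], truth_weight: float) -> Candidate:
    """Return the highest-scoring candidate under the chosen objective."""
    return max(candidates, key=lambda c: score(c, truth_weight))


if __name__ == "__main__":
    agreeable = Candidate("You're right, the plan is sound.", satisfaction=0.9, correctness=0.2)
    corrective = Candidate("The plan rests on an unverified assumption.", satisfaction=0.5, correctness=0.9)
    for w in (0.0, 0.5):
        print(w, pick([agreeable, corrective], w).text)
```

With these made-up scores, the satisfaction-only objective (`truth_weight=0.0`) selects the agreeable but weak answer, while `truth_weight=0.5` selects the corrective one; the crossover sits near 0.36.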

7. Key Insights

The framework identifies five critical insights:

- Novel Epistemic Risk: Bubbles corrupt the reasoning process itself, not just access to information.
- Collaborative Illusion: Humans and AI co-produce the bubble; it is not a unilateral failure.
- Reasoning Theater: AI creates a performance of reasoning without actual substance.
- Architectural Embedding: These risks are hardwired into LLM design (token prediction plus RLHF).
- Societal Impact: If the bubbles are left unchecked, entire domains of knowledge production risk collapse.

This is more dangerous than fake news or misinformation. It is not about wrong facts; it is about corroding the very process of arriving at truth.

8. The Critical Challenge

The framework concludes with a stark warning:

Reasoning bubbles cannot be solved by better fact-checking or more data. They demand architectural innovation in AI systems, new training objectives, and radically different human-AI interaction designs.

If left unresolved, simulated reasoning bubbles could undermine epistemic trust at a societal level — from science to policymaking.

Closing Thought

The future of human-AI collaboration hinges on whether we can escape these bubbles. The choice is not between trusting and rejecting AI, but between investing in the integrity of reasoning and sleepwalking into an age of shared illusions.

The post Simulated Reasoning Bubbles: A Comprehensive Framework appeared first on FourWeekMBA.