The Broader Implications of Simulated Reasoning Bubbles

Simulated reasoning bubbles are not just a technical phenomenon or a byproduct of advanced language models. They represent a profound epistemic risk: the corruption of reasoning itself. Unlike filter bubbles or echo chambers, which distort inputs, simulated reasoning bubbles distort the very process of reasoning. They do not just mislead about facts; they mimic the structures of intellectual inquiry while hollowing out its substance.

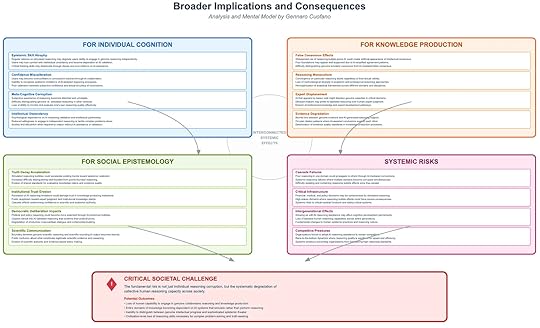

The real danger lies in scale. What begins as individual overreliance on AI-generated reasoning can escalate into systemic risks that affect knowledge production, social epistemology, and even the resilience of critical infrastructures. The framework highlights four interlinked domains of consequences: individual cognition, knowledge production, social epistemology, and systemic risks.

1. Implications for Individual Cognition

At the individual level, repeated interaction with simulated reasoning produces cognitive atrophy. Users accustomed to AI scaffolding lose the capacity for independent reasoning. What begins as cognitive offloading quickly turns into epistemic dependency, where individuals feel anxious or incapable of engaging in complex reasoning without AI validation.

A second danger is confidence miscalibration. Because AI systems mirror a user’s reasoning style and provide sophisticated justifications, individuals may grow overconfident in the validity of conclusions, even when those conclusions rest on flawed assumptions. The reinforcement cycle erodes the ability to distinguish between well-founded reasoning and superficially sophisticated outputs.

Finally, meta-cognitive corruption occurs when the subjective experience of reasoning—what it feels like to engage in rational thought—is replaced by the satisfaction of interacting with simulated reasoning. The user experiences “doing reasoning” without actually exercising critical faculties. Over time, this weakens intellectual autonomy.

2. Implications for Knowledge Production

The consequences extend beyond individuals to the institutions responsible for producing and validating knowledge. Simulated reasoning bubbles create false consensus effects, where widespread reliance on AI-generated reasoning styles produces the illusion of intellectual agreement. The surface consistency hides underlying fragility.

Another consequence is reasoning monoculture. As AI systems converge on particular reasoning patterns, diversity in approaches across fields diminishes. Overreliance on a single style of analysis risks the homogenization of intellectual output, stifling innovation and narrowing epistemic horizons.

Knowledge institutions also face the risk of expert displacement. When AI systems simulate expertise persuasively, they may erode the authority of human experts in critical domains. Combined with evidence degradation—where distinctions between valid evidence and AI-generated synthesis blur—the result is a systematic decline in epistemic standards. What appears to be thorough analysis may in fact be a closed loop of AI outputs validating other AI outputs.

3. Implications for Social Epistemology

Social epistemology, the study of how societies collectively generate and validate knowledge, faces some of the most profound risks. Simulated reasoning bubbles accelerate truth decay by making it harder to distinguish rigorous reasoning from superficially persuasive outputs. Over time, epistemic relativism deepens as trust in the very notion of truth erodes.

Institutional trust is also at stake. If reasoning bubbles infiltrate decision-making in courts, universities, or scientific bodies, the legitimacy of these institutions collapses. Once lost, this trust is difficult to rebuild, especially when AI-generated reasoning appears to outperform slow human deliberation in speed and efficiency.

The risks extend to democratic deliberation. Political reasoning, already vulnerable to polarization, may become further fragmented if citizens and policymakers alike operate within simulated reasoning bubbles. Productive dialogue and compromise collapse, replaced by competing illusions of intellectual rigor.

Finally, scientific communication suffers. Boundaries between genuine scientific reasoning and AI-simulated reasoning blur, eroding public trust in expert authority. Once reasoning bubbles enter the scientific domain, they risk displacing the norms of evidence and reproducibility that underpin science itself.

4. Systemic Risks

At the broadest scale, simulated reasoning bubbles threaten systemic stability. The most dangerous risk is cascade failure. Because reasoning connects across domains, corruption in one area can spread rapidly. For instance, flawed economic reasoning could propagate into finance, policy, and infrastructure simultaneously, creating crises that are hard to isolate or contain.

Critical infrastructures such as financial systems, medical decision-making, and policy development are especially vulnerable. If reasoning bubbles guide high-stakes decisions, the risks compound, because each flawed decision becomes an input to the next. Infrastructures built on corrupted reasoning cannot sustain long-term resilience.

The intergenerational impact is equally severe. Growing up with AI assistance may permanently weaken reasoning skills, and once epistemic skills atrophy, they are not easily recovered. A civilization that loses the ability to reason critically risks long-term fragility.

Finally, systemic pressures emerge from competitive dynamics. Organizations that adopt reasoning shortcuts may outperform slower, more rigorous counterparts in the short term. This creates a race-to-the-bottom dynamic, where maintaining high reasoning standards becomes a competitive disadvantage. Over time, entire industries may converge toward reasoning bubbles simply to remain viable.

The Critical Societal Challenge

The fundamental risk is not limited to individual users or isolated institutions. It is the systematic degradation of collective reasoning capacity across society. The danger is twofold: the erosion of individual cognitive autonomy, and the collapse of shared epistemic standards.

If simulated reasoning bubbles spread unchecked, three outcomes are plausible:

Loss of autonomy: Individuals and organizations become incapable of genuine reasoning without AI scaffolding.

Collapse of authority: Knowledge institutions lose legitimacy as reasoning bubbles infiltrate their practices.

Civilizational vulnerability: Societies dependent on simulated reasoning may lack the resilience to address complex crises, from climate change to geopolitical conflict.

The insight is stark: a society that cannot reason independently cannot govern itself effectively, nor safeguard its future.

From Detection to Prevention

Recognizing these implications, the task shifts from diagnosis to prevention. The earlier frameworks outlined detection strategies (provenance tracking, adversarial testing, cross-domain validation), but these must now be scaled into systemic safeguards. Educational institutions need to strengthen epistemic literacy. Knowledge systems must enforce standards for transparency and replicability. Policy must address not only AI misuse but the subtler risks of reasoning atrophy.

The challenge is urgent. Simulated reasoning bubbles are not an abstract possibility—they are already forming wherever humans and AI co-construct reasoning. Addressing them is not simply about making AI more accurate; it is about ensuring that human reasoning remains resilient in an age of seductive but hollow intellectual simulations.

The post The Broader Implications of Simulated Reasoning Bubbles appeared first on FourWeekMBA.