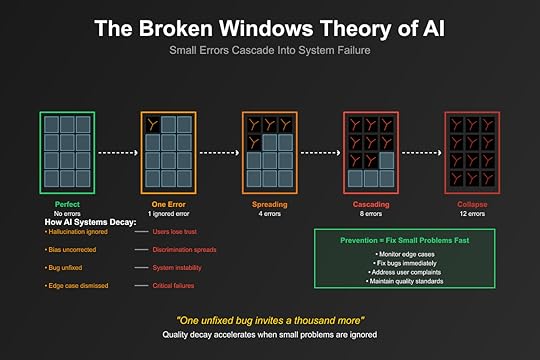

The Broken Windows Theory of AI: Small Errors Cascade

One AI hallucination goes uncorrected in a company’s knowledge base. Soon, other errors appear and persist. Quality standards erode. Trust evaporates. Within months, the entire AI system becomes unreliable and unused. This is the Broken Windows Theory in artificial intelligence: visible minor flaws signal that errors are acceptable, creating cascading degradation that destroys entire systems.

Social scientists James Q. Wilson and George L. Kelling introduced the Broken Windows Theory in a 1982 article in The Atlantic, arguing that visible signs of minor disorder, such as broken windows, signal that nobody cares and invite escalating criminal behavior. The same social psychology now operates in AI systems: small visible errors signal that quality doesn’t matter, triggering cascading degradation.

The Original Order Insight

Wilson and Kelling’s Observation

The theory emerged from observing how neighborhoods decline. One broken window left unrepaired signals abandonment. More windows get broken. Graffiti appears. Crime escalates. The progression from minor disorder to major breakdown follows predictable patterns.

The mechanism is social signaling. Broken windows don’t cause crime directly but communicate that disorder is tolerated. This perception changes behavior. People adjust their actions to match perceived norms.

Environmental Influence

The theory reveals how environments shape behavior. Order encourages order. Disorder encourages disorder. Context determines conduct more than character.

This operates through unconscious social calibration. People constantly adjust to environmental cues about acceptable behavior. Small signals create large behavioral shifts.

AI’s Disorder Amplification

The First Hallucination

When AI generates its first uncorrected falsehood, it signals that accuracy is optional. Users notice. Trust decreases. Verification increases. The first broken window appears.

The hallucination persists in outputs, training data, and user memory. It gets referenced, repeated, and reinforced. One error becomes many through systemic propagation.

Users adapt to unreliability. They stop reporting errors, assuming they’re normal. They work around problems rather than fixing them. Acceptance of small errors enables large ones.

Quality Degradation Cascades

Each uncorrected error lowers the quality baseline. If this error is acceptable, why not that one? If small hallucinations persist, why fix large ones? Standards erode through precedent.

The degradation accelerates through feedback loops. Poor quality outputs become training data. Bad training data creates worse models. Worse models generate more errors. The broken windows multiply exponentially.
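The feedback loop above can be sketched as a toy simulation. This is an illustration under stated assumptions, not a model of any real training pipeline: `simulate_error_feedback`, `base_error`, `amplification`, and `correction_rate` are hypothetical names, and the rule that each generation inherits the previous generation's uncorrected errors, amplified by a constant factor, is an assumption chosen for simplicity.

```python
def simulate_error_feedback(base_error: float, amplification: float,
                            correction_rate: float,
                            generations: int) -> list[float]:
    """Toy model of error rates when model outputs are recycled as training data.

    All parameters are hypothetical:
    - base_error: error rate each model generation introduces on its own.
    - amplification: how strongly inherited errors are reinforced when the
      previous generation's outputs become training data.
    - correction_rate: fraction of inherited errors caught and fixed before
      they are reused.
    """
    if generations < 1:
        raise ValueError("generations must be at least 1")
    rates = [base_error]
    for _ in range(generations - 1):
        # Only the uncorrected share of last generation's errors is inherited.
        inherited = rates[-1] * amplification * (1.0 - correction_rate)
        # An error rate cannot exceed 100%.
        rates.append(min(1.0, base_error + inherited))
    return rates


# No correction: errors compound until they hit the 100% cap.
print(simulate_error_feedback(0.01, 1.5, 0.0, 12))
# Half of inherited errors corrected: the rate levels off near 0.04.
print(round(simulate_error_feedback(0.01, 1.5, 0.5, 50)[-1], 4))
```

The interesting part is the threshold. When `amplification * (1 - correction_rate)` is above 1, the error rate grows geometrically until it reaches the cap; when it is below 1, the rate settles at `base_error / (1 - amplification * (1 - correction_rate))`. Under these assumptions, correcting errors is what turns a runaway cascade into a stable level.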

Teams adapt to degradation by lowering expectations. What was unacceptable becomes tolerable. What was tolerable becomes normal. The new normal is broken.

Trust Collapse Dynamics

Trust erodes asymmetrically. Building trust requires consistent reliability; destroying it can take a single failure. Every broken window damages trust disproportionately.
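The asymmetry can be illustrated with a simple update rule. This is a hypothetical model, not an established measure of user trust: a success closes a small fraction (`gain`) of the remaining gap to full trust, while a failure removes a large fraction (`penalty`) of current trust. The function names and default values are assumptions chosen for illustration.

```python
def update_trust(trust: float, success: bool,
                 gain: float = 0.02, penalty: float = 0.3) -> float:
    """Hypothetical asymmetric trust update; trust is kept in [0, 1].

    A success closes a small fraction (gain) of the gap to full trust.
    A failure removes a large fraction (penalty) of the current trust.
    """
    if success:
        trust += gain * (1.0 - trust)
    else:
        trust *= 1.0 - penalty
    return max(0.0, min(1.0, trust))


def successes_to_recover(start: float, gain: float = 0.02,
                         penalty: float = 0.3) -> int:
    """Consecutive successes needed to get back to `start` after one failure."""
    if not 0.0 < start < 1.0 or gain <= 0.0:
        raise ValueError("need 0 < start < 1 and gain > 0")
    trust = update_trust(start, success=False, gain=gain, penalty=penalty)
    steps = 0
    while trust < start:
        trust = update_trust(trust, success=True, gain=gain, penalty=penalty)
        steps += 1
    return steps


after_failure = update_trust(0.8, success=False)
print(round(after_failure, 2), successes_to_recover(0.8))
```

With these defaults, one failure takes trust from 0.8 to 0.56, and it takes 40 consecutive successes to get back to 0.8. The exact numbers depend entirely on the assumed `gain` and `penalty`; the point is only that a multiplicative penalty paired with a small additive gain produces the lopsided dynamic the paragraph describes.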

The collapse accelerates through social proof. One user’s distrust influences others. Negative experiences spread faster than positive ones. Trust breakdown becomes viral.

Once trust collapses, recovery becomes nearly impossible. Users assume errors even when absent. They verify everything, defeating AI’s purpose. Broken windows create permanent skepticism.

VTDF Analysis: Degradation Economics

Value Architecture

Value in AI systems depends on reliability and trust. Broken windows destroy both. Small errors eliminate large value propositions.

The value destruction is nonlinear. Systems retain value despite minor flaws until a tipping point. Then value collapses catastrophically. Broken windows create value cliffs, not slopes.
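A logistic curve is one simple way to picture a value cliff. The sketch below is purely illustrative: `perceived_value`, `linear_value`, the 5% `tipping_point`, and the `steepness` of 200 are hypothetical choices, not measurements of any real system.

```python
import math


def perceived_value(error_rate: float, tipping_point: float = 0.05,
                    steepness: float = 200.0) -> float:
    """Hypothetical 'value cliff' as a decreasing logistic curve.

    Value stays close to 1.0 while the error rate is below the tipping
    point, equals 0.5 exactly at it, and falls toward 0.0 just above it.
    """
    return 1.0 / (1.0 + math.exp(steepness * (error_rate - tipping_point)))


def linear_value(error_rate: float, max_error: float = 0.10) -> float:
    """Contrast case: value declines in a straight line (a slope, not a cliff)."""
    return max(0.0, 1.0 - error_rate / max_error)


for rate in (0.01, 0.03, 0.05, 0.07, 0.09):
    print(f"{rate:.2f}  cliff={perceived_value(rate):.3f}  "
          f"slope={linear_value(rate):.3f}")
```

Under the linear model every extra point of error costs the same amount of value. Under the logistic one, almost all of the loss is concentrated in a narrow band around the tipping point, which is the cliff-versus-slope distinction the paragraph draws.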

Value perception changes before actual degradation. Users see broken windows and assume systemic problems. Perceived quality determines value more than actual quality.

Technology Stack

Every layer can have broken windows. UI glitches. API errors. Model hallucinations. Data corruption. Each layer’s broken windows affect all others.

The stack propagates degradation vertically. Frontend errors suggest backend problems. Model errors imply data issues. Broken windows at any layer signal systemic failure.

Technical debt accumulates around broken windows. Workarounds for unfixed errors. Patches on patches. Complexity masking problems. Each broken window takes out another technical mortgage.

Distribution Channels

Distribution amplifies broken window effects. One user’s error experience influences many. Support channels spread awareness of problems. Distribution makes broken windows visible system-wide.

Channels adapt to broken windows by adding disclaimers. Warning about potential errors. Hedging on reliability. Lowering expectations. Distribution channels become apology channels.

Partner relationships suffer from visible broken windows. Clients lose confidence. Integrations get postponed. Contracts include more contingencies. Broken windows break business development.

Financial Models

Financial models assume quality and reliability. Broken windows violate these assumptions. Revenue projections fail when quality degrades.

The financial impact cascades. Increased support costs. Higher churn rates. Slower acquisition. Lower pricing power. Every broken window has financial consequences.

Investment suffers from broken window perception. VCs see degradation signals. Valuations decrease. Funding becomes harder. Capital markets punish visible disorder.

Real-World Degradation

The Documentation Decay

AI-generated documentation often contains small errors. Incorrect parameters. Outdated examples. Minor inconsistencies. These broken windows signal that accuracy doesn’t matter.

The decay accelerates as developers stop maintaining docs. Why fix documentation when AI will just introduce new errors? Broken windows justify further neglect.

Eventually, documentation becomes actively harmful. Developers learn to distrust it. They seek alternatives. The official source becomes the last resort. Broken windows destroy information infrastructure.

The Chatbot Deterioration

Customer service chatbots exhibit broken window dynamics. Initial small errors go uncorrected. Response quality degrades. Users learn to game or avoid the system. The chatbot becomes a broken window itself.

The deterioration spreads to human agents. If the chatbot gives wrong answers, why should humans be accurate? AI broken windows lower human standards.

Customer experience collapses. Support becomes adversarial. Users expect problems. Companies expect complaints. Broken windows create hostile ecosystems.

The Code Assistant Corruption

AI code assistants can introduce subtle bugs. Off-by-one errors. Edge-case oversights. Performance inefficiencies. These broken windows accumulate in codebases.

Developers adapt by accepting lower quality. If AI-generated code has bugs, why write perfect code? Broken windows normalize technical debt.

Code quality spirals downward. Reviews become less thorough. Standards relax. Technical debt explodes. The entire development culture degrades around AI broken windows.

The Cascade Mechanisms

Normalization of Deviance

Each uncorrected error shifts the acceptable baseline. What seemed wrong becomes normal through exposure. Broken windows normalize dysfunction.

The normalization happens gradually. First error: noticed and concerning. Tenth error: noticed but accepted. Hundredth error: unnoticed. Attention fatigue enables normalization.

Organizations codify deviance. Policies adapt to reality rather than fixing it. Standards lower to match practice. Broken windows become institutionalized.

Social Proof Deterioration

People calibrate behavior to observed norms. When errors persist, they signal acceptable standards. Everyone’s behavior degrades to match visible broken windows.

The deterioration spreads through teams. New members learn that errors are normal. Experienced members stop fighting degradation. Social proof makes broken windows contagious.

Leadership signals amplify effects. When leaders accept broken windows, everyone does. When quality isn’t prioritized, it disappears. Broken windows at the top create systemic rot.

Compound Interest of Neglect

Unfixed errors accumulate interest. Each makes the next easier to ignore. Each makes fixing harder. Broken windows create compound technical and cultural debt.

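The interest accelerates over time. Problems may accumulate linearly, but complexity and repair cost grow much faster, because each new error interacts with, and has to be worked around alongside, the ones already in place. Broken windows become more expensive to fix the longer they stay broken.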
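The compounding can be expressed as a geometric series. Assume, hypothetically, that a fresh error costs `base_cost` to fix, and that an error arriving while k earlier errors are still unfixed costs `base_cost * (1 + interaction) ** k`. The function names and the constant-interaction rule are assumptions for illustration, not a measured cost model.

```python
def deferred_repair_cost(errors: int, base_cost: float = 1.0,
                         interaction: float = 0.1) -> float:
    """Total cost when `errors` defects pile up before anyone fixes them.

    Hypothetical rule: a defect that arrived while k earlier defects were
    still unfixed costs base_cost * (1 + interaction) ** k to repair,
    because the fix has to work around the earlier breakage.
    """
    return sum(base_cost * (1.0 + interaction) ** k for k in range(errors))


def immediate_repair_cost(errors: int, base_cost: float = 1.0) -> float:
    """Total cost when every defect is fixed before the next one arrives."""
    return errors * base_cost


for n in (5, 10, 20, 40):
    print(n, immediate_repair_cost(n), round(deferred_repair_cost(n), 1))
```

With a 10% interaction, fixing 10 accumulated errors costs about 16 units instead of 10, and 40 accumulated errors cost about 443 units instead of 40. Immediate repair stays linear because no fix ever has to work around an earlier one.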

Eventually, fixing becomes impossible. Too many errors. Too much interdependence. Too little trust. Broken windows reach irreversibility.

Strategic Implications

For AI Developers

Fix the first error immediately. The first broken window matters most. It sets standards. It signals care. Zero tolerance for initial errors prevents cascades.

Make quality visible. Display error rates. Publish corrections. Celebrate fixes. Visible quality maintenance prevents broken window perception.
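Making quality visible can start with something as simple as a ledger of reported and corrected errors. The class below is a hypothetical sketch, not a reference to any existing tool: `QualityLedger`, its methods, and the example error IDs are invented names, and the reported error rate counts only errors someone noticed and reported, not every error the system actually made.

```python
from dataclasses import dataclass, field


@dataclass
class QualityLedger:
    """Hypothetical public record of reported and corrected AI errors."""
    responses: int = 0
    reported: set[str] = field(default_factory=set)
    corrected: set[str] = field(default_factory=set)

    def record_response(self) -> None:
        self.responses += 1

    def report(self, error_id: str) -> None:
        self.reported.add(error_id)

    def correct(self, error_id: str) -> None:
        # Only errors that were reported can be marked as corrected.
        if error_id not in self.reported:
            raise KeyError(f"unknown error: {error_id}")
        self.corrected.add(error_id)

    @property
    def open_errors(self) -> set[str]:
        """Reported but uncorrected errors: the visible broken windows."""
        return self.reported - self.corrected

    def has_broken_windows(self) -> bool:
        """Zero-tolerance check: True while any reported error is still open."""
        return bool(self.open_errors)

    def summary(self) -> dict[str, float]:
        """Figures suitable for a public quality dashboard."""
        rate = len(self.reported) / self.responses if self.responses else 0.0
        return {
            "reported_error_rate": rate,
            "open_errors": len(self.open_errors),
            "corrected": len(self.corrected),
        }


ledger = QualityLedger()
for _ in range(200):
    ledger.record_response()
ledger.report("wrong-refund-policy")
ledger.correct("wrong-refund-policy")
ledger.report("outdated-api-example")
print(ledger.summary())
print("open:", sorted(ledger.open_errors))
```

Publishing `summary()` alongside a list of corrections is one way to show that errors get fixed, and `has_broken_windows()` gives the zero-tolerance rule from the previous paragraph a concrete check.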

Design for maintainability. Systems that are hard to fix accumulate broken windows. Easy correction enables rapid repair. Maintainability prevents accumulation.

For Organizations

Establish quality rituals. Regular error reviews. Systematic corrections. Public quality metrics. Rituals prevent broken window normalization.

Empower error correction. Anyone who sees errors should be able to fix or report them. Barriers to correction create broken windows. Democratize quality maintenance.

Treat small errors seriously. Minor flaws signal major problems. Early intervention prevents cascades. Broken window prevention is cheaper than repair.

For Users

Report every error. Your silence enables broken windows. Your reports prevent cascades. Users are the first line of defense.

Don’t adapt to degradation. Maintaining standards prevents normalization. Demanding quality encourages it. User expectations shape system quality.

Vote with usage. Use reliable systems. Abandon broken ones. Market signals motivate broken window repair.

The Future of AI Order

Automated Window Repair

Future AI might self-detect and correct errors. Automated quality monitoring. Self-healing systems. Continuous improvement. Technology might prevent broken windows.
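One basic building block of automated quality monitoring is a rolling error-rate check. The monitor below is a hypothetical sketch: the class name, the 100-outcome window, and the 2% threshold are assumptions, and it can only count errors that some upstream check has already labeled, so it inherits whatever blind spots that check has.

```python
from collections import deque


class ErrorRateMonitor:
    """Hypothetical rolling-window monitor for automated quality checks.

    Keeps only the most recent `window` outcomes and flags when the error
    rate within that window exceeds `threshold`.
    """

    def __init__(self, window: int = 100, threshold: float = 0.02) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        # A bounded deque silently drops the oldest outcome when full.
        self.outcomes: deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def record(self, is_error: bool) -> None:
        self.outcomes.append(is_error)

    @property
    def error_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_repair(self) -> bool:
        """True when recent errors exceed the tolerated rate."""
        return self.error_rate > self.threshold


monitor = ErrorRateMonitor(window=100, threshold=0.02)
for i in range(100):
    monitor.record(is_error=(i % 25 == 0))  # one labeled error every 25 outputs
print(monitor.error_rate, monitor.needs_repair())
```

The rolling window means old, already-fixed problems age out of the metric, so the alert reflects current quality rather than the system's whole history.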

But automation might create new broken windows. Who fixes the fixers? What if repair systems fail? Meta-broken windows might emerge.

Quality as Competitive Advantage

Organizations that prevent broken windows might dominate. Quality becomes a differentiator. Reliability drives adoption. Broken window prevention becomes a strategic necessity.

This creates quality races. Higher standards. Better monitoring. Faster fixes. Competition might eliminate broken windows through market forces.

The Perfect Storm Risk

AI broken windows might cascade across systems. Interconnected AIs propagating errors. Industry-wide quality degradation. Systemic trust collapse. Broken windows might break everything.

This risk requires coordinated response. Industry standards. Quality protocols. Shared monitoring. Preventing AI broken windows might require collective action.

Conclusion: The Price of Perfection

The Broken Windows Theory in AI reveals a fundamental truth: small errors matter enormously. Not because of their direct impact but because of what they signal and enable.

Every uncorrected AI error is a broken window. It signals that quality doesn’t matter. It enables further degradation. It normalizes dysfunction. The cascade from first error to system failure follows predictable patterns.

This doesn’t mean demanding impossible perfection but recognizing that visible errors have invisible consequences. They shape behavior, erode trust, and degrade quality in ways that compound over time.

The choice is stark: fix broken windows immediately or watch systems degrade. Maintain quality vigilantly or lose it entirely. In AI systems, as in neighborhoods, disorder is entropy’s default direction.

The next time you see an AI error go uncorrected, remember: you’re not just seeing a bug; you’re seeing the first broken window, and what follows it can be catastrophic. Fix the window before the whole house falls down.

The post The Broken Windows Theory of AI: Small Errors Cascade appeared first on FourWeekMBA.