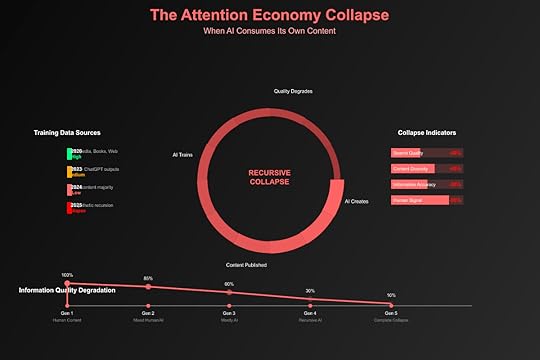

The Attention Economy Collapse: When AI Consumes Its Own Content

The internet is eating itself. As AI-generated content floods the web, future AI models increasingly train on synthetic data, creating a recursive loop that degrades information quality with each iteration. This isn’t just a technical problem—it’s the collapse of the attention economy’s fundamental assumption: that human attention creates authentic signals. We’re witnessing the digital equivalent of inbreeding, and the offspring are getting stranger.

The Attention Economy’s Original Sin

The Human Signal Assumption

The attention economy was built on a simple premise:

- Human Attention = Value: What people look at matters
- Engagement = Quality: More interaction means better content
- Behavioral Data = Truth: Actions reveal preferences
- Scale = Significance: Viral equals valuable

These assumptions worked when humans generated all content and engagement.

The Breaking Point

AI breaks every assumption:

- Synthetic Attention: Bots viewing bot content
- Manufactured Engagement: AI comments on AI posts
- Fabricated Behavior: Algorithms gaming algorithms
- Artificial Virality: Machines making things “trend”

The attention economy’s currency has been counterfeited at scale.

The Model Collapse Phenomenon

Generation 1: The Golden Age

Training Data: Human-generated internet (pre-2020)

- Wikipedia articles by experts
- Stack Overflow answers by developers
- Reddit discussions by humans
- News articles by journalists

Result: High-quality, diverse models

Generation 2: The Contamination Begins

Training Data: Mix of human and AI content (2020-2024)

- AI-generated articles mixed with human
- ChatGPT responses treated as authoritative
- Synthetic images in training sets
- Bot conversations in social data

Result: Subtle degradation, hallucination increase

Generation 3: The Recursive Nightmare

Training Data: Primarily AI-generated (2024+)

- AI articles training new AI
- Synthetic data creating synthetic data
- Errors compounding through iterations
- Reality increasingly distant

Result: Model collapse, reality disconnection

Generation 4: The Singularity of Nonsense

Projection: Complete synthetic loop

- No original human content
- Infinite recursion of artifacts
- Complete detachment from reality
- Information heat death

The Mathematical Reality

The Degradation Function

With each generation of AI training on AI content:

```
Quality(n+1) = Quality(n) × (1 - ε) + Noise(n)
```

Where:

- ε = degradation rate (typically 5-15%)
- Noise = cumulative errors and artifacts
- n = generation number

After just 10 generations: 40-80% quality loss
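To see where a 40-80% figure can come from, here is a minimal Python sketch that iterates the degradation function for ten generations. It is an illustration only: the function name `quality_after`, the starting quality of 1.0, and the choice to set the noise term to zero are assumptions, not something the formula itself specifies.

```python
def quality_after(generations: int, epsilon: float,
                  noise: float = 0.0, start: float = 1.0) -> float:
    """Iterate Quality(n+1) = Quality(n) * (1 - epsilon) + noise.

    `noise` and `start` are hypothetical defaults chosen for illustration.
    """
    quality = start
    for _ in range(generations):
        quality = quality * (1.0 - epsilon) + noise
    return quality


# The low and high ends of the 5-15% degradation range quoted above.
for eps in (0.05, 0.15):
    remaining = quality_after(10, eps)
    print(f"epsilon={eps:.2f}: quality loss after 10 generations = {1 - remaining:.0%}")
```

With the noise term at zero, the two ends of the range lose roughly 40% and 80% of the starting quality, which lines up with the figure quoted above. Any non-zero noise value would shift the result, so treat this as a reading of the formula, not a measurement.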

The Diversity Collapse

Shannon Entropy Reduction:

- Generation 1: High entropy (diverse information)
- Generation 2: 20% entropy reduction
- Generation 3: 50% entropy reduction
- Generation 4: 80% entropy reduction
- Generation 5: Homogeneous output

Models converge on average, losing edge cases and uniqueness.
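For readers who want to see what entropy reduction means in practice, the sketch below computes Shannon entropy for a small output distribution and then repeatedly "sharpens" it. The sharpening step (squaring each probability and renormalizing) is a hypothetical stand-in for a model that favors already-common outputs; it is not a model of any real training run, and the starting distribution is made up. It will not reproduce the exact percentages listed above.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy in bits: H = -sum(p * log2(p)), skipping zero entries."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def sharpen(probs, power=2.0):
    """Hypothetical 'one generation' step: boost common outputs, then renormalize."""
    weighted = [p ** power for p in probs]
    total = sum(weighted)
    return [w / total for w in weighted]


# Made-up starting distribution over four kinds of output.
distribution = [0.4, 0.3, 0.2, 0.1]
entropies = []
for generation in range(1, 6):
    entropies.append(shannon_entropy(distribution))
    print(f"Generation {generation}: entropy = {entropies[-1]:.3f} bits")
    distribution = sharpen(distribution)
```

Each pass pushes more probability onto the most common output, so the rare cases fade first and the entropy falls every generation. That is the "converge on average" effect in miniature.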

Real-World Manifestations

The SEO Apocalypse

Google search results increasingly return:

- AI-generated articles optimized by AI
- Circular citations (AI citing AI citing AI)
- Phantom information (believable but false)
- Semantic similarity without substance

Search quality degrading measurably quarter over quarter.

The Wikipedia Problem

Wikipedia faces an existential crisis:

- AI-generated articles flooding submissions
- Editors unable to verify synthetic content
- Citations pointing to AI-generated sources
- Knowledge base poisoning accelerating

The world’s knowledge repository is being contaminated.

The Social Media Ouroboros

Twitter/X estimated composition:

- 30-40% bot accounts
- 50%+ of trending topics artificial
- AI replies outnumbering human responses
- Engagement metrics meaningless

Real human conversation becoming impossible to find.

The Stock Photo Disaster

Image databases now contain:

- 60%+ AI-generated images
- Synthetic images training new generators
- Artifacts compounding (extra fingers becoming normal)
- Real photography becoming “unusual”

Visual reality being rewritten by recursive generation.

VTDF Analysis: The Collapse Dynamics

Value Architecture

- Original Value: Human attention as scarce resource
- Synthetic Inflation: Infinite fake attention available
- Value Destruction: Real signals drowned in noise
- Terminal State: Attention becomes worthless

Technology Stack

- Generation Layer: AI creating content
- Distribution Layer: Algorithms promoting AI content
- Consumption Layer: AI consuming AI content
- Training Layer: New AI learning from old AI

Distribution Strategy

- Algorithmic Amplification: AI content optimized for algorithms
- Viral Mechanics: Synthetic engagement driving reach
- Platform Incentives: Quantity over quality rewarded
- Human Displacement: Real creators giving up

Financial Model

- Ad Revenue: Based on fake engagement
- Creator Economy: Humans can’t compete with AI volume
- Platform Economics: Cheaper to serve AI content
- Market Failure: True value discovery impossible

The Stages of Collapse

Stage 1: Enhancement (2020-2022)

- AI assists human creators
- Quality improvements visible
- Diversity maintained
- Human oversight active

Stage 2: Substitution (2023-2024)

- AI replaces human creators
- Quality appears maintained
- Diversity beginning to narrow
- Human oversight overwhelmed

Stage 3: Recursion (2025-2026)

- AI primarily learning from AI
- Quality degradation accelerating
- Diversity collapsing
- Human signal lost

Stage 4: Collapse (2027+)

- Complete synthetic loop
- Quality floor reached
- Homogeneous output
- Reality disconnection complete

The Information Diet Crisis

The Junk Food Parallel

AI content is information junk food:

- Optimized for Consumption: Maximum engagement
- Nutritionally Empty: No real insight
- Addictive: Designed for dopamine hits
- Cheap to Produce: Near-zero marginal cost
- Displaces Real Food: Crowds out human content

The Malnutrition Symptoms

Society showing information malnutrition:

- Decreased critical thinking
- Increased conspiracy beliefs
- Inability to distinguish real from fake
- Loss of shared reality
- Epistemic crisis accelerating

The Feedback Doom Loop

How It Accelerates

1. AI generates plausible content
2. Algorithms promote it (optimized for engagement)
3. Humans engage (can’t distinguish from real)
4. Engagement signals quality (platform assumption)
5. More AI content created (following “successful” patterns)
6. Next AI generation trains on it
7. Loop repeats with degraded input

Why It Can’t Self-Correct

- No Natural Predator: Nothing stops bad AI content
- No Quality Ceiling: Infinite generation possible
- No Human Bandwidth: Can’t review at scale
- No Economic Incentive: Cheaper to let it run
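The loop under How It Accelerates, together with the missing brakes listed above, can be sketched as a toy simulation. Everything numeric here is an assumption made for illustration: humans add a fixed amount of content per cycle, while AI output grows by a hypothetical factor each cycle because engagement rewards it. The function name `synthetic_share_over_time` and its parameters are invented for this sketch.

```python
def synthetic_share_over_time(cycles, human_per_cycle=100.0,
                              ai_per_cycle=100.0, ai_growth=1.5):
    """Return the AI share of the cumulative content pool after each cycle.

    Assumptions (all hypothetical): human output stays flat, AI output is
    multiplied by `ai_growth` every cycle, and nothing is ever removed.
    """
    human_total = 0.0
    ai_total = 0.0
    shares = []
    for _ in range(cycles):
        human_total += human_per_cycle   # humans keep posting at a steady rate
        ai_total += ai_per_cycle         # AI content joins the same pool
        shares.append(ai_total / (human_total + ai_total))
        ai_per_cycle *= ai_growth        # "successful" patterns get scaled up
    return shares


for cycle, share in enumerate(synthetic_share_over_time(10), start=1):
    print(f"Cycle {cycle}: {share:.0%} of the training pool is synthetic")
```

Because nothing in the model pushes back on AI volume, the synthetic share can only rise. Adding a review step or a cost per post would be the place to experiment with the brakes the list says are missing.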

The Tragedy of the Digital Commons

The Commons Being Destroyed

The internet as shared resource:

- Knowledge Commons: Wikipedia, forums, blogs
- Visual Commons: Photo databases, art repositories
- Social Commons: Human conversation spaces
- Code Commons: GitHub, Stack Overflow

All being polluted by synthetic content.

The Rational Actor Problem

Each actor’s incentives:

- Platforms: Serve cheap AI content for profit
- Creators: Use AI to compete on volume
- Users: Can’t distinguish, consume anyway
- AI Companies: Need training data, create more

Individual rationality creates collective irrationality.

Attempted Solutions and Why They Fail

Detection Arms Race

AI Detectors: Always one step behind

- Generation N detector defeated by Generation N+1
- False positive rate makes them unusable
- Arms race favors generators

Watermarking

Technical Watermarks: Easily removed

- Compression destroys watermarks
- Screenshot laundering
- Adversarial removal

Human Verification

Blue Checks and Verification: Gamed immediately

- Verified accounts sold
- Human farms for verification
- Economic incentives for fraud

Blockchain Provenance

Cryptographic Proof: Technically sound, practically useless

- Requires universal adoption
- User experience nightmare
- Doesn’t prevent initial fraud

The Economic Implications

Advertising Collapse

When attention is synthetic:

- CPM Rates: Plummeting as fraud increases
- ROI: Negative for most campaigns
- Brand Safety: Impossible to guarantee
- Market Size: Shrinking despite “growth”

The $600B digital ad industry built on sand.
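A quick piece of arithmetic shows why synthetic attention erodes what advertisers are willing to pay. If a fraction of impressions never reaches a human, the cost per thousand human impressions is the nominal CPM divided by the human fraction. The sketch below assumes a hypothetical $5.00 nominal CPM and borrows the 30-40% bot range quoted earlier purely as example inputs; `effective_cpm` is a name made up for this illustration.

```python
def effective_cpm(nominal_cpm: float, synthetic_fraction: float) -> float:
    """Cost per 1,000 human impressions when some impressions are synthetic."""
    if not 0.0 <= synthetic_fraction < 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    return nominal_cpm / (1.0 - synthetic_fraction)


NOMINAL_CPM = 5.00  # hypothetical price in dollars, for illustration only

for fraction in (0.0, 0.3, 0.4):
    cost = effective_cpm(NOMINAL_CPM, fraction)
    print(f"{fraction:.0%} synthetic: ${cost:.2f} per 1,000 human impressions")
```

The advertiser's bill stays the same while the human audience shrinks, so the real price per human rises. Rational buyers respond by bidding less, which is one way to read the falling CPM rates in the list above.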

Content Creator Extinction

Humans can’t compete:

- Volume: AI produces 1000x more
- Cost: AI nearly free
- Speed: AI instantaneous
- Optimization: AI perfectly tuned

Professional content creation becoming extinct.

Platform Enshittification

Cory Doctorow’s concept accelerated:

1. Platforms good to users (to attract)
2. Platforms abuse users (for advertisers)
3. Platforms abuse advertisers (for profit)
4. Platforms collapse (no real value left)

AI accelerates this to months not years.

Future Scenarios

Scenario 1: The Dead Internet

By 2030:

- 99% of content AI-generated
- Human communication moves to private channels
- Public internet becomes synthetic wasteland
- New “human-only” networks emerge

Scenario 2: The Great Filtering

Radical curation:

- Extreme gatekeeping returns
- Pre-internet institutions resurrect
- Costly signaling for humanness
- Small, verified communities only

Scenario 3: The Epistemic Collapse

Complete information breakdown:

- No shared reality possible
- Truth becomes unknowable
- Society fragments completely
- Dark age of information

The Path Forward

Individual Strategies

- Information Hygiene: Carefully curate sources
- Direct Relationships: Value in-person communication
- Creation Over Consumption: Make rather than scroll
- Digital Minimalism: Less but better
- Verification Habits: Always check sources

Collective Solutions

- Human-Only Spaces: Authenticated communities
- Costly Signaling: Proof-of-human mechanisms
- Legal Frameworks: Synthetic content laws
- Economic Restructuring: New monetization models
- Cultural Shift: Valuing authenticity over virality

Technical Innovations

- Proof of Personhood: Cryptographic humanity
- Federated Networks: Decentralized human verification
- Semantic Fingerprinting: Deep authenticity markers
- Economic Barriers: Cost for content creation
- Time Delays: Slow down information velocity

Conclusion: The Ouroboros Awakens

The attention economy is consuming itself, and we’re watching in real time. Each AI model trained on the synthetic output of its predecessors takes us further from reality, creating an Ouroboros of information: the serpent eating its own tail until nothing remains but the eating itself.

This isn’t just a technical problem of model collapse or an economic problem of market failure. It’s an epistemic crisis that threatens the foundation of shared knowledge and collective sensemaking. When we can no longer distinguish human from machine, real from synthetic, truth from hallucination, we lose the ability to coordinate, collaborate, and progress.

The irony is perfect: in trying to capture and monetize human attention, we’ve created systems that destroy the value of attention itself. The attention economy’s greatest success—AI that can generate infinite content—is also its ultimate failure.

The question isn’t whether the collapse will happen—it’s already underway. The question is whether we can build new systems, new economics, and new ways of validating truth before the ouroboros completes its meal.

—

Keywords: attention economy, model collapse, AI training data, synthetic content, information quality, ouroboros problem, recursive training, digital commons, epistemic crisis


The post The Attention Economy Collapse: When AI Consumes Its Own Content appeared first on FourWeekMBA.