The Prisoner’s Dilemma of AI Safety: Why Everyone Defects

OpenAI abandoned its non-profit mission. Anthropic takes enterprise money despite safety origins. Meta open-sources everything for competitive advantage. Google rushes releases after years of caution. Every AI company that started with safety-first principles has defected to competitive pressures. This isn’t weakness—it’s the inevitable outcome of game theory. The prisoner’s dilemma is playing out at civilizational scale, and everybody’s choosing to defect.

The Classic Prisoner’s Dilemma

The Original Game

Two prisoners, unable to communicate, each choose whether to cooperate (stay silent) or defect (betray the other):

- Both Cooperate: Light sentences for both (best collective outcome)
- Both Defect: Heavy sentences for both (worst collective outcome)
- One Defects: Defector goes free, cooperator gets maximum sentence

Because defecting leaves each prisoner better off whatever the other does, rational actors defect, even though mutual cooperation would be better for both.

The AI Safety Version

AI companies face the same structure:

- All Cooperate (Safety): Slower, safer progress for everyone
- All Defect (Speed): Fast, dangerous progress, potential catastrophe
- One Defects: Defector dominates market, safety-focused companies die

Whatever rivals do, each company does better by choosing speed: defection is the dominant strategy.

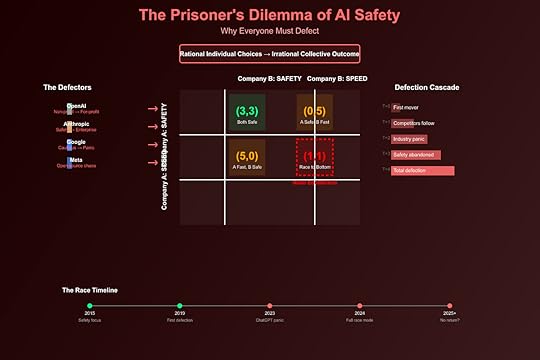

The Payoff Matrix

The AI Company Dilemma

```
                     Company B: Safety   Company B: Speed
Company A: Safety    (3, 3)              (0, 5)
Company A: Speed     (5, 0)              (1, 1)
```

Payoffs (Company A, Company B):

- (3, 3): Both prioritize safety, sustainable progress
- (5, 0): A speeds ahead, B becomes irrelevant
- (0, 5): B speeds ahead, A becomes irrelevant
- (1, 1): Arms race, potential catastrophe

Nash Equilibrium: Both choose Speed and land on (1, 1), even though (3, 3) would leave each company better off.
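The equilibrium claim can be checked mechanically. The sketch below encodes the matrix above, with 0 = Safety and 1 = Speed. It then searches for pure-strategy Nash equilibria: cells where neither company gains by changing only its own choice. The function names are illustrative helpers, not from any library.

```python
from itertools import product

# Strategy indices: 0 = Safety, 1 = Speed.
STRATEGIES = ("Safety", "Speed")

# Payoffs from the matrix above, as (Company A, Company B).
PAYOFFS = {
    (0, 0): (3, 3),
    (0, 1): (0, 5),
    (1, 0): (5, 0),
    (1, 1): (1, 1),
}


def pure_nash_equilibria(payoffs):
    """Return strategy pairs where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product(range(2), repeat=2):
        pay_a, pay_b = payoffs[(a, b)]
        # A cannot improve by switching while B's choice stays fixed.
        a_stable = all(payoffs[(alt, b)][0] <= pay_a for alt in range(2))
        # B cannot improve by switching while A's choice stays fixed.
        b_stable = all(payoffs[(a, alt)][1] <= pay_b for alt in range(2))
        if a_stable and b_stable:
            equilibria.append((STRATEGIES[a], STRATEGIES[b]))
    return equilibria


def strictly_dominant_for_a(payoffs, s):
    """True if strategy s pays Company A more than the alternative against every B move."""
    other = 1 - s
    return all(payoffs[(s, b)][0] > payoffs[(other, b)][0] for b in range(2))


if __name__ == "__main__":
    print(pure_nash_equilibria(PAYOFFS))        # [('Speed', 'Speed')]
    print(strictly_dominant_for_a(PAYOFFS, 1))  # True
```

(Speed, Speed) comes out as the only equilibrium, and Speed is strictly dominant for Company A (and, by symmetry, for B). This holds even though (3, 3) beats (1, 1) for both players, which is exactly the dilemma.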

Real-World Payoffs

Cooperation (Safety-First):

- Slower model releases
- Higher development costs
- Regulatory compliance
- Limited market share
- Long-term survival

Defection (Speed-First):

- Rapid deployment
- Market domination
- Massive valuations
- Regulatory capture
- Existential risk

The Defection Chronicles

OpenAI: The Original Defector

2015 Promise: Non-profit for safe AGI

2019 Reality: Capped-profit subsidiary (OpenAI LP) created

2023-2024 Outcome: Valuation reported near $90B, safety team exodus

The Defection Path:

- Started as safety-focused non-profit
- Needed compute to compete
- Required investment for compute
- Investors demanded returns
- Returns required speed over safety
- Safety researchers quit in protest

Anthropic: The Reluctant Defector

2021 Promise: AI safety company founded by former OpenAI safety researchers

2024 Reality: Enterprise focus, massive funding rounds

The Rationalization:

- “We need resources to do safety research”
- “We must stay competitive to influence standards”
- “Controlled acceleration better than uncontrolled”
- “Someone worse would fill the vacuum”

Each rationalization is true on its own; collectively, they ensure defection.

Meta: The Chaos Agent

Strategy: Open-source everything to destroy competitors’ moats

Game Theory Logic:

- Can’t win the closed model race
- Open sourcing hurts competitors more
- Commoditizes its complement (AI models)
- Maintains platform power

Meta isn’t even playing the safety game; it’s flipping the board.

Google: The Forced Defector

Pre-2022: Cautious, research-focused, “we’re not ready”

Post-ChatGPT: Panic releases, Bard rush, safety deprioritized

The Pressure:

- Stock price demands response
- Talent fleeing to competitors
- Narrative of “falling behind”
- Innovator’s dilemma realized

Even the best-resourced player couldn’t resist defection.

The Acceleration Trap

Why Cooperation Fails

First-Mover Advantages in AI:

- Network effects from user data
- Talent attraction to leaders
- Customer lock-in effects
- Regulatory capture opportunities
- Platform ecosystem control

These aren’t marginal advantages; they’re existential.

The Unilateral Disarmament Problem

If one company prioritizes safety:

- Competitors gain insurmountable lead
- Safety-focused company becomes irrelevant
- No influence on eventual AGI development
- Investors withdraw funding
- Company dies, unsafe actors win

“Responsible development” equals “market exit.”

The Multi-Player Dynamics

The Iterative Game Problem

In a repeated prisoner’s dilemma, cooperation can emerge through:

- Reputation effects
- Tit-for-tat strategies
- Punishment mechanisms
- Communication channels

But AI development isn’t iterative; it’s winner-take-all. If a decisive lead ends the game, there is no later round in which to punish a defector.
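A small simulation makes the contrast between the repeated game and the one-shot game concrete. This is a sketch under stated assumptions. It reuses the 3/0/5/1 payoffs from the company matrix. It compares two fixed strategies, tit-for-tat and always-defect, rather than computing equilibria. `play` and the strategy functions are hypothetical helpers.

```python
# Payoff to the player making `my_move` against `their_move`.
# "C" = cooperate (Safety), "D" = defect (Speed); values reuse the 3/0/5/1 matrix.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def tit_for_tat(opponent_history):
    """Cooperate first, then repeat the opponent's previous move."""
    return "C" if not opponent_history else opponent_history[-1]


def always_defect(opponent_history):
    """Defect every round regardless of history."""
    return "D"


def play(strategy_a, strategy_b, rounds):
    """Play a repeated game and return the cumulative scores (a, b)."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(history_b)  # each side sees only the other's past moves
        move_b = strategy_b(history_a)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    print(play(tit_for_tat, tit_for_tat, 10))    # (30, 30)
    print(play(always_defect, tit_for_tat, 10))  # (14, 9)
    print(play(tit_for_tat, tit_for_tat, 1))     # (3, 3)
    print(play(always_defect, tit_for_tat, 1))   # (5, 0)
```

Over ten rounds, tit-for-tat against itself earns 30, while a defector facing tit-for-tat earns only 14. In a single round the ranking flips: defecting earns 5 against the cooperator's 3. The sketch illustrates why repetition helps, not an equilibrium result. With a known final round, backward induction still predicts defection.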

The N-Player Complexity

With multiple players:

- Coordination becomes impossible
- One defector breaks cooperation
- No enforcement mechanism
- Monitoring is difficult
- Attribution is unclear

Current Players: OpenAI, Anthropic, Google, Meta, xAI, Mistral, China, open source…

One defection cascades to all.
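One way to model a single defection spreading is a threshold model in the style of Granovetter. The article does not use this model; it is swapped in here as an illustration. Each player defects once the number of defectors reaches its personal threshold. The thresholds below are hypothetical.

```python
def cascade(thresholds):
    """Return the set of players who end up defecting.

    thresholds[i] is how many defectors player i must see before it defects;
    0 means it defects unconditionally. Defection is treated as irreversible,
    so the defector set only grows until no one else is tipped.
    """
    defectors = set()
    while True:
        tipped = {
            i for i, k in enumerate(thresholds)
            if i not in defectors and k <= len(defectors)
        }
        if not tipped:
            return defectors
        defectors |= tipped


if __name__ == "__main__":
    # Hypothetical thresholds for eight players (labs, states, open-source groups).
    print(sorted(cascade([0, 1, 2, 3, 4, 5, 6, 7])))  # [0, 1, 2, 3, 4, 5, 6, 7]
    print(sorted(cascade([0, 2, 2, 3, 4, 5, 6, 7])))  # [0]
```

With thresholds 0 through 7, one unconditional defector tips player 1, who tips player 2, and so on until all eight defect. Raising only player 1's threshold to 2 stops everything at the first defector. In this model, a single defection spreads only if every step of the chain has a player with a low enough threshold. The article's claim amounts to saying that, in AI, such players are always present.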

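The International Dimension

The US-China AI Dilemma

```
               China: Safety   China: Speed
US: Safety     (3, 3)          (-20, 5)
US: Speed      (5, -20)        (-10, -10)
```

Here, being the lone cautious power (-20) is scored as worse than a mutual race (-10). That ordering is what makes speed the dominant strategy for both sides.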

The stakes are existential:

- National security implications
- Economic dominance at stake
- Military applications inevitable
- No reliable communication channel
- No enforcement mechanism

Both must defect for national survival.
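Whether "both must defect" follows from a payoff matrix depends on how the four payoffs are ordered, not on how large they are. The sketch below, a hypothetical helper, classifies a symmetric 2x2 game from the row player's payoffs:

- R: both choose Safety
- T: Speed against Safety
- P: both choose Speed
- S: Safety against Speed

The standard prisoner's dilemma requires T > R > P > S. Suppose instead a mutual race scores below being the lone cautious party (P < S). Then the best reply to a racing rival is to slow down, and the game becomes chicken.

```python
def classify_symmetric_game(R, T, P, S):
    """Classify a symmetric 2x2 game from the row player's four payoffs.

    R: both choose Safety        T: I choose Speed, rival chooses Safety
    P: both choose Speed         S: I choose Safety, rival chooses Speed
    """
    if T > R > P > S:
        # Speed pays more whatever the rival does: mutual Speed is the equilibrium.
        return "prisoner's dilemma"
    if T > R > S > P:
        # Against a racing rival, Safety is the better reply: lopsided equilibria.
        return "chicken"
    return "other"


if __name__ == "__main__":
    print(classify_symmetric_game(R=3, T=5, P=1, S=0))      # prisoner's dilemma
    print(classify_symmetric_game(R=3, T=5, P=-10, S=0))    # chicken
    print(classify_symmetric_game(R=3, T=5, P=-10, S=-20))  # prisoner's dilemma
```

The second call shows the trap: a catastrophic mutual race (-10) combined with a mild penalty for lone caution (0) is chicken, not a prisoner's dilemma. The defection logic requires losing alone to feel worse than losing together.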

The Regulatory Arbitrage

Countries face their own dilemma:

- Strict Regulation: AI companies leave, economic disadvantage
- Loose Regulation: AI companies flock, safety risks

Result: Race to the bottom on safety standards.

The Investor Pressure Multiplier

The VC Dilemma

VCs face their own prisoner’s dilemma:

- Fund Safety: Lower returns, LPs withdraw
- Fund Speed: Higher returns, existential risk

The Math:

- A 10% chance of a 100x return (expected value 10x) beats a 100% chance of a 2x return
- The bet still wins on paper even if its downside includes extinction risk, because no single fund bears that cost
- Individual rationality creates collective irrationality

The Public Market Pressure

Public companies (Google, Microsoft, Meta) face quarterly earnings pressure:

- Can’t explain “we slowed for safety”
- Stock price punishes caution
- Activists demand acceleration
- CEOs replaced if they resist

The market is the ultimate defection enforcer.
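The VC arithmetic in the investor section is an expected-value comparison. Below is a minimal sketch. It assumes the long-shot bet returns nothing in the other 90% of cases, which the article does not say. It uses exact fractions to avoid rounding noise.

```python
from fractions import Fraction


def expected_multiple(outcomes):
    """Expected return multiple for a list of (probability, multiple) pairs."""
    if sum(p for p, _ in outcomes) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(p * m for p, m in outcomes)


# Assumption: the long shot returns 0x in the remaining 90% of cases.
long_shot = [(Fraction(1, 10), 100), (Fraction(9, 10), 0)]
sure_thing = [(Fraction(1), 2)]

if __name__ == "__main__":
    print(expected_multiple(long_shot))   # 10
    print(expected_multiple(sure_thing))  # 2
```

The long shot's expected multiple is 10x, against 2x for the sure thing. Nothing in the calculation prices a shared catastrophe, because the fund does not bear that cost alone. That gap is how individual rationality produces collective irrationality.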

The Talent Arms Race

The Researcher’s Dilemma

AI researchers face a choice:

- Join Safety-Focused: Lower pay, slower progress, potential irrelevance
- Join Speed-Focused: 10x pay, cutting-edge work, impact

Reality: $5-10M packages for top talent at speed-focused companies

The Brain Drain Cascade

- Top researchers join the fastest companies
- Fastest companies get faster
- Safety companies lose talent
- Speed gap widens
- More researchers defect
- Cascade accelerates

Talent concentration ensures defection wins.
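The brain-drain loop is a positive-feedback process. The following is a hypothetical model, not one from the article:

- Each round, a fraction of researchers is re-hired.
- New hires choose labs in proportion to each lab's share raised to a power.
- With a power above 1, a lab that is ahead attracts more than its current share.

The `churn` and `exponent` parameters are assumptions chosen for illustration.

```python
def talent_share(initial_share, rounds, churn=0.2, exponent=2.0):
    """Return the leading lab's share of top researchers after each round.

    Hypothetical model: each round a fraction `churn` of all researchers is
    re-hired, and new hires pick a lab in proportion to share ** exponent.
    With exponent > 1, the lab already ahead attracts more than its share.
    """
    s = initial_share
    shares = [s]
    for _ in range(rounds):
        lead = s ** exponent
        lag = (1 - s) ** exponent
        attraction = lead / (lead + lag)  # leader's slice of the new hires
        s = (1 - churn) * s + churn * attraction
        shares.append(s)
    return shares


if __name__ == "__main__":
    path = talent_share(0.55, rounds=50)
    print(round(path[10], 3), round(path[-1], 3))
```

Starting from a modest 55% lead, the leader's share rises every round and passes 90% within 50 rounds. With `exponent=1.0`, hires are proportional to share, and the 55% split stays put. In this model, the cascade comes entirely from the extra pull of being ahead.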

The Open Source Wrench

The Ultimate Defection

Open source is the nuclear option:

- No safety controls possible
- No takebacks once released
- Democratizes capabilities
- Eliminates competitive advantages

Meta’s Strategy: If we can’t win, nobody wins

The Inevitability Problem

Even if all companies cooperated:

- Academia continues research
- Open source community continues
- Nation-states develop secretly
- Individuals experiment

Someone always defects.

Why Traditional Solutions Fail

Regulation: Too Slow, Too Weak

The Speed Mismatch:

- AI: Months to new capabilities
- Regulation: Years to new rules
- Enforcement: Decades to develop

By the time rules exist, the game is over.

Self-Regulation: No Enforcement

Industry promises are meaningless without:

- Verification mechanisms
- Punishment for defection
- Monitoring capabilities
- Aligned incentives

Every “AI Safety Pledge” has been broken.

International Cooperation: No Trust

Requirements for cooperation:

- Verification of compliance
- Punishment mechanisms
- Communication channels
- Aligned incentives
- Trust between parties

None exist between the US and China.

Technical Solutions: Insufficient

Proposed Solutions:

- Alignment research (takes time)
- Interpretability (always behind)
- Capability control (requires cooperation)
- Compute governance (requires enforcement)

Technical solutions can’t solve game theory problems.

The Irony of AI Safety Leaders

The Cassandra Position

Safety advocates face an impossible position:

- If right about risks: Ignored until too late
- If wrong about risks: Discredited permanently
- If partially right: Dismissed as alarmist

The only winning move is not to play, but not playing ensures losing.

The Defection of Safety Leaders

Even safety researchers defect:

- Ilya Sutskever leaves OpenAI to start a new venture
- Anthropic’s founders left OpenAI and built a rival frontier lab
- Geoffrey Hinton quits Google to warn about AI risk, after pioneering the deep learning methods behind it

The safety community creates the race it warns against.

The Acceleration Dynamics

The Compound Effect

Each defection accelerates others:

- Company A defects: Gains advantage
- Company B must defect: Or die
- Company C sees B defect: Must defect faster
- New entrants: Start with defection
- Cooperation becomes impossible: Trust destroyed

The Point of No Return

We may have already passed it:

- ChatGPT and GPT-4 triggered industry-wide panic
- Every major company now racing
- Billions flowing to acceleration
- Safety teams disbanded or marginalized
- Open source eliminating controls

The game theory has played out, and defection won.

Future Scenarios

Scenario 1: The Capability Explosion

Everyone defects maximally:

- Exponential capability growth
- No safety measures
- Recursive self-improvement
- Loss of control
- Existential event

Probability: Increasing

Scenario 2: The Close Call

Near-catastrophe causes coordination:

- Major AI accident
- Global recognition of risk
- Emergency cooperation
- Temporary slowdown
- Eventual defection returns

Probability: Moderate

Scenario 3: The Permanent Race

Continuous acceleration without catastrophe:

- Permanent competitive dynamics
- Safety always secondary
- Gradual risk accumulation
- Normalized existential threat

Probability: Current trajectory

Breaking the Dilemma

Changing the Game

Solutions require changing the payoff structure:

- Make Cooperation More Profitable: Subsidize safety research
- Make Defection More Costly: Severe penalties for unsafe AI
- Enable Verification: Transparent development requirements
- Create Enforcement: International AI authority
- Align Incentives: Restructure entire industry

Each requires solving the dilemma before it can be implemented.
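Changing the payoff structure can be tested against the company matrix from earlier in the article. The sketch below adds two hypothetical levers: a penalty charged for choosing Speed and a subsidy paid for choosing Safety. It then recomputes the pure-strategy Nash equilibria. It models only the payoffs; it says nothing about how such a penalty would be enforced, which is the article's point.

```python
from itertools import product

STRATEGIES = ("Safety", "Speed")  # index 0 = Safety, 1 = Speed
BASE = {(0, 0): (3, 3), (0, 1): (0, 5), (1, 0): (5, 0), (1, 1): (1, 1)}


def adjusted_matrix(penalty=0, subsidy=0):
    """Company payoff matrix with hypothetical policy levers applied.

    penalty: cost charged to any company that chooses Speed.
    subsidy: payment to any company that chooses Safety.
    """
    def adjust(move, payoff):
        return payoff + subsidy if move == 0 else payoff - penalty

    return {
        (a, b): (adjust(a, pay_a), adjust(b, pay_b))
        for (a, b), (pay_a, pay_b) in BASE.items()
    }


def pure_nash_equilibria(payoffs):
    """Strategy pairs where neither company gains by deviating alone."""
    found = []
    for a, b in product(range(2), repeat=2):
        pay_a, pay_b = payoffs[(a, b)]
        a_stable = all(payoffs[(x, b)][0] <= pay_a for x in range(2))
        b_stable = all(payoffs[(a, y)][1] <= pay_b for y in range(2))
        if a_stable and b_stable:
            found.append((STRATEGIES[a], STRATEGIES[b]))
    return found


if __name__ == "__main__":
    print(pure_nash_equilibria(adjusted_matrix()))             # [('Speed', 'Speed')]
    print(pure_nash_equilibria(adjusted_matrix(penalty=3)))    # [('Safety', 'Safety')]
    print(pure_nash_equilibria(adjusted_matrix(subsidy=3)))    # [('Safety', 'Safety')]
    print(pure_nash_equilibria(adjusted_matrix(penalty=1.5)))  # [('Safety', 'Speed'), ('Speed', 'Safety')]
```

With no intervention, the only equilibrium is (Speed, Speed). A penalty or subsidy above 2 makes Safety dominant and (Safety, Safety) the unique equilibrium. A penalty between 1 and 2 does not produce cooperation. Instead it turns the game into chicken, with two lopsided equilibria in which one company races and the other holds back.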

The Coordination Problem

Changing the game requires:

- Global agreement (impossible with current tensions)
- Economic restructuring (against market forces)
- Technical breakthroughs (on unknown timeline)
- Cultural shift (generational change)
- Political will (lacking everywhere)

We need cooperation to enable cooperation.

Conclusion: The Inevitable Defection

The prisoner’s dilemma of AI safety isn’t a bug; it’s a feature of competitive markets, international relations, and human nature. Every rational actor, facing the choice between certain competitive death and potential existential risk, chooses competition. The tragedy isn’t that they’re wrong; it’s that they’re right.

OpenAI’s transformation from non-profit to profit-maximizer wasn’t betrayal—it was inevitability. Anthropic’s enterprise pivot wasn’t compromise—it was survival. Meta’s open-source strategy isn’t chaos—it’s game theory. Google’s panic wasn’t weakness—it was rationality.

We’ve created a system where the rational choice for every actor leads to the irrational outcome for all actors. The prisoner’s dilemma has scaled from a thought experiment to an existential threat, and we’re all prisoners now.

The question isn’t why everyone defects—that’s obvious. The question is whether we can restructure the game before the final defection makes the question moot.

—



The post The Prisoner’s Dilemma of AI Safety: Why Everyone Defects appeared first on FourWeekMBA.