the AI business model

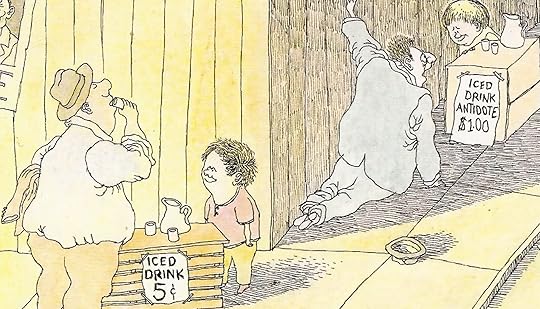

I used this Gahan Wilson cartoon a while back to illustrate the ed-tech business model: the big ed-tech companies always sell universities technology that does severe damage to the educational experience, and when that damage becomes obvious they sell universities more tech that’s supposed to fix the problems the first bundle of tech caused.

This is also the AI business model: the AI companies unleash immense personal, social, and economic destruction and then claim to be the ones who can repair what they have destroyed.

Consider the rising number of chatbot-enabled teen suicides: OpenAI, Meta, Character Technologies — all these companies, and others, produce bots that encourage teens to kill themselves.

So do these companies want teens to kill themselves? Of course not! That would be stupid! Every dead teen is a customer lost. What’s becoming clear is that they’re hoping to give teens, and adults, suicidal thoughts. Their goal is not suicide but rather suicidal ideation.

Look at OpenAI’s blog post, significantly titled “Helping People When They Need It Most”:

> When we detect users who are planning to harm others, we route their conversations to specialized pipelines where they are reviewed by a small team trained on our usage policies and who are authorized to take action, including banning accounts. If human reviewers determine that a case involves an imminent threat of serious physical harm to others, we may refer it to law enforcement. We are currently not referring self-harm cases to law enforcement to respect people’s privacy given the uniquely private nature of ChatGPT interactions.

So that’s Step One: Don’t get law enforcement involved. Step Two is still in progress, but here’s a big part of it:

> Today, when people express intent to harm themselves, we encourage them to seek help and refer them to real-world resources.

Well … except when they don’t. As they acknowledge elsewhere in the blog post, when conversations get long, that is, when people are really messed up and in a tailspin, “as the back-and-forth grows, parts of the model’s safety training may degrade.”

Continuing:

> We are exploring how to intervene earlier and connect people to certified therapists before they are in an acute crisis. That means going beyond crisis hotlines and considering how we might build a network of licensed professionals people could reach directly through ChatGPT. This will take time and careful work to get right.

This I think is the key point. OpenAI will “build a network of licensed professionals” — and when ChatGPT refers a suicidal person to such a professional, will OpenAI take a cut of the fee? Of course it will.

Notice that ChatGPT will, in such an emergency, connect the suicidal person to a therapist within the chatbot interface. You can go to the office later, but let’s do an initial conversation here. Your credit card will be billed. (And for how long will OpenAI employ human beings as their chat therapists? Dear reader, I’ll let you guess. In the end the failings of one chatbot will be, in theory, corrected by another chatbot. And if you want to complain about that, the response will come from a third chatbot. It’ll be chatbots all the way down.)

So then the circle will be complete: drawing vulnerable people in, encouraging their suicidal ideation, and then profiting from its treatment. That’s how to “help people when they need it most” — by manipulating them into the needing-it-most position. Thus the cartoon above. Sure, some kids will go too far and kill themselves, but we’ll keep tweaking the algorithm to reduce the frequency of such cases. You can’t make an omelet without breaking a few eggs!

I sometimes ask family and friends: What would the big tech companies have to do, how evil would they have to become, to get The Public to abandon them? And I think the answer is: They can do anything they want and almost no one will turn aside.

A few years ago I said that vindictiveness was the moral crisis of our time. But some (not all, but some) of our rage has burned itself out. The passive acceptance of utter cruelty, in this venue and in others, has become the most characteristic feature of our cultural moment.

Alan Jacobs's Blog