When the Model breaks: what accidents reveal about Design

There’s a peculiar silence that follows a model crash. A moment of suspended disbelief, cursor frozen mid-command, screen blinking in quiet rebellion, the machine silently buzzing and… well, not responding. In the world of digital design — especially in the complex ecosystem of tools you need to work in BIM — failure is not just disruptive: it’s disorienting. The model, after all, is supposed to obey every rule we set and every constraint we define.

And yet, it breaks.

When it does, it’s tempting to treat these episodes as mere technical hiccups. A corrupted family in Revit? Those things happen. A misfired node in Dynamo? Everyone gets distracted once in a while. Misaligned geometry in Navisworks? No standard survives contact with the field. They’re all bugs to fix, glitches to patch, and every BIM coordinator knows how to move geometry in Navisworks, don’t they? But what if these breakdowns are not exceptions to the system but revelations from it? And I don’t mean that in the biblical sense, although they’re often treated that way. The failure isn’t just in the software, but in the way we think through it.

1. The Beauty of the Breakdown

We often approach digital tools with a sense of mastery, armed with templates, scripts, and clean logic. But the more fluent we become, the more invisible our assumptions grow. We begin to see the interface as neutral, the logic as infallible, the model as truth. Until something goes wrong. And in that moment, when the system refuses to comply, when the geometry folds in on itself, when a routine loops into infinity, we’re granted a rare glimpse behind the curtain.

Failure exposes the scaffolding of our thinking. It forces us to confront the cognitive shortcuts we’ve taken, the blind spots in our workflows, the metaphors we’ve projected onto machines. It reminds us that design, even at its most digital, is an interpretive act full of ambiguity, contingency, and contradiction.

This week’s article follows up on a series about the usefulness of failure, and it’s about those moments. Not the polished outputs or the best practices, but the stumbles, the wrong turns, the crashes: the contours of accidents, used not as cautionary tales but as field notes from the edges of certainty. Because sometimes, when the model breaks, it’s trying to tell us something we’ve forgotten how to hear.

2. Moments of Rupture: Revit, Dynamo, and Navisworks in Freefall

Some failures arrive like avalanches: no warning, just the sudden weight of a system that collapses. Others creep in quietly, unnoticed, until a single trigger sets off a chain reaction. In digital design, we build with logic, geometry, and metadata, but the things that break our models are rarely as neat.

This is a story in three acts. Each one begins with intention. Each one ends with collapse.

2.1. The Revit Handle That Killed a Skyscraper

It started innocently, as these things often do: a detail-oriented designer wanted to count door handles separately from the doors, because you never know. In a skyscraper. So they did what any skilled modeller might be tempted to do: created a shared handle family, nested it into the door, and loaded it into the model. On the other side of the office, another equally detail-oriented designer did the same thing. With the same handle. Two handles. Same name. Different geometry. Both shared. Both loaded into the model. At the same time.

And that’s how the giant fell.

It wasn’t a dramatic crash at first. Just slow, grinding lag. Then unexpected errors when syncing. Weird shit going down. Eventually, the model refused to open. A building, thousands of elements strong, felled by two tiny handles vying for attention at the naming level. They were nested inside every door in a 35-story structure, duplicating themselves recursively, confusing the project browser and corrupting the central file.

David didn’t kill Goliath with a sling. He did it with a door handle.

This wasn’t just an oversight: it was a symptom of faith. Faith in parametric order, in the clean elegance of shared families, in the idea that a model, once smart, will remain stable. But even intelligence becomes fragile when the logic folds in on itself. And sometimes a complex tool isn’t the right one to solve a simple thing.

2.2. Dynamo’s Material Identity Crisis

This one was mine.

I wrote the script during office hours, surrounded by people who were whining because they didn’t like Revit and by people who were convinced the BIM coordinator should model their families for them, and yet I was pleased with the simplicity of my approach: a Dynamo routine to rename all materials based on a parameter holding their ID codes. Elegant, efficient, recursive. I watched it loop through the dataset, line by line, renaming with robotic precision. Except I hadn’t accounted for one thing: univocity. The fucking IDs weren’t unique.

The script dutifully applied the same name to multiple materials. The model hiccupped. Then stuttered. Then died. When I tried re-opening it, it was corrupted beyond recovery. A beautiful logic executed without critical pause.

Dynamo doesn’t give you red flags when logic becomes dangerous. It does what it’s told, even if what it’s told is quietly destructive. The illusion of control is thick in visual programming; every node looks like certainty, but when your assumptions about structure falter, the whole design becomes a house of cards.

These cards. And they’re angry.

2.3. The Clash That Wasn’t (But Should Have Been)

Navisworks promised clarity. A simple Hard clash test between MEP and Structure: boxes checked, geometries loaded, intersections flagged. We presented the report with confidence: no major clashes between the pipes and the walls. The team breathed easily. Weeks later, someone noticed something in a section of the model. Two parallel pipes, lost inside a pillar. Completely swallowed. Not flagged in the clash test. Not visible in any report. Not caught in coordination. We had chosen the standard Hard clash type with Normal intersection, which, as it turns out, only detects intersections between triangles. And Navisworks, under the hood, sees everything as triangles: if the triangles don’t intersect, even if the pipes clearly shouldn’t be there, the test says everything’s fine, and the hungry pillar almost got away with swallowing a whole portion of a system.

We hadn’t known. Or maybe we did know but didn’t connect the brain to the hand when we set up the test. Or maybe I had always used Hard (Conservative) tests, which report potential collisions even if they might be false positives, and didn’t remember to stay… well, conservative.

In the pursuit of precision, false clarity won over noisy truth.

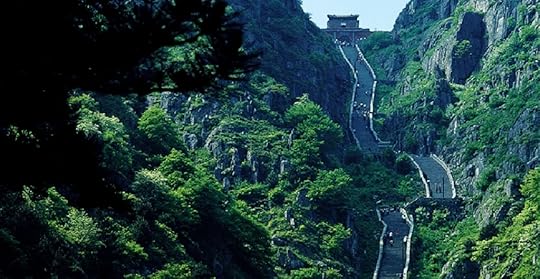

As the Chinese saying goes, we had eyes but failed to recognise Mount Tai.

Each of these moments should have left a crater in its wake, not in the model but in the way we approached our tools. Revit didn’t collapse because of geometry; it collapsed because of paranoid overzealousness. Dynamo executed perfectly, but on flawed assumptions. Navisworks told us what we asked, not what we needed to know.

As we have seen, breakdowns aren’t rare anomalies: they’re the system talking back, revealing, often too late, the gap between our logic and our understanding. So what do we do with them?

3. Layer by Layer: Unpacking the Accident

Failure makes for a compelling story. But once the panic subsides and the laughter, however bitter, fades, something more useful remains, and it’s not people screaming across the office for you to be fired (although that has been known to happen in certain particularly pleasant environments): what remains should be insight. Not into the tools themselves, which are usually easy enough to debug, but into the hidden frameworks we carry into our use of them. Our mental models. Our defaults and defects. The scaffolding of trust we place in digital logic.

Let’s peel back the layers of each collapse, not to blame the software or even the workflow, but to understand the thinking that made the accident possible.

3.1. Revit: The Illusion of Semantic Control

What broke: a skyscraper model collapsed after the duplication of a shared nested family (two handles, same name, different geometry) embedded in every door of the building.

Why it broke: Revit’s shared families rely on strict naming protocols. Nesting two differently defined families with identical names should not have been possible, and yet it happened, creating a recursive conflict that destabilised the entire file. What appeared to be a local, contained action had global, systemic consequences.

What it revealed: I know we said that the model is the universal source of truth on a project, but maybe we took it a little too far. Wishing to count handles is fine, but that never meant you should be able to create a literal schedule of the damn handle. It doesn’t even mean (pearl-clutching alert) that they need to be modelled. On top of that, the fragility in this case didn’t come from bad modelling but from misplaced faith in who was in charge of what. Each person assumed they were the Holy Priest of Handles, and that no one else would have the same idea. The skyscraper collapsed under the weight of task mismanagement, a fetish for handles and a pinch of bad luck.
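If the handle story has a practical takeaway for coordinators, it might be a pre-flight check before anything shared gets loaded: refuse the load when one name arrives with more than one definition. Below is a minimal Python sketch, assuming the team can export a list of incoming shared families with a name, an author and some fingerprint of each definition (for example, a hash of each family file). `FamilyRecord`, `geometry_sig` and `name_collisions` are hypothetical names, not part of the Revit API, and the sample data is invented.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FamilyRecord:
    """One shared family definition about to be loaded (hypothetical export)."""
    name: str          # family name as the model would see it
    author: str        # who created this definition
    geometry_sig: str  # any fingerprint of the definition, e.g. a file hash


def name_collisions(records):
    """Return {name: set_of_signatures} for names carried by more than one definition."""
    by_name = defaultdict(set)
    for rec in records:
        by_name[rec.name].add(rec.geometry_sig)
    return {name: sigs for name, sigs in by_name.items() if len(sigs) > 1}


if __name__ == "__main__":
    incoming = [
        FamilyRecord("Handle_Lever", "designer_a", "sig-7f3a"),
        FamilyRecord("Handle_Lever", "designer_b", "sig-19c2"),
        FamilyRecord("Hinge_Std", "designer_a", "sig-0b11"),
    ]
    for name, sigs in name_collisions(incoming).items():
        print(f"BLOCK LOAD: '{name}' has {len(sigs)} different definitions")
```

The check deliberately ignores who loaded what first: two definitions under one name is treated as a conversation to have between the two designers, not a race to win.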

I’m not saying this was the handle, but I’m not saying it wasn’t.

3.2. Dynamo: Logic Without Foresight

What broke: a Dynamo script renamed materials across a model using an ID system that didn’t enforce uniqueness. The result: multiple materials received the same name, breaking references and irreparably corrupting the file.

Why it broke: the script executed flawlessly from a syntactic perspective. The logic was correct but the assumptions behind it were not. It lacked a validation step to ensure univocity of identifiers. In essence, it treated an unreliable dataset as if it were reliable.

What it revealed: this failure exposed the gap between operational logic and design logic. Dynamo, like all visual programming tools, lulls us into a sense of transparency, but visibility isn’t the same as understanding. What was missing wasn’t a warning message or a debugger; it was a reflective pause before execution. A human judgment about the trustworthiness of input. The accident didn’t happen in the nodes: it happened in the blind spot between confidence and caution.
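The missing validation step can be small. Here is a minimal sketch in plain Python (the language Dynamo’s Python Script node accepts), assuming the graph has already collected each material’s current name and ID code into a list of pairs. `validate_unique_ids`, `plan_renames` and the `MAT_` naming pattern are hypothetical, and nothing here touches the Revit API. What matters is the order: the check runs, and fails loudly, before a single name changes.

```python
from collections import Counter


def validate_unique_ids(materials):
    """Fail before any renaming if an ID code is missing or shared.

    `materials` is a list of (current_name, id_code) pairs: a hypothetical
    stand-in for what the graph collected from the model.
    """
    missing = [name for name, code in materials if not code]
    if missing:
        raise ValueError(f"Materials without an ID code: {missing}")
    counts = Counter(code for _, code in materials)
    shared = sorted(code for code, n in counts.items() if n > 1)
    if shared:
        raise ValueError(f"Non-unique ID codes, rename aborted: {shared}")


def plan_renames(materials, prefix="MAT_"):
    """Return {current_name: new_name}, built only after validation passes."""
    validate_unique_ids(materials)
    return {name: f"{prefix}{code}" for name, code in materials}


if __name__ == "__main__":
    print(plan_renames([("Concrete-old", "C30"), ("Oak-old", "W12")]))
    # {'Concrete-old': 'MAT_C30', 'Oak-old': 'MAT_W12'}
    try:
        plan_renames([("Concrete-old", "C30"), ("Screed-old", "C30")])
    except ValueError as err:
        print(err)  # Non-unique ID codes, rename aborted: ['C30']
```

Returning a plan instead of renaming directly is a design choice: the plan can be reviewed, or piped into a Watch node, before anything is written back to the model.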

3.3. Navisworks: Visualization as Deception

What broke: a set of pipes embedded within a structural pillar went undetected during coordination, because the clash test we used (Hard, with Normal intersection) only registers triangle-on-triangle intersections, and the pipes’ triangles never crossed the pillar’s.

Why it broke: the geometry of the pipes technically didn’t intersect the triangles of the pillar model. A Hard test with Normal intersection looks for triangle-on-triangle contact, not object logic or material conflict. The more thorough test, Hard (Conservative), wasn’t selected.

What it revealed: this was a failure of interface epistemology, of believing that what the software shows you is what’s real. We mistook absence of evidence for evidence of absence. And in doing so, we revealed a deeper flaw: a habit of reading BIM coordination visuals as objective truth rather than as filtered interpretations of geometry. The software didn’t hide the clash. It just didn’t name it. And in the space between those two things, a mistake risked slipping through.
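A toy model makes the blind spot concrete. This is not how Navisworks is implemented: the sketch swaps triangle meshes for axis-aligned boxes, and `Box`, `surfaces_touch` and `volumes_overlap` are illustrative names with made-up dimensions. It shows why any test that only asks “do the skins cross?” reports nothing when one object is buried entirely inside another, while a test that asks “do the solids share space?” catches it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its min and max corners (x, y, z)."""
    lo: tuple
    hi: tuple


def volumes_overlap(a, b):
    """True if the two closed solids share any point."""
    return all(a.lo[i] <= b.hi[i] and b.lo[i] <= a.hi[i] for i in range(3))


def strictly_inside(inner, outer):
    """True if `inner` sits in the interior of `outer`, touching none of its faces."""
    return all(outer.lo[i] < inner.lo[i] and inner.hi[i] < outer.hi[i] for i in range(3))


def surfaces_touch(a, b):
    """Skin-only test: do the two boxes' boundaries meet anywhere?

    For overlapping boxes the boundaries meet unless one box is buried
    strictly inside the other, which is exactly the swallowed-pipe case.
    """
    return volumes_overlap(a, b) and not strictly_inside(a, b) and not strictly_inside(b, a)


# Illustrative dimensions in metres (invented for the example).
pillar = Box((0.0, 0.0, 0.0), (0.6, 0.6, 3.0))
buried_pipe = Box((0.2, 0.2, 0.5), (0.3, 0.3, 2.5))
protruding_pipe = Box((0.2, 0.2, 0.5), (0.3, 0.3, 3.5))

print(surfaces_touch(pillar, buried_pipe))      # False: the skins never cross
print(volumes_overlap(pillar, buried_pipe))     # True: the pipe is inside the pillar
print(surfaces_touch(pillar, protruding_pipe))  # True: the pipe pierces the top face
```

The buried pipe is the worst clash of the three and the only one the skin-only test misses, which is the swallowed-pipe scenario in miniature.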

It always looks so neat… how can it be wrong?

Each of these failures challenges the same core myth: that digital tools deliver certainty.

What they deliver is a simulation — of logic, of geometry, of decision-making — that only works when we understand the boundaries of the simulation itself. Revit isn’t just about modelling; it’s also about naming and nesting. Dynamo isn’t just about automating; it’s about assuming. Navisworks isn’t just about detecting; it’s about defining what counts as a detection.

We didn’t just misclick. We misbelieved (if that’s even a word). And that is what makes breakdowns so valuable: they break not just the model, but the illusion of completeness that surrounds it. They remind us that every parametric system hides an ontology. Every automation encodes a philosophy. Every visualisation reflects a choice about what counts as visible.

4. Breakdown as a Mirror of Thinking

How to Turn Errors into Insight: a Framework for Collective Reflection

If failure is inevitable, then the question is not how to avoid it, but how to learn from it. Yet, as we have seen, most design and BIM contexts tend to correct failures quietly and assign blame hastily. Rarely are they treated as moments worthy of structured reflection. What’s missing isn’t the lesson: it’s the lens.

This section offers a step-by-step guide for turning technical breakdowns into cognitive breakthroughs. Whether you’re leading a design thinking workshop, a BIM coordination retrospective, or a studio critique, this framework invites participants to slow down, reframe, and rethink. Let’s do some pattern recognition and mental model repair.

Step 1: Name the Rupture

What exactly went wrong?

Where did you first notice it?

Purpose: to disarm the emotional reflex (blame, shame, denial) and move into descriptive clarity. Use storytelling here; walk through the events chronologically. Don’t interpret yet. Just observe.

Tool: use a shared timeline or whiteboard to reconstruct the failure step by step. Pin screenshots, emails, clash reports or model version histories.

Step 2: Surface the Assumptions

What were we assuming when we made this choice?

What did we believe about the tool, the data, or each other?

Purpose: to excavate the cognitive architecture behind the action. Every error is rooted in an expectation, often invisible until challenged.

Technique: ask each participant to write down one assumption they now realise they held at the time. Share them anonymously or in sequence.

“I assumed I was the only one in charge of handles.”

“We assumed the clash test covered edge overlaps.”

“I assumed the data input was clean and unique.”

Step 3: Map the Invisible System

What systems were at play that we didn’t account for?

Where did handoffs, translations, or black boxes obscure logic?

Purpose: to recognise that most failures are systemic, not individual. This is especially true in environments like BIM, where geometry, metadata, software logic, and team roles interact invisibly.

Tool: use a systems mapping method (e.g., causal loop diagrams, swimlane flowcharts) to trace how the problem moved through the environment.

Step 4: Shift the Frame

If we assume the failure is trying to teach us something, what is it?

What hidden risk, inefficiency, or bias did it expose?

Purpose: to reframe failure from something to fix to something to listen to. Invite speculative thinking here.

Technique: use the “Because X, we now understand Y” format.

“Because the material renaming failed, we now understand that Dynamo needs validation layers before execution.”

“Because the clash was missed, we now understand the limits of visual logic in Navisworks.”

Step 5: Embed the Learning

What small but permanent change can we make to prevent this type of failure or to catch it earlier?

Purpose: to transform insight into practice. Avoid big procedural overhauls. Focus on micro-adjustments: scripts, templates, naming conventions, review rituals.

Tool: create a “BIM Rule of Thumb” wall: brief, memorable heuristics derived from lived experience. Add one for each failure dissected.

Step 6: Register the Failure

Can this failure be useful to others in the future?

Purpose: to build institutional memory, and to signal that transparency is a strength. Consider anonymising and archiving key failures as part of an internal “Lessons Learned” library or case study repository.

Medium: short slide deck or internal wiki (my favourite).

Why It Works

This approach works because it treats failure not as noise, but as data. It slows down the desire to repair, and instead opens up a space to interrogate mental models, defaults, and tool biases. It reinforces team learning over individual error, and supports a design culture rooted in humility and curiosity. When practised consistently, this framework builds antifragility (the ability to grow stronger after a rupture) not just in the model, but in the minds behind it.

5. From Control to Curiosity

For most of us trained in the discipline of digital modelling, the goal was always control. Clean geometry, parametric rigour, predictable outcomes. The model, if well-structured, would be a fortress, resistant to error, immune to chaos, and immune to surprise.

But what if the model isn’t a fortress?

What if it’s a laboratory?

The stories we’ve unpacked so far, of door handles collapsing skyscrapers, scripts sabotaging themselves, and pipes hiding in plain sight, point to a deeper truth: breakdowns are not just interruptions in design logic, but invitations to rethink how we model, how we automate, and how we know. And in that space, a different kind of mindset might begin to emerge: one not driven by fear of failure, but by curiosity about the edges of control.

5.1. Designing with the (possibly not mental) Breakdown

To design with breakdowns means not only accepting that systems will fail, but also asking: where is failure most likely? It means:

Leaving test points in scripts: deliberately placing visual checkpoints, logs, or null catches in Dynamo routines;

Baking in ambiguity: using placeholder families in Revit to stress-test naming conventions or type catalogues;

Running clash tests in conflict: setting up contradictory parameters in Navisworks to compare what is technically intersecting with what is logically colliding.

These aren’t just safeguards but direct provocations to the system, which force us to engage in speculative debugging: an act of design thinking that treats uncertainty not as a flaw in the model, but as a source of new questions.
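Test points and null catches are easy to wire into any scripted step. The sketch below is plain Python, the kind of thing that could sit in a Dynamo Python Script node between two stages, doing in text what a Watch node does visually. The `checkpoint` and `drop_nulls` helpers and the sample ID codes are hypothetical; the only libraries used are Python’s standard `logging` module.

```python
import logging

# Plain-Python stand-in for watch points between the steps of a graph.
logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("checkpoints")


def checkpoint(label, items, allow_nulls=False):
    """Log what reaches this stage; stop the run if unexpected nulls arrive."""
    items = list(items)
    nulls = sum(1 for item in items if item is None)
    log.info("%s: %d items, %d nulls", label, len(items), nulls)
    if nulls and not allow_nulls:
        raise ValueError(f"{label}: {nulls} null value(s) reached this point")
    return items


def drop_nulls(label, items):
    """Null catch: remove None values, but leave a trace of how many were dropped."""
    kept = [item for item in items if item is not None]
    dropped = len(items) - len(kept)
    if dropped:
        log.warning("%s: dropped %d null value(s)", label, dropped)
    return kept


# Hypothetical ID codes read from the model, one of them missing.
raw_codes = checkpoint("raw ID codes", ["C30", None, "W12"], allow_nulls=True)
clean_codes = checkpoint("clean ID codes", drop_nulls("raw ID codes", raw_codes))
```

The null catch never fails silently: every dropped value leaves a warning in the log, so the script’s own record of what it skipped is there to interrogate later.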

5.2. Curiosity as Design Posture

Shifting from control to curiosity also changes our relationship to precision. It doesn’t mean abandoning it. It means asking:

What kinds of precision are productive—and which are performative?

We often refine for the sake of appearances. Alignments that look good on screen but mean little in construction. Schedules that list every parameter but hide structural errors. This mindset shift invites us to explore not more detail, but better insight.

Productive uncertainty emerges when we choose not to flatten complexity, but to interact with it. When we stop asking the model to “behave,” and start asking what the model is trying to say—especially when it misbehaves.

5.3. From Risk Management to Insight Strategy

The industry often talks about risk management, as if the goal were simply to catch errors early and reduce exposure. But in design, risk can be more than a liability: it can be a signal, a crack that shows us where our logic is stretched, where our tools are being misread, or where a more radical rethink might be required. That’s where productive uncertainty lives: in the zone where we don’t know what will happen but we know that what happens will teach us something.

As we have seen, designing with this mindset means no longer hiding failure in silence, or fixing it quietly in the background, but pulling it to the foreground to interrogate it. Publicly, patiently, professionally.

5.4. The Model as a Question, Not an Answer

Ultimately, this is the shift: from modeling as assertion to modeling as inquiry. From “the model is correct” to “what is the model showing us that we didn’t expect?”

When we approach our tools not as instruments of control, but as collaborators in curiosity, something changes. The errors stop being embarrassing. The debugging becomes the design. And the model — broken, brilliant, and imperfect — becomes a map of our own evolving thinking.

Postscript: Designing with Uncertainty

Modeling, at its core, is a search. A series of hypotheses made visible in geometry and constraints.

A negotiation between precision and ambiguity, between constraint and imagination. To design with uncertainty is not to abandon standards or embrace chaos, nor to give up on identifying and managing risk, but to hold space for the unknown that will inevitably occur. It is to treat each failure not as a sign of incompetence, but as a generative rupture, a moment when the tool says: Look again. If we’re listening closely, every error reminds us that every model is provisional. Not a declaration, but a question.

So let us design not for perfection, but for reflection. Let us write scripts that are testable, families that test a theory, and reviews that are not just quality checks but learning rituals. In a world where complexity is the rule and certainty is an illusion, the most powerful design posture is not mastery. It’s attention. And the model (cracked, looping, triangulated) is asking for it like a bratty child.