How “AI” submissions have changed our submissions process

This is older news, but I wanted to have something I could easily point people to that explains how our submissions process has changed since the arrival of generative “AI” models.

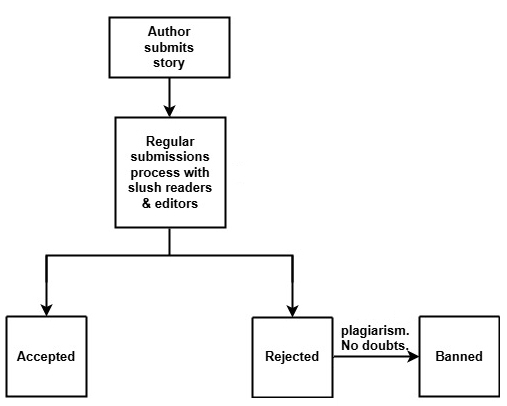

Up until March 2023, our submissions review process worked something like this:

Plagiarism cases happened, but represented a very small percentage of monthly submissions. They were typically caught because:

- Someone on our team recognized the work
- They used a plagiarism detection evasion tool and it drew my attention (seen one, seen them all)
- We knew about the person from a whisper network

Within months of ChatGPT’s public release, the signal-to-noise ratio shifted. Plagiarism had been a fringe case that the old model handled easily, but the sheer volume of generated work threatened to make human-written works the minority. The old way of finding the works we wanted to publish was no longer sustainable for us, so we temporarily closed submissions in February 2023.

When we reopened in March 2023, we implemented a new process that looks more like this:

The oval step is an in-house automated check. I haven’t spoken much about what we’re checking for there because I don’t want to make it easier for the spammers/sloppers to avoid being caught. Just like with malware and email spam, the patterns shift over time, so I’ve had to make regular changes within that oval over the last two years. (I am the developer of the submission software, so the responsibility for this falls to me.)

No process is perfect. Spam detection has existed for email for decades and still makes mistakes. I would never trust an algorithm to make a final assessment and fully accept that each “suspicious” story is a potential false positive. As such, I personally evaluate each suspicious submission. Our slush readers do not have access to this queue.

Bans are only issued when I am certain a work is generated or plagiarized. If I’m only 99% convinced, I’ll just flag the author for continued review. (One of the few things I’ll admit is part of the oval.) These cases always end in rejection, but they never would have made it further in the submissions process anyway. Some of them likely were using AI, but I’d rather err on the side of caution when banning is on the table.

If I believe a work was miscategorized, I check why (to see if I need to refine the filters) and move it back to the main queue. Some of these stories have gone on to be accepted and published. According to my data, there’s no indication that false positives have negatively impacted our final evaluations. We don’t limit the number of stories we accept each month, so there are no missed opportunities in the process. The worst that happens with a miscategorized work is a slightly longer response time. How much longer depends on whether there is an active surge in generated submissions. (Unlike in 2023, the daily and weekly volume of generated submissions is spikier. Some days are good. Some are really bad.) Delays have ranged from a few hours to a couple of weeks.

The intent of the oval is not to save time, but rather to act as a pressure valve. What broke our process in 2023 was the signal-to-noise ratio. By redirecting the flow of suspicious submissions to a separate queue, we’ve been able to keep our team’s attention on the work that has to happen on a daily basis. Adopting this approach has given us the ability to weather storms significantly worse than the one that shut us down, and, more importantly, it has done so without creating an undue burden or deterrent for authors.

Checking the suspicious submissions has, however, added a substantial amount of work to my plate over the last two years. I’ve begrudgingly accepted this price because of the value I assign to having an open submissions process. Having a path for new and lesser-known voices to find their way into our pages is very important to me, and this process has kept that door open.

The price I pay is directly impacted by the false positive rate. That’s an area for continued improvement and a topic for another day.

—

And repeated from the previous post: For those who would respond to our complaints with “why don’t you just judge it on its own merits”, keep dreaming. Despite the hype, even if we set aside our legal and ethical concerns with how these systems were developed, the output of these tools is nowhere near the standards we expect. Besides, we’ve said we don’t want it. We don’t publish mysteries or romance either, but those authors are at least respectful of our time and don’t insist that we evaluate their work “on its own merits” when it doesn’t meet our guidelines. (This is not to equate mystery or romance writers with people who use generative AI. I’m simply demonstrating how real writers behave.) Why would we want to work with someone who can’t respect that?