Another round with ChatGPT

ChatGPT is utterly unreliable when it comes to reproducing even very simple mathematical proofs. It is like a weak C-grade student, producing scripts that look like proofs but are mostly garbled or question-begging at crucial points. Or at least, that’s been my experience when asking for (very elementary) category-theoretic proofs. Not at all surprising, given what we know about its capabilities or lack of them.

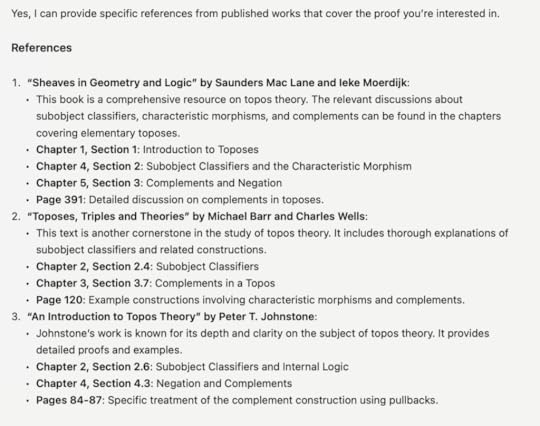

But this did surprise me (though maybe it shouldn’t have done so: I’ve not really been keeping up with discussions of the latest iteration of ChatGPT). I asked where in the literature I could find a proof of a certain simple result about pseudo-complements. This was a genuine question, asked in the hope of saving time on a literature search. I wasn’t trying to trick the system: I already knew one messy proof and wanted to know where else a proof could be found, hopefully a nicer one. And this came back:

So I take a look. Every single reference is a total fantasy. None of the chapters/sections have those titles or are about anything even in the right vicinity. They are complete fabrications.

I complained to ChatGPT that it was wrong about Mac Lane and Moerdijk. It replied “I apologize for the confusion earlier. Here are more accurate references to works that cover the concept of complements and pseudo-complements in a topos, along with their proofs.” And then it served up a completely new set of fantasies, including quite different suggestions for the other two books.

Now, it is one thing for ChatGPT to be unreliable about proofs (as I’ve said before, it at least generates reams of homework problems for maths professors of the form “Is this supposed proof by ChatGPT sound? If not, explain where it goes wrong”). But being utterly unreliable about what is to be found in the data it was trained on means that any hopes of it providing even low-grade reference-chasing research assistance look entirely misplaced too.

Hopefully, this project for a very different kind of literature-search AI resource (though this one aimed at philosophers) will do a great deal better.

The post Another round with ChatGPT appeared first on Logic Matters.