ChatGPT will lead you astray — but you know that!

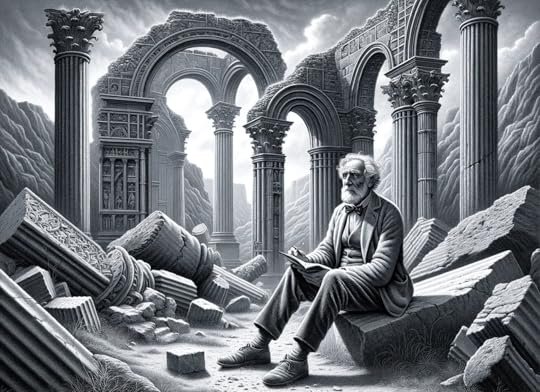

There’s no doubt that playing around with ChatGPT 4 can be fun. And the DALL·E image-generating capacities are really rather remarkable. Here I am, sitting in the ruins, as the world seems to be falling apart around me, trying to distract myself with matters categorial.

I’m mighty glad, though, that I am retired from the fray and am not having to cope with students using ChatGPT for good or ill. To be sure, it has its academic uses. For example, a few trials asking it to recommend books on various topics provided quite sensible lists (and it even had the good taste to recommend a certain intro to formal logic … and it doesn’t know who’s asking!). But as for doing your writing for you …

I might be deceiving myself, but in the Cambridge supervision system, where students have to argue about what they have written, week by week, you won’t get away with relying too much on ChatGPT to write your essays. But elsewhere, in places where one-to-one (or one-to-two) teaching time is nowhere near so generous, how will teachers negotiate the new situation? There’s an interesting, and not exactly cheering, discussion thread on Daily Nous, most relevant to philosophers.

In a different kind of usage, I did try asking ChatGPT some elementary questions in category theory. For example, it is well known that not all Xs are Ys (the details don’t matter): I had a slightly messy example, but was sure it is easy to do better. So I asked for the simplest case of an X which isn’t a Y. And got back a very nicely constructed answer, which set things up very well, explained the notions involved, and gave a supposed example that looked superficially plausible. But it was just wrong, though it took me a little while to see it. So I pressed for more detail on why the described X wasn’t a Y. And got back more superficially plausible chat, which I could well imagine taking in a weaker student.

The same thing happened again when I asked ChatGPT to fill in some details of a sketched proof of a well-known categorial result (the sort of place where one might arm-wave in a lecture, and say “we can now easily show ….”). Again, its supposed completions had just the right look and feel, but were in fact just wrong at key points.

This might be good for teachers: a whole new class of examples to use (“Here is a ChatGPT proof. Is it right? If not, where does it go wrong?”). But it is perhaps not so good for mathematics students: weaker students who aren’t suitably primed are going to end up, again and again, with flatly false beliefs about which alleged proofs really are in good order.

The post ChatGPT will lead you astray — but you know that! appeared first on Logic Matters.