It continues…

Since last November, we’ve seen a steadily increasing influx of submissions written with ChatGPT and other LLMs. In February, it increased so sharply that we were left with no alternative but to close submissions, regroup, and start investing in changes to our submission system to help deter or identify these works.

All of this nonsense has cost us time, money, and mental health. (Far more than the $1000 that Microsoft Chief Economist and Corporate Vice President Michael Schwarz sees as the threshold of harm to be reached before any regulation of generative AI should be implemented. But let’s not go down that path. It’s not like I’d trust regulation advice from the guy with his hand in the cookie jar.)

When we reopened submissions in March, I acknowledged that any solution would need to evolve to meet the countermeasures it encounters. Much like dealing with spam, credit card fraud, or malware, there are people constantly looking for ways to get around whatever blocks you can throw in their path. That pattern held.

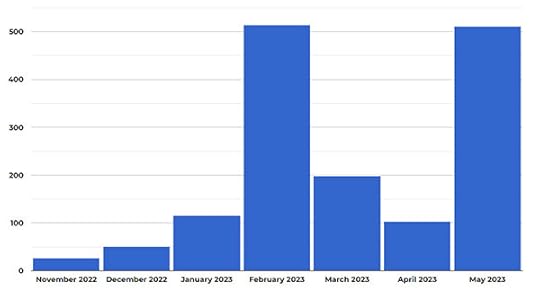

Here’s a look at how things have been since November:

This graph represents the number of “authors” we’ve had to ban. With very rare exceptions, those are people sending us machine-generated works in violation of our guidelines. All of them are aware of our policy and the consequences should they be caught. It’s right there on the submission form and they check a box acknowledging it.

Our normal workload is about 1100 legitimate submissions each month. The above numbers are in addition to that. Before anyone does the “but the quality” song-and-dance number, none of those works had any chance of publication, even if they weren’t in violation of our guidelines.

As you can see, our prevention efforts bore some fruit in March and April before being thwarted in May. So then, why aren’t we closed this time?

Honestly, I’m not ruling out the possibility. We were surprised by just how effective some basic elimination efforts were at reducing volume in March and April. Those measures were a shot in the dark, and while they are still blocking some submissions, they are not a viable option against the latest wave. We’re only keeping our heads above water this time because we have some new tools at our disposal. From the start, our primary focus has been finding a way to identify “suspicious” submissions and deprioritize them in our evaluation process (much like how your spam filter deals with potentially unwanted email). That’s working well enough to help.

I’m not going to explain what makes a submission suspicious, but I will say that it includes many indicators that go beyond the story itself. This month alone, I’ve added three more to the equation. The one thing presently missing from the equation is integration with any of the existing AI detection tools. Despite their grand claims, we’ve found them to be stunningly unreliable, primitive, significantly overpriced, and easily outwitted by even the most basic approaches. We use three or four of them for manual spot-checks, but they often contradict one another in extreme ways.

If your submission is flagged as suspicious, it isn’t the end of the world. We still manually check each of those submissions to be certain it’s been properly classified. If you’re innocent, the worst that happens is that it takes us longer to get to your story. Here are the possible outcomes:

- Yep, you deserved it. Banned.
- Hmm… not entirely sure. Reject as we would have normally, but future submissions are more likely to receive this level of scrutiny.
- Nope, innocent mistake. Reset the suspicion indicator and process as a regular submission.

If spam doesn’t get caught by the filter, the process changes to:

1. Make note of any potentially identifying features that can be worked into future detection measures.
2. Banned.

As I’ve said from the beginning, this is very much a volume problem. Since reopening, we’ve experienced an increasing number of double-workload days. Based on those trends and what we’ve learned from source-tracing submissions, it’s likely that we will experience triple- or quadruple-volume days within a year. That’s not sustainable, but each enhancement to the present model (or even a temporary submission closure) buys us more time to come up with something else, and we’re not out of ideas yet.