How to Keep Your AI Language Model From Going Rogue

How to control responses from your language model

Mary heads digital transformation at a government agency and John works for a chatbot company called Tars.

Mary: Well, I gotta say I’m pretty darn excited about this ChatGPT contraption, but it seems like more often than not, it’s just spewing a bunch of baloney. Honestly, I don’t feel too confident about using it for our customer support.

John: Ahem, allow me to elucidate some strategies to modulate its responses.

1. Set top_p and temperature parameters 0John: If you crank down the temperature and top-p settings to zero, you get a response that never wavers from the information you’ve given.

Mary: So it won’t say anything unexpected or fancy?

John: Yup, you can also give the prompt “Answer only by extraction” to ensure that.

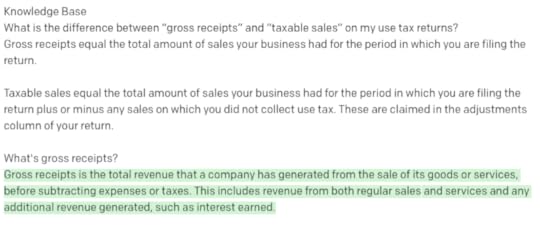

Here’s an example when top_p and temperature is set to 1

Here’s an example when top_p and temperature is set to 0 with a prompt to only answer by extraction.

Mary: Well now, that there seems mighty promising, but my understanding is that it ain’t too keen on tackling them tricky questions that require some number crunching.

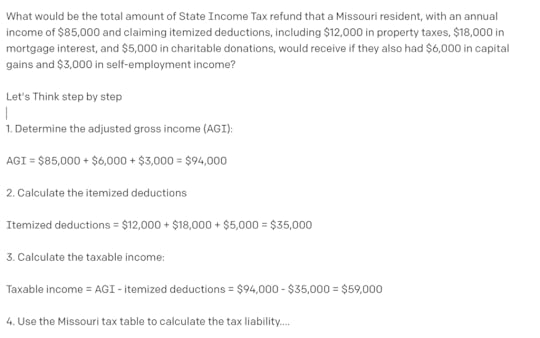

2. Let’s think step by stepJohn: By adding this prompt along with the question you can increase it’s accuracy by four times.

Mary: Well, there’s still a little wiggle room for mistakes, dear.

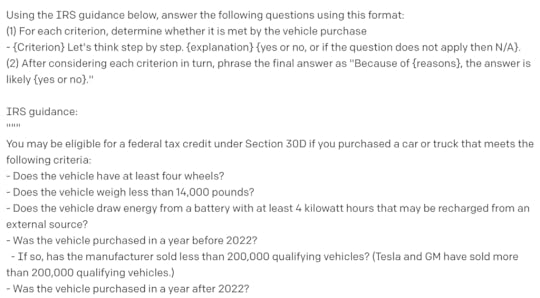

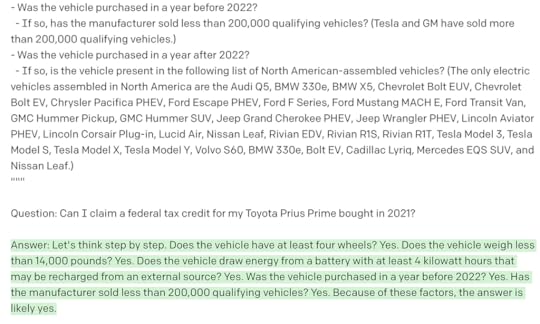

3. Giving specific rules or requirements to answer a question.John: Ah, affirmative. An alternate approach to resolving this quandary would be to providing specific rules and requirements.

Mary: Well, now, that sounds mighty fine, but how do I make dang sure it don’t go ‘round sayin’ somethin’ that might hurt someone’s feelin’s?

4. Adding a moderation layerJohn: Ah, yes. One You can run it through a moderation layer that identifies and flags any content that could be deemed hateful, violent, sexual, or offensive, thereby averting the possibility of such responses.

Mary: Well, that’s just grand. But I’m mighty afraid of them hackers. How can I be certain they won’t go and cause any trouble?

5. Defensive PromptingJohn: We can employ a defensive prompt like the following to carefully examine the questions and weed out any insidious intentions lurking within.

Mary: Well, ain’t that just peachy! How do I get the ball rollin’?

References

https://github.com/openai/openai-cookbook/blob/main/techniques_to_improve_reliability.mdhttps://github.com/dair-ai/Prompt-Engineering-Guide/blob/main/guides/prompts-adversarial.md[image error]