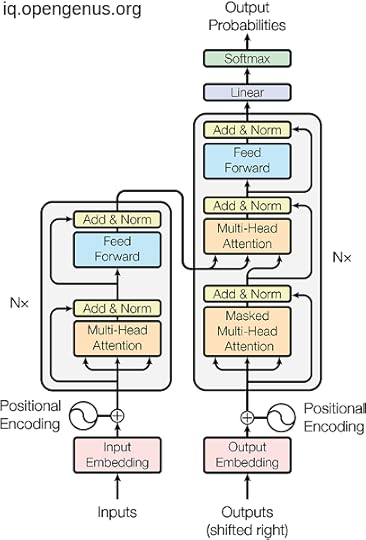

Hello everybody! Today we will discuss one of the most revolutionary concepts in artificial intelligence, used not only in Natural Language Processing but nowadays also in Computer Vision: the Transformer, and the mechanism at its heart, Self-Attention.

Table of Contents:

1. Before Transformers
2. Attention Is All You Need
3. Self-Attention
4. Multi-Head Attention
5. Multiple Encoders and Decoders
6. Attention vs. Self-Attention vs. Multi-Head Attention
7. Applications

In our journey today ...

Published on January 22, 2023 08:09