Moral Enhancement

A recent comment on my piece, “The Future of Religion,” offered a few good objections to my transhumanism. I’d like to offer some brief replies to those objections.

First, Dr. Arnold stated, “One of your assumptions seems to be that enhancing human well-being will be the fundamental driver of our technologies.” This may be true but isn’t what I’m saying.

For me, it’s the other way around. I’m saying that future technologies have the potential to greatly enhance human (and posthuman) well-being. It is easy to see how technology does this today by considering sanitation, clean water, vaccines, labor-saving devices, antibiotics, dentistry, comfortable furniture to sit and sleep on, well-designed shelter, heating and cooling, etc. Just consider living in the Middle Ages, when the life expectancy was about 25 years and, for the most part, people died miserably. So we can imagine that future technologies will enhance human flourishing even more.

Now I do agree with the professor that many technologies: 1) benefit only those who can afford them (that was true in the past, is true now, and may well be true in the future); 2) are designed only for profit (and thus may or may not benefit people); and 3) can have disastrous results (nuclear weapons are an obvious example).

Dr. Arnold also says that “technologies will be developed with broader concerns of human well-being as the fundamental driving force.” I suppose some will, some won’t, and some will be a mixed bag. For example, medical research is often driven by both profit and researchers’ sincere desire to do good. Such issues raise economic questions about the extent to which the supposed “invisible hand” (the idea that pursuing your self-interest in the market helps others) is operative.

The extent to which the common good motivates support for science and technology probably depends on the cultural milieu. In the Scandinavian countries, for instance, there is a better chance that concerns about the common good motivate scientific research and economic policy than in the USA, where those in power (especially Republicans) increasingly don’t care about the common good. (And when government does try to help its citizens, say by passing an “affordable care act,” private interests and the political parties they control work to undermine the promotion of the general welfare.)

So I agree when Dr. Arnold says, “I see little reason to be particularly hopeful that we will manage this [develop technologies aimed at increasing human flourishing unless] … we will have either (a) effective democratic control over technology developments that bend them to universalizable (or general) human needs, or (b) an enlightened core of leaders who develop them in these ways.” This insight reinforces my view that some countries are better than others in promoting the flourishing of their citizens.

I also agree with Dr. Arnold that capitalism creates desires for things we don’t need: “Our big tech companies, more than anything, seem driven by figuring out ways to market stuff to us that we don’t need.” (I actually think the issue with tech companies relates more to the data they collect, which can be used for good or ill.) Again, all this raises complicated issues about capitalism, the wealth inequality it creates, the destruction of the environment it encourages, and more.

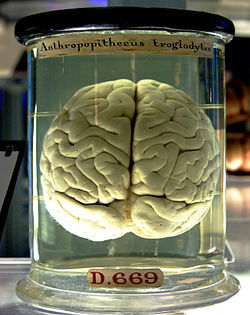

But the market is a human creation, so this again leads me back to the fundamental problem: humans have reptilian brains forged in the Pleistocene. We are so deeply flawed morally (territoriality, aggression, dominance hierarchies, etc.) and intellectually (thousands of brain bugs) that in order to avoid being destroyed (climate change, nuclear war, environmental degradation, pandemics, asteroids, etc.) we need a radical upgrade. And technologies like genetic engineering, artificial intelligence, neural implants, and nanotechnology hold the promise of upgrading our programming for the first time in human history.

Naturally, there are obvious risks involved and no risk-free way to proceed. I’m just not sure whether we can slowly become more educated and improve culture fast enough to survive. But perhaps using technology to expedite the process of reprogramming ourselves will lead to our extinction too. I just don’t know.

I’m not even sure where the weight of reason lies on this issue or if we now possess the intellectual wherewithal to know how best to proceed. Maybe reason is mostly “the slave of the passions,” because of our evolutionary history.

What I know is this—only intellectual and moral virtue will save us.

___________________________________________________________

Note: I’ll try to do more research on this topic soon. For more, see:

Unfit for the Future: The Need For Moral Enhancement (Uehiro Series In Practical Ethics) (Oxford University Press)

The Ethics of Human Enhancement: Understanding the Debate (Oxford University Press)

Brain Bugs: How the Brain’s Flaws Shape Our Lives (W. W. Norton & Company)

“Reply to commentators on Unfit for the Future” – Ingmar Persson and Julian Savulescu

“The Moral Agency Argument Against Moral Bioenhancement” – Massimo Reichlin

“Is It Desirable to Be Able to Do the Undesirable? Moral Bioenhancement and the Little Alex Problem” – Michael Hauskeller

“Why is It Hard for Us to Accept Moral Bioenhancement?: Comment on Savulescu’s Argument” – Masahiro Morioka

“Would Moral Enhancement Limit Freedom?” – Carissa Veliz