Time’s arrow, the design inference on FSCO/I and the one root of a complex world-order (–> Being, logic & first principles, 25)

On August 7th, News started a discussion on time’s arrow (which ties to the second law of thermodynamics). I found an interesting comment by FF:

FF, 4: >> It’s always frustrating to read articles on time’s arrow or time travel. In one camp, we have the Star Trek physics fanatics who believe in time travel in any direction. In the other camp, we have those who believe only in travel toward the future. But both camps are wrong. It is logically impossible for time to change at all, in any direction. We are always in the present, a continually changing present. This is easy to prove. Changing time is self-referential. Changing time (time travel) would require a velocity in time which would have to be given as v = dt/dt = 1, which is of course nonsense. That’s it. This is the only proof you need. And this is the reason that nothing can move in spacetime and why Karl Popper called spacetime, “Einstein’s block universe in which nothing happens.” . . . >>

That set me to thinking, and I responded. The connexions involved in that response in turn lead to the core framework of the design inference. Accordingly, I think this goes to logic and first principles, given the fundamental character of physics and particularly of thermodynamics and its bridge to information:

KF, 6: >>a set of puzzles.

Obviously, we inhabit what we call now, which changes in a successive, causally cumulative pattern. Thus we identify past, present (ever advancing), future. In that world, there are entities that while they may change, have a coherent identity: be-ings.

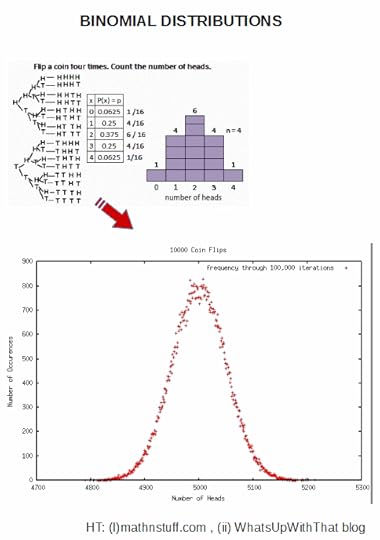

As a part of change, energy flows and does so in a way that gradually dissipates its concentrations, hence time’s arrow. This flows from the statistical properties of phase spaces for systems with large numbers of particles [typically 10^18 to 10^26 and up]. Thus we come to the overwhelming trend towards clusters of microstates with dominant statistical weight. We can typify by pondering a string of 500 to 1,000 coins and their H/T possibilities. If you want a more “physical” analogue, try a paramagnetic substance with as many elements, in a weak B-field that specifies alignment with or against its direction (W/A). We then see that configurations near 50-50 in H/T or W/A overwhelmingly dominate, in a sharply peaked binomial distribution.
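To put numbers on how sharp that peak is, here is the standard textbook result for a string of n coins. This is only a sketch: it assumes each coin is fair and independent (p = 1/2), an idealisation not spelled out above, and the symbols n, k, μ and σ are introduced here for illustration.

```latex
\[
P(k) = \binom{n}{k}\left(\tfrac{1}{2}\right)^{n}, \qquad
\mu = \frac{n}{2}, \qquad
\sigma = \frac{\sqrt{n}}{2}, \qquad
\frac{\sigma}{n} = \frac{1}{2\sqrt{n}}
\]
```

For n = 500 this gives μ = 250 heads with σ ≈ 11.2, and for n = 1,000 it gives μ = 500 with σ ≈ 15.8. The spread relative to the length of the string shrinks as 1/sqrt(n), which is why the peak tightens so markedly as the number of elements grows.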

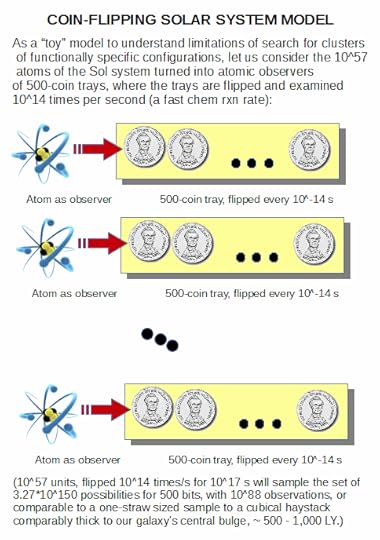

Thus, we may contemplate the 10^57 atoms of the Sol system (or the ~ 10^80 of the observed cosmos), each acting as an observer of a string of 500 coins (or, 1,000):

Thus we see the binomial distribution of coin-flipping possibilities, here based on 10,000 actual tosses iterated 100,000 times, also indicating just how tight the peak is, mostly being between 4,850 and 5,150 H:

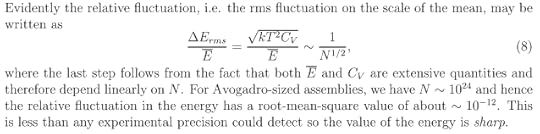

Thus we see the roots of discussions on fluctuations:

Note, not coincidentally, sqrt (10^4) = 10^2, or 100.
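The link between that square root and the 4,850 to 5,150 band can be checked directly. The following is a minimal sketch, assuming fair, independent tosses; the function name `prob_heads_between` is a hypothetical helper chosen here, not something from the original discussion. It computes the exact binomial probability with integer arithmetic rather than by simulating tosses.

```python
from math import comb, sqrt


def prob_heads_between(n: int, lo: int, hi: int) -> float:
    """Exact probability that n fair coin tosses give lo..hi heads (inclusive)."""
    # Count the favourable H/T strings, then divide by all 2**n strings.
    favourable = sum(comb(n, k) for k in range(lo, hi + 1))
    return favourable / 2**n  # big-integer true division, rounded to a float


n = 10_000
sigma = sqrt(n) / 2  # standard deviation of the head count for fair coins
p_band = prob_heads_between(n, 4_850, 5_150)

print(f"sigma = {sigma:.0f} heads, so the band is +/- {150 / sigma:.0f} sigma")
print(f"P(4850 <= H <= 5150) = {p_band:.4f}")
```

With sqrt(10^4) = 100, the standard deviation is 50 heads, so the band is three standard deviations either side of 5,000 and holds about 99.7% of the probability.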

And, if the pattern of changes is not mechanically forced and/or intelligently guided, the resulting bit patterns will overwhelmingly lack any particularly meaningful or configuration-dependent, functionally specific, information-rich organisation. Indeed, this is another way of putting the key design inference: on this sort of statistical analysis, functionally specific, complex organisation and/or associated information (FSCO/I, the relevant subset of CSI) is a highly reliable index of intelligently directed configuration as a material causal factor.

Where, too, discussion on such a binomial distribution is without loss of generality (WLOG), as any state or configuration can in principle be specified as a sufficiently long bit string, using some description language and a universal constructor to give effect. Trivially, try AutoCAD and NC instructions for machines.

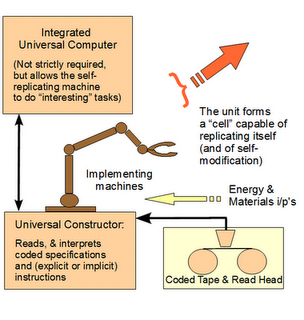

In particular, let us consider a von Neumann kinematic self replicator as both a machine with universal fabrication in principle and as a functional, information-rich highly organised component of the living cell with its own origin to be explained as the basis for reproduction in cell based life:

As a result, we see that Orgel is right:

>living organisms are distinguished by their specified complexity. Crystals are usually taken as the prototypes of simple well-specified structures, because they consist of a very large number of identical molecules packed together in a uniform way. Lumps of granite or random mixtures of polymers are examples of structures that are complex but not specified. The crystals fail to qualify as living because they lack complexity; the mixtures of polymers fail to qualify because they lack specificity . . . .

[HT, Mung, fr. p. 190 & 196:]

These vague ideas can be made more precise by introducing the idea of information. Roughly speaking, the information content of a structure is the minimum number of instructions needed to specify the structure.

[–> this is of course equivalent to the string of yes/no questions required to specify the relevant J S Wicken “wiring diagram” for the set of functional states, T, in the much larger space of possible clumped or scattered configurations, W, as Dembski would go on to define in NFL in 2002, also cf here, here and here (with here on self-moved agents as designing causes).]

One can see intuitively that many instructions are needed to specify a complex structure. [–> so if the q’s to be answered are Y/N, the chain length is an information measure that indicates complexity in bits . . . ] On the other hand a simple repeating structure can be specified in rather few instructions. [–> do once and repeat over and over in a loop . . . ] Complex but random structures, by definition, need hardly be specified at all . . . . Paley was right to emphasize the need for special explanations of the existence of objects with high information content, for they cannot be formed in nonevolutionary, inorganic processes [–> Orgel had high hopes for what Chem evo and body-plan evo could do by way of info generation beyond the FSCO/I threshold, 500 – 1,000 bits.] [The Origins of Life (John Wiley, 1973), p. 189, p. 190, p. 196.]>

Thus, we come to the heart of why the design inference on FSCO/I is so reliable: trillions of observed cases of its origin; uniformly, caused by intelligently directed configuration.

So, the design inference has underpinnings in the informational view of statistical thermodynamics, on which entropy is seen as a metric of the average missing information needed to specify the particular microstate, given only the macro-observable state. Thus, too, the degree of randomness of a particular configuration is strongly tied to the required “minimum” description length. A truly random configuration essentially has to be quoted to describe it. A highly orderly (so, repetitive) one can be specified as: unit cell, repeated n times in some array.

Information-rich descriptions are intermediate and are meaningful in some language: language is naturally somewhat compressible but is not reducible to repeating unit cells.
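The three cases (orderly, information-rich, random) can be illustrated with a general-purpose compressor. This is only a rough sketch: true minimum description length is not computable, so the `zlib` compressed size is used here as a crude upper-bound proxy, and the sample strings, the seed and the helper name `compression_ratio` are all assumptions chosen for illustration.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size over original size; lower means more compressible."""
    return len(zlib.compress(data, 9)) / len(data)


# Information-rich case: ordinary English prose (adapted from the Orgel quote).
text = (
    "Roughly speaking, the information content of a structure is the "
    "minimum number of instructions needed to specify the structure. "
    "One can see intuitively that many instructions are needed to specify "
    "a complex structure. On the other hand a simple repeating structure "
    "can be specified in rather few instructions."
).encode("ascii")
n = len(text)

# Orderly case: one unit cell ("HT") repeated to the same length.
ordered = (b"HT" * n)[:n]

# Random case: pseudo-random bytes from a fixed seed, same length.
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(n))

ratios = {
    "ordered": compression_ratio(ordered),
    "text": compression_ratio(text),
    "random": compression_ratio(noise),
}
for label, ratio in ratios.items():
    print(f"{label:8s} compressed to {ratio:.2f} of original size")
```

On this proxy the repeated unit cell shrinks to a small fraction of its length, the prose compresses only partially, and the random bytes do not compress at all, which matches the ordering described above.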

Consequently, the design inference on FSCO/I is robust.

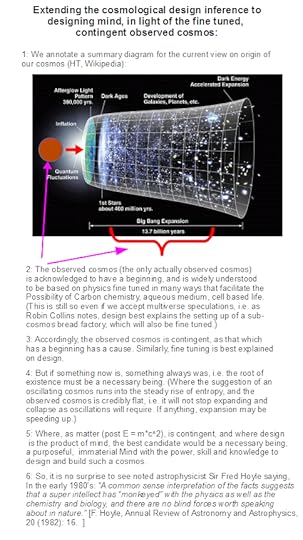

We also recognise a space-time matrix and presence of fields so that there is a context of interactions of beings in the common world.

However, simultaneity turns out to be an observer-relative phenomenon, and energy and information face a speed limit, c. Further, massive bodies of planetary, stellar or galactic scale sufficiently warp the space-time fabric to have material effects. Along the way, the micro scale is quantised, leading also to a world of virtual particles below the Einstein energy-time uncertainty threshold that shapes field interactions and may have macro effects up to the level of cosmic inflation and even bubbles expanding into sub-cosmi.

There are also issues of rationally free mind rising above what computing substrates can do as dynamic-stochastic systems, interacting with brains through quantum influences.

Reality is complex yet somehow unified: the first metaphysical problem contemplated as a philosophical general issue in our civilisation, the one and the many.

And that in turn points to the importance of the world-root that springs up into the causal-temporal, successive, cumulative order that we inhabit. >>

Walker and Davies add further insights:

In physics, particularly in statistical mechanics, we base many of our calculations on the assumption of metric transitivity, which asserts that a system’s trajectory will eventually [–> given “enough time and search resources”] explore the entirety of its state space – thus everything that is physically possible will eventually happen. It should then be trivially true that one could choose an arbitrary “final state” (e.g., a living organism) and “explain” it by evolving the system backwards in time choosing an appropriate state at some ’start’ time t_0 (fine-tuning the initial state). In the case of a chaotic system the initial state must be specified to arbitrarily high precision. But this account amounts to no more than saying that the world is as it is because it was as it was, and our current narrative therefore scarcely constitutes an explanation in the true scientific sense.

We are left in a bit of a conundrum with respect to the problem of specifying the initial conditions necessary to explain our world. A key point is that if we require specialness in our initial state (such that we observe the current state of the world and not any other state) metric transitivity cannot hold true, as it blurs any dependency on initial conditions – that is, it makes little sense for us to single out any particular state as special by calling it the ’initial’ state. If we instead relax the assumption of metric transitivity (which seems more realistic for many real world physical systems – including life), then our phase space will consist of isolated pocket regions and it is not necessarily possible to get to any other physically possible state (see e.g. Fig. 1 for a cellular automata example).

[–> or, there may not be “enough” time and/or resources for the relevant exploration, i.e. we see the 500 – 1,000 bit complexity threshold at work vs 10^57 – 10^80 atoms with fast rxn rates at about 10^-13 to 10^-15 s leading to inability to explore more than a vanishingly small fraction on the gamut of Sol system or observed cosmos . . . the only actually, credibly observed cosmos]

Thus the initial state must be tuned to be in the region of phase space in which we find ourselves [–> notice, fine tuning], and there are regions of the configuration space our physical universe would be excluded from accessing, even if those states may be equally consistent and permissible under the microscopic laws of physics (starting from a different initial state). Thus according to the standard picture, we require special initial conditions to explain the complexity of the world, but also have a sense that we should not be on a particularly special trajectory to get here (or anywhere else) as it would be a sign of fine–tuning of the initial conditions. [ –> notice, the “loading”] Stated most simply, a potential problem with the way we currently formulate physics is that you can’t necessarily get everywhere from anywhere (see Walker [31] for discussion). [“The “Hard Problem” of Life,” June 23, 2016, a discussion by Sara Imari Walker and Paul C.W. Davies at Arxiv.]
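The search-resources point in the bracketed note above can be put as rough arithmetic. This is a back-of-envelope sketch under loudly stated assumptions: one observation per atom every 10^-14 s (the middle of the 10^-13 to 10^-15 s range quoted), a run time of about 10^17 s (an assumed order of magnitude, not a figure from the text), and one fresh configuration sampled per observation. The function name `log10_fraction_explored` is a hypothetical helper.

```python
from math import log10


def log10_fraction_explored(
    log10_atoms: float, log10_rate_hz: float, log10_seconds: float, bits: int
) -> float:
    """log10 of (configurations sampled) / (configurations possible for `bits` bits)."""
    # Samples = atoms * observations per second * seconds (all as powers of ten).
    log10_samples = log10_atoms + log10_rate_hz + log10_seconds
    # A string of `bits` yes/no choices has 2**bits possible configurations.
    log10_space = bits * log10(2)
    return log10_samples - log10_space


# Sol system: ~10^57 atoms against the 500-bit threshold.
sol = log10_fraction_explored(57, 14, 17, 500)
# Observed cosmos: ~10^80 atoms against the 1,000-bit threshold.
cosmos = log10_fraction_explored(80, 14, 17, 1000)

print(f"Sol system, 500 bits: fraction explored ~ 10^{sol:.1f}")
print(f"Cosmos, 1,000 bits:   fraction explored ~ 10^{cosmos:.1f}")
```

On these assumptions the sampled fraction falls below 10^-62 for the Sol system at 500 bits and below 10^-190 for the observed cosmos at 1,000 bits, which is the sense in which only a vanishingly small fraction of the configuration space can be explored.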

Again, food for thought. END

PS: I followed up by searching and commented at 2 below. On reflection what is there is so pivotal that I append it as integral to the OP. And no, I do not have to endorse or even like the corpus of a thinker’s work in order to acknowledge where s/he has made a truly striking, powerfully penetrating point. We are invited to not despise “prophesyings,” but to test all things and hold on to the good. In that spirit:

KF, 2 below: >> . . . I find an interesting discussion on the one and the many:

One of the most basic and continuing problems of man’s history is the question of the one and the many and their relationship. The fact that in recent years men have avoided discussion of this matter has not ceased to make their unstated presuppositions with respect to it determinative of their thinking.

Much of the present concern about the trends of these times is literally wasted on useless effort because those who guide the activities cannot resolve, with the philosophical tools at hand to them, the problem of authority. This is at the heart of the problem of the proper function of government, the power to tax, to conscript, to execute for crimes, and to wage warfare. The question of authority is again basic to education, to religion, and to the family. Where does authority rest, in democracy or in an elite, in the church or in some secular institution, in God or in reason? . . . The plea that this is a pluralistic culture is merely recognition of the problem – not an answer. The problem of authority is not answerable to reason alone, and basic to reason itself are pre-theoretical suppositions or axioms which represent essentially religious commitments. And one such basic commitment is with respect to the question of the one and the many. The fact that students can graduate from our universities as philosophy majors without any awareness of the importance or centrality of this question does not make the one and the many less basic to our thinking. The difference between East and West, and between various aspects of Western history and culture, rests on answers to this problem which, whether consciously or unconsciously, have been made. Whether recognized or not, every argument and every theological, and philosophical, political, or any other exposition is based on a presupposition about man, God, and society – about reality. This presupposition rules and determines the conclusion; the effect is the result of a cause. And one such basic presupposition is with reference to the one and the many.

[ By R. J. Rushdoony April 24, 2017 Adapted from The One and the Many: Studies in the Philosophy of Order and Ultimacy ]

The one and the many, unity in coherent (and hopefully intelligible) diversity as a cosmos, is absolutely pivotal. That includes the force of the design inference in light of time’s arrow, but also onward issues of the root of reality, the necessary and independent core from which all the diversity we see springs.

So, this question we must tackle, tackle in a fundamental way.>>

Copyright © 2019 Uncommon Descent . This Feed is for personal non-commercial use only. If you are not reading this material in your news aggregator, the site you are looking at is guilty of copyright infringement UNLESS EXPLICIT PERMISSION OTHERWISE HAS BEEN GIVEN. Please contact legal@uncommondescent.com so we can take legal action immediately.
