Glenn Goodrich's Blog

December 19, 2011

KYCKing My Career in the Pants

Ruprict.net has been dormant for months now, mostly because I’ve started to shift my career into a much more Ruby-colored light. In the last few months, besides really focusing all my spare nerd time on Ruby, I became the Managing Editor of Rubysource.com. As a result, all my technical blogging has funneled into that site. It is a ton of fun and the more I submerge into Ruby and its community, the more I enjoy it.

For this reason and others, I started looking for a job a few months back as well. The job search was almost exclusively Ruby jobs, which was a risk since I’ve never done Ruby professionally. However, I did land a couple of offers, which weren’t right for various reasons. This proved that I could get a job in Ruby land, something I was unsure of when I began the search.

Some weeks back, I met a guy named Les Porter at a local Ruby development meetup. Les had pasted a sign on his shirt that said he was looking for Charlotte-based Rubyists for a startup called KYCK. KYCK is “the global soccer conversation”, focusing on the social, rich media, and e-commerce aspects around the world’s most popular sport. I talked to Les a couple of times, just to see what he was offering, and we made an appointment for me to come down and see their facility.

Being honest, I did not really think the KYCK thing would pan out for me. I have never been in the startup life, always playing it “safe” with somewhat established companies. The more I talked to Les, however, the more excited I became.

KYCK offers me a chance to help lead a team and build something from the ground up. It offers me a chance to focus on the technologies I love and really grow all of my skills. There will be major sacrifices in the short term, for sure. The more I thought about the potential upside, the more I realized I had to take the risk. It’s time to put my money where my mouth is and really do something. As you might have guessed, I took the job.

I start in the new year, and I am excited and terrified and anxious and a million other things. Right now, every minute of every day I am thinking about how great it could be and how much I have to learn. I think if I wasn’t scared, then I’d be pursuing the wrong path.

So, I’ll be stepping out of the ESRI world for a bit. I do love this community, so I part with the proverbial “sweet sorrow”. It has been one of the privileges of my career to watch the community around GIS and ESRI grow, while considering myself a very small part of that.

KYCK goes to public beta on January 15th, 2012. Stop by and see the site in your spare time, and if you are a soccer-head, keep KYCK on your radar and register when you can.

I wish the GIS/ESRI community nothing but success, knowing it will continue to thrive in amazing ways. I hope the community wishes me luck, as well. It is going to be a crazy ride.

Filed under: Uncategorized

March 16, 2011

Using RobotLegs to Create a Widget for the ArcGIS Viewer for Flex

I submitted two talks to last week’s ESRI Developers Summit, one of which was not selected. This made me indignant enough to write a blog post explaining the shunned presentation, in the hopes that all the people that did not vote for it will slap their foreheads and pray for the invention of time travel. Or, maybe I just want to dialog on using Robotlegs with the Flex Viewer.

In the Advanced Flex presentation that Bjorn and Mansour gave at DevSummit, Mansour extolled the use of frameworks. Paraphrasing, he said “Find a framework and stick with it.” By “framework”, he meant something like PureMVC, Cairngorm (3, mind you, not 2), or Robotlegs. I agree with the sentiment, wholly, although I am also a bit baffled as to why the Flex Viewer didn’t use one. My guess is that they will claim they have their own framework, which is certainly true, but it flies in the face of what Mansour spoke of in his presentation. I raised my hand to ask this question, but (thankfully) time ran out on the questions and I was not selected. It is probably just as well, as I don’t want to get on Mansour’s or Bjorn’s bad side. They’ve both been very helpful to me in the past, and I doubt I am anywhere close to no longer needing their knowledge.

So, in this blog post, I am going to create a Flex Viewer Widget with Robotlegs. First, though, I’ll explain a couple of things. The reasons I like using Robotlegs (or any well-supported framework) are myriad. It makes my widget much more testable, meaning I can more easily write unit tests to exercise the widget. This allows me to design the widget in a test-driven fashion, which is a good thing. I wonder why the Flex Viewer source doesn’t include any unit tests. Again, it’s not like ESRI is promoting bad habits, but they aren’t promoting good ones either. Also, Robotlegs is known outside the ArcGIS world, so future Flex developers on my project who know Robotlegs will be able to get productive more quickly. Granted, they’ll still have to learn about the ArcGIS API for Flex as well as the widget-based approach taken by the Flex Viewer, but the patterns Robotlegs uses bring context to this learning. That’s my story, and I am sticking to it.

So, why Robotlegs? Because I like it. It’s got a great community and it makes a ton of sense. Read on to see what I mean.

OK, let’s get to the code.

The Scenario

The widget we are building today is a Geolocation Widget (final product here). Its function is to figure out where the user is and store that location in the application, allowing the user to zoom to their location. It will rely on javascript to do the IP Location search, which we won’t get into too much. Suffice it to say that we’re using the HTML 5 Geolocation API for this, with a fallback.

Now, I don’t really want this post to be an intro to Robotlegs. There are plenty of those, including a very recent one by Joel Hooks (an RL founding member and crazy-smart guy). Read through those posts (as of this post, there are 3, with more on the way). They should give you enough background to understand what is going on here. Go ahead, I’ll wait.

Back? Confused? Robotlegs takes a bit to comprehend (not even sure if I totally get it, ahem). So, here are the things you’ll need to go through this in your own Flex development environment:

The ArcGIS API for Flex (I am using 2.2)

Robotlegs (using 1.4 here)

FlexUnit (4.0.0.2) and related swcs

AsMock (1.0) with the FlexUnit integration swc

Robotlegs Modular Utilities (github). Stray (who is brilliant) and Mr. Hooks have written a modular utility for RL, and since our Flex Viewer is modular, we are using it.

For simplicity, just grab the git repository here, which has all this stuff set up for you. Don’t get too hung up on the number of libraries and third party code. This is how separation of concerns looks, leveraging the best library for a specific function.

The ‘Main’ Context

For those of you that did go read the Robotlegs introductory articles, you know that we have Contexts, Views, Mediators, Commands, and Services. The context is the first thing we need to sort out, and there are a couple of caveats to our particular situation. First, the Flex Viewer is not a RL application and, therefore, it does not have a context. However, we need one, even using the Modular Utility. In a nutshell, the Application Context is there as a parent context, and will supply the injector to the module contexts (unless you create one explicitly). So, the first thing to do is create the main context:

    import com.esri.viewer.BaseWidget;
    import flash.display.DisplayObjectContainer;
    import flash.system.ApplicationDomain;
    import org.robotlegs.core.IInjector;
    import org.robotlegs.utilities.modular.mvcs.ModuleContext;

    public class ApplicationContext extends ModuleContext {
        public override function startup():void {
            // Register BaseWidget so Robotlegs can inject into any widget
            viewMap.mapType(BaseWidget);
        }
    }

In the main application context, we register the BaseWidget class, which will allow Robotlegs to inject any widget going forward. This makes using Robotlegs a bit more involved than just sticking with the “core” Flex Viewer widget approach, but it’s a small price to pay, in my opinion.

The View

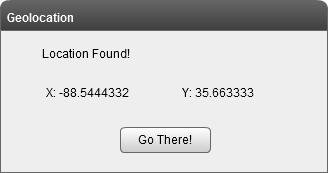

Since functionality drives the widget, next is the view. In this scenario. we want the geolocation to be loaded when the widget is first opened. Once it is found, the location should be displayed and the user can pan to that location as desired. In my mind’s eye, I see a couple of labels and a button. Also, we have 3 states: Searching for location, location found, and location not found. I see this:

We have a feedback area, the location is displayed, and the user can pan whenever they like with the “Go there!” button. Not gonna win any awards, but it gets the job done. From looking at our UI, there are a couple of takeaways: First, the location is loaded automatically, which means it happens when the user opens the widget. Second, we can start to think about our states and what is visible for each state. Finally, something has to handle the user pressing that button. In RL, it’s the job of the Mediator to handle view events, so this is what ours looks like:

    public class GeolocationWidgetMediator extends ModuleMediator
    {
        [Inject]
        public var location:IGeolocation;

        [Inject]
        public var widget:GeolocationWidget;

        override public function onRegister():void {
            eventMap.mapListener(widget.btnGoThere, MouseEvent.CLICK, handleGoThere);
            //Could also use this, but then you have to figure out the target.
            //this.addViewListener(MouseEvent.CLICK, handleGoThere);
            this.addContextListener(GeolocationEvent.LOCATION_FOUND, handleLocationFound);
            widget.currentState = GeolocationWidget.STATE_SEARCHING_FOR_LOCATION;
            getLocation();
        }

        private function handleGoThere(event:MouseEvent):void {
            var point:MapPoint = com.esri.ags.utils.WebMercatorUtil.geographicToWebMercator(new MapPoint(location.x, location.y)) as MapPoint;
            widget.map.centerAt(point);
            addGraphic(point);
        }

        private function getLocation():void {
            //Find yourself
            dispatch(new GeolocationEvent(GeolocationEvent.GET_LOCATION));
        }
    }
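
A side note on the coordinate conversion in handleGoThere(): the widget relies on the API's WebMercatorUtil, whose source is not shown here. The sketch below only illustrates the standard spherical Web Mercator formula that such a conversion is generally based on. It is written in javascript for easy experimentation in a console, and the function is a stand-in written for this example, not the ESRI implementation.

```javascript
// Illustration only: standard spherical Web Mercator math, not ESRI's code.
// Radius of the sphere used by Web Mercator, in meters.
const EARTH_RADIUS = 6378137;

// Convert longitude/latitude in degrees to Web Mercator meters.
function geographicToWebMercator(lon, lat) {
  const lonRad = lon * Math.PI / 180;
  const latRad = lat * Math.PI / 180;
  return {
    x: EARTH_RADIUS * lonRad,
    y: EARTH_RADIUS * Math.log(Math.tan(Math.PI / 4 + latRad / 2))
  };
}

// Roughly Charlotte, NC: x is longitude and y is latitude, as in the mediator.
const charlotte = geographicToWebMercator(-80.84, 35.22);
console.log(charlotte.x, charlotte.y);
```

This is why the mediator converts before calling centerAt(): the location arrives in degrees, while a Web Mercator basemap expects meters.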

When the Mediator is registered, we map the button click event to handleGoThere(), which simply centers the map on our location. But wait, where did that location come from?

The Model

The location object is our Model and is of type IGeolocation (I used an interface for the model here, and now I kinda wish I hadn’t…oh well). We know it needs x/y coordinates, so we’ll make a model object that fulfills our requirement:

    public class Geolocation implements IGeolocation
    {
        private var _x:Number;
        private var _y:Number;

        public function get x():Number {
            return _x;
        }

        public function get y():Number {
            return _y;
        }

        public function Geolocation(x:Number=0.00, y:Number=0.00)
        {
            _x = x;
            _y = y;
        }
    }

There, now we have a model that is injected into our mediator. The model is a singleton (not a Singleton, it’s managed by RL) so that anything that cares about changes to the model can just have it injected and always be up to date. MMMM….that feels good. When we look at our module context, you’ll see how to make the model a singleton (we do it with services too!). For now, we’re still talking about the mediator. The getLocation() method fires off a GeolocationEvent, which we need to ‘splain.

Events

The Mediator takes view/framework events and translates them into Business Events. In this case, the loading of the widget is translated into a go-get-our-geolocation event, which we call GeolocationEvent.GET_LOCATION.

    public class GeolocationEvent extends Event
    {
        public static const LOCATION_FOUND:String = "locationFound";
        public static const LOCATION_NOT_FOUND:String = "locationNotFound";
        public static const GET_LOCATION:String = "getGeolocation";

        public var location:IGeolocation;

        public function GeolocationEvent(type:String)
        {
            super(type);
        }

        override public function clone():Event {
            // Copy the payload too, so a re-dispatched event keeps its location
            var copy:GeolocationEvent = new GeolocationEvent(type);
            copy.location = location;
            return copy;
        }
    }

Nothing special about events in RL, which is GOOD. Our GeolocationEvent has a payload of our location model, and it handles a few event types. We know when getGeolocation happens; the other two events are in response to finding our geolocation. We’ll talk a bit about those when we get to the service. For now, something has to respond to our GeolocationEvent.GET_LOCATION.

Commands

In RL, you handle business events with Commands. Commands are responsible for answering the question raised by the event, either by going outside the application (when they talk to services) or by some other means. In our case, we’ll make a GetGeolocationCommand that calls some service to get our geolocation.

    public class GetGeolocationCommand extends Command
    {
        [Inject]
        public var service:IGeolocationService;

        [Inject]
        public var event:GeolocationEvent;

        override public function execute():void {
            service.getGeolocation();
        }
    }

About the simplest command you can possibly have. Also, note how RL is helping us flesh out our needs while guiding us in using best practices. What have you done for RL lately? Yet it still guides you on the path of righteousness. Let’s discuss that IGeolocationService.

Services

The ‘S’ in MVCS. Services are our gateway to what lies beyond. When we need to go outside the app for data, we ask a service to do that. In this case, our service is going to ask the HTML5 Geolocation API for our current location. This means, basically, that we call out using ExternalInterface and define a couple of callbacks so we can handle whatever the service returns.

    public class GeolocationService extends Actor implements IGeolocationService
    {
        public function GeolocationService()
        {
            // Expose the two callbacks that the javascript side will invoke
            ExternalInterface.addCallback("handleLocationFound", handleLocationFound);
            ExternalInterface.addCallback("handleLocationNotFound", handleLocationNotFound);
        }

        public function getGeolocation():void
        {
            ExternalInterface.call("esiGeo.getGeolocation");
        }

        public function handleLocationFound(x:Number, y:Number):void {
            var location:Geolocation = new Geolocation(x, y);
            var event:GeolocationEvent = new GeolocationEvent(GeolocationEvent.LOCATION_FOUND);
            event.location = location;
            dispatch(event);
        }

        public function handleLocationNotFound():void {
            var event:GeolocationEvent = new GeolocationEvent(GeolocationEvent.LOCATION_NOT_FOUND);
            dispatch(event);
        }
    }

Notice that our service extends the Actor class, which is an RL class that (basically) gives us access to EventDispatcher. The service will fire events (as you see above) when it gets back results from the beyond.
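
The javascript on the other side of ExternalInterface is not shown in this post, so here is a minimal sketch of what it might look like. Only the names esiGeo.getGeolocation, handleLocationFound, and handleLocationNotFound come from the service above. The createEsiGeo factory, its parameters, and the element id in the final comment are assumptions made for illustration, and the fallback mentioned earlier is reduced to reporting "not found".

```javascript
// Hypothetical browser-side counterpart of GeolocationService.
// swf: the embedded Flex Viewer object, which exposes the callbacks
//      registered with ExternalInterface.addCallback.
// geo: navigator.geolocation in a browser, or a stub when testing.
function createEsiGeo(swf, geo) {
  return {
    getGeolocation: function () {
      if (!geo || typeof geo.getCurrentPosition !== "function") {
        // No HTML5 Geolocation support. The real fallback is not shown
        // in the post, so this sketch simply reports failure.
        swf.handleLocationNotFound();
        return;
      }
      geo.getCurrentPosition(
        function (position) {
          // x is longitude and y is latitude, matching the Geolocation model
          swf.handleLocationFound(
            position.coords.longitude,
            position.coords.latitude
          );
        },
        function () {
          // Permission denied, timeout, or position unavailable
          swf.handleLocationNotFound();
        }
      );
    }
  };
}

// In the hosting page, something like this (the element id is an assumption):
// window.esiGeo = createEsiGeo(document.getElementById("viewer"), navigator.geolocation);
```

Passing the SWF and the geolocation provider in as arguments keeps the bridge testable without a browser, in the same spirit as injecting the service into the command.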

Something has to handle the events thrown by the service. We want these results to show up in our view, which means, we want these results to change our model. Back to the Mediator.

The Circle of RL

Back in the GeolocationWidgetMediator, we can subscribe to the events raised by the service. As a side note, you really have a couple of options here. If your data is more complex than an x/y pair, you’ll likely want to parse it before it gets to the mediator. At this point, I would recommend you look at Joel’s posts and, for something super cool and useful, the Robotlegs Oil extensions. I will likely blog about Oil in the future. You could also create a presentation model, inject it into the view, and data bind to the model objects on the PM. I am doing it the simplest way because my scenario is simple. Anyway, back to the mediator and handling the events raised by the service. You subscribe to context events in the same onRegister() function where you subscribe to view events.

    override public function onRegister():void {
        eventMap.mapListener(widget.btnGoThere, MouseEvent.CLICK, handleGoThere);
        //Could also use this, but then you have to figure out the target.
        //this.addViewListener(MouseEvent.CLICK, handleGoThere);
        this.addContextListener(GeolocationEvent.LOCATION_FOUND, handleLocationFound);
        this.addContextListener(GeolocationEvent.LOCATION_NOT_FOUND, handleLocationNotFound);
        widget.currentState = GeolocationWidget.STATE_SEARCHING_FOR_LOCATION;
        getLocation();
    }

    public function handleLocationNotFound(event:GeolocationEvent):void {
        widget.currentState = GeolocationWidget.STATE_LOCATION_NOT_FOUND;
    }

We registered handleLocationFound() above; here I added the handling of the not found scenario. All it does is set the state to the “not found” state, which hides buttons/labels/whatever.

The Context

As I mentioned earlier, the module needs its own module context. The context’s job is to wire everything up. Our mediator is mapped to its view, events are mapped to commands, and the services are registered. Also, the items that will be injected into the various players (like the model and the service) are specified.

    public class GeolocationWidgetContext extends ModuleContext
    {
        public function GeolocationWidgetContext(contextView:DisplayObjectContainer, injector:IInjector) {
            super(contextView, true, injector, ApplicationDomain.currentDomain);
        }

        override public function startup():void {
            //Singletons
            injector.mapSingletonOf(IGeolocationService, GeolocationService);
            injector.mapSingletonOf(IGeolocation, Geolocation);

            //Mediators
            mediatorMap.mapView(GeolocationWidget, GeolocationWidgetMediator);

            //Commands
            commandMap.mapEvent(GeolocationEvent.GET_LOCATION, GetGeolocationCommand, GeolocationEvent);
            commandMap.mapEvent(GeolocationEvent.LOCATION_FOUND, FindPolygonCommand, GeolocationEvent);
        }
    }

Our context extends ModuleContext, which is supplied by the Robotlegs Modular Utilities. The ModuleContext creates a ModuleEventDispatcher and ModuleCommandMap, and is basically (as Joel states here) just a convenience mechanism. Since we are in a module, there is one last little item we have to do to make this all come together. Our module should (read: needs to) implement the org.robotlegs.utilities.modular.core.IModule interface. This defines two functions (a setter for parentInjector and a dispose() method), which ensure that the API to initialize the module and RL is in place. So, in the script of the MXML, you have:

    [Inject]
    public function set parentInjector(value:IInjector):void {
        context = new GeolocationWidgetContext(this, value);
    }

    //Cleanup the context
    public function dispose():void {
        context.dispose();
        context = null;
    }

The set parentInjector allows us to create a child injector as well as use mappings from the main context. Read Joel’s post on Modular stuff for more detail. The dispose() function is just good practice, allowing you to free up anything you need to free up. The mapSingletonOf calls are how you tell RL to just make one of these things. Above, the Geolocation model object is made a singleton, so the mediator and the command get the same copy. In a more complex widget, you could data bind to that bad boy and anything that changes it shows up in the view without any code. That…howyousay?…rocks!
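
To make the "just make one of these things" idea concrete, here is a toy injector in javascript. It is not Robotlegs and does not reflect how RL is implemented. TinyInjector and its methods are invented for this sketch, and the string key stands in for the interface that RL would use.

```javascript
// Toy injector, NOT Robotlegs: it only illustrates what mapping a singleton means.
class TinyInjector {
  constructor() {
    this.providers = new Map();
  }

  // Every request for `key` returns the same lazily created instance.
  mapSingletonOf(key, Implementation) {
    let instance = null;
    this.providers.set(key, function () {
      if (instance === null) {
        instance = new Implementation();
      }
      return instance;
    });
  }

  getInstance(key) {
    const provider = this.providers.get(key);
    if (!provider) {
      throw new Error("No mapping for " + key);
    }
    return provider();
  }
}

// Stand-in for the Geolocation model from the post.
class Geolocation {
  constructor() {
    this.x = 0;
    this.y = 0;
  }
}

const injector = new TinyInjector();
injector.mapSingletonOf("IGeolocation", Geolocation);

const forMediator = injector.getInstance("IGeolocation");
const forCommand = injector.getInstance("IGeolocation");
forCommand.x = -80.84; // a change made through one reference...
console.log(forMediator === forCommand, forMediator.x); // ...is visible through the other
```

Because both consumers hold the same object, nothing has to copy state between them, which is the property the mediator and the command rely on.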

That really covers the meat of creating a Flex Widget for the ArcGIS Viewer for Flex using Robotlegs. As I mentioned, this was submitted to the Flex-a-Widget challenge at the Developers Summit and did not place. The winners (which, to be honest, I voted for) did things like display Street View and Bing 3D and windows into your friggin’ soul in the Flex Viewer, so you can see why this simple-to-the-point-of-being-useless widget did not place. Still, the guts of this widget are pretty sexy, and now hopefully you can build your own soul-displaying widgets using Robotlegs.

The code on github has a few items that I didn’t think pertinent to this blog, like unit tests (which are VERY important and a BIG reason why using something like RL is crucial). Also, the source has another sequence that takes the point and finds the county where the user currently is, just for fun. I hope you found this useful. If I messed anything up or got something wrong, please let me know in the comments. Much of the reason I do blog posts like this one is to confirm that things are what I think they are. I have (frequently) been wrong before, so correct me if you see an error.

Filed under: ArcDeveloper, Flex

March 11, 2011

ESRI DevSummit 2011: Daz(zl)ed and Confused

As I am flying back to Charlotte from another ESRI DevSummit, my head is awash in a storm of possibility, hope, and worry. This conference is head-and-shoulders above any other conference on my radar in relevance and utility to my career. Every year I leave amazed at how far ESRI has come with its product suite, particularly on the server-side, and every year I feel overwhelmed at what I don’t know and what I am not doing. The ESRI developer community has exploded from a collection of people that couldn’t install Tomcat to a sophisticated, intelligent set of technical ninjas. Watching this change from my perspective has been interesting and extremely humbling, as sometimes I feel like I am a part of something great, and sometimes I feel like I am falling behind. All things considered, though, it is a great time to be a GIS and ESRI developer.

Reviewing the content of the conference, as I am prone to do each year, I would have to say the overarching theme was mobile, mobile, and more mobile. Of course, I live on the server, so I didn’t give any desktop sessions a second glance. I focused mostly on the Flex and Javascript APIs, as I simply don’t have room in my arsenal for another web API (sorry, Silverlight; I will say that the demo of the week was probably the Kinect map demo given by @SharpGIS). The Flex sessions are always great, especially if Mansour Raad is presenting. His energy and knowledge truly make him unique among presenters. He showed off Flex “Burrito”, which is the code name for the preview release of the next Flex IDE. Burrito allows a developer to target Blackberry, Android, and (wait for it…) iPhone devices with Flex. Mansour showed off a few apps running on Android and iOS, which is crazy if you think where Flash on the iPhone was only a year ago. The maturation of the REST API led to demonstrations of more sophisticated editing scenarios on the web, making me wonder if the future of ArcMap isn’t the Flex Viewer (or other API “viewers”). The javascript team showed similar demos, focusing on iOS (both iPad and iPhone) with HTML 5 goodness. I really thought the drag-and-drop demonstration, which showed a CSV of points being dropped on a web page and added to the map, as well as a map service URL being dropped onto the map to add it to the map content, was particularly impressive. There is a part of me that really thinks HTML 5 is the end game for the web, even if Flex can still do some things that HTML cannot.

One of the items that Mansour and the Flex team showed was a pre-release version of the Flex Viewer Application Builder. The Builder allows a user to, basically, point and click their way to a Flex Viewer, choosing basemaps, operational layers, and tools/widgets. The goal is to allow them to avoid having to edit XML and all the pain that comes with doing that. Being honest, I am not all that excited about such a tool. Also, I am not sure that showing a “no-need-for-a-developer” tool at a developers summit is really playing well to the demographic. My guess is that Silverlight and javascript will follow suit, and we’ll have many clients feeling they can cut out development shops and live with what ESRI has created. Expanding on this theme a bit, the very existence of a *supported* viewer from the vendor could be problematic as well. In my opinion, this can potentially kill innovation around the web APIs. Why, as a client trying to save money, would I choose to create a custom web mapping application, when the vendor has a viewer that is supported? The answer is, in most cases, I wouldn’t. So, as ESRI developers, we will be mostly relegated to viewer configuration or, if we are lucky, custom widget creation. Plus, the client base of the APIs becomes 99% ESRI supported viewer and 1% demos that no one would really use. I talked to a few developers about this and opinions varied from what I expressed above to “nah, it’ll be OK, there will always be enough GIS development work.” I am not sure what the answer is, as I understand why ESRI created the viewers. They are doing what they feel is best for their clients. I can’t really fault them for that. However, I think every year ESRI eats a little bit more into the realm of their business partners, and the effect of that will be seen with ESRI partners having to move to other business or failing outright.

There, enough with the gloom-and-doom. The mood around the conference was overwhelmingly positive, if not sycophantic. Coming back, my fellow devs and I are discussing how we are going to get more mobile, more cloudy, and more better. Despite my aforementioned worries, I am recharged as in previous years. If you didn’t attend the conference, you should watch some of the sessions. Almost all the ones I attended were excellent. This includes the user presentations, which I’ll review now.

Starting at the bottom of the user presentation barrel, my talk on using jQuery to create a legend for the ArcGIS Server javascript API was OK. I made some pretty bad slide choices (code on a black background is not the way to go) and I had a couple of clumsy holy-crap-he-just-hit-the-microphone-and-blew-out-my-eardrums moments. On the positive side, a couple of folks came up afterwards and talked to me about using what I’d done, which is always reassuring. On the super-fantastic-presentation end of the users, you had the usual suspects for the most part. The DTS Agile crew (@dbouwman and @bnoyle) gave near perfect presentations on HTML 5, the cloud, and Flex pixel bending. Kirk van Gork (@kvangork) may have stolen the show with his presentation on making apps that “Don’t Suck”, which I did not attend but will be watching this week, for sure. Another really great presentation was on using MongoDB to create a Feature Cache. This presentation was done by a (for gawd’s sake) 21-year-old Brazilian developer who informed us he had never presented before. I was thoroughly impressed with this poised and intelligent young man, and you should definitely give that presentation a look.

Well, the plane has started the initial descent into Charlotte. I am ready to be home and thankful for another wonderful DevSummit. If you have any questions about sessions or the like, feel free to hit me on Twitter or comment on this post. Go forth and spread the Word of the GeoNerd.

Filed under: ArcDeveloper Tagged: ESRI

February 25, 2011

Review: Building Location-Aware Applications

Recently, @manningbooks asked for reviewers of “Location-Aware Applications” by Richard Ferraro and Murat Aktihanoglu. I jumped at the chance, since this book has been on my To Read List since I saw its announcement. I like my reviews to give an overall thumbs-up or thumbs-down before getting into the details, so I’ll do that here. Overall, the book is very much worth reading (so, that’s a “thumbs up”). If you are new to Location Based Services (LBS) applications or if you work in only a small bit of that huge landscape, then you will gain breadth of knowledge from reading this book. Now, on to the details…

The first chapter dives into the history of LBS and how we arrived at the present day. It defines the “LBS Value Chain” (mobile device –> content provider –> communication network –> positioning component) and does a comparison of the various technologies used to obtain location information. GPS, CellID, and WiFi Positioning are described and compared at an appropriate level of detail. A discussion of LBS vs Proximity Based Services (those services where a phone talks to the nearest phone(s)) was interesting, as I had never really distinguished the two. The chapter wraps up by listing the challenges of developing LBS applications (cost, myriad of devices, privacy issues, etc) and defining the Location Holy Grail that will push the future of LBS apps. This chapter will allow you to speak intelligently about developing LBS apps, something I really could not do going into the book.

Chapter 2 focuses on Positioning Technologies, such as Cell Tower Triangulation, GPS, etc. Again, I learned a ton by reading about the various approaches to positioning tech, including things like Assisted-GPS (A-GPS) and the Open Cell ID movement. I have been annoying my wife in the car by actually timing how long it takes our GPS to get its first position fix, which is called Time to First Fix (TTFF) (“Will you please just drive?”). Did you know that not only did the military develop GPS, but it also uses a more precise GPS than its commercial counterpart? I want to get me some of that “pure” GPS, I bet that is some good s[tuff].

Chapter 3 deals with mapping and the various APIs that exist on the web. As an “experienced” geo-developer, I didn’t learn much about the APIs that I did not already know. I did learn a bit about Cloudmade, which looks pretty solid as an open source mapping option (with a really cool style editor). It was interesting to read about which providers used which data (Google [used to use] TeleAtlas, for example, which I had forgotten was wholly owned by TomTom). Also, the chapter had a very simple example in many of the mapping APIs (including Mapstraction, which I thought was cool), allowing a quick glance at how they differ. Finally, the item I found most interesting was the discussion on the licensing of the APIs. I’ve known that Google won’t let you put their maps behind a login, but Yahoo has some weird clause that says you can’t use any data on top of their map that is newer than 90 days. Weirdos.

Chapter 4 deals with content from both licensing and distribution format aspects. This was the only chapter, for you ArcDevelopers out there, that mentioned ESRI on any level. The major license categories were: Pay, Free to Use (which means, look at our terms of service), and Open Source. I didn’t realize that there was an open source license, called Open Database License (ODbL), that was created specifically for OpenStreetMap. The content distribution formats mentioned were GeoRSS (both flavors: GML and Simple), GeoJSON, and KML. Again, for you ArcDevelopers, they don’t mention ESRI’s GeoREST specification format (as well they shouldn’t….sorry, tangent). Chapter 4 has a couple of code examples as well for “mashups”, which I couldn’t make work.

Chapter 5 runs through the various “needs” of Consumer LBS Applications. Existing applications are used to exemplify how these needs can be met. For example, the Need to Navigate uses Ulocate and the Telmap app, whereas the Need to Connect discusses things like Google Latitude (and its lack of adoption), Whrrl, and Loopt. For you iPhone users/devs, there is a nice table of iPhone LBS-social media apps. I learned of a game called GPS Mission in the Need to Play, which I plan to try out. The last bit of the chapter talks about Augmented Reality, citing the lack of AR apps that have a social media tie-in.

Chapter 6 describes the various mobile platforms and how to develop for each one. The list here is overwhelming. In a nutshell, I took away that Java is still the most widespread (Java ME, that is), the iPhone and Android approaches are gaining rapidly, and Symbian is going to die. The mention of Palm’s WebOS is brief, but it cites how marketing can kill a good idea. The WebOS is probably the easiest platform to develop against, and it has things like true multitasking, which neither the iPhone nor Android can tout. (FTR, Android’s multi-tasking can be “true”, but most apps still write their state to persistent storage on loss of focus. This is due to the fact that the OS will start killing out-of-focus apps if memory becomes scarce.) The chapter also runs through how to develop a simple app for most of these platforms. Comparing the development approach of Android to iPhone is scary, as the Android approach is simple Java and the iPhone requires multiple files in a proprietary language. There is no mention of the soon-to-be-released Windows Phone, which I found a bit surprising. The book discusses Windows Mobile 6.5, which I can’t imagine anyone is using any longer.

Chapter 7 deals with Connectivity Issues, focusing on some of the terms introduced earlier in the book, like quick TTFF. It covers things like making sure you tell the user when you are using their GPS (and, therefore, draining their battery) and wraps up with a look at some of the mobile OS location APIs. All in all, I thought this was the thinnest (content-wise) chapter.

Chapter 8 gets into the stuff that I was most clueless about: monetizing LBS. The various ways that you can charge your users are covered, like one-off charges, subscriptions, in-app charging, etc. Other ways to generate money were more business focused, like charging for real estate on your massively popular site or charging for location data. I really enjoyed this chapter.

Chapter 9 goes back to nerd land, but on the server-side. It offers various ways on how to manage data on the server, from users to map tiles to POIs to spatial RDBMS data. PostGIS and MSSQL get a decent mention here, with lighter comment on Oracle and MySQL. Some of the LBS servers are given press here, mainly MapServer. ArcGIS Server gets another very light mention here, as well as MapInfo, MapPoint, GeoMedia (Intergraph) and Maptitude. All in all, I thought this chapter was too light on server-side coverage, which I think would be pretty important if you were hosting your own super-macdaddy geolocation web application.

Chapter 10 deals with the subject that no one likes to deal with: Privacy. The chapter does a good job explaining “Locational Privacy” and relating it to the more mainstream risks of all informational privacy. Privacy is probably the largest issue with your LBS app, and everyone from the mobile operator down to your user is going to want to know how you handle it and, in some cases, will force you to handle it their way. Basically, the best way to keep data private is to not collect it. If you have to collect it, don’t store it. If you have to store it, anonymize it. If you can’t do that, encrypt-the-hell out of it. Another good chapter.
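To make the "anonymize it" step a bit more concrete, here is a minimal Ruby sketch of my own (it is not from the book). It assumes a location fix is just a user id plus a latitude/longitude pair; the `anonymize_fix` name, the salt, and the two-decimal precision are all hypothetical choices. Strictly speaking, hashing an id is pseudonymization rather than true anonymization, so treat this as an illustration of the idea, not a privacy guarantee.

```ruby
require "digest"

# Hypothetical helper: replace the user id with a salted hash and
# coarsen the coordinates so the stored fix is less precise.
def anonymize_fix(user_id, lat, lon, salt:, precision: 2)
  {
    user: Digest::SHA256.hexdigest("#{salt}:#{user_id}"),
    lat: lat.round(precision), # two decimals is roughly a kilometer
    lon: lon.round(precision)
  }
end

fix = anonymize_fix("glenn", 35.2271, -80.8431, salt: "example-salt")
puts fix[:lat] # coarsened latitude
puts fix[:lon] # coarsened longitude
```

The coarsening is the more interesting half: the less precise the stored point, the less there is to leak if the encryption step ever fails.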

Chapter 11 goes through considerations when distributing your application. In the mobile arena, there are tons of different deployment scenarios, not to mention big issues like price and timing of release. Plus, you can deploy to an OS (Android), a mobile operator (Vodafone), a handset (iPhone), or independently. A lot of stuff to consider before you just focus on iPhone and Android (and maybe, Win 7).

The last chapter discusses business strategy for securing your idea. This is a bunch of stuff that, as a developer, you don’t want to do, but you have to if you want to make huge piles of money. Things like writing your business plan and getting partnerships are mentioned, which means you’ll have to get out of the nerd cave in your mom’s basement if you want this app to take off. It ends by running through the trademark and patenting process, which sounds a lot like getting a colonoscopy, only more enjoyable.

Again, I enjoyed this book and I appreciate the opportunity to review it. I am not affiliated with Manning or the authors in any fashion, if that matters.

All in all, the book does what you’d expect, imparting real knowledge about LBS applications in today’s world. It’s surprising to me how the LBS world is almost completely void of ESRI technology, since I consider ESRI to be the leader in GIS software. I think this shows how much GIS has changed in the public eye. Regardless, I recommend reading Building Location-Aware Applications for anyone planning to develop LBS apps or wanting to learn the big picture of LBS app development.

Filed under: books Tagged: Books, lbs

January 31, 2011

2011 ESRI Dev Summit: Prologue

Yay! One of my presentations was selected for the User Presentation track at ESRI Developers Summit. It is called “You are Legend” and it shows how I created a jQuery plugin that creates an interactive TOC/Legend combination for your jsapi map. I am kind of surprised it was chosen, since it didn’t seem like I was getting many votes and the whole TOC/Legend concept is, in many people’s minds, a big usability no-no. I really did it because most of my clients want one and I like jQuery and I like JavaScript and I had to do something for the ESRI Charlotte Developers Meetup. The DevSummit version of the presentation will be, I am hoping, much enhanced and a bit more polished, if for no other reason than I won’t likely be 3 beers into the night before the talk (the Charlotte meetup was in a pub).

Last year I thought the user presentations were really, really good. If you want to network with other developers, attend as many of these as you can and then ask questions after the talk. I know that I appreciated the questions after my talk last year and, as a result, have added Twitter contacts with which I can now discuss ongoing development issues. Also, if you’re headed to DevSummit, let me know (comment here or @ruprictGeek on the twittersphere) and maybe we can plan a couple of pub-based nerd sessions. Here are some of (what I consider) other interesting sessions:

Tip, Tricks, and Best Practices for REST architecture

Coding Dojo – Sharpening Your Skills

High Performance Feature Cache with NoSQL for ArcGIS Server

Using Google AppEngine and Fusion Tables with ArcGIS Server

Responsive Geo-Web Design: HTML5 is Cool, But Maybe Not for Everyone

I am not sure if all of these made the cut, but they do look interesting. I didn’t put any Python or Silverlight presos on my list, simply because I don’t use that stuff much, but if that is your cup-o-tea there are a lot of offerings. Another thing that surprised me was that my Robotlegs presentation did not get selected. Out of the two I entered, I thought that one would get the votes. Basically, I was going to walk through using Robotlegs to create a Flex Viewer widget. The ArcGIS Flex Viewer is nice, but I don’t like that they rolled their own framework. There are so many well-supported and, frankly, superior frameworks out there (Robotlegs, Swiz, PureMVC, Cairngorm3) that would have allowed ArcGIS Flex devs to leverage those communities. I am trying to enter a widget in the Flex-a-widget challenge that uses Robotlegs, so we’ll see if I can get that done.

Try to get to DevSummit if you are able. If you are an ArcGIS Developer, this is by far the best conference of the year.

Filed under: ArcDeveloper Tagged: ArcDeveloper

August 30, 2010

ArcGIS Server Legend Resource Application

Building on my last post, I wanted to create a RESTful app that serves up legends from ArcGIS Server. Ideally, this app would not need any configuration. I thought this was very doable, since I could just put the URL information for the map service into the URL for the legend resource service by my new RESTful app. All in all, this was much easier than even I predicted. Oh, and I have to thank Colin Casey (again) for refactoring my code to look more Ruby-way like.

At first, I thought of using a Rails app for this, which I did stand up and get running. However, it seemed like way too much for just a simple app. There is no database, no real views, and not much that required all the conventions that Rails uses. I have been hearing tons about Sinatra (including a running joke that it’s where experienced Rails developers eventually land) and how lightweight it is. And, it is VERY lightweight. I am not going to post any examples of Sinatra here, but a rudimentary scan of the Sinatra home page is enough to show that it’s not a lot of weight. So, I selected Sinatra and had the app working in a few hours (most of which were me struggling with my RubyNoob issues). The result is legend_resource, which is posted on github for your criticism and mockery.

Using LegendResource

It’s very easy to get legend_resource up and running.

Pull down the code (git clone git@github.com:ruprict/legend_resource.git)

Install Bundler, if it isn’t already (gem install bundler --pre)

You might have to install RMagick, if you have not already. As I alluded to in my last post, you should go to the RMagick site and figure out the best way to install it for your operating system. (#copout)

Type ‘bundle install’ from the git repo root. This should install all the necessary gems.

Type ‘rackup’. This should fire up the application on port 9292.

Now that the app is up and running, you can generate a legend for any map service. For example, if you want a legend for the USA_Percent_Male map service in the Demographics folder on server.arcgisonline.com, click on this link:

http://localhost:9292/legend/server.arcgisonline.com/Demographics/USA_Percent_Male

Or create an HTML page with an img tag and set that link to the src. Like this,

[image error]

That’s it. Neat, huh?

The URL scheme is pretty simple: http://<webserver>/legend/<mapserver>/<mapservicepath>, where

webserver is wherever the legend_resource app is currently hosted.

mapserver is your ArcGIS server

mapservicepath is the path to the service on mapserver. This works for services in the root or in a folder. The link above was in a folder, for example.
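To illustrate how little there is to that URL scheme, here is a rough Ruby sketch of splitting the path into its parts. This is not the code in legend_resource; `LegendRequest` and `parse_legend_path` are hypothetical names I made up for the example, and the only thing taken from the app is the /legend/mapserver/mapservicepath layout described above.

```ruby
# Hypothetical value object holding the two pieces of the path.
LegendRequest = Struct.new(:mapserver, :mapservicepath)

# Split "/legend/<mapserver>/<mapservicepath>" into its parts.
# Returns nil when the path does not follow the scheme.
def parse_legend_path(path)
  segments = path.split("/").reject(&:empty?)
  return nil unless segments.first == "legend" && segments.length >= 3

  # Everything after the server name is the service path, so both
  # root-level services and services in folders fall out naturally.
  LegendRequest.new(segments[1], segments[2..-1].join("/"))
end

request = parse_legend_path("/legend/server.arcgisonline.com/Demographics/USA_Percent_Male")
puts request.mapserver
puts request.mapservicepath
```

Because the service path is simply "whatever is left", no configuration is needed to tell the app about folders.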

Config Options

The configuration options for legend_resource are few right now. Out-of-the-github, I want it to just work, and it does. It writes the files to the local file system if you don’t tell it otherwise. Right now, the only other option is a Google Storage for Developers backend that exists because I received my invite to it this morning. If you want to use that, you have to:

1) Change the gstore.yaml file to add your access_key and secret.

2) Uncomment the line in config.ru that sets up the GStoreLegend as the file handler.

run LegendResource

# Uncomment this line to use Google Storage (don't forget to change the gstore.yml file)

#LegendResource.set :filehandler, GStoreLegend

I did that for a Heroku app that I am hosting at http://agslegend.heroku.com (the image above is from said Heroku app) that you are free to use to crank out a few legends. Just bear in mind that the map service will have to be exposed to the web for my Heroku app to see it.
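If you do go the Google Storage route, it is worth failing fast when the credentials are missing. The sketch below is a hedged illustration only: it assumes the settings file is a flat YAML document with `access_key` and `secret` keys (those two names come from the step above, but the file's real layout may differ), and `load_gstore_credentials` is a hypothetical helper, not something in legend_resource.

```ruby
require "yaml"

REQUIRED_KEYS = %w[access_key secret].freeze

# Hypothetical helper: parse the YAML text and return [access_key, secret],
# raising if either setting is missing or blank.
def load_gstore_credentials(yaml_text)
  config = YAML.safe_load(yaml_text)
  config = {} unless config.is_a?(Hash)

  missing = REQUIRED_KEYS.select { |key| config[key].to_s.strip.empty? }
  unless missing.empty?
    raise ArgumentError, "missing gstore settings: #{missing.join(', ')}"
  end

  config.values_at(*REQUIRED_KEYS)
end

access_key, secret = load_gstore_credentials("access_key: abc\nsecret: xyz\n")
puts access_key
puts secret
```

In real use you would pass in the contents of the settings file, for example with File.read.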

I hope someone else finds this useful. It was a ton of fun to make and I learned a lot about ruby in the process. I realize that my code is pretty noobish from the Ruby standpoint, so feel free to fork the repo, refactor, and issue pull requests. That’s how I learn. Also, if you’d like to see other options for the legends, hit me on github or leave a comment.

Happy Legending!

UPDATE: One thing I don’t think I made clear is that the REST interface for this service only handles GET and DELETE. If you HTTP GET to the url, it will either create or return the legend. If you HTTP DELETE to it, it deletes the legend, so, the next GET will create it anew.
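The GET/DELETE behavior boils down to "create on first read, forget on delete". Here is a small Ruby sketch of that idea using an in-memory hash. It is only an illustration under my own assumptions: `LegendCache` is a hypothetical class, and the real app stores image files (locally or in Google Storage) rather than strings in a hash.

```ruby
# Hypothetical in-memory stand-in for the legend storage.
class LegendCache
  # The block is called to build a legend the first time a key is requested.
  def initialize(&generator)
    @generator = generator
    @legends = {}
  end

  # GET: return the stored legend, creating it first if needed.
  def get(key)
    @legends[key] = @generator.call(key) unless @legends.key?(key)
    @legends[key]
  end

  # DELETE: drop the stored legend so the next GET rebuilds it.
  # Returns true if something was actually removed.
  def delete(key)
    !@legends.delete(key).nil?
  end
end

cache = LegendCache.new { |key| "legend for #{key}" }
puts cache.get("Demographics/USA_Percent_Male")
cache.delete("Demographics/USA_Percent_Male")
```

DELETE is handy when the symbology of a map service changes and the stored legend goes stale.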

Filed under: ArcDeveloper, Ruby Tagged: ArcGIS Server, Ruby

August 19, 2010

Create Legend Images from ArcGIS Server with Ruby

Recently, I needed to create a legend for an ArcGIS Server map service, and was amazed that it was still a non-trivial activity. Googling it, I saw a few posts with (what I considered to be) WAY too complicated C# code for such a simple task. Also, there is no great way to get a legend out of ArcMap (for gawd’s sake) which left me thinking “Surely, there is an easy way to do this.” (expletives removed)

I have been mucking about (again) with ruby and Rails, which is great and depressing all at the same time. I don’t use much ruby in my 9-to-5, as it’s neck-deep in Microsoft, so I try to shoehorn ruby into my work however I can. In this case, I remembered the venerable Dave Bouwman’s Ruby-Fu presentation at DevSummit (That guy is always doing the stuff I want to be doing. I don’t like him.) and that he had found a ruby library that spoke both REST and SOAP to ArcGIS Server. Being an experienced AGS dev-monkey, I also know that you can get legend and symbology information from the SOAP API. Thusly, a not-terribly-original-or-visionary idea was born: Use ruby to create a legend.

My main requirement is that it be easy, something on the order of:

Tell the thing the URL of my map service.

Tell the thing I want a legend for the map service.

Save the legend given to me by the thing to a file.

So, I got my shoehorn out and went in search of ArcGIS-flavored ruby bits.

arcserver.rb

I hunted down the slides for Dave’s preso to find the name of the ruby library he mentioned. It is arcserver.rb (github) written by Colin Casey, an extremely patient and approachable developer. I sent him a message with my Grand Legend Plan, and he said “Do it, man.” arcserver.rb already did #1 and #2 from my list above. All I had to do was #3. Once you’ve installed the arcserver.rb gem (so, type ‘gem install arcserver.rb’ at your command prompt and watch it install all kinds of stuff... WEEE!), run this in irb:

require 'rubygems'

require 'arcserver'

server = ArcServer::MapServer.new("http://server.arcgisonline.com/ArcGIS...")

server.get_legend_info

That gives you a text representation of all the legend information for the service. So, all I had to do was loop through all the symbology and write out the symbols. Easy, right? Well, in ruby everything is easy (no fanboy bias there at all). I needed an image processor, and the Old Man of Ruby Image Processors is RMagick. Now, bear in mind that I did all of this on a Windows box, but I used Cygwin. You can install RMagick 2 on Windows natively, so I hear/read, but I didn’t do it that way. However you do it, you’re gonna need RMagick, and therefore, ImageMagick. (um, everything in ruby is easy *cough*)
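The "loop through all the symbology" part is mostly bookkeeping: decide where each layer title and each symbol row goes before drawing anything. The Ruby sketch below shows only that layout step, with no RMagick involved. It is not the LegendImage code in arcserver.rb, and the data shape (layers with a `:name` and a list of `:entries` with `:label`) is a hypothetical stand-in for whatever get_legend_info actually returns.

```ruby
# Hypothetical row description: what to draw and at which y offset.
Row = Struct.new(:kind, :text, :y)

# Walk the layers top to bottom, giving each title and symbol its own row.
def layout_legend(layers, row_height: 20, padding: 5)
  y = padding
  rows = []

  layers.each do |layer|
    rows << Row.new(:title, layer[:name], y)
    y += row_height

    layer[:entries].each do |entry|
      rows << Row.new(:symbol, entry[:label], y)
      y += row_height
    end
  end

  # Total image height: every row plus padding at the bottom.
  { rows: rows, height: y + padding }
end

layers = [{ name: "States", entries: [{ label: "Low" }, { label: "High" }] }]
layout = layout_legend(layers)
layout[:rows].each { |row| puts "#{row.y}: #{row.kind} #{row.text}" }
```

With the positions worked out, the drawing step is just a matter of handing each row to the image library.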

Got RMagick installed?? Awesome! Wasn’t that easy? Are you giving me the finger? Let’s continue. One of my goals in contributing to Colin’s project was to not pollute it too much. Being pretty much a ruby novice, I figured the best thing to do was to keep as much of my code in its own class/file, so Colin could easily remove/rewrite/laugh-and-point-at it how he saw fit. The final (cleaned and reorganized by Colin) version consists of a LegendImage class that you can see here on Github (I don’t want to post a ton of code here, it’s just unwieldy). I am not going to walk through the code, but I’d like to point out that it’s

Now, to create a legend image, do the same thing we did above, but add (if you’ve installed RMagick since you started irb, you need to restart it):

require 'RMagick'

server.get_legend_image.write("legend.png")

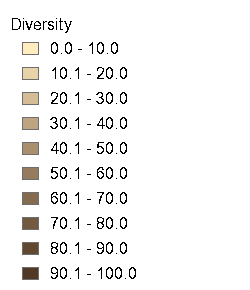

which will write a PNG file called “legend.png” in the directory from which you fired up ‘irb’. Kick ass. Three lines of code (fewer if you chain) to get a (very basic, but usable) legend from ArcGIS Server. Here is the legend it creates using the Diversity service from ArcGISOnline.

I thought that was pretty cool and useful. There may be other, more elegant ways out there to get a legend from an ArcGIS Server map service, and I am sure someone will mention them in the comments. Even so, I enjoyed doing this little exercise and I appreciate Colin letting me contribute to his project. Here are all the irb commands needed once you have the prerequisites and the gem installed:

require 'rubygems'

require 'RMagick'

require 'arcserver'

server = ArcServer::MapServer.new("http://server.arcgisonline.com/ArcGIS...")

server.get_legend_image.write("legend.png")

My next task is to use this library in a Rails-based web mapping application. Hopefully, I’ll get to that in the next week or so.

Filed under: ArcDeveloper, Ruby Tagged: ArcGIS Server, Ruby

April 26, 2010

Apple’s Fruitless Security

For 20+ years I have relied on Jeff for friendship and technical advice. He is a solid nerd, rooted in things Oracle/Linux with more knowledge of hardware and operating systems than any ten people I know. In other words, he is no moron. Recently, his iTunes account was hacked and upwards of $300 worth of apps/movies purchased. Apple’s response? Paraphrasing “You need to cancel your credit card and take up disputing the charges with them. As for Apple, we’ll be keeping that money. Oh, and once you get a new card, please remember to add it back to your disabled iTunes account.” In other words, you just got ramrodded while we watched, did nothing, and profited by it.


Now, I am an Apple fan. I have an iPhone; I have a G5 (PowerPC-based, old school baby) that I use every day. I love Mac OSX, finding it superior to the Windows operating systems (although, Windows 7 is pretty damn good) and have been on the cusp of buying a MacBook for a few months now. I bought my wife an iMac, and she loves it. In other words, I am not a Windows nerd bashing Apple. I was once a blind fanboy, encouraging everyone I knew to buy a Mac and get an iPhone. I would passionately debate why Apple products were superior to all comers, sometimes without the benefit of rational thought.

I am an Apple user, and this may be the last straw.

Once the honeymoon of my relationship with Apple products faded into history, I started noticing what Apple gives me as a proponent of their products. They don’t trust me to change a battery or add storage. They force me to use a singular application to activate and update my phone (iTunes). Their products are outrageously more expensive when compared to the competition. A few times a year they have a media circus to unveil new crazy expensive hardware while their king talks down to me like I am expected to embrace whatever floats to the top of his mock turtleneck, even when it’s underwhelming (copy/paste). Apple walls off their systems, keeping a who’s who list of frameworks and products that are allowed inside the velvet ropes (e.g., the striking omission of Flash on the iPhone). They allow me to pay $99 for the right to develop for their mobile platform, but only if I use a language whose base feature set would have been laughed out of most late-1970s development shops. Oh, and I can pay another $99/year to have a closed-off online e-mail/contacts/photo/file offering whose initial shininess fades rapidly under the light of actual use.

All this, and now an approach to online fraud protection that only an evil dictator could appreciate. Apple’s software was hacked, my friend was affected, and they basically asked him to suck on it and come back for more. I have heard many a user/nerd pontificate on why Apple’s user base pays a premium to be treated like dirt. I have wondered aloud why the governments of the UK and the US will drop the “Monopoly” moniker on Microsoft, but allow Apple to dominate and control the mobile market without a peep. You have to hand it to Apple, they have created the perfect spot for themselves.

I want to have faith in the masses. I want to hope for the day that the users revolt and demand Apple to stop gouging our wallets and closing off their systems. I just don’t see it coming. Talking to other die-hard Apple users, they say that Apple should be allowed to control what is allowed on their devices and operating systems. These are the same people that would have held sit-ins to force Microsoft to allow more than one browser in Windows. The double-standards are obvious and ubiquitous.

I’ve been told that I don’t have to buy Apple products. I don’t have to subject myself to the whims of black turtlenecks. This is true. My hope is now shifting to Microsoft and Google. Two other behemoths that want my money. Here’s hoping that they realize that my business is their privilege, that my information is worth protecting, and that my choice is still mine.

Filed under: Apple Tagged: apple

April 13, 2010

Unit Testing Objects Dependent on ArcGIS Server Javascript API

Recently, I’ve created a custom Dojo dijit that contains an esri.Map from the ArcGIS Server Javascript API. The dijit, right now, creates the map on the fly, so it calls new esri.Map(…) and map.addLayer(new esri.layers.ArcGISTiledMapServiceLayer(url)), for example. This can cause heartburn when trying to unit test the operations that my custom dijit is performing. I am going to run through the current (somewhat hackish) way I am performing unit tests without having to create a full-blown HTML representation of the dijit.

I’ll be using JSpec for this example, so you may want to swing over to that site and brush up on the syntax, which is pretty easy to grok, especially if you’ve done any BDD/spec type unit testing before.

The contrived, but relatively common, scenario for this post is:

My custom dijit makes a call to the server to get information about some feature.

The service returns JSON with the extent of the object in question.

I want my map control to go to that extent.

Following the Arrange-Act-Assert pattern for our unit tests, the vast majority of the work here will be in the Arrange part. I don’t want to pull in the entire AGS Javascript API for my unit tests. It’s big and heavy and I am not testing it so I don’t want it. Also, I don’t want to invoke the call to the server in this test. Again, it’s not really what I am testing, and it slows the tests down. I want to test that, if my operation gets the right JSON, it sets the extent on the map properly.

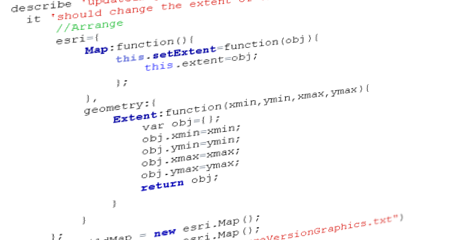

The Whole Test

Here is the whole JSpec file:

    describe 'VersionDiff'
      before_each
        vd = new esi.dijits.VersionDiff();
      end

      describe 'updateImages()'
        it 'should change the extent of the child map'
          //Arrange
          esri={
            Map:function(){
              this.setExtent=function(obj){
                this.extent=obj;
              };
            },
            geometry:{
              Extent:function(xmin,ymin,xmax,ymax){
                var obj={};
                obj.xmin=xmin;
                obj.ymin=ymin;
                obj.xmax=xmax;
                obj.ymax=ymax;
                return obj;
              }
            }
          };
          vd.childMap = new esri.Map();
          var text = fixture("getFeatureVersionGraphics.txt")
          text = dojo.fromJson(text)

          //Act
          vd.updateMapToFeatureExtent(text)

          //Assert
          vd.childMap.extent.xmin.should.be 7660976.8567093275
          vd.childMap.extent.xmax.should.be 7661002.6869258471
          vd.childMap.extent.ymin.should.be 704520.0600393787
          vd.childMap.extent.ymax.should.be 704553.8080708608
        end
      end
    end

//Arrange

The Arrange portion of the test stubs out the methods that will be called in the esri namespace. Since the goal of the test is to make sure my dijit changes the extent of the map, all I need to do is stub out the setExtent method on the Map. setExtent takes an Extent object as an argument, so I create that in my local, tiny esri namespace. Now I can set the property on my dijit using my stubbed out map. Thanks to closures, er, global variables (ahem), the esri namespace I just created will be available inside my function under test. Closures are sexy, and I only know enough about them to be dangerous. Yay! I don’t have to suck in all the API code for this little test. That fixture function is provided by JSpec, and basically pulls in a text file that has the JSON I want to use for my test. I created the fixture by saving the output of a call to my service, so now I don’t have to invoke the service inside the unit test.

//Act

This is the easy part. Call the function under test, passing in our fixture.

//Assert

How do I know the extent was changed? When I created my tiny esri namespace, my esri.geometry.Extent() function returns an object that has the same xmin/ymin/xmax/ymax properties of an esri.geometry.Extent object. The setExtent() function on the map stores this object in an extent property. All I have to do is make sure the extent values match what was in my fixture.

I didn’t include the source to the operation being tested, because I don’t think it adds much to the point. Suffice it to say that it calls setExtent() on the childMap property.

So What?

I realize this may not be the greatest or cleanest approach, but it is serving my needs nicely. I am sure in my next refactor of the unit tests that I’ll find a new approach that makes me hate myself for this blog post. As always, leave a comment if you have any insight or opinion. Oh, and regardless of this test, you should really look into JSpec for javascript unit testing. What I show here is barely the tip of what it offers.


Filed under: ArcDeveloper, javascript Tagged: Add new tag, ArcGIS Server, javascript, Unit testing

April 1, 2010

Robotlegs and Cairngorm 3: Initial Impressions

Smackdown? (Well, not really...)

I have had Robotlegs on my radar screen for months now, just didn’t have the time/brains to really check it out. I cut my Flex framework teeth on Cairngorm 2, which seems to be the framework non grata of all the current well-known Flex frameworks (Swiz, PureMVC, Robotlegs, etc.). However, at Joel Hooks’ suggestion, I took a look at Cairngorm 3 (henceforth referred to as CG3), enough to do a presentation on it for a recent nerd conference. The presentation includes a demo app that I wrote using Cairngorm 3, which (currently) uses Parsley as its IoC container. That’s right, Cairngorm 3 presumes an IoC container, but does not specify one. This means that you can adapt the CG3 guidelines to your IoC of choice. This is only one of the many ways CG3 is different from CG2, with others being:

No more locators, model or otherwise. Your model can be injected by the IoC or handled via events. For you Singleton haters, this is a biggie.

A Presentation Model approach is prescribed, meaning your MXML components are ignorant of everything except an injected presentation model. The view’s events (clicks, drags, etc.) call functions on the presentation model inline. The presentation model then raises the business events. This allows simple unit testing of the view logic in the presentation models.

The Command Pattern is still in use in CG3, but commands are not mapped the same way. For CG3, your commands are mapped to events by your IoC. Parsley has a command pattern approach in the latest version that actually came from the CG3 beta. This approach uses metadata (like [Command] and [CommandResult]) to tell Parsley which event to map. Again, this results in highly testable command logic.

CG3 includes a few peripheral libraries to handle common needs that are very nice. Things like Popups, navigation, validation, and the observer pattern are all included in libraries that are not dependent on using CG3 or anything, really. If you don’t intend to use CG3, it may be worth your while just to check out these swcs.

All in all, CG3 is a breath of fresh air for someone who has been using CG2. Cairngorm 2 is fine, and it’s certainly better than not using any framework, but the singletons always made me uneasy and, in some of our lazier moments, we ended up with hacks to get the job done. I feel that CG3 really supports a test-driven approach and Parsley is very nice and well-documented. It’s worth saying that I only know enough about Parsley to get it to work in the CG3-prescribed manner, and there seems to be much more to the framework.

Once I had a basic handle on CG3, Joel said he was interested in how CG3 could work with Robotlegs (henceforth referred to as RL). Also, after my presentation, a couple of folks wandered up and mentioned RL as well. So, when I got home, I ported the demo app to RL (it’s a branch of the github repo I link to above) so I could finally check it off my Nerd Bucket List.

First of all, Robotlegs is, like CG3, prescriptive in nature. It is there to help you wire up your dependencies and maintain loose coupling to create highly testable apps (right, all buzzwords out of the way in one sentence. Excellent). Like CG3, it presumes an IoC, but does not specify a particular one. The “default” implementation uses SwiftSuspenders, but it allows anyone to use another IoC if they feel the need. I have heard rumblings of a Parsley implementation of RL, which I’d like to see.

Also, the default implementation is more MVCS-ish than the default CG3 implementation. What the hell does that mean? Well, MVC is a broad brush and can be applied to many architectures. In my opinion, the CG3-Parsley approach uses the Presentation Model as the View, the Parsley IoC/Messaging framework for the controller and injected value objects for the model. The RL approach uses view mediators and views for the view, which reverses the dependency from CG3. The RL controller is very similar, but commands are mapped explicitly in the context to their events, rather than using metadata.

The model is also value objects, but it’s handled differently. In CG3, the model is injected into commands and presentation models, then bound to the view. So, if a command changes a model object, it’s reflected in the presentation model, and the view is bound to that attribute on the presentation model. In RL, the command would raise an event to signify model changes, passing the changes as the event payload. The view mediator listens for the event and handles the event payload to update the view, again through data binding. (NOTE: You can handle the model this way in Parsley as well, using [MessageHandler] metadata tags, FYI.)

It’s worth mentioning that when I did the RL port, I added a twist by using the very impressive as3-signals library from Robert Penner. Signals is an alternative to event handling in Flex, and I really like it. Check it out. Anyway, RL and Signals play very well together, but it means I wasn’t necessarily comparing apples-to-apples. Signals is not a requirement of using RL, at all, but the Twittersphere was raving about it and I can see why. The biggest con to using Signals with CG3 might be some of the peripheral CG3 libraries. For example, I think you’d end up writing more code to adapt things like the Navigation Library to Signals. The Navigation Library uses NavigationEvent to navigate between views, which would need to be adapted to Signals. Of course, I am of the opinion that, if you are going to use something like Signals, you should use it for ALL events and not mix the two eventing approaches. This is a philosophical issue that hasn’t had the chance (in my case) to be tested by pragmatism.

So, which framework do I like better? After a single demo application, it’s hard to make a firm choice. I really like the CG3 approach, and it’s only in beta. However, I also really like the Signals and RL integration, which I think makes eventing in Flex much easier to code and test. I am not that big a fan of the SwiftSuspenders IoC context, as there doesn’t seem to be any way to change parameters at runtime, which is something I use IoC to handle. An example is a service URL that would be different for test and production. I’d like to be able to change that in an XML file without having to rebuild my SWF. I asked about this on the Robotlegs forum, and was told that it’s a roll-your-own answer. On the other hand, Parsley offers the ability to create the context in MXML, actionscript, XML, or any mixture of the three. I like that. I think the winner could be a Parsley-RL-Signals implementation pulling in the peripheral CG3 libraries, which mixes everything I like into one delicious approach. MMMMMM.

Anyone have questions about the two frameworks that I didn’t cover? Hit the comments. Also, if anything I have said/presumed/implied here is flat out wrong, please correct me. The last thing I want to do is lead people (including myself) down the wrong path.

Related articles by Zemanta (well, some of them were by Zemanta, others by me)

Cairngorm 3.0.2 release (blogs.adobe.com)

Joel Hooks’ Robotlegs Image Gallery Example using the Presentation Model (I used this for my port to RL)

Enterprise Development with Flex (oreilly.com) This book looks interesting, but they (disappointingly) stick to discussing CG2.

The Flexible Configuration Options of Parsley (blogs.adobe.com)

Eidiot’s Signals Async Testing Utility on Github (use this to test your signals)

People You Should Follow on Twitter About This Stuff

Joel Hooks

Eidiot

Odoenet

Me (if ya want)

Filed under: Flex Tagged: Cairngorm 3, Flex, robotlegs
