Greg L. Turnquist's Blog, page 9

May 17, 2016

Demo app built for #LearningSpringBoot

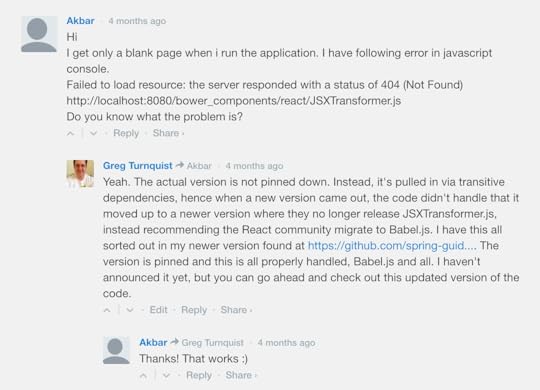

I’ve spent the last few days building the app I will use in the new Learning Spring Boot video. I was able to actually move quickly thanks to the power of Spring Boot, Spring Data, and some other features I’ll dive into in more detail in the video itself.

It’s really fun to sit down and BUILD an application with Spring Boot. The Spring Framework has always been fun to develop apps with. But Spring Boot takes that fun and excitement to a whole new level. The hardest part was saying, “this is all I need. This covers the case” and stopping. I have it mostly in place, with just a few bits left.

Having been inspired a couple years ago by this hilarious clip, I sought the most visually stunning and audacious demo I could think of: snapping a picture and uploading for others to see.

I created one based on Spring Data REST for various conferences and eventually turned it into a scalable microservice-based solution. (See my videos on the sidebar for examples of that!) That was really cool and showed how far you could get with a domain model definition. But that path isn’t the best route for this video. So I am starting over.

Once again, I take the same concept, but rebuild it from scratch using the same focus I used in writing the original Learning Spring Boot book. Take the most often used bits of Spring (Spring MVC, Spring Data, and Spring Security), and show how Spring Boot accelerates the developer experience while prepping you for Real World ops.

I have chatted with many people, both inside my own company and outside it. They have all given me a consistent message: the book helped them with some of the most common situations they face on a daily basis. That’s what I was shooting for! Hopefully, this time around I can trek along the same critical path but cover ground that was missed last time. And also prune out things that turned out to be mistakes. (e.g. This time around, I’m not spending as much time on JavaScript.)

Now it’s time to knuckle down and lay out the scripts and start recording video. That part will be new ground. I have recorded screencasts and webinars. But not on this scale. I hope to build the best video for all my readers (and soon to be viewers).

Wish me luck!

The post Demo app built for #LearningSpringBoot appeared first on Greetings Programs.

May 13, 2016

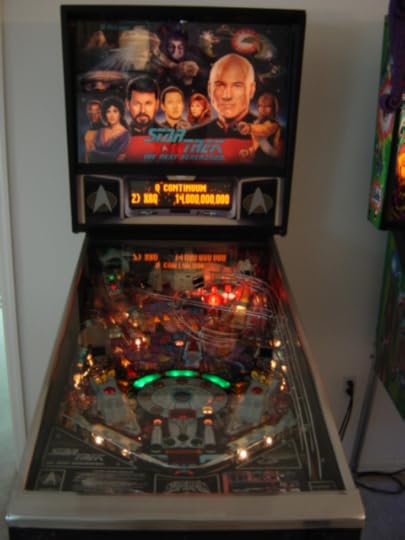

Awesome pinball invitational @RavenTools last night! #pinball

Last night was phenomenal! In listening to the inaugural episode of the NashDev podcast, I heard word of a pinball invitational being hosted at Raven Tools. Trekking there last night with my father-in-law, we didn’t leave until after its official end time had long passed.

Chatting with the locals was also pretty cool. It reminded me how much I missed playing pinball. Now that my kids are getting bigger, I might be finding more time to put my machines into action. My five-year-old will now power-on Cirqus Voltaire, pull a chair over, and start playing.

They tipped me off about other collectors in my own town, so I have started poking around to rekindle my pinball presence.

I traded stories with several of the other collectors. I had forgotten how fun it was to talk shop about my machines and hear about how others had found theirs. It also reminded me how in this day and age, where everything is on the Internet, pinball is one of those things where it’s so much fun to get your hands on it.

I signed my name on the way out, adding myself to a list of people interested in doing more pinball stuff in the future. All in all, it was an awesome time.

http://greglturnquist.com/wp-content/uploads/2016/05/pinball-at-raventools.mp4

The post Awesome pinball invitational @RavenTools last night! #pinball appeared first on Greetings Programs.

May 10, 2016

#LearningSpringBoot Video is on its way!

I just signed a contract to produce a video-based sequel to Learning Spring Boot, a veritable 2nd edition!

This will move a lot faster than its predecessor. Schedule says primary recording should be done sometime in August, so hopefully you can have it in your hands FAST! (I know Packt has quick schedules, but this is even faster).

This won’t be some rehash of the old book. Instead, we’ll cover a lot of fresh ground including:

Diving into Spring’s start.spring.io website.

Building a rich, fully functional web app using Spring Boot, Spring Data JPA, Spring Security, and more.

Using Boot’s latest and greatest tools like: Actuator, DevTools, and CRaSH.

I’ve written three books. Recording video will be a new adventure. If you want to stay tuned for updates, be sure to sign up for my newsletter.

Can’t wait! Hope you’re as excited as me.

The post #LearningSpringBoot Video is on its way! appeared first on Greetings Programs.

May 3, 2016

REST, SOAP, and CORBA, i.e. how we got here

I keep running into ideas, thoughts, and decisions swirling around REST. So many things keep popping up that make me want to scream, “Just read the history, and you’ll understand it!!!”

So I thought I might pull out the good ole Wayback Machine to an early part of my career and discuss a little bit about how we all got here.

In the good ole days of CORBA

This is an ironic expression, given computer science can easily trace its roots back to WWII and Alan Turing, which is way before I was born. But let’s step back to somewhere around 1999-2000 when CORBA was all the rage. This is even more ironic, because the CORBA spec goes back to 1991. Let’s just say, this is where I come in.

First of all, do you even know what CORBA is? It is the Common Object Request Broker Architecture. To simplify, it was an RPC protocol based on the Proxy pattern. You define a language-neutral interface, and CORBA tools compile client and server code into your language of choice.

The gold in all this? Clients and servers could be completely different languages. C++ clients talking to Java servers. Ada clients talking to Python servers. Everything from the interface definition language to the wire protocol was covered. You get the idea.

Up until this point, clients and servers spoke binary protocols bound up in the language. Let us also not forget, that open source wasn’t as prevalent as it is today. Hessian RPC 1.0 came out in 2004. If you’re thinking of Java RMI, too bad. CORBA preceded RMI. Two systems talking to each other were plagued by a lack of open mechanisms and tech agreements. C++ just didn’t talk to Java.

CORBA is a cooking!

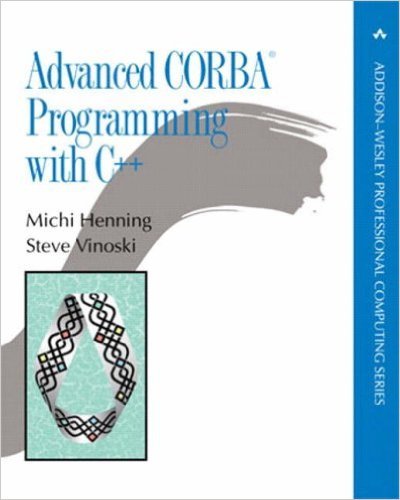

With the rise of CORBA, things started cooking. I loved it! In fact, I was once known as Captain Corba at my old job, due to being really up to snuff on its ins and outs. In a rare fit of nerd nirvana, I purchased Steve Vinoski’s book Advanced CORBA Programming with C++, and had it autographed by the man himself when he came onsite for a talk.

Having written a mixture of Ada and C++ at the beginning of my career, it was super cool watching another team build a separate subsystem on a different stack. Some parts were legacy Ada code, wrapped with an Ada-Java-CORBA bridge. Fresh systems were built in Java. All systems spoke smoothly.

The cost of CORBA

This was nevertheless RPC. Talking to each other required meeting and agreeing on interfaces. Updates to interfaces required updates on both sides. The process to make updates was costly, since it involved multiple people meeting in a room and hammering out these changes.

The high specificity of these interfaces also made the interface brittle. Rolling out a new version required ALL clients upgrade at once. It was an all or nothing proposition.

At the time, I was involved with perhaps half a dozen teams, and the actual number of users was quite small. So the cost wasn’t huge, unlike today’s web-scale problems.

Anybody need a little SOAP?

After moving off that project, I worked on another system that required integrating remote systems. I rubbed my hands together, ready to put my polished CORBA talents to good use again, but our chief software engineer duly informed me that a new technology was being evaluated: SOAP.

“Huh?”

The thought of chucking all this CORBA talent did not excite me. A couple of factors transpired FAST that allowed SOAP to break onto the scene.

First of all, this was Microsoft’s response to the widely popular CORBA standard. Fight standards with standards, ehh? In that day and age, Microsoft fought valiantly to own any stack, end-to-end (and they aren’t today???? Wow!) It was built up around XML (another new acronym to me). At the time of its emergence, you could argue it was functionally equivalent to CORBA. Define your interface, generate client-side and server-side code, and it’s off to the races, right?

But another issue was brewing in CORBA land. The spec from the OMG, the consortium responsible for CORBA, had gaps. Kind of like trying to ONLY write SQL queries with ANSI SQL. Simply not good enough. To cover these gaps, every vendor had proprietary extensions. The biggest vendor was Iona, an Irish company that at one time held 80% of the CORBA market share. We knew them as “I-own-ya” given their steep price.

CORBA was supposed to be supported across vendors, but it wasn’t. You bought all middleware from the same vendor. Something clicked, and LOTS of customers dropped Iona. This galvanized the rise of SOAP.

But there was a problem

SOAP took off and CORBA stumbled. To this day, we have enterprise customers avidly using Spring Web Services, our SOAP integration library. I haven’t seen a CORBA client in years. Doesn’t mean CORBA is dead. But SOAP moved into the strong position.

Yet SOAP still had the same fundamental issue: fixed, brittle interfaces that required agreement between all parties. Slight changes required upgrading everyone.

When you build interfaces designed for machines, you usually need a high degree of specification. Precise types, fields, all that. Change one tiny piece of that contract, and clients and servers are no longer talking. Things were highly brittle. But people had to chug along, so they started working around the specs any way they could.

I worked with a CORBA-based, off-the-shelf ticketing system. It had four versions of its CORBA API to talk to. A clear problem when using pure RPC (CORBA or SOAP).

Cue the rise of the web

While “rise of the web” sounds like some fancy Terminator sequel, the rampant increase in the web being the platform of choice for e-commerce, email, and so many other things caught the attention of many including Roy Fielding.

Roy Fielding was a computer scientist who had been involved in more than a dozen RFC specs that governed how the web operated, the biggest arguably being the HTTP spec. He understood how the web worked.

The web had responded to what I like to call brute economy. If literally millions of e-commerce sites were based on the paradigm of brittle RPC interfaces, the web would never have succeeded. Instead, the web was built up on lots of tiny standards: exchanging information and requests via HTTP, formatting data with media types, a strict set of operations known as the HTTP verbs, hypermedia links, and more.

But there was something else in the web that was quite different. Flexibility. By constraining the actual HTML elements and operations that were available, browsers and web servers became points of communication that didn’t require coordination when a website was updated. Moving HTML forms around on a page didn’t break consumers. Changing the text of a button didn’t break anything. If the backend moved, it was fine as long as the link in the page’s HTML button was updated.

The REST of the story

In his doctoral dissertation published in 2000, Roy Fielding attempted to take the lessons learned from building a resilient web, and apply them to APIs. He dubbed this Representational State Transfer, or REST.

So far, things like CORBA, SOAP, and other RPC protocols were based on the faulty premise of defining with high precision the bits of data sent over the wire and back. Things that are highly precise are the easiest to break.

REST is based on the idea that you should send data but also information on how to consume the data. And by adopting some basic constraints, clients and servers can work out a lot of details through a more symbiotic set of machine + user interactions.

For example, sending a record for an order is valuable, but it’s even handier to send over related links, like the customer that ordered it, links to the catalog for each item, and links to the delivery tracking system.

Clients don’t have to use all of this extra data, but by providing enough self discovery, clients can adapt without suffering brittle updates.
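To make that concrete, here is a rough sketch of what such an order might look like on the wire, and how a client could pick out only the links it cares about. The `_links` layout borrows from HAL, and every URL, field name, and the `find_link` helper are made up for illustration. None of it comes from a real service.

```python
import json

# Hypothetical order resource. The URLs and field names are assumptions
# chosen for this example, not part of any real API.
order = {
    "status": "SHIPPED",
    "total": "42.50",
    "_links": {
        "self": {"href": "http://example.com/orders/523"},
        "customer": {"href": "http://example.com/orders/523/customer"},
        "tracking": {"href": "http://example.com/tracking/abc123"},
    },
}


def find_link(resource, rel):
    """Return the href for a link relation, or None if the server didn't offer it."""
    link = resource.get("_links", {}).get(rel)
    return link["href"] if link else None


# What the server might send over the wire.
print(json.dumps(order, indent=2))

# The client asks for links by name and ignores the ones it doesn't need.
print(find_link(order, "customer"))
print(find_link(order, "invoice"))  # not offered, so the client simply skips it
```

The client never needs to know how the server lays out its URLs. It only needs to know the names of the relations it is interested in.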

The format of data can be dictated by media types, something that made it easy for browsers to handle HTML, image files, PDFs, etc. Browsers were coded once, long ago, to render a PDF document inline including a button to optionally save. Done and done. HTML pages are run through a different parser. Image files are similarly rendered without needing more and more upgrades to the browser. With a rich suite of standardized media types, web sites can evolve rapidly without requiring an update to the browser.

Did I mention machine + user interaction? Instead of requiring the client to consume links, it can instead display the links to the end user and let him or her actually click on them. We call this well known technique: hypermedia.

To version or not to version, that is the question!

A question I get anytime I discuss Spring Data REST or Spring HATEOAS is versioning APIs. To quote Roy Fielding, don’t do it! People don’t version websites. Instead, they add new elements, and gradually implement the means to redirect old links to new pages. A better summary can be found in this interview with Roy Fielding on InfoQ.

When working on REST APIs and hypermedia, your probing question should be, “if this was a website viewed by a browser, would I handle it the same way?” If it sounds crazy in that context, then you’re probably going down the wrong path.

Imagine a record that includes both firstName and lastName, but you want to add fullName. Don’t rip out the old fields. Simply add new ones. You might have to implement some conversions and handlers to help older clients not yet using fullName, but that is worth the cost of avoiding brittle changes to existing clients. It reduces the friction.
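Here is a minimal sketch of that idea, assuming a record held as a plain dictionary. The function names are hypothetical, and splitting a full name on the first space is deliberately naive. Real names need more care than this.

```python
def to_representation(person):
    """Serve the old fields and the new one side by side."""
    return {
        "firstName": person["firstName"],
        "lastName": person["lastName"],
        # New field, derived from the old ones. Older clients just ignore it.
        "fullName": f'{person["firstName"]} {person["lastName"]}',
    }


def from_request(payload):
    """Accept either shape, so older clients keep working unchanged."""
    if "fullName" in payload:
        # Naive conversion for illustration: split on the first space only.
        first, _, last = payload["fullName"].partition(" ")
        return {"firstName": first, "lastName": last}
    return {"firstName": payload["firstName"], "lastName": payload["lastName"]}


stored = {"firstName": "Frodo", "lastName": "Baggins"}
print(to_representation(stored))
print(from_request({"fullName": "Frodo Baggins"}))
print(from_request({"firstName": "Frodo", "lastName": "Baggins"}))
```

The conversion code is the cost mentioned above. In exchange, no existing client has to change on the day the new field ships.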

In the event you need to REALLY make a big change to things, a simple version number doesn’t cut it. On the web, it’s called a new website. So release a new API at a new path and move on.

People clamor HARD for getting the super secret “id” field from a data record instead of using the “self” link. HINT: If you are pasting together URIs to talk to a REST service, something is wrong. It’s either your approach to consuming the API, or the service itself isn’t giving you any/enough links to navigate it.

When you get a URI, THAT is what you put into your web page, so the user can see the control and pick it. Your code doesn’t have to click it. Links are for users.

Fighting REST

To this day, people are still fighting the concept of REST. Some have fallen in love with URIs that look like http://example.com/orders/523/lineite... and http://example.com/orders/124/customer, thinking that these pretty URLs are the be-all/end-all of REST. Yet they code with RPC patterns.

In truth, the reason to format URLs this way, instead of as http://example.com/orders?q=523&l... or http://example.com/orders?q=124&m..., is to take advantage of HTTP caching when possible. A Good Idea(tm), but not a core tenet.

As a side effect, handing out JSON records with {orderId: 523} has forced clients to paste together links by hand. These links, not formed by the server, are brittle and just as bad as SOAP and CORBA, violating the whole reason REST was created. Does Amazon hand you the ISBN code for a book and expect you to enter it into the “Buy It Now” button? No.
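A toy simulation can show the difference. Below, a fake "server" is just a dictionary of URIs to documents, and it moves its orders from one path to another. Everything here (the paths, the `_links` layout, the function names) is an assumption for the sake of the sketch, not a real client library.

```python
BASE = "http://example.com"


def make_server(orders_path):
    """Fake server: maps URIs to documents and builds all of its own links."""
    order_uri = f"{BASE}{orders_path}/523"
    return {
        f"{BASE}/": {"_links": {"orders": {"href": f"{BASE}{orders_path}"}}},
        f"{BASE}{orders_path}": {"_links": {"items": [{"href": order_uri}]}},
        order_uri: {"status": "SHIPPED", "_links": {"self": {"href": order_uri}}},
    }


def first_order_by_links(server):
    """Start at the root and follow only the links the server hands out."""
    root = server[f"{BASE}/"]
    orders = server[root["_links"]["orders"]["href"]]
    return server[orders["_links"]["items"][0]["href"]]


def order_by_pasting(server, order_id):
    """Assemble the URI by hand from an id, baking the old path into the client."""
    return server.get(f"{BASE}/orders/{order_id}")


old_server = make_server("/orders")
new_server = make_server("/api/orders")  # the backend moved

print(first_order_by_links(old_server)["status"])
print(first_order_by_links(new_server)["status"])  # still works after the move
print(order_by_pasting(old_server, 523) is not None)
print(order_by_pasting(new_server, 523) is not None)  # the hand-built URI is now dead
```

The link-following client only hard-codes the root URI and the relation names. The pasting client hard-codes the server's URL layout, which is exactly the kind of coupling that made RPC interfaces brittle.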

Many JavaScript frameworks have arisen, some quite popular. They claim to have REST support, yet people are coming on to chat channels asking how to get the “id” for a record so they can parse or assemble a URI.

BAD DESIGN SMELL! URIs are built by the server along with application state. If you clone state in the UI, you may end up replicating functionality and hence coupling things you never intended to.

Hopefully, I’ve laid out some of the history and reasons that REST is what it is, and why learning what it’s meant to solve can help us all not reinvent the wheel of RPC.

The post REST, SOAP, and CORBA, i.e. how we got here appeared first on Greetings Programs.

March 23, 2016

#opensource is not a charity

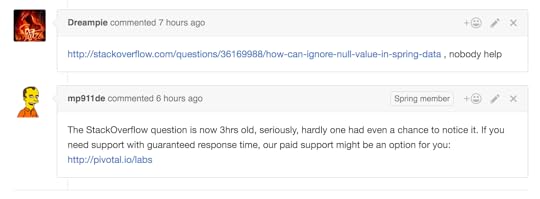

Logging onto my laptop this morning, I have already seen two tickets opened by different people clamoring for SOMEONE to address their stackoverflow question. They appeared to want an answer to their question NOW. The humor in all this is that the issue itself is only seven hours old, with the person begging for a response when their question is barely three hours old. Sorry, but open source is not a charity.

If you have a critical issue, perhaps you should think about paying for support. It’s what other customers need when they want a priority channel. It definitely isn’t free as in no-cost. Something that doesn’t work is opening a ticket with nothing more than a link to your question.

Open source has swept the world. If you don’t get onboard to using it, you risk being left in the dust. But too many think that open source is free, free, FREE. That is not the case. Open source means you can access the source code. Optimally, you have the ability to tweak, edit, refine, and possibly send back patches. But nowhere in there is no-cost support.

In a company committed to open source, we focus on building relationships with various communities. The Spring Framework has grown hand over fist in adoption and driven much of how the Java community builds apps today. Pivotal Cloud Foundry frequently has other companies sending in people to pair with us. It’s a balancing act when trying to coach users to not assume their question will be answered instantly.

I frequent twitter, github, stackoverflow, and other forums to try and interact with the community. If at all possible, I shoot to push something through. Many times, if we’re talking about a one-line change, it’s even easier. But at the end of the day, I have to draw a line and focus on priorities. This can irk some members not aware of everything I’m working on. That is a natural consequence.

Hopefully, as open source continues to grow, we can also mature people’s expectations between paid and un-paid support. Cheers!

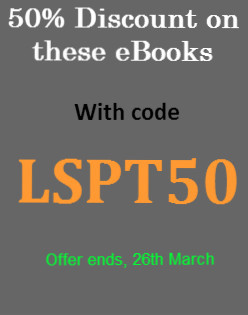

P.S. For a little while longer, there is a coupon code to Learning Spring Boot for 50% off (Python Testing Cookbook as well!)

The post #opensource is not a charity appeared first on Greetings Programs.

February 23, 2016

Spring Boot is still a gem…waiting to be discovered

Last week, I had the good fortune of speaking twice at the DevNexus conference, the 2nd largest Java conference in North America. It was awesome! Apart from being a total geek fest with international attendance, it was a great place to get a bigger picture of the state of the Java community.

A bunch of people turned up for my Intro to Spring Data where we coded up an employee management system from scratch inside of 45 minutes. You can see about 2/3 of the audience right here.

It was a LOT of fun. It was like pair programming on steroids when people helped me handle typos, etc. It was really fun illustrating how you don’t have to type a single query to get things off the ground.

What I found interesting was how a couple people paused me to ask questions about Spring Boot! I wasn’t expecting this, so it caught me off guard when asked “how big is the JAR file you’re building?” “How much code do you add to make that work?” “How much code is added to support your embedded container?”

Something I tackled in Learning Spring Boot was showing people the shortest path to get up and running with a meaningful example. I didn’t shoot for contrived examples. What use is that? People often take code samples and use it as the basis for a real system. That’s exactly the audience I wrote for.

People want to write simple apps with simple pages leveraging simple data persistence. That is Spring Boot + Spring Data out of the running gate. Visit http://start.spring.io and get off the ground! (Incidentally, THIS is what my demo was about).

I was happy to point out that the JAR file I built contained a handful of libraries along with a little “glue code” to read JAR-within-a-JAR + autoconfiguration stuff. I also clarified that the bulk of the code is actually your application + Tomcat + Hibernate. The size of Boot’s autoconfiguration is nothing compared to all that. Compare that to the time and effort it takes to write deployment scripts, maintenance scripts, and whatever other glue you hand-write to deploy to an independent container. Spring Boot is a life saver in getting from concept to market.

It was fun to see at least one person in the audience jump to an answer before I could. Many in the audience were already enjoying Spring Boot, but it was fun to see someone else (who by the way came up to ask more questions afterward) discovering the gem of Spring Boot for the first time.

To see the glint in someone’s eye when they realize Java is actually cool. Well, that’s nothing short of amazing.

The post Spring Boot is still a gem…waiting to be discovered appeared first on Greetings Programs.

February 3, 2016

LVM + RAID1 = Perfect solution to upgrade woes

As said before, I’m rebuilding an old system and have run into sneaky issues. In trying to upgrade from Ubuntu 12.04 to 14.04, it ran out of disk space at the last minute, and retreated to the old install. Unfortunately, this broke its ability to boot.

Digging in, it looks like Grub 2 (the newer version) can’t install itself properly due to insufficient space at the beginning of the partition. Booting up from the other disk drives (from a different computer), I have dug in to solve the problem.

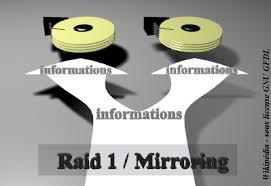

How do you repartition a disk drive used to build a RAID 1 array, that itself is hosting an LVM volume group?

It’s not as hard as you think!

A mirror RAID array means you ALWAYS have double the disk space needed. So…I failed half of my RAID array and removed one of the drives from the assembly. Then I wiped the partition table and built a new one…starting at a later cylinder.

POOF! More disk space instantly available at the beginning of the disk for GRUB2.

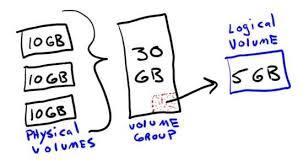

Now what?!? Creating a new RAID array partition in degraded mode, I add it to the LVM volume group as a new physical volume.

Then I launch LVM’s handy pvmove command, which moves everything off the old RAID array and onto the new one.

Several hours later, I can reduce the volume group and remove the older RAID array. With everything moved onto the newly resized partition, I can then destroy and rebuild the old disk with the same geometry as the new one, pair it up, and BAM! RAID array back in action, and let it sync back up.

This should line things up to do a GRUB2 chroot installation.

With LVM it’s easy to shrink and expand partitions, reclaim some spare space, and move stuff around. And you are nicely decoupled from the physical drives.

With RAID1, you have high reliability by mirroring. And as a side effect, you always have a spare disk on hand if you need to move data around. I once moved live MythTV video data off the system to reformat my video partition into xfs.

The post LVM + RAID1 = Perfect solution to upgrade woes appeared first on Greetings Programs.

February 2, 2016

Out with old and in with the new

I have been waiting a long time to resurrect an old friend of mine: my MythTV box. I built that machine ten years ago. (I’d show you the specs, but they’re locked away ON the machine in an antique mediawiki web server). It runs Root-on-LVM-on-Raid top to bottom (which, BTW, requires LILO).

It was a great project to build my own homebrew DVR. But with the advent of digital cable and EVERYTHING getting scrambled, those days are gone. So it’s sat in the corner for four years. FOUR YEARS. I’m reminded of this through periodic reports from CrashPlan.

I started to get this idea in my head that I could rebuild it with Mac OSX and make it a seamless backup server. Until I learned it was too old to support OSX. So it continued to sit, until I learned that I could install the right bits for it to speak “Apple”, and hence become a Time Machine capsule.

So here we go! I discovered that I needed a new VGA cable and power cord to hook up the monitor. After that came in, I booted it up…and almost cried. Almost.

As I logged in, I uncovered neat stuff and some old commands I hadn’t typed in years. But suffice it to say, it is now beginning its first distro upgrade (probably more to come after that), and when done, I’ll migrate it off of being a Mythbuntu distro and instead pick mainline Ubuntu (based on GNOME).

Once that is done, I hope to install Docker so I can spin up the services needed (like netatalk) much faster and tap its ability to provide an additional layer of home support for both my and my wife’s Mac laptops.

The post Out with old and in with the new appeared first on Greetings Programs.

January 25, 2016

Book Report: Area 51 by Bob Mayer

As indicated before, I started reading break away or debut novels by prominent authors last year. And here I am to deliver another book report!

Area 51 – Bob Mayer

Bob Mayer was one of the speakers at last year’s Clarksville Writer’s Conference. He was hilarious, gung ho, maybe a tad bombastic (retired Green Beret), and a best-selling author who had no hesitation bragging that he makes about $1000/day with his trove of published novels.

Like or hate his personality, he has succeeded so I wanted to read one of his first works. It turns out, this novel was released under the pen name “Robert Doherty” through classic channels. He has since gotten the IP rights for all these past novels reverted back to him, a business move worthy of respect, and moved on to e-books.

Back to the story. It really is pretty neat. The writing is crisp, the dialog cool. I kept turning page after page, wanting to know what happens. I also had an inbuilt curiosity as to what this author would do. I have seen TV shows set in Area 51 like Seven Days, Stargate: SG-1 (based near Area 51 and steeped in similar military conspiracy), and other movies.

There was a bit of investigative journalism gone wrong combined with other historical legends. I must admit that part (won’t give it away!) really whet my appetite.

Bob Mayer indeed knows how to write. He knows how to make you turn the pages. I think I spent 3-4 days tops reading this book. I’ll confess it didn’t match my hunger in reading the debut Jack Reacher novel KILLING FLOOR. But then again, I’m finding it hard to spot the next novel that will compete on that level.

I’ll shoot straight with you on this: it wasn’t as hard to move to another novel by another author when I finished as it was for certain other novels. There were other series novels I read last year that made it hard to stop and move on instead of continuing the series. This one wasn’t the same. Will I ever go back and read more of Bob Mayer’s books?

Maybe, maybe not. I have read some of his non-fiction books on writing craft, so in a sense, the man has already scored additional sales. It takes a top notch story with top notch characters and top notch writing to score that with me, and Jack Reacher has made me picky. Don’t take it as a knock.

If you like SciFi and military conspiracies, you’ll find this book most entertaining.

Happy reading!

The post Book Report: Area 51 by Bob Mayer appeared first on Greetings Programs.

January 18, 2016

Book Report: The Andromeda Strain by Michael Crichton

Over the past year, I have been on a bit of a reading binge. I got this idea at the 2015 Clarksville Writer’s Conference to read the debut novel of top notch authors. Instead of reading a series or stack of novels by one author, I’ve been jumping from author to author, looking for a cross section of writing styles, views on things, and varied tastes.

This is my first of many book reports, so without further ado….

The Andromeda Strain – Michael Crichton

There was a movie by the same name released in 1971. As a kid, I had seen it a dozen times. Okay, maybe not that much, but anytime I spotted it, I had to stop what I was doing and watch it. It’s so cool, despite its dated look. When I learned, years later, that this was the break away novel (not debut) of the famous Harvard doctor Michael Crichton, it blew me away. I finally bit the bullet and read it last year.

A team of scientists battle a strange disease that threatens all of mankind. But instead of being loaded with cliches, the scientists battle it with real science. And they have real, believable issues that hamper their pursuit of a cure.

One scientist spots a key symptom early on that would result in a solution, but a strange, unexplainable incident causes him to forget this epiphany. Having seen the movie, I knew what happened. I won’t spoil it for you and tell you what it is, but suffice it to say that I have suffered the same in the past, and this connected with me on a personal level.

Michael Crichton has a strong basis in biological science with his medical education. He clearly shows preferences for the hard sciences as did Isaac Asimov. He takes things into the realm of “this may not exist today, but I believe it could in the future.”

The novel isn’t as dated as the movie. The scenes with the military sound realistic. I can visualize the parts in the labs where experiments are conducted. I may not be on top of medical research, so perhaps some of the stuff mentioned is ancient. But it gripped me. And it doesn’t slow down and bore you with research, but instead makes things exciting.

I was pleasantly surprised to learn that little was changed from novel to movie. The novel has an all-male set of characters, whereas the movie changed a key doctor to a woman, for the better. The actress delivers a superior performance as a sassy, knows-what-she-knows microbiologist. But the core story and the big wrinkles are all there. Makes me want to go and watch the movie, again.

The whole thing is cutely wrapped up as a government memo you are reading implying this event DID happen. I always enjoy little bits like that, and I hope you do as well.

Happy reading until my next book report!

The post Book Report: The Andromeda Strain by Michael Crichton appeared first on Greetings Programs.