David Weinberger's Blog, page 90

May 23, 2012

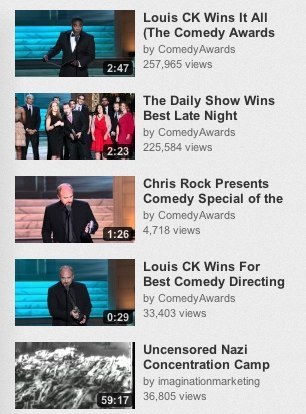

YouTube’s idea of personal relevance

Here’s the queue of videos YouTube suggested for me when I watched a Louis CK segment of the Comedy Awards:

Customer support (and collateral PR) at Reddit: How it’s done

A user who goes by the name Loyal2nes (NES = Nintendo gaming platform) had a problem: the game Civilization 4 kept crashing. So s/he posted about it on the game maker’s customer support site. Two days later, a customer support agent, Alexis L, replied that the problem is that Loyal2nes’s device only has 4096mb of RAM, whereas it needs at least 2 gigabytes. Unfortunately, Alexis did not understand that 4096 megabytes is the same as 4 gigabytes. Ooops.
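For anyone who wants to check the arithmetic the agent got wrong, here is a minimal sketch. It assumes the binary convention of 1024 MB per GB that RAM sizes use; the function name and variables are made up for illustration and have nothing to do with 2K's actual support tooling.

```python
MB_PER_GB = 1024  # assumption: binary convention, as used for RAM sizes


def meets_minimum(installed_mb: int, required_gb: float) -> bool:
    """Return True if installed RAM (given in MB) satisfies a minimum given in GB."""
    return installed_mb / MB_PER_GB >= required_gb


installed_mb = 4096  # figure quoted in the support ticket
required_gb = 2      # minimum the agent cited

print(installed_mb / MB_PER_GB)                  # 4.0
print(meets_minimum(installed_mb, required_gb))  # True
```

In other words, 4096 MB is 4 GB, twice the stated minimum, so the machine was comfortably within spec.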

Loyal2nes posted a screencapture of the exchange under a sarcastic headline, and opened up a thread about it at Reddit, where it climbed to the front page.

And the top-voted comment among the 460+ comments is from the Reddit user dahanese. Here’s her response:

Hey Loyal -

I’m Elizabeth Tobey and I’m the head of customer service – first off, I want to apologize because that’s a pretty embarrassing mistake. Secondly, I want to let you know I’m reopening your ticket and escalating it up. Chances are, I won’t get a response from the team who can help test out tonight and we’ll have a bit more back and forth in the coming days to try and troubleshoot the issue, but I promise I won’t tell you 4096MB is under spec and close your ticket.

Let me know if you have more questions now (although we can use the Support system and not reddit if you want!)

-e.

It doesn’t end there, though. Elizabeth stays with the thread as it expands and diverges. She’s frank, funny, and, as the thread continues, makes it clear that she’s not an interloper at Reddit. In fact, she’s been a Redditor (participant) for a while, participating in the threads that interest her. Often those threads are about gaming, but she also comments on the serendipitous topics that make Reddit so much fun.

So, what’s so right about how Elizabeth handled this?

Her reply was frank, helpful, non-defensive, and understood the customer’s point of view

She identified herself by name and position

She exhibited a genuine interest in the overall thread, not simply in patching up a problem

She was speaking for 2K but very clearly also as herself and in her own voice

She spoke in a way that did not just serve her employer but, more importantly, served the conversation

She was already a member of the community — an enabler for the rest of this list

The only thing that could have made this a better example of how customer support and public relations are changing would be if Elizabeth were not the head of Customer Support but were an empowered customer support rep. But all the other main themes are there. Clear as day.

May 22, 2012

Documents: Dead or grizzled survivors?

My friend Frank Gilbane has unearthed an issue of my old e-zine from 1998 in which I proposed that documents are dead, and in which he counter-proposed that they are not. This morning Frank writes: “I was gratified to find that I still agree with my 1998-self, and will check with David to see whether he is the same self he was.”

I will acknowledge that there is one itsy-bitsy way in which Frank was right and I was wrong: Documents are not dead. And it’s also undeniably the case that Web sites have not taken over from them. But I do think I was sort of right about some points in that post.

So, we still write and read documents. Just today, for example, I was at a contentious meeting of a task force at which we debated what the structure of our final report should be. The finality of the document is serving as a forcing function for getting us literally on the same page. It’s not a very elegant mechanism, but neither is a 15-pound sledge hammer, yet it’s sometimes the tool for the job.

But, we write and read old-style documents in a context saturated with documents that are more like the Web sites my old post describes. Indeed, the document the task force is working on is a Google Doc where we are tussling by writing (and over-writing) collaboratively, using the built-in, minimal chatting function. Not a lot like an old document…until the moment we have to declare it done and settled. It lives once we pronounce it dead, so to speak.

And the task force is pulling into our report material posted on the Web site we created for the project. The one with drafts and plenty of places for people to comment.

In 1998 blogging wasn’t yet a thing, and a whole bunch of old style document work has moved onto the Web — and has taken up webby characteristics — in that form. The task force’s web site uses blogging software. (Actually, I think it may not. But it could have.)

Then there are the streams of tweets that match some of the characteristics my old post describes as the future of documents. And the rise of wikis that are never fully done. And the wild aggregation and re-aggregation of content. And RSS feeds. Etc. etc.

So, no, documents live. But they are surrounded by an ecosystem that is overflowing with variants on documents that have the characteristics my old post pointed to. I was wrong about the death of documents, but not so wrong about the direction we were headed in.

Still, let me be clear: I was wrong.

Happy, Frank? :)

May 21, 2012

Will tablets always make us non-social consumers?

I know that tablets these days are “lean back” devices on which we “consume” “content.” (“When Life Becomes All Scare Quotes: ‘Film’ at 11”) But I keep hoping that that’s because they’re at the beginning of their tech curve.

After all, we’ve shown pretty convincingly over the past fifteen years that if you lower the barriers sufficiently, we will flood the ecosystem with what we want to say, draw, animate, video, carve, etc. Tablets raise those barriers significantly: I do much less typing and even less linking when I’m using my tablet (a Motorola Xoom, by the way — love it). But that’s because typing on a virtual keyboard is a pain in the butt.

I thus think (= hope) that it’s a mistake to extrapolate from today’s crappy input systems on tablets to a future of tablet-based couch potatoes still watching Hollywood crap. We’re one innovation away from lowering the creativity hurdle on tablets. Maybe it’ll be a truly responsive keyboard. Or something that translates sub-vocalizations into text (because I’m too embarrassed to dictate into my tablet while in public places). Or, well, something.

The fact that we’re not sharing nearly as much when we use a tablet is evidence of a design flaw in tablets.

I hope.

May 20, 2012

Lion fixed my SuperDrive. (Alternative title: Snow Leopard broke my SuperDrive)

Yesterday Disk Utility told me to restart my Mac from a boot disk and run the disk repair function (= Disk Utility). Fine. Except I was unable to boot from any of my three Mac boot disks (including the original) whether they were in my laptop’s SuperDrive (= Apple’s plain old DVD drive) or in a USB-connected DVD drive. The system would notice the DVD when asked to look for boot devices (= hold down the Option key when starting up), but froze after I clicked on the DVD (= no change in the screen after 30 mins).

So, what the hell, I installed Lion, which I had been hoping to avoid (= my pathetic resistance to Apple’s creeping Big Brotherism). Thanks to the generosity of Guillaume Gète, I downloaded Lion DiskMaker, followed the simple instructions (= re-downloaded Lion, all part of Apple’s making things hard by making them easy program), and now have a Lion boot disk. I was able to boot from it and fix my hard drive.

The whole episode was so reminiscent of why I left Windows (= Windows 7 looks pretty good these days).

May 18, 2012

[eim] The actual order of the Top Ten

Rob Burnett, executive producer of Late Night with David Letterman, is finishing up five hours of IAMA at Reddit, and 27 seconds ago posted a response to the question “Why is number 5 always the funniest out of the top 10?” What a dumb question! It’s always been obvious to me that #2 is the funniest.

And, well, I don’t mean to brag, but I’m right and gregorkafka (if that’s his real name) is wrong. Here’s Rob’s response to the question:

Don’t get me started. Every headwriter has their own approach to the Top 10. Here was mine:

10 Funny, but also straight forward. Reinforce the topic.

9 Medium strength. Start with two laughs. Get a tailwind.

8 Can be a little experimental. Maybe not everyone gets it, but ok.

7 Back on track. Something medium.

6 Crowd pleaser. One that will get applause. Will help bridge the first panel to the second.

5 Coming off #6, time to take a chance.

4 Starting to land the plane. Gotta be solid.

3 For me always the second funniest one you got.

2 Funniest one you have.

1 Funniest one that is short so the band doesn’t play over it.

I always tried to never give Dave two in a row that didn’t get a laugh. Of course you want all 10 to be killer, but you don’t always have that going in.

Number 2! We’re Number 2!

May 16, 2012

[2b2k] Peter Galison on The Collective Author

Harvard professor Peter Galison (he’s actually one of only 24 University Professors, a special honor) is opening a conference on author attribution in the digital age.

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people.

He points to the vast increase in the number of physicists involved in an experiment, some of which have 3,000 people working on them. This transforms the role of experiments and how physicists relate to one another. “When CERN says in a couple of months that ‘We’ve found the Higgs particle,’ who is the we?”

He says that there has been a “pseudo-I”: A group that functions under the name of a single author. A generation or two ago this was common: “The Alvarez Group,” “Thorndike Group,” etc. This is like when the works of a Rembrandt would in fact come from his studio. But there’s also “The Collective Group”: a group that functions without that name — often without even a single lead institution. This requires “complex internal regulation, governance, collective responsibility, and novel ways of attributing credit.” So, over the past decades physicists have been asked very fundamental questions about how they want to govern. Those 3,000 people have never all met one another; they’re not even in the same country. So, do they stop the accelerator because of the results from one group? Or, when CERN scientists found data suggesting faster than light neutrinos, the team was not unanimous about publishing those results. When the results were reversed, the entire team suffered some reputational damage. “So, the stakes are very high about how these governance, decision-making, and attribution questions get decided.”

He looks back to the 1960s. There were large bubble chambers kept above their boiling point but under pressure. You’d get beautiful images of particles, and these were the iconic images of physics. But these experiments were at a new, industrial scale for physics. After an explosion in 1965, the labs were put under industrial rules and processes. In 1967 Alan Thorndike at Brookhaven responded to these changes in the ethos of being an experimenter. Rarely is the experimenter a single individual, he said. He is a composite. “He might be 3, 5 or 8, possibly as many as 10, 20, or more.” He “may be spread around geographically…He may be ephemeral…He is a social phenomenon, varied in form and impossible to define precisely.” But he certainly is not (said Thorndike) a “cloistered scientist working in isolation at his laboratory bench.” The thing that is thinking is a “composite entity.” The tasks are not partitioned in simple ways, the way contractors working on a house partition their tasks. Thorndike is talking about tasks in which “the cognition itself does not occur in one skull.”

By 1983, physicists were colliding beams that moved particles out in all directions. Bigger equipment. More particles. More complexity. Now instead of a dozen or two participants, you have 150 or so. Questions arose about what an author is. In July 1988 one of the Stanford collaborators wrote an internal memo saying that all collaborators ought to be listed as authors alphabetically since “our first priority should be the coherence of the group and the de facto recognition that contributions to a piece of physics are made by all collaborators in different ways.” They decided on a rule that avoided the nightmare of trying to give primacy to some. The memo continues: “For physics papers, all physicist members of the collaboration are authors. In addition, the first published paper should also include the engineers.” [Wolowitz! :)]

In the 1990s rules of authorship got more specific. He points to a particular list of seven very specific rules. “It was a big battle.”

In 1997, when you get to projects as large as ATLAS at CERN, the author count goes up to 2,500. This makes it “harder to evaluate the individual contribution when comparing with other fields in science,” according to a report at the time. With experiments of this size, says Peter, the experimenters are the best source of the review of the results.

Conundrums of Authorship: It’s a community and you’re trying to keep it coherent. “You have to keep things from falling apart” along institutional or disciplinary grounds. E.g., the weak neutral current experiment. The collaborators were divided about whether there were such things. They were mockingly accused of proposing “alternating weak neutral currents,” and this cost them reputationally. But, trying to make these experiments speak in one voice can come at a cost. E.g., suppose 1,900 collaborators want to publish, but 600 don’t. If they speak in one voice, that suppresses dissent.

Then there’s also the question of the “identity of physicists while crediting mechanical, cryogenic, electrical engineers, and how to balance with builders and analysts.” E.g., analysts have sometimes claimed credit because they were the first ones to perceive the truth in the data, while others say that the analysts were just dealing with the “icing.”

Peter ends by saying: These questions go down to our understanding of the very nature of science.

Q: What’s the answer?

A: It’s different in different sciences, each of which has its own culture. Some of these cultures are still emerging. It will not be solved once and for all. We should use those cultures to see what part of evaluations are done inside the culture, and which depend on external review. As I said, in many cases the most serious review is done inside where you have access to all the data, the backups, etc. Figuring out how to leverage those sort of reviews could help to provide credit when it’s time to promote people. The question of credit between scientists and engineers/technicians has been debated for hundreds of years. I think we’ve begun to shed some of our class anxiety, i.e., the assumption that hand work is not equivalent to head work, etc. A few years ago, some physicists would say that nanotech is engineering, not science; you don’t hear that so much any more. When a Nobel prize in 1983 went to an engineer, it was a harbinger.

Q: Have other scientists learned from the high energy physicists about this?

A: Yes. There are different models. Some big science gets assimilated to a culture that is more like a big engineering process. E.g., there’s no public awareness of the lead designers of the 747 we’ve been flying for 50 years, whereas we know the directors of Hollywood films. Authorship is something we decide. That the 747 has no author but Hunger Games does was not decreed by Heaven. Big plasma physics is treated more like industry, in part because it’s conducted within a secure facility. The astronomers have done many admirable things. I was on a prize committee that gave the award to a group because it was a collective activity. Astronomers have been great about distributing data. There’s Galaxy Zoo, and some “zookeepers” have been credited as authors on some papers.

Q: The credits are getting longer on movies as the specializations grow. It’s a similar problem. They tell you who did what in each category. In high energy physics, scientists see becoming too specialized as a bad thing.

A: In the movies many different roles are recognized. And there are questions of distribution of profits, which is not so analogous to physics experiments. Physicists want to think of themselves as physicists, not as sub-specialists. If you are identified as, for example, the person who wrote the Monte Carlo, people may think that you’re “just a coder” and write you off. The first Ph.D. in physics submitted at Harvard was on the Bohr model; the student was told that it was fine but he had to do an experiment because theoretical physics might be great for Europe but not for the US. It’s naive to think that physicists are Da Vincis who do everything; the idea of what counts as being a physicist is changing, and that’s a good thing.

[I wanted to ask if (assuming what may not be true) the Internet leads to more of the internal work being done visibly in public, might this change some of the governance since it will be clearer that there is diversity and disagreement within a healthy network of experimenters? Anyway, that was a great talk.]

May 14, 2012

Goodies from Wolfram

Some wonderfully interesting stuff from Stephen Wolfram today.

Here’s his Reddit IAMA.

A post about what’s become of a New Kind of Science in the past ten years. And a part two, about reactions to NKS.

And here’s a post from a couple of months ago that I missed that is, well, amazing. All I’ll say is that it’s about “personal analytics.”

May 13, 2012

[2b2k] The Net as paradigm

Edward Burman recently sent me a very interesting email in response to my article about the 50th anniversary of Thomas Kuhn’s The Structure of Scientific Revolutions. So I bought his 2003 book Shift!: The Unfolding Internet – Hype, Hope and History (hint: If you buy it from Amazon, check the non-Amazon sellers listed there) which arrived while I was away this week. The book is not very long — 50,000 words or so — but it’s dense with ideas. For example, Edward argues in passing that the Net exploits already-existing trends toward globalization, rather than leading the way to it; he even has a couple of pages on Heidegger’s thinking about the nature of communication. It’s a rich book.

Shift! applies The Structure of Scientific Revolutions to the Internet revolution, wondering what the Internet paradigm will be. The chapters that go through the history of failed attempts to understand the Net — the “pre-paradigms” — are fascinating. Much of Edward’s analysis of business’ inability to grasp the Net mirrors cluetrain‘s themes. (In fact, I had the authorial d-bag reaction of wishing he had referenced Cluetrain…until I realized that Edward probably had the same reaction to my later books which mirror ideas in Shift!) The book is strong in its presentation of Kuhn’s ideas, and has a deep sense of our cultural and philosophical history.

All that would be enough to bring me to recommend the book. But Edward admirably jumps in with a prediction about what the Internet paradigm will be:

“This…brings us to the new paradigm, which will condition our private and business lives as the twenty-first century evolves. It is a simple paradigm, and may be expressed in synthetic form in three simple words: ubiquitous invisible connectivity. That is to say, when the technologies, software and devices which enable global connectivity in real time become so ubiquitous that we are completely unaware of their presence…We are simply connected.” [p. 170]

It’s unfair to leave it there since the book then elaborates on this idea in very useful ways. For example, he talks about the concept of “e-business” as being a pre-paradigm, and the actual paradigm being “The network itself becomes the company,” which includes an erosion of hierarchy by networks. But because I’ve just written about Kuhn, I found myself particularly interested in the book’s overall argument that Kuhn gives us a way to understand the Internet. Is there an Internet paradigm shift?

There are two ways to take this.

First, is there a paradigm by which we will come to understand the Internet? Edward argues yes, we are rapidly settling into the paradigmatic understanding of the Net. In fact, he guesses that “the present revolution [will] be completed and the new paradigm of being [will] be in force” in “roughly five to eight years” [p. 175]. He sagely points to three main areas where he thinks there will be sufficient development to enable the new paradigm to take root: the rise of the mobile Internet, the development of productivity tools that “facilitate improvements in the supply chain” and marketing, and “the increased deployment of what have been termed social applications, involving education and the political sphere of national and local government.” [pp. 175-176] Not bad for 2003!

But I’d point to two ways, important to his argument, in which things have not turned out as Edward thought. First, the 5-8 years after the book came out were marked by a continuing series of disruptive Internet developments, including general purpose social networks, Wikipedia, e-books, crowdsourcing, YouTube, open access, open courseware, Khan Academy, etc. etc. I hope it’s obvious that I’m not criticizing Edward for not being prescient enough. The book is pretty much as smart as you can get about these things. My point is that the disruptions just keep coming. The Net is not yet settling down. So we have to ask: Is the Net going to enable continuous disruption and self-transformation? If so, will it be captured by a paradigm? (Or, as M. Night Shyamalan might put it, is disruption the paradigm?)

Second, after listing the three areas of development over the next 5-8 years, the book makes a claim central to the basic formulation of the new paradigm Edward sees emerging: “And, vitally, for thorough implementation [of the paradigm] the three strands must be invisible to the user: ubiquitous and invisible connectivity.” [p. 176] If the invisibility of the paradigm is required for its acceptance, then we are no closer to that event, for the Internet remains perhaps the single most evident aspect of our culture. No other cultural object is mentioned as many times in a single day’s newspaper. The Internet, and the three components the book points to, are more evident to us than ever. (The exception might be innovations in logistics and supply chain management; I’d say Internet marketing remains highly conspicuous.) We’ve never had a technology that so enabled innovation and creativity, but there may well come a time when we stop focusing so much cultural attention on the Internet. We are not close yet.

Even then, we may not end up with a single paradigm of the Internet. It’s really not clear to me that the attendees at ROFLcon have the same Net paradigm as less Internet-besotted youths. Maybe over time we will all settle into a single Internet paradigm, but maybe we won’t. And we might not because the forces that bring about Kuhnian paradigms are not at play when it comes to the Internet. Kuhnian paradigms triumph because disciplines come to us through institutions that accept some practices and ideas as good science; through textbooks that codify those ideas and practices; and through communities of professionals who train and certify the new scientists. The Net lacks all of that. Our understanding of the Net may thus be as diverse as our cultures and sub-cultures, rather than being as uniform and enforced as, say, genetics’ understanding of DNA is.

Second, is the Internet affecting what we might call the general paradigm of our age? Personally, I think the answer is yes, but I wouldn’t use Kuhn to explain this. I think what’s happening — and Edward agrees — is that we are reinterpreting our world through the lens of the Internet. We did this when clocks were invented and the world started to look like a mechanical clockwork. We did this when steam engines made society and then human motivation look like the action of pressures, governors, and ventings. We did this when telegraphs and then telephones made communication look like the encoding of messages passed through a medium. We understand our world through our technologies. I find (for example) Lewis Mumford more helpful here than Kuhn.

Now, it is certainly the case that reinterpreting our world in light of the Net requires us to interpret the Net in the first place. But I’m not convinced we need a Kuhnian paradigm for this. We just need a set of properties we think are central, and I think Edward and I agree that these properties include the abundant and loose connections, the lack of centralized control, the global reach, the ability of everyone (just about) to contribute, the messiness, the scale. That’s why you don’t have to agree about what constitutes a Kuhnian paradigm to find Shift! fascinating, for it helps illuminate the key question: How are the properties of the Internet becoming the properties we see in — or notice as missing from — the world outside the Internet?

Good book.

May 10, 2012

Awesome James Bridle

I am the lucky fellow who got to have dinner with James Bridle last night. I am a big fan of his brilliance and humor. And of James himself, of course.

I ran into him at the NEXT conference I was at in Berlin. His in fact was the only session I managed to get to. (My schedule got very busy all of a sudden.) And his talk was, well, brilliant. And funny. Two points stick out in particular. First, he talked about “code/spaces,” a notion from a book by Martin Dodge and Rob Kitchin. A code/space is an architectural space that shapes itself around the information processing that happens within it. For example, an airport terminal is designed around the computing processes that happen within it; the physical space doesn’t work without the information processes. James is in general fascinated by the Cartesian pituitary glands where the physical and the digital meet. (I am too, but I haven’t pursued it with James’ vigor or anything close to his literary-aesthetic sense.)

Second, James compared software development to fan fiction: People generally base their new ideas on twists on existing applications. Then he urged us to take it to the next level by thinking about software in terms of slash fiction: bringing together two different applications so that they can have hot monkey love, or at least form an innovative hybrid.

Then, at dinner, James told me about one of his earliest projects: a matchbox computer that learns to play “noughts and crosses” (i.e., tic-tac-toe). He explains this in a talk dedicated to upping the game when we use the word “awesome.” I promise you: This is an awesome talk. It’s all written out and well illustrated. Trust me. Awesome.