Jeff Sayre's Blog, page 3

September 17, 2011

Putting the Tech Back into Social Web

This article was originally part of the fifth installment of my smartup series. As I believe the message best fits in its own article bucket, I've placed it here instead.

I want to address an odd trend–although it's not yet clear if this actually is a trend. Over the past several months, I've heard similar statements from several unrelated Internet startups—the notion that they are not tech startups.

Instead of thinking of themselves as tech startups, they believe they have a higher calling, claiming to be some flavor of socially-focused company. This may be the result of more and more non-tech-oriented business people forming Internet-based startups, but whatever the cause, in my opinion, it must be nipped in the bud.

Now if I had heard that sentiment from two unrelated parties, I would not think much about it. But hearing that statement from several unrelated parties has made me pause and think.

If it Quacks Like a Duck

Were Facebook and Twitter tech startups? Of course. Were they also social startups? Yes to that question as well. At the early stages of your smartup, don't get too bogged down in mission semantics. Whatever label you wish to slap onto your smartup, whatever moniker gives you that warm fuzzy feeling, if you are building a platform that requires the Web-based or Mobile-based Internet–especially one that requires a big-data approach–then your smartup by its very nature is a tech-dependent company at its rock-bottom core.

As smartups are Internet-obligate endeavors, they must be firmly grounded in a tech core. But smartups are greater than the sum of their technologies.

Since smartups can often be classified as Social Web startups as well, the reliance on Internet technologies is even greater. What does this mean? It's essential that your smartup's engine properly models, captures, facilitates, and manages vast amounts of social interaction. That's accomplished in large part via your chosen and developed technologies.

This is one of the key differentiators between a startup and a smartup. Whereas a startup might not transcend its technology, a smartup recognizes that it is a tech startup plus a Social Web Engine. Social is built into the smartup stack. But even so, a smartup cannot divorce itself from the primacy of its foundational technology.

An Internet startup is tech at its core. Your smartup is also tech at its core. However else you fancy seeing it, and regardless of how you envision its future, all other facets of your smartup are either layers on top of or pieces integrated into the core tech platform.

This is the message of this article. Without its defining core technologies, your smartup cannot be any more than vaporware or an ephemeral dream. Without its defining core technologies, your smartup cannot become an engine of social change.

The Rise of the Data Civilization

In the conclusion of Stephen Wolfram's excellent article entitled Advance of the Data Civilization: A Timeline, he states that the "systematization of data and knowledge provides core infrastructure for the world." Technologies have evolved over time, increasing the rate of collection, processing, and dissemination of that data to help turn it into knowledge.

To our globally-connected and insatiably data-hungry community, in my view, the Internet is perhaps the most relevant class of innovation. The Internet is becoming not only the preferred repository of most of our data but also the accelerator of the systematization of data and knowledge that Wolfram discusses. Our civilization is more dependent on data today than ever before—and that dependence will continue to increase.

As humanity races toward the Internet of Things, data–and lots of it (big data)–will be a fundamental supporting sublayer to our everyday lives. The Internet is becoming the platform on which our society, culture, and economy depend. The Internet is an essential partner in much of our current and future innovations. Don't discount the importance of the Internet and its underlying technologies. Technology is at the core of our society's future and your smartup's success.

Technology as Platform, Engine, and Change Agent

All Internet-obligate companies have some type of a vision and mission, usually backed by a set of closely-held ideals that flavor their implementation of that vision. Whatever that vision may be, the fundamental foundation of any smartup is its technological platform. But as you'll discover in the Layers of the Smartup Stack article, the platform does encompass more than just core code technology.

The technological platform, though, is at the center of the smartup stack; it is the innermost layer. Why is this the case? Because technology is the enabler of the wonderful and fantastic vision your smartup has for the world. Your smartup plans to leverage the power, reach, and socially-transmutational forces of the Internet. To do so requires that you envelop your vision with those technologies that can help bring your vision to fruition.

Whereas it is fabulous that you want to change the world, your Internet-obligate company mandates a technological base. Make sure that base is as strong as it can be. Architect it properly and build it correctly from the start.

Don't let some branding game cloud your judgement about the key components to your smartup's future. Remember that your company is at the startup stage. It is not at the growth to maturity stage. You are building the foundation of your vision—a vision that should indisputably be much greater than its technological underpinnings and will be if you do it right. But in order to get to that next stage, you need to come to terms with the seeds of your humble beginnings. There will be plenty of time to expand your focus, to embrace your greater ideals.

A Story About Placing Too Low a Value on Tech

In its earliest stages, a smartup needs technical vision, leadership, and a strong, core smartup engineering team. This cannot be achieved via consultants or outside help. The expertise must be internal to your smartup.

To be a successful smartup, you cannot settle for substandard design or mediocre construction, thinking that you can always retrofit, remodel, or augment your technological platform later. Although you can find stories of companies who did just that, they are the exception and not the norm. They should not be deemed as the virtuous model—unless your goals are slanted toward quick profits and you place a lower value on your user community, or have little desire to create a symbiotic ecosystem.

To defend this point, I'll share with you the story of my brother. As a successful sales executive with a number of large telecom-focused companies, he shifted his sights to working with Internet startups. In his last two positions, the startups he was helping placed too low a value on the importance of technology. One of them used offshore, overseas help; the other used in-country contract help. The end results were the same.

Within a year or two of joining, both of these startups were in trouble primarily as a result of their failure to understand the fundamental importance of having high-quality, in-house technical expertise. The first startup was a failure as the quality of the product did not meet the requirements of the vision and the time to execute was too slow. The second was also a failure, even though they contracted local, in-country help from those who were considered experts in their field.

The reasons for failure might seem different in each of the above scenarios, but the heart of the problem is simple. Neither of the startups had an internal technical founder. Neither of the startups had a high-value, internal engineering team.

Why is this important? Only an internal, skilled technical team can fully appreciate the startup's vision. Only an internal, skilled technical team can fully understand which technologies need to be leveraged. A technical founder also has a broader understanding of the business climate, and is fully aligned with the company's vision, having helped craft it from the start. Outside technical help will never have the passion, drive, determination, motivation, and vested interest–both emotionally and financially–in seeing a startup's vision to fruition.

Another crucial reason to have a technical founder? With technology advancing at an accelerating rate, it's not practical to think that hiring outside consultants to keep you abreast of the constantly-changing competitive landscape with respect to your technology will ever be effective. You need someone internal to your team whose job it is to not only understand this changing competitive landscape, but also be able to adeptly leverage new innovations to forward your vision.

If your approach to building your company's tech platform is to contract out-of-company services–via cheap overseas code-cutting sweatshops, in-country consulting companies, or work-for-hire programmers–then you fail to comprehend the intrinsic value that technology plays in your success. Your approach is flawed and living in the past. It is a Web 1.0 and Web 2.0 attitude.

This approach, while often viewed by non-tech founders as an innovative, out-of-the-box solution to tight budgetary constraints, can often be a myopic, closed-minded attitude that is penny wise and pound foolish. The return on investment received by leveraging a seemingly less expensive technological approach upfront is often many orders of magnitude lower than that gained via properly utilizing higher-quality, in-house technical expertise.

The let's-use-cheap-programming-sources attitude is analogous to eating white bread versus wholegrain organic bread. Whereas consuming white bread may seem prudent as it costs you a lot less up front, you may end up paying for that mistake many times over down the road. It can literally be a fatal error in consumptive judgement.

A smartup realizes that it needs to invest its resources wisely. Although a calorie is a calorie–and a dollar is a dollar–the form in which you choose to ingest your calories is essential to good health. Don't set up your smartup for an early demise by allowing it to ingest poor-quality platform design and code execution.

Choose the Wheat, Skip the White

As my brother's story reveals, startups that seek to economize on tech investment upfront are in for a nasty surprise. His experience with these two startups is not unique; the odds of that are negligible. His experience is a powerful lesson and a salient warning. You get what you pay for.

Investing in talent is like investing in the stock market. If you make investment decisions primarily based on the face value (market value) of a given equity, you'll miss great opportunities. What you pay up front is not what matters. What you get in return for any investment should be your primary consideration and concern.

Whereas it is fabulous that you want to change the world, your Internet-obligate company mandates a technological base. Make sure that base is as strong as it can be. Architect it properly and build it correctly from the start.

Remember this one point if you fail to process anything else from this story. Programmers are a dime a dozen, good programmers cost more, but finding the talent capable of executing a bold, visionary idea is difficult. A smartup developer can never be outsourced.

I implore you, at your smartup's inception, do not relegate technology to a lesser position. Building a smartup requires focusing on the proper priorities in the proper sequence. While there will come a time when it is prudent to shift more focus to higher-level layers within the smartup stack, the technological platform has the highest priority in stage one.

Conclusion

It is clear that technology is integral to all Social Web platforms. As smartups are Internet-obligate endeavors, they must be firmly grounded in a tech core. But smartups are greater than the sum of their technologies. The fifth installment of my smartup series lays out the greater ecosystem vision that all startups should strive to embrace. Please read Building the Social Web: the Layers of the Smartup Stack.

September 8, 2011

Star Trek: The Next Production Frontier

Forty-five years ago on this same day and day of the week (Thursday, September 8, 1966), the first episode of Star Trek aired on NBC. The episode was entitled The Man Trap. So instead of penning a post about the Social Web or Smartups, I've decided to celebrate this important date in entertainment and science history. I want to share with you where I believe the Star Trek franchise must now boldly go.

As every Trekkie knows, the first Star Trek series was a flop in the eyes of the network executives. The series was canceled in its third season. But it struck a chord with viewers. A letter-writing campaign by fans was responsible for the network re-airing the series and for its eventual syndication. Through syndication and fan-driven Star Trek conventions, the ideals of the series lived on, spawning four more successful series and a growing list of Hollywood movies.

Star Trek struck a chord with viewers for a number of reasons. Through its interracial, intercultural, mixed gender, and mixed species crew, it sent the message that humanity could strive toward a greater ideal, that we would eventually overcome our social, political, and economic conflicts.

The original series (TOS) and the subsequent series in the franchise also sparked the imaginations of many young children and teenagers, helping them dream about science, technology, and the future. Today there are a number of prominent scientists who have stated that Star Trek was a primary reason they got interested in science and math.

But for the first time in almost two decades, there is not an actively-produced, airing Star Trek series. How can a new generation of viewers get inspired by the ideals and vision of the Trek universe? How should Gene Roddenberry's vision live on?

To Boldly Go

Whereas there seems to be renewed interest in the Hollywood side of the Trek franchise, a new Star Trek movie coming out every two or three years is not sufficient to keep fans satiated or to inspire new fans to get interested in math and science. There have been fan-created Trek series going for some time–one of them even attracting the participation of past TOS stars and screenwriters–but these productions cannot pump out the volume of annual episodes required to keep an audience engaged, to keep the vision of Roddenberry alive.

So what is the solution?

I believe that creating a single, new television-based Star Trek series is not in keeping with Gene Roddenberry's vision. As a futurist, Roddenberry would have been enthralled with the power of today's Web-based Internet.

Roddenberry would have reached out and embraced the Web, leveraging social media, crowdsourcing, crowdfunding, and cloud-based distribution. He surely would have recognized that with the new production toolkits and distribution channels, multiple teams of independent production companies could simultaneously leverage the power of the Web to expand and explore his vision.

Instead of a single, very-expensive-to-produce, television-based Star Trek series being aired at a time, imagine eight or ten cheaper-to-produce, independently-run Star Trek Web franchises running simultaneously. Imagine the richness and diversity of Roddenberry's vision blossoming in the frontiers of Web-based media production.

The Next Production Frontier

CBS Studios–the current copyright holder of the television side of the Star Trek franchise–needs to accept the changing face of media production. It needs to honor and respect Gene Roddenberry's creation and future-focused vision by putting in place a mechanism for the creation of independently-owned and -operated mini Star Trek Production Houses (STPH).

Note: What I'm proposing for the Star Trek franchise can apply equally to the Stargate franchise.

Here's how the STPH model would work:

Getting Started: An initial small licensing fee would be paid to CBS Studios for the rights to use the Star Trek name and body of work. An initial two-year license would cost a nominal amount: $10k. This would help the fledgling series get established without too much of its precious initial funding going to licensing. The small fee, while affordable, is still large enough that only serious production teams would be willing to pay it.

Licensing Fee Escalation: Starting with year three, licensing could switch to being annually renewable with an escalation of the fee to $25k. Year four would see the fee jump to $50k and year five would see the fee capped at a maximum $100k for that year and each subsequent year. By year three, either a series is doing well enough to afford a higher fee or it is ready to shut down. So, whereas the annual fees in year four and onward seem steep compared to the first three years, it is reasonable to assume that a successful series will have more than sufficient annual subscription revenue to easily afford these fees. The increased fees are also a thank you to CBS Studios for allowing young production companies to get up and running without a burdensome initial licensing fee.

Production Requirements: Part of the licensing agreement would establish minimum production requirements that ensure sufficient quality of production output. However, the minimum production requirements should not be a deal killer; they cannot require too expensive a production toolset; they cannot require that actors be Screen Actors Guild members nor that gaffers, lighting technicians (etcetera) be union. The Web-based production paradigm is much closer to guerilla, shoebox, garage-level production than Hollywood-level cinematic overkill. Requiring big-budget television, or worse, Hollywood-level production capabilities neither recognizes nor appreciates the agility with which Web-based productions must operate.

Production Quality: Whereas costs must be contained, and therefore old-school media production mindsets will not work in the fast-paced world of Web productions, there are a few essentials to help ensure sufficient production quality. A list of minimum equipment quality, facilities, and production capabilities would be spelled out in the licensing agreement. This list would cover:

* Cameras

* Studio space

* Sets, props, and costumes

* Scripts

* Post-production quality, such as VFX capabilities

* Website design and community requirements

* Episode formatting for Web broadcast

Organizational Structure: Each independent STPH must be a chartered corporate organization. In other words, it has to be a business and run like a business. It cannot be three guys in their garage making a fan flick.

Monetization: Each STPH would utilize crowdfunding to fund its series production. This could be done through a combination of funding techniques, but selling annual subscriptions to a series would be the primary revenue source for each STPH.

Profit Sharing: Profits would be shared 70/30 (STPH / CBS Studios). CBS Studios would receive a thirty-percent topline subscription revenue share and a thirty-percent net profit share on all other revenue streams (everything except subscription revenue), such as sales of merchandise, DVDs, conferences, on-set tours, premium Web member fees, etcetera.

Need for Profit: Each STPH Webseries' creative team and production company need to have sufficient motivation and profit opportunities. They need to have the ability to earn a respectable profit to fund future growth and improvements. A thirty-percent profit share with CBS Studios is more than generous and could add up to a respectable bonus revenue stream, all for just agreeing to license the Star Trek brand and let others do the rest of the work.

Creative Freedom: Whereas CBS Studios would approve each new licensee and the proposed Webseries' place in the Star Trek Universe (STU), they would not have creative control over a Webseries' production. The only control they would have is to refuse license renewal at the end of a licensing period or the ability to revoke a license midterm if other contractual obligations have not been met. The license agreement would have to safeguard the original creative team and STPH company so as to prevent license termination for the sole purpose of taking over a popular Webseries (see last bullet point). There would of course be a set of rules that each Webseries would have to follow regarding the expansion of the STU and also a set of production guidelines that should be followed. But Web-based new media projects cannot function under old-school, overly rigid, greatly politicized production policies and practices. In the world of new media there is no room for the old-school studio executives playing god. There is no room or allowance for script reviews and approval. Web-based cinema is a lean, quick-paced production environment. The reason it exists is to get away from the excesses and gross inefficiencies of old-school, traditional media. If CBS Studios wants to succeed in the new media Web world, it has to learn the new rules and change its ways of doing business.

Copyright: Each STPH webseries would have a joint copyright between CBS Studios and the STPH Webseries production company with all profits shared as agreed upon in the licensing agreement. The copyright and profit sharing would continue after series completion or termination.

Communication, Participation, Marketing: Regular communication would occur between each licensed STPH and CBS Studios. This would be facilitated via a special CBS Studios STPH Envoy. CBS Studios would help market each licensed STPH via promotion on StarTrek.com and other outlets.

Potential for Television Series: It may make sense for CBS Studios to directly nurture a select few STPH Webseries, providing them with additional funding, and maybe even turning them into full-fledged Star Trek TV series. This would require careful consideration of the impacts to the STPH company (owners, actors, production team, copyright issues, etcetera).
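To make the fee escalation described under Getting Started and Licensing Fee Escalation concrete, here is a minimal Python sketch of the schedule. The function names are hypothetical, and it assumes the $10k initial fee is paid once in year one and covers both of the first two years, which is one reading of the proposal.

```python
def licensing_fee(year: int) -> int:
    """Return the licensing fee in USD due in a given license year (1-based)."""
    if year < 1:
        raise ValueError("year must be 1 or greater")
    if year == 1:
        return 10_000   # initial two-year license (assumed to be paid once, up front)
    if year == 2:
        return 0        # assumed to be covered by the initial two-year license
    if year == 3:
        return 25_000   # first annually renewable year
    if year == 4:
        return 50_000
    return 100_000      # capped from year five onward


def cumulative_fees(years: int) -> int:
    """Total fees one STPH would pay over its first `years` license years."""
    return sum(licensing_fee(y) for y in range(1, years + 1))


if __name__ == "__main__":
    for year in range(1, 7):
        print(f"Year {year}: ${licensing_fee(year):,}")
    print(f"Total over five years: ${cumulative_fees(5):,}")
```

Under these assumptions, a single STPH would pay $185k in licensing fees over its first five years.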

The Web, Star Trek's Final Frontier?

Why should CBS Studios entertain this proposal? Besides being more than likely in keeping with Gene Roddenberry's vision, it could provide a nice yearly revenue stream.

It's realistic to project that annual income from all licensed STPH Webseries could approach $36m by the fourth year of this program. With ten active, licensed Star Trek webseries each paying $50k per licensing year, that is $500k in licensing fees alone.

The profit sharing arrangement offers the lion's share of the opportunity. Assuming each STPH Webseries has at a minimum 500k annual subscribers each paying $20 per year for the privilege of seeing the series, that would make a total of $10 million per series. Across ten series, that is $100 million, and CBS Studios' 30 percent share of it would bring in $30m per annum.

Furthermore, I guesstimate that each successful fourth-season webseries would have a minimum additional net revenue stream of $2 million per annum through merchandising and other avenues. Across ten series, a thirty-percent share of that means an additional $6 million per year to CBS Studios. That may not sound like much money to a mega-media company like CBS, but together with the subscription share it would be $36 million per year that CBS would not have otherwise.
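As a rough check of the arithmetic above, here is a minimal Python sketch of the year-four projection. The function and parameter names are hypothetical; the default figures are the ones used in this post (ten series, 500k subscribers at $20 each, $2 million in other net revenue per series, a 30 percent share, and a $50k year-four license fee).

```python
def cbs_annual_income(
    series_count: int = 10,
    subscribers_per_series: int = 500_000,
    subscription_price: int = 20,                 # USD per subscriber per year
    other_net_revenue_per_series: int = 2_000_000,
    cbs_share_percent: int = 30,
    license_fee_per_series: int = 50_000,         # year-four fee
) -> dict:
    """Project CBS Studios' annual income (USD) from all licensed webseries."""
    subscription_revenue = series_count * subscribers_per_series * subscription_price
    other_net_revenue = series_count * other_net_revenue_per_series

    # CBS Studios takes its percentage of both revenue pools.
    subscription_share = subscription_revenue * cbs_share_percent // 100
    other_share = other_net_revenue * cbs_share_percent // 100
    licensing = series_count * license_fee_per_series

    return {
        "subscription_share": subscription_share,
        "other_share": other_share,
        "licensing": licensing,
        "shares_only": subscription_share + other_share,
        "total": subscription_share + other_share + licensing,
    }


if __name__ == "__main__":
    for name, amount in cbs_annual_income().items():
        print(f"{name}: ${amount:,}")
```

With the default figures, the two revenue shares come to $30 million and $6 million, which gives the $36 million quoted above; adding the $500k in licensing fees brings the total to $36.5 million.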

The real payoff to CBS Studios may be in keeping Roddenberry's vision alive and bringing it into the future. With possibly a dozen independent mini Star Trek Production Houses producing hundreds of hours of Web-based programming, the franchise will be reinvigorated. Future television-based series, merchandising, and renewed syndication revenue from past television series could lead to a windfall profit for CBS Studios.

As we celebrate Star Trek's 45th anniversary, let's keep Gene Roddenberry's vision alive and boldly go into a new production frontier.

August 24, 2011

Building the Social Web: the Layers of the Smartup Stack

<Smartups Series Part 5 of 5>

As a Social Web architect and an open source advocate, I frequently write about, think about, and promote the notion and ideals of the Open and Social Web. My work in the areas of user-centric control (identity, privacy, rights, data portability), federated Social Web models, future-of-money projects, and W3C standards groups has shaped my views presented here.

Soon after publishing my 4-part smartup series (almost a year ago), I began to think about key parts of what has become this article. I've had bits and pieces of this article jotted down in various places. Over the past three months, the ideas have coalesced into a cohesive framework. With a recent and lengthy process of helping a potential smartup try to find its foundation, I've been motivated to assemble, clarify, and share my views on what I call the layers of the smartup stack.

If you've carefully read my previous installments in my smartup series you will have discovered–in part–the message that is expressed here. However, in my previous articles, the message was buried in lengthy verbiage, charts, and indirect references. This next installment in the series seeks to clearly present the framework of the smartup stack.

Smartups are Socially Transformative

Smartups look to operate beyond the stale disruptive technology mantra; the smartup vision is not simply a paradigm shift. Instead, smartups are best described as innovating at the intersection of technical, social, and cultural evolution. As such, well-thought-out and well-executed smartups are revolutionary entities.

One key differentiator between a startup and a smartup is that smartups recognize they are tech startups with a Social Web Engine. Nonetheless, smartups cannot divorce themselves from the primacy of their foundational technology.

The layers of the smartup stack embrace the uniqueness of each smartup while recognizing the interconnectedness of the greater community. No matter the grand vision of a given smartup, all smartups share the same DNA at their foundation. They are tech-reliant, Internet-based companies. To drive home this point, I must share some observations before getting into the details of the smartup stack.

If it Quacks Like a Duck

Before we explore the layers of the smartup stack in depth, I first want to address an odd trend–although it's not yet clear if this actually is a trend. Over the past several months, I've heard similar statements from several unrelated Internet startups—the notion that they are not tech startups.

Instead of thinking of themselves as tech startups, they believe they have a higher calling, claiming to be some flavor of socially-focused company. This may be the result of more and more non-tech-oriented business people forming Internet-based startups, but whatever the cause, in my opinion, it must be nipped in the bud.

Now if I had heard that sentiment from two unrelated parties, I would not think much about it. But hearing that statement from several unrelated parties has made me pause and think.

Were Facebook and Twitter tech startups? Of course. Were they also social startups? Yes to that question as well. At the early stages of your smartup, don't get too bogged down in mission semantics. Whatever label you wish to slap onto your smartup, whatever moniker gives you that warm fuzzy feeling, if you are building a platform that requires the Web-based or Mobile-based Internet–especially one that requires a big-data approach–then your smartup by its very nature is a tech-dependent company at its rock-bottom core.

Smartups are greater than the sum of their technologies. As we explore each additional layer of the smartup stack, greater emphasis is placed on the social, economic, and cultural frameworks.

Since smartups can often be classified as Social Web startups as well, the reliance on Internet technologies is even greater. What does this mean? It's essential that your smartup's engine properly models, captures, facilitates, and manages vast amounts of social interaction. That's accomplished in large part via your chosen and developed technologies.

This is one of the key differentiators between a startup and a smartup. Whereas a startup might not transcend its technology, a smartup recognizes that it is a tech startup plus a Social Web Engine. Social is built into the smartup stack. But even so, a smartup cannot divorce itself from the primacy of its foundational technology.

An Internet startup is tech at its core. Your smartup is also tech at its core. However else you fancy seeing it, and regardless of how you envision its future, all other facets of your smartup are either layers on top of or pieces integrated into the core tech platform.

This is the message of this article. Without its defining core technologies, your smartup cannot be any more than vaporware or an ephemeral dream. Without its defining core technologies, your smartup cannot become an engine of social change.

The Rise of the Data Civilization

In the conclusion of Stephen Wolfram's excellent article entitled Advance of the Data Civilization: A Timeline, he states that the "systematization of data and knowledge provides core infrastructure for the world." Technologies have evolved over time, increasing the rate of collection, processing, and dissemination of that data to help turn it into knowledge.

To our globally-connected and insatiably data-hungry community, in my view, the Internet is perhaps the most relevant class of innovation. The Internet is becoming not only the preferred repository of most of our data but also the accelerator of the systematization of data and knowledge that Wolfram discusses. Our civilization is more dependent on data today than ever before—and that dependence will continue to increase.

As humanity races toward the Internet of Things, data–and lots of it (big data)–will be a fundamental supporting sublayer to our everyday lives. The Internet is becoming the platform on which our society, culture, and economy depend. The Internet is an essential partner in much of our current and future innovations. Don't discount the importance of the Internet and its underlying technologies. Technology is at the core of our society's future and your smartup's success.

Technology as Platform, Engine, and Change Agent

All Internet-obligate companies have some type of a vision and mission, usually backed by a set of closely-held ideals that flavor their implementation of that vision. Whatever that vision may be, the fundamental foundation of any smartup is its technological platform. And as you will see in this article, the platform does encompass more than just core code technology.

The technological platform is the center, the innermost layer, of the smartup stack. Why is this the case? Because technology is the enabler of the wonderful and fantastic vision your smartup has for the world. Your smartup plans to leverage the power, reach, and socially-transmutational forces of the Internet. To do so requires that you envelop your vision with those technologies that can help bring your vision to fruition.

Whereas it is fabulous that you want to change the world, your Internet-obligate company mandates a technological base. Make sure that base is as strong as it can be. Architect it properly and build it correctly from the start.

Don't let some branding game cloud your judgement about the key components to your smartup's future. Remember that your company is at the startup stage. It is not at the growth to maturity stage. You are building the foundation of your vision—a vision that should indisputably be much greater than its technological underpinnings and will be if you do it right. But in order to get to that next stage, you need to come to terms with the seeds of your humble beginnings. There will be plenty of time to expand your focus, to embrace your greater ideals.

Whereas technology is at the center of the smartup stack, you will see in this article that smartups are greater than the sum of their technologies. As we explore each additional layer of the smartup stack, the focus shifts more and more to the outside. Greater emphasis is placed on the social, economic, and cultural frameworks. This will help integrate your vision into the real world. It will help bridge your metaspace creations with their meatspace participants.

A Story About Placing Too Low a Value on Tech

In its earliest stages, a smartup needs technical vision, leadership, and a strong, core smartup engineering team. This cannot be achieved via consultants or outside help. The expertise must be internal to your smartup.

To be a successful smartup, you cannot settle for substandard design or mediocre construction, thinking that you can always retrofit, remodel, or augment your technological platform later. Although you can find stories of companies who did just that, they are the exception and not the norm. They should not be deemed as the virtuous model—unless your goals are slanted toward quick profits and you place a lower value on your user community.

To defend this point, I'll share with you the story of my brother. As a successful sales executive with a number of large telecom-focused companies, he shifted his sights to working with Internet startups. In his last two positions, the startups he was helping placed too low a value on the importance of technology. One of them used offshore help; the other used in-country contract help. The end results were the same.

Within a year or two of joining, both of these startups were in trouble primarily as a result of their failure to understand the fundamental importance of having high-quality, in-house technical expertise. The first startup was a failure as the quality of the product did not meet the requirements of the vision and the time to execute was too slow. The second was also a failure, even though they contracted local, in-country help from those who were considered experts in their field.

The reasons for failure might seem different in each of the above scenarios, but the heart of the problem is simple. Neither of the startups had an internal technical founder. Neither of the startups had a high-value, internal engineering team.

Why is this important? Only an internal, skilled technical team can fully appreciate the startup's vision. Only an internal, skilled technical team can fully understand which technologies need to be leveraged. A technical founder also has a broader understanding of the business climate, and is fully aligned with the company's vision, having helped craft it from the start. Outside technical help will never have the passion, drive, determination, motivation, and vested interest–both emotionally and financially–in seeing a startup's vision to fruition.

Another crucial reason to have a technical founder? With technology advancing at an accelerating rate, it is not realistic to expect outside consultants to keep you abreast of the constantly-changing competitive landscape surrounding your technology. You need someone internal to your team whose job it is to not only understand this changing competitive landscape, but also be able to adeptly leverage new innovations to forward your vision.

If your approach to building your company's tech platform is to contract out-of-company services–via cheap overseas code-cutting sweatshops, in-country consulting companies, or work-for-hire programmers–then you fail to comprehend the intrinsic value that technology plays in your success. Your approach is flawed and living in the past. It is a Web 1.0 and Web 2.0 attitude.

This approach, while often viewed by non-tech founders as an innovative, out-of-the-box solution to tight budgetary constraints, can often be a myopic, closed-minded attitude that is penny wise and pound foolish. The return on investment received by leveraging a seemingly less expensive technological approach upfront is often many orders of magnitude lower than that gained via properly utilizing higher-quality, in-house technical expertise.

The let's-use-cheap-programming-sources attitude is analogous to eating white bread versus wholegrain organic bread. Whereas consuming white bread may seem prudent as it costs you a lot less up front, you may end up paying for that mistake many times over down the road. It can literally be a fatal error in consumptive judgement.

A smartup realizes that it needs to invest its resources wisely. Although a calorie is a calorie–and a dollar is a dollar–the form in which you choose to ingest your calories is essential to good health. Don't set up your smartup for an early demise by allowing it to ingest poor-quality platform design and code execution.

As my brother's story reveals, startups that seek to economize on tech investment upfront are in for a nasty surprise. His story with these two startups is not unique; the odds that it is an isolated case are vanishingly small. His experience is a powerful lesson and a salient warning. You get what you pay for.

Investing in talent is like investing in the stock market. If you make investment decisions primarily based on the price (market value) of a given equity, you'll miss great opportunities. What you pay up front is not what matters. What you get in return for any investment should be your primary consideration and concern.

Remember this one point if you fail to process anything else from this story. Programmers are a dime a dozen, good programmers cost more, but finding the talent capable of executing a bold, visionary idea is difficult. A smartup developer can never be outsourced.

I implore you, at your smartup's inception, do not relegate technology to a lesser position. Building a smartup requires focusing on the proper priorities in the proper sequence. While there will come a time when it is prudent to shift more focus to higher-level layers within the smartup stack, the technological platform has the highest priority in stage one.

The Layers of the Smartup Stack

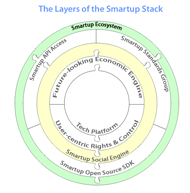

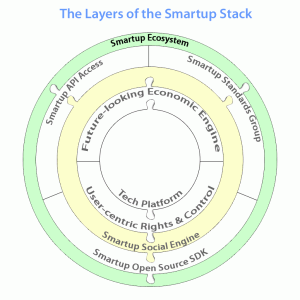

With a clear understanding of the key role that technology plays in all smartups, we can now explore each layer of the smartup stack. Layers can connote horizontal levels upon which other material is placed or stacked. But in the view presented here, layers are rings that surround and bind to any lower and higher concentric-ring partners.

It is practically impossible to singularly architect and build each of the smartup layers without regard to their immediately contiguous layers. However, I will present each layer as if it were a well-defined and self-sufficient entity. The reality is that at all stages of building out your smartup stack, the interconnections to and interdependencies on other layers (inner and outer) must be carefully explored and considered. This is yet another reason why your smartup must have in-house technological expertise from the start.

Smartups do not build software. Smartups create ecosystems. Like an ecological food web, your smartup can be viewed as an organism that is linked to and interdependent upon other organisms and system services. Many of these services are outside your smartup's immediate control. A smartup must architect its ecosystem to work in symbiotic harmony with the greater Web community.

To that end, a smartup leverages and relies upon open source tools and open Web standards. As we will discuss in the section about the outermost smartup stack layer, smartups also give back to the Open Web movement as best they can.

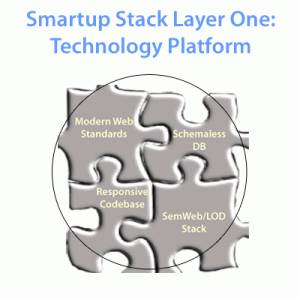

The Innermost Layer: the Technology Platform

As we've already spent considerable time above driving home the message that technology is at the very core of every Internet-obligate smartup, it should come as no surprise that the center of the smartup stack is the technology platform. There are four pieces that comprise the technology platform. As previous smartup articles discuss two of these pieces in depth, I will not present much additional detail about them.

Each piece of the tech platform layer relies on Open Source tools and standards wherever possible. Although a smartup creates its own technology in aggregate, it leverages code libraries, tools, and standards to help make the process of building out its platform quick and efficient.

At this stage you will be proportioning your smartup's time between product iteration (which means more coding), marketing your MVP, and customer development. Although you must find the proper balance between these three activities, the primary focus of this process is on building out your smartup's foundational technology platform.

Here are the four pieces of the tech platform:

Schemaless Backend

Semantic Web / LOD Stack

Responsive Codebase

Modern Web Standards

Schemaless Backend

I've written an entire smartup article on the virtues of NOSQL versus SQL, so I will not repeat anything here except to say that some smartups may need to use an RDBMS as well for part of their overall data warehousing needs. The main point is that smartups are big-data players and as such they need to utilize the best technology for modeling, capturing, and managing that data. NOSQL databases are, by and large, the preferred choice.
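To make the schemaless idea concrete, here is a minimal sketch in Python. It is not any particular NOSQL product; the in-memory `collection`, the `insert` and `find` helpers, and the sample records are all hypothetical names invented for illustration. The point it tries to show is simply that documents of different shapes can sit side by side without a predefined table schema.

```python
import json

# Hypothetical in-memory "collection": each record is a free-form document.
collection = []

def insert(doc):
    """Store a document as-is; no table schema is enforced."""
    collection.append(dict(doc))

def find(**criteria):
    """Return documents whose fields match every given criterion."""
    return [d for d in collection
            if all(d.get(key) == value for key, value in criteria.items())]

# Three documents with different sets of fields live in the same collection.
insert({"type": "post", "author": "alice", "text": "Hello"})
insert({"type": "post", "author": "bob", "text": "Hi", "tags": ["intro"]})
insert({"type": "follow", "author": "alice", "target": "bob"})

alice_docs = find(author="alice")
print(json.dumps(alice_docs, indent=2))
```

A real document store adds persistence, indexing, and distribution on top of this, which is where the big-data benefits come from.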

Semantic Web / LOD Stack

I've also written an entire smartup article on the Web of Data. Suffice it to say that Semantic Web technologies, which some prefer to refer to as Linked Data technologies, enable the linking of data and allow for the serendipitous discovery of new connections with other datasets.

Smartups understand the value of and participate in the Web of Data. Smartups realize that data is the unit of exchange on the Web, not documents. Instead of Hyperlinks being the engine of exchange, it is Hyperdata. Data is the energy, the food, exchanged between participants in the Social Web. Semantic Web technologies facilitate the flow of information between "habitats", between communities.
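As a rough illustration of how linked data lets connections surface across datasets, the sketch below models two independently published datasets as sets of (subject, predicate, object) triples. Everything in it is an assumption made for the example: the `example.org` identifiers, the `knows` and `authorOf` predicates, and the `objects` helper. Because both datasets use the same identifier for Bob, merging them reveals a link that neither dataset contains on its own.

```python
# Two hypothetical datasets from different communities, each expressed
# as (subject, predicate, object) triples with shared identifiers.
dataset_a = {
    ("http://example.org/people/alice", "knows", "http://example.org/people/bob"),
}
dataset_b = {
    ("http://example.org/people/bob", "authorOf", "http://example.org/posts/42"),
}

def objects(graph, subject, predicate):
    """Return every object linked from subject by predicate."""
    return {o for s, p, o in graph if s == subject and p == predicate}

# Merging is just a set union, because identifiers are global.
merged = dataset_a | dataset_b

alice = "http://example.org/people/alice"
posts_by_friends = {
    post
    for friend in objects(merged, alice, "knows")
    for post in objects(merged, friend, "authorOf")
}
```

Here `posts_by_friends` is only computable after the merge, which is the kind of cross-dataset discovery the paragraph above describes.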

Responsive Codebase

This is the most generic-sounding piece in the tech platform layer. I will not delve too much into this piece of the tech platform layer as it deserves its own full-length article (perhaps the sixth installment in my smartup series).

There is not one preferred or recommended framework, language construct, or codebase that all smartups use. Different smartups use different code-creating tools. They pick those that they are most comfortable with and that serve their particular tech needs. However, there are some clear trends and, therefore, advice that can be offered to each smartup.

The broadest bit of advice is that Internet-coding technologies are evolving to catch up with and meet the needs of a more data-intensive world. Although a smartup CTO should use tools with which he or she feels comfortable, that does not mean that they can be complacent, that they should not spend time exploring and learning some of the newer options.

For instance, a smartup will typically choose an object-oriented coding style over a procedural coding style. But that does not mean that all smartups have to code in PHP, Python, or Ruby. There are some promising, new, convention-breaking language platforms that are the current rage in the Web dev world. One of these is Node.js, a highly-scalable, high-concurrency, event-driven JavaScript runtime.
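The event-driven, high-concurrency model mentioned above can be sketched without the platform itself. The example below uses Python's standard `asyncio` module as a stand-in, not the JavaScript platform named in the text, and the `handle_request` function and its delays are hypothetical. It shows a single thread interleaving two simulated requests instead of blocking on each one in turn.

```python
import asyncio

async def handle_request(name, delay):
    """Simulate a request that waits on non-blocking I/O."""
    await asyncio.sleep(delay)  # yields control to the event loop while waiting
    return f"{name} done"

async def main():
    # Both handlers run concurrently on one thread; gather returns
    # the results in the order the handlers were passed in.
    return await asyncio.gather(
        handle_request("a", 0.02),
        handle_request("b", 0.01),
    )

results = asyncio.run(main())
```

The total wait is roughly the longest single delay rather than the sum of the delays, which is the property that makes this style attractive for I/O-heavy Web services.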

Another major smartup trend that epitomizes the Responsive Codebase mantra is moving as much of the processing away from the server side as possible (the thick-server approach of Web 1.0 and 2.0). The focus is on creating what are referred to as fat- or thick-client applications. In other words, the browser or mobile device handles considerably more of the processing, relying a lot less on the server.

Another trend is the use of light-weight code libraries. When properly utilized, they allow a smartup to react more quickly and be nimble in their coding practices. As an example, one light-weight code library that my newest smartup uses is JSON-LD. It brilliantly facilitates cross-piece integration and as such can be categorized as falling into both pieces two and three in the tech platform layer.
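For readers who have not seen it, JSON-LD is specified as a JSON-based format for Linked Data, with libraries that implement it. The sketch below builds a small JSON-LD document as a Python dictionary. The people and `example.org` identifiers are made up, and `term_iri` is a deliberately naive helper written for this example, not part of any JSON-LD library or a substitute for a real JSON-LD processor.

```python
import json

# A small JSON-LD document. The @context maps short keys to full IRIs.
# The people and example.org IRIs are invented for illustration.
doc = {
    "@context": {
        "name": "http://xmlns.com/foaf/0.1/name",
        "knows": {"@id": "http://xmlns.com/foaf/0.1/knows", "@type": "@id"},
    },
    "@id": "http://example.org/people/alice",
    "name": "Alice",
    "knows": "http://example.org/people/bob",
}

def term_iri(document, term):
    """Naive lookup of the IRI a term maps to (not a full JSON-LD processor)."""
    definition = document["@context"][term]
    return definition["@id"] if isinstance(definition, dict) else definition

# The document is still plain JSON, so any JSON parser can read it.
round_tripped = json.loads(json.dumps(doc))
```

This is why it straddles pieces two and three: application code treats it as ordinary JSON, while the `@context` gives each key a globally meaningful identifier.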

A final smartup trend is preferred data formats. According to a recent report, 55 percent of all new APIs have support for JSON and a staggering 20 percent of new APIs support only JSON. This demonstrates the quickly-growing trend of utilizing JSON as a preferred data format (see slides 22 & 23). It also indicates that for data interchange, the reliance on XML is fading fast.
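One practical reason for the shift can be seen by serializing the same record both ways. The sketch below uses only Python's standard library; the `record` and the `post` element name are hypothetical. It illustrates one difference rather than making a full comparison: JSON carries basic types such as numbers natively, while a simple XML encoding returns everything as text unless extra schema machinery is added.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical record an API might return.
record = {"id": 42, "author": "alice", "text": "Hello"}

# JSON: one call each way, and the integer survives the round trip.
as_json = json.dumps(record)
from_json = json.loads(as_json)

# XML: build an element per field, then read the fields back.
root = ET.Element("post")
for key, value in record.items():
    ET.SubElement(root, key).text = str(value)
as_xml = ET.tostring(root, encoding="unicode")
from_xml = {child.tag: child.text for child in ET.fromstring(as_xml)}
```

After the round trip, `from_json["id"]` is still an integer, whereas `from_xml["id"]` is the string `"42"` and has to be converted by the consumer.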

Modern Web Standards

Smartups support, adopt, and utilize Web standards. HTML5 and CSS3 are currently two of the most important Web standards. There are of course other standards, whose utility will vary among smartups, but these two should be utilized by all smartups.

The Second Layer: User-control and Economic Engine

The next layer of the smartup stack contains two sublayers that interconnect via their direct connections with the technology platform. Once again, this illustrates the importance of the technology platform as being a fundamental, foundational layer to all smartups.

These two sublayers are:

User-centric Rights & Control

Future-looking Economic Engine

User-centric Rights & Control

As I have written much about user-centric control over identity, privacy, usage rights, and data portability in the past, I will gloss over most of the details. If you're interested in learning more about my viewpoints on these topics, simply search my website.

All smartups believe in and understand the importance of returning as much control over data as possible back to the users. They realize that it not only makes sense from the standpoint of being good social stewards, but also makes good business sense.

With support from the smartup's tech platform, users have significant power over each piece of data that they contribute, that they generate. Further support for users' rights and control can be provided through novel, user-friendly legal contracts.

Future-looking Economic Engine

I've been interested in future-of-money projects and theories for some time, particularly in how technology, specifically Internet tech, is leading to a revolution in how value is exchanged. This is why I am a charter member of the newly-announced W3C Web Payments Standards Community Group.

I believe that new micropayment frameworks and economic models are essential to not only the healthy growth and long-term viability of a truly Social Web, but also to our greater global society. The future of money and of economic self reliance rests in the emergent properties of the social-driven superorganism. Centrally-controlled currencies will eventually give way to decentralized currencies and instead of tightly controlled and regulated markets, self-regulation via distributed command and control processes will become the norm.

Smartups are on the bleeding edge of this economic revolution. Smartups thus play an important part in helping to push new payment frameworks and economic models. They are intimately involved in evolving economic models and understanding the need for a universal payment mechanism for the Web—a mechanism that will facilitate the proliferation of alternative currencies, friction-less payments, crowdfunding, and general value exchange.

One payment framework that my smartup will be leveraging is PaySwarm. It is described as, "an open standard that enables web browsers and web devices to perform micropayments and copyright-aware, peer-to-peer digital media distribution." I believe that PaySwarm can become one of the central pillars to any smartup's future-looking economic engine.

The Third Layer: the Smartup Social Engine

This layer integrates with the innermost two layers of the smartup stack. The focus is more on the user interface (UI) and the user experience (UX).

When combined with the first two layers, this layer comprises what can best be described as the Smartup's Social Engine. It is the internal platform that contains any intellectual property (IP). It is the fully-functioning application that provides the smartup's unique product and service offering.

Although basic UI/UX considerations were made during the initial MVP testing, proving, and refinement phase, it was a Lean UI and Lean UX process. The Social Engine Layer is where a smartup spends considerable time perfecting its full-blown UI and UX. Issues such as tight integration with the User-centric Rights & Control and Future-looking Economic Engine sublayers are addressed. Issues with proper social interaction flow are addressed.

At this level in the smartup stack, the focus begins to shift more toward the outside, toward the physical usage of the service, and not its technical underpinnings. Toward that end, pathways through which others can interact with, integrate with, and extend the smartup's services are developed and engineered. These become the domain of the next layer.

The Fourth Layer: Outward-facing Connections

A key vision of the smartup model is to encourage and enable outside parties–3rd-party developers and other smartups–to contribute to and expand upon your smartup's vision. To bring that goal to fruition, a smartup makes anywhere from one to three of the following sublayers available to outside parties. How many sublayers are offered depends on the type of smartup and its overall needs and vision.

The three possible sublayers of the fourth smartup stack layer are:

Smartup API Access

Smartup Open Source SDK

Smartup Standards Group

Smartup API Access

By and large, the vast majority of smartups publish a set of APIs that allow outside parties select access to their datasets. As discussed in the final layer section below, the use of APIs by outside parties can be a major catalyst in a smartup's growth and success.
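The phrase "select access" is the important part. As a minimal sketch of the idea, and not any real smartup's API, the example below exposes only a whitelisted subset of an internal record to outside parties. The `PUBLIC_FIELDS` set, the `public_view` function, and the sample record are all hypothetical.

```python
# Hypothetical whitelist of fields that outside parties may see.
PUBLIC_FIELDS = {"id", "author", "text"}

def public_view(record):
    """Return only the fields that are safe to expose through the API."""
    return {key: value for key, value in record.items() if key in PUBLIC_FIELDS}

# Hypothetical internal record that also holds private data.
internal = {
    "id": 7,
    "author": "alice",
    "text": "Hello",
    "email": "alice@example.org",
    "ip": "203.0.113.5",
}

exposed = public_view(internal)
```

Using a whitelist rather than a blacklist means that any field added to the internal record later stays private until someone deliberately publishes it.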

Smartup Open Source SDK

The Software Development Kit (SDK) sublayer is more accurately termed an Application Development Kit (ADK) sublayer. The notion behind this sublayer is that there are core codebase modules that may very well be primed for open sourcing. We will see below in the discussion of the final layer of the smartup stack why open sourcing some (or all) of your smartup's codebase can significantly accelerate the development and evolution of your platform.

Smartup Standards Group

This sublayer is the least-frequently encountered sublayer in the smartup world. The purpose of this sublayer is to standardize key pieces of a smartup's platform.

Above, in the second layer section, I briefly mentioned PaySwarm. That is a perfect example of a smartup opening up some of its work, exposing its efforts to the open standards process. The newly-announced W3C Web Payments Standards Community Group will focus its efforts around core working technology, mainly PaySwarm.

If your smartup has key technologies that could benefit the greater Social Web by becoming a part of an open standard, then you are encouraged to offer up as much of your technology as possible to make that happen.

The Final Layer: the Smartup Ecosystem

This last layer is perhaps the most difficult one to describe in a few paragraphs. The goal is to freely offer unrelated, 3rd-party smartups and developers tools that they can leverage to help build out, evolve, and expand upon your smartup's original vision. At the same time, the access that you provide to your smartup's datasets and technology allows them to create their own paths to success. This is what I term a smartup's ecosystem.

The sublayer offerings in the fourth layer enable the creation of a motivated, loosely-organized team of volunteer coders that can and will help expand upon and evolve your technology—at least that part of your technology to which you allow 3rd-party access. The power that a corps of ecosystem partners can bring to your smartup's success cannot be emphasized enough.

As an example, think of what happened with WordPress. Because the codebase has been open source since the project began in 2003 as a fork of b2, a very large ecosystem of WordPress theme shops, plugin developers, and consultants eventually grew up around it. It also gave Automattic, the company founded by WordPress co-creator Matt Mullenweg, access to an exceptionally cheap (as in cost) and talented labor force, which continues to help build out the WordPress codebase to this day. That is one of the powers of crowd-sourced software development via open source practices.

Twitter is another great example of the virtues of creating an ecosystem. In its early days, Twitter not only welcomed, but strongly supported and encouraged 3rd-party developers and startups to help expand its ecosystem. It published a rigorous set of APIs that allowed developers to gain access to many of the datasets Twitter captured. In return, the 3rd-party developers were able to create new features and services that augmented the Twitter experience. This led to a number of successful companies that seemed to pop up overnight, swirling around the core of Twitter.

Without these ecosystem partners, Twitter may very well not have succeeded. Unfortunately, as Twitter continues to struggle with figuring out how it can monetize its success, it has cracked down on its ecosystem partners in recent months, making many of them wonder if they can trust Twitter anymore. Twitter's brilliant ecosystem strategy may be coming to a close.

Facebook was also an early creator of an ecosystem of developers. They offered limited API access, created their Open Graph ontology, and even open sourced a few of their key technologies. However, for the most part, Facebook required (and still does) that the apps of 3rd-party developers live within the siloed confines of the Facebook universe. Facebook is not a proponent of the Open Web, Open Standards, or user-centric control.

Of course, none of Automattic, Twitter, or Facebook is considered a smartup. Although they do support–each to differing degrees–some level of open source involvement with their projects, they fail the smartup test with respect to many of the other smartup stack layers detailed above.

Conclusion

You don't build a startup; you build a company. Whereas the word startup is an enticing concept, it is nothing more than a brand. It connotes nothing more than the early stages of a company. Each stage has its own specific needs and foci. Smartups are no different in this regard.

As mentioned above, many Internet-based startups do not transcend their technology but smartups have a vision beyond their technology. Even so, smartups recognize that–as Internet-obligate entities–they cannot divorce themselves from their technological foundations.

A smartup first builds a strong, foundational layer of technology upon which it then layers on additional functional components. Each of these components–also called sublayers–helps push the smartup closer to its vision. To fully actualize its vision a smartup must create the conditions that enable, encourage, and support a system of ecosystem partners. In unison with its ecosystem partners, a smartup works toward providing services that empower users to pursue some of their passions and fulfill some of their goals.

Past Smartup Series Articles

Part 1: Web 3.0: Powering Startups to Become Smartups

Part 2: Web 3.0 Smartups: the Social Web and the Web of Data

Part 3: Web 3.0 Smartups: Moving Beyond the Relational Database

Part 4: Web 3.0 Smartups: the New Web Business Space

</Smartups Series Part 5 of 5>

How to Get Me Involved in Your Smartup

Interested in getting me involved in your smartup? Please see my 7-by-7 rules.

August 15, 2011

How to Get Me Involved in Your Smartup

I receive six to eight requests for help from startups each year, ranging from angel investing, to advising, to consulting, to joining as a founder. To date, I've never accepted a single offer. Recently, however, I was very intrigued by one startup's vision, so much so that I spent a significant amount of time exploring that opportunity. In the end, it did not work out. A few of the reasons why this opportunity did not pan out are encapsulated in the guidelines below.

I've created this post for one purpose: to help reduce the emails, requests for Skype convos, and PMs that I periodically receive. I'm guessing that I've spent 200 hours this year alone rehashing, justifying, even debating to the point of arguing, some of the items below. This post will serve as a one-stop-shop to learn about my requirements. If you read this and still think that we should talk, then contact me.

Below you will find what I call my 7-by-7 rules. Whereas this is my current set of criteria, I believe this list is useable by anyone seeking to attract talent or looking to start a smartup. Please feel free to adopt, modify, or expand upon this list and use it as you see fit.

First, an important note. I have my own nascent smartup that requires most of my time. I also have a number of other projects and responsibilities that use up any remaining time. I am active in three W3C standards groups, work closely with a few open source projects, and spend as much spare time as possible with my family.

Thus it will be very difficult to get me to bite on your project. But if you want to maximize your chances of success, here is how.

General Requirements

Your startup must be in the Web-based or mobile-based Internet space. In other words, it is a technology-obligate Internet company. In the not-too-distant future, though, my horizons will broaden to include nanotech startups as well.

Your startup must be a smartup. I am not interested in stale Web-2.0 startups.

Your smartup must be looking to build, or at least contribute to, the Social Web

Your smartup and its founders must be proven participants in or at least supporters of open source projects and principles

Your smartup must primarily use open source tools and technologies to build its technology platform

You understand, believe in, and adhere to the practices and principles of lean startups

I will not sign an NDA. In 2009, I signed a few and requested a few others to do the same. In 2010, I requested zero NDAs and only signed one. Now, I will no longer request nor sign NDAs. To learn why, see this good read on the topic.

Specific Requirements

Note: If you are at the earliest stages of your smartup–having yet to incorporate–and are interested in coaxing me to join as a founder, then I will help you address each of the below points assuming that I agree to come on board.

The smartup founders must be pre-aligned on exit valuation and have a written exit strategy that all founders have signed. Why? See this great resource.

You must understand startup valuation and its impact on future employees and future investors. See this interesting link for one way to assess your smartup's current value. If you think that your smartup has a current value other than zero, you must be able to justify it. Although your sweat equity and early accomplishments of course add value to your smartup, you are initially being compensated for your contributions by receiving a large chunk of very cheap stock. If you are a pre-profit, pre-revenue, pre-product smartup without having cut a single line of code, please don't overvalue your contributions at this stage. Outside investors will certainly not make that mistake.

With respect to point two above, you have a well-reasoned and modeled capitalization table (cap table). This may not seem crucial right now, but it becomes essential if and when you seek outside investment. Creating, understanding, managing, and periodically updating your cap table early on is key to making better business decisions. Remember, you are starting a business, not a charity.

Your smartup must know when to think outside of the box factory and when you must view the box from within. As a founders' team, you will meet some very fascinating, talented, and inspiring people as you promote your project. Don't get too caught up in wanting to hang out with inspiring people all day long. We all want to do that. What matters right now is laying a solid technical foundation for your smartup (see point 1, General Requirements, and point 5, Specific Requirements). Properly allocating scarce resources to accomplish that crucial task at inception is essential to your long-term survivability, investor suitability, and future success.

You firmly understand and agree that at the early stages of your smartup, tech is at the core of your company. To that end, your smartup has an internal technical founder. Whereas having a strong business foundation within the core team is fine, even desirable, not having any technical expertise in the core team is detrimental. I have an upcoming fifth installment to my smartup series that will explain the rationale behind this requirement in detail.

You have sufficient in-house engineering skills to begin the process of building out your technical platform, of creating and iterating your MVP. You do not plan on using contract coding firms or overseas hacking sweatshops for building your platform. Don't be penny wise and pound foolish. Part of my upcoming post will also help explain this requirement.

I have no interest in becoming an employee or a non-founder-level executive. If you want me to be part of your smartup, I will only entertain a founder's position with a healthy ownership stake. I must have the opportunity for significant reward given the opportunity costs that I will incur. The only exception to a founder's position I'll entertain is an advisory or outside board member position.
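For anyone unsure what the cap-table requirement above looks like in practice, here is a small worked sketch. Every number in it is hypothetical and chosen only to keep the arithmetic clean: founder and option-pool share counts, a pre-money valuation, and a single outside investment. It computes the price per share, the shares issued to the investor, and each holder's ownership after the round.

```python
from fractions import Fraction

# Hypothetical starting cap table (share counts before any investment).
shares = {"founders": 8_000_000, "option_pool": 2_000_000}

# Hypothetical financing terms, in dollars.
pre_money = 4_000_000
investment = 1_000_000

# Price per share = pre-money valuation / shares outstanding before the round.
price_per_share = Fraction(pre_money, sum(shares.values()))

# The investor receives investment / price newly issued shares.
new_shares = int(investment / price_per_share)
shares["investor"] = new_shares

# Ownership after the round is each holder's share of the new total.
total = sum(shares.values())
ownership = {holder: Fraction(count, total) for holder, count in shares.items()}

for holder, fraction in ownership.items():
    print(f"{holder}: {float(fraction):.1%}")
```

With these assumed figures the founders go from 80 percent to 64 percent ownership after one round, which is exactly the kind of dilution a cap table is meant to make visible before you negotiate.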

July 20, 2011

Cybernetics, the Social Web, and the (Coming?) Singularity

Over the past year or so, I have been doing a lot of thinking, reading, and ruminating about several topics: the outdated thinking of Web-2.0 startups, the need for a revolution in the microblogging space, what identity in the Social Web is really all about, and the meaning of a truly user-centric Social Web. As I've been furiously writing about these topics, in the back of my mind, I've been wondering where all of these advancements may eventually lead.

Whereas you will find my insights and thoughts about the Social Semantic Web strewn throughout my website, this article is an attempt to extrapolate a few of those ideas in a more provocative and profound–if not frightening–way. So, you have been forewarned. Any resemblance to reality may be greatly exaggerated!

Pioneering the Philosophical Study of Cybernetics

First a little background about how I got interested in computers, science, and the natural world. My Father (Kenneth M. Sayre), a well-known expert in ancient Greek philosophy, is also a recognized thought leader in the Philosophy of Mind and Artificial Intelligence (AI). He is one of the pioneers of the philosophical study of cybernetics and AI.

While completing his PhD at Harvard, my Father worked at M.I.T.'s Lincoln Laboratory, joining a team of several AI pioneers—Marvin Minsky, Oliver Selfridge, and Edward Fredkin. My Father shared an office with Fredkin, the two of them spending many hours playing Go.

After leaving M.I.T. in the late 1950s, he went to the University of Notre Dame (ND), joining the philosophy department. Over his more than 50 years at ND, he has written eighteen academic books, six of which deal with cybernetics, AI, and Philosophy of Mind.

Belief and Knowledge: Mapping the Cognitive Landscape (1997)

Cybernetics and the Philosophy of Mind (1976)

Consciousness: A Philosophic Study of Artificial Intelligence (1969)

Philosophy and Cybernetics (1967)

Recognition: A Study in the Philosophy of Artificial Intelligence (1965)

The Modeling of Mind: Computers and Intelligence (1963)

In the past several decades, his work has focused more on ancient Greek philosophy and environmental ethics. His latest book, Unearthed: The Economic Roots of our Environmental Crisis, looks at the relationship between the laws of thermodynamics, ecology, and our current state of economic unrest, topics that all bear on the subject matter presented in this article.

So I guess it is no surprise that as a kid growing up, I was fascinated not only by computers and technology, but also by science and nature, especially ecology—although that was more of my Mother's influence.

With my Father's work in AI, he had access to Notre Dame's mainframe. As a freshman in high school, I learned how to program on the University's very big computer. Once the first personal computers came out, I was hooked on computer technology. Even though computers fascinated me, there were not many career options in programming when I went to college, so I pursued undergraduate degrees in molecular microbiology and ecology.

As I look back at the people with whom my Father rubbed elbows and I consider his early career, I think it's quite fitting that I find myself thinking about the forefront of technology and how humankind is possibly racing toward its cybernetic destiny.

Cybernetics and the Social Web

Although there are many different definitions of cybernetics, in general, cybernetics covers a range of topics from how systems describe themselves, to how they control themselves, and even to how they organize themselves. On page 18 of his book, Cybernetics and the Philosophy of Mind, my Father defines cybernetics as the "study of communication and control functions of living organisms, particularly human beings, in view of their possible simulation in mechanical systems."

A lot has changed in humanity's intraspecies-communication abilities since my Father's book came out (almost 35 years ago). The biggest change, in my view, is the emergence of the Web-based Internet. With advances in chip architecture, the promise of chip-based photonics, the emergence of quantum computing, and the revolution in manufacturing thanks to nanotechnology, a lot is about to change with regard to humankind's ability to control biological systems using mechanical (albeit nanosized) systems.

I argue that if the technological realities of the next several decades mimic my conjectures below, then cybernetics will not be about the "simulation [of humanity's communications and control functions] in mechanical systems", as my Father states. Instead, it will be about humanity's assimilation with its electromechanical creations. In other words, it will be about the merging of man and machine (women as well).

So how exactly are cybernetics and the Social Web tied together?

Before we take a closer look at how the Social Web plays a part in humanity's cybernetic destiny, let's set the stage by talking a little bit about technology's exponential growth and the coming singularity.

In the Beginning… or Let There Be Technology

Taking a page out of the creation myth, once the Universe came into existence thanks to the Big Bang, the stage was set for the rise of humanity, its technology, and its eventual cybernetic destiny.

In his intriguing book, The Age of Spiritual Machines, prolific inventor and futurist Ray Kurzweil makes an interesting statement. To summarize his statement in a prophetic manner, physics begets chemistry begets biology begets technology. From the moment that our Universe came into existence, the Laws of Physics quickly became set in stone, paving the way for the eventual rise (albeit very far into the future) of the technological transformations I'll present below in the section Cybernetic Phases of Humankind.

Life Appears Linear Even When Living on a Curve

One of the foundational threads that plays an integral role in much of Ray Kurzweil's writings is the notion of the exponential growth in computational power. In the early 1900s, way before silicon-based chips and Moore's Law, basic mechanical computational devices existed. Going all the way back to these devices, Kurzweil has plotted on a graph the growth of computational power as measured by calculations per second per unit cost of computation. The graph shows an eerily steady exponential growth in computational power over the past 100 years.

Assuming that there is no reason this trend will not continue into the foreseeable future, it can be extrapolated that by the year 2020, a $1,000 computer will have the computational capacity of a human brain. But, and this is an important point, artificial computers are significantly faster at calculations than are our brains as they are electron based and not biochemically based.

By the year 2030, that same $1,000 will purchase a computer that is 1000 times more powerful than the one you purchased in 2020. That means one little computer will be able to perform as many calculations per second as 1000 human brains sitting in a big corporate think tank.
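To make the arithmetic behind that extrapolation concrete, here is a minimal sketch in Python. It assumes smooth, constant exponential growth between 2020 and 2030 (a simplification of mine, not a figure taken from Kurzweil), and the function names are purely illustrative. Under that assumption, a 1000-fold gain in ten years works out to a doubling of computational power roughly once a year.

```python
import math


def doubling_time_years(growth_factor: float, span_years: float) -> float:
    """Years per doubling implied by growing `growth_factor`-fold over `span_years`.

    Assumes constant exponential growth: factor = 2 ** (span / doubling_time).
    """
    return span_years * math.log(2) / math.log(growth_factor)


def growth_factor_after(years: float, doubling_time: float) -> float:
    """Total growth factor after `years`, given a fixed doubling time in years."""
    return 2.0 ** (years / doubling_time)


# The article's extrapolation: 1000 times more power per $1,000 over 10 years.
implied_doubling = doubling_time_years(1000, 10)
print(f"Implied doubling time: {implied_doubling:.3f} years")

# Sanity check: compounding at that doubling time for 10 years recovers 1000x.
print(f"Growth after 10 years: {growth_factor_after(10, implied_doubling):.1f}x")
```

If the doubling time itself shrinks over time, as the accelerating-returns argument later in this section suggests, the same 1000-fold gain would arrive in fewer than ten years.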

How little will these massively-parallel computers be? Try the size of a sugar cube. Remember that sugar cube as it is the sweet connection that comes into play later.

If you look at where we currently are on the graph of computational power, you'll notice something interesting. It appears that the current state of the growth in computational power is on an asymptote. This is another important point. At this point in the curve, the doubling in processing power begins to accelerate. As Kurzweil points out, the exponential growth of computing power may actually be growing exponentially.

The interesting thing about living on a curve–especially an asymptotic one–is that it is often difficult or impossible to comprehend that accelerated exponential growth is occurring. In fact, exponential growth is often only observed from a historical perspective.

How is the exponential growth of computation related to cybernetics? Why is it important to understand?

At some point in our current asymptotic ascent on the computational power scale, we may reach a singularity, more accurately termed the technological singularity. The term singularity is taken from physics, from the theory of black holes. The singularity is the spacetime point at the "bottom" of a black hole's event horizon. It is where all matter and energy that fall into a black hole eventually end up.

It's interesting to note that according to Einstein's general theory of relativity, the Universe started as a singularity. This makes it easier to understand the technological singularity. An observer on the other end of the Big Bang's singularity, for instance in another universe, would have no idea of what is happening in the new universe.

Therefore, the technological singularity is a point where the rate at which new technological advances are being made is so great that it is impossible for today's humans to comprehend. The implications of a technological singularity extend well beyond the continued exponential increases in computational power.

Instead of new advances and innovations happening in a few years or months or days, once the singularity occurs, the mind-boggling computational powers at our disposal will lead to innovations happening in hours, minutes, or seconds. Only those entities that are integrated into the new technological landscape will be able to comprehend this quickly evolving existence.

For a general, high-level view regarding humankind's cybernetic destiny, see my article, The HyperWeb: it's All About Connections.

Cybernetic Phases of Humankind

Now we arrive at the synthesis of all of these seemingly disparate topics. What is the relationship between the Social Web, cybernetics, and the singularity?

Although I have not read about the classification that I'm about to propose, it is possible that someone may have already written about this using these or similar terms.

I will spend less time on the early phases as it is the later phases that have the most intrigue. When reading the phases below, keep in mind that at the juncture between one phase and the next, there are overlaps that make it difficult to clearly determine the proper phase to best classify a given era.

As humanity progresses through each of the phases below, we separate ourselves further and further from the rest of nature, from the natural world, from the original Web of Life. We become more reliant on our technology and less on the services of the global ecosystem.

Phase 1: The Natural Web

This phase is also called the Web of Life. It encompasses all geochemical and biological activity before humankind and goes right up to the emergence of the Web-based Internet. Humanity is still very dependent on nature and as a result remains relatively outward looking.

Phase 2: The Anthropocentric Web

This phase is also called the Web of Documents and the realm of social networks. It encompasses what is best known as Web 1.0 and Web 2.0. Here the focus shifts inwards, focusing on innovating more efficient and novel ways in which humans communicate.

I believe humanity is on the cusp of its next cybernetic phase. We are at the Web 2.5 stage ready to break through into Web 3.0.

Phase 3: The Social Semantic Web

This phase is also called the Web of Data, the Semantic Web, or the Social Web—the latter term being what I've been heavily promulgating. Human data on a global scale is encoded into machine-understandable data. This enables the linking of data and allows for the serendipitous discovery of new connections with other datasets. Data now becomes the unit of exchange on the Web, not documents. Instead of Hyperlinks being the engine of exchange, it is Hyperdata.

Imagine being able to automatically discover people with whom you share similar skill sets, interests, and ideas. Imagine being able to ditch the social networking silos and instead operate and control your own communications channel that can link up with, share, and communicate with anyone else on the Web in real time. Linked data and new communication protocols will make that possible. The Web will finally become social.

This phase is best known as Web 3.0. It has also been referred to as the Giant Global Graph.
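As a rough illustration of how machine-understandable data could surface people who share skills and interests, here is a small Python sketch. It stores facts as (subject, predicate, object) triples in the spirit of linked data, but everything in it is a hypothetical stand-in of my own: the names, the predicates, and the sample facts are invented, and a real Web of Data would use URIs and a standard vocabulary rather than bare strings.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical facts as (subject, predicate, object) triples.
TRIPLES = [
    ("alice", "interestedIn", "cybernetics"),
    ("alice", "hasSkill", "python"),
    ("bob", "interestedIn", "cybernetics"),
    ("bob", "hasSkill", "ecology"),
    ("carol", "hasSkill", "python"),
    ("carol", "interestedIn", "ecology"),
]


def shared_connections(triples):
    """Map each pair of people to the (predicate, object) facts they share."""
    # Index every fact by who asserts it.
    people_by_fact = defaultdict(set)
    for subject, predicate, obj in triples:
        people_by_fact[(predicate, obj)].add(subject)

    # Any fact held by two or more people links each pair of them.
    shared = defaultdict(set)
    for fact, people in people_by_fact.items():
        for first, second in combinations(sorted(people), 2):
            shared[(first, second)].add(fact)
    return dict(shared)


for pair, facts in sorted(shared_connections(TRIPLES).items()):
    print(pair, sorted(facts))
```

Because each fact is a self-contained statement, datasets published by different people can simply be concatenated and re-indexed, which is what lets new connections appear without anyone planning them in advance. Note that the predicate matters: in this sample, having ecology as a skill and having ecology as an interest do not count as a shared fact.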

Phase 4: The Artificial Synaptic Web

This phase is also called the Web of Information which is enhanced by the Web of Sensors. This will be the Web 4.0 era.

Remember those sugar-cube-sized, massively-parallel computers? The Artificial Synaptic Web is where artificial neural networks interface with organic, biologic neural networks. In other words, human brains.

Some humans will opt to augment their bodies by having one of these sugar-cube-sized computers implanted into their brainstems. It will of course be an Internet-enabled device. It will provide new avenues for data exploration and communication.

The Giant Global Graph will now be populated with sensor data from the millions (maybe billions) of ubiquitous micro- and nano-scale devices, some of which are interfaced with cell clusters within our bodies. We will be able to communicate directly with one another, from one brain to the next.

At this stage in the Web's evolution, the inputs and outputs are not via the Web browser–an archaic interface that differentiated the Web from the rest of the Internet during its first three or four decades.

Whereas we can still think in terms of a Web-based Internet in Web 4.0, that phrase will not mean what it means today. The new Web will not require Web browsers to process client data. The Web will instead be analogous to the Web of Life, to an ecological Web but with fewer connected participants and fewer dependent species and objects.

The major difference will be that instead of humanity accessing the Social Web via a browser on a disparate device, our brains will be the Web browsers. For those of us who opt to have a neural network interface implanted into our brainstems, we will no longer need a separate piece of physical hardware like a smartphone, tablet or notebook computer.

Phase 5: The Global Brain

This phase is what I call the Web of Cyborgs, Web of Machines, or Web of One. In essence, this is the new version of humanity as superorganism, as the collective. It is where connective intelligence merges with collective intelligence. It is where the familiar is thrown out the window. What we currently consider normal reality morphs into a surreal, science-fictionesque world.

This will be the Web 5.0 and Web 6.0 era—although I'm not truly clear on what Web 6.0 will encompass.

The biologic and artificial become one, with our basic organic infrastructure improved by synthetic biology and enhanced by nanotechnology. Molecular machines combined with exceptionally powerful computational devices turn us into human-2.0 types.

This phase occurs around the time of the singularity—which is predicted by Kurzweil to happen in 2045. The singularity will allow human-2.0 types to continually innovate new technologies and do so at increasingly faster rates.

At this stage, cloud computing does not occur between Internet-connected server clusters. Instead, the cloud is the Global Brain—the networked neocortices of all brain-stem augmented humans. The cloud will be grey matter and nanobot powered. Instead of silicon chips crunching calculations, it will be living tissue and graphene-based machines computing in a symbiotic relationship.