Paul Gilster's Blog, page 85

May 30, 2019

Into the Neptunian Desert

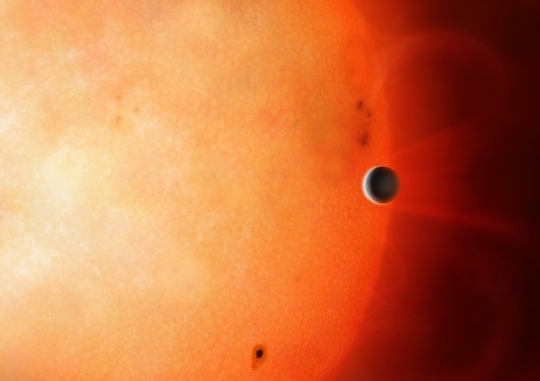

A planet labeled NGTS-4b has turned up in a data space where astronomers had not expected it, the so-called ‘Neptunian desert.’ Three times Earth radius and about 20 percent smaller than Neptune, the world was discovered with data from the Next-Generation Transit Survey (NGTS), which specializes in transiting worlds around bright stars, by researchers from the University of Warwick. It turns out to be a scorcher, with temperatures in the range of 1,000 degrees Celsius.

NGTS-4b is 20 times as massive as the Earth, and its orbit takes it around its star, a K-dwarf 920 light years out, every 1.3 days. The planet is getting attention not so much because of what it is but where it is. Lead author Richard West (University of Warwick) comments:

“This planet must be tough – it is right in the zone where we expected Neptune-sized planets could not survive. It is truly remarkable that we found a transiting planet via a star dimming by less than 0.2% – this has never been done before by telescopes on the ground, and it was great to find after working on this project for a year. We are now scouring out data to see if we can see any more planets in the Neptune Desert – perhaps the desert is greener than was once thought.”

Image: An artist’s conception of exoplanet NGTS-4b. Credit: University of Warwick/Mark Garlick.

The paper on NGTS-4b has me thinking about all of our exoplanet detection methods in terms of their strengths and weaknesses, for there are some things that each of them can’t do well. Consider Jupiter-class worlds in orbits comparable to that of the Solar System’s largest planet. Depending on where the planet was in its orbit, an astronomer might look for a transit of such a world for years and never see it, even if it were the dominant planet in its system.

Even an Earth-sized planet in a habitable zone comparable to the Sun’s is going to take a year or so to complete its orbit, so we’d need several years to confirm it. When the exoplanet hunt began to take off in the energizing months and years after the discovery of 51 Pegasi b, we were finding ‘hot Jupiters’ in unexpectedly close orbits and more than a few of them. Here again observational bias was in play — we found these early on because radial velocity methods could detect these more easily than the tougher, smaller targets further out in the system.

But the Neptunian desert does not seem to be the result of observational bias. What we discover in looking at Kepler statistics is that there are few short-period Neptune-sized worlds, a ‘desert’ that the paper on this work defines as a lack of exoplanets with masses around 0.1 Jupiter mass and orbital periods less than 2-4 days. The reason this isn’t considered observational bias is that such planets should be easy to spot, and we’ve found many Neptunes with longer orbits from missions like Kepler and CoRoT. We may still find, however, that short-period Neptunes produce transits too shallow for most ground-based surveys to detect.

The issue of ‘deserts’ may well remind you of the better known ‘brown dwarf desert,’ which refers to the lack of such objects in orbits closer than 5 AU around solar mass stars. This desert turned up statistically at a time when numerous free-floating brown dwarfs were being found. Explanations include the possibility of brown dwarf migration into the primary star, but we’re a long way from fully understanding migration within a protoplanetary disk. Various formation models produce different outcomes as the investigation of the phenomenon continues.

Meanwhile, as the University of Warwick researchers note, the transit of NGTS-4b is the shallowest ever detected from the ground, giving us the promise of further such discoveries. From the paper:

The discovery of NGTS-4b is a breakthrough for ground-based photometry, the 0.13 ± 0.02 per cent transit being the shallowest ever detected from a wide-field ground-based photometric survey. It allows us to begin to probe the Neptunian desert and find rare exoplanets that reside in this region of parameter space. In the near future, such key systems will allow us to place constraints on planet formation and evolution models and allow us to better understand the observed distribution of planets. Together with future planet detections by NGTS and TESS we will get a much clearer view on where the borders of the Neptunian desert are and how they depend on stellar parameters.
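To get a feel for what a 0.13 per cent dip implies, a common first-order approximation treats the transit depth as the square of the planet-to-star radius ratio, ignoring limb darkening and grazing geometry. The sketch below applies that approximation to the quoted depth and its uncertainty. The function name is purely illustrative and nothing here is taken from the paper's own analysis.

```python
import math

def radius_ratio_from_depth(depth, depth_err):
    """Return (Rp/Rs, uncertainty) assuming depth = (Rp/Rs)**2."""
    ratio = math.sqrt(depth)
    # First-order error propagation: d(ratio) = 0.5 * d(depth) / sqrt(depth)
    ratio_err = 0.5 * depth_err / ratio
    return ratio, ratio_err

# Depth quoted in the paper: 0.13 +/- 0.02 per cent, converted to a fraction
ratio, ratio_err = radius_ratio_from_depth(0.13 / 100, 0.02 / 100)
print(f"Rp/Rs = {ratio:.3f} +/- {ratio_err:.3f}")
```

Under this rough assumption the planet spans a little under 4 percent of its star's radius, which gives a sense of how small a signal the survey had to pull out of ground-based photometry.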

The scientists offer two possibilities for the survival of this planet in the Neptunian desert, and in particular for the persistence of its atmosphere in these hellish conditions. NGTS-4b may have an unusually high core mass, or it may have migrated into its current close orbit, probably within the last one million years, so that its atmosphere would still be evaporating. The question would then be whether the ‘desert’ is elsewhere marked by a mechanism stopping such migration.

The paper is West et al., “NGTS-4b: A sub-Neptune transiting in the desert,” Monthly Notices of the Royal Astronomical Society, Volume 486, Issue 4 (July 2019), pp. 5094–5103 (abstract / full text).

May 29, 2019

Triton: Insights into an Icy Surface

Al Jackson reminds me in a morning email that today is the 100th anniversary of the Arthur Eddington expedition that demonstrated the validity of Einstein’s General Relativity. The bending of starlight could be observed by looking at the apparent position of stars in the vicinity of the Sun during a solar eclipse. Eddington’s team made the requisite observations at Principe, off the west coast of Africa, and the famous New York Times headline would result: “Lights All Askew in the Heavens . . . Einstein Theory Triumphs.”

Al also sent along a copy of the original paper in Philosophical Transactions of the Royal Society of London, where authorship is given as “F. W. Dyson, A. S. Eddington and C. Davidson.” This created an agreeable whimsy: I imagined the evidently ageless Freeman Dyson continually traveling through time to provide his insights at major achievements like this, but the reality is that this Dyson was Frank Dyson, then Britain’s Astronomer Royal.

Ron Cowen does a wonderful job on the Eddington eclipse expedition for Scientific American today, if you’d like to read more. And now on to today’s story.

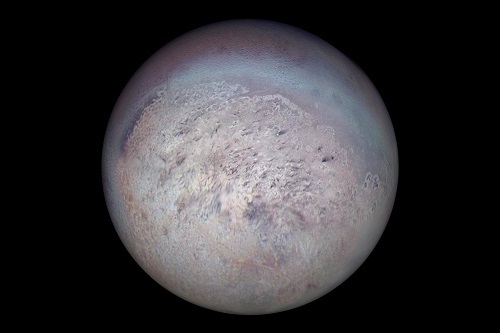

Ices on Neptune’s Largest Moon

Triton has always intrigued me, and in most ways even more than Titan. When I was young, Triton was thought to be much larger than it actually is, and it had the imaginative advantage of being far more distant, so that we had another world almost as large as a planet, we thought, way out there in the farthest reaches of the Solar System. The fact that Triton’s orbit was retrograde also helped, an indication of its likely history as a captured Kuiper Belt object.

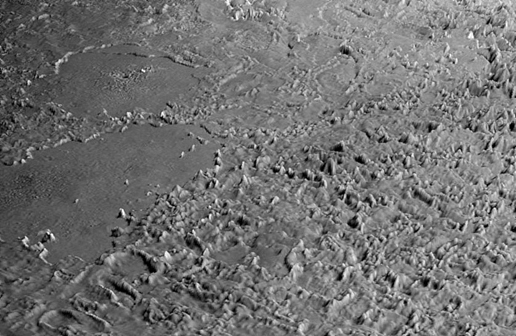

We learned a lot once Voyager made the journey, verifying the fact that even though Triton is Neptune’s largest moon, it’s still only about ⅔ the size of our own Moon. We found temperatures near absolute zero, a striking mix of terrains, and an atmosphere almost 70,000 times less dense than that of the Earth, one laden with nitrogen, methane and carbon monoxide.

Image: This view of the volcanic plains of Neptune’s moon Triton was produced using topographic maps derived from images acquired by NASA’s Voyager spacecraft during its August 1989 flyby. Triton, Neptune’s largest moon, was the last solid object visited by the Voyager 2 spacecraft on its epic 10-year tour of the outer solar system. The rugged terrain in the foreground is Triton’s infamous cantaloupe terrain, most likely formed when the icy crust of Triton underwent wholesale overturn, forming large numbers of rising blobs of ice (diapirs). The numerous irregular mounds are a few hundred meters (several hundred feet) high and a few kilometers (several miles) across and formed when the top of the crust buckled during overturn. The large walled plains are of unknown origin, although the irregular pit in the center of the background walled plain may be volcanic in nature. These plains are approximately 150 meters (0.093 miles) deep and 200 to 250 kilometers (124 to 155 miles) across. Credit: NASA/JPL/Universities Space Research Association/Lunar & Planetary Institute.

Triton also offers a striking example of laboratory work leading to insights into distant places. A paper now in process at the Astronomical Journal looks at a specific wavelength of infrared light that is absorbed when carbon monoxide and nitrogen molecules vibrate in unison. Both absorb their own distinct wavelengths of infrared light, but the vibration of an icy mixture of the two produces a unique separate wavelength that the study has identified.

Now we turn to the 8-meter Gemini South Telescope in Chile, which the team used to confirm the same infrared signature on Triton, using a high resolution spectrograph called IGRINS (Immersion Grating Infrared Spectrometer). Stephen Tegler (Northern Arizona University), who led the study, gets across the satisfaction of closing the gap between analysis and observation:

“While the icy spectral fingerprint we uncovered was entirely reasonable, especially as this combination of ices can be created in the lab, pinpointing this specific wavelength of infrared light on another world is unprecedented.”

The work shows us a Triton where both carbon monoxide and nitrogen freeze as solid ices, but also are able to form an icy mixture that is revealed in the Gemini data. Voyager 2 observed dark streaks on the surface during its 1989 flyby, and we saw geysers at the moon’s south polar region. We may be looking at evidence of an internal ocean providing a source for the geyser material, or possibly heating of the thin layer of volatile surface ices by the Sun.

Image: Voyager 2 image of Triton showing the south polar region with dark streaks produced by geysers visible on the icy surface. Credit: NASA/JPL.

To go any further demands continued investigation, preferably with (one day) an orbiter or lander mission to Triton. For now, though, the fusion of lab work with existing data shows the continuing relevance of the one mission we did manage to fly by distant Neptune, and the ability of Earth-based instruments to build on our emerging understanding of its largest moon.

“Despite Triton’s distance from the Sun and the cold temperatures, the weak sunlight is enough to drive strong seasonal changes on Triton’s surface and atmosphere,” adds Henry Roe, Deputy Director of Gemini and a member of the research team. “This work demonstrates the power of combining laboratory studies with telescope observations to understand complex planetary processes in alien environments so different from what we encounter every day here on Earth.”

Seasonal variation on Triton is slow-moving given Neptune’s 165-year orbit, so that each season lasts about 40 years. Triton’s summer solstice occurred in 2000, meaning we are 20 years out before autumn begins. Patient data gathering will help us see how seasonal variations in the atmosphere and on the surface affect the mixture of ices to be found there. We also now know to look for the infrared signature of mixing ices on other small worlds in the Kuiper Belt.

The paper is Tegler et al., “A New Two-Molecule Combination Band as Diagnostic of Carbon Monoxide Diluted in Nitrogen Ice on Triton,” in press at the Astronomical Journal.

May 28, 2019

A Comet Family with Implications for Earth’s Water

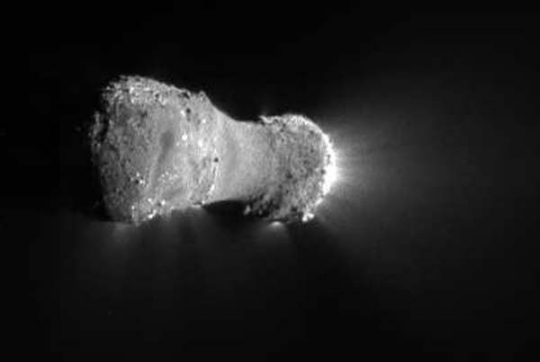

‘Hyperactive’ comets tend to call attention to themselves. Take Comet Hartley 2 (103P/Hartley), which was visited by the EPOXI mission (formerly Deep Impact) in November of 2010. Three months of imaging and 117,000 images and spectra showed us just how much water and carbon dioxide the little comet was producing in the form of asymmetrical jets, a level of cometary activity that made the comet, in the words of one researcher, ‘skittish.’ It was, said EPOXI project manager Tim Larson at the time, “moving around the sky like a knuckleball.”

Image: Comet Hartley 2, in every sense of the term a moving target. Credit: NASA.

Nor is Hartley 2 alone. Scientists had a good look at comet 46P/Wirtanen from the SOFIA airborne observatory [Stratospheric Observatory for Infrared Astronomy] last December. Here again we see a pattern of hyperactivity, with a comet releasing more water than the surface area of the nucleus would seem to allow. The excess draws on an additional source of water vapor in these comets, ice-rich particles originally expelled from the nucleus that have undergone sublimation into the cometary atmosphere, or coma. Moreover, it’s water with a difference.

For if there is one topic that draws the attention of all of us interested in the early Solar System, it’s the question of where Earth’s water comes from. A standard model of the protosolar nebula has temperatures in the terrestrial planet zone too high for water ice to survive, which would mean that the Earth accreted dry, and present-day water would have been delivered later, by comets or asteroids. Other models of in situ production of Earth’s water are also in contention, so the field is far from won by comets, asteroids or other mechanisms.

One way to study the comet possibility is through isotopic ratios, where we have two forms of a chemical element with different mass. Thus deuterium, which is a heavier form of hydrogen, can be measured in the deuterium/hydrogen, or D/H ratio. Data from Oort Cloud comets have tended to run two to three times the value of ocean water, making them an unlikely contributor to the early Earth. But we also have three hyperactive comets (103P/Hartley, 45P/Honda-Mrkos-Pajdušáková and 46P/Wirtanen) with the same D/H ratio as Earth’s water. That would imply that such comets could indeed have delivered water to the Earth.

Thus the interest in 46P/Wirtanen shown by European researchers at the Paris Observatory and the Sorbonne, affiliated with the French National Center for Scientific Research, who are behind the recent observations. Their analysis of the D/H ratio in this comet and other comets with known ratios shows that “…a remarkable correlation is present between the D/H ratio and hyperactivity.”

Image: The comet 46P/Wirtanen on January 3, 2019. Credit: © Nicolas Biver.

Although we seem to be seeing a clear distinction between Oort Cloud comets and Jupiter-family comets, we have to tread carefully. We also find that another Jupiter-family comet — 67P/Churyumov-Gerasimenko — has a D/H ratio three times Earth normal, while Oort cloud comet C/2014 Q2 has a ratio like Earth’s. So there’s no easy dividing line, leading the authors to add the suggestion “…that the same isotopic diversity is present in the two comet families.”

If that is the case, what makes cometary activity correlate in an inverse manner with the D/H ratio, so that the ratio in hyperactive comets decreases and approaches that of Earth water?

In a paper just published in Astronomy & Astrophysics, the researchers determined the active fraction — the fraction of nucleus surface area that it would take to produce the observed amount of water in the cometary atmospheres. They did this for all comets with a known D/H ratio. What they found was that the more a comet leans toward hyperactivity, the more its D/H ratio decreases and approaches that of the Earth. Out of this grows a hypothesis.

Hyperactive comets derive part of their water vapor from sublimation of icy grains expelled into their atmosphere, while non-hyperactive comets do not. These are processes that can show distinct D/H ratio signatures. Perhaps hyperactive comets provide a better glimpse of the ice within their nuclei, which turns out to be like that of Earth’s oceans. Those comets whose gas halo is produced only by surface ice do not show detectable ratios that are representative of what is available within the nuclei. If this is the case, then most comets have nuclei similar to terrestrial water, and comets can again be considered a major water source for Earth.

Image: Scientists at work aboard SOFIA, a Boeing 747. Credit: © Nicolas Baker/IRAP/NASA/CNRS Photothèque.

We’re early in the game and other hypotheses are likewise in play. Hyperactive comets could belong to a population of ice-rich comets that formed just outside the snow line in the protoplanetary disk, indicating formation in a planetesimal. Or they could have formed in the outer regions of the solar nebula, which could also show a decrease in the D/H ratio.

But the most intriguing hypothesis remains the one suggesting that our measurements of D/H ratios are not representative of what is in the cometary nucleus. From the paper:

An alternative explanation is that the isotopic properties of water outgassed from the nucleus surface and icy grains may be different, owing to fractionation processes during the sublimation of water ice. The observed anti-correlation can be reproduced with two sources of water contributing to the measured water production rate and the active fraction: D-rich water molecules released from the nucleus and an additional source of D-poor water molecules from sublimating icy grains… Laboratory experiments on samples of pure ice show small deuterium fractionation effects… In experiments with water ice mixed with dust, the released water vapor is depleted in deuterium, explained by preferential adsorption of HDO on dust grains…

So a lot of ideas are in play, which means we need more accurate D/H measurements from both Oort comets and Jupiter-family comets. We need to find out whether hyperactive comets are simply showing us the norm; i.e., comets may all contain water very similar to what we have on Earth. And that would put comets back into play as a major contributor to Earth’s oceans.

“This is the first time we could relate the heavy-to-regular water ratio of all comets to a single factor,” notes Dominique Bockelée-Morvan, a scientist at the Paris Observatory and the French National Center for Scientific Research and second author of the paper. “We may need to rethink how we study comets because water released from the ice grains appears to be a better indicator of the overall water ratio than the water released from surface ice.”

The paper is Lis et al., “Terrestrial deuterium-to-hydrogen ratio in water in hyperactive comets,” Astronomy & Astrophysics Vol. 625, L5 (May 2019). Abstract / preprint.

May 24, 2019

Dataset Mining Reveals New Planets

I’m always interested in hearing about new ways to mine our abundant datasets. Who knows how many planets may yet turn up in the original Kepler and K2 data, once we’ve applied different algorithms crafted to tease out their evanescent signatures? On the broader front, who knows how long we’ll be making new discoveries with the Cassini data, gathered in such spectacular fashion over its run of orbital operations around Saturn? And we can anticipate that, locked up in archival materials from our great observatories, various discoveries still lurk.

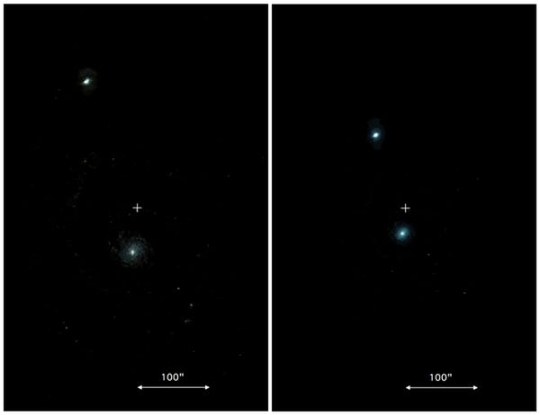

Assuming, of course, we know how to find them and, just as important, how to confirm that we’re not just looking at noise. What scientists at the Max Planck Institute for Solar System Research (MPS), the Georg August University of Göttingen, and the Sonneberg Observatory have come up with is 18 new planets roughly of Earth size that they’ve dug out of K2, looking at 517 stars that, on the basis of earlier analysis, had already been determined to host at least one planet.

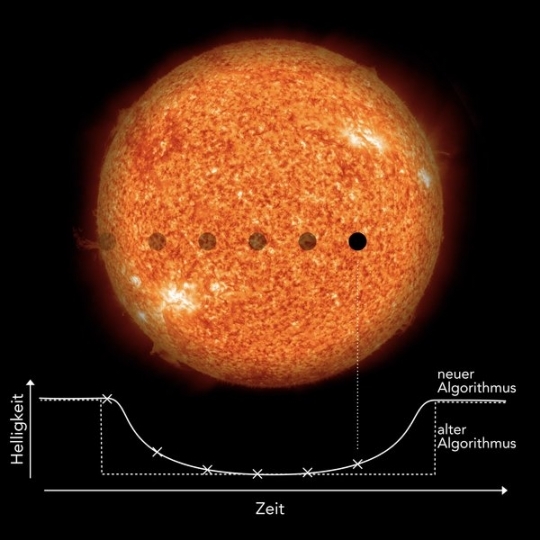

The Kepler mission was jeopardized by the failure of critical components in its reaction wheel system, out of which emerged K2, looking not at the original 156,000 target stars but at a series of fields and a total of more than 100,000 stars for which light curves could be observed. None of the 18 new worlds were detected in earlier analysis because the search algorithms in play were not sensitive enough. But René Heller (MPS) and Michael Hippke (Sonneberg Observatory), working with Kai Rodenbeck (University of Göttingen), believed the sensitivity of the transit method would be enhanced by modeling a more realistic light curve.

“Standard search algorithms attempt to identify sudden drops in brightness,” explains Heller. “In reality, however, a stellar disk appears slightly darker at the edge than in the center. When a planet moves in front of a star, it therefore initially blocks less starlight than at the mid-time of the transit. The maximum dimming of the star occurs in the center of the transit just before the star becomes gradually brighter again.”

Most of the confirmed planets and those yet to be confirmed from both the original Kepler mission and K2 have been examined with a transit search algorithm called BLS — box least squares — in which, like other algorithms in play, the software looks for box-like decreases in flux from the light curve of the star. Heller and Hippke developed TLS — transit least squares — specifically to look for smaller planets by modeling the stellar limb darkening Heller mentioned above. The method is optimized for the detection of shallow periodic transits. The scientists believe the false-positive rate is suppressed and signal detection enhanced by this method.

From the paper:

In contrast to BLS, the test function of TLS is not a box, but an analytical model of a transit light curve… As a consequence, the residuals between the TLS search function and the observed data are substantially smaller than the residuals obtained with BLS or similar box-like algorithms, resulting in an enhancement of the signal detection efficiency for TLS, in particular for weak signals.
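The difference between a box and a limb-darkened template can be illustrated with a toy calculation. What follows is a minimal sketch and not the TLS implementation: it assumes a small planet crossing the center of the stellar disk, a quadratic limb-darkening law with made-up coefficients (`u1`, `u2`), no ingress or egress smoothing, and noise-free data. Every function name is hypothetical. Both templates are scaled to the synthetic light curve by least squares, and the leftover residuals are compared.

```python
import math

def limb_darkened_deficit(r, depth, u1=0.4, u2=0.3):
    """Fractional flux drop for a small planet at projected distance r
    (in stellar radii) from disk center; u1 and u2 are illustrative."""
    if r >= 1.0:
        return 0.0
    mu = math.sqrt(1.0 - r * r)
    intensity = 1.0 - u1 * (1.0 - mu) - u2 * (1.0 - mu) ** 2
    mean_intensity = 1.0 - u1 / 3.0 - u2 / 6.0  # disk-averaged intensity
    return depth * intensity / mean_intensity

def fit_amplitude(data, template):
    """Least-squares scale factor for a fixed-shape template."""
    num = sum(d * t for d, t in zip(data, template))
    den = sum(t * t for t in template)
    return num / den

def sum_sq_residuals(data, template, amplitude):
    return sum((d - amplitude * t) ** 2 for d, t in zip(data, template))

# Planet crosses the stellar diameter, from -1.5 to +1.5 stellar radii
positions = [abs(-1.5 + 3.0 * i / 300) for i in range(301)]
depth = 0.0013  # comparable to a shallow ground-based detection
data = [limb_darkened_deficit(r, depth) for r in positions]

box_template = [1.0 if r < 1.0 else 0.0 for r in positions]
ld_template = [limb_darkened_deficit(r, 1.0) for r in positions]

box_amp = fit_amplitude(data, box_template)
ld_amp = fit_amplitude(data, ld_template)
box_sse = sum_sq_residuals(data, box_template, box_amp)
ld_sse = sum_sq_residuals(data, ld_template, ld_amp)

print(f"box residuals: {box_sse:.3e}")
print(f"limb-darkened residuals: {ld_sse:.3e}")
```

Because the synthetic data are generated from the same limb-darkened shape, that template fits them essentially perfectly, while the best box still leaves a systematic residual: too deep near the limb, too shallow at mid-transit. With real, noisy data the gain is smaller, but this is the effect the quoted passage describes.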

Image: If the orbit of an extrasolar planet is aligned in such a way that it passes in front of its star when viewed from Earth, the planet blocks out a small fraction of the star light in a very characteristic way. This process, which typically lasts only a few hours, is called a transit. From the frequency of this periodic dimming event, astronomers directly measure the length of the year on the planet, and from the transit depth they estimate the size ratio between planet and star. The new algorithm from Heller, Rodenbeck, and Hippke does not search for abrupt drops in brightness like previous standard algorithms, but for the characteristic, gradual dimming and recovery. This makes the new transit search algorithm much more sensitive to small planets the size of the Earth. Credit: © NASA/SDO (Sun), MPS/René Heller.

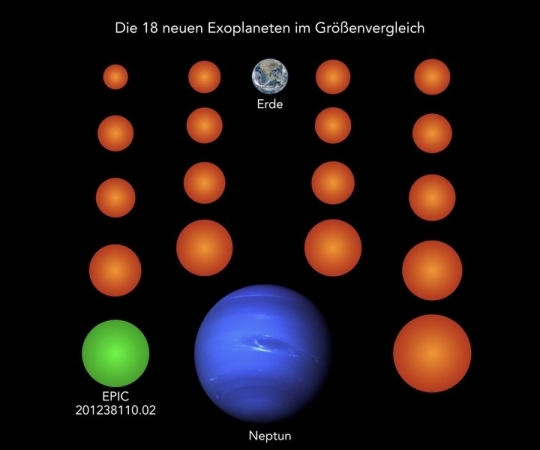

What we find in this initial cut using TLS is 18 planets that have been overlooked in previous work. The new worlds turn out to be, in most of the systems studied, the smallest planets, and most of them orbit their star closer than the previously known planetary companions. So with one exception we’re talking about planets with temperatures between 100 and 1,000 degrees Celsius. The exception is an apparent super-Earth (EPIC 201238110.02) circling a red dwarf star possibly in its habitable zone.

A system that stands out is that around the star known as K2-32. Heller and Hippke are able to identify a fourth transiting world here to join the three Neptune-size planets already known, this one with a radius roughly the size of Earth’s. With four planets orbiting it, K2-32 joins a list of about a dozen K2 stars with four or more transiting planet candidates. As the paper notes, only K2-138 has been found to host five such planets, all in super-Earth to sub-Neptune range.

The authors discuss the K2-32 system in detail in the first of two new papers, with the second examining the 17 other planets their methods have uncovered. Here we find worlds ranging from 0.7 Earth radii to 2.2 R⊕. That smallest world (EPIC 201497682.03) is the second smallest ever discovered with K2. Half of the planets are smaller than 1.2 Earth radii.

The success of the TLS methodology is provocative and leads to expectations of further discoveries. After all, beyond the K2 data, we have thousands of datasets for other stars. From the paper:

Based on our discovery rate of one new planet around about 3.5 % of all stars from K2 with previously known planets, we expect that TLS can find another 100 small planets around the thousands of stars with planets and candidates from the Kepler primary mission that have been missed in previous searches.
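The quoted rate follows directly from the numbers given earlier in this post: 18 new planets among 517 stars. The short check below reproduces it and then, as a back-of-envelope extrapolation of my own rather than a figure from the paper, asks how many similar stars would be needed to yield 100 further planets at the same rate.

```python
NEW_PLANETS = 18       # planets recovered with TLS
STARS_SEARCHED = 517   # K2 stars with previously known planets

discovery_rate = NEW_PLANETS / STARS_SEARCHED
# Stars required for 100 more planets if the same rate held (an assumption)
stars_for_100 = 100 / discovery_rate

print(f"discovery rate: {discovery_rate * 100:.1f} %")
print(f"stars needed for 100 planets: {stars_for_100:.0f}")
```

The answer comes out at just under 3,000 stars, which squares with the paper's reference to "thousands of stars" from the Kepler primary mission.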

Image: Almost all known exoplanets are larger than Earth and typically as large as the gas planet Neptune. The 18 newly discovered planets (here in orange and green), for comparison, are much smaller than Neptune, three of them even smaller than Earth and two more as large as Earth. Planet EPIC 201238110.02 is the only one of the new planets cool enough to potentially host liquid water on its surface. Credit: © NASA/JPL (Neptune), NASA/NOAA/GSFC/Suomi NPP/VIIRS/Norman Kuring (Earth), MPS/René Heller

The papers are Heller, Hippke & Rodenbeck, “Transit least-squares survey. I. Discovery and validation of an Earth-sized planet in the four-planet system K2-32 near the 1:2:5:7 resonance,” Astronomy & Astrophysics, 625, A31 (abstract); and “Transit least-squares survey. II. Discovery and validation of 17 new sub- to super-Earth-sized planets in multi-planet systems from K2,” Astronomy & Astrophysics, 2019, in press (abstract).

May 23, 2019

Is High Definition Astrometry Ready to Fly?

In a white paper submitted to the Decadal Survey on Astronomy and Astrophysics (Astro2020), Philip Horzempa (LeMoyne College) suggests using technology originally developed for the NASA Space Interferometry Mission (SIM), along with subsequent advances, in a mission designed to exploit astrometry as an exoplanet detection mode. I’m homing in on astrometry itself in this post rather than the mission concept, for the technique may be coming into its own as an exoplanet detection method, and I’m interested in new ways to exploit it.

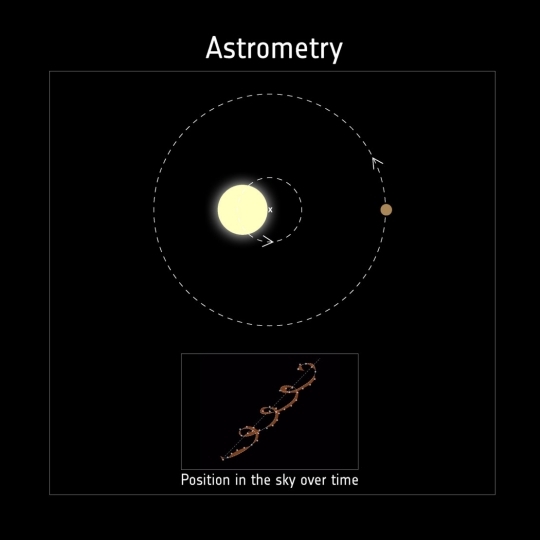

Astrometry is all about refining our measurement of a star’s position in the sky. When I talk to people about detecting exoplanets, I find that many confuse astrometry with radial velocity, for in loose explanatory terminology, both refer to measuring the ‘wobble’ a planet induces on a star. But radial velocity examines Doppler effects in a star’s spectrum as the star moves toward and then away from us, while astrometry looks for tiny changes in the position of the star in the sky, especially the kind of periodic shift that implies an otherwise unseen planet.

The problem has always been the level of precision needed to detect such minute variations. Long-time Centauri Dreams readers will recall Peter Van de Kamp’s work at Swarthmore on what he believed to be a planet detection around Barnard’s Star, but we can also mention Sarah Lippincott’s efforts at the same observatory on Lalande 21185. Kaj Strand, likewise working at Sproul Observatory at Swarthmore, thought he had detected a planet orbiting 61 Cygni all the way back in 1943. Instrumentation-induced errors unfortunately made these detections unlikely.

But astrometry has major advantages if we can reach the necessary accuracy, which is Horzempa’s point. The Gaia mission makes the case that astrometry is already in play in the exoplanet hunt. Thus the mission’s detections of velocity anomalies at Barnard’s Star, Tau Ceti and Ross 128. Gaia also found evidence for a possible gas giant around Epsilon Indi. For more on this, see Kervella et al., “Stellar and Substellar Companions of Nearby Stars from Gaia DR2,” Astronomy & Astrophysics Volume 623, A72 (March 2019). Abstract.

Image: Astrometry is the method that detects the motion of a star by making precise measurements of its position on the sky. This technique can also be used to identify planets around a star by measuring tiny changes in the star’s position as it wobbles around the center of mass of the planetary system. ESA’s Gaia mission, through its unprecedented all-sky survey of the position, brightness and motion of over one billion stars, is generating a large dataset from which exoplanets will be found, either through observed changes in a star’s position on the sky due to planets orbiting around it, or by a dip in its brightness as a planet transits its face. Credit: ESA.

What is significant here is that an era of astrometry 50 to 100 times more precise than Gaia may be at hand. Recall the units used to describe small angles: each of the 360 degrees in a circle divides into 60 arcminutes, and each arcminute into 60 arcseconds. A milliarcsecond, then, is one-thousandth of an arcsecond, and a microarcsecond is one millionth of an arcsecond. You can see from this the precision needed for space-based astrometry.
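These units are easier to grasp with a number attached. The sketch below converts a small angle from radians to microarcseconds and, as a sanity check, applies it to the euro-coin-on-the-Moon comparison that comes up later in this post. The coin diameter (23.25 mm for a 1-euro coin) and the mean Earth-Moon distance (384,400 km) are values I am supplying, not figures from the white paper.

```python
import math

ARCSEC_PER_DEGREE = 60 * 60       # 60 arcminutes x 60 arcseconds
UAS_PER_ARCSEC = 1_000_000        # microarcseconds per arcsecond

def radians_to_microarcsec(angle_rad):
    """Convert an angle in radians to microarcseconds."""
    return math.degrees(angle_rad) * ARCSEC_PER_DEGREE * UAS_PER_ARCSEC

# Assumed inputs: 1-euro coin diameter and mean Earth-Moon distance, in meters
COIN_DIAMETER_M = 0.02325
MOON_DISTANCE_M = 3.844e8

arcsec_in_circle = 360 * ARCSEC_PER_DEGREE
# Small-angle approximation: angle ~ size / distance
coin_uas = radians_to_microarcsec(COIN_DIAMETER_M / MOON_DISTANCE_M)

print(f"arcseconds in a full circle: {arcsec_in_circle}")
print(f"euro coin on the Moon: {coin_uas:.1f} microarcseconds")
```

The coin works out to roughly 12 microarcseconds, so "10 microarcseconds" is a fair round number for it, and 100 nano-arcseconds is about a hundred times finer still.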

Horzempa believes we are ready for 0.1 micro-arcsecond (μas), or 100 nano-arcseconds (nas) astrometry, a level anticipated for NASA’s canceled Space Interferometry Mission, and one for which hardware had already been constructed. Incorporating advances in laser metrology engineering since 2010, the Star Watch mission he is proposing would leverage the SIM work and make astrometry a valuable tool for complementing radial velocity and transit work. High-definition astrometry at 100 nas, he argues, is the best way to achieve the goal stated in a National Academy of Sciences’ 2018 report, which calls for developing ways to detect and characterize terrestrial-class planets in orbit around Sun-like stars.

Gaia is able to measure the position of brighter objects to a precision of 5 μas, with proper motions established down to 3.5 μas per year. For the faintest stars (magnitude 20.5), reports the European Space Agency, several hundred μas is the working range. Bear in mind that, according to the NAS report, 20 μas is a level of precision “sufficient to detect gas giant planets amenable to direct imaging follow-up with GSMTs” [giant segmented mirror telescopes].
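To see why the gap between 20 μas and 100 nas matters, it helps to estimate the size of the wobble itself. A standard approximation for a planet much lighter than its star puts the astrometric signature, in arcseconds, at the planet-to-star mass ratio times the orbital radius in AU divided by the distance in parsecs. The sketch below applies it to Jupiter and Earth analogs around a Sun-like star at 10 parsecs. The distance and the choice of analogs are my illustrative assumptions, not cases from the white paper.

```python
EARTH_TO_SUN_MASS = 1 / 332946     # Earth mass in solar masses
JUPITER_TO_SUN_MASS = 1 / 1047.35  # Jupiter mass in solar masses

def astrometric_signature_uas(mass_ratio, semi_major_axis_au, distance_pc):
    """Stellar wobble amplitude in microarcseconds, assuming the planet
    is much less massive than the star: alpha = (Mp/Ms) * (a / d)."""
    alpha_arcsec = mass_ratio * semi_major_axis_au / distance_pc
    return alpha_arcsec * 1e6

DISTANCE_PC = 10.0  # illustrative distance to the host star

earth_uas = astrometric_signature_uas(EARTH_TO_SUN_MASS, 1.0, DISTANCE_PC)
jupiter_uas = astrometric_signature_uas(JUPITER_TO_SUN_MASS, 5.2, DISTANCE_PC)

print(f"Earth analog at 10 pc: {earth_uas:.2f} microarcseconds")
print(f"Jupiter analog at 10 pc: {jupiter_uas:.0f} microarcseconds")
```

The Jupiter analog produces a signal of several hundred microarcseconds, comfortably above a 20 μas threshold. The Earth analog comes in near 0.3 μas, or about 300 nas, which is out of reach at Gaia's precision but above a 100 nas floor.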

If 10 microarcseconds is the size of a euro coin on the Moon as viewed from Earth, imagine what might be done with 100 nano-arcseconds, a high-definition astrometry that would offer many advantages over conventional radial velocity studies. Radial velocity can only offer a minimum mass determination because we cannot know the angle of inclination of unseen planets, and thus don’t know whether we are seeing a system edge-on or at any other angle.

Horzempa’s case is that astrometry can be used to tune up such radial velocity detections and tease out other worlds in the same systems that are undetected by RV. A case in point is Tau Ceti, which raises questions based on radial velocity work alone. From the white paper, where the author notes that this is a system that has been thought to hold 4 super-Earths:

This would be the case only if the RV m(sin i) mass values are the true values. However, if one assumes that the invariant plane for the system is the same as that of the observed disk (40 degrees), then the masses would range from 2.5 to 10 Me, putting them in the sub-Neptune to Neptune class. That would still be an interesting system but it would not be a system of super Earths. High-Definition astrometry will bring clarity, as these inner worlds of tau Ceti, if they exist, would generate signals that ranged from 1 to 15 uas, well within the reach of the next-generation 100 nano-arcsecond Probe. As a bonus, the Star Watch mission would be able to detect any true terrestrial worlds in the tau Ceti system.
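The correction being described is simple geometry: radial velocity yields m sin i, so the true mass is that value divided by the sine of the inclination. The sketch below works out the scaling for the 40-degree inclination mentioned in the quote. The minimum masses fed in are hypothetical round numbers chosen for illustration, not the published Tau Ceti values.

```python
import math

def true_mass(m_sin_i, inclination_deg):
    """Recover the true mass from an RV minimum mass and an inclination
    (90 degrees = edge-on, where true mass equals m sin i)."""
    return m_sin_i / math.sin(math.radians(inclination_deg))

INCLINATION_DEG = 40.0  # disk inclination cited in the white paper

scale_factor = true_mass(1.0, INCLINATION_DEG)
# Hypothetical minimum masses in Earth masses, for illustration only
example_min_masses = [2.0, 4.0]
example_true_masses = [true_mass(m, INCLINATION_DEG) for m in example_min_masses]

print(f"scale factor at 40 degrees: {scale_factor:.2f}")
print([round(m, 1) for m in example_true_masses])
```

At 40 degrees every minimum mass grows by a factor of about 1.56, enough to push a nominal super-Earth toward the sub-Neptune class. Astrometry measures the inclination directly, which is why it removes this ambiguity.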

Horzempa cites other intriguing possibilities, such as Zeta Reticuli, a star not known to have any planets through transit or radial velocity methods. Orbital inclination could rule out a transit detection in any case, while smaller planets might be too difficult a catch for RV around this binary system of two G-class stars in a wide orbit. 100-nas astrometry would be able, however, to detect Earth-class planets if they are there around either or both stars. If we are seeing ‘face-on’ systems, astrometry may be the only detection method applicable here.

There is no question about the advantages astrometry presents in being able to measure the true mass of planets it detects as opposed to a minimum mass, and as Horzempa writes, “…it will provide knowledge of the coplanarity of exoplanet systems, and it will discover extremely rare (and valuable) planetary system ‘oddballs.’” All this shows the refinement of a method that has disappointed in the past. From the NAS report:

Astrometry has a spotty history involving exoplanet false positive detections and the cancellation of the Space Interferometry Mission. Only two “high-confidence” exoplanets have been discovered with this technique (Muterspaugh et al., 2010), neither of which has been independently confirmed. Despite its intrinsic merit, astrometry has not been viable as a search technique, given that a space mission is needed for large samples and low-mass planets.

It is a space mission that Horzempa now calls for, one building on technology developed for the Space Interferometry Mission and taking astrometry to unprecedented levels of precision. It is far too early to know how the idea will be received in the space community, but given the movement of astrometry toward the 100-nas level, a case can be made for supplementing existing methods with a strategy that at long last is beginning to deliver on its exoplanet promise. We’ll follow the progress of both astrometric measurement and this Star Watch mission concept with interest.

The paper is Horzempa, “High Definition Astrometry,” submitted to Astro2020 (Decadal Survey on Astronomy and Astrophysics) and available as a preprint.

May 22, 2019

Insulating a Plutonian Ocean

An ocean inside Pluto would have implications for many frozen moons and dwarf planets, not to mention exoplanets where conditions at the surface are, like Pluto, inimical to life as we know it. But while a Plutonian ocean has received considerable study (see, for example, Francis Nimmo’s work as discussed in Pluto: Sputnik Planitia Gives Credence to Possible Ocean), working out the mechanisms for liquid ocean survival over these timeframes and conditions has proven challenging. A new paper now suggests a possible path.

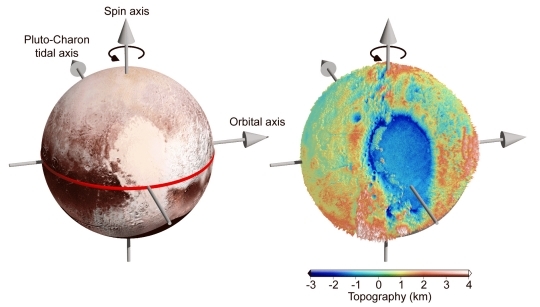

Shunichi Kamata of Hokkaido University led the research, which includes contributions from the Tokyo Institute of Technology, Tokushima University, Osaka University, Kobe University, and the University of California, Santa Cruz. At play are computer simulations, reported in Nature Geoscience, that offer evidence for the potential role of gas hydrates (gas clathrates) in keeping a subsurface ocean from freezing. At the center of the work, as in so much recently written about Pluto, is the ellipsoidal basin that is now known as Sputnik Planitia.

Image: The bright “heart” on Pluto is located near the equator. Its left half is a big basin dubbed Sputnik Planitia. Credit: Figures created using images by NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

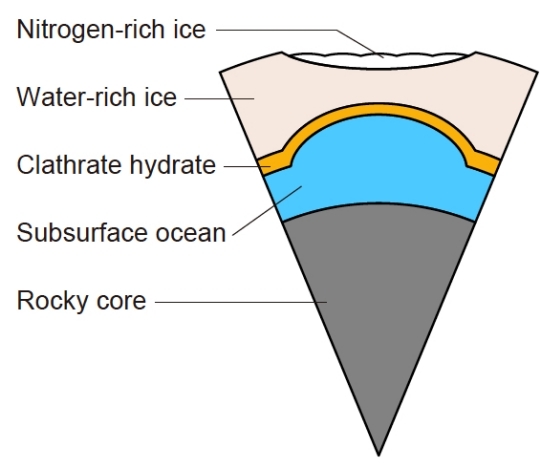

Gas hydrates are crystalline solids, formed of gas trapped within molecular water ‘cages’. The ‘host’ molecule is water, the ‘guest’ molecule a gas or a liquid. The lattice-like frame would collapse into a conventional ice crystal structure without the support of the trapped molecules.

What is illuminated by the team’s computer simulations is that such hydrates are highly viscous and offer low thermal conductivity. They could serve, in other words, as efficient insulation for an ocean beneath the ice. The location and topography of Sputnik Planitia lead the researchers to believe that if a subsurface ocean exists, the ice shell in this area is thin. Uneven on its inner surface, the shell could thus cover liquid water kept viable by the insulating gas hydrates.

We wind up with a concept for Pluto’s interior that looks like the image below.

Image: The proposed interior structure of Pluto. A thin clathrate (gas) hydrate layer works as a thermal insulator between the subsurface ocean and the ice shell, keeping the ocean from freezing. Credit: Kamata S. et al., “Pluto’s ocean is capped and insulated by gas hydrates.” Nature Geoscience, May 20, 2019 (full citation below).

Methane is implicated as the most likely gas to serve within this insulating lattice, a provocative theory because of what we know about Pluto’s atmosphere, which is poor in methane but rich in nitrogen. What exactly is happening to support this composition? From the paper:

CO2 clathrate hydrates at the seafloor could have acted as a thermostat to prevent heat transfer from the core to the ocean. Primordial CO2, however, may have been converted into CH4 through hydrothermal reactions within early Pluto under the presence of Fe–Ni metals. As CH4 and CO predominantly occupy clathrate hydrates, the components that degassed into the surface–atmosphere system would be rich in other species, such as N2.

We arrive at an atmosphere laden with nitrogen but low in methane. This notion of a thin gas hydrate layer as a ‘cap’ on a subsurface ocean is one that could serve as a generic mechanism to preserve subsurface oceans in large, icy moons and KBOs. “This could mean there are more oceans in the universe than previously thought, making the existence of extraterrestrial life more plausible,” adds Kamata.

The authors point out that freezing of the ocean, causing the ice shell to thicken, would cause the radius and surface area of Pluto to increase, producing faults on the surface. New Horizons was able to observe these, and recent studies have shown that the fault pattern supports the global expansion of the dwarf planet. Thus we have a scenario consistent with a global ocean, perhaps one that is still partly liquid. Analyzing surface changes would offer constraints on the thickness of any potential layers of clathrates that could firm up the liquid water hypothesis.

The paper is Kamata et al., “Pluto’s ocean is capped and insulated by gas hydrates,” Nature Geoscience May 20, 2019 (abstract).

May 21, 2019

New Horizons: Results and Interpretations

Another reminder that the days of the lone scientist making breakthroughs in his or her solitary lab are today counterbalanced by the vast team effort required for many experiments to continue. Thus the armies involved in gravitational wave astronomy, and the demands for big money and large populations of researchers at our particle accelerators. So, too, with space exploration, as the arrival of early results from New Horizons in the journals is making clear.

We now have a paper on our mission to Pluto/Charon and the Kuiper Belt that bears the stamp of more than 200 co-authors, representing 40 institutions. How could it be otherwise if we are to credit the many team members who played a role? As the New Horizons site notes: “[Mission principal investigator Alan] Stern’s paper includes authors from the science, spacecraft, operations, mission design, management and communications teams, as well as collaborators, such as contributing scientist and stereo imaging specialist (and legendary Queen guitarist) Brian May, NASA Planetary Division Director Lori Glaze, NASA Chief Scientist Jim Green, and NASA Associate Administrator for the Science Mission Directorate Thomas Zurbuchen.”

New Horizons still has the capacity to surprise us, or maybe ‘awe’ is the better word. That’s what I felt when I saw the now familiar shape of Ultima Thule highlighted on the May 17 Science cover in an image that shows us what the object would look like to the human eye. The paper presents the first peer-reviewed scientific results and interpretations of Ultima a scant four months after the flyby.

As to the beauty of that cover image, something like this was far from my mind during the 2015 Pluto/Charon flyby, when the occasional talk ran to an extended mission and a new Kuiper Belt target. I wouldn’t have expected anything with this degree of clarity or depth of scientific return from what was at that time only a possible future rendezvous, and one that would not be easy to realize. Another indication of how outstanding New Horizons has been and continues to be.

Image: This composite image of the primordial contact binary Kuiper Belt Object 2014 MU69 (nicknamed Ultima Thule) – featured on the cover of the May 17 issue of the journal Science – was compiled from data obtained by NASA’s New Horizons spacecraft as it flew by the object on Jan. 1, 2019. The image combines enhanced color data (close to what the human eye would see) with detailed high-resolution panchromatic pictures. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute/Roman Tkachenko.

I’d be hard-pressed to come up with a space-themed cover from Science that’s more memorable than that. For that matter, here’s an entertaining thought: What would the most memorable space-themed Science cover of, say, 2075 look like? Or 2250? Let’s hope we’re vigorously exploring regions from the Oort Cloud outward by the latter year.

Meanwhile, New Horizons is helping us look back toward our system’s earliest days.

“We’re looking into the well-preserved remnants of the ancient past,” says Stern. “There is no doubt that the discoveries made about Ultima Thule are going to advance theories of solar system formation.”

Indeed. The two lobes of this 36-kilometer long object include an oddly flat surface (nicknamed ‘Ultima’) and the much rounder ‘Thule,’ the two connected by a ‘neck’ that raises the question of how the KBO originally formed. We seem to be looking at a low velocity merger of two objects that had originally been in rotation. Their merger is important; remember, we’re dealing with an ancient planetesimal. From the paper:

For MU69’s two lobes to reach their current, merged spin state, they must have lost angular momentum if they initially formed as co-orbiting bodies. The lack of detected satellites of MU69 may imply ancient angular momentum sink(s) via (i) the ejection of formerly co-orbiting smaller bodies by Ultima and Thule, (ii) gas drag, or both. This suggests that contact binaries may be rare in CCKBO [Cold Classical Kuiper Belt Object] systems with orbiting satellites. Another possibility, however, is that the lobes Ultima and Thule impacted one another multiple times, shedding mass along with angular momentum before making final contact. But the alignment of the principal axes of MU69’s two lobes tends to disfavor this hypothesis.

Also interesting is the possibility of tidal locking of the two lobes before their merger:

In contrast, tidal locking could quite plausibly have produced the principal axis alignment we observe, once the co-orbiting bodies were close enough and spin-orbit coupling was most effective… Gas drag could also have played a role in fostering the observed coplanar alignment of the Ultima and Thule lobes… Post-merger impacts may have also somewhat affected the observed, final angular momentum state.

And let’s consider what the science team has to say about Ultima Thule’s color. Like many other objects found in the Kuiper Belt, it’s red, much redder than Pluto. This is obviously of significant interest because of what it implies about the organic materials on the surface and how they are being modified by solar radiation. Here the mixture of surface organics differs from that of some, though not all, of the icy objects previously examined:

There are key color slope similarities between the spectrum of MU69 and those of the KBO (55638) 2002 VE 95 (45) and the escaped KBO 5145 Pholus… Also, these objects all exhibit an absorption band near 2.3 µm, tentatively attributed to methanol (CH3OH) or perhaps more complex organic molecules intermediate in mass between simple molecular ices and tholins… Similar spectral features are also apparent on the large, dark red equatorial region of Pluto informally called Cthulhu… suggesting similarities in the chemical feedstock and processes that could operate there and on MU69.

The paper points out that we see evidence for H2O ice in the form of shallow spectral absorption features, indicating low abundance in the object’s upper surface, as compared with planetary satellites and even some Kuiper Belt objects with clearer H2O detections. As expected, we do not see the spectral signatures of volatile ices such as carbon monoxide, nitrogen, ammonia or methane, which were observed at Pluto. These were not expected at MU69 “…owing to the ready thermally driven escape of such ices from this object over time.”

We’re left with a lot of unanswered questions, including the nature and origin of surface features like ‘Maryland crater,’ the largest (8 kilometer wide) scar on the KBO, likely the result of an impact, and the various bright spots and patches. How much of the pitted surface can be related to outgassing via sublimation, or material falling into already existing underground spaces? Bear in mind that the data return from New Horizons is to continue until late summer of 2020. Meanwhile, the spacecraft continues to measure the brightness of other Kuiper Belt objects while also mapping the charged-particle radiation and dust environment of its surroundings.

The paper is Stern et al., “Initial results from the New Horizons exploration of 2014 MU69, a small Kuiper Belt object,” Science Vol. 364, Issue 6441 (17 May 2019). Abstract.

May 17, 2019

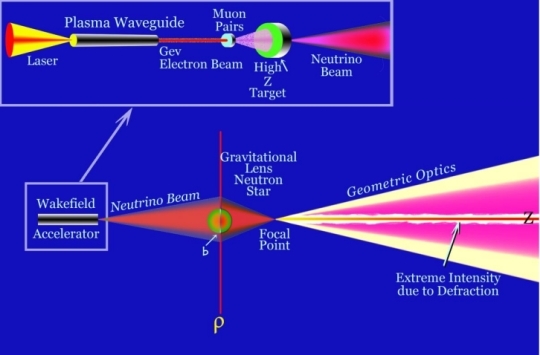

A Neutrino Beam Beacon

If you want to look for possible artifacts of advanced civilizations, as do those practicing what is now being called Dysonian SETI, then it pays to listen to the father of the field. My friend Al Jackson has done so and offers a Dyson quote to lead off his new paper: “So the first rule of my game is: think of the biggest possible artificial activities with limits set only by the laws of physics and look for those.” Dyson wrote that in a 1966 paper that repays study today (citation below). Its title: “The Search for Extraterrestrial Technology.” Dysonian SETI is a big, brawny zone where speculation is coin of the realm and the imagination is encouraged to be pushed to the limit.

Jackson is intrigued, as are so many of us, with the idea of using the Sun’s gravitational lens to make observations of other stars and their planets. Our recent email conversation brought up the name of Von Eshleman, the Stanford electrical engineer and pioneer in planetary and radio sciences who died two years ago in Palo Alto at 93. Eshleman was writing about gravitational lensing possibilities at a time when we had no technologies that could take us to 550 AU and beyond, the area where lensing effects begin to be felt, but he saw that an instrument there could make observations of objects directly behind the Sun as their light was focused by it.
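The 550 AU figure falls out of the standard light-deflection formula. A ray passing a mass M at impact parameter b is bent by an angle of about 4GM/(c²b), so rays grazing the solar limb cross the optical axis at a distance of roughly b²c²/(4GM). The sketch below evaluates that with rounded physical constants. It is a simplified point-mass estimate that ignores the solar corona, not a mission calculation.

```python
# Rounded physical constants in SI units.
G = 6.674e-11      # gravitational constant
C = 2.998e8        # speed of light, m/s
M_SUN = 1.989e30   # solar mass, kg
R_SUN = 6.957e8    # solar radius, m
AU = 1.496e11      # astronomical unit, m


def min_focal_distance_au(mass_kg, impact_parameter_m):
    """Distance at which rays with the given impact parameter cross the axis.

    Uses the weak-field deflection angle 4GM / (c^2 b), so the focal
    distance is b / angle = b^2 c^2 / (4 G M).
    """
    distance_m = impact_parameter_m**2 * C**2 / (4.0 * G * mass_kg)
    return distance_m / AU


solar_focus_au = min_focal_distance_au(M_SUN, R_SUN)
print(f"Minimum solar focal distance: {solar_focus_au:.0f} AU")
```

Rays passing farther from the limb are bent less and focus farther out, which is why the focus is a line starting near 550 AU rather than a single point.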

Claudio Maccone has been working this terrain for a long time, and the complete concept is laid out in his seminal Deep Space Flight and Communications: Exploiting the Sun as a Gravitational Lens (Springer Praxis, 2009). There is much to be said about lensing and space missions, and it’s heartening to see interest among scientists within the Breakthrough Starshot project; a sail moving at 20 percent of lightspeed gets us to 550 AU and beyond relatively quickly. By my back-of-the-envelope figuring, travel time is just a little short of 16 days.
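That back-of-the-envelope number is simple to reproduce. The sketch below assumes a constant cruise speed for the whole distance and ignores the acceleration phase. The light travel time for one AU (about 499 seconds) is the only constant needed.

```python
AU_LIGHT_SECONDS = 499.005  # light travel time across one AU, in seconds
SECONDS_PER_DAY = 86400.0


def travel_time_days(distance_au, fraction_of_c):
    """Cruise time in days to cover distance_au at a constant fraction of c."""
    seconds = distance_au * AU_LIGHT_SECONDS / fraction_of_c
    return seconds / SECONDS_PER_DAY


days_to_focus = travel_time_days(550.0, 0.20)
print(f"550 AU at 0.2c: {days_to_focus:.1f} days")
```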

There would be no need for Starshot to approach 550 AU at 20 percent of c, of course. The focal line runs to infinity, but as Jackson explains when running through gravitational lensing’s calculations, we can assume beam intensity gradually diminished by absorption in the interstellar medium, though all of this with little beam divergence. Just how to use the Sun’s gravity lens (a relay for returning data from a star mission, I assume) and how to configure mission parameters to get to the lensing region and use it are under debate.

Transmitting Through a Gravitational Lens

But back to Al Jackson’s paper, which offers us a take on gravitational lensing that I have never before encountered [see my addendum at the end of this post for a correction]. What he is proposing is that an advanced civilization of the kind Dyson is interested in would have the capability of using a gravitational lens to transmit data. He’s turned the process around, from observation to beacon or other form of communication. And he’s working with neutrinos, where attenuation from the interstellar medium is negligible.

A gravitational lens does not, of course, need to be a star, but could be a higher mass object like a neutron star. In Jackson’s thinking, a KII civilization could place a neutrino beam transmitting station around a neutron star. We make neutrino beams today via the decay of pi mesons, as the author reminds us, when large accelerators boost protons to relativistic energies that strike a target, producing pions and kaons that decay into neutrinos, electrons and muons. What counts for Jackson’s purposes is that pions and kaons can be focused to produce a beam of neutrinos.

For a stellar mass gravitational lens and 1 GeV neutrinos, the wavelength is about 10⁻¹⁴ cm, the gain is approximately 10²⁰! The characteristic radius of main region of concentration is about one micron; however there is an effective flux out to about one centimeter.

And as mentioned above:

This beam intensity extends to infinity only diminished by absorption in the interstellar medium, encounters with a massive object like a planet or star and a very small beam divergence.

Image: This is Figure 6 from the paper. Caption: A schematic illustration of a possible neutrino accelerator-transmitter, the accelerator and lens (nothing to scale). Credit: A. A. Jackson.

A one-centimeter beam creates the problem of hitting a specific target, which demands a phenomenal pointing accuracy that could only be left to our putative advanced civilization. Even so, increase the number of transmitters and detection becomes easier. Thus Jackson:

Suppose that a K2 type civilization capable of interstellar flight can reach a neutron star it should have the technological capability to build a beacon consisting of an array of transmitters in a constellation of orbits about the neutron star. Let this constellation consist of 10¹⁸ ‘neutrino’ transmitters 1 meter in characteristic size ‘covering’ the area of a sphere 1000 km in radius with 10¹⁸ particle accelerators in orbit… At the present time there is the development of plasma Wakefield particle accelerators that are meters in size [21, 22]. It is probable that a K2 civilization may construct Wakefield electron accelerators of very small size.

Jackson has heeded Dyson, that’s for sure. Remember the latter’s injunction: “…think of the biggest possible artificial activities with limits set only by the laws of physics and look for those.”

What emerges is a ‘constellation of neutrino beam transmitters,’ 10¹⁸ in orbit at 1000 neutron star radii, increasing the probability of detection, so that the detection rate at 10,000 light years becomes approximately 5 per minute. The transmitters must be configured to appear as point sources to the gravitational lens, another leap demanding KII levels of performance. But if a civilization is trying to be noticed, a neutrino beam that takes neutrino detection well out of the range produced through stellar events in the galaxy should stand out.

Thus we have a method for producing directed neutrino signal transmission. Why come up with such? Harking back to Dyson, we can consider the need to examine the range of possible ETI technologies, hoping to create a catalog that could explain future anomalous observations. Jackson’s beam could be used as what he calls a ‘honey pot’ to attract attention to an electromagnetic transmitter broadcasting more sophisticated information. But we would not necessarily be able to understand what uses an advanced civilization would make of such capabilities. We might have to content ourselves merely with the possibility of observing them.

As Jackson points out, a KII civilization “…would likely have the resources to finesse the technology in a smarter way,” so what we have here is a demonstration that a thing may be possible, while we are left to wonder in what other ways a neutrino source can be used to produce detections at cosmic distances. Going deep to speculate on technologies far beyond our reach today should, as Dyson says, remind us to stay within the realm of known physics while simultaneously asking about phenomena that advanced engineering could produce. We hope through the labors of Dysonian SETI to recognize such signatures if we see them.

Appreciating Von Eshleman

And a digression: When Al brought up Von Eshleman in our conversations, I began thinking back to early gravitational lensing work and Eshleman’s paper, written back in 1979 but prescient, surely, of what was to come in the form of serious examination of lensing capabilities for space missions. Let me quote Eshleman’s conclusion from the paper, cited below. I have a lot more to say about Eshleman’s work, but let’s get into that at another time. For now:

It has been pointed out that radio, television, radar, microwave link, and other terrestrial transmissions are expanding into space at 1 light-year per year (2). Another technological society near a neighboring star could receive the strongest of these directly with substantial effort and could learn a great deal about the earth and the technology of its inhabitants. The concepts presented here suggest that on an imaginary screen sufficiently far behind that star, the short-wavelength end of this terrestrial activity is now being played out at substantial amplifications. Properly placed receivers with antennas of modest size could in principle scan the earth and discriminate between different sources, mapping such activity over the earth and learning not only about the technology of its inhabitants, but also about their thoughts. It is possible that several or many such focused stories about other worlds are now running their course on such a gigantic screen surrounding our sun, but no one in this theater is observing them…

I learned about Eshleman’s work originally through Claudio Maccone and often reflect on the tenacity of ideas as we go from a concept originally suggested by Einstein to a SETI opportunity realized by Eshleman to a mission concept detailed by Maccone, and now a potential actual mission seriously discussing using gravitational lensing as a relay opportunity for data return from Proxima Centauri (Breakthrough Starshot). As it always has, the interstellar field demands long-term thinking that crosses generations in support of a breathtaking goal.

[Addendum]: Although I hadn’t heard of discussions on using gravitational lensing for transmission, an email just now from Clément Vidal points out that both Claudio Maccone and Vidal himself have looked into this. In Clément’s case, the reference is Vidal, C. 2011, “Black Holes: Attractors for Intelligence?” at Towards a scientific and societal agenda on extra-terrestrial life, 4-5 Oct, Buckinghamshire, Kavli Royal Society International Centre. Abstract here. This quote is to the point:

“For a few decades, researchers have proposed to use the Sun as a gravitational lens. At 22.45AU and 29.59AU we have a focus for gravitational waves and neutrinos. Starting from 550AU, electromagnetic waves converge. Those focus regions offer one of the greatest opportunity for astronomy and astrophysics, offering gains from 2 to 9 orders of magnitude compared to Earth-based telescopes…It is also worth noting that such gravitational lensing could also be used for communication…Indeed, it is easy to extrapolate the maximal capacity of gravitational lensing using, instead of the Sun, a much more massive object, i.e. a neutron star or a black hole. This would probably constitute the most powerful possible telescope. This possibility was envisioned -yet not developed- by Von Eshleman in (1991). Since objects observed by gravitational lensing must be aligned, we can imagine an additional dilating and contracting focal sphere or artificial swarm around a black hole, thereby observing the universe in all directions and depths.”

Vidal is the author of The Beginning and the End: The Meaning of Life in a Cosmological Perspective (2014), and his thinking is examined in The Zen of SETI and elsewhere in the archives.

I’m glad Clément wrote, especially as it jogged my memory about Claudio Maccone’s paper on using lenses as a communications tool, where the possibilities are striking. See The Gravitational Lens and Communications for more. I wrote about this back in 2009 and thus have no good excuse for letting it slip my mind!

The paper is Jackson, “A Neutrino Beacon” (preprint). The Dyson paper is “The Search for Extraterrestrial Technology,” in Marshak, ed. Perspectives in Modern Physics: Essays in Honor of Hans Bethe, New York: John Wiley & Sons 1966. The Eshleman paper is “Gravitational Lens of the Sun: Its Potential for Observations and Communications over Interstellar Distances,” Science Vol. 205 (14 September 1979), pp. 1133-1135 (abstract).

May 16, 2019

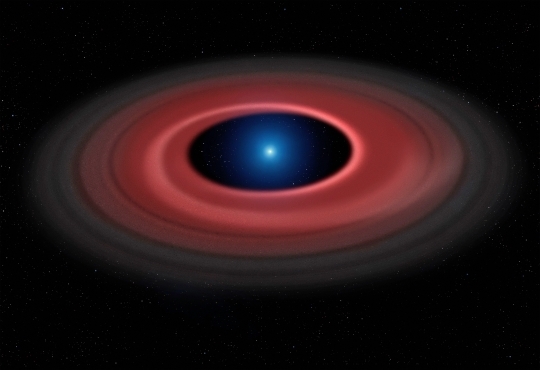

Survivors: White Dwarf Planets

The term ‘destruction radius’ around a star sounds like something out of a generic science fiction movie, probably one with lots of laser battles and starship crews dressed in capes. It’s a descriptive phrase as used in this University of Warwick (UK) news release, but let’s go with ‘Roche radius’ instead. Dimitri Veras, a physicist at the university, probes the term in the context of white dwarfs in a new paper for Monthly Notices of the Royal Astronomical Society. Veras and collaborators are looking at what happens after the challenging transition between red giant and white dwarf, a time when planets will be in high turmoil.

The idea is to model the tidal forces that occur once a star collapses into a super-dense white dwarf, blowing away its outer layers in the process. We see the clear potential for dragging planets into new orbits, with some pushed out of their stellar systems entirely. The Roche radius, or limit, is the distance from the star where a self-gravitating object will disintegrate because of tidal forces, forming a disk of debris around the star. It’s not limited to white dwarfs, of course, and can be applied to the tidal forces acting between any two celestial bodies.

White dwarfs are generally comparable in size to the Earth, while their Roche limit extends outwards to about one solar radius. They’re also known to have metallic debris in their photosphere, presumably from objects pulled within the Roche radius. Learning about the tidal interactions of such worlds will help us calculate a planet’s inward and/or outward drift, and determine whether an object in a particular orbit will reach the Roche radius and be destroyed.
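For a sense of scale, the classical textbook Roche formula can be evaluated for a white dwarf. This is not the tidal model used in the Veras paper, only the standard estimate d = k (3M / 4πρ)^(1/3), where M is the mass of the primary, ρ is the density of the orbiting body, and k is about 1.26 for a rigid body or 2.44 for a fluid one. The white dwarf mass (0.6 solar masses) and the rocky density (3000 kg/m³) are assumed typical values.

```python
import math

M_SUN = 1.989e30  # solar mass, kg
R_SUN = 6.957e8   # solar radius, m

RIGID_COEFF = 2.0 ** (1.0 / 3.0)  # about 1.26, classical rigid-body case
FLUID_COEFF = 2.44                # classical fluid-body case


def roche_radius_m(primary_mass_kg, body_density_kg_m3, coeff):
    """Classical Roche distance: coeff * (3 M / (4 pi rho_body))^(1/3)."""
    volume_term = 3.0 * primary_mass_kg / (4.0 * math.pi * body_density_kg_m3)
    return coeff * volume_term ** (1.0 / 3.0)


# Assumed typical values: 0.6 solar-mass white dwarf, rocky body of 3000 kg/m^3.
wd_mass = 0.6 * M_SUN
rigid_rsun = roche_radius_m(wd_mass, 3000.0, RIGID_COEFF) / R_SUN
fluid_rsun = roche_radius_m(wd_mass, 3000.0, FLUID_COEFF) / R_SUN
print(f"Rigid body: {rigid_rsun:.2f} solar radii")
print(f"Fluid body: {fluid_rsun:.2f} solar radii")
```

Both cases land within a factor of two of one solar radius, roughly a hundred times the radius of the white dwarf itself, which is why debris can be shredded well away from the stellar surface.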

Image: An asteroid torn apart by the strong gravity of a white dwarf has formed a ring of dust particles and debris orbiting the Earth-sized burnt out stellar core. Credit: University of Warwick/Mark Garlick.

I’m not aware of a lot of work on tidal effects in white dwarf scenarios, but Veras believes that now is the time to begin such investigations, for the number of planets around white dwarfs is sure to rise with the advent of new extremely large telescopes (ELTs). We learn here that according to the authors’ models, the more massive a planet, the more likely it will be destroyed by these tidal forces. We do have to keep in mind that the simplifications needed to run these calculations will have to be relaxed in later work if we are to characterize such systems fully.

“Our study, while sophisticated in several respects, only treats homogenous rocky planets that are consistent in their structure throughout,” adds Veras. “A multi-layer planet, like Earth, would be significantly more complicated to calculate but we are investigating the feasibility of doing so too.”

How likely are planets or asteroids to be pulled into the Roche limit? Tidal forces modeled here imply that an object of low viscosity — think of Enceladus as a local example — is ultimately absorbed by the star if it orbits at separations within five times the distance between the center of the white dwarf and the Roche limit. By contrast, a high viscosity world is likely to be eventually swallowed into the star only if it is found at distances closer than twice the separation between the center of the white dwarf and Roche radius. Viscosity counts. Observationally, we might be on the lookout for disruptions in white dwarf disks just outside the Roche limit.

By ‘high viscosity world,’ the paper refers to planets with a dense core of heavy elements, such as the iron- and nickel-rich planetesimal recently found in a star-grazing 2-hour orbit around the white dwarf SDSS J122859.93+104032.9 by astronomers at the same university (for more, see White Dwarf Debris Suggests a Common Destiny; Veras was on the team that did this work).

The current work suggests that this object has avoided falling into the star because of its small size. Or as the paper puts it: “Because the magnitude of the stellar tides scales as the mass of the perturber, the orbital dynamics of the asteroids in the WD 1145+017 and SDSS J1228+1040 systems are unaffected by stellar tides.” The takeaway: The best chance for survival near the white dwarf is to be small and dense, packed with heavy elements. And a little distance doesn’t hurt. A distance of just 0.13 AU, a third of the distance between Mercury and the Sun, is enough to ensure survival for the kind of rocky, homogeneous planet this paper models.

We learn that massive super-Earths are more readily disrupted than lower-mass planets, while as seen above, planets of higher viscosity are more likely to survive than their low-viscosity counterparts. We’re dealing with a challenging environment indeed for surviving objects, but one that repays investigation considering its ubiquity. As the paper points out, almost every known exoplanet currently orbits a star that will become a white dwarf. The authors continue:

Exo-asteroids are already observed orbiting two white dwarfs in real time… Planets which then survive to the white dwarf phase play a crucial role in frequently shepherding asteroids and their observable detritus on to white dwarf atmospheres, even if the planets themselves lie just outside of the narrow range of detectability. Further, planets themselves may occasionally shower a white dwarf with metal constituents through post-impact crater ejecta and when the planetary orbit grazes the star’s Roche radius…

The paper’s goal is to create a computational framework for future observations that can grow out of this early study of a two-body system comprising an idealized solid planet and a white dwarf. Of note: “…the boundary between survival and destruction is likely to be fractal and chaotic,” reinforcing the challenge of characterizing these maximally stressed stellar systems.

An interesting question: Can second- or third-generation planets form from white dwarf debris disks? The paper briefly considers the possibility and notes that such worlds would be in near-circular orbits and possibly be out of the reach of detection by current technologies.

The paper is Veras et al., “Orbital relaxation and excitation of planets tidally interacting with white dwarfs,” Monthly Notices of the Royal Astronomical Society Vol. 486, Issue 3 (July, 2019), pp. 3831-3848 (abstract).

May 15, 2019

Toward a High-Velocity Astronomy

Couple the beam from a 100 gigawatt laser with a single-layer lightsail and remarkable things can happen. As envisioned by scientists working with Breakthrough Starshot, a highly reflective sail made incredibly thin — perhaps formed out of graphene and no thicker than a single molecule — could attain speeds of 20 percent of c. That’s good enough to carry a gram-scale payload to the nearest stars, the Alpha Centauri triple system, with a cruise time of 20 years, for a flyby followed by an agonizingly slow but eventually complete data return.

A key element in the concept, as we saw yesterday, is the payload, which could take advantage of microminiaturization trends that, assuming they continue, could make a functional spacecraft smaller than a cell phone. The first iterations of such a ‘starchip’ are being tested. The Starshot work has likewise caught the attention of Bing Zhang, a professor of astrophysics at the University of Nevada, Las Vegas. Working with Kunyang Li (Georgia Institute of Technology), Zhang explores in a new paper the kind of astronomy that could be done by such a craft.

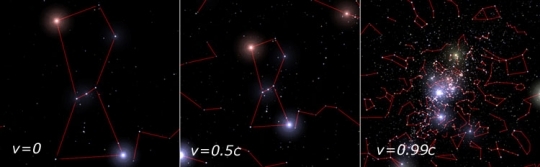

Getting to Proxima Centauri to explore its interesting planet involves a journey that could itself provide a useful scientific return. The paper’s title, “Relativistic Astronomy,” flags its intent to study how movement at relativistic speeds would affect images taken by the probe’s camera. As Zhang explains in a recent essay on his work in The Conversation, when moving at 20 percent of lightspeed, an observer in the rest frame of the camera would experience the universe moving at an equivalent speed in the opposite direction to the camera’s motion.

Relativistic astronomy, then, explores these different spacetimes to observe objects we are familiar with from our Earth-based perspective as they are seen in the camera’s rest frame. Zhang and Li consider this “a new mode to study astronomy.” Zhang goes on to say:

…a relativistic camera would naturally serve as a spectrograph, allowing researchers to look at an intrinsically redder band of light. It would act as a lens, magnifying the amount of light it collects. And it would be a wide-field camera, letting astronomers observe more objects within the same field of view of the camera.

Image: Observed image of nearby galaxy M51 on the left. On the right, how the image would look through a camera moving at half the speed of light: brighter, bluer and with the stars in the galaxy closer together. Zhang & Li, 2018, The Astrophysical Journal, 854, 123, CC BY-ND.

Such observations become intriguing when we consider how light from the early universe is red-shifted as a result of the expansion of the cosmos. Zhang and Li point out that a camera moving at the relativistic speeds of the Proxima Centauri probe sees this redshifted light becoming bluer, counteracting the effect of the universe’s expansion. Light from the early universe that would have had to be studied at infrared wavelengths would now be susceptible to study in visible light. The camera, then, becomes a spectrograph allowing the observation of everything from remote galaxies to the cosmic microwave background.
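To put rough numbers on that blueshift, here is a minimal Python sketch. It assumes the source lies directly ahead of the camera and uses the standard special-relativistic Doppler factor; the function names are my own illustration, not anything taken from the Zhang and Li paper.

```python
import math


def doppler_factor(beta: float) -> float:
    """Relativistic Doppler factor for a source directly ahead (assumed head-on).

    beta is the camera speed as a fraction of the speed of light.
    """
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return math.sqrt((1.0 + beta) / (1.0 - beta))


def wavelength_in_camera_frame(wavelength_nm: float, beta: float) -> float:
    """Wavelength seen by the moving camera for light measured at
    wavelength_nm in the Earth (rest) frame. Blueshift shortens it."""
    return wavelength_nm / doppler_factor(beta)


def effective_redshift(z: float, beta: float) -> float:
    """Redshift the moving camera would assign to a source at cosmological
    redshift z, assuming the source is straight ahead."""
    return (1.0 + z) / doppler_factor(beta) - 1.0


if __name__ == "__main__":
    beta = 0.2  # Starshot cruise speed quoted in the text
    print(doppler_factor(beta))
    print(wavelength_in_camera_frame(1000.0, beta))
    print(effective_redshift(2.0, beta))
```

At 20 percent of c the factor comes out at only about 1.22, so near-infrared light at 1000 nm would arrive at roughly 816 nm, a modest shift that nudges it toward the visible band.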

Moreover, other relativistic effects come into play that add value to the fast camera. From the paper on this work:

…unique observations can be carried out thanks to several relativistic effects. In particular, due to Doppler blueshift and intensity boosting, one can use a camera sensitive to the optical band to study the near-IR bands. The light aberration effect also effectively increases the field of view of the camera since astronomical objects are packed in the direction of the camera motion, allowing a more efficient way of studying astronomical objects.

Let me depart for a moment from the Zhang and Li paper to pull information from a University of California at Riverside site, a page written by Alexis Brandeker, and presumably illustrated by him. In the figure below, we see only the effect of aberration at a range of velocities. Notice how the field becomes squeezed as we move from 0.5 c to 0.99 c. At 0.99 c, almost all visible radiation from the universe is confined to a region 10 degrees in radius around the direction of travel.

Image: This figure shows aberration effects for the ship travelling towards the constellation of Orion, assuming a 30 degrees field of view. The field of view is kept constant, only the speed is changed from 0 to 0.99c, showing dramatic effects on the perceived field. No radiative effects are considered, only geometrical aberration. Credit: Alexis Brandeker/UC-R.
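The squeezing can be sketched with the standard relativistic aberration formula, cos θ′ = (cos θ + β) / (1 + β cos θ), where θ is the angle from the direction of travel in the rest frame and θ′ the angle seen from the ship. The Python below is a minimal illustration under that assumption; the function name is hypothetical, and like the figure it handles geometry only, with no Doppler effects.

```python
import math


def aberrated_angle_deg(theta_deg: float, beta: float) -> float:
    """Apparent angle (degrees) from the direction of travel, as seen from a
    ship moving at beta * c, for a source at theta_deg in the rest frame."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    cos_theta = math.cos(math.radians(theta_deg))
    cos_apparent = (cos_theta + beta) / (1.0 + beta * cos_theta)
    # Guard against tiny floating-point overshoot outside [-1, 1].
    cos_apparent = max(-1.0, min(1.0, cos_apparent))
    return math.degrees(math.acos(cos_apparent))


if __name__ == "__main__":
    # Where does a source 90 degrees off the flight path appear?
    for beta in (0.0, 0.2, 0.5, 0.99):
        print(beta, aberrated_angle_deg(90.0, beta))
```

A source that sits 90 degrees off the direction of travel in the rest frame appears 60 degrees off at 0.5 c and about 8 degrees off at 0.99 c. In other words, the entire forward hemisphere is packed into a cone of that radius, which fits the roughly 10-degree figure quoted above.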

But to get the overview, we have to fold in Doppler effects as infrared radiation is shifted into the visible. If we combine these effects in a single image, we get the startling view below.

Image: Both relativistic effects switched on. Credit: Alexis Brandeker/UC-R.

But back to Zhang and Li, whose camera aboard the probe is a spectrograph, a lens, and a wide-field camera all in one. The authors make the case that fast-moving cameras can likewise be used to probe the so-called ‘redshift desert’ (at 1.4 ≲ z ≲ 2.5) that coincides with the epoch of significant star formation. The name comes from the lack of strong spectral lines in the optical band at these redshifts. Lacking data, we have no large sample of galaxies in this range of redshifts, which hinders our understanding of star formation.

Zhang and Li consider relativistic observations of gamma-ray bursts (GRBs) at extreme redshifts, as well as tracing the electromagnetic counterparts to gravitational wave events. Thus a Breakthrough Starshot payload en route to Alpha Centauri offers a new kind of astronomy if we can master the construction of a camera that can withstand a journey through the interstellar medium without damage from dust, as well as one that can transmit its data back to Earth.

What struck me as I began reading this paper is that when it comes to relativistic effects, 20 percent of lightspeed is actually on the slow side, making me wonder how much better the kind of observations the authors describe would be at higher velocities. But Zhang and Li move straight to this question, describing the relativistic effect of a Starshot probe as ‘mild,’ and noting that a Breakthrough laser infrastructure might be used for faster, dedicated astronomy missions.

If one drops the goal of reaching Alpha Centauri, cameras with even higher Doppler factors may be designed and launched. A Doppler factor of 2 and 3 (which gives a factor of 2 and 3 shift of the spectrum) is available at 60% and 80% speed of light, respectively. More interesting astronomical observations can be carried out at these speeds.
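The speeds quoted in that passage can be checked by inverting the head-on Doppler formula D = sqrt((1 + β) / (1 − β)), which gives β = (D² − 1) / (D² + 1). A minimal Python sketch, assuming a source directly ahead of the camera and using function names of my own choosing:

```python
import math


def doppler_factor(beta: float) -> float:
    """Head-on relativistic Doppler factor for speed beta (fraction of c)."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return math.sqrt((1.0 + beta) / (1.0 - beta))


def speed_for_doppler_factor(d: float) -> float:
    """Speed (fraction of c) needed to reach Doppler factor d >= 1,
    obtained by solving d = sqrt((1 + beta) / (1 - beta)) for beta."""
    if d < 1.0:
        raise ValueError("d must be at least 1 for an approaching source")
    return (d * d - 1.0) / (d * d + 1.0)


if __name__ == "__main__":
    for d in (2.0, 3.0):
        print(d, speed_for_doppler_factor(d))
    print(doppler_factor(0.2))  # the 'mild' Starshot case
```

Doppler factors of 2 and 3 do come out at 0.6 c and 0.8 c, against a factor of only about 1.22 for the 20 percent of c Starshot probe.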

While probes in this range would demand ever more powerful acceleration from their laser energy source, they might actually be easier to build, for the need for cosmic ray shielding on a long cruise or data transmission at interstellar distances would be alleviated by sending them on missions closer to home. Of course, pushing probes to speeds much higher than 20 percent of c is even more problematic than the Centauri mission itself. Beyond Starshot, the authors argue that relativistic astronomy will repay the effort if we continue to push in the direction of beamed laser probes with an eye toward ever faster, more capable missions.

The paper is Zhang & Li, “Relativistic Astronomy,” Astrophysical Journal Vol. 854, No. 2 (20 February 2018). Abstract.
