Paul Gilster's Blog, page 232

February 8, 2013

Interstellar Flight: Adapting Humans for Space

It’s surprising but gratifying that we can now talk about the ‘interstellar community.’ Just a few years back, there were many scientists and engineers studying the problems of starflight in their spare time, but when they met, it was at conferences dedicated to other subjects. The fact that the momentum has begun to grow is made clear by the explicitly interstellar conferences of recent memory, from the two 100 Year Starship symposia to the second Tennessee Valley Interstellar Workshop. Icarus Interstellar is mounting a conference this August in Dallas, and the Institute for Interstellar Studies plans its own gathering this fall in London.

Of course the Internet is a big part of the picture — Bob Forward and his colleagues could use the telephone and the postal service to keep in touch, but the energizing power of instant document exchange and online discussion was in the future. All this was apparent in Huntsville for the Tennessee Valley event, from which I have just returned. There was an active Twitter channel open and video streaming of the talks, and although I had little time to answer them, I was getting emails from many interested parties who couldn’t attend. Getting copies of papers and presentations after the conference closed can be managed in hours on the Net.

Starflight challenges not only everything we know about propulsion but also our understanding of human nature. If we are seriously considering human travel to such distant destinations, we are looking at decades of travel time at a bare minimum, or the possibility of a generation ship in which people live their lives entirely aboard the craft, which could take hundreds or even thousands of years to reach its destination. Astronaut Jan Davis, who gave the keynote in Huntsville, talked about the various problems of even short duration spaceflight based on her own experience of multiple Shuttle missions.

Image: Rockets dominate the Huntsville skyline in this shot I took from Calhoun Community College on the final night of the workshop.

Some of these issues are well identified, including the lack of privacy and the loss of muscle and bone mass due to prolonged weightlessness. The privacy issue balances oddly with a sense of isolation, Davis said, as you are cut off from all aspects of your normal life. “You hear a lot of voices, but they’re not the voices you take for granted every day. I missed my dog. I missed sounds like wind, waves hitting the shore. You’re busy, but you’re also isolated.” As medical officer on her two flights, Davis trained on emergency procedures in case a crewmember became incapacitated. The main issue was to stabilize a patient long enough that he or she could be swiftly returned to Earth.

The conclusion from all this is that humans are adapted for Earth, not space, yet they have key advantages over robotic systems, including the ability to discern, judge and learn on a fine-grained basis. Swiftly changing conditions in an on-board experiment she was managing led Davis to alter the schedule on the fly, making changes that would have been difficult for the current generation of robotics. The astronaut sees a combination of the two paradigms as the most likely possibility for long-term missions, perhaps aided by medical breakthroughs in hibernation that would allow the crew to spend most of a long mission in stasis while automatic systems ran the ship.

Robert Hampson (Wake Forest University) extended thinking in this direction by talking about what we need to learn about the human brain before we can contemplate long-duration spaceflight with an interstellar reach. Hampson is an associate professor of physiology and pharmacology with a passion for neuroscience and biology. Given what we know today about risk factors like stroke, epilepsy and Alzheimer’s disease, he notes that if we launch 100 people on a 100 year journey, 25 of them will be incapacitated by the time they arrive even if we can extend their lifetimes significantly. Interstellar flight, then, demands that we learn to predict and prevent degenerative diseases, keeping the brain healthy through entertainment and intellectual stimulation.
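Hampson's figure covers the whole voyage. To see what it implies year by year, here is a minimal Python sketch. It assumes each traveller faces a constant, independent yearly risk. That is a simplifying assumption made here for illustration, not Hampson's model, and real risk rises with age.

```python
def implied_annual_rate(fraction_affected: float, years: float) -> float:
    """Constant yearly probability p such that 1 - (1 - p)**years equals fraction_affected."""
    return 1.0 - (1.0 - fraction_affected) ** (1.0 / years)


def expected_affected(crew: int, annual_rate: float, years: float) -> float:
    """Expected number of crew affected after `years`, assuming independent, constant risk."""
    return crew * (1.0 - (1.0 - annual_rate) ** years)


# Figures from the talk: 25 of 100 travellers incapacitated over a 100-year voyage.
p = implied_annual_rate(0.25, 100)
print(f"implied yearly risk per person: {p:.3%}")
for year in (25, 50, 75, 100):
    print(f"year {year:>3}: ~{expected_affected(100, p, year):.1f} incapacitated")
```

Under this assumption, about 13 of the 25 cases occur in the first half of the voyage. A constant hazard front-loads cases slightly compared with a straight-line reading.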

One way to do that is with a direct human/machine interface, a kind of TiVo wired into the brain. Hampson told the audience that to fix the brain for long-duration spaceflight, we have to find a way to interface with it, and that means we have to understand its language and coding. It’s a challenge that demands the help not just of the medical community but of mathematicians, physicists and engineers. As to the hibernation that Jan Davis talked about, Hampson asks how much we know about brain activity during hibernation. Is an astronaut under hibernation for fifty years going to have a fifty-year long dream?

I’m jumping around in the schedule here to tie thematic ends together, so I’ll add that my own talk, called “Slow Boat to Centauri,” got into long-duration mode by discussing worldships and how they could sustain themselves along the way. The idea was to show how many resources are available between the stars. That suggests, as many space researchers have argued, that our expansion might not involve a direct mission to another star. It could instead be a step-by-step progression of colonies that gradually moves the human sphere outward. Gradual exploration like this might take thousands of years.

We can all hope for fast propulsion, but suppose the engineering is intractable. Would we still go to the stars if limited to speeds much less than ten percent of c? One-tenth of one percent of lightspeed gets you to Alpha Centauri in about 4300 years, which is also (very roughly) the extent of human history in terms of recoverable documents and written language. A worldship moving at this speed, in other words, recapitulates the human historical experience aboard a craft that would have to be engineered to be a living world, a vast O’Neill cylinder with propulsion.
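The 4,300-year figure is simply distance divided by speed. This quick Python sketch assumes a distance of about 4.37 light years to Alpha Centauri A and B. It ignores the acceleration phase and relativity; time dilation is only about half a percent even at 10 percent of c.

```python
# Approximate distance to Alpha Centauri A/B in light years (Proxima is ~4.24 ly).
ALPHA_CEN_LY = 4.37


def coast_time_years(distance_ly: float, fraction_of_c: float) -> float:
    """Travel time in Earth years at a constant speed, ignoring acceleration and relativity."""
    return distance_ly / fraction_of_c


for fraction in (0.1, 0.01, 0.001):
    years = coast_time_years(ALPHA_CEN_LY, fraction)
    print(f"{fraction:.1%} of c: about {years:,.0f} years")
```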

The right kind of worldship — and Gordon Woodcock (L5 Society) worked through the engineering problems of creating such a vessel in a first-day talk — would have to be one large enough to sustain a population of thousands in conditions that were eminently livable, the complete antithesis of the cramped quarters Jan Davis experienced aboard the Shuttle. We’re all on a worldship of our own, following our star in its 230 million year journey at 220 kilometers per second around the galaxy, so perhaps a livable worldship engineered by the future Kardashev type 1 civilization we hope to grow into would be an acceptable interstellar ark.
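As a consistency check on those galactic figures, a circuit covered at 220 km/s in 230 million years implies an orbital radius. This sketch assumes a perfectly circular orbit, which the Sun's is not quite.

```python
import math

KM_PER_LY = 9.4607e12          # kilometres in one light year
SECONDS_PER_YEAR = 3.15576e7   # Julian year in seconds


def orbit_radius_ly(speed_km_s: float, period_years: float) -> float:
    """Radius (light years) of a circular orbit traversed at `speed_km_s` in `period_years`."""
    circumference_km = speed_km_s * period_years * SECONDS_PER_YEAR
    return circumference_km / (2 * math.pi) / KM_PER_LY


print(f"{orbit_radius_ly(220, 230e6):,.0f} light years")
```

The result comes out near 27,000 light years. That is in line with commonly cited estimates of the Sun's distance from the galactic center.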

Image: A worldship designed to hold generations of humans, as imagined by space artist Adrian Mann.

Putting the speed issue in perspective, one tenth of one percent of the speed of light is 300 kilometers per second, compared to the 17 kilometers per second that our fastest deep space probe, Voyager 1, has attained. There are ways of moving that fast that we can calculate today, but the engineering needed to produce a worldship — and the vast issues raised by creating a closed-loop ecology aboard the craft — demand a multi-disciplinary approach that takes us into biology, philosophy, sociology and the humanities as well as physics. The long-term perspective needed for such thinking was frequently discussed in Huntsville, about which more on Monday.
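The comparison with Voyager 1 is easy to reproduce. This is a minimal sketch. The 4.37 light-year distance is an approximation added here, and the last line is purely illustrative, since Voyager is not headed toward Alpha Centauri.

```python
C_KM_S = 299_792.458      # speed of light, km/s
ALPHA_CEN_LY = 4.37       # approximate distance to Alpha Centauri A/B, light years

worldship_km_s = 0.001 * C_KM_S   # one tenth of one percent of c
voyager_km_s = 17.0               # Voyager 1's speed, as quoted in the text

print(f"0.1% of c: {worldship_km_s:.1f} km/s")
print(f"that is {worldship_km_s / voyager_km_s:.1f} times Voyager 1's speed")

# Years for a probe moving at Voyager's speed to cover the same distance:
# distance / speed = (ALPHA_CEN_LY light years) * (c / v) years.
voyager_years = ALPHA_CEN_LY * C_KM_S / voyager_km_s
print(f"at 17 km/s: about {voyager_years:,.0f} years")
```

At Voyager's pace the same trip would take on the order of 77,000 years. That puts the worldship's 300 km/s in perspective.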

February 7, 2013

After Huntsville, a Red Dwarf Bonanza

Returning from Huntsville after the Tennessee Valley Interstellar Workshop, I was catching up on emails at the airport when the latest news about exoplanets and red dwarfs popped up on CNN. It was heartening to look around the Huntsville airport and see that people who had been reading or using their computers were all looking up at the screen and following the CNN story, which was no more than a thirty-second summary. The interest in exoplanets is out there and may bode well for public engagement in space matters. At least let’s hope so.

The workshop was a great success, and congratulations are owed to Les Johnson, Robert Kennedy, Eric Hughes and the entire team that made this happen (a special nod to Martha Knowles and Yohon Lo!). This morning I want to focus on the exoplanet news as a way of getting back on schedule, but tomorrow I’ll start going through my notes and talking about the Huntsville gathering. I’m hoping to have several articles in coming weeks from participants in the event on the work they are doing, and I have plenty of comments about the presentations, so the Huntsville coverage that begins tomorrow should extend into next week.

As to the exoplanet news, Courtney Dressing (Harvard-Smithsonian Center for Astrophysics) went to work on the Kepler catalog of 158,000 stars to cull out all the red dwarfs. She and the CfA’s David Charbonneau found that almost all of the identified stars were smaller and cooler than had been thought. That shrinks the estimated sizes of the planets detected around them. It also moves the habitable zone somewhat closer to these stars. The duo found 95 planet candidates among these red dwarfs.

Image: This artist’s conception shows a hypothetical habitable planet with two moons orbiting a red dwarf star. Astronomers have found that 6 percent of all red dwarf stars have an Earth-sized planet in the habitable zone, which is warm enough for liquid water on the planet’s surface. Since red dwarf stars are so common, then statistically the closest Earth-like planet should be only 13 light-years away. Credit: David A. Aguilar (CfA)

Let’s pause for a moment on the analysis. Dressing and Charbonneau compared the observed colors of the stars to a model developed by the Dartmouth Stellar Evolution Program. The final sample in the study contained 3897 dwarf stars with revised temperatures cooler than 4000 K. The revisions brought the stars down 130 K in temperature while reducing their size by 31 percent. The analysis then refit the light curves of the planet candidates to get a better understanding of their radii.
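Why do cooler, smaller stars mean smaller planets? The depth of a transit is roughly the ratio of the planet's disk to the star's, (Rp/R*)². For a fixed observed depth, the inferred planet radius therefore scales directly with the stellar radius. The sketch below uses a hypothetical 200 ppm transit and a hypothetical 0.6 solar-radius star, and ignores limb darkening. The actual study refit each light curve, so real revisions vary from candidate to candidate.

```python
import math

R_SUN_IN_R_EARTH = 109.2  # solar radius expressed in Earth radii (approximate)


def planet_radius_earth(depth: float, star_radius_sun: float) -> float:
    """Planet radius in Earth radii from a fractional transit depth, ignoring limb darkening."""
    return star_radius_sun * R_SUN_IN_R_EARTH * math.sqrt(depth)


depth = 2.0e-4                      # hypothetical transit depth (200 ppm)
old_star = 0.60                     # hypothetical pre-revision stellar radius, R_sun
new_star = old_star * (1 - 0.31)    # 31 percent smaller, as reported in the study

old_planet = planet_radius_earth(depth, old_star)
new_planet = planet_radius_earth(depth, new_star)
print(f"{old_planet:.2f} -> {new_planet:.2f} Earth radii ({new_planet / old_planet:.2f}x)")
```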

I wish I could have tracked the news conference live but was in transit at the crucial moments. Those of you who also missed it may want to check the archived version at the CfA’s site. The key point is on the opening slide: “Earth-like Planets Are Right Next Door.” That is something of a stretch, because we are talking about M-class stars, where a planet in the habitable zone is probably tidally locked. Suppose (and it’s an open question) that a benign climate for carbon-based life could exist on such a planet. It would still be an environment much different from the Earth. The star would stay in the same position in the sky, with endless day on one hemisphere and endless night on the other.

Still, this is interesting news: The 95 planetary candidates imply statistically that at least 60 percent of red dwarfs have planets smaller than Neptune. Out of the 95, only three were close enough to Earth in terms of size and temperature to be considered ‘Earth-like.’ Only a small fraction of orbits are aligned for us to see a transit. Once those low odds are factored in, the three candidates work out to about six percent of all red dwarfs having a planet like the Earth. Because 75 percent of the closest stars to the Sun are red dwarfs, Dressing calculates that the closest Earth-like world is likely to be no more than 13 light years away. Again, this is for red dwarfs. The analysis of other stellar types, like the intriguing G- and K-class stars Centauri A and B, continues.

Here’s the payoff, from the paper. The authors have just noted that the high rate of habitable zone planets around nearby stars means that future missions designed to study these worlds will have plenty to work with:

Given that there are 248 early M dwarfs within 10 parsecs, we estimate that there are at least 3 Earth-size planets in the habitable zones of nearby M dwarfs awaiting the launch of TESS and JWST. Applying a geometric correction for the transit probability and assuming that the space density of M dwarfs is uniform, we find that the nearest transiting Earth-size planet in the habitable zone of an M dwarf is less than 29 pc away with 95% confidence. Removing the requirement that the planet transits, we find that the nearest non-transiting Earthsize planet in the habitable zone is within 7 pc with 95% confidence. The most probable distances to the nearest transiting and non-transiting Earth-size planets in the habitable zone are 18 pc and 4 pc, respectively.
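The paper's "most probable distance," assuming a uniform space density, is a nearest-neighbour problem. If qualifying planets are scattered at random with density n, the chance that none lies within radius r is exp(-n·4πr³/3). The sketch below applies that standard argument. It uses the 248 early M dwarfs within 10 pc from the quote, plus a hypothetical 6 percent occurrence rate and a hypothetical 1 percent transit probability. The paper's own procedure is not detailed here. These numbers therefore land in the same few-parsec range rather than reproducing its 4, 7 and 18 pc figures.

```python
import math


def density_from_count(count: int, radius_pc: float) -> float:
    """Mean number density (objects per cubic parsec) of `count` objects inside a sphere."""
    return count / (4.0 / 3.0 * math.pi * radius_pc ** 3)


def most_probable_nearest_pc(density: float) -> float:
    """Mode of the nearest-neighbour distance in a uniform Poisson field: (1 / (2*pi*n))**(1/3)."""
    return (1.0 / (2.0 * math.pi * density)) ** (1.0 / 3.0)


def nearest_within_pc(density: float, confidence: float = 0.95) -> float:
    """Radius that contains at least one object with the given probability."""
    # P(none within r) = exp(-n * V), so V = -ln(1 - confidence) / n.
    volume = -math.log(1.0 - confidence) / density
    return (3.0 * volume / (4.0 * math.pi)) ** (1.0 / 3.0)


m_dwarfs = density_from_count(248, 10.0)  # early M dwarfs within 10 pc, from the quote
rate = 0.06          # hypothetical: fraction hosting an Earth-size HZ planet
transit_prob = 0.01  # hypothetical geometric transit probability for such an orbit

n_any = m_dwarfs * rate
n_transiting = n_any * transit_prob
print(f"any:        mode {most_probable_nearest_pc(n_any):.1f} pc, "
      f"95% within {nearest_within_pc(n_any):.1f} pc")
print(f"transiting: mode {most_probable_nearest_pc(n_transiting):.1f} pc, "
      f"95% within {nearest_within_pc(n_transiting):.1f} pc")
```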

I mentioned the G- and K-class stars Centauri A and B above, but I don’t want to leave out the third member of the trio, the red dwarf Proxima Centauri. Radial velocity work on Proxima continues and is usefully constraining the size of possible planets. This is not part of Dressing and Charbonneau’s study, but I’ll mention it here because it’s obviously germane. Michael Endl (University of Texas) and team used seven years of UVES spectrograph data from the European Southern Observatory. They found no planet of Neptune mass or above out to 1 AU from Proxima, and no ‘super-Earths’ above 8.5 Earth masses in orbits of less than 100 days.

As to Proxima’s tight habitable zone (0.022 to 0.054 AU), the data rule out ‘super-Earths’ above about two to three Earth masses there. The habitable zone around Proxima corresponds to orbits ranging from 3.6 to 13.8 days. That still leaves plenty of room for an interesting Earth- or Mars-sized world around this closest of all stars to the Sun. Adding data points to what we already have on Proxima should gradually give us a better idea of what’s actually there.
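The habitable zone periods follow from Kepler's third law. In solar units, P² = a³/M, with P in years, a in AU and M in solar masses. The stellar mass below, 0.11 solar masses, is an assumption chosen because it reproduces the quoted 3.6 and 13.8 days. Published mass estimates for Proxima differ somewhat.

```python
import math

DAYS_PER_YEAR = 365.25


def period_days(a_au: float, star_mass_sun: float) -> float:
    """Kepler's third law in solar units: P[yr] = sqrt(a[AU]**3 / M[M_sun]); planet mass neglected."""
    return math.sqrt(a_au ** 3 / star_mass_sun) * DAYS_PER_YEAR


PROXIMA_MASS = 0.11          # assumed stellar mass in solar masses (approximate)
inner, outer = 0.022, 0.054  # habitable-zone edges in AU, from the text

print(f"{period_days(inner, PROXIMA_MASS):.1f} to {period_days(outer, PROXIMA_MASS):.1f} days")
```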

But back to Dressing and Charbonneau’s red dwarfs. The three habitable zone candidates are Kepler Object of Interest (KOI) 1422.02 (90 percent of Earth’s size in a 20-day orbit); KOI 2626.01 (1.4 times Earth size in a 38-day orbit); and KOI 854.01 (1.7 times Earth size in a 56-day orbit). None of these is closer than 300 light years. Higher than expected stellar noise means Kepler will need several more years of observation to detect Earth-size planets in the habitable zones of G-class stars. The paper points out, however, that the observatory can already detect Earth-size planets in the habitable zone of red dwarfs. A habitable zone planet around a red dwarf transits many times per year rather than once. A transit is also 1.8 times more likely than around a star like the Sun.
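The advantage of short orbits is easy to quantify. This sketch counts transits per year for the three candidates. The signal-to-noise column assumes white noise. Under that assumption, stacking N transits improves detection roughly as the square root of N.

```python
import math

DAYS_PER_YEAR = 365.25

# Orbital periods in days for the three habitable-zone candidates named in the text.
candidates = {"KOI 1422.02": 20, "KOI 2626.01": 38, "KOI 854.01": 56}

for name, period in candidates.items():
    transits = DAYS_PER_YEAR / period
    # With white noise, stacking N transits improves signal-to-noise roughly as sqrt(N).
    print(f"{name}: {transits:.1f} transits/yr, "
          f"SNR gain ~{math.sqrt(transits):.1f}x over a single transit")

print("Earth around the Sun: 1 transit/yr")
```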

Thus we get this:

…the transit signal of an Earth-size planet orbiting a 3800K M star is 3.3 times deeper than the transit of an Earth-size planet across a G star because the star is 45% smaller than the Sun. The combination of a shorter orbital period, an increased transit probability, and a deeper transit depth greatly reduces the difficulty of detecting a habitable planet and has motivated numerous planet surveys to target M dwarfs…
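The 3.3 factor follows from the depth formula, (Rp/R*)². This is a minimal sketch. It takes the solar radius as about 109.2 Earth radii and ignores limb darkening.

```python
R_SUN_IN_R_EARTH = 109.2  # solar radius in Earth radii (approximate)


def transit_depth(planet_radius_earth: float, star_radius_sun: float) -> float:
    """Fractional dip in starlight, (Rp / R*)**2, ignoring limb darkening."""
    return (planet_radius_earth / (star_radius_sun * R_SUN_IN_R_EARTH)) ** 2


g_star = transit_depth(1.0, 1.0)          # Earth-size planet, Sun-size star
m_star = transit_depth(1.0, 1.0 - 0.45)   # star 45% smaller than the Sun
print(f"G star: {g_star * 1e6:.0f} ppm, M star: {m_star * 1e6:.0f} ppm, "
      f"ratio {m_star / g_star:.2f}")
```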

Another advantage of M dwarfs is that confirming a planetary candidate is made easier because the radial velocity signal of a habitable planet here is considerably larger than that of a habitable zone planet around a G-class star. Given that the James Webb Space Telescope should be able to take spectra of Earth-sized planets in the habitable zone around M-dwarfs — and that it cannot do this for comparable planets around more massive stars — our first atmospheric readings from a habitable zone planet are probably going to come from these small red stars.
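The radial velocity advantage can be sketched with the standard semi-amplitude formula for a circular orbit: K = (2πG/P)^(1/3) · m_p sin i / (M* + m_p)^(2/3). The M dwarf case uses a hypothetical 0.3 solar-mass star with a hypothetical 30-day habitable zone orbit. It is an illustration, not a value from the paper.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30       # kg
M_EARTH = 5.972e24     # kg
DAY = 86400.0          # seconds


def rv_semi_amplitude(period_days: float, star_mass_sun: float,
                      planet_mass_earth: float = 1.0, sin_i: float = 1.0) -> float:
    """Stellar reflex velocity K in m/s for a circular orbit."""
    period_s = period_days * DAY
    m_p = planet_mass_earth * M_EARTH
    m_star = star_mass_sun * M_SUN
    return ((2 * math.pi * G / period_s) ** (1 / 3)
            * m_p * sin_i / (m_star + m_p) ** (2 / 3))


k_sun = rv_semi_amplitude(365.25, 1.0)   # Earth analog around a G star
k_m = rv_semi_amplitude(30.0, 0.3)       # hypothetical HZ orbit around a 0.3 M_sun M dwarf
print(f"G star: {k_sun:.3f} m/s, M dwarf: {k_m:.3f} m/s ({k_m / k_sun:.1f}x)")
```

For an Earth analog around the Sun, K comes out near 0.09 m/s. The hypothetical M dwarf case is roughly five times larger, because K grows as the orbital period shortens and as the star gets lighter.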

The paper is Dressing and Charbonneau, “The Occurrence Rate of Small Planets Around Small Stars,” to be published in The Astrophysical Journal (draft version online).

February 3, 2013

Tennessee Valley Interstellar Workshop

Air travel presents us with challenges we seldom anticipate. Flying into Charlotte on Sunday I had developed a ferocious headache. I was headed to the Tennessee Valley Interstellar Workshop in Huntsville and had a long enough layover in Charlotte to seek out a pain reliever with decongestant properties. The ridiculous thing was that I couldn’t get the plastic mini-pack open. The little symbol showed me tearing off the corner of the packet, but it wouldn’t tear for me, and it wouldn’t tear for the guy who happened to be sitting next to me at the USAir gate.

It became clear that I needed something sharp to get into this packet, but it was also clear that I was in an airport, the very definition of which these days is to prevent passengers from having anything sharp. I finally took the packet back to the kiosk I bought it from and demanded redress. The lady looked askance at me, looked at the packet, and opened it effortlessly. Further comment seems superfluous.

By the time I got off the plane in Huntsville the headache was much better, and I was surprised to find my shoulder tapped by Andreas Hein, who heads Icarus Interstellar’s worldship project, called Hyperion. We shared a taxi to the hotel, where the lobby was stuffed with interstellar folk like Rob Swinney, now project head for Project Icarus, Claudio Maccone (who will give a keynote on Tuesday), Kelvin Long (head of the Institute for Interstellar Studies) and my buddy Al Jackson, just in from Houston. Al was kind enough later to drive me over to the venue for the evening reception, pictures of which you see here. Al and I owe much to his Garmin GPS.

Image: Andreas Hein (left) and Rob Swinney, of Icarus Interstellar.

The conference — or in this case ‘workshop’ — dilemma is again facing me. There was a day when I thought ‘live blogging’ would make sense for Centauri Dreams, but the more examples of live blogging I read, the less I like the idea. At both 100 Year Starship conferences I mainly sent out tweets, finding Twitter an interesting platform for conference coverage, but one that nonetheless distracted me from making the kind of detailed notes I needed to be working with, just as live blogging did. So this time around, although I may do the occasional tweet (@centauri_dreams), I’ll mostly be paying attention to the speakers and making notes that will turn into more coverage after the workshop is over.

Image: A cordial host, MSFC’s Les Johnson, co-editor of Going Interstellar (Baen, 2012).

So bear with me if Centauri Dreams is off its regular schedule for a few days, though I’ll slip things in wherever possible, and next week we’ll take a close look at the papers here. Meanwhile, there should be no interruption in comment moderation, which I’ll get to as time allows. I will tell you this for now. Robert Kennedy (The Ultimax Group) makes a drink called the Alpha Centauri Sunrise, and I have not only had one, but have also snapped a surreptitious photo of the recipe. Robert later said it would be OK for me to share it, so at some point during the week, I’ll explain how to make this estimable drink. Hint: Three berries are involved. And moonshine.

February 1, 2013

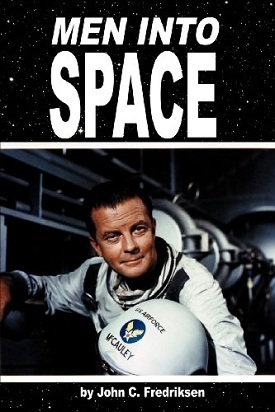

New Book Recalls “Men Into Space”

These days we know that perhaps a million objects the size of the Tunguska impactor or larger are moving through nearby space, and talk of how to deflect asteroids has become routine. Given our increasing awareness of near-Earth objects, it wouldn’t be a surprise to hear of a new Hollywood treatment involving an Earth-threatening asteroid. But I wouldn’t have expected a science fiction series that ran from 1959 to 1960 to depict an asteroid mission and the dangers such objects represent.

Nonetheless, I give you “Asteroid,” from the show Men Into Space, with script by Ted Sherdeman. Viewers on November 25, 1959 saw the show’s protagonist Col. Edward McCauley (William Lundigan) take a crew to ‘Skyra,’ a 3.5-kilometer long rock that scientists believed might hit the Earth. The crew assesses whether the asteroid is salvageable for use as a space station and decides there is no other choice but to destroy Skyra, which they do at the cost of considerable suspense as McCauley works to save an astronaut separated from the others while the clock ticks down. The suspense would have been heightened by the fact that this was a show on which astronauts sometimes died and hard sacrifices were the order of the day.

I report on all this with the help of John Fredriksen’s new book Men Into Space (BearManor Media, 2013), which arrived in the mail the other day. Like me, Fredriksen had watched the show in its all too short run while growing up in the Sputnik era. He was taken with the understated but tough role of McCauley, who was depicted as participating in all the significant space missions of his time, from the first lunar journeys to building a space station and, at the time of the show’s cancellation, two attempted flights to Mars that were plagued by problems and aborted.

You could say that Mars as a destination hovers over all this show’s plots. Its final episode, “Flight to the Red Planet,” did get McCauley and team as far as Phobos, where their ship was damaged enough to force an early departure without landing on Mars itself. The Mars of this episode is a compelling target, because from Phobos, in these years not long before Mariner 4, the crew can see waterways that seem to be feeding an irrigation system. This is Percival Lowell’s Mars in an episode surely designed to build into a second season, but that season was unfortunately not to be.

Fredriksen’s book walks fans through all the episodes, with extensive quotes from the scripts and stills that capture the look and feel of the production. If some of these images seem familiar, it may be because Chesley Bonestell was asked to produce concept art for the show, resulting in sharply defined lunar landscapes reminiscent of his paintings. Lewis Rachmil, who produced Men Into Space, would have been familiar with Bonestell’s Hollywood work, which included Destination Moon (1950), When Worlds Collide (1951) and Conquest of Space (1955), not to mention the famous space series in Collier’s.

Frederick Ziv, who headed up ZIV Productions, didn’t stop with Bonestell when it came to making his show as realistic as the times would allow. Sputnik had been launched in 1957, and Ziv had been exploring the idea of a different kind of space show for CBS ever since. From the book:

Unlike the children-oriented science fiction programming of a few years previous, the tenor of the times now demanded an approach that was rigorously scientific to appease more mature audiences. Ziv, who prized flaunting the technical expertise assisting his programs, also believed that obtaining Department of Defense cooperation facilitated access to their extensive and elaborate space facilities. At length, his show acknowledged help from the Air Force Air Research and Development Command, the Office of the Surgeon General, and the School of Aviation Medicine. Ziv’s credibility was further enhanced with Air Force technical experts who were brought into the scripting and consulting process, receiving credits in the end titles.

Add in location shooting at research facilities like Edwards Air Force Base and Cape Canaveral, stock footage of missile launches of the time, and special effects crews working with von Braun-style three-stage rockets. Those rockets launched capsules almost as tiny and cramped as Apollo’s. Small wonder that Men Into Space turned out to be complicated and expensive. Fredriksen notes that ZIV gave the Air Force the final say in keeping the show realistic, which is why the more fantastic tropes of 1950s science fiction make no appearance. Personality conflicts and equipment malfunctions took the place of ray guns and aliens.

Fredriksen gives all the details, including a summary of each of the show’s 38 episodes. It’s a nostalgic trip for those who remember watching Men Into Space, and it brings back to life memories many of us had long forgotten. After all, this was a short-lived series that survived only in occasional syndication and in some of the space suits and ship interiors that wound up being used again in episodes of The Outer Limits (I knew they looked familiar!). Nominated for a Hugo Award in 1960, the show lost out to The Twilight Zone, and critics sniped at its mundane special effects and earnest quest for authenticity. Despite the promise of Mars, the show was axed in September of that year.

But early impressions count, and it’s safe to say that this show captured more than a few young minds, not the least of them being Fredriksen’s, for whom the experience was indelible:

May future generations rekindle that sense of awe, the ability to dream a better future, and a fixed determination to cross the gulf separating imagination from reality as we did, and joyously so, in the 1950s. If Men Into Space encapsulates the essence of a departed, heroic ideal, it is also a good measure of everything we have lost as a space-faring culture.

As for me, I’ve always been a William Lundigan fan. This is a guy who walked away from Hollywood in 1943 to join the Marines, where he served with the 1st Marine Division on Peleliu, an operation that ranks with Iwo Jima in terms of the ferocity of combat and the staggering percentage of casualties. He went on to Okinawa as a combat photographer and, having received two Bronze Stars, returned to acting after the war. 1954’s Riders to the Stars was his first science fictional outing, a film about astronauts trying to capture meteorites in flight. His work with Ivan Tors on that film fed into a role in the series debut of ZIV’s Science Fiction Theater.

Of Men Into Space, Lundigan would say: “…this was not some Buck Rogers type show. It was not a science-fiction series but a science-fact series. You might even say it’s a combination of a public service show and a dramatic series.” Even he would become exasperated with the quality of writing in some of the later shows, but as Fredriksen’s book makes clear, there was still inspiration to be found here of the kind that awakens young people to careers in engineering and science. With a little better luck and a second season landing on Mars, Men Into Space might be far more than the obscure recollection it is today, and the name ‘McCauley’ might be as recognizable as ‘Kirk.’

January 31, 2013

Habitable Zones: A Moving Target

Habitable zones are always easy enough to explain when you invoke the ‘Goldilocks’ principle, but every time I talk about these matters there’s always someone who wants to know how we can speak about places being ‘not too hot, not too cold, but just right.’ After all, we’re a sample of one, and why shouldn’t there be living creatures beneath icy ocean crusts or on worlds hotter than we could tolerate? I always point out that we have to work with what we know, that water and carbon-based life are what we’re likely to be able to detect, and that we need to fund the missions to find it.

The last word on habitable zone models has for years been Kasting, Whitmire and Reynolds on “Habitable Zones around Main Sequence Stars.” Now Ravi Kopparapu (Penn State) has worked with Kasting and a team of researchers to tune up the older model, giving us new boundaries based on more recent insights into how water and carbon dioxide absorb light. Both models work with well-defined boundaries. The inner edge of the habitable zone is set by a ‘moist greenhouse effect,’ where water vapor saturates the stratosphere and hydrogen begins to escape into space.

The outer boundary is defined by the ‘maximum greenhouse limit,’ where the greenhouse effect fails as CO2 begins to condense out of the atmosphere and the surface becomes too cold for liquid water. When worked out for our own Solar System in terms of astronomical units, the 1993 model showed the habitable zone parameters extending from 0.95 to 1.67 AU. Earth was thus near the inner edge.

The new work refines the climate model and produces revised estimates for the habitable zones around not just Sun-like G-class stars but F, K and M stars as well. It draws on two spectroscopic databases, HITRAN (high-resolution transmission molecular absorption) and HITEMP (high-temperature spectroscopic absorption parameters). These describe how carbon dioxide and water vapor absorb radiation in planetary atmospheres. The revised absorption data allow the authors to move the HZ boundaries further out from their stars than they were before.

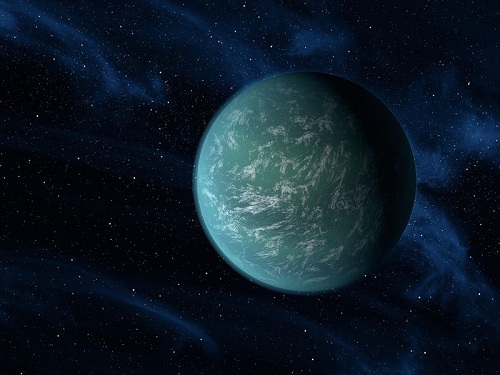

Image: An artist’s conception of Kepler-22b, once thought to be positioned in its star’s habitable zone. New work on habitable zones suggests the planet is actually too hot to be habitable. Credit: NASA/Ames/JPL-Caltech.

This looks to be an important revision, one that people like Rory Barnes (University of Washington) are already calling ‘the new gold standard for the habitable zone’ (see Earth and others lose status as Goldilocks worlds in New Scientist). In Solar System terms, the limits now become 0.99 AU and 1.70 AU. The Earth thus moves closer to the inner edge of the habitable zone. That prompts the authors to flag an important limitation of their analysis, which does not factor in the effect of clouds:
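The quoted edges make the shift in Earth's position concrete. This sketch converts each edge to a stellar flux with the inverse-square law. For another star, it keeps those fluxes fixed, so distances scale as the square root of luminosity. That luminosity-only scaling is a simplification. In the new paper, the edge fluxes also depend on the star's effective temperature, which this sketch ignores.

```python
import math

# Habitable-zone edges for the Solar System in AU, as quoted: (inner, outer).
HZ_1993 = (0.95, 1.67)
HZ_2013 = (0.99, 1.70)


def edge_fluxes(edges_au):
    """Flux at each edge relative to what Earth receives (inverse-square law)."""
    return tuple(1.0 / d ** 2 for d in edges_au)


def earth_position(edges_au) -> float:
    """Fraction of the way across the zone at 1 AU: 0.0 = inner edge, 1.0 = outer edge."""
    inner, outer = edges_au
    return (1.0 - inner) / (outer - inner)


def scaled_edges(edges_au, luminosity_sun: float):
    """Crude edges for another star: keep the edge fluxes fixed, so distances scale as sqrt(L)."""
    return tuple(d * math.sqrt(luminosity_sun) for d in edges_au)


for label, edges in (("1993", HZ_1993), ("2013", HZ_2013)):
    inner_flux, outer_flux = edge_fluxes(edges)
    print(f"{label}: edge fluxes {inner_flux:.3f} / {outer_flux:.3f}, "
          f"Earth {earth_position(edges):.3f} of the way out")

# Hypothetical dim star with 2% of the Sun's luminosity.
print([round(d, 3) for d in scaled_edges(HZ_2013, 0.02)])
```

On these numbers, Earth sits about 7 percent of the way across the 1993 zone but only about 1.4 percent of the way across the new one.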

…this apparent instability is deceptive, because the calculations do not take into account the likely increase in Earth’s albedo that would be caused by water clouds on a warmer Earth. Furthermore, these calculations assume a fully saturated troposphere that maximizes the greenhouse effect. For both reasons, it is likely that the actual HZ inner edge is closer to the Sun than our moist greenhouse limit indicates. Note that the moist greenhouse in our model occurs at a surface temperature of 340 K. The current average surface temperature of the Earth is only 288 K. Even a modest (5-10 degree) increase in the current surface temperature could have devastating affects on the habitability of Earth from a human standpoint. Consequently, though we identify the moist greenhouse limit as the inner edge of the habitable zone, habitable conditions for humans could disappear well before Earth reaches this limit.

While the small change to the Earth’s position in the habitable zone is getting most of the press attention, I’m more interested in what the new numbers say about M-dwarfs. These small red stars would have habitable zones close enough to the star that the likelihood of a transit increases. The 1993 habitable zone work did not model M-dwarfs with effective temperatures lower than 3700 K whereas the new work takes effective temperatures down to 2600 K. In an article run by NBC News, Abel Mendez (University of Puerto Rico at Arecibo) mentions that Gliese 581d, thought to skirt the outer limits of its star’s habitable zone, may now move toward the habitable zone’s center, increasing the possibility of life emerging there. Other planets catalogued by the Planetary Habitability Laboratory at UPR will be affected as some thought to have been in the habitable zone may move out of it. See A New Habitable Zone for more.

There are other factors to consider about M-dwarfs, especially the fact that planets close enough to these stars to be in the habitable zone are most likely tidally locked, presenting the same face to the star at all times. Neither the 1993 model nor this revised one does well at representing a tidally locked world, and the authors say they have not tried to explore synchronously rotating planets in different parts of the habitable zone around M-dwarfs. The paper does note that a planet near the outer edge of the HZ with a dense CO2 atmosphere should be more effective at moving heat to the night side, perhaps increasing the chances of habitability.

The overall effect of adjusting our parameters for habitable zones around the various stellar classes will be to improve our accuracy as we look toward producing lists of targets for future space-based observatories. The authors note that the James Webb Space Telescope, for example, is thought to be marginally capable of taking a transit spectrum of an Earth-like planet orbiting an M-dwarf. We’ll need the maximum chance for success before committing resources to specific planets once we get into the business of trying to identify biomarkers on possibly habitable worlds.

The paper is Kopparapu et al., “Habitable Zones Around Main-Sequence Stars: New Estimates,” accepted at The Astrophysical Journal (preprint). Note that a habitable zone calculator based on this work is available online. The 1993 paper is Kasting, Whitmire and Reynolds, “Habitable Zones around Main Sequence Stars,” Icarus 101 (1993), pp. 108-128 (full text).
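Behind the calculator mentioned above is a simple scaling: a habitable-zone boundary lies where a star delivers a particular effective flux S_eff (in units of the flux Earth now receives from the Sun), so its distance in AU is √(L/S_eff), with L in solar luminosities. The sketch below shows that step plus a polynomial for S_eff in T_eff − 5780 K. Treat the polynomial form as an assumption and take real coefficients from the paper’s tables; the numbers in the usage line are hypothetical.

```python
import math

def s_eff(teff_k, s_eff_sun, coeffs):
    """Boundary flux (Earth = 1) as an assumed 4th-order polynomial in
    T* = Teff - 5780 K. `coeffs` = (a, b, c, d) are placeholders to be
    replaced with the published values."""
    t = teff_k - 5780.0
    a, b, c, d = coeffs
    return s_eff_sun + a * t + b * t**2 + c * t**3 + d * t**4

def hz_distance_au(luminosity_lsun, seff):
    """Orbital distance (AU) at which the stellar flux equals `seff`."""
    return math.sqrt(luminosity_lsun / seff)

# Hypothetical M dwarf: L = 0.01 Lsun, boundary flux 0.9 (placeholder value)
print(f"{hz_distance_au(0.01, 0.9):.3f} AU")
```

Because the distance goes as the square root of luminosity, a star with a hundredth of the Sun’s output pulls its habitable zone in by a factor of ten, which is why M-dwarf planets in that zone are so much more likely to transit.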

January 30, 2013

TW Hydrae: An Infant Planetary System Analyzed

You have to like the attitude of Thomas Henning (Max-Planck-Institut für Astronomie). The scientist is a member of a team of astronomers whose recent work on planet formation around TW Hydrae was announced this afternoon. Their work used data from ESA’s Herschel space observatory, which has the sensitivity at the needed wavelengths for scanning TW Hydrae’s protoplanetary disk, along with the capability of taking spectra for the telltale molecules they were looking for. But getting observing time on a mission like Herschel is not easy and funding committees expect results, a fact that didn’t daunt the researcher. Says Henning, “If there’s no chance your project can fail, you’re probably not doing very interesting science. TW Hydrae is a good example of how a calculated scientific gamble can pay off.”

I would guess the relevant powers that be are happy with this team’s gamble. The situation is this: TW Hydrae is a young star of about 0.6 Solar masses some 176 light years away. The proximity is significant: This is the closest protoplanetary disk to Earth with strong gas emission lines, some two and a half times closer than the next possible subjects, and thus intensely studied for the insights it offers into planet formation. Out of the dense gas and dust here we can assume that tiny grains of ice and dust are aggregating into larger objects and one day planets.

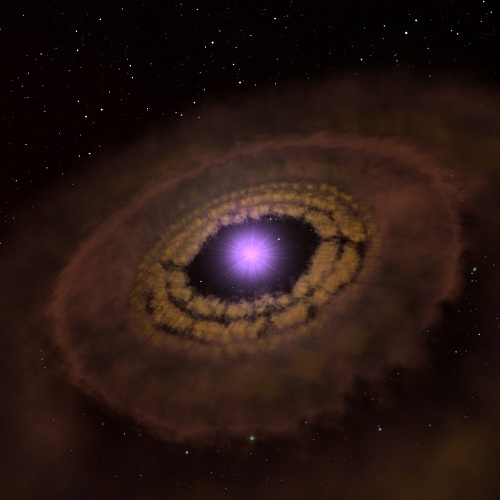

Image: Artist’s impression of the gas and dust disk around the young star TW Hydrae. New measurements using the Herschel space telescope have shown that the mass of the disk is greater than previously thought. Credit: Axel M. Quetz (MPIA).

The challenge of TW Hydrae, though, has been that the total mass of the molecular hydrogen gas in its disk has remained unclear, leaving us without a good idea of the particulars of how this infant system might produce planets. Molecular hydrogen does not emit detectable radiation, while basing a mass estimate on carbon monoxide is hampered by the opacity of the disk. For that matter, basing a mass estimate on the thermal emissions of dust grains forces astronomers to make guesses about the opacity of the dust, so that we’re left with uncertainty — mass values have been estimated anywhere between 0.5 and 63 Jupiter masses, and that’s a lot of play.

Error bars like these have left us guessing about the properties of this disk. The new work takes a different tack. While hydrogen molecules don’t emit measurable radiation, those hydrogen molecules that contain a deuterium atom, in which the atomic nucleus contains not just a proton but an additional neutron, emit significant amounts of radiation, with an intensity that depends upon the temperature of the gas. Because the ratio of deuterium to hydrogen is relatively constant near the Sun, a detection of hydrogen deuteride can be multiplied out to produce a solid estimate of the amount of molecular hydrogen in the disk.
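The scaling step can be sketched in a few lines. Assuming the deuterium sits almost entirely in HD and the hydrogen is almost entirely molecular, the number ratio HD/H₂ is twice the D/H ratio. The D/H value below is an assumed, illustrative figure rather than the one the team used, the input mass is hypothetical, and a real disk-mass estimate also needs the gas temperature (which sets how strongly HD emits) plus a correction for helium, both of which this ignores.

```python
D_TO_H = 1.5e-5   # assumed D/H number ratio; illustrative, not from the paper
MU_HD = 3.0       # molecular mass of HD in atomic mass units (approximate)
MU_H2 = 2.0       # molecular mass of H2 in atomic mass units (approximate)

def h2_mass_from_hd(hd_mass, d_to_h=D_TO_H):
    """Convert an HD gas mass to an H2 gas mass (same units in and out),
    assuming essentially all deuterium is locked up in HD molecules."""
    hd_per_h2 = 2.0 * d_to_h              # n(HD)/n(H2) = 2 D/H, since n(H) ~ 2 n(H2)
    molecules_h2_per_hd = 1.0 / hd_per_h2
    return hd_mass * molecules_h2_per_hd * (MU_H2 / MU_HD)

# Hypothetical HD mass of 0.001 Jupiter masses:
print(f"{h2_mass_from_hd(1e-3):.1f} Jupiter masses of H2")
```

The leverage is large: every unit of HD mass stands for tens of thousands of units of H₂, which is why a firm HD detection tightens the error bars so dramatically.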

The Herschel data allow the astronomers to set a lower limit for the disk mass at 52 Jupiter masses, the most useful part of this being that this estimate has an uncertainty ten times lower than the previous results. A disk this massive should be able to produce a planetary system larger than the Solar System, which scientists believe was produced by a much lighter disk. When Henning spoke about taking risks, he doubtless referred to the fact that this was only the second time hydrogen deuteride has been detected outside the Solar System. The pitch to the Herschel committee had to be persuasive to get them to sign off on so tricky a detection.

But 36 Herschel observations (with a total exposure time of almost seven hours) allowed the team to find the hydrogen deuteride they were looking for in the far-infrared. Water vapor in the atmosphere absorbs this kind of radiation, which is why a space-based detection is the only reasonable choice, although the team evidently considered the flying observatory SOFIA, a platform on which they were unlikely to get approval given the problematic nature of the observation. Now we have much better insight into a budding planetary system that is taking the same route our own system did over four billion years ago. What further gains this will help us achieve in testing current models of planet formation will be played out in coming years.

The paper is Bergin et al., “An Old Disk That Can Still Form a Planetary System,” Nature 493 (31 January 2013), pp. 644–646 (preprint). Be aware as well of Hogerheijde et al., “Detection of the Water Reservoir in a Forming Planetary System,” Science 334, No. 6054 (2011), p. 338. The latter, many of whose co-authors also worked on the Bergin paper, used Herschel data to detect cold water vapor in the TW Hydrae disk, with this result:

Our Herschel detection of cold water vapor in the outer disk of TW Hya demonstrates the presence of a considerable reservoir of water ice in this protoplanetary disk, sufficient to form several thousand Earth oceans worth of icy bodies. Our observations only directly trace the tip of the iceberg of 0.005 Earth oceans in the form of water vapor.

Clearly, TW Hydrae has much to teach us.

Addendum: This JPL news release notes that although a young star, TW Hydrae had been thought to be past the stage of making giant planets:

“We didn’t expect to see so much gas around this star,” said Edwin Bergin of the University of Michigan in Ann Arbor. Bergin led the new study appearing in the journal Nature. “Typically stars of this age have cleared out their surrounding material, but this star still has enough mass to make the equivalent of 50 Jupiters,” Bergin said.

January 29, 2013

Explaining Retrograde Orbits

While radial velocity and transit methods seem to get most of the headlines in exoplanet work, there are times when direct imaging can clarify things found by the other techniques. A case in point is the HAT-P-7 planetary system some 1000 light years from Earth in the constellation Cygnus. HAT-P-7b was interesting enough to begin with given its retrograde orbit around the primary (meaning its orbit was opposite to the spin of its star). Learning how a planet can emerge in a retrograde orbit demands learning more about the system at large, which is why scientists from the University of Tokyo began taking high contrast images of the HAT-P-7 system.

It had been Norio Narita (National Astronomical Observatory of Japan) who, in 2008, discovered evidence of HAT-P-7b’s retrograde orbit. Narita’s team has now used adaptive optics at the Subaru Telescope to measure the proper motion of what turns out to be a small companion star now designated HAT-P-7B. The team was also able to confirm a second planet candidate that had been first reported in 2009. The latter, a gas giant dubbed HAT-P-7c, orbits between the retrograde planet (HAT-P-7b) and the newfound companion star.

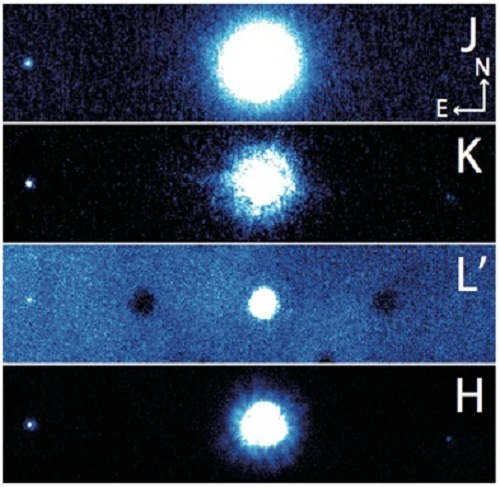

Image: HAT-P-7 and its companion star in images obtained with the Subaru Telescope. IRCS (Infrared Camera and Spectrograph) captured the images in J band (1.25 micron), K band (2.20 micron), and L’ band (3.77 micron) in August 2011, and HiCIAO captured the image in H band (1.63 micron) in July 2012. North is up and east is left. The star in the middle is the central star HAT-P-7, and the one on the east (left) side is the companion star HAT-P-7B, which is separated from HAT-P-7 by roughly 1200 AU or more. The companion is a low-mass star with only about a quarter of the Sun’s mass. The object on the west (right) side is a very distant, unrelated background star. (Credit: NAOJ)

This Subaru Telescope news release notes the current thinking of Narita’s team on how the retrograde orbit emerged in this system. Key to the puzzle is the Kozai mechanism, first described in the 1960s, which has been used to explain the orbits of everything from irregular planetary moons to trans-Neptunian objects, and has been applied to various exoplanets. Under the Kozai mechanism, perturbations from a distant body cause an orbit to trade eccentricity and inclination back and forth periodically. In other words, what had been a circular but highly inclined orbit can become an eccentric orbit at a lower inclination.
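The idealized textbook version of this exchange (quadrupole order, with the perturbed body treated as a test particle) has a compact closed form: an initially circular orbit inclined by i₀ to the perturber’s orbital plane reaches a peak eccentricity e_max = √(1 − (5/3)cos² i₀), and nothing happens below a critical inclination of about 39.2°. The sketch below implements only that limit. It is not the model Narita’s team used, and because √(1 − e²)·cos i is conserved, it never flips an orbit relative to the perturber’s plane. A retrograde orbit relative to the star’s spin, as proposed for HAT-P-7b, needs the perturber’s own orbit to be tilted against the stellar equator, or higher-order effects this sketch ignores.

```python
import math

def kozai_max_eccentricity(i0_deg):
    """Peak eccentricity of an initially circular orbit under quadrupole,
    test-particle Kozai cycles (no octupole, relativistic or tidal terms)."""
    c2 = math.cos(math.radians(i0_deg)) ** 2
    if c2 >= 0.6:                      # below the ~39.2 deg critical inclination
        return 0.0
    return math.sqrt(1.0 - (5.0 / 3.0) * c2)

def inclination_at_max_eccentricity(i0_deg):
    """Inclination (deg) at peak eccentricity. From sqrt(1-e^2) cos i = const
    and 1 - e_max^2 = (5/3) cos^2 i0, cos i there is sqrt(3/5), keeping the
    sign of cos i0 (prograde stays prograde in this limit)."""
    c = math.cos(math.radians(i0_deg))
    if c * c >= 0.6:
        return i0_deg                  # no oscillation below the critical angle
    return math.degrees(math.acos(math.copysign(math.sqrt(0.6), c)))

for i0 in (30, 45, 60, 85):
    e = kozai_max_eccentricity(i0)
    i_min = inclination_at_max_eccentricity(i0)
    print(f"i0 = {i0:2d} deg: e_max = {e:.2f}, i at e_max = {i_min:.1f} deg")
```

A 60° orbit, for example, swings out to an eccentricity near 0.76 while its inclination drops to about 39°, which is the eccentricity-for-inclination trade the paragraph describes.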

Ultimately, the effects can be far-reaching as planetary orbits change over time. Can the process be sequential? In the HAT-P-7 system, the researchers are suggesting that the companion star (HAT-P-7B) affected the orbit of the newly confirmed planet HAT-P-7c through the Kozai mechanism, causing orbital eccentricity to be exchanged for inclination. With its orbit now significantly inclined in relation to the central star, HAT-P-7c then affected the inner planet (HAT-P-7b) through the same mechanism, causing its orbit to become retrograde.

The researchers go on to make the case for direct imaging to check for stellar companions that can have a significant effect on planetary orbits. From the paper:

Thus far, the existence of possible faint outer companions around planetary systems has not been checked and is often overlooked, even though the Kozai migration models assume the presence of an outer companion. To further discuss planetary migration using the information of the RM [Rossiter-McLaughlin] effect / spot-crossing events as well as significant orbital eccentricities, it is important to incorporate information regarding the possible or known existence of binary companions. This is also because a large fraction of the stars in the universe form binary systems… Thus it would be important to check the presence of faint binary companions by high-contrast direct imaging. In addition, if any outer binary companion is found, it is also necessary to consider the possibility of sequential Kozai migration in the system, since planet-planet scattering, if it occurs, is likely to form the initial condition of such planetary migration.

We have much to learn about retrograde orbits, and the rippling effects of the Kozai mechanism are a possibility that will have to be weighed against other observations. Whether or not the researchers can make this case stick, the fact that so many ‘hot Jupiters’ are themselves in highly inclined or even retrograde orbits tells us how important it will be to work these findings into our theories of planet formation and migration. The SEEDS project (Strategic Exploration of Exoplanets and Disks with Subaru Telescope), which is using direct imaging to search for exoplanets around hundreds of nearby stars, should prove useful here.

The paper is Narita et al., “A Common Proper Motion Stellar Companion to HAT-P-7,” Publications of the Astronomical Society of Japan, Vol. 64, L7 (preprint).

January 28, 2013

Data Storage: The DNA Option

One of the benefits of constantly proliferating information is that we’re getting better and better at storing lots of stuff in small spaces. I love the fact that when I travel, I can carry hundreds of books with me on my Kindle, and to those who say you can only read one book at a time, I respond that I like the choice of books always at hand, and the ability to keep key reference sources in my briefcase. Try lugging Webster’s 3rd New International Dictionary around with you and you’ll see why putting it on a Palm III was so delightful about a decade ago. There is, alas, no Kindle or Nook version.

Did I say information was proliferating? Dave Turek, a designer of supercomputers for IBM (world chess champion Deep Blue is among his creations) wrote last May that from the beginning of recorded time until 2003, humans had created five billion gigabytes of information (five exabytes). In 2011, that amount of information was being created every two days. Turek’s article says that by 2013, IBM expects that interval to shrink to every ten minutes, which calls for new computing designs that can handle data density of all but unfathomable proportions.
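As a quick check on the units and rates in Turek’s figures (the totals and intervals are his; the per-second conversions are plain arithmetic using decimal prefixes):

```python
GB = 10**9                 # decimal gigabyte, in bytes
EB = 10**18                # decimal exabyte, in bytes
SECONDS_PER_DAY = 86_400

total_bytes = 5 * 10**9 * GB          # "five billion gigabytes"
print(total_bytes / EB)               # 5.0, i.e. five exabytes

# Average rate needed to produce that much data in each interval
rate_2011 = total_bytes / (2 * SECONDS_PER_DAY)   # every two days
rate_2013 = total_bytes / (10 * 60)               # every ten minutes
print(f"2011: {rate_2011 / 1e12:.1f} TB/s, 2013: {rate_2013 / 1e15:.1f} PB/s")
print(f"speed-up: {rate_2013 / rate_2011:.0f}x")
```

Going from one batch every two days to one every ten minutes is a 288-fold jump, from roughly 29 terabytes per second to more than 8 petabytes per second.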

A recent post on Smithsonian.com’s Innovations blog captures the essence of what’s happening:

But how is this possible? How did data become such digital kudzu? Put simply, every time your cell phone sends out its GPS location, every time you buy something online, every time you click the Like button on Facebook, you’re putting another digital message in a bottle. And now the oceans are pretty much covered with them.

And that’s only part of the story. Text messages, customer records, ATM transactions, security camera images…the list goes on and on. The buzzword to describe this is “Big Data,” though that hardly does justice to the scale of the monster we’ve created.

The article rightly notes that we haven’t begun to catch up with our ability to capture information, which is why, for example, so much fertile ground for exploration can be found inside the data sets from astronomical surveys and other projects that have been making observations faster than scientists can analyze them. Learning how to work our way through gigantic databases is the premise of Google’s BigQuery software, which is designed to comb terabytes of information in seconds. Even so, the challenge is immense. Consider that the algorithms used by the Kepler team, sharp as they are, have been usefully supplemented by human volunteers working with the Planet Hunters project, who sometimes see things that computers do not.

But as we work to draw value out of the data influx, we’re also finding ways to translate data into even denser media, a prerequisite for future deep space probes that will, we hope, be gathering information at faster clips than ever before. Consider work at the European Bioinformatics Institute in the UK, where researchers Nick Goldman and Ewan Birney have managed to code Shakespeare’s 154 sonnets into DNA, in which form a single sonnet weighs 0.3 millionths of a millionth of a gram. You can read about this in Shakespeare and Martin Luther King demonstrate potential of DNA storage, an article on their paper in Nature which just ran in The Guardian.

Image: Coding The Bard into DNA makes for intriguing data storage prospects. This portrait, possibly by John Taylor, is one of the few images we have of the playwright (now on display at the National Portrait Gallery in London).

Goldman and Birney are talking about DNA as an alternative to spinning hard disks and newer methods of solid-state storage. Their work is given punch by the calculation that a gram of DNA could hold as much information as more than a million CDs. Here’s how The Guardian describes their method:

The scientists developed a code that used the four molecular letters or “bases” of genetic material – known as G, T, C and A – to store information.

Digital files store data as strings of 1s and 0s. The Cambridge team’s code turns every block of eight numbers in a digital code into five letters of DNA. For example, the eight digit binary code for the letter “T” becomes TAGAT. To store words, the scientists simply run the strands of five DNA letters together. So the first word in “Thou art more lovely and more temperate” from Shakespeare’s sonnet 18, becomes TAGATGTGTACAGACTACGC.
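The Guardian’s description is a simplification. In outline, the paper first converts bytes into base-3 digits (trits) with a Huffman code, then writes each trit as one of the three bases that differ from the one before it, so the same letter never appears twice in a row, a property that helps avoid synthesis and sequencing errors. The sketch below keeps only that rotating idea. It swaps the Huffman step for a fixed six trits per byte (so it spends six bases per byte rather than the five quoted above), and the rotation table `NEXT_BASE` and the starting base are assumptions, not the paper’s exact choices. It also leaves out the paper’s splitting of the data into short, overlapping, indexed fragments.

```python
# For each previous base, the three *other* bases in an assumed fixed order.
# A trit value 0, 1 or 2 picks one of them, so no base ever repeats.
NEXT_BASE = {"A": "CGT", "C": "GTA", "G": "TAC", "T": "ACG"}
TRITS_PER_BYTE = 6   # 3**6 = 729 >= 256, so six base-3 digits fit any byte

def byte_to_trits(value):
    trits = []
    for _ in range(TRITS_PER_BYTE):
        trits.append(value % 3)
        value //= 3
    return trits[::-1]                 # most significant trit first

def encode(data, start="A"):
    prev, out = start, []
    for byte in data:
        for t in byte_to_trits(byte):
            prev = NEXT_BASE[prev][t]
            out.append(prev)
    return "".join(out)

def decode(dna, start="A"):
    prev, trits = start, []
    for base in dna:
        trits.append(NEXT_BASE[prev].index(base))  # ValueError if a base repeats
        prev = base
    result = bytearray()
    for i in range(0, len(trits), TRITS_PER_BYTE):
        value = 0
        for t in trits[i:i + TRITS_PER_BYTE]:
            value = value * 3 + t
        result.append(value)
    return bytes(result)

strand = encode(b"Thou")
print(strand, decode(strand))
```

Decoding walks the strand with the same table, recovering each trit from the base’s position in the row for the previous base, so the reader must know the starting base as well as the table.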

The converted sonnets, along with DNA codings of Martin Luther King’s ‘I Have a Dream’ speech and the famous double helix paper by Francis Crick and James Watson, were sent to Agilent, a US firm that makes physical strands of DNA for researchers. The test tube Goldman and Birney got back held just a speck of DNA, but running it through a gene sequencing machine, the researchers were able to read the files again. This parallels work by George Church (Harvard University), who last year preserved his own book Regenesis via DNA storage.

The differences between DNA and conventional storage are striking. From the paper in Nature (thanks to Eric Davis for passing along a copy):

The DNA-based storage medium has different properties from traditional tape- or disk-based storage. As DNA is the basis of life on Earth, methods for manipulating, storing and reading it will remain the subject of continual technological innovation. As with any storage system, a large-scale DNA archive would need stable DNA management and physical indexing of depositions. But whereas current digital schemes for archiving require active and continuing maintenance and regular transferring between storage media, the DNA-based storage medium requires no active maintenance other than a cold, dry and dark environment (such as the Global Crop Diversity Trust’s Svalbard Global Seed Vault, which has no permanent on-site staff) yet remains viable for thousands of years even by conservative estimates.

The paper goes on to describe DNA as ‘an excellent medium for the creation of copies of any archive for transportation, sharing or security.’ The problem today is the high cost of DNA production, but the trends are moving in the right direction. Couple this with DNA’s incredible storage possibilities — one of the Harvard researchers working with George Church estimates that the total of the world’s information could one day be stored in about four grams of the stuff — and you have a storage medium that could handle vast data-gathering projects like those that will spring from the next generation of telescope technology both here on Earth and aboard space platforms.

The paper is Goldman et al., “Towards practical, high-capacity, low-maintenance information storage in synthesized DNA,” Nature, published online 23 January 2013.

January 24, 2013

The Velocity of Thought

How fast we go affects how we perceive time. That lesson was implicit in the mathematics of Special Relativity, but at the speed most of us live our lives, easily describable in Newtonian terms, we could hardly recognize it. Get going at a substantial percentage of the speed of light, though, and everything changes. The occupants of a starship moving at about 87 percent of the speed of light age at half the rate of their counterparts back on Earth. Push them up to 99.999 percent of c and 223 years go by on Earth for every year they experience.
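Both figures come from the Lorentz factor γ = 1/√(1 − v²/c²), the number of Earth years that pass per year of ship time at a constant speed v. A minimal sketch:

```python
import math

def lorentz_factor(beta):
    """Earth years elapsed per ship year at constant speed beta = v/c."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be in [0, 1)")
    return 1.0 / math.sqrt(1.0 - beta * beta)

for beta in (0.866, 0.9, 0.99999):
    print(f"{beta:.5f} c -> gamma = {lorentz_factor(beta):.1f}")
```

γ reaches exactly 2 at v = (√3/2)c, about 0.866c, and climbs to about 223.6 at 0.99999c; the factor grows without limit as v approaches c.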

Thus the ‘twin paradox,’ where the starfaring member of the family returns considerably younger than the sibling left behind. Carl Sagan played around with the numbers in the 1960s to show that a spacecraft moving at an acceleration of one g would be able to reach the center of the galaxy in 21 years (ship-time), while tens of thousands of years passed on Earth. Indeed, keep the acceleration constant and our crew can reach the Andromeda galaxy in 28 years, a notion Poul Anderson dealt with memorably in the novel Tau Zero.
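Figures like these come from the standard ‘relativistic rocket’ equations for constant proper acceleration. The sketch below assumes 1 g acceleration to the midpoint and equal deceleration after it, ignores fuel entirely, and takes the distances as inputs. With an assumed 30,000 light years to the galactic center it gives about 20 years of ship time, and with an assumed 2.5 million light years to Andromeda about 28.6, in line with the figures quoted above; Sagan’s own assumptions may have differed.

```python
import math

C = 299_792_458.0          # speed of light, m/s
G0 = 9.80665               # standard gravity, m/s^2
YEAR = 365.25 * 86_400     # Julian year, s
LIGHT_YEAR = C * YEAR      # m

def ship_and_earth_years(distance_ly, accel_g=1.0):
    """Accelerate at constant proper acceleration to the midpoint, then
    decelerate at the same rate; return (ship-time years, Earth-time years)."""
    a = accel_g * G0
    half = 0.5 * distance_ly * LIGHT_YEAR
    x = 1.0 + a * half / C**2                   # Lorentz factor at the midpoint
    tau = 2.0 * (C / a) * math.acosh(x)         # proper time aboard the ship
    t = 2.0 * (C / a) * math.sqrt(x * x - 1.0)  # coordinate time on Earth
    return tau / YEAR, t / YEAR

for label, d in (("galactic center (assumed 30,000 ly)", 30_000),
                 ("Andromeda (assumed 2.5 million ly)", 2_500_000)):
    ship, earth = ship_and_earth_years(d)
    print(f"{label}: {ship:.1f} ship years, {earth:,.0f} Earth years")
```

Because ship time grows only logarithmically with distance, an eighty-fold longer trip costs the crew less than nine extra years.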

Image: A Bussard ramjet in flight, as imagined for ESA’s Innovative Technologies from Science Fiction project. Credit: ESA/Manchu.

Not long after Monday’s post on fast spacecraft I received an email from a young reader who wanted to know a bit more about humans and speed. He had been interested to learn that the fastest man-made object thus far was the Helios II solar probe, while Voyager 1’s 17 kilometers per second make it the fastest probe now leaving the system, well above New Horizons’ anticipated 14 kilometers per second at Pluto/Charon. That settled the matter for robotic probes, but what was the fastest speed ever attained by a human being?

Speeds like this are well below those that cause noticeable relativistic effects, of course, but it’s an interesting question because of how much it changed at the beginning of the 20th Century, so let’s talk about it. Lee Billings recently looked into speed in a fine essay called Incredible Journey: Can We Reach the Stars Without Breaking the Bank? and found that in 1906, a man named Fred Marriott managed to surpass 200 kilometers per hour in (the mind boggles) a steam-powered car at Daytona Beach, Florida. This is worth thinking about because Lee points out that before this time, the fastest anyone could have traveled was 200 kilometers per hour, which happens to be the terminal velocity of the human body as it is slowed by air resistance.
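That 200 km/h ceiling follows from balancing weight against aerodynamic drag. The mass and drag area (drag coefficient times frontal area) below are assumed values for a belly-down skydiver, not figures from Billings’ essay, and posture changes the answer considerably.

```python
import math

RHO_AIR = 1.225   # sea-level air density, kg/m^3
G0 = 9.80665      # standard gravity, m/s^2

def terminal_velocity(mass_kg, drag_area_m2, rho=RHO_AIR):
    """Speed at which drag 0.5 * rho * v^2 * (Cd*A) balances weight m * g.
    `drag_area_m2` is the product Cd * A."""
    return math.sqrt(2.0 * mass_kg * G0 / (rho * drag_area_m2))

# Assumed belly-down skydiver: 80 kg, Cd*A of about 0.45 m^2
v = terminal_velocity(80.0, 0.45)
print(f"{v:.1f} m/s = {v * 3.6:.0f} km/h")
```

With these assumed numbers the result is roughly 190 km/h, close to the figure in the essay; halving the drag area raises the speed by a factor of √2.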

So the advent of fast machines finally changed the speed record in 1906, and it would be a scant forty years later that Chuck Yeager pushed the X-1 up past 1000 kilometers per hour, faster than the speed of sound. I can remember checking out a library book back in the 1950s called The Fastest Man Alive. Before I re-checked the reference so I could write this post, I was assuming that the book had been about X-15 pilot Scott Crossfield, but I discovered that this 1958 title was actually the story of Frank Kendall Everest, Jr., known as ‘Pete’ to his buddies.

Everest flew in North Africa, Sicily and Italy and went on to complete 67 combat missions in the Pacific theater, including a stint as a prisoner of war of the Japanese in 1945. If there was an experimental aircraft he didn’t fly in the subsequent decade, I don’t know what it was, but if memory serves, the bulk of The Fastest Man Alive was about his work with the X-2, in which he reached Mach 2.9 in 1956. Everest was one of the foremost of that remarkable breed of test pilots who pushed winged craft close to space in the era before Gagarin.

But to get back to my friend’s question. Lee Billings identifies the fastest humans alive today as ‘three elderly Americans, all of whom Usain Bolt could demolish in a footrace.’ These are the Apollo 10 astronauts, whose fiery re-entry into the Earth’s atmosphere began at 39,897 kilometers per hour, a speed that would take you from New York to Los Angeles in less than six minutes. No one involved with the mission would have experienced relativistic effects that were noticeable, but in the tiniest way the three could be said to be slightly younger than the rest of us thanks to the workings of Special Relativity.
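Both claims in that paragraph are easy to check. The sketch below assumes a New York to Los Angeles great-circle distance of about 3,940 km and computes only the Special Relativity slowdown at the quoted entry speed. That speed is a peak value held only briefly, so the effect accumulated over the whole mission is far smaller than a per-day figure suggests, and the sketch ignores gravitational time dilation from General Relativity, which works in the opposite direction for clocks far from Earth and is not small by comparison.

```python
import math

C = 299_792_458.0                 # speed of light, m/s
v_kmh = 39_897.0                  # Apollo 10 entry speed quoted above
v = v_kmh / 3.6                   # m/s
beta_sq = (v / C) ** 2

# gamma - 1, computed with expm1/log1p to avoid cancellation at tiny beta
gamma_minus_1 = math.expm1(-0.5 * math.log1p(-beta_sq))
lag_per_day_us = gamma_minus_1 * 86_400 * 1e6   # approximate clock lag per day

NY_LA_KM = 3_940.0                # assumed great-circle distance
minutes = NY_LA_KM / v_kmh * 60.0

print(f"clock lag at entry speed: about {lag_per_day_us:.0f} microseconds per day")
print(f"New York to Los Angeles: {minutes:.1f} minutes")
```

The trip comes out just under six minutes, and the relativistic slowdown is less than one part in a billion, which is ‘the tiniest way’ indeed.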

Sometimes time slows in the way we consider our relation to it. I noticed an interesting piece called Time and the End of History Illusion, written for the Long Now Foundation. The essay focuses on a paper recently published in Science that asked participants to evaluate how their lives — their values, ideas, personality traits — had changed over the past decade, and how much they expected to see them change in the next. Out of a statistical analysis of the findings came what the researchers are calling an ‘End of History Illusion.’

The illusion works like this: We tend to look back at our early lives and marvel at our naïveté. How could we not, seeing with a certain embarrassment all the mistakes we made, and knowing how much we have changed, and grown, over the years. One of the study authors, Daniel Gilbert, tells The New York Times, “What we never seem to realize is that our future selves will look back and think the very same thing about us. At every age we think we’re having the last laugh, and at every age we’re wrong.”

The older we get, in other words, the wiser we think we are in relation to our younger selves. We always think that we have finally arrived, that now we see what we couldn’t see before, and assume that we can announce our final judgment about various aspects of our lives. The process seems to be at work not only in our personal lives but in how we evaluate the world around us. How else to explain the certitude behind some of the great gaffes of intellectual history? Think of the line attributed, probably apocryphally, to US patent commissioner Charles Duell in 1899: “Everything that can be invented has been invented.” Or the blunt words of Harry Warner: “Who the hell wants to hear actors talk?”

The Long Now essay quotes Francis Fukuyama, who wrote memorably about the ‘end of history,’ and French philosopher Jean Baudrillard, who saw such ideas as nothing more than an illusion, one made possible by what he called ‘the acceleration of modernity.’ Long Now adds:

Illusion or not, the Harvard study shows that a sense of being at the end of history has real-world consequences: underestimating how differently we’ll feel about things in the future, we sometimes make decisions we later come to regret. In other words, the end of history illusion could be thought of as a lack of long-term thinking. It’s when we fail to consider the future impact of our choices (and imagine alternatives) that we lose all sense of meaning, and perhaps even lose touch with time itself.

We’ve come a long way from my reader’s innocent question about the fastest human being. But I think Long Now is on to something in talking about the dangers of misunderstanding how we may think, and act, in the future. By assuming we have reached some fixed goal of insight, we grant ourselves too many powers, thinking in our hubris that we are wiser than we really are. Time is elastic and can be bent around in interesting ways, as Einstein showed. Time is also deceptive and leads us as we age to become more doctrinaire than is warranted.

Sometimes, of course, time and memory mingle inseparably. I’m remembering how my mother used to sit on the deck behind her house when I would go over there to make her coffee. We would look into the tangle of undergrowth and trees up the hill as the morning sun sent bright shafts through the foliage, and as Alzheimer’s gradually took her, she would often remark on how tangled the hillside had become. I always assumed she meant that it had become so because she was no longer maintaining it with the steady pruning of her more youthful years.

Then, not long before her death, I suddenly realized that she was not seeing the same hill that I was. At the end of her life, she was seeing the hill in front of her house in a small river town in Illinois. Like her current hill, it rose into the east so that while the house stood in shadow, sunlight would blaze across the Mississippi to paint the farmlands of Missouri on the bright mornings when she would get up to walk to school. When I went back there after her funeral, the hill was still open as she had remembered it, grassy, free of brush, though the house was gone. It was the hill she had returned to in her mind after 94 years, as vividly hers in 2011 as it had been in 1916. In such ways are we all time travelers, moving inexorably at the velocity of thought.

January 23, 2013

Talking Back from Alpha Centauri

Back when I was working on my Centauri Dreams book, JPL’s James Lesh told me that the right way to do communications from Alpha Centauri was to use a laser. The problem is simple enough: Radio signals fall off in intensity with the square of their distance, so that a spacecraft twice as far from Earth as another sends back a signal with one quarter the strength. Translate that into deep space terms and you’ve got a problem. Voyager puts out a 23-watt signal that has now spread to over one thousand times the diameter of the Earth. By the time it reaches us, that signal is 20 billion times weaker than the power needed to run a digital wristwatch.
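The inverse-square law reduces to one line: at a fixed transmitter power, received strength scales as (d_near / d_far)². The distances used below are assumptions (4.37 light years to Alpha Centauri, about 30 AU for Voyager 2 at Neptune).

```python
AU_PER_LIGHT_YEAR = 63_241.1

def relative_strength(d_far, d_near):
    """How many times weaker a signal from d_far is than one from d_near,
    for the same transmitter (inverse-square law; same units for both)."""
    return (d_far / d_near) ** 2

print(relative_strength(2.0, 1.0))                 # 4.0: twice the distance
alpha_cen_au = 4.37 * AU_PER_LIGHT_YEAR           # assumed distance to Alpha Centauri
neptune_au = 30.0                                 # assumed Earth-Neptune distance
print(f"{relative_strength(alpha_cen_au, neptune_au):.2e}")
```

The Alpha Centauri to Neptune comparison comes out at roughly 85 million; the exact number depends on the distances assumed.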

Now imagine being in Alpha Centauri space and radiating back a radio signal that is 81,000,000 times weaker than what Voyager 2 sent back from Neptune. But lasers can help in a major way. A laser beam spreads far less than a radio beam over the same distance, and optical signals can carry more information. Lesh is not a propulsion man so he leaves the problem of getting to Alpha Centauri to others. But his point was that if you could get a laser installation about the size of the Hubble Space Telescope into Centauri space, you could send back a useful datastream to Earth.

The probe would do that using a 20-watt laser system that would lock onto the Sun as its reference point and beam its signals to a 10-meter telescope in Earth orbit (placed there to avoid absorption effects in the atmosphere). It’s still a tough catch, because you’d have to use optical filters to remove the bright light of the Alpha Centauri system while retaining the laser signal.
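Why a laser beam spreads so much less comes down to diffraction: a transmitter of aperture D at wavelength λ cannot produce a beam narrower than a half-angle of roughly 1.22 λ/D. The sketch compares beam footprints at Alpha Centauri’s distance. Its parameters are assumptions: a 1.064-micron laser wavelength (a common Nd:YAG line, not a figure from Lesh’s paper), a 2.4-meter aperture standing in for a Hubble-sized installation, and Maccone’s Ka-band (32 GHz) link with a 12-meter antenna for the radio case. It ignores pointing error and real antenna patterns.

```python
C = 299_792_458.0            # speed of light, m/s
LIGHT_YEAR_M = 9.4607e15     # m
AU_M = 1.495979e11           # m

def spot_radius_au(wavelength_m, aperture_m, distance_m):
    """Radius (AU) of a diffraction-limited beam at the given distance,
    using the half-angle to the first Airy null, 1.22 * lambda / D."""
    half_angle = 1.22 * wavelength_m / aperture_m
    return half_angle * distance_m / AU_M

distance = 4.37 * LIGHT_YEAR_M
laser = spot_radius_au(1.064e-6, 2.4, distance)    # assumed 1.064 um laser, 2.4 m optic
radio = spot_radius_au(C / 32e9, 12.0, distance)   # 32 GHz Ka band, 12 m antenna
print(f"laser spot radius ~{laser:.2f} AU, radio spot radius ~{radio:.0f} AU")
print(f"radio beam covers ~{(radio / laser) ** 2:.1e} times the area")
```

With these numbers the laser footprint is a few tenths of an AU across, while the radio beam spreads to hundreds of AU in radius, about three million times the area, so far more of the transmitted power can land on the receiving telescope.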

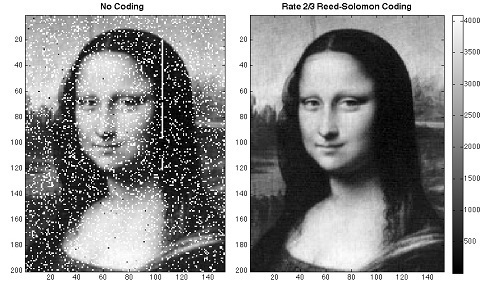

But while the propulsion conundrum continues to bedevil us, progress on the laser front is heartening, as witness this news release from Goddard Space Flight Center. Scientists working with the Lunar Reconnaissance Orbiter have successfully beamed an image of the Mona Lisa to the spacecraft, sending the image embedded in laser pulses that normally track the spacecraft. It’s a matter of simultaneous laser communication and tracking, says David Smith (MIT), principal investigator on the LRO’s Lunar Orbiter Laser Altimeter instrument:

“This is the first time anyone has achieved one-way laser communication at planetary distances. In the near future, this type of simple laser communication might serve as a backup for the radio communication that satellites use. In the more distant future, it may allow communication at higher data rates than present radio links can provide.”

The Lunar Reconnaissance Orbiter, I was surprised to find, is the only satellite in orbit around a body other than Earth that is being tracked by laser, making it the ideal tool for demonstrating at least one-way laser communications. The work involved breaking the Mona Lisa into a 152 x 200 pixel array, with each pixel converted into a shade of gray represented by a number between 0 and 4095. According to the news release: “Each pixel was transmitted by a laser pulse, with the pulse being fired in one of 4,096 possible time slots during a brief time window allotted for laser tracking. The complete image was transmitted at a data rate of about 300 bits per second.”
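The technique described here is pulse-position modulation: with 4,096 possible slots, the timing of a single pulse carries log₂ 4096 = 12 bits, exactly one gray level. The sketch below uses a hypothetical 1-microsecond slot width (the release gives no timing details) and also checks what the quoted 300 bits per second implies for the whole 152 × 200 image, ignoring any overhead.

```python
import math

SLOTS = 4096              # possible firing slots per pulse (from the release)
WIDTH, HEIGHT = 152, 200  # image size in pixels (from the release)
RATE_BPS = 300            # approximate data rate quoted in the release
SLOT_WIDTH_S = 1e-6       # hypothetical slot duration, for illustration only

def encode_pixel(gray):
    """Pulse-position modulation: gray level 0..4095 -> firing delay in seconds."""
    if not 0 <= gray < SLOTS:
        raise ValueError("gray level must be in 0..4095")
    return gray * SLOT_WIDTH_S

def decode_delay(delay_s):
    """Recover the gray level by rounding the measured delay to the nearest slot."""
    return min(SLOTS - 1, max(0, round(delay_s / SLOT_WIDTH_S)))

bits_per_pulse = int(math.log2(SLOTS))         # 12 bits per pulse
total_bits = WIDTH * HEIGHT * bits_per_pulse   # whole image
minutes = total_bits / RATE_BPS / 60

print(bits_per_pulse, total_bits, f"{minutes:.1f} min")
print(decode_delay(encode_pixel(2048) + 0.3 * SLOT_WIDTH_S))  # jittered pulse still decodes to 2048
```

The image works out to 364,800 bits, or about 20 minutes at 300 bits per second. Rounding the measured delay to the nearest slot lets the receiver tolerate timing jitter of up to half a slot.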

Image: NASA Goddard scientists transmitted an image of the Mona Lisa from Earth to the Lunar Reconnaissance Orbiter at the moon by piggybacking on laser pulses that routinely track the spacecraft. Credit: NASA Goddard Space Flight Center

The image was then returned to Earth using the spacecraft’s radio telemetry system. We’ll soon see where this leads, for NASA’s Lunar Atmosphere and Dust Environment Explorer mission will include further laser communications demonstrations. The robotic mission is scheduled for launch this year, and will in turn be followed by the Laser Communications Relay Demonstration (LCRD), scheduled for a 2017 launch aboard a Loral commercial satellite. LCRD will be NASA’s first long-duration optical communications mission, one that the agency considers part of the roadmap for construction of a space communications system based on lasers.

If we can make this work, laser systems could deliver data rates ten to one hundred times higher than traditional radio frequency systems for the same mass and power. Or you can go the other route (especially given payload constraints for deep space missions) and get the same data rate using much less mass and power. The LCRD demonstrator will help us see what’s ahead.

In any case, it’s clear that something has to give when we think about leaving the Solar System. Claudio Maccone has gone to work on bit error rate, that essential measure of signal quality: the number of erroneous bits received divided by the total number of bits transmitted. Suppose you tried to monitor a probe in Alpha Centauri space using one of the Deep Space Network’s 70-meter dishes. Maccone assumes a 12-meter inflatable antenna aboard the spacecraft, a link frequency in the Ka band (32 GHz), a bit rate of 32 kbps, and forty watts of transmitting power.

The result: A 50 percent probability of errors. We discussed all this in these pages a couple of years back in The Gravitational Lens and Communications, so I won’t rehash the whole thing other than to say that using the same parameters but working with a FOCAL probe using the Sun’s gravitational lens at 550 AU and beyond, Maccone shows that forty watts of transmitting power produce entirely acceptable bit error rates. Here again you have to have a probe in place before this kind of data return can begin, but getting a FOCAL probe into position could pay off in lowering the mass of the communications package aboard the interstellar probe.
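A 50 percent error rate is the worst possible result: a receiver that simply guessed each bit would do as well, so no information gets through. The sketch below shows the measured definition alongside the textbook error rate for one common modulation, binary phase-shift keying (BPSK) over a channel with additive white Gaussian noise, which climbs toward 0.5 as the energy per bit (Eb) sinks into the noise (N0). BPSK and this noise model are assumptions for illustration; they are not necessarily what Maccone’s analysis uses.

```python
import math

def bit_error_rate(errors, bits_sent):
    """Measured BER: erroneous bits received divided by bits transmitted."""
    return errors / bits_sent

def bpsk_ber(eb_n0_db):
    """Theoretical BER of BPSK in additive white Gaussian noise,
    0.5 * erfc(sqrt(Eb/N0)), with Eb/N0 given in decibels."""
    eb_n0 = 10 ** (eb_n0_db / 10)
    return 0.5 * math.erfc(math.sqrt(eb_n0))

print(bit_error_rate(5, 1_000))
for db in (-20, 0, 5, 9.6):
    print(f"Eb/N0 = {db:5.1f} dB -> BER = {bpsk_ber(db):.2e}")
```

At −20 dB the error rate is already above 0.4, while around 9.6 dB it falls to roughly one error in 100,000 bits, which is why raising the received energy per bit, whether through a bigger antenna, more power, or the Sun’s gravitational lens, matters so much.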

Whether using radio frequencies or lasers, communicating with a probe around another star presents us with huge challenges. James Lesh’s paper on laser communications from Alpha Centauri is J. R. Lesh, C. J. Ruggier, and R. J. Cesarone, “Space Communications Technologies for Interstellar Missions,” Journal of the British Interplanetary Society 49 (1996): 7–14. Claudio Maccone’s paper is “Interstellar radio links enhanced by exploiting the Sun as a Gravitational Lens,” Acta Astronautica Vol. 68, Issues 1-2 (2011), pp. 76-84.
