Paul Gilster's Blog, page 212

December 11, 2013

Encounter with a Blue/Green Planet

When I first read about Project Daedalus all those many years ago, I remember the image of a probe passing through a planetary system, in this case the one assumed to be around Barnard’s Star, at high velocity, taking measurements all the way through its brief encounter. And wouldn’t it be a fabulous outcome if something rare happened, like the reception of some kind of radio activity or some other sign of intelligent life on one of the planets there? That thought stayed with me when, much later, I read Greg Matloff and Eugene Mallove’s The Starflight Handbook and began thinking seriously about interstellar flight.

The chance of making such a detection would be all but non-existent if we just chose a destination at random, but by the time we get to actual interstellar spacecraft, we’ll also have an excellent idea — through space- and Earth-based instruments — about what we might find there. These days that ETI detection, while always interesting, isn’t at the top of my list of probabilities, but getting a close-up view of a world with an apparent living environment at whatever stage of evolution would be a stunning outcome. Let’s hope our descendants see such a thing.

Nearby Celestial Encounters

Triggering these musings was a space event much closer to home, the recent passage of the Juno spacecraft, which moved within 560 kilometers of the Earth’s surface on October 9 in a gravity assist maneuver designed to hasten its way to a 2016 encounter with Jupiter. Juno will orbit the giant planet 33 times, studying its northern and southern lights and passing through the vast electrical fields that generate them. I was fascinated to see that the recent close pass marked a milestone of sorts, for the spacecraft detected a shortwave message sent by amateur radio operators on Earth.

Image: Tony Rogers, the president of the University of Iowa ham radio club, mans the equipment used to send a message to the Juno spacecraft in October. The simple message “Hi” was sent repeatedly by ham radio operators around the world. Photos by Tim Schoon.

It’s not a starship picking up an alien civilization’s signals, but Juno did something that previous missions like Galileo and Cassini were unable to do. The latter were able to detect shortwave radio transmissions during their own Earth encounters, but it was not possible to decode intelligent information from the data they acquired. The Waves instrument on Juno, built at the University of Iowa, collected enough data that a simple Morse code message from amateur radio operators could be decoded, leading Bill Kurth, lead investigator for the Waves instrument, to say “We believe this was the first intelligent information to be transmitted to a passing interplanetary space instrument, as simple as the message may seem.”

The Juno team evaluated the Waves instrument data after the October 9 flyby and noted that the message appeared in the data when the spacecraft was still over 37,000 kilometers from Earth. The University of Iowa Amateur Radio Club contacted hundreds of ham stations in 40 states and 17 countries as part of the effort, which was managed through a website and a temporary station set up at the university. Operators from every continent coordinated transmissions carrying the same coded message, with results that can be heard in this video. As public outreach for an ongoing mission, this is prime stuff. Kurth continues:

“This was a way to involve a large number of people—those not usually associated with Juno—in a small portion of the mission. This raises awareness, and we’ve already heard from some that they’ll be motivated to follow the Juno mission through its science phase at Jupiter.”

Also qualifying as excellent public relations is the image below, which came as one of Juno’s sensors offered a low-resolution look at what a fast passage by our planet would be like.

Image: This cosmic pirouette of Earth and our moon was captured by the Juno spacecraft as it flew by Earth on Oct. 9, 2013. Image Credit: NASA/JPL-Caltech.

Scott Bolton, Juno principal investigator, likens the view to a rather famous TV show:

“If Captain Kirk of the USS Enterprise said, ‘Take us home, Scotty,’ this is what the crew would see. In the movie, you ride aboard Juno as it approaches Earth and then soars off into the blackness of space. No previous view of our world has ever captured the heavenly waltz of Earth and moon.”

Getting these images wasn’t easy. The sensors involved, cameras designed to track faint stars, are part of Juno’s Magnetic Field Investigation (MAG) and are normally used to adjust the orientation of the spacecraft’s magnetic sensors. The spacecraft was spinning at 2 rpm during the encounter, so a frame was captured each time the cameras were facing the Earth, with the results processed into video format. Juno now moves on to a Jupiter arrival on July 4 of 2016. If you want to see the Earth flyby with musical accompaniment, see this video and ponder what John Jørgensen (Danish Technical University), who designed the star tracker, says about the event: “Everything we humans are and everything we do is represented in that view.”

Rings a bell, doesn’t it? And why not. This is indeed another of those ‘pale blue dot’ moments.

December 10, 2013

Brown Dwarfs at the Boundary

We spend a lot of time probing the borderlines of astronomy, wondering what the boundaries are between a large gas giant and a brown dwarf, for example. The other end of that question is also intriguing: When does a true star get small enough to be a brown dwarf? For main sequence stars don’t operate the same way brown dwarfs do. Add hydrogen to a main sequence star and its radius increases. But brown dwarfs work the opposite way, with additional mass causing them to shrink. We see this beginning to happen at the high end of the brown dwarf mass range, somewhere between 60 and 90 Jupiter masses.

Electron degeneracy pressure, which occurs when electrons are compressed into a very small volume, is at play here. No two electrons with the same spin can occupy the same energy state in the same volume — this is the Pauli exclusion principle. When the lowest energy level is filled, added electrons are forced into higher energy states and travel at faster speeds, creating pressure. We see this in other kinds of objects as well. A star of less than four solar masses, for example, having gone through its red giant phase, will collapse and move off the main sequence until its collapse is halted by the pressure of electron degeneracy — a white dwarf is the result.

New work out of the RECONS (Research Consortium on Nearby Stars) group at Georgia State has now found observational evidence that helps us pinpoint the distinction between very low mass stars and brown dwarfs. Let me quote from the preprint to their upcoming paper, slated to appear in The Astronomical Journal, to clarify the electron degeneracy issue:

One of the most remarkable facts about VLM [Very Low Mass] stars is the fact that a small change in mass or metallicity can bring about profound changes to the basic physics of the object’s interior, if the change in mass or metallicity places the object in the realm of the brown dwarfs, on the other side of the hydrogen burning minimum mass limit. If the object is unable to reach thermodynamic equilibrium through sustained nuclear fusion, the object’s collapse will be halted by non-thermal electron degeneracy pressure. The macroscopic properties of (sub)-stellar matter are then ruled by different physics and obey a different equation of state… Once electron degeneracy sets in at the core, the greater gravitational force of a more massive object will cause a larger fraction of the brown dwarf to become degenerate, causing it to have a smaller radius. The mass-radius relation therefore has a pronounced local minimum near the critical mass attained by the most massive brown dwarfs…

With these facts in mind, the RECONS team used data from two southern hemisphere observatories, the SOAR (Southern Observatory for Astrophysical Research) 4.1-m telescope and SMARTS (Small and Moderate Aperture Research Telescope System) in Chile, to take measurements of objects thought to lie at the star/brown dwarf boundary. Says Sergio Dieterich, lead author of the paper:

“In order to distinguish stars from brown dwarfs we measured the light from each object thought to lie close to the stellar/brown dwarf boundary. We also carefully measured the distances to each object. We could then calculate their temperatures and radii using basic physical laws, and found the location of the smallest objects we observed. We see that radius decreases with decreasing temperature, as expected for stars, until we reach a temperature of about 2100K. There we see a gap with no objects, and then the radius starts to increase with decreasing temperature, as we expect for brown dwarfs.”

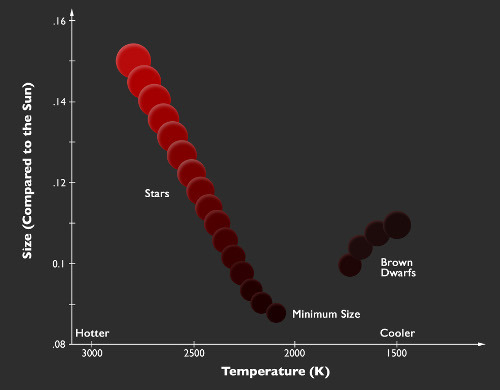

The diagram below makes the distinction clearer:

Image: The relation between size and temperature at the point where stars end and brown dwarfs begin (based on a figure from the publication). Credit: P. Marenfeld & NOAO/AURA/NSF.

Notice the temperature drop as the size of main sequence stars declines, then the break between true stars and brown dwarfs. The RECONS research indicates that the boundaries can be precisely drawn: Below temperatures of 2100 K, a radius 8.7 percent that of the Sun, and a luminosity of 1/8000th of the Sun, we leave the main sequence. The team identifies the star 2MASS J0513-1403 as an example of the smallest of main sequence stars. Is the gap between true stars and brown dwarfs right after 2MASS J0513-1403 real or is it the effect of an insufficient sample? To find out, the team is planning a larger search to test these initial results.
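As a rough consistency check on those boundary values, the Stefan-Boltzmann law ties the three numbers together: luminosity scales as radius squared times temperature to the fourth power. The sketch below treats the object as a simple blackbody and assumes a solar effective temperature of 5772 K, a figure not given in the article. It is only an illustration, not the RECONS team's actual procedure.

```python
# Blackbody sanity check on the stellar/brown dwarf boundary values.
T_SUN_K = 5772.0  # assumed solar effective temperature (not from the article)


def luminosity_in_solar_units(radius_solar: float, temperature_k: float) -> float:
    """L/L_sun = (R/R_sun)^2 * (T/T_sun)^4, from the Stefan-Boltzmann law."""
    return radius_solar ** 2 * (temperature_k / T_SUN_K) ** 4


# Boundary values quoted in the article: 8.7 percent of the Sun's radius, 2100 K.
boundary_luminosity = luminosity_in_solar_units(radius_solar=0.087, temperature_k=2100.0)
print(f"L/L_sun ~ {boundary_luminosity:.2e}, "
      f"about 1/{1 / boundary_luminosity:.0f} of the Sun")
```

Under these assumptions the result lands within several percent of the quoted 1/8000th, which is about as close as rounded inputs allow.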

Note, too, the interesting distinction in the ages of these objects. Small M-dwarfs can live for trillions of years. Brown dwarfs, on the other hand, have much shorter lifetimes, continually fading over time. Whether they could produce a habitable zone over timeframes sufficient to support life is an open question, one that we’ve looked at in Brown Dwarf Planets and Habitability. Our study of small, dim objects and astrobiology is only beginning.

The paper is Dieterich et al., “The Solar Neighborhood XXXII. The Hydrogen Burning Limit,” accepted at the Astronomical Journal and available as a preprint. See this NOAO/SOAR news release for more.

December 9, 2013

Distant Companions: The Case of HD 106906 b

When the pace of discovery is as fast as it has been in the realm of exoplanet research, we can expect to have our ideas challenged frequently. The latest instance comes in the form of a gas giant known as HD 106906 b, about eleven times as massive as Jupiter, in a young system whose central star is only about 13 million years old. It’s a world still glowing brightly in the infrared, enough so to be spotted through direct imaging, about which more in a moment. For the real news about HD 106906 b is that it’s in a place our planet formation models can’t easily explain.

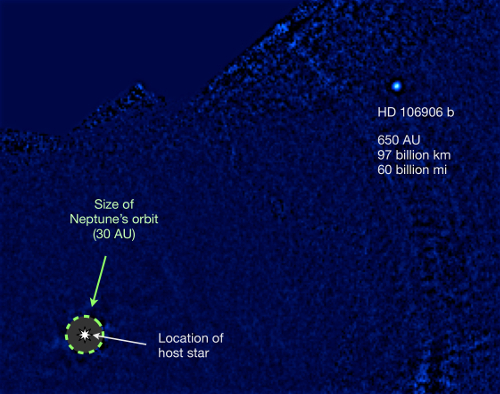

Image: This is a discovery image of planet HD 106906 b in thermal infrared light from MagAO/Clio2, processed to remove the bright light from its host star, HD 106906 A. The planet is more than 20 times farther away from its star than Neptune is from our Sun. AU stands for Astronomical Unit, the average distance between the Earth and the Sun. (Image: Vanessa Bailey).

Start with the core accretion model and you immediately run into trouble. Core accretion assumes that the core of a planet like this forms from the accretion of small bodies of ice and rock called planetesimals. The collisions of these objects bulk up the core, which then attracts an outer layer of gas. The problem with applying this model to HD 106906 b is that it’s about 650 AU from its star, far enough out that the core doesn’t have enough time to form before radiation from the hot young star dissipates the already thin gas of the outer protoplanetary disk. Get beyond 30 AU or so and it gets harder and harder to explain how a gas giant forms here.

Gravitational instability is the key to the other major theory of planet formation, but it’s challenged by HD 106906 b as well. Here the instabilities in a dense debris disk cause it to collapse into knots of matter, a process that can theoretically form planets in mere thousands rather than the millions of years demanded by core accretion. But at distances like 650 AU, there shouldn’t be enough material in the outer regions of the protoplanetary disk to allow a gas giant to form.

Grad student Vanessa Bailey (University of Arizona), lead author on the paper presenting this work, describes one of two alternative hypotheses that could explain the planet’s placement:

“A binary star system can be formed when two adjacent clumps of gas collapse more or less independently to form stars, and these stars are close enough to each other to exert a mutual gravitational attraction and bind them together in an orbit. It is possible that in the case of the HD 106906 system the star and planet collapsed independently from clumps of gas, but for some reason the planet’s progenitor clump was starved for material and never grew large enough to ignite and become a star.”

Making this explanation problematic is the fact that the mass ratio of the two stars in a binary system is usually more like 10 to 1 rather than this system’s 100 to 1, but the fact that the remnants of HD 106906’s debris disk are still observable may prove useful in untangling the mystery. A second formation mechanism suggested for planets at this distance from their star is that the planet may have formed elsewhere in the disk and been forced into its current position by gravitational interactions. The preprint notes the problem with this scenario:

Scattering from a formation location within the current disk is unlikely to have occurred without disrupting the disk in the process… We also note that the perturber must be > 11 MJup; we do not detect any such object beyond 35 AU…, disfavoring formation just outside the disk’s current outer edge. While it is possible that the companion is in the process of being ejected on an inclined trajectory from a tight initial orbit, this would require us to observe the system at a special time, which is unlikely. Thus we believe the companion is more likely to have formed in situ in a binary-star-like manner, possibly on an eccentric orbit.

HD 106906 b was found through deliberate targeting of stars with unusual debris disks in the hope of learning more about planet/disk interactions. The team used the Magellan Adaptive Optics system and Clio2 thermal infrared camera mounted on the Magellan telescope in Chile. The Folded-Port InfraRed Echellette (FIRE) spectrograph at Magellan was then used to study the planetary companion. The Magellan data were compared to Hubble Space Telescope data taken eight years earlier to confirm that the planet is indeed moving with the host star.

This unusual gas giant thus joins the small but growing group of widely separated planetary-mass companions and brown dwarf companions that challenge our planet formation models. The massive, ring-like debris disk around HD 106906 helps us constrain the formation possibilities, but we’ll need more data from systems whose disk/planet interactions can be observed before we can speak with any confidence about the origin of these objects.

The paper is Bailey et al., “HD 106906 b: A planetary-mass companion outside a massive debris disk,” accepted at The Astrophysical Journal Letters and available as a preprint. This University of Arizona news release is also useful.

December 6, 2013

Cosmic Loneliness and Interstellar Travel

Nick Nielsen’s latest invokes the thinking of Carl Sagan, who in the 1960s explored the possibilities of interstellar ramjets traveling at close to the speed of light. What would the consequences be for the civilization that developed such technologies, and how would such starships affect their thinking about communicating with other intelligent species? Sagan’s speculations took humans not just to the galactic core but to M31, journeys made possible within a human lifetime by time dilation. Nielsen, an author and contributing analyst with strategic consulting firm Wikistrat, ponders how capabilities like that would change our views of culture and identity. Fast forward to the stars, after all, means you can’t go home again.

by Nick Nielsen

In my previous Centauri Dreams post, I discussed some of the possible explanations of what Paul Davies has called the “eerie silence” – the fact that we hear no signs of alien civilizations when we listen for them – in connection with existential risk. Could the eerie silence be a sign that older civilizations than ours have been risk averse to the point of plunging the galaxy into silence, perhaps even silencing others (making use of the Rezabek maneuver)? It is a question worth considering.

For one answer is that we are alone, or very nearly alone, in our galaxy, and probably also in our local cluster of galaxies, and perhaps also alone even in our local supercluster of galaxies. I think this may be the case partly due to the eerie silence when we listen, but also due to what may be called our cosmic loneliness. Not only are our efforts to listen for other intelligences greeted with silence, but also the attempts to demonstrate any alien visitation of our planet or our solar system have turned up nothing. When we listen, we hear only silence, and when we look, we find nothing.

The question, “Are we alone?” has come to take on a scientific poignancy that few other questions hold for us, and we ask this question because of our cosmic loneliness. We are beginning to understand the Copernican revolution not only on an intellectual level, but also on a visceral level, and for many who experience this visceral understanding the result is what psychiatrist Viktor Frankl called the existential vacuum [1]; the whole cosmos now appears as an existential vacuum devoid of meaning, and that is why we ask, “Are we alone?” We ask the question out of need.

Image credit: TM-1970, Russia (via Dark Roasted Blend).

While talk of alien visitation is usually dominated by discussions of UFOs (and merely by mentioning the theme I risk being dismissed as a crackpot), due to the delay involved in EM spectrum communications, it is at least arguable that communication is less likely than travel and visitation. That being said, I do not find any of the claimed accounts of extraterrestrial visitation to be credible, and I will not discuss them, but I will try to show why visitation is more likely than communication via electromagnetic means.

An organic life form having established an industrial-technological civilization on its homeworld – rational beings that we might think of as peer species – would, like us, have risen from biological deep time, possessing frail and fragile bodies as we do, subject to aging and deterioration. An advanced technological civilization could greatly extend the lives of organic beings, but how long such lives could be extended (without being fully transformed into non-organic beings, i.e., without becoming post-biological) is unknown at present.

For EM spectrum communications across galactic distances, even the most long-lived organic being would be limited in communications to only a small portion of its home galaxy. If civilizations are a rarity within the galaxy, the likelihood of living long enough to engage in even a single exchange is quite low. In fact, we can precisely map the possible sphere of communication of a being with a finite life span within our galaxy (or any given galaxy) based on the longevity of that life form. Even an extraordinarily long-lived and patient ETI would not wish to wait thousands of years between messages, especially in view of the quickening pace of civilization that comes about with the advent of telecommunications.
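The sphere of communication described above can be sketched with simple arithmetic: a signal covers one light-year per year, so a single exchange (message out, reply back) with a partner at distance d takes 2d years. The lifespans and the 100,000 light-year figure for the galaxy's diameter in the sketch below are illustrative assumptions, not values from the essay.

```python
# Reach of light-speed messaging for a correspondent with a finite lifespan.
# A full exchange (message out, reply back) takes 2 * distance years.

GALAXY_DIAMETER_LY = 100_000  # assumed round figure for the Milky Way's disk


def max_exchange_radius_ly(lifespan_years: float, exchanges: int = 1) -> float:
    """Farthest distance (light-years) that allows `exchanges` round trips in one lifetime."""
    return lifespan_years / (2 * exchanges)


def exchanges_possible(lifespan_years: float, distance_ly: float) -> int:
    """Number of complete round-trip exchanges with a partner at distance_ly."""
    return int(lifespan_years // (2 * distance_ly))


for lifespan in (80, 1_000, 10_000):  # hypothetical lifespans in years
    radius = max_exchange_radius_ly(lifespan)
    share = 2 * radius / GALAXY_DIAMETER_LY
    print(f"lifespan {lifespan:>6} yr: one exchange possible out to {radius:,.0f} ly "
          f"({share:.2%} of the assumed galactic diameter)")
```

Even the hypothetical 10,000-year lifespan reaches only 5,000 light-years for a single exchange, a small part of the assumed galactic disk.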

It could be argued that non-organic life forms take up where organic life forms leave off, and for machines to take over our civilization would mean that length of life becomes much less relevant, but the relative merits and desirability of mechanistic vs. organic bearers of industrial-technological civilization (not to speak of being bearers of consciousness) is a point that needs to be argued separately, so I will not enter into this at present. But whether ETI is biological or post-biological, no advanced intellect is going to send out a signal and wait a thousand years for a response, since in that same thousand-year period it would be possible to invent the technologies that would allow for travel to the same object of your communication in a few years’ time (i.e., a few years in terms of elapsed shipboard time).

Our perfect ETI match as a peer civilization in the Milky Way will have already realized that electromagnetic communications mean waiting too long to talk to planetary systems that can be visited directly. If they are a hundred years ahead of us, they may already have started out and may find us soon. If they are a hundred years behind us, they will not yet even have the science to conceive of these possibilities as realizable technological aims. But what is the likelihood, in a universe in which intelligent life is rare (and we know by now that there are no “super-civilizations” nearby us in cosmic terms – cf. my Searching the Sky), that in all the vast space and time of the universe, a peer civilization should arise within a hundred years’ development of our own civilization? Not very likely.

The further we push out the temporal parameters of this observation, the more likely there is another civilization within these temporal parameters, but the further such a civilization is from being a peer civilization. Take a species a thousand years behind us or a thousand years ahead of us: the former cannot form a conception of the universe now known to observational cosmology; the latter will have technological abilities so far beyond ours (having had an industrial-technological civilization that has been in existence five times longer than ours) that we would not be in any sense their peer. And they would have already visited us. If we set the parameters of temporal radius from the present at ten thousand years, or a hundred thousand years, we are much more likely to find life on other worlds, but the further from our present level of development, the less likely any life found would be recognizable as a peer civilization.

How would we visit other worlds directly? With the breakthrough technology of a 1G starship (i.e., a starship that can accelerate or decelerate at a constant one gravity) [2], all of the waiting to discover the universe and what lies beyond virtually disappears for those willing to make the journey. And while I have called this 1G starship a “breakthrough technology,” it is not likely to happen all at once in a breakthrough, but will probably take decades (if not centuries) of development. Our first interstellar probes, Voyager 1 and Voyager 2, are already headed to the stars [3]. It would take tens of thousands of years for the Voyager probes to arrive at another solar system, should they survive so long. Incremental improvements even in known propulsion technologies will yield gradually more efficient and effective interstellar travel (and will not require any violations of the laws of physics). While we don’t yet have full breakeven in inertial confinement fusion [4], we can in fact achieve inertial confinement fusion at an energy loss, meaning that an inertial confinement fusion starship drive is nearly within the capability of present technology. All of this leaves aside the possibility of breakthrough technologies that would be game-changers (such as the Alcubierre drive).

If we assume that a peer species would emerge from an Earth twin, we can assume that such a peer species would be subject to roughly similar gravitational limitations, so that an ETI 1G starship would be something similar in terms of velocity. Human beings or a peer ETI species, while unable to engage in any but the most limited EM spectrum communications over galactic distances, would find the galaxy opened up to them by a 1G starship, able to explore the farthest reaches of the universe within the ordinary biological lifetime of intelligent life forms even as we know such life forms today (i.e., ourselves), limited to a mere three score and ten, or maybe a bit more.

I have mentioned inertial confinement fusion above as a possible starship propulsion system, but this example is not necessary to my argument. If there existed only a single propulsion proposal for interstellar travel, and all our hopes for such travel rested on an unknown science and an unknown technology, we would have good reason to be skeptical that interstellar travel would ever be possible under any circumstances. This, however, is not the case. There is a wide variety of potential interstellar propulsion technologies, including inertial confinement fusion, matter-antimatter, quantum vacuum thrusters, and other even more exotic ideas. As long as industrial-technological civilization continues its development, some advanced propulsion idea is likely to prove successful, if only marginally so, but marginally will be enough for the first pioneers who are willing to sacrifice all for the chance at a new world.

It is humbling that we know so little about these technologies and the science that underlies them that we are not today in a position to say which among these might prove to be robust and durable drives for a starship, but the very fact that we know so little implies that we have much to learn and we cannot yet exclude any of these exotic starship drive possibilities, much less dismiss them as impossible. While no one has yet produced a proof of concept of any of these proposed forms of propulsion, it is also the case that no one has yet falsified the science upon which they are based.

Even the most successful of the drives mentioned above (with the exception of the Alcubierre drive) will involve time dilation as a condition of interstellar travel. There has been a tendency to view time dilation as a cosmic “fun spoiler” that prevents us seeing the universe on our own terms, since the elapsed time on one’s home world means that no one can return to the world that they left. We need to get beyond this limiting idea and come to see time dilation as a resource that will allow us to travel throughout the galaxy. It is true that time dilation is a limitation, but it is also an opportunity. As Carl Sagan noted:

“Relativity does set limits on what humans can ultimately do. But the universe is not required to be in perfect harmony with human ambition. Special relativity removes from our grasp one way of reaching the stars, the ship that can go faster than light. Tantalizingly, it suggests another and quite unexpected method.” [5]

Human ambition, as Sagan suggests, wants interstellar travel without the price exacted by time dilation, but the universe is not going to accommodate this particular ambition. We have had to reconcile ourselves with the fact that historical transmission is a unidirectional process. We can read Shakespeare, but we cannot talk to Shakespeare. Shakespeare’s contemporary Queen Elizabeth could make it known that she wanted to see Falstaff in love, and “The Merry Wives of Windsor” resulted, but we cannot approach the Bard to write the perfect comedy of manners in which smart phones and text messages figure in the plot.

Just so, we are all unidirectional time travelers, and the eventual development of travel at relativistic velocities will not give us the ability to travel backward in time, nor will it allow us to travel to the stars without giving thought to the inertial frame of reference of our homeworld, but it will give us more alternatives for going forward in time. We will have the opportunity to choose between an inertial framework at rest (presumably relative to our homeworld), and some accelerated inertial framework in which time passes more slowly, allowing us to travel farther and, incidentally, to see more of the universe. Even if we can never go back, we can always go forward. Relativistic interstellar travel will mean that we have a choice as to how rapidly we move forward in time. The possibility of always going farther forward in time has consequences for existential risk mitigation that I will discuss in my next Centauri Dreams post.

Notes

[1] Cf. Viktor Frankl, Man’s Search for Meaning

[2] Carl Sagan discussed the 1G starship in his Cosmos, Chapter VIII, “Travels in Space and Time”; I quote from this same chapter below.

[3] As of this writing, Voyager 1 has passed into interstellar space, while Voyager 2 has not yet emerged from the heliosheath and into interstellar space.

[4] A near breakeven in inertial confinement fusion was recently achieved, in which the reaction produced more energy than was absorbed by the fuel, but this is not the same as producing as much energy from the fusion reaction as was pumped into the lasers making the reaction happen. (Cf. Nuclear fusion milestone passed at US lab.)

[5] Carl Sagan, Cosmos, Chapter VIII, “Travels in Space and Time” (cf. note [2] above)

December 5, 2013

The Winds of Deep Space

If we can use solar photons to drive a sail, and perhaps use their momentum to stabilize a threatened observatory like Kepler, what about that other great push from the Sun, the solar wind? Unlike the stream of massless photons that exert a minute but cumulative push on a surface like a sail, the solar wind is a stream of charged particles moving at speeds of 500 kilometers per second and more, a flow that has captured the interest of those hoping to create a magnetic sail to ride it. A ‘magsail’ interacts with the solar wind’s plasma. The sailing metaphor remains, but solar sails and magsails get their push from fundamentally different processes.

Create a magnetic field around your spacecraft and interesting things begin to happen. Those electrons and positively charged ions flowing from the Sun experience a force as they move through the field, one that varies depending on the direction the particles are moving with respect to the field. The magsail is then subjected to an opposing force, producing acceleration. The magsail concept envisions large superconducting wire loops that produce a strong magnetic field when current flows through them, taking advantage of the solar wind’s ‘push.’

A magsail sounds like a natural way to get to the outer Solar System or beyond, but the solar wind introduces problems that compromise it. One is that it’s a variable wind indeed, weakening and regaining strength, and although I cited 500 kilometers per second in the introductory paragraph, the solar wind can vary anywhere from 350 to 800 kilometers per second. An inconstant wind raises questions of spacecraft control, an issue Gregory Matloff, Les Johnson and Giovanni Vulpetti are careful to note in their 2008 title Solar Sails: A Novel Approach to Interplanetary Travel (Copernicus, 2008). Here’s the relevant passage:

While technically interesting and somewhat elegant, magsails have significant disadvantages when compared to solar sails. First of all, we don’t (yet) have the materials required to build them. Second, the solar wind is neither constant nor uniform. Combining the spurious nature of the solar wind flux with the fact that controlled reflection of solar wind ions is a technique we have not yet mastered, the notion of sailing in this manner becomes akin to tossing a bottle into the surf at high tide, hoping the currents will carry the bottle to where you want it to go.

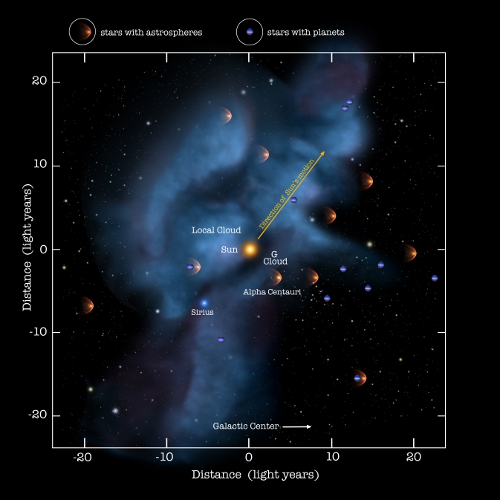

Interstellar Tradewinds and the Local Cloud

We have much to learn about the solar wind, but missions like Ulysses and the Advanced Composition Explorer have helped us understand its weakenings and strengthenings and their effect upon the boundaries of the heliosphere, that vast bubble whose size depends upon the strength of the solar wind and the pressures exerted by interstellar space. For we’re not just talking about a wind from the Sun. Particles are also streaming into the Solar System from outside, and data from four decades and eleven different spacecraft have given us a better idea of how these interactions work.

A paper from Priscilla Frisch (University of Chicago) and colleagues notes that the heliosphere itself is located near the inside edge of an interstellar cloud, with the two in motion past each other at some 22 kilometers per second. The result is an interstellar ‘wind,’ says Frisch:

“Because the sun is moving through this cloud, interstellar atoms penetrate into the solar system. The charged particles in the interstellar wind don’t do a good job of reaching the inner solar system, but many of the atoms in the wind are neutral. These can penetrate close to Earth and can be measured.”

Image: The solar system moves through a local galactic cloud at a speed of 50,000 miles per hour, creating an interstellar wind of particles, some of which can travel all the way toward Earth to provide information about our neighborhood. Credit: NASA/Adler/U. Chicago/Wesleyan.

We’re learning that the interstellar wind has been changing direction over the years. Data on the matter go back to the 1970s, and this NASA news release mentions the U.S. Department of Defense’s Space Test Program 72-1 and SOLRAD 11B, NASA’s Mariner, and the Soviet Prognoz 6 as sources of information. We also have datasets from Ulysses, IBEX (Interstellar Boundary Explorer), STEREO (Solar Terrestrial Relations Observatory), Japan’s Nozomi observatory and others including the MESSENGER mission now in orbit around Mercury.

Usefully, we’re looking at data gathered using different methods, but the flow of neutral helium atoms is apparent with each, and the cumulative picture is clear: The direction of the interstellar wind has changed by some 4 to 9 degrees over the past forty years. The idea of the interstellar medium as a constant gives way to a dynamic, interactive area that varies as the heliosphere moves through it. What we don’t know yet is why these changes occur when they do, but our local interstellar cloud may experience a turbulence of its own that affects our neighborhood.

The interstellar winds show us a kind of galactic turbulence that can inform us not only about the local interstellar medium but the lesser known features of our own heliosphere. Ultimately we may learn how to harness stellar winds, perhaps using advanced forms of magnetic sails to act as brakes when future probes enter a destination planetary system. As with solar sails, magsails give us the possibility of accelerating or decelerating without carrying huge stores of propellant, an enticing prospect indeed as we sort through how these winds blow.

The paper is Frisch et al., “Decades-Long Changes of the Interstellar Wind Through Our Solar System,” Science Vol. 341, No. 6150 (2013), pp. 1080-1082 (abstract)

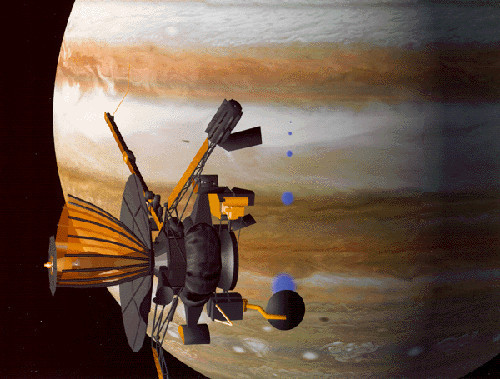

December 4, 2013

Can Kepler be Revived?

Never give up on a spacecraft. That seems to be the lesson Kepler is teaching us, though it’s one we should have learned by now anyway. One outstanding example of working with what you’ve got is the Galileo mission, which had to adjust to the failure of its high-gain antenna. The spacecraft’s low-gain antenna came to the rescue, aided by data compression techniques that raised its effective data rate, and sensitivity upgrades to the listening receivers on Earth. Galileo achieved 70 percent of its science goals despite a failure that had appeared catastrophic, and much of what we’ve learned about Europa and the other Galilean satellites comes from it.

Image: Galileo at Jupiter, still functioning despite the incomplete deployment of its high gain antenna (visible on the left side of the spacecraft). The blue dots represent transmissions from Galileo’s atmospheric probe. Credit: NASA/JPL.

Can we tease more data out of Kepler? The problem has been that two of its four reaction wheels, which function like gyroscopes to keep the spacecraft precisely pointed, have failed. Kepler needs three functioning wheels to maintain its pointing accuracy because it is constantly being bathed in solar photons that can alter its orientation. But mission scientists and Ball Aerospace engineers have been trying to use that same issue — solar photons and the momentum they impart — to come up with a mission plan that can still operate.

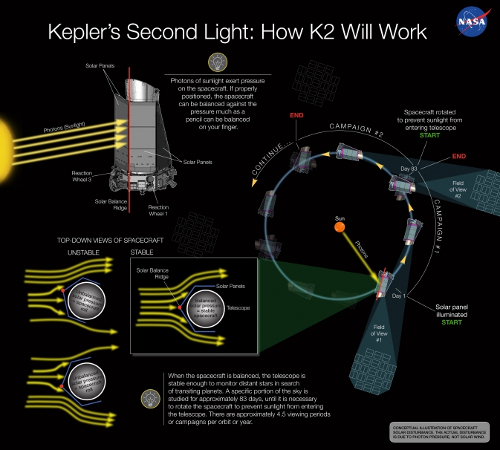

You can see the result in the image below — be sure to click to enlarge it for readability. By changing Kepler’s orientation so that the spacecraft is nearly parallel to its orbital path around the Sun, mission controllers hope to keep the sunlight striking its solar panels symmetric across its long axis. We may not have that third reaction wheel, but we do have the possibility of this constant force acting as a surrogate. Re-orientation of the probe four times during its orbit will be necessary to keep the Sun out of its field of view. And the original field of view in the constellations of Cygnus, Lyra and Draco gives way to new regions of the sky.

Testing these methods, mission scientists have been able to collect data from a distant star field of a quality within five percent of the primary mission image standards. That’s a promising result and an ingenious use of the same photon-imparted momentum that’s used in the design of solar sails like JAXA’s IKAROS and the upcoming Sunjammer sail from NASA. We now continue with testing to find out whether this method will work not just for hours but for days and weeks.

Image (click to enlarge): This concept illustration depicts how solar pressure can be used to balance NASA’s Kepler spacecraft, keeping the telescope stable enough to continue searching for transiting planets around distant stars. Credit: NASA Ames/W Stenzel.

If a decision is made to proceed, the revised mission concept, called K2, will need to make it through the 2014 Senior Review, in which operating missions are assessed. We should expect further news by the end of 2013 even as data from the original mission continue to be analyzed.

All of this may take you back to the Mariner 10 mission to Mercury in 1973, itself plagued by problems. Like Galileo, its high-gain antenna malfunctioned early in the flight, although it would later come back to life, and like Kepler, Mariner 10 proved difficult to stabilize. Problems in its star tracker caused the spacecraft to roll, costing it critical attitude control gas as it tried to stabilize itself. The guidance and control team at the Jet Propulsion Laboratory was able to adjust the orientation of Mariner 10’s solar panels, testing various tilt angles to counter the spacecraft’s roll. It was an early demonstration of the forces at work in solar sails.

We can hope that ingenuity and judicious use of solar photons can also bring Kepler back to life in an extended mission no one would have conceived when the spacecraft was designed. What we’ll wind up with is about 4.5 viewing periods (‘campaigns’) per orbit of the Sun, each with its own field of view and the capability of studying it for approximately 83 days. As the diagram shows, the proper positioning of the spacecraft to keep sunlight balanced on its solar panels is crucial. It’s a tricky challenge but one that could provide new discoveries ahead.

Addendum, from a news release just issued by NASA:

Based on an independent science and technical review of the Kepler project’s concept for a Kepler two-wheel mission extension, Paul Hertz, NASA’s Astrophysics Division director, has decided to invite Kepler to the Senior Review for astrophysics operating missions in early 2014.

The Kepler team’s proposal, dubbed K2, demonstrated a clever and feasible methodology for accurately controlling the Kepler spacecraft at the level of precision required for scientifically valuable data collection. The team must now further validate the concept and submit a Senior Review proposal that requests the funding necessary to continue the Kepler mission, with sufficient scientific justification to make it a viable option for the use of NASA’s limited resources.

To be clear, this is not a decision to continue operating the Kepler spacecraft or to conduct a two-wheel extended mission; it is merely an opportunity to write another proposal and compete against the Astrophysics Division’s other projects for the limited funding available for astrophysics operating missions.

December 3, 2013

Putting the Solar System in Context

Yesterday I mentioned that we don’t know yet where New Horizons will ultimately end up on a map of the night sky like the ones used in a recent IEEE Spectrum article to illustrate the journeys of the Voyagers and Pioneers. We’ll know more once future encounters with Kuiper Belt objects are taken into account. But the thought of New Horizons reminds me that Jon Lomberg will be talking about the New Horizons Message Initiative, as well as the Galaxy Garden he has created in Hawaii, today at the Arthur C. Clarke Center at UC San Diego. The talk will be streamed live at: http://calit2.net/webcasting/jwplayer/index.php, with the webcast slated to begin at approximately 2045 EST, or 0145 UTC.

While both the Voyagers and the Pioneers carried artifacts representing humanity, New Horizons may have its message uploaded to the spacecraft’s memory, its collected images and perhaps sounds ‘crowdsourced’ from people around the world after the spacecraft’s encounter with Pluto/Charon. That, at least, is the plan, but we need your signature on the New Horizons petition to make it happen. The first 10,000 to sign will have their names uploaded to the spacecraft, assuming all goes well and NASA approval is forthcoming. Please help by signing. In backing the New Horizons Message Initiative, principal investigator Alan Stern has said that it will “inspire and engage people to think about SETI and New Horizons in new ways.”

Artifacts, whether in computer memory or physical form like Voyager’s Golden Record, are really about how we see ourselves and our place in the universe. On that score, it’s heartening to see the kind of article I talked about yesterday in IEEE Spectrum, discussing where our probes are heading. When the Voyagers finished their planetary flybys, many people thought their missions were over. But even beyond their continued delivery of data as they cross the heliopause, the Voyagers are now awakening a larger interest in what lies beyond the Solar System. Even if they take tens of thousands of years to come remotely close to another star, the fact is that they are still traveling, and we’re seeing our system in this broader context.

The primary Alpha Centauri stars — Centauri A and B — are about 4.35 light years away. Proxima Centauri is actually a bit closer, at 4.22 light years. It’s easy enough to work out, using Voyager’s 17.3 kilometers per second velocity, that it would take over 73,000 years to travel the 4.22 light years that separate us from Proxima, but as we saw yesterday, we have to do more than take distance into account. Motion is significant, and the Alpha Centauri stars (I am assuming Proxima Centauri is gravitationally bound to A and B, which seems likely) are moving with a mean radial velocity of 25.1 ± 0.3 km/s towards the Solar System.

We’re talking about long time frames, to be sure. In about 28,000 years, having moved into the constellation Hydra as seen from Earth, Alpha Centauri will close to 3.26 light years of the Solar System before beginning to move away. So while we can say that Voyager 1 would take 73,000 years to cross the 4.22 light years that currently separate us from Proxima Centauri, the question of how long it would take Voyager 1 to get to Alpha Centauri given the relative motion of each remains to be solved. I leave this exercise to those more mathematically inclined than myself, but hope one or more readers will share their results in the comments.

Image: A Hubble image of Proxima Centauri taken with the observatory’s Wide Field and Planetary Camera 2. Centauri A and B are out of the frame. Credit: ESA/Hubble & NASA.

We saw yesterday that both Voyagers are moving toward stars that are moving in our direction, Voyager 1 toward Gliese 445 and Voyager 2 toward Ross 248. When travel times are in the tens of thousands of years, it helps to be moving toward something that is coming even faster towards you, which is why Voyager 1 closes to 1.6 light years of Gl 445 in 40,000 years. But these are hardly the only stars moving in our direction. Barnard’s Star, which shows the largest known proper motion of any star relative to the Solar System, is approaching at around 140 kilometers per second. Its closest approach should be around 9800 AD, when it will close to 3.75 light years. By then, of course, Alpha Centauri will have moved even closer to the Sun.

When we talk about interstellar probes, we’re obviously hoping to move a good deal faster, but it’s interesting to realize that our motion through the galaxy sets up a wide variety of stellar encounters. Epsilon Indi, currently some 11.8 light years away, is moving at about 90 kilometers per second relative to the Sun, and will close to 10.6 light years in about 17,000 years, a distance roughly similar to Tau Ceti’s as it will be in the sky of 43,000 years from now.

And as I learned from Erik Anderson’s splendid Vistas of Many Worlds, the star Gliese 710 is one of the most interesting in terms of close encounters. It’s currently 64 light years away in the constellation Serpens, but give it 1.4 million years and Gl 710 will move within 50,000 AU. That’s clearly in our wheelhouse, for 50,000 AU is the realm of the Oort Cloud comets, and we can only imagine what effects the passage of a star this close to the Sun will have on disturbing the cometary cloud. If humans are around this far in the future, Gl 710 will give us an interstellar destination right on our doorstep as it swings by on its galactic journey.

December 2, 2013

The Stars in their Courses

Here’s hoping Centauri Dreams readers in the States enjoyed a restful Thanksgiving holiday, though with travel problems being what they are, I often find holidays can turn into high-stress drama unless spent at home. Fortunately, I was able to do that and, in addition to a wonderful meal with my daughter’s family, spent the rest of the time on long-neglected odds and ends, like switching to a new Linux distribution (Mint 16 RC) and fine-tuning it as the platform from within which I run this site and do other work (I’ve run various Linux flavors for years and always enjoy trying out the latest release of a new version).

Leafing through incoming tweets over the weekend, I ran across a link to Stephen Cass’ article in IEEE Spectrum on Plotting the Destinations of 4 Interstellar Probes. We always want to know where things are going, and I can remember digging up this information with a sense of awe when working on my Centauri Dreams book back around 2002-2003. After all, the Voyagers and the Pioneers we’ve sent on their journeys aren’t going to be coming back, but represent the first spacecraft we’ve sent on interstellar trajectories.

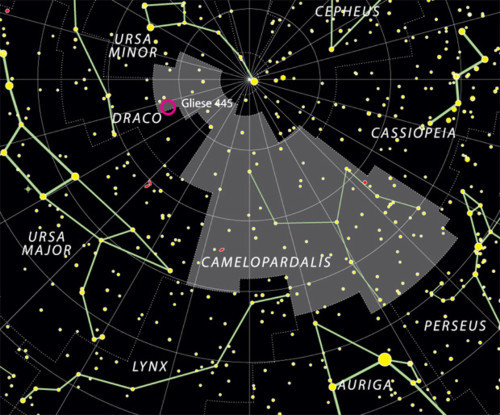

And if the Pioneers have fallen silent, we do have the two Voyagers still sending back data, as they will for another decade or so, giving us a first look at the nearby interstellar medium. For true star traveling, of course, even these doughty probes will fall silent at the very beginning of their journeys. Voyager 1 is headed on a course that takes it in the rough direction of the star AC+79 3888 (Gliese 445), a red dwarf 17.6 light years away in the constellation Camelopardalis. Here’s the star chart the IEEE Spectrum ran to show Voyager 1’s path — the article offers charts for the other interstellar destinations as well, but I’ll send you directly to it for those.

Notice anything interesting about Voyager 1? The IEEE Spectrum article says the spacecraft will pass within 1.6 light years of the star in 40,000 years, and indeed, this is a figure you can find mentioned in two authoritative papers: Rudd et al., “The Voyager Interstellar Mission” (Acta Astronautica 40, pp. 383-396) and Cesarone et al., “Prospects for the Voyager Extra-Planetary and Interstellar Mission” (JBIS 36, pp. 99-116). NASA used the 40,000 year figure as recently as September, when Voyager project manager Suzanne Dodd (JPL) spoke of the star to Space.com: “Voyager’s on its way to a close approach with it in about 40,000 years.” I used the figure as well in my Centauri Dreams book.

But wait: I’ve often cited how long it would take Voyager 1 to get to Alpha Centauri if it happened to be aimed in that direction. The answer, well over 70,000 years, is obviously much longer than the 40,000 years NASA is citing to get to the neighborhood of Gliese 445. How can this be? After all, Voyager 1 is moving at 17 kilometers per second, and should take 17,600 years to travel one light year. In 40,000 years, it should be a little over 2 light years from Earth.
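The arithmetic behind this puzzle is easy to check. The short Python sketch below is only an illustration: it assumes a constant speed of 17 kilometers per second, a light year of about 9.4607 × 10^12 kilometers and a 365.25-day year, and the function names are made up for this example.

```python
# Rough check of the travel-time figures quoted above.
# Assumptions (not from the article): constant speed, Julian year,
# and a light year of 9.4607e12 km.
KM_PER_LIGHT_YEAR = 9.4607e12
SECONDS_PER_YEAR = 365.25 * 86400


def years_per_light_year(speed_km_s):
    """Years needed to cover one light year at a constant speed."""
    return KM_PER_LIGHT_YEAR / speed_km_s / SECONDS_PER_YEAR


def light_years_covered(speed_km_s, years):
    """Distance in light years covered in the given number of years."""
    return years / years_per_light_year(speed_km_s)


voyager_speed = 17.0  # km/s, the rounded figure used in the text

print("years per light year:", round(years_per_light_year(voyager_speed)))
print("light years in 40,000 years:",
      round(light_years_covered(voyager_speed, 40_000), 2))
# Alpha Centauri A and B at 4.35 light years, ignoring the stars' own motion
print("years to cover 4.35 light years:",
      round(4.35 * years_per_light_year(voyager_speed)))
```

The first two results land near the 17,600 years and the "little over 2 light years" quoted above, and the third is well over 70,000 years, so the spacecraft's own speed cannot account for a 1.6 light year approach to a star 17.6 light years away.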

I’m drawing these numbers from Voyager 1 and Gliese 445, a fascinating piece that ran in the Math Encounters Blog in September of this year. The author, listed as ‘an engineer trying to figure out how the world works,’ became interested in Voyager 1’s journey and decided to work out the math. It turns out NASA is right because the star Gliese 445 is itself moving toward the Solar System at a considerable clip, about 119 kilometers per second. Gliese 445 should be making its closest approach to the Solar System approximately 46,000 years from now, closing to within 3.485 light years, a good deal closer than the Alpha Centauri stars are today.

Never forget, in other words, that we’re dealing with a galaxy in constant motion! Voyager 1’s sister craft makes the same point. It’s headed toward the red dwarf Ross 248, 10 light years away but also moving briskly in our direction. In fact, within 36,000 years, the star should have approached within 3.024 light years, much closer indeed than Proxima Centauri. Voyager 2, headed outbound at roughly 15 kilometers per second, thus closes on Ross 248 in about the same time as Voyager 1 nears Gliese 445, 40,000 years. Voyager 2 then presses on in the direction of Sirius, but 256,000 years will have gone by before it makes its closest approach.

As for the Pioneers, Pioneer 10 is moving in the direction of Aldebaran, some 68 light years out, and will make its closest approach in two million years, while Pioneer 11 heads toward Lambda Aquilae, an infant star (160 million years old) some 125 light years away, approaching it four million years from now. We could throw New Horizons into the mix, but remember that after the Pluto/Charon encounter, mission controllers hope to re-direct the craft toward one or more Kuiper Belt objects, so we don’t know exactly where its final course will take it. Doubtless I’ll be able to update this article with a final New Horizons trajectory a few years from now. Until then, be sure to check out the IEEE star charts for a graphic look at these distant destinations.

November 27, 2013

Is Energy a Key to Interstellar Communication?

I first ran across David Messerschmitt’s work in his paper “Interstellar Communication: The Case for Spread Spectrum,” and was delighted to meet him in person at Starship Congress in Dallas last summer. Dr. Messerschmitt has been working on communications methods designed for interstellar distances for some time now, with results that are changing the paradigm for how such signals would be transmitted, and hence what SETI scientists should be looking for. At the SETI Institute he is proposing the expansion of the types of signals being searched for in the new Allen Telescope Array. His rich discussion on these matters follows.

By way of background, Messerschmitt is the Roger A. Strauch Professor Emeritus of Electrical Engineering and Computer Sciences at the University of California at Berkeley. For the past five years he has collaborated with the SETI Institute and other SETI researchers in the study of the new domain of “broadband SETI”, hoping to influence the direction of SETI observation programs as well as future METI transmission efforts. He is the co-author of Software Ecosystem: Understanding an Indispensable Technology and Industry (MIT Press, 2003), author of Understanding Networked Applications (Morgan Kaufmann, 1999), and co-author of a widely used textbook Digital Communications (Kluwer, 1993). Prior to 1977 he was with AT&T Bell Laboratories as a researcher in digital communications. He is a Fellow of the IEEE, a Member of the National Academy of Engineering, and a recipient of the IEEE Alexander Graham Bell Medal recognizing “exceptional contributions to the advancement of communication sciences and engineering.”

by David G. Messerschmitt

We all know that generating sufficient energy is a key to interstellar travel. Could energy also be a key to successful interstellar communication?

One manifestation of the Fermi paradox is our lack of success in detecting artificial signals originating outside our solar system, despite five decades of SETI observations at radio wavelengths. This could be because our search is incomplete, or because such signals do not exist, or because we haven’t looked for the right kind of signal. Here we explore the third possibility.

A small (but enthusiastic and growing) cadre of researchers is proposing that energy may be the key to unlocking new signal structures more appropriate for interstellar communication, yet not visible to current and past searches. Terrestrial communication may be a poor example for interstellar communication, because it emphasizes minimization of bandwidth at the expense of greater radiated energy. This prioritization is due to an artificial scarcity of spectrum created by regulatory authorities, who divide the spectrum among various uses. If interstellar communication were to reverse these priorities, then the resulting signals would be very different from the familiar signals we have been searching for.

Starships vs. civilizations

There are two distinct applications of interstellar communication: communication with starships and communication with extraterrestrial civilizations. These two applications invoke very different requirements, and thus should be addressed independently.

Starship communication. Starship communication will be two-way, and the two ends can be designed as a unit. We will communicate control information to a starship, and return performance parameters and scientific data. Effectiveness in the control function is enhanced if the round-trip delay is minimized. The only parameter of this round-trip delay over which we have influence is the time it takes to transmit and receive each message, and our only handle to reduce this is a higher information rate. High information rates also allow more scientific information to be collected and returned to Earth. The accuracy of control and the integrity of scientific data demand reliability, or a low error rate.

Communication with a civilization. In our preliminary phase where we are not even sure other civilizations exist, communication with a civilization (or they with us) will be one way, and the transmitter and receiver must be designed independently. This lack of coordination in design is a difficult challenge. It also implies that discovery of the signal by a receiver, absent any prior information about its structure, is a critical issue.

We (or they) are likely to carefully compose a message revealing something about our (or their) culture and state of knowledge. Composition of such a message should be a careful deliberative process, and changes to that message will probably occur infrequently, on timeframes of years or decades. Because we (or they) don’t know when and where such a message will be received, we (or they) are forced to transmit the message repeatedly. In this case, reliable reception (low error rate) for each instance of the message need not be a requirement because the receiving civilization can monitor multiple repetitions and stitch them together over time to recover a reliable rendition. In one-way communication, there is no possibility of eliminating errors entirely, but very low rates of error can be achieved. For example, if an average of one out of a thousand bits is in error for a single reception, after observing and combining five (seven) replicas of a message only one out of 100 megabits (28 gigabits) will still be in error.
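One simple way to reproduce the figures in this example is a bitwise majority vote across the replicas. The sketch below assumes that combining rule, and also assumes that bit errors are independent from one replica to the next; neither assumption is stated in the text, and the function name is invented for the illustration.

```python
from math import comb


def majority_vote_error(p, replicas):
    """Probability a bit is still wrong after a bitwise majority vote.

    Assumes an odd number of independent replicas, each of which has
    the bit wrong with probability p.
    """
    needed = replicas // 2 + 1  # wrong copies required to outvote the right ones
    return sum(
        comb(replicas, k) * p**k * (1 - p) ** (replicas - k)
        for k in range(needed, replicas + 1)
    )


for n in (5, 7):
    residual = majority_vote_error(1e-3, n)
    print(f"{n} replicas: about one bit in {1 / residual:.3g} still in error")
```

With a single-reception error rate of one in a thousand, the residual rates come out close to one in 100 megabits for five replicas and one in 28 gigabits for seven, matching the numbers above.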

Message transmission time is also not critical. Even after two-way communication is established, transmission time won’t be a big component of the round-trip delay in comparison to large one-way propagation delays. For example, at a rate of one bit per second, we can transmit 40 megabytes of message data per decade, and a decade is not particularly significant in the context of a delay of centuries or millennia required for speed-of-light propagation alone.
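The per-decade figure follows directly from the number of seconds in ten years. A minimal check, assuming a 365.25-day year and decimal megabytes (10^6 bytes), with a function name chosen only for this example:

```python
SECONDS_PER_YEAR = 365.25 * 86400  # assumed Julian year


def megabytes_sent(bits_per_second, years):
    """Decimal megabytes transmitted at a constant bit rate."""
    bits = bits_per_second * years * SECONDS_PER_YEAR
    return bits / 8 / 1e6


print(round(megabytes_sent(1, 10), 1), "megabytes per decade at 1 bit/s")
```

The result is a little under 40 megabytes.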

At interstellar distances of hundreds or thousands of light years, there are additional impairments to overcome at radio wavelengths, in the form of interstellar dispersion and scattering due to clouds of partially ionized gases. Fortunately these impairments have been discovered and “reverse engineered” by pulsar astronomers and astrophysicists, so that we can design our signals taking these impairments into account, even though there is no possibility of experimentation.

Propagation losses are proportional to distance-squared, so large antennas and/or large radiated energies are necessary to deliver sufficient signal flux at the receiver. This places energy as a considerable economic factor, manifested either in the cost of massive antennas or in energy utility costs.

The remainder of this article addresses communication with civilizations rather than starships.

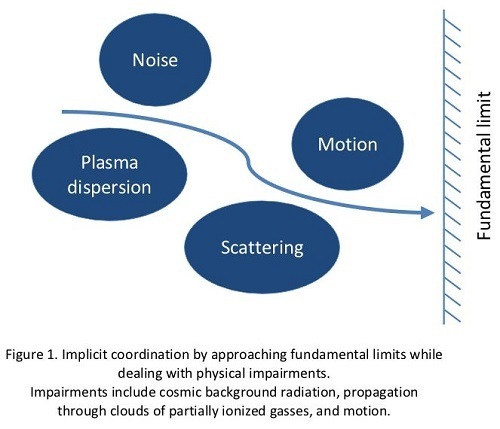

Compatibility without coordination

Even though one civilization is designing a transmitter and the other a receiver, the only hope of compatibility is for each to design an end-to-end system. That way, each fully contemplates and accounts for the challenges of the other. Even then there remains a lot of design freedom and a world (and maybe a galaxy) full of clever ideas, with many possibilities. I believe there is no hope of finding common ground unless a) we (and they) keep things very simple, b) we (and they) fall back on fundamental principles, and c) we (and they) base the design on physical characteristics of the medium observable by both of us. This “implicit coordination” strategy is illustrated in Fig. 1. Let’s briefly review all three elements of this three-pronged strategy.

The simplicity argument is perhaps the most interesting. It postulates that complexity is an obstacle to finding common ground in the absence of coordination. Similar to Occam’s razor in philosophy, it can be stated as “the simplest design that meets the needs and requirements of interstellar communication is the best design”. Stated in a negative way, as designers we should avoid any gratuitous requirements that increase the complexity of the solution and fail to produce substantive advantage.

Regarding fundamental principles, thanks to some amazing theorems due to Claude Shannon in 1948, communications is blessed with mathematically provable fundamental limits on our ability to communicate. Those limits, as well as ways of approaching them, depend on the nature of impairments introduced in the physical environment. Since 1948, communications has been dominated by an unceasing effort to approach those fundamental limits, and with good success based on advancing technology and conceptual advances. If both the transmitter and receiver designers seek to approach fundamental limits, they will arrive at similar design principles even as they glean the performance advantages that result.

We also have to presume that other civilizations have observed the interstellar medium, and arrived at similar models of impairments to radio propagation originating there. As we will see, both the energy requirements and interstellar impairments are helpful, because they drastically narrow the characteristics of signals that make sense.

Prioritizing energy simplifies the design

Ordinarily it is notoriously difficult and complex to approach the Shannon limit, and that complexity would be the enemy of uncoordinated design. However, if we ask “limit with respect to what?”, there are two resources that govern the information rate that can be achieved and the reliability with which that information can be extracted from the signal. These are the bandwidth which is occupied by the signal and the “size” of the signal, usually quantified by its energy. Most complexity arises from forcing a limit on bandwidth. If any constraint on bandwidth is avoided, the solution becomes much simpler.

Harry Jones of NASA observed in a paper published in 1995 that there is a large window of microwave frequencies over which the interstellar medium and atmosphere are relatively transparent. Why not, Jones asked, make use of this wide bandwidth, assuming there are other benefits to be gained? In other words, we can argue that any bandwidth constraint is a gratuitous requirement in the context of interstellar communication. Removing that constraint does simplify the design. But another important benefit emphasized by Jones is reducing the signal energy that must be delivered to the receiver. At the altar of Occam’s razor, constraining bandwidth to be narrow causes harm (an increase in required signal energy) with no identifiable advantage. Peter Fridman of the Netherlands Institute for Radio Astronomy recently published a paper following up with a specific end-to-end characterization of the energy requirements using techniques similar to Jones’s proposal.

I would add to Jones’s argument that the information rates are likely to be low, which implies a small bandwidth to start with. For example, starting at one bit per second, the minimum bandwidth is about one Hz. A million-fold increase in bandwidth is still only a megahertz, which is tiny when compared to the available microwave window. Even a billion-fold increase should be quite feasible with our technology.

Why, you may be asking, does increasing bandwidth allow the delivered energy to be smaller? After all, a wide bandwidth allows more total noise into the receiver. The reason has to do with the geometry of higher dimensional Euclidean spaces, since permitting more bandwidth allows more degrees of freedom in the signal, and a higher dimensional space has a greater volume in which to position signals farther apart and thus less likely to be confused by noise. I suggest you use this example to motivate your kids to pay better attention in geometry class.

Another requirement that we have argued is gratuitous is high reliability in the extraction of information from the signal. Very low bit error rates can be achieved by error-control coding schemes, but these add considerable complexity and are unnecessary when the receiver has multiple replicas of a message to work with. Further, allowing higher error rates further reduces the energy requirement.

The minimum delivered energy

For a message, the absolute minimum energy that must be delivered to the receiver baseband processing while still recovering information from that signal can be inferred from the Shannon limit. The cosmic background noise is the ultimate limiting factor, after all other impairments are eliminated by technological means. In particular the minimum energy must be larger than the product of three factors: (1) the power spectral density of the cosmic background radiation, (2) the number of bits in the message, and (3) the natural logarithm of two.

Even at this lower limit, the energy requirements are substantial. For example, at a carrier frequency of 5 GHz at least eight photons must arrive at the receiver baseband processing for each bit of information. Between two Arecibo antennas with 100% efficiency at 1000 light years, this corresponds to a radiated energy of 0.4 watt-hours for each bit in our message, or 3.7 megawatt-hours per megabyte. To Earthlings today, this would create a utility bill of roughly $400 per megabyte. (This energy and cost scale quadratically with distance.) This doesn’t take into account various non-idealities (like antenna inefficiency, noise in the receiver, etc.) or any gap to the fundamental limit due to using practical modulation techniques. You can increase the energy by an order of magnitude or two for these effects. This energy and cost per message is multiplied by repeated transmission of the message in multiple directions simultaneously (perhaps thousands!), allowing that the transmitter may not know in advance where the message will be monitored. Pretty soon there will be real money involved, at least at our Earthly energy prices.
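The figures above can be roughly reproduced with a short calculation. This is a minimal sketch under assumptions that are not stated in the text: the noise power spectral density is approximated as Boltzmann's constant times a 2.725 K background temperature, the Arecibo dish is taken to be 305 m across with a perfectly efficient aperture, the link follows the ideal free-space (Friis) relation, and a megabyte is taken as 2^20 bytes. All function and constant names are illustrative.

```python
import math

K_BOLTZMANN = 1.380649e-23   # J/K
H_PLANCK = 6.62607015e-34    # J*s
C_LIGHT = 299_792_458.0      # m/s
LIGHT_YEAR_M = 9.4607e15     # metres per light year

T_CMB_K = 2.725              # assumed cosmic background temperature
ARECIBO_DIAMETER_M = 305.0   # assumed dish diameter


def min_energy_per_bit(temp_k=T_CMB_K):
    """Minimum received energy per bit, N0 * ln 2, taking N0 ~ k * T."""
    return K_BOLTZMANN * temp_k * math.log(2)


def photons_per_bit(freq_hz, temp_k=T_CMB_K):
    """Minimum received energy per bit expressed in photons of energy h * f."""
    return min_energy_per_bit(temp_k) / (H_PLANCK * freq_hz)


def radiated_energy_per_bit(freq_hz, distance_ly, diameter_m=ARECIBO_DIAMETER_M):
    """Radiated energy per bit between two identical ideal dishes.

    Uses the free-space relation
    received / radiated = A_tx * A_rx / (wavelength^2 * distance^2).
    """
    area = math.pi * (diameter_m / 2) ** 2
    wavelength = C_LIGHT / freq_hz
    distance_m = distance_ly * LIGHT_YEAR_M
    fraction_received = area * area / (wavelength ** 2 * distance_m ** 2)
    return min_energy_per_bit() / fraction_received


FREQ_HZ = 5e9
wh_per_bit = radiated_energy_per_bit(FREQ_HZ, 1000) / 3600
mwh_per_megabyte = wh_per_bit * 8 * 2**20 / 1e6

print(f"photons per bit:        {photons_per_bit(FREQ_HZ):.2f}")
print(f"watt-hours per bit:     {wh_per_bit:.2f}")
print(f"megawatt-hours per MB:  {mwh_per_megabyte:.2f}")
```

Under these assumptions the sketch gives just under eight photons per bit, a little over 0.4 watt-hours per bit, and close to 3.7 megawatt-hours per megabyte. Doubling the distance in `radiated_energy_per_bit` quadruples the result, which is the quadratic scaling noted above.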

Two aspects of the fundamental limit are worth noting. First, we didn’t mention bandwidth. In fact, the stated fundamental limit assumes that bandwidth is unconstrained. If we do constrain bandwidth and start to reduce it, then the requirement on delivered energy increases, and rapidly at that. Thus both simplicity and minimizing energy consumption or reducing antenna area at the transmitter are aligned with using a large bandwidth in relation to the information rate. Second, this minimum energy per message does not depend on the rate at which the message is transmitted and received. Reducing the transmission time for the message (by increasing the information rate) does not affect the total energy, but does increase the average power correspondingly. Thus there is an economic incentive to slow down the information rate and increase the message transmission time, which should be quite okay.
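Both observations can be illustrated numerically. The sketch below uses the textbook Shannon capacity of an ideal channel with additive white Gaussian noise, which is an assumption on my part and ignores scintillation; in that model the minimum energy per bit, relative to the noise density, is (2^η − 1)/η, where η is the information rate divided by the bandwidth. The function names are hypothetical.

```python
import math


def min_eb_over_n0(spectral_efficiency):
    """Minimum energy per bit over noise density at a given rate/bandwidth ratio.

    Ideal additive-white-Gaussian-noise channel: (2**eta - 1) / eta.
    expm1 keeps the result accurate when eta is very small.
    """
    eta = spectral_efficiency
    return math.expm1(eta * math.log(2)) / eta


def average_power(total_energy_j, transmission_time_s):
    """Average power needed to deliver a fixed message energy in a given time."""
    return total_energy_j / transmission_time_s


# Energy penalty relative to the unconstrained-bandwidth limit (ln 2).
for eta in (1e-6, 1.0, 4.0, 10.0):
    penalty = min_eb_over_n0(eta) / math.log(2)
    print(f"rate/bandwidth = {eta:g}: energy penalty x{penalty:.2f}")

# Same message energy, twice the transmission time: half the average power.
print(average_power(3600.0, 3600.0), average_power(3600.0, 7200.0))
```

As the ratio of rate to bandwidth shrinks toward zero the penalty approaches one, which is the unconstrained limit; squeezing the same rate into less bandwidth makes the penalty grow roughly exponentially.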

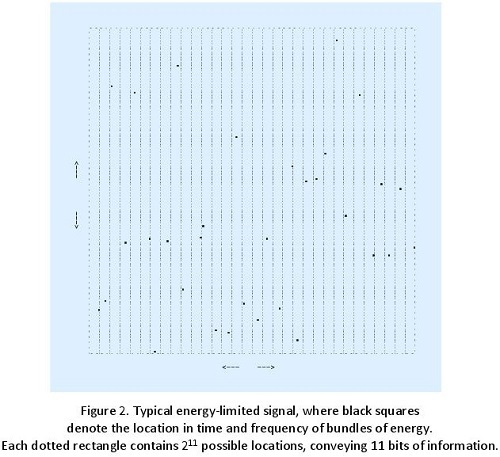

What do energy-limited signals actually look like?

A question of considerable importance is the degree to which we can or cannot infer enough characteristics of a signal to significantly constrain the design space. Combined with Occam’s razor and jointly observable physical effects, the structure of an energy-limited transmitted signal is narrowed considerably.

Based on models of the interstellar medium developed in pulsar astronomy, I have shown that there is an “interstellar coherence hole” consisting of an upper bound on the time duration and bandwidth of a waveform such that the waveform is for all practical purposes unaffected by these impairments. Further, I have shown that structuring a signal around simple on-off patterns of energy, where each “bundle” of energy is based on a waveform that falls within the interstellar coherence hole, does not compromise our ability to approach the fundamental limit. In this fashion, the transmit signal can be designed to completely circumvent impairments, without a compromise in energy. (This is the reason that the fundamental limit stated above is determined by noise, and noise alone.) Both the transmitter and receiver can observe the impairments and thereby arrive at similar estimates of the coherence hole parameters.

The interstellar medium and motion are not completely removed from the picture by this simple trick, because they still announce their presence through scintillation, which is a fluctuation of arriving signal flux similar to the twinkling of the stars (radio engineers call this same phenomenon “fading”). Fortunately we know of ways to counter scintillation without affecting the energy requirement, because it does not affect the average signal flux. The minimum energy required for reliable communication in the presence of noise and fading was established by Robert Kennedy of MIT (a professor sharing a name with a famous politician) in 1964. My recent contribution has been to extend his models and results to the interstellar case.

Signals designed to minimize delivered energy based on these energy bundles have a very different character from what we are accustomed to in terrestrial radio communication. This is an advantage in itself, because another big challenge I haven’t yet mentioned is confusion with artificial signals of terrestrial or near-space origin. This is less of a problem if the signals (local and interstellar) are quite distinctive.

A typical example of an energy-limited signal is illustrated in Fig. 2. The idea behind energy-limited communication is to embed information in the locations of energy bundles, rather than other (energy-wasting but bandwidth-conserving) parameters like magnitude or phase. In the example of Fig. 2, each rectangle includes 2048 locations where an energy bundle might occur (256 frequencies and 8 time locations), but an actual energy bundle arrives in only one of these locations. When the receiver observes this unique location, eleven bits of information have been conveyed from transmitter to receiver (because 2¹¹ = 2048). This location-based scheme is energy-efficient because a single energy bundle conveys eleven bits.
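The bookkeeping in this example can be sketched in a few lines. The particular mapping from an eleven-bit value to a time slot and frequency used here is a hypothetical choice for illustration; the text only specifies that there are 256 frequencies and 8 time locations per rectangle.

```python
NUM_FREQUENCIES = 256
NUM_TIME_SLOTS = 8
NUM_LOCATIONS = NUM_FREQUENCIES * NUM_TIME_SLOTS   # 2048 possible locations
BITS_PER_BUNDLE = NUM_LOCATIONS.bit_length() - 1   # 11, since 2**11 == 2048


def encode(value):
    """Map an 11-bit value to the (time_slot, frequency) of one energy bundle."""
    if not 0 <= value < NUM_LOCATIONS:
        raise ValueError("value must fit in 11 bits")
    return divmod(value, NUM_FREQUENCIES)


def decode(time_slot, frequency):
    """Recover the 11-bit value from the observed bundle location."""
    return time_slot * NUM_FREQUENCIES + frequency


time_slot, frequency = encode(0b10100000011)
print(time_slot, frequency, decode(time_slot, frequency))
```

The transmitter spends energy on exactly one bundle per rectangle, and the receiver reads off eleven bits simply by noting where that bundle landed.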

The singular characteristic of Fig. 2 is energy located in discrete but sparse locations in time and frequency. Each bundle has to be sufficiently energetic to overwhelm the noise at the receiver, so that its location can be detected reliably. This is pretty much how a lighthouse works: Discrete flashes of light are each energetic enough to overcome loss and noise, but they are sparse in time (in any one direction) to conserve energy. This is also how optical SETI is usually conceived, because optical designers usually don’t concern themselves with bandwidth either. Energy-limited radio communication thus resembles optical, except that the individual “pulses” of energy must be consciously chosen to avoid dispersive impairments at radio wavelengths.

This scheme (which is called frequency-division keying combined with pulse-position modulation) is extremely simple compared to the complicated bandwidth-limited designs we typically see terrestrially and in near space, and yet (as long as we don’t attempt to violate the minimum energy requirement) it can achieve an error probability approaching zero as the number of locations grows. (Some additional measures are needed to account for scintillation, although I won’t discuss this further.) We can’t do better than this in terms of the delivered energy, and neither can another civilization, no matter how advanced their technology. This scheme does consume voluminous bandwidth, especially as we attempt to approach the fundamental limit, and Ian S. Morrison of the Australian Centre for Astrobiology is actively looking for simple approaches to achieve similar ends with less bandwidth.

What do “they” know about energy-limited communication?

Our own psyche is blinded by bandwidth-limited communication based on our experience with terrestrial wireless. Some might reasonably argue that “they” must surely suffer the same myopic view and gravitate toward bandwidth conservation. I disagree, for several reasons.

Because energy-limited communication is simpler than bandwidth-limited communication, the basic design methodology was well understood much earlier, back in the 1950’s and 1960’s. It was the 1990’s before bandwidth-limited communication was equally well understood.

Have you ever wondered why the modulation techniques used in optical communications are usually so different from those used in radio? One of the main differences is this bandwidth- vs energy-limited issue. Bandwidth has never been considered a limiting resource at the shorter optical wavelengths, and thus minimizing energy rather than bandwidth has been emphasized. We have considerable practical experience with energy-limited communication, albeit mostly at optical wavelengths.

If another civilization has more plentiful and cheaper energy sources or a bigger budget than us, there are plenty of beneficial ways to consume more energy other than being deliberately inefficient. They could increase message length, or reduce the message transmission time, or transmit in more directions simultaneously, or transmit a signal that can be received at greater distances.

Based on our Earthly experience, it is reasonable to expect that both a transmitting and receiving civilization would be acutely aware of energy-limited communication and I expect that they would choose to exploit it for interstellar communication.

Discovery

Communication isn’t possible until the receiver discovers the signal in the first place. Discovery of an energy-limited signal as illustrated in Fig. 2 is easy in one respect, since the signal is sparse in both time and frequency (making it relatively easy to distinguish from natural phenomena as well as artificial signals of terrestrial origin) and individual energy bundles are energetic (making them easier to detect reliably). Discovery is hard in another respect, since due to that same sparsity we must be patient and conduct multiple observations in any particular range of frequencies to confidently rule out the presence of a signal with this character.
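The need for patience can be made concrete with a toy model. This is a sketch under a strong simplifying assumption that is not in the text: each observation independently has the same probability of coinciding with an energy bundle, if a signal is present at all. The value 1/8 used below is purely illustrative.

```python
import math


def prob_at_least_one_hit(p_per_observation, num_observations):
    """Chance that at least one of several independent observations catches a bundle."""
    return 1.0 - (1.0 - p_per_observation) ** num_observations


def observations_needed(p_per_observation, confidence):
    """Smallest number of observations that reaches the requested confidence."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_per_observation))


p = 1 / 8   # hypothetical chance that one observation overlaps a bundle
n = observations_needed(p, 0.99)
print(n, round(prob_at_least_one_hit(p, n), 4))
```

In this toy model a single empty observation says very little, while a few dozen empty observations of the same frequency range make the presence of such a signal quite unlikely.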

Criticisms of this approach

What are some possible shortcomings or criticisms of this approach? None of us have yet studied possible issues in design of a high-power radio transmitter generating a signal of this type. Some say that bandwidth does need to be conserved, for some reason such as interference with terrestrial services. Others say that we should expect a “beacon”, which is a signal designed to attract attention, but simplified because it carries no information. Others say that an extraterrestrial signal might be deliberately disguised to look identical to typical terrestrial signals (and hence emphasize narrow bandwidth rather than low energy) so that it might be discovered accidentally.

What do you think? In your comments to this post, the Centauri Dreams community can be helpful in critiquing and second guessing my assumptions and conclusions. If you want to delve further into this, I have posted a report at http://arxiv.org/abs/1305.4684 that includes references to the foundational work.

November 26, 2013

Moving Stars: The Shkadov Thruster

Although I didn’t write about the so-called ‘Shkadov thruster’ yesterday, it has been on my mind as one mega-engineering project that an advanced civilization might attempt. The most recent post was all about moving entire stars to travel the galaxy, with reference to Gregory Benford and Larry Niven’s Bowl of Heaven (Tor, 2012), where humans encounter an object that extends and modifies Shkadov’s ideas in mind-boggling ways. I also turned to a recent Keith Cooper article on Fritz Zwicky, who speculated on how inducing asymmetrical flares on the Sun could put the whole Solar System into new motion, putting our star under our directional control.

The physicist Leonid Shkadov described a Shkadov thruster in a 1987 paper called “Possibility of Controlling Solar System Motion in the Galaxy” (reference at the end). Imagine an enormous mirror constructed in space so as to reflect a fraction of the star’s radiation back toward it. You wind up with an asymmetrical force that exerts a thrust upon the star, one that Shkadov believed could move the star (with accompanying planets) out of harm’s way in the face of a danger such as a close approach by another star. Shkadov thrusters fall into the category of ‘stellar engines,’ devices that extract significant resources from the star in order to generate their effect.

Image: A Shkadov thruster as conceived by the artist Steve Bowers.

There are various forms of stellar engines that I’ll be writing about in future posts. But to learn more about the ideas of Leonid Shkadov, I turned to a recent paper by the always interesting Duncan Forgan (University of Edinburgh). Forgan points out that Shkadov thrusters are not in the same class as Dyson spheres, for the latter are spherical shells built so that radiation pressure from the star and the gravitational force on the sphere remain balanced, the purpose being to collect solar energy, with the additional benefit of providing vast amounts of living space.

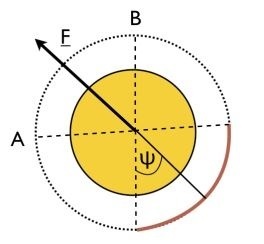

Where Shkadov thrusters do remind us of Dyson spheres, as Forgan notes, is in their need for huge amounts of construction material. The scale becomes apparent in his description, which is clarified in the diagram below:

A spherical arc mirror (of semi-angle ψ) is placed such that the radiation pressure force generated by the stellar radiation field on its surface is matched by the gravitational force of the star on the mirror. Radiation impinging on the mirror is reflected back towards the star, preventing it from escaping. This force imbalance produces a thrust…

Here I’m skipping some of the math, for which I’ll send you to the preprint. But here is his diagram of the Shkadov thruster:

Figure 1: Diagram of a Class A Stellar Engine, or Shkadov thruster. The star is viewed from the pole – the thruster is a spherical arc mirror (solid line), spanning a sector of total angular extent 2ψ. This produces an imbalance in the radiation pressure force produced by the star, resulting in a net thrust in the direction of the arrow.

Forgan goes on to discuss the effects of the thruster upon the star:

In reality, the reflected radiation will alter the thermal equilibrium of the star, raising its temperature and producing the above dependence on semi-angle. Increasing ψ increases the thrust, as expected, with the maximum thrust being generated at ψ = π radians. However, if the thruster is part of a multi-component megastructure that includes concentric Dyson spheres forming a thermal engine, having a large ψ can result in the concentric spheres possessing poorer thermal efficiency.

The sheer size of Dyson spheres, Shkadov thrusters and other stellar engines inevitably makes us think about such constructions in the context of SETI, and whether we might be able to pick up the signature of such an object by looking at exoplanet transits. Richard Carrigan is among those who have conducted searches for Dyson spheres (see Archaeology on an Interstellar Scale), but Forgan thinks a Shkadov thruster should also be detectable. For the light curve produced by an exoplanet during transit would show particular characteristics if a Shkadov thruster were near the star, a signature that could be untangled by follow-up radial velocity measurements.