Paul Gilster's Blog

January 14, 2014

Electric Sails: Fast Probe to Uranus

For years now Pekka Janhunen has been working on his concept of an electric sail with the same intensity that Claudio Maccone has brought to the gravitational focus mission called FOCAL. Both men are engaging advocates of their ideas, and having just had a good conversation with Dr. Maccone (by phone, unfortunately, as I’ve been down with the flu), I was pleased to see Dr. Janhunen’s electric sail pop up again in online discussions. It turns out that the physicist has been envisioning a sail mission to an unusual target.

Let’s talk a bit about the mission an electric sail enables. This is a solar wind-rider, taking advantage not of the momentum imparted by photons from the Sun but of the stream of charged particles pushing out from the Sun to the heliopause (thereby blowing out the ‘bubble’ in the interstellar medium we call the heliosphere). As Janhunen (Finnish Meteorological Institute) has designed it, the electric sail taps the Coulomb interaction, in which charged particles are attracted or repelled by an electric charge. The spacecraft’s rotation would allow the deployment of perhaps 100 tethers, thin wires that would then be charged by an electron gun whose beam is sent out along the spin axis.

Image: The electric sail is a space propulsion concept that uses the momentum of the solar wind to produce thrust. Credit: Alexandre Szames.

The electron gun keeps the spacecraft and tethers charged, with the electric field of the tethers extending tens of meters into the surrounding solar wind plasma. As the solar wind ‘blows,’ it pushes against thin tethers that, because of their charge, act as wide surfaces. The sail rides the wind through this Coulomb repulsion: the positively charged solar wind protons are repelled by the positive voltage they meet at the tethers.

One disadvantage that electric sails bring to the mix, as opposed to solar sails like IKAROS, is that the solar wind is much weaker — Janhunen’s figures have it 5000 times weaker — than solar photon pressure at Earth’s distance from the Sun. This has come up before in comments here and it’s worth quoting Janhunen on the matter, from a site he maintains on electric sails:

The solar wind dynamic pressure varies but is on average about 2 nPa at Earth distance from the Sun… Due to the very large effective area and very low weight per unit length of a thin metal wire, the electric sail is still efficient, however. A 20-km long electric sail wire weighs only a few hundred grams and fits in a small reel, but when opened in space and connected to the spacecraft’s electron gun, it can produce several square kilometre effective solar wind sail area which is capable of extracting about 10 millinewton force from the solar wind.
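The quoted thrust figure is simply pressure times area. A minimal sketch of that arithmetic, assuming an effective area of 5 km² as a stand-in for Janhunen’s “several square kilometre” figure (the function name is illustrative only):

```python
# Rough check of the e-sail thrust figure: force = pressure x effective area.
# ASSUMPTION: 5 km^2 stands in for "several square kilometres"; the 2 nPa
# dynamic pressure is the 1 AU average quoted above.

SOLAR_WIND_PRESSURE_PA = 2e-9   # average solar wind dynamic pressure at 1 AU
KM2_TO_M2 = 1e6                 # square kilometres to square metres

def sail_force_newtons(pressure_pa: float, effective_area_km2: float) -> float:
    """Force on a sail of the given effective area under uniform pressure."""
    return pressure_pa * effective_area_km2 * KM2_TO_M2

force = sail_force_newtons(SOLAR_WIND_PRESSURE_PA, 5.0)
print(f"{force * 1e3:.1f} mN")
```

The result lands at the roughly 10 millinewtons Janhunen cites. Real sail performance also depends on tether voltage and on how solar wind pressure falls off with distance from the Sun, which this sketch ignores.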

Computer simulations using tethers up to 20 kilometers in length have yielded speeds of 100 kilometers per second, a nice step up from the 17 km/s of Voyager 1, and enough to get a payload into the nearby interstellar medium in fifteen years. Or, as Janhunen describes in the recent paper on a Uranus atmospheric probe, an electric sail could reach the 7th planet in six years. Janhunen sees such a probe as equally applicable to a Titan mission and, indeed, to missions to Neptune and Saturn itself, but notice that none of these are conceived as orbiter missions. Orbital insertion would demand a significant amount of chemical propellant unless we were to try aerocapture, and the problem with the latter is that it is at a much lower technology readiness level.
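The travel times above can be sanity-checked with constant-speed arithmetic. This is a rough sketch that assumes straight-line cruise at a fixed speed (ignoring the acceleration phase and any curvature of the trajectory) and takes Uranus at about 19.2 AU from the Sun; the helper names are hypothetical.

```python
# Constant-speed cruise arithmetic; acceleration phase and orbital curvature ignored.
KM_PER_AU = 1.495978707e8        # astronomical unit in kilometres
SECONDS_PER_YEAR = 3.15576e7     # Julian year

def distance_au(speed_km_s: float, years: float) -> float:
    """Distance covered at a constant speed, in astronomical units."""
    return speed_km_s * years * SECONDS_PER_YEAR / KM_PER_AU

def mean_speed_km_s(distance: float, years: float) -> float:
    """Average speed needed to cover `distance` AU in `years`."""
    return distance * KM_PER_AU / (years * SECONDS_PER_YEAR)

esail_15yr = distance_au(100.0, 15.0)      # e-sail cruising at 100 km/s
voyager_15yr = distance_au(17.0, 15.0)     # Voyager 1's ~17 km/s, for comparison
uranus_speed = mean_speed_km_s(19.2, 6.0)  # ASSUMED ~19.2 AU to Uranus, six years

print(f"e-sail, 15 yr: {esail_15yr:.0f} AU")
print(f"Voyager speed, 15 yr: {voyager_15yr:.0f} AU")
print(f"mean speed to Uranus in 6 yr: {uranus_speed:.1f} km/s")
```

Under these assumptions 100 km/s carries a payload past 300 AU in fifteen years, well beyond the roughly 120 AU at which Voyager 1 crossed the heliopause, while the six-year Uranus trip implies an average speed of around 15 km/s.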

A demonstrator electric sail mission, then, is designed to keep costs down and reach its destination as fast as possible, with the interesting spin that, because no gravity assists are needed, the Uranus probe will have no launch window constraints. As defined in the paper, the probe would consist of three modules stacked together: The electric sail module, a carrier module and an entry module. The entry module would be composed of the atmospheric probe and a heat shield.

At approximately Saturn’s distance from the Sun, the electric sail module would be jettisoned and the carrier module used to adjust the trajectory as needed with small chemical thrusters (50 kg of propellant budgeted for here). And then the fun begins:

About 13 million km (8 days) before Uranus, the carrier module detaches itself from the entry module and makes a ~ 0.15 km/s transverse burn so that it passes by the planet at ~ 10⁵ km distance, safely outside the ring system. Also a slowing down burn of the carrier module may be needed to optimise the link geometry during flyby.
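The figures in this passage hang together, as a back-of-the-envelope check shows. The sketch below assumes the carrier’s sideways offset simply grows linearly with time after the burn, ignoring Uranus’s gravity (which would bend the path inward), and treats “8 days” and “13 million km” as exact.

```python
SECONDS_PER_DAY = 86_400

lead_time_s = 8 * SECONDS_PER_DAY   # separation happens ~8 days out
approach_distance_km = 13e6         # ~13 million km from Uranus
transverse_dv_km_s = 0.15           # carrier's sideways burn

# Mean approach speed implied by covering 13 million km in 8 days.
approach_speed_km_s = approach_distance_km / lead_time_s

# Sideways drift accumulated by the carrier over the same interval,
# ASSUMING straight-line motion (no gravitational focusing by Uranus).
miss_distance_km = transverse_dv_km_s * lead_time_s

print(f"approach speed ~ {approach_speed_km_s:.1f} km/s")
print(f"miss distance ~ {miss_distance_km:.3g} km")
```

The drift comes to about 1.04 × 10⁵ km, consistent with the ~10⁵ km flyby distance quoted, and the implied approach speed is close to 19 km/s.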

Now events happen quickly. The entry module, protected by its heat shield, enters the atmosphere. A parachute is deployed and the heat shield drops away, with the probe now drifting down through the atmosphere of Uranus (think Huygens descending through Titan’s clouds), making measurements and transmitting data to the high gain antenna on the carrier module.

The result would be atmospheric measurements of Uranus similar to what the Galileo probe was able to deliver at Jupiter, measuring the chemical and isotopic composition of the atmosphere. A successful mission builds the case for a series of such probes to Neptune, Saturn and Titan. Thus far Jupiter is the only giant planet whose atmosphere has been probed directly, and a second Jupiter probe using a similar instrument package would allow further useful comparisons. Our planet formation models, which predict the chemical and isotopic composition of giant planet atmospheres, can thus be supplemented by in situ data.

Not to mention that we would learn much about flying and navigating an electric sail during the testing and implementation of the Uranus mission. The paper is Janhunen et al., “Fast E-sail Uranus entry probe mission,” submitted to the Meudon Uranus workshop (Sept 16-18, 2013) special issue of Planetary and Space Science (preprint).

January 13, 2014

Cloudy Encounter at the Core

The supermassive black hole at the center of our galaxy comes to Centauri Dreams’ attention every now and then, most recently on Friday, when we talked about its role in creating hypervelocity stars. At least some of these stars, which move faster than galactic escape velocity, may have been flung outward when a binary pair approached the black hole too closely, with one star being captured while the other was boosted toward the intergalactic deeps.

At a mass of some four million Suns, Sagittarius A* (pronounced ‘Sagittarius A-star’) is relatively quiet, but we can study it through its interactions. And if scientists at the University of Michigan are right, those interactions are about to get a lot more interesting. A gas cloud some three times the mass of the Earth, dubbed G2 when it was found by German astronomers in 2011, is moving toward the black hole, which is 25,000 light years away near the constellations of Sagittarius and Scorpius.

What’s so unusual about this is the timeframe. We’re used to thinking in million-year increments at least when discussing astronomical events, but G2 was expected to encounter Sagittarius A* late last year. The event hasn’t occurred yet, but astronomers think it is only a matter of a few months away. Exactly what happens next isn’t clear, says Jon Miller (University of Michigan), who along with colleague Nathalie Degenaar has been imaging the gas cloud’s approach daily using NASA’s orbiting Swift telescope.

“I would be delighted if Sagittarius A* suddenly became 10,000 times brighter,” Miller adds. “However it is possible that it will not react much—like a horse that won’t drink when led to water. If Sagittarius A* consumes some of G2, we can learn about black holes accreting at low levels—sneaking midnight snacks. It is potentially a unique window into how most black holes in the present-day universe accrete.”

Image: The galactic center as imaged by the Swift X-ray Telescope. This image is a montage of all data obtained in the monitoring program from 2006-2013. Credit: Nathalie Degenaar.

We have much to learn about the feeding habits of black holes. The Milky Way’s black hole isn’t nearly as bright as those in some galaxies. While we can’t see black holes directly because no light can escape from within, we can see the evidence of material falling into them, and it would be useful to know why some black holes consume matter at a slower pace than others. The X-ray wavelengths that Swift studies should give us our best data on the upcoming black hole encounter. A sudden spike in X-ray brightness would presumably mark the event, and the researchers will post the images online.

In studying black hole behavior, we’re also looking at key information about how galaxies live out their lives. After all, these objects are consuming matter and radically affecting the region around the very heart of the galaxy. “The way they do that influences the evolution of the entire galaxy—how stars are formed, how the galaxy grows, how it interacts with other galaxies,” says Nathalie Degenaar. Those of us of a certain age can delight in the recollection of Fred Hoyle’s 1957 novel The Black Cloud, in which a gas cloud approaching the Solar System turns out to be a bit more than astronomers had bargained for. Don’t miss this classic if you haven’t read it yet — you should have plenty of time to finish it before the G2 event.

January 10, 2014

Stars at Galactic Escape Velocity

How do you boost the velocity of a star up to 540 kilometers per second? Somewhere in that region, with a generous error range on either side, is the speed it would take to escape the galaxy if you left from our Solar System’s current position. Here on Centauri Dreams we often discuss exotic technologies that could propel future vehicles, but it’s hard to imagine mechanisms that would drive natural objects out of the galaxy at such speeds. Even so, there are ways, as explained by Vanderbilt University’s Kelly Holley-Bockelmann:

“It’s very hard to kick a star out of the galaxy. The most commonly accepted mechanism for doing so involves interacting with the supermassive black hole at the galactic core. That means when you trace the star back to its birthplace, it comes from the center of our galaxy.”

The mechanism works like this: A binary pair of stars that moves a bit too close to the massive black hole at the center of the Milky Way loses one star to the black hole while the other is flung outward at high velocity. Given a black hole of some four million solar masses, the numbers work: Stars can indeed be accelerated to galactic escape velocity, and a number of the blue hypervelocity stars found so far could be explained this way.
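To see why a four-million-solar-mass black hole can do the job, a common order-of-magnitude scaling for this binary-disruption (Hills) mechanism takes the ejection speed as the binary’s own orbital speed scale, √(2Gm_b/a), multiplied by (M_bh/m_b)^(1/6). That scaling is not taken from the work discussed here, and the binary mass and separation below are hypothetical inputs. The result is the speed near the black hole, before the star climbs out of the galaxy’s potential well and loses much of it.

```python
import math

G_M_SUN = 1.32712440018e20   # solar gravitational parameter, m^3 s^-2
AU_M = 1.495978707e11        # astronomical unit, m

def hills_ejection_speed_km_s(binary_mass_msun: float,
                              separation_au: float,
                              bh_mass_msun: float) -> float:
    """Order-of-magnitude speed of the star flung out when a binary is split.

    Uses v ~ sqrt(2 G m_b / a) * (M_bh / m_b)**(1/6); order-unity
    prefactors are ignored, so treat the output as a rough scale only.
    """
    orbital_scale = math.sqrt(2 * G_M_SUN * binary_mass_msun / (separation_au * AU_M))
    return orbital_scale * (bh_mass_msun / binary_mass_msun) ** (1 / 6) / 1e3

# HYPOTHETICAL binary: two solar-mass stars 0.1 AU apart, 4e6 solar-mass black hole.
v = hills_ejection_speed_km_s(2.0, 0.1, 4e6)
print(f"ejection speed ~ {v:.0f} km/s vs ~540 km/s escape speed near the Sun")
```

Because the speed falls as a^(-1/2), wider binaries yield slower ejections, so in this picture only fairly tight pairs are expected to produce true hypervelocity stars.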

But Holley-Bockelmann and grad student Lauren Palladino have run into something that casts doubt on this explanation, or at least makes us wonder about other methods for making stars travel this fast. Calculating stellar orbits with data from the Sloan Digital Sky Survey, the duo have found about twenty stars the size of the Sun that appear to be hypervelocity stars. Moreover, these are stars whose composition mirrors normal disk stars, leading the researchers to believe they were not formed in the galaxy’s central bulge or its halo.

Image: Top and side views of the Milky Way galaxy show the location of four of the new class of hypervelocity stars. These are sun-like stars that are moving at speeds of more than a million miles per hour relative to the galaxy: fast enough to escape its gravitational grasp. The general directions from which the stars have come are shown by the colored bands. (Graphic design by Julie Turner, Vanderbilt University. Top view courtesy of the National Aeronautics and Space Administration. Side view courtesy of the European Southern Observatory).

Holley-Bockelmann and Palladino are investigating possible causes for the motion of these stars, including interactions with globular clusters, dwarf galaxies or even supernovae in the galactic disk. The list of possibilities is surprisingly long, as noted in the paper on this work (internal references omitted for brevity):

While the SMBH [supermassive black hole] at the Galactic center remains the most promising culprit in generating HVSs [hypervelocity stars], other hypervelocity ejection scenarios are possible, such as a close encounter of a single star with a binary black hole… In this case, the star gains energy from the binary black hole and is flung out of the Galaxy while the orbit of the black hole binary shrinks… Another alternative hypervelocity ejection model involves the disruption of a stellar binary in the Galactic disk; here a supernova explosion in the more massive component can accelerate the companion to hypervelocities…

We may know more soon, for the paper points out that if a nearby supernova were responsible, it should have contaminated the hypervelocity star’s spectrum. As they delve into these and other possibilities, the researchers are also expanding their search for hypervelocity stars to a larger sample of the Sloan data that includes all spectral types.

The findings were announced at the meeting of the American Astronomical Society in Washington this week. The paper is Palladino et al., “Hypervelocity Star Candidates in the SEGUE G and K Dwarf Sample,” The Astrophysical Journal Vol. 780, No. 1 (2014), with abstract and preprint available.

January 9, 2014

Stormy Outlook for Brown Dwarfs

“Weather on Other Worlds” is an observation program that uses the Spitzer Space Telescope to study brown dwarfs. So far 44 brown dwarfs have fallen under its purview as scientists try to get a read on conditions on these ‘failed stars,’ objects that lack the mass to sustain hydrogen fusion at their cores. As a brown dwarf rotates, variations in brightness between its cloud-free and cloudy regions reveal what researchers interpret as torrential storms, and it turns out that half of the brown dwarfs investigated show these variations.

Because the orientation of each brown dwarf’s spin axis is a matter of chance, and one seen nearly pole-on would show little rotational variation even if storms were present, this implies that most, if not all, brown dwarfs are wracked by high winds and violent lightning. The image below could have come off the cover of a 1950s copy of Astounding, though there it would have illustrated one of Poul Anderson’s tales with Jupiter as a violent backdrop (“Call Me Joe” comes to mind). Brown dwarfs are, of course, a much more recent find, and in many ways a far more fascinating one.
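The orientation argument can be made concrete with a toy model, an assumption rather than anything from the Spitzer analysis: suppose every brown dwarf is variable, its observed amplitude scales with sin i (i being the angle between the spin axis and our line of sight), and variability is detected only above some cutoff in sin i. For randomly oriented spin axes cos i is uniformly distributed, so the detectable fraction is √(1 − s²) for a cutoff s.

```python
import math

def detectable_fraction(min_sin_i: float) -> float:
    """Fraction of randomly oriented spin axes with sin(i) >= min_sin_i.

    With isotropic axes cos(i) is uniform on [0, 1], so
    P(sin i >= s) = P(cos i <= sqrt(1 - s**2)) = sqrt(1 - s**2).
    """
    return math.sqrt(1.0 - min_sin_i ** 2)

def cutoff_for_fraction(fraction: float) -> float:
    """Inverse: the sin(i) cutoff that leaves `fraction` of objects detectable."""
    return math.sqrt(1.0 - fraction ** 2)

s = cutoff_for_fraction(0.5)  # half the sample shows variability
print(f"cutoff sin(i) = {s:.3f}, i.e. inclination above "
      f"{math.degrees(math.asin(s)):.0f} degrees")
```

Under this model, storms on every brown dwarf would still yield a 50 percent detection rate if only objects inclined by more than about 60 degrees showed measurable variations; the real detection limits depend on photometric precision and storm contrast.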

Image: This artist’s concept shows what the weather might look like on cool star-like bodies known as brown dwarfs. These giant balls of gas start out life like stars, but lack the mass to sustain nuclear fusion at their cores, and instead, fade and cool with time. Credit: NASA/JPL-Caltech/University of Western Ontario/Stony Brook University.

Storms like these inevitably suggest Jupiter’s Great Red Spot, too, but we want to be careful with analogies considering how much we still have to learn about brown dwarfs themselves. What we can say is this: Brown dwarfs are too hot for water rain, leading most researchers to conclude that any storms associated with them are made up of hot sand, molten iron or salts.

The idea that brown dwarfs have turbulent weather is not surprising, but it is interesting to learn that such storms are evidently commonplace on them. Even more interesting is what the Spitzer work has revealed about brown dwarf rotation. Some of the Spitzer measurements found rotation periods much slower than any previously measured. Up to this point the assumption had been that brown dwarfs began rotating quickly shortly after they formed, a rotation that did not slow down as the objects aged. Aren Heinze (Stony Brook University) had this to say:

“We don’t yet know why these particular brown dwarfs spin so slowly, but several interesting possibilities exist. A brown dwarf that rotates slowly may have formed in an unusual way — or it may even have been slowed down by the gravity of a yet-undiscovered planet in a close orbit around it.”

Whatever the case, brown dwarfs do seem to be opening a window into weather systems in exotic places, systems that can be studied and characterized by their variations in brightness. Heinze presented this work at the 223rd meeting of the American Astronomical Society in Washington for principal investigator Stanimir Metchev (University of Western Ontario).
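Rotation periods like these are read from repeating brightness variations. One common way to estimate a period from a light curve is a periodogram; the sketch below applies the classical (Schuster) periodogram to synthetic data. The 8-hour period, 1 percent amplitude and 3-minute sampling are illustrative assumptions, not Spitzer values, and this is not a description of the team’s actual pipeline.

```python
import math
import random

def schuster_power(times, fluxes, period):
    """Classical (Schuster) periodogram power at one trial period."""
    mean = sum(fluxes) / len(fluxes)
    w = 2 * math.pi / period
    c = sum((f - mean) * math.cos(w * t) for t, f in zip(times, fluxes))
    s = sum((f - mean) * math.sin(w * t) for t, f in zip(times, fluxes))
    return (c * c + s * s) / len(fluxes)

def best_period(times, fluxes, trial_periods):
    """Trial period with the highest periodogram power."""
    return max(trial_periods, key=lambda p: schuster_power(times, fluxes, p))

# HYPOTHETICAL light curve: 1% sinusoidal modulation, 8-hour period,
# sampled every 0.05 h (3 minutes) for 40 hours, plus small Gaussian noise.
random.seed(1)
TRUE_PERIOD_H = 8.0
times = [0.05 * k for k in range(800)]
fluxes = [1.0 + 0.01 * math.sin(2 * math.pi * t / TRUE_PERIOD_H)
          + random.gauss(0.0, 0.001) for t in times]

trial_periods = [1.0 + 0.1 * k for k in range(141)]  # 1.0 to 15.0 hours
found = best_period(times, fluxes, trial_periods)
print(f"recovered period: {found:.1f} h")
```

Real observations have gaps and uneven sampling, which is why astronomers often use the Lomb-Scargle variant of this idea instead.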

January 8, 2014

Will We Find Habitable ‘Super-Earths?’

As the 223rd meeting of the American Astronomical Society continues in Washington, we’re continuing to see activity on the subject of mini-Neptunes and ‘super-Earths,’ the latter often thought to be waterworlds. Given how fast our picture of planets in this domain is changing, I was intrigued to see that Nicolas Cowan (Northwestern University) and Dorian Abbot (University of Chicago) have come up with a model that allows a super-Earth with active plate tectonics to have abundant water in its mantle and oceans as well as exposed continents.

If Cowan and Abbot are right, such worlds could feature a relatively stable climate even if they hold far more water than Earth does. Focusing on the planetary mantle, the authors point to a deep water cycle that moves water between oceans and mantle, a movement made possible by plate tectonics. The Earth itself holds a good deal of water in its mantle. The paper argues that the division of water between ocean and mantle is controlled by seafloor pressure, which is proportional to gravity.

In other words, as planetary size increases, a super-Earth’s gravity and seafloor pressure go up as well. Rather than being a waterworld with its surface completely covered by water, the planet could have many characteristics of a terrestrial-class world, leading Cowan to say: “We can put 80 times more water on a super-Earth and still have its surface look like Earth. These massive planets have enormous sea floor pressure, and this force pushes water into the mantle.”
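The link between gravity and seafloor pressure is plain hydrostatics, P = ρgd. A minimal sketch, assuming a fixed 4 km ocean depth, constant seawater density (compressibility ignored) and a hypothetical super-Earth with twice Earth’s surface gravity:

```python
RHO_SEAWATER = 1025.0   # kg/m^3, typical seawater density, held constant
G_EARTH = 9.81          # m/s^2

def seafloor_pressure_mpa(depth_m: float, gravity_m_s2: float,
                          rho: float = RHO_SEAWATER) -> float:
    """Hydrostatic pressure at the bottom of a uniform-density ocean, in MPa."""
    return rho * gravity_m_s2 * depth_m / 1e6

earth = seafloor_pressure_mpa(4000.0, G_EARTH)
super_earth = seafloor_pressure_mpa(4000.0, 2 * G_EARTH)  # HYPOTHETICAL 2 g planet
print(f"Earth: {earth:.1f} MPa, 2 g super-Earth: {super_earth:.1f} MPa")
```

At the same depth the pressure scales linearly with g, which is the proportionality the argument rests on; how that extra pressure drives water into the mantle is the part this sketch does not model.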

Image: Artist’s impression of Kepler-62f, a potential super-Earth in its star’s habitable zone. Could a super-Earth like this maintain oceans and exposed continents, or would it most likely be a water world? Credit: NASA/Ames/JPL-Caltech.

The implications for habitability emerge when we regard super-Earth oceans as relatively shallow. Exposed continents allow a planet to sustain the deep carbon cycle, producing the kind of stabilizing feedback that cannot exist on a waterworld. A super-Earth with exposed continents is much more likely to have an Earth-like climate, though all of this depends on whether the super-Earth has plate tectonics and on how much water it stores in its mantle. Cowan calls the argument ‘a shot from the hip,’ but it’s an interesting addition to our thinking about this category of planet as we probe our ever-growing database of new worlds.

On the matter of the amount of water, not all super-Earths would fit the bill, and it wouldn’t take much to turn a planet into a waterworld. Cowan argues that if the Earth were 1 percent water by mass, not even the deep water cycle could save the day. Instead, the researchers are considering planets that are one one-thousandth or one ten-thousandth water by mass. From the paper:

Exoplanets with sufficiently high water content will be water-covered regardless of the mechanism discussed here, but such ‘ocean planets’ may betray themselves by their lower density: a planet with 10% water mass fraction will exhibit a transit depth 10% greater than an equally-massive planet with Earth-like composition… Planets with 1% water mass fraction, however, are almost certainly waterworlds, but may have a bulk density indistinguishable from truly Earth-like planets. Given that simulations of water delivery to habitable zone terrestrial planets predict water mass fractions of 10⁻⁵–10⁻²… we conclude that most tectonically active planets — regardless of mass — will have both oceans and exposed continents, enabling a silicate weathering thermostat.
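The transit-depth figure in that passage can be unpacked with two scaling relations: for the same star, transit depth goes as the square of the planet’s radius, and at equal mass, bulk density goes as 1/R³. A minimal sketch of that arithmetic (helper names are illustrative):

```python
import math

def radius_ratio_from_depth_ratio(depth_ratio: float) -> float:
    """Transit depth scales as (R_planet / R_star)**2 for the same star."""
    return math.sqrt(depth_ratio)

def density_ratio_same_mass(radius_ratio: float) -> float:
    """Bulk density scales as M / R**3; at equal mass only R matters."""
    return radius_ratio ** -3

r = radius_ratio_from_depth_ratio(1.10)   # transit 10% deeper
rho = density_ratio_same_mass(r)
print(f"radius x{r:.4f}, density x{rho:.4f}")
```

A 10 percent deeper transit therefore corresponds to a planet about 5 percent larger in radius and roughly 13 percent less dense than its Earth-like twin of the same mass.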

The paper is Cowan and Abbot, “Water Cycling Between Ocean and Mantle: Super-Earths Need Not be Waterworlds,” The Astrophysical Journal Vol. 781, No. 1 (2014). Abstract and preprint available.

January 7, 2014

Thinking About ‘Mini-Neptunes’

Yesterday’s look at the exoplanet KOI-314c showed us a world with a mass equal to the Earth’s, but sixty percent larger than the Earth in diameter. This interesting planet may be an important one when it comes to studying exoplanet atmospheres, for KOI-314c is a transiting world, and we can use transmission spectroscopy to analyze the starlight that passes through its atmosphere as the planet moves in front of its star. A space-based observatory like the James Webb Space Telescope should be able to tease useful information out of KOI-314c.

But the American Astronomical Society meeting in Washington DC continues, and it’s clear that the technique of studying transit timing variations (TTV) is coming into its own as a tool for exoplanet investigation. David Kipping and colleagues use TTV to look for exomoons, and it was during such a search that they discovered KOI-314c. But consider the other AAS news. At Northwestern University, Yoram Lithwick has been measuring the masses of approximately sixty exoplanets larger than the Earth and smaller than Neptune.

Learn the mass and the size of a planet, and you can make a call on its density, and thus learn something about its probable composition. And guess what?

“We were surprised to learn that planets only a few times bigger than Earth are covered by a lot of gas,” said Lithwick. “This indicates these planets formed very quickly after the birth of their star, while there was still a gaseous disk around the star. By contrast, Earth is thought to have formed much later, after the gas disk disappeared.”

That resonates nicely with Kipping and company’s work on KOI-314c, and Lithwick, working with graduate student Sam Hadden, used transit timing variations to achieve his results. In the duo’s sample, planets two to three times larger than the Earth have very low density (compare with KOI-314c, which turned out to be only thirty percent denser than water). These are worlds something like Neptune, only smaller, and covered in massive amounts of gas.

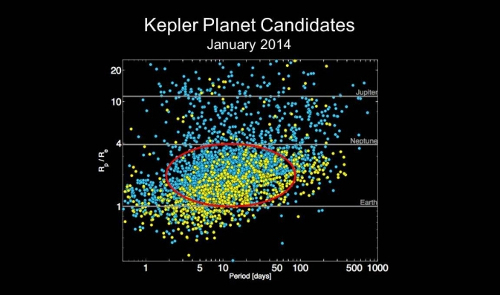

Image: Chart of Kepler planet candidates as of January 2014. Credit: NASA Ames.

Transit timing variations occur when two planets orbiting the same star pull on each other gravitationally, so that the exact time of transit for each planet is affected. These are complicated interactions, to be sure, but we’re beginning to see radial velocity measurements confirming trends that were originally uncovered with TTV. I ran this by David Kipping, asking whether TTV wasn’t coming into its own, and he agreed. “My bet is that when we measure the mass of Earth 2.0,” Kipping wrote, “it will be via TTVs.”
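In practice a TTV signal is what remains once a strictly periodic schedule is fitted to the measured transit times. The sketch below fits a linear ephemeris, T_n = T0 + nP, by least squares and returns the observed-minus-calculated residuals. The synthetic data (a 13-day planet with a 5-minute sinusoidal timing wobble over a 40-transit cycle) are hypothetical; near-resonant pairs often do show roughly sinusoidal TTVs, but turning a real signal into planetary masses requires full dynamical modeling.

```python
import math

def fit_linear_ephemeris(epochs, times):
    """Ordinary least-squares fit of T_n = T0 + n * P; returns (T0, P)."""
    n = len(epochs)
    mean_e = sum(epochs) / n
    mean_t = sum(times) / n
    sxx = sum((e - mean_e) ** 2 for e in epochs)
    sxy = sum((e - mean_e) * (t - mean_t) for e, t in zip(epochs, times))
    period = sxy / sxx
    t0 = mean_t - period * mean_e
    return t0, period

def ttv_residuals(epochs, times):
    """Observed minus calculated transit times relative to the best linear ephemeris."""
    t0, period = fit_linear_ephemeris(epochs, times)
    return [t - (t0 + period * e) for e, t in zip(epochs, times)]

# HYPOTHETICAL planet: 13-day period, 5-minute sinusoidal TTV repeating every 40 transits.
P_TRUE_DAYS = 13.0
AMP_DAYS = 5.0 / 1440.0
epochs = list(range(80))
times = [100.0 + P_TRUE_DAYS * e + AMP_DAYS * math.cos(2 * math.pi * e / 40)
         for e in epochs]

_, fitted_period = fit_linear_ephemeris(epochs, times)
peak_ttv_min = max(abs(r) for r in ttv_residuals(epochs, times)) * 1440
print(f"fitted period {fitted_period:.5f} d, peak TTV {peak_ttv_min:.1f} min")
```

With the cosine-shaped wobble used here the linear fit absorbs little of the signal, so the peak residual comes out close to the injected 5 minutes; other TTV shapes and shorter baselines can leak more of the signal into the fitted period.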

We can also look at the work of Ji-Wei Xie (University of Toronto), who used TTV to measure the masses of fifteen pairs of Kepler planets. These ranged in size from close to Earth to a little larger than Neptune. The results appeared in The Astrophysical Journal in December and were presented at the AAS meeting. The work complements reports from the Kepler team at AAS presenting mass measurements of worlds between Earth and Neptune in size. Here the Kepler team’s follow-up relied on Doppler measurements. In fact, six of the planets under investigation are non-transiting and seen only in Doppler data.

So we’re seeing both radial velocity and TTV used to study this interesting category of planets. Forty-one planets discovered by Kepler were validated by the program of ground-based observation, and the masses of sixteen of these were determined, allowing scientists to make the call on planetary density. In the Kepler study, ‘mini-Neptune’ planets with a rocky core show up with varying proportions of hydrogen, helium and hydrogen-rich molecules surrounding the core. The variation is dramatic, and some of these worlds show no gaseous envelope at all.

Kepler mission scientist Natalie Batalha sums up the questions all this raises:

“Kepler’s primary objective is to determine the prevalence of planets of varying sizes and orbits. Of particular interest to the search for life is the prevalence of Earth-sized planets in the habitable zone. But the question in the back of our minds is: are all planets the size of Earth rocky? Might some be scaled-down versions of icy Neptunes or steamy water worlds? What fraction are recognizable as kin of our rocky, terrestrial globe?”

Plenty of questions emerge from these findings, but the Kepler team’s report tells us that more than three-quarters of the planet candidates the mission has discovered have sizes between Earth and Neptune. Clearly this kind of planet, which is not found in our own Solar System, is a major player in the galactic population, and learning how such planets form and what they are made of will launch numerous further investigations. The usefulness of transit timing variations at determining mass will likely place the technique at the forefront of this ongoing work.

The paper by Ji-Wei Xie is “Transit Timing Variation of Near-Resonance Planetary Pairs: Confirmation of 12 Multiple-Planet Systems,” Astrophysical Journal Supplement Series Vol. 208, No. 2 (2013), 22 (abstract). I don’t have the citation for the Kepler report, about to be published in The Astrophysical Journal, but will run it as soon as I can. Yoram Lithwick’s presentation at AAS was based on Hadden & Lithwick, “Densities and Eccentricities of 163 Kepler Planets from Transit Time Variations,” to be published in The Astrophysical Journal and available as a preprint.

January 6, 2014

A Gaseous, Earth-Mass Transiting Planet

Search for one thing and you may run into something just as interesting in another direction. That has been true in the study of exoplanets for some time now, where surprises are the order of the day. Today David Kipping (Harvard-Smithsonian Center for Astrophysics) addressed the 223rd meeting of the American Astronomical Society in Washington to reveal a planetary discovery made during the course of a hunt for exomoons, satellites of planets around other stars. Kipping’s team has uncovered the first Earth-mass planet that transits its host star.

Just how that happened is a tale in itself. Kipping heads up the Hunt for Exomoons with Kepler project, which mines the Kepler data looking for tiny but characteristic signatures. Transit timing variations are the key here, for a planet with a large moon may show telltale changes in its transits that point to the presence of the orbiting body. In the case of the red dwarf KOI-314, it became clear Kepler was seeing two planets repeatedly transiting the primary. No exomoon here, it turned out, but as David Nesvorny (Southwest Research Institute) puts it:

“By measuring the times at which these transits occurred very carefully, we were able to discover that the two planets are locked in an intricate dance of tiny wobbles giving away their masses.”

The star, located about 200 light years away, is orbited by KOI-314b, about four times as massive as the Earth and circling the star every thirteen days. Transit timing variations, it became clear, flagged the presence not of an exomoon but of another planet further out in the system, dubbed KOI-314c. And while the latter turns out to have the same mass as the Earth, it is anything but an ‘Earth-like’ planet. KOI-314c is, in Kipping’s words, ‘the lowest mass planet for which we have a size *and* mass measurement.’ With size and mass in hand we can work out the density. This is a world only thirty percent denser than water, as massive as the Earth but sixty percent bigger, evidently enveloped in a thick atmosphere of hydrogen and helium.
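The density step is a straightforward scaling from Earth: bulk density goes as M/R³. A minimal sketch, assuming Earth’s mean density of about 5.51 g/cm³ and taking the quoted figures of one Earth mass and a radius 1.6 times Earth’s:

```python
EARTH_DENSITY_G_CM3 = 5.51   # Earth's mean bulk density
WATER_DENSITY_G_CM3 = 1.0

def bulk_density_g_cm3(mass_earths: float, radius_earths: float) -> float:
    """Bulk density by scaling Earth's: rho = rho_Earth * M / R**3 (Earth units)."""
    return EARTH_DENSITY_G_CM3 * mass_earths / radius_earths ** 3

koi_314c = bulk_density_g_cm3(1.0, 1.6)
print(f"KOI-314c: {koi_314c:.2f} g/cm^3, "
      f"{koi_314c / WATER_DENSITY_G_CM3:.1f}x water")
```

That lands at about 1.3 times the density of water, matching the ‘thirty percent denser than water’ figure.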

Are we dealing with something like a ‘mini-Neptune,’ perhaps one that has lost some of its atmosphere over time due to radiation from the star? Whatever the answer, KOI-314c forces us to re-examine our assumptions about small planets and how they are made. In a recent email, Kipping told me:

“If you asked an astronomer yesterday what they would guess the composition of a newly discovered Earth-mass planet was, they probably would have said rocky. Today, we know that is not true since now we have an Earth-mass planet with a huge atmosphere sat on top of it. Nature continues to surprise us with the wonderful diversity of planets which can be built.”

Image: Exomoon hunter David Kipping, whose fine-tuning of TTV (transit timing variations) is opening up new possibilities in exoplanet and exomoon detection.

Exactly so, and what a potent lesson in minding our assumptions! Kipping continues:

“We now know that one can’t simply draw a line in the sand at X Earth masses and claim “everything below this mass is rocky”. It hints at the fact that the recipe book for building planets is a lot more complicated than we initially thought and so far we’ve perhaps only been looking at the first page.”

A humbling notion indeed. Finding solar systems like our own, with rocky worlds in an inner region and gas giants further out, has proven surprisingly difficult, making us think that our system may be anything but typical. Now we’re examining a small planet that forces questions about planetary mass and composition and leaves us without easy answers. Its discovery highlights the significance of transit timing variations, a technique that is clearly coming into its own as we reach these levels of precision studying low mass planets. We’re still looking for that first exomoon, but the hunt for these objects is pushing the envelope of detection.

Kipping’s email mentioned yet another significant fact. KOI-314c circles a red dwarf close enough for detailed observations with the upcoming James Webb Space Telescope. The planet’s extended atmosphere could make it ideal for detecting molecules, adding to our knowledge of this particular planet but also giving us another target on which to sharpen the tools of atmospheric characterization. A worthy find indeed, and a reminder of how much we have yet to discover in Kepler’s hoard of data.

January 3, 2014

Living at a Time of Post Natural Ecologies

When technologies converge from rapidly fermenting disciplines like biology, information science and nanotech, the results become hard to predict. Singularities can emerge that create outcomes we cannot always anticipate. Rachel Armstrong explores this phenomenon in today’s essay, a look at emerging meta-technologies that are themselves life-like in their workings. The prospects for new forms of design in our living spaces, including future spacecraft environments, are profound, as ongoing work in various venues shows. Dr. Armstrong, a regular Centauri Dreams contributor, explores these issues through her work at AVATAR (Advanced Virtual and Technological Architectural Research) at the University of Greenwich, London.

by Rachel Armstrong

Three lumps of muck hit the breathing membrane. A scattering of fragments blew back at the boys as they shattered like crumbs.

“You’re right! They disappeared!” crowed the smallest of the trio. “That building literally – ate dirt!”

Despite its lumpy shell-like appearance, the irregular wall was strong – and warm to the touch, like a freshly boiled egg.

The tallest boy rolled out a makeshift stethoscope, listening to the internal rumblings of the architectural fluids. “Come on! Let’s get on with it.”

He motioned to the others with a beckoning hand. “Did you get the drill bit?” he asked. “Let’s tap this thing before it feels we are here!”

With one standing watch, the other two pushed and ground the pointed metal extension into the surprisingly resilient surface. They finally forced the tip through with a hammer tap, into the soft interior, as the self-healing edges of the lesion squirmed, trying to close over the hole.

“Okay, where’re the containers?” the small guy barked. “We’ve hit liquid gold!”

Nanosensors in the broken crust transformed the semiconductor surface of the building skin into a sensor array. This awoke the surveillance gaze, which shivered at the intrusion, like a cow flicks its skin at a fly.

The maintenance doctor looked over at the disturbance. “Pesky tappers!” he sneered, as the dripping hole quickly closed over like a blood clot.

……………………………………………………………………………..

In 2003, Roco and Bainbridge prepared a National Science Foundation report (Roco and Bainbridge, 2003) that proposed a new kind of singularity, in which cutting-edge technologies directly engaged with the building blocks of matter converge on a common platform. Roco and Bainbridge proposed that when these NBIC (nano-bio-info-cogno) technologies were combined, the emerging platforms could radically improve human lives and affect a broad spectrum of human and economic activity. What is unique about the NBIC ‘singularity’ is that a method was actually proposed to induce such a technological tipping point, and government money was invested to support it through ‘sand pit’ events.

During these events, participants were required to find new ways of working in which the sciences could reach out across other disciplines to challenge traditional boundaries of theory, practice and innovation. Indeed, the pursuit of NBIC convergence led to ‘sand pits’ funded by both the NSF and the EU, where multidisciplinary practitioners, predominantly scientists, collaboratively addressed ‘grand’ challenges such as artificial photosynthesis. Further convergence across the Two Cultures is also being actively supported by the UK government through a STEAM (science, technology, engineering, arts and mathematics) initiative, in which the contributory role of the arts in innovation is recognised as integral to the process (Else, 2012).

One example of an NBIC ‘sand pit’ technology is the ‘cyberplasm’ robot, a mélange of biological and mechanical systems constructed from cellular, bacterial and electronic components (Cyberplasm Team, 2010). Yet such projects deal with the possibilities of NBIC in abstracted environments and could potentially be tested more meaningfully in applied contexts.

A range of NBIC projects are currently in development. For example, researchers at the University of Florida have found that when spider silk is coated with carbon nanotubes and pressed between two sheets of Teflon, it forms a composite flexible and electrically conductive enough to detect the electrical signals of the heart (Steven et al, 2013).

While not a formal NBIC ‘sand pit’, my work as part of the AVATAR group at the University of Greenwich embodies principles of NBIC convergence to produce ‘living technologies’ that are specifically designed for use in the built environment. These experiments have produced a wide range of drawings, models and prototypes that aim to provoke new forms of ecological design through collaborative partnerships with scientists working with experimental, cutting-edge technologies such as chemically programmable dynamic droplets (Armstrong and Hanczyc, 2013).

The challenge of collaboration between the disciplines is to explore how we might venture into the very space in which our current predictive models break down, where convergent technologies reach transformational tipping points, or singularities. These existence landscapes are not situated within classical scientific frameworks but invite methods that deal with uncertainty and probability. This does not mean that ‘anything goes’, but that it is possible to be propositional about exploring possibilities within definable limits of probability. In other words, we are encouraged to find unity in achieving a particular goal through a diverse range of approaches. For example, methods for producing self-healing materials range from seeding traditional materials such as concrete with extremophile bacteria, as in H.M. Jonkers’ work on ‘bio-concrete’, to developing slow-release chemical delivery systems that respond to environmental changes (Armstrong and Spiller, 2011).

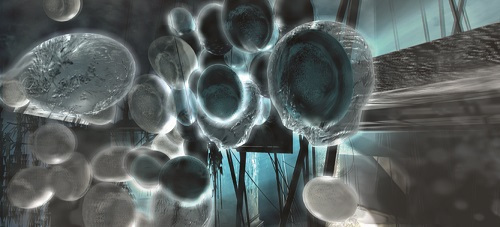

Since NBIC technologies form a conduit between different information fields, they may be studied experimentally to map the material consequences of their impacts. These consequences are most evident and accessible when materials themselves become processes, operating far from equilibrium. For example, the computational properties of matter can be demonstrated using programmable droplets designed with a specific metabolism, so that one set of substances is transformed into another. Droplets may be programmed to respond to the carbon dioxide produced by visitors by changing colour, acting as artificial ‘smell and taste’ organs, as in the Hylozoic Ground installation designed by Philip Beesley for the 2010 Venice Architecture Biennale (Armstrong and Beesley, 2011). These chemistries detect changes in their environment in a manner reminiscent of our own sensory nervous systems.

A range of lifelike, emerging (meta) technologies pose very different opportunities and challenges from machines (Myers and Antonelli, 2012) and work in ways that are much more like ‘life’. They possess resilience, environmental sensitivity and adaptability, are able to deal with unpredictable events (within limits), and have the capacity for surprise. Ultimately, such convergent technologies may be able to evolve. They can and should reshape how we approach the things we take for granted, so that the performance of our living spaces is not always measured in terms of mechanical efficiency but may relate to qualities of experience such as potency or fertility.

Metaphors already associated with living bodies may best help us construct frameworks for imagining and working with these kinds of systems. For example, NBIC technologies may enable buildings to have organs, made from bioprocesses that can transform, say, waste products into compost, as suggested in Philips’ Microbial Home project (Microbial home, 2011). The outputs of these technologies could be used to make native soils and form the fertile substrate of green roofs, rather than, for example, transplanting rare bog soils, which is currently a common practice in our cities (Sams, 2012). They might also provide biofuels from algae and biogas from bacteria and organic waste, recycle water, provide food, adsorb toxins, grow building materials, or even provide entertainment if their lively bodies are synchronized, say, by speakers transmitting music from an iPod through the water system nurturing an algae colony.

NBIC technologies change the possibilities for design in our living spaces by opening up the kinds of information we can use to construct spatial programs, perhaps like an advanced form of 3D printing in which information is not separate from the material platform it instructs. Dune is a speculative proposal by Magnus Larsson that imagines an architectural-scale biofilm with a metabolism that fixes sand into sandstone (Myers and Antonelli, 2012). Other systems can process matter precisely at the molecular scale, for example producing molecular objects reminiscent of floral arrangements (Villar et al, 2013).

Lifelike NBIC technologies may help us find abundance and facilitate growth within our cities by changing what we think these terms mean and enabling us to deal with them differently. Rather than establishing what kinds of distribution systems can bring raw materials to centralised production centres, NBIC technologies allow the distributed harvesting of resources: because they can connect across a variety of information media, they search a broader solution space than is available to traditional industrial processes. NBIC technologies scour what Stuart Kauffman calls the ‘adjacent possible’ by identifying local processes that already exist within environments (Kauffman, 2008). They draw on the chemical fabric that underpins metabolic hubs of organization, which bring the threads of transformation together in the presence of abundant resources such as waste, carbon dioxide, or naturally occurring cellular processes in microorganisms. NBIC platforms then orchestrate these processes, shaping their material outcomes by understanding the material limits of possibility that define their individual interactions.

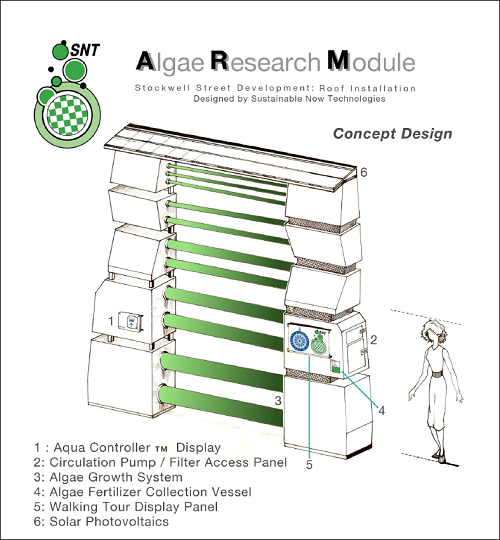

For example, an algaeponics research unit being installed on the green roof of the new Stockwell Street building at the University of Greenwich will establish the operating parameters of such a system in the London area. Built in Long Beach, California, where the current expertise lies, it will spend its first year gathering data on the performance of the local London algae species inhabiting its liquid infrastructure. This will tell us how much biomass a unit can produce and therefore help us design, say, a biofuel station on the Thames estuary for local river traffic.

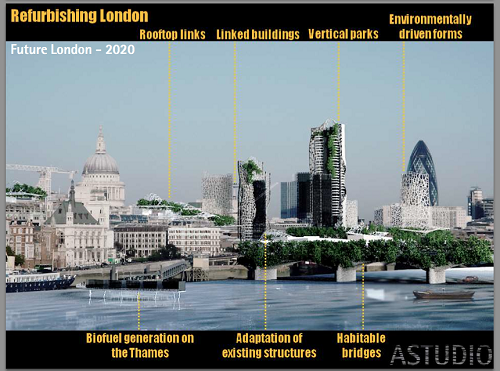

A powerful historical precedent for natural computing, using much simpler techniques than biotechnology now makes available, was established during the agrarian revolution, when livestock lived beneath or shared our living spaces at a time when space and natural resources were much less constrained than in today’s megacities. NBIC technologies may be thought of as a form of agriculture for the 21st century, in which tiny synthetic ecologies are optimised for the megacity environment through hyper-efficient biochemical networks. Only decades ago computers filled an entire room; now they fit in the palm of a hand. It is therefore not inconceivable that miniaturised forms of natural processing could be developed into a whole palette of synthetic physiologies by harnessing the metabolic power of microorganisms. Such systems could conceivably be housed in specially designed synthetic ‘organs’ within under-designed areas of our homes and workspaces, such as cavity walls, ceilings and the spaces under floors. Over the last year or so, I have been working with Astudio on their 2050 postcard project, which shapes alternative futures for the city.

These new sources of abundance in the flow of matter through urban spaces appear to make most economic sense at a community level. For instance, organic matter pooled from urban markets could be anaerobically digested to provide underfloor heating, native soils or biogas for local homes and businesses.

Indeed, NBIC technologies may punctuate the desert-like concrete urban environment with micro-oases of material abundance. Initially, these fertile islands may offset the need for nonlocal provisions, and later they may even replace some of them entirely. At an urban scale, these extra metabolic pathways forge a giant biochemical network that enables us to go from one substance to another without any intermediaries, or to increase the biotic value of substances. Within these systems, innovation may flow freely as inventors find ways to divert, uncover and reshape the fundamental exchanges in material flows within cities using different kinds of NBIC species. Perhaps these could be thought of as ‘metabolic apps’.

By subverting the expectations of the material realm, NBIC technologies may transform urban landscapes into post natural fabrics. Designed, or synthetic, ecologies could therefore be produced by the seamless entangling of technology and natural systems, working with the innate creativity of the material realm to reinvent conditions for urban living, where landscape and building are not separate but entwined. In embracing these new processes, notions of sustainability make less sense when evaluated against globalised LEED and BREEAM standards, which constrain these potential new fusions through the geometric barriers that currently form our buildings, and much more sense when they are shaped locally as community contracts. Such treaties between participants may go beyond human needs and extend to agreements with nonhuman agents such as trees, flowers and other wildlife.

How these kinds of technologies articulate new values attributed to productive living spaces is yet to be established. Indeed, an intrinsic aspect of my research into the potential impacts of NBIC technologies is the use of speculative fictions to reflect on their possible social, ethical and economic implications. For example, smart droplets may be programmed to accrete an artificial limestone reef under the city of Venice, which stands on wooden piles, to slow its sinking into the soft delta soils on which it is founded.

Future Venice is an architectural project that articulates how programmable chemical agents may be designed to move away from the light and produce solid matter as a way of growing an accretion technology under the foundations of the city, which rests on wooden piles. The dynamic properties of droplets have been demonstrated in the laboratory (Armstrong and Hanczyc, 2013) and also on the lagoon side in Venice (Rowlinson, 2012).

A working experimental knowledge of these systems enables informed reflection on their performance in the laboratory, which can then be extrapolated, compared with our expectations of other lifelike systems such as biology, meteorological events or geological processes, and combined with our current ambitions for ‘ecological’ living.

Obviously, a singularity such as NBIC convergence may significantly contribute to changes in environmental, social and economic interactions, the specific details of which are not yet known and, by definition, cannot yet be known. However, it is likely that the creation of new sources of wealth generation within our proximate spaces will affect our perception and expectations of what we mean by abundance and growth within resource-constrained environments such as megacities or worldships.

For architecture, a set of values interwoven with ecological systems requires the built environment to be considered differently: not as a machine, or as an extension of industrialization, but as an engagement with the technology of Nature, which is robust, unpredictable and resilient. Indeed, with the increasing likelihood of climate-related events and severe weather patterns over the coming century, it is vital that our homes not only provide us with shelter but can also become our life support systems when central power systems are hit by floods, winds or even terrorist attacks. Under these circumstances, the very fabric of our homes may supply us with a limited amount of food, water and heat, and process our waste, buying time until the traditional infrastructures are repaired and rebooted.

In everyday terms, this means that we may experience our megacities very differently. For example, districts might be mapped according to their local metabolisms, so that water-rich communities could set up local trading systems with compost-dense ones. Since matter is transformable, urban environments may even be cleaner, as matter will be considered harvestable rather than scattered as garbage in the street. Fewer vermin might then infiltrate the city, since they would have less to feed on. Maybe, when our homes become organ-stressed, we will send for building doctors who will collect the failing ecology and replace it with seeded matrices that will evolve into mature metabolic ecosystems to nurture, warm and protect us. Collectively, these systems may establish a new, primarily life-promoting platform for human development that works in synergy with our current model of resource conservation, where our homes, businesses and cities are an ecological fabric forged by the continual, collaborative expression of a lively, material world. Such a fabric increases the probability of our collective survival both here on Earth and in constructed habitats beyond Earth’s surface.

……………………………………………………….……………………

The guts of the system were soon full of the potent golden liquid. It gurgled joyfully as the energy stomach growled, satiated.

It wasn’t the usual bio-engine that metabolised for a household; it had been super-boosted by the maker community to provide enough organic power for the whole neighbourhood. Helpers had brought water vessels, which were on stand-by in case a sudden surge of chemical activity caused the system to break out in a sweat.

“Stand back!” commanded the tall boy as a milling crowd became a little too curious about the workings of the operating system, which had started to bubble as if it were boiling.

“We have enough embodied energy here to power up all the organs in your buildings! It’s too late now if you’re not already plumbed into the community physiology. It’s going to get hot!”

But they didn’t need coercing. The standers-by quickly shuffled back as the heat from the chemical bonfire surged. They watched the precious juice pump from the makeshift container into their living spaces, keeping time with its amber heartbeat, and swore amongst themselves they’d keep it fed.

An old woman soaked in shadows shook her head, her applauding eyes full of wonder.

“I just can’t believe it!” she muttered through a mouth full of tooth implants. “We are finally ‘off the grid!’”

……………………………………………………………………………..

References

Armstrong, R. and Beesley, P. 2011. Soil and Protoplasm: The Hylozoic Ground project, Protocell Architecture, Architectural Design, Volume 81, Issue 2, March/April 2011, pp. 78-89.

Armstrong, R. and Hanczyc, M.M. 2013. Bütschli Dynamic Droplet System. Artificial Life, 19(3-4), pp. 331-346.

Armstrong, R. and Spiller, N. 2011. Synthetic Biology: Living Quarters, Nature 467, pp.916–918.

Cyberplasm Team. 2010. Cyberplasm: a micro-scale biohybrid robot developed using principles of synthetic biology. [online] Available at: http://cyberplasm.net/. [Accessed 18 April 2013].

Else, L. 8 August 2012. From STEM to STEAM. Culture Lab, New Scientist. [online] Available at: http://www.newscientist.com/blogs/cul.... [Accessed 19 April 2013].

Kauffman, S.A. 2008. Reinventing the Sacred: A New View of Science, Reason, and Religion, First trade paper edition. New York: Basic Books.

Microbial home. 19 October 2011. Design Probes, Design Portfolio, Philips. [online] Available at: http://www.design.philips.com/about/d...- bial_home.page. [Accessed 17 April 2013].

Myers, W. and Antonelli, P. 2012. Bio Design: Nature, Science, Creativity. London: Thames & Hudson / New York: MoMA.

Roco, M.C. and Bainbridge, W.S. 2003. Converging Technologies for Improving Human Performance, Nanotechnology, Biotechnology, Information technology and Cognitive science. NSF/DOC-sponsored report. Dordrecht: Springer.

Rowlinson, A. 2012. The woman regrowing the planet. Red Bulletin, March 2012, pp. 76-81.

Sams, C. 20 April 2012. Gardeners should end their love affair with peat, Green Living Blog, The Guardian. [online]. Available at: http://www.theguardian.com/environmen.... [Accessed 20 December 2013].

Steven, E. Saleh, W.R. Lebedev, V. Acquah, S.F.A. Laukhin, V. Alamo, R.G. and Brooks, J.S. 2013. Carbon nanotubes on a spider silk scaffold. Nature Communications 4, Article number: 2435 doi:10.1038/ncomms3435.

Villar, G. Graham, A.D. and Bayley, H. 2013. A tissue-like printed material. Science, 340(6128), pp. 48-52.

January 2, 2014

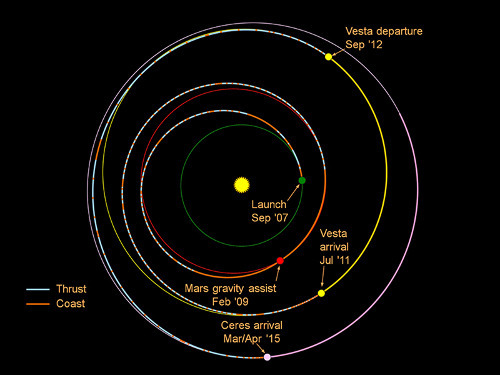

Ceres, Pluto: Looking Toward the Next New Year

Over the New Year transition I saw a number of tweets to the effect that as of January 1, the first flyby of Pluto was going to occur next year, a notable thought when I ponder how fast this long journey has seemed to move. Was it really way back in 2006 that New Horizons launched? We can only wonder what surprises the Pluto/Charon system has in store for us in 2015. The same can be said for Ceres, a body which, as of December 27, is now closer to the Dawn spacecraft than Vesta, the asteroid around which it spent so many interesting months.

Christopher Russell (UCLA) is Dawn’s principal investigator, a man whose thoughts on the mission naturally carry weight:

“This transition makes us eager to see what secrets Ceres will reveal to us when we get up close to this ancient, giant, icy body. While Ceres is a lot bigger than the candidate asteroids that NASA is working on sending humans to, many of these smaller bodies are produced by collisions with larger asteroids such as Ceres and Vesta. It is of much interest to determine the nature of small asteroids produced in collisions with Ceres. These might be quite different from the small rocky asteroids associated with Vesta collisions.”

The departure from Vesta occurred in September of 2012 — Dawn spent almost fourteen months there. Both Vesta and Ceres are considered ‘protoplanets,’ bodies that came close to becoming planets of their own, and the lessons learned in the mission should be useful in firming up our ideas on planet formation in the earliest days of the system. We’re also learning about a doughty spacecraft that will now attempt something that has never been done before, to orbit not just one but two destinations beyond the Earth.

Image: This graphic shows the planned trek of NASA’s Dawn spacecraft from its launch in 2007 through its arrival at the dwarf planet Ceres in early 2015. When it gets into orbit around Ceres, Dawn will be the first spacecraft to go into orbit around two destinations in our solar system beyond Earth. Its journey involved a gravity assist at Mars and a nearly 14-month-long visit to Vesta. Credit: NASA/JPL.

We have a year to go before the Dawn controllers begin approach operations, and not long after that we’ll be getting imagery from Ceres that will be useful both for science and for navigation. Arrival at the diminutive world will occur sometime in late March of 2015. The first full study of Ceres is slated for April at an altitude of 13,500 kilometers, after which the spacecraft will spiral down to an altitude of 4,430 kilometers for its survey science orbit. Successively lower spirals will culminate in the closest orbit, at an altitude of about 375 kilometers, in late November.
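To get a feel for what those altitudes mean, Kepler’s third law gives the period of a circular orbit, T = 2π√(a³/GM), where a is the orbital radius measured from Ceres’ centre. The sketch below is only a back-of-the-envelope estimate: the gravitational parameter (GM ≈ 62.6 km³/s²) and mean radius (≈ 470 km) are approximate values assumed here, not figures from the mission plan, and real orbits are perturbed by Ceres’ uneven gravity field.

```python
import math

# Assumed, approximate physical constants for Ceres; these are NOT figures from the post.
CERES_GM_KM3_S2 = 62.6   # gravitational parameter G*M in km^3/s^2
CERES_RADIUS_KM = 470.0  # mean radius in km

def orbital_period_hours(altitude_km: float) -> float:
    """Period of a circular orbit at altitude_km above Ceres, from Kepler's third law."""
    a_km = CERES_RADIUS_KM + altitude_km  # orbital radius, measured from Ceres' centre
    period_s = 2.0 * math.pi * math.sqrt(a_km**3 / CERES_GM_KM3_S2)
    return period_s / 3600.0

# Planned altitudes quoted in the post.
PLANNED_ORBITS_KM = {
    "first full study": 13_500,
    "survey orbit": 4_430,
    "closest orbit": 375,
}

if __name__ == "__main__":
    for name, altitude in PLANNED_ORBITS_KM.items():
        hours = orbital_period_hours(altitude)
        print(f"{name:>16}: {altitude:>6,} km -> {hours:7.1f} h ({hours / 24:.2f} days)")
```

On these assumptions the sketch gives periods of roughly two weeks, about three days, and between five and six hours for the three planned orbits.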

This NASA news release describes the ‘hybrid’ mode — a combination of reaction wheels and thrusters — that controllers will use to point the spacecraft during this close, low-altitude mapping orbit. Dawn has been using hydrazine thruster jets for orientation and pointing, but using two of the spacecraft’s reaction wheels, which are gyroscope-like devices that can be spun up on demand, will help to conserve hydrazine. Bear in mind that two of the four reaction wheels aboard the spacecraft failed after Dawn left Vesta in 2012, but the hybrid mode using just two of the reaction wheels has now been thoroughly tested.

As we continue the long journey to Ceres, you’ll want to keep an eye on Dawn chief engineer Marc Rayman’s Dawn Journal, which offers regular updates on the mission’s progress. Here’s Rayman’s take on Dawn’s early imaging of Ceres, beginning just over a year from now:

Starting in early February 2015, Dawn will suspend thrusting occasionally to point its camera at Ceres. The first time will be on Feb. 2, when they are 260,000 miles (420,000 kilometers) apart. To the camera’s eye, designed principally for mapping from a close orbit and not for long-range observations, Ceres will appear quite small, only about 24 pixels across. But these pictures of a fuzzy little patch will be invaluable for our celestial navigators. Such “optical navigation” images will show the location of Ceres with respect to background stars, thereby helping to pin down where it and the approaching robot are relative to each other. This provides a powerful enhancement to the navigation, which generally relies on radio signals exchanged between Dawn and Earth. Each of the 10 times Dawn observes Ceres during the approach phase will help navigators refine the probe’s course, so they can update the ion thrust profile to pilot the ship smoothly to its intended orbit.

Image: NASA’s Hubble Space Telescope color image of Ceres, the largest object in the asteroid belt. Astronomers optimized spatial resolution to about 18 km per pixel and enhanced the contrast to bring out surface features that are both brighter and darker than the average surface, which absorbs 91% of the sunlight falling on it. Credit: NASA, ESA, J. Parker (Southwest Research Institute), P. Thomas (Cornell University), and L. McFadden (University of Maryland, College Park).

Dawn’s view of Ceres by February 11 should be marginally better, says Rayman, than the sharpest views we’ve captured with Hubble, as seen above. Soon after that we’ll be seeing numerous features we’ve never known about before, and perhaps even small moons. Yet another indistinct sphere in the night sky will have been resolved into a sharply imaged object, while New Horizons continues its approach to Pluto/Charon. Even as we begin 2014 we can say with confidence that its successor will be an extremely interesting year.
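Rayman’s figures also allow a rough check on the Hubble comparison. If Ceres spans about 24 pixels at 420,000 kilometers, each camera pixel covers a fixed small angle, so ground resolution scales linearly with range. The sketch assumes a mean diameter for Ceres of about 940 km (not given in the post) and uses the small-angle approximation; it is an estimate, not the mission team’s own calculation.

```python
# Figures taken from the post (Rayman's Dawn Journal and the Hubble caption).
FIRST_LOOK_RANGE_KM = 420_000.0   # Dawn-Ceres distance on Feb. 2, 2015
FIRST_LOOK_PIXELS = 24.0          # Ceres' expected width in the image, in pixels
HUBBLE_KM_PER_PIXEL = 18.0        # resolution of the Hubble image

# Assumption (not in the post): Ceres' mean diameter, in km.
CERES_DIAMETER_KM = 940.0

def pixel_angle_rad(diameter_km: float, pixels_across: float, range_km: float) -> float:
    """Angle covered by one pixel, inferred with the small-angle approximation."""
    return (diameter_km / pixels_across) / range_km

def km_per_pixel(range_km: float, pixel_rad: float) -> float:
    """Distance across Ceres covered by one pixel at a given range."""
    return range_km * pixel_rad

def range_for_resolution(target_km_per_pixel: float, pixel_rad: float) -> float:
    """Range at which one pixel spans target_km_per_pixel."""
    return target_km_per_pixel / pixel_rad

if __name__ == "__main__":
    ifov = pixel_angle_rad(CERES_DIAMETER_KM, FIRST_LOOK_PIXELS, FIRST_LOOK_RANGE_KM)
    print(f"implied pixel angle: {ifov * 1e6:.0f} microradians")
    print(f"scale on Feb. 2: {km_per_pixel(FIRST_LOOK_RANGE_KM, ifov):.0f} km per pixel")
    print(f"range to match Hubble: {range_for_resolution(HUBBLE_KM_PER_PIXEL, ifov):,.0f} km")
```

With those inputs, each pixel covers about 39 km on February 2, and the camera would match Hubble’s 18 km per pixel at a range of a little under 200,000 km.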

December 31, 2013

Lasers in our Future

Best wishes for the New Year! I got a resigned chuckle — not a very mirthful one, to be sure — out of a recent email from Adam Crowl, who wrote: “Look at that date! Who imagined we’d still be stuck in LEO in 2014???” Indeed. It’s hard to imagine there really was a time when the ‘schedule’ set by 2001: A Space Odyssey seemed about right. Mars at some point in the 80’s, and Jupiter by the turn of the century, a steady progression outward that, of course, never happened. The interstellar community hopes eventually to reawaken those dreams.

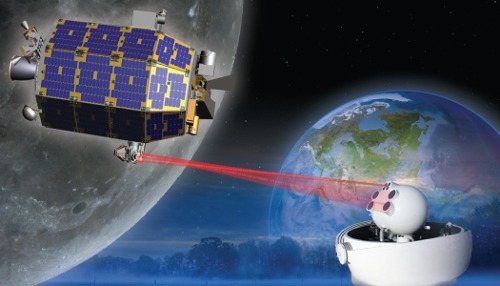

Yesterday’s post on laser communications makes the point as well as any that incremental progress is being made, even if at an often frustrating pace. We need laser capabilities to take the burden off a heavily overloaded Deep Space Network and to drastically improve our data transfer and networking capabilities in space. The Lunar Laser Communication Demonstration (LLCD) equipment aboard the LADEE spacecraft transmitted data from lunar orbit to Earth at 622 megabits per second (Mbps) in October, a download rate six times faster than any radio system previously flown to the Moon. It was an extremely encouraging outcome.

“These first results have far exceeded our expectation,” said Don Cornwell, LLCD manager. “Just imagine the ability to transmit huge amounts of data that would take days in a matter of minutes. We believe laser-based communications is the next paradigm shift in future space communications.”
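To put the 622 Mbps figure in perspective, here is an idealised transfer-time comparison. The radio rate is simply inferred from the ‘six times faster’ claim, and the 100 GB data volume is a hypothetical example; the sketch ignores protocol overhead, error-correction coding and gaps in coverage.

```python
LASER_MBPS = 622.0            # LLCD downlink rate quoted in the post
RADIO_MBPS = LASER_MBPS / 6   # implied by "six times faster"; not a quoted figure

def transfer_time_s(data_bytes: float, rate_mbps: float) -> float:
    """Idealised transfer time: no protocol overhead, coding, or link outages."""
    return data_bytes * 8 / (rate_mbps * 1e6)

if __name__ == "__main__":
    data_bytes = 100e9  # hypothetical 100 GB data volume, chosen for illustration
    for name, rate in [("laser (LLCD)", LASER_MBPS), ("best lunar radio", RADIO_MBPS)]:
        minutes = transfer_time_s(data_bytes, rate) / 60
        print(f"{name:>16}: {rate:6.1f} Mbps -> {minutes:6.1f} minutes")
```

On those assumptions, the 100 GB set takes about 21 minutes over the laser link versus a little over two hours at the implied radio rate.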

LLCD is actually an overall name for the ground- and space-based components of this laser experiment. What’s aboard the LADEE spacecraft is the Lunar Laser Space Terminal (LLST), which communicates with a Lunar Laser Ground Terminal (LLGT) located in White Sands, New Mexico, a joint project developed by MIT and NASA. There are also two secondary terminals, one at the European Space Agency’s La Teide Observatory (Tenerife), the other at JPL’s Table Mountain Facility in California, where previous laser experiments like GOLD (the Ground-to-Orbit Laser Communication Demonstration) have taken place.

The laser link between LLCD and ground stations on Earth is the longest two-way laser communication ever demonstrated, and a step toward the next generation of communications capability we’ll need as we explore the Solar System. Imagine data rates a hundred times faster than radio can provide, at just half the power of radio and taking up far less space aboard the vehicle. Improvements in image resolution and true moving video would radically improve our view of planetary targets.
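If the ‘hundred times faster at half the power’ comparison is taken at face value for the same link, the implication for energy per bit follows directly, with P the transmitter power and R the data rate. This is an idealised ratio that ignores pointing, coding and terminal overheads:

```latex
\frac{E_{\text{bit,laser}}}{E_{\text{bit,radio}}}
  = \frac{P_{\text{laser}} / R_{\text{laser}}}{P_{\text{radio}} / R_{\text{radio}}}
  = \frac{0.5\,P_{\text{radio}} / \left(100\,R_{\text{radio}}\right)}{P_{\text{radio}} / R_{\text{radio}}}
  = \frac{0.5}{100} = 0.005
```

That is, roughly 1/200 of the energy per delivered bit under those assumptions.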

Laser methods are proving as workable as we had hoped. The LLCD demonstrated error-free communications during daylight, and could operate when the Moon was within three degrees of the Sun as seen from Earth. Communications were also possible when the Moon was less than four degrees from the horizon as seen from the ground station, and were successful even through thin layers of cloud, which NASA describes as ‘an unexpected bonus.’ A final plus: The demonstrated ability to hand off the laser connection from one ground station to another.

The scientific benefits of lasers are tangible, but they’re matched by what could be a rise in public engagement with space if we can produce a networked infrastructure in which video plays a major role. Immersive gaming systems bring to mind rovers sending back high-resolution video from exotic places like Titan or Callisto. Is this one way to rekindle the passion for exploration that sometimes seems to have died with Apollo? Robotic missions lack the immediacy and glamor of human crews, but high bandwidth may help take up the slack, perhaps building momentum for later crewed missions to many of the same targets.

Up next is the Laser Communications Relay Demonstration (LCRD), which recently passed a preliminary design review. LCRD is to be a long-duration optical mission that will refine optical relay services over a two-year period aboard a commercial satellite built by Space Systems Loral. We’re in the transitional period between demonstrators and reliable flight hardware. After its 2017 launch, LCRD will be positioned above the equator to carry that process forward.
