Martin Fowler's Blog, page 5

June 22, 2021

Versioned Value

When a distributed system has mutable data, nodes need to know which is the most recent value, so a versioned value stores a version number with every value.
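A minimal sketch of the idea, assuming a hypothetical in-memory `VersionedStore` (the class and method names are illustrative, not taken from the pattern's write-up): every write is stamped with the next version number, so a reader can ask for the latest value or for the value as of an earlier version.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class VersionedStore:
    """Hypothetical store that keeps a version number with every value."""
    _history: Dict[str, List[Tuple[int, str]]] = field(default_factory=dict)
    _next_version: int = 1

    def put(self, key: str, value: str) -> int:
        # Stamp the write with a monotonically increasing version.
        version = self._next_version
        self._next_version += 1
        self._history.setdefault(key, []).append((version, value))
        return version

    def get(self, key: str, at_version: Optional[int] = None) -> Optional[Tuple[int, str]]:
        """Return (version, value) for the newest entry at or before at_version."""
        for version, value in reversed(self._history.get(key, [])):
            if at_version is None or version <= at_version:
                return version, value
        return None


store = VersionedStore()
v1 = store.put("title", "draft")
v2 = store.put("title", "final")
latest = store.get("title")                 # newest value wins
older = store.get("title", at_version=v1)   # read as of the first write
```

Because each value carries its version, two nodes comparing copies can tell which one is more recent by comparing the numbers alone.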

June 17, 2021

Gossip Dissemination

Unmesh Joshi is completing another batch of his series on Patterns of Distributed Systems. The first of these is Gossip Dissemination, which uses a random selection of nodes to pass on information to ensure it reaches all the nodes in the cluster without flooding the network.
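A rough single-process simulation of the random-selection idea, under stated assumptions: push-style gossip, nodes identified by integers, and hypothetical `fanout` and `seed` parameters. It only counts rounds until every node has the information; a real implementation would exchange messages over the network.

```python
import random
from typing import Set


def gossip_rounds(node_count: int, fanout: int = 1, seed: int = 42) -> int:
    """Simulate push gossip; return the rounds needed to reach every node."""
    rng = random.Random(seed)
    informed: Set[int] = {0}  # node 0 starts with the new information
    rounds = 0
    while len(informed) < node_count:
        newly_informed: Set[int] = set()
        for node in informed:
            # Each informed node picks `fanout` random peers, not the whole cluster.
            peers = [n for n in range(node_count) if n != node]
            newly_informed.update(rng.sample(peers, min(fanout, len(peers))))
        informed |= newly_informed
        rounds += 1
    return rounds


rounds_for_20_nodes = gossip_rounds(20)
```

With a fanout of 1 the informed set can at most double each round, so each node sends only one message per round rather than broadcasting to everyone.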

June 2, 2021

On the Diverse And Fantastical Shapes of Testing

There are arguments about whether a testing portfolio should be a pyramid or more like a honeycomb. My second biggest issue with this argument is that it's rendered opaque by the fact that it's not clear what people see as the difference between unit and integration tests.

April 27, 2021

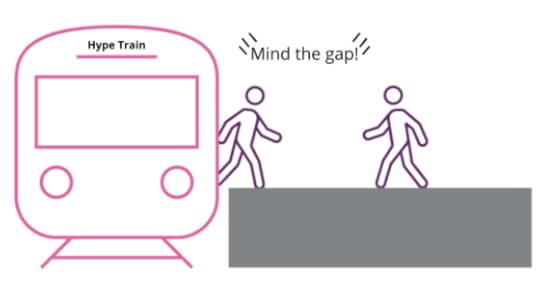

Mind the platform execution gap

Recently there's been a lot of interest, indeed hype, around building developer productivity platforms. Done well, they make it easier for developers to build systems aligned with the technology strategy and allow them to build useful features more quickly. However, many organizations struggle because in order to do a good job of platforms, you need to have a number of baseline capabilities in place first. That way the platform can be designed and operated as if it were a software product with a demanding public customer-base.

April 7, 2021

Bitemporal History

It's often necessary to access the historical values of some property. But sometimes this history itself needs to be modified in response to retroactive updates. Bitemporal history treats time as two dimensions: actual history records what history should be given perfect transmission of information, while record history captures how our knowledge of history changes.
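A simplified sketch of the two time dimensions, assuming a hypothetical `BitemporalProperty` class and made-up salary figures. Each fact carries both the date it took effect (actual history) and the date we learned of it (record history). This model only handles additive retroactive updates, not deletions or corrections of earlier records.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Fact:
    value: int
    actual_from: date   # when the value took effect in the real world
    recorded_on: date   # when we learned about it


class BitemporalProperty:
    def __init__(self) -> None:
        self._facts: List[Fact] = []

    def record(self, value: int, actual_from: date, recorded_on: date) -> None:
        self._facts.append(Fact(value, actual_from, recorded_on))

    def value_at(self, actual: date, known_on: date) -> Optional[int]:
        """Value in effect on `actual`, using only what was recorded by `known_on`."""
        candidates = [
            f for f in self._facts
            if f.recorded_on <= known_on and f.actual_from <= actual
        ]
        if not candidates:
            return None
        # Latest effective date wins; on ties, the most recent record wins.
        return max(candidates, key=lambda f: (f.actual_from, f.recorded_on)).value


salary = BitemporalProperty()
salary.record(6000, actual_from=date(2021, 1, 1), recorded_on=date(2021, 1, 1))
# A retroactive raise: effective Feb 15, but only recorded on Mar 15.
salary.record(6500, actual_from=date(2021, 2, 15), recorded_on=date(2021, 3, 15))

as_known_in_february = salary.value_at(date(2021, 2, 25), known_on=date(2021, 2, 25))
as_known_in_march = salary.value_at(date(2021, 2, 25), known_on=date(2021, 3, 15))
```

The same question about Feb 25 gets two answers, 6000 and then 6500, depending on which point in record history we ask from.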

January 28, 2021

Bliki: RefinementCodeReview

When people think of code reviews, they usually think in terms of an explicit step in a development team's workflow. These days the Pre-Integration Review, carried out on a Pull Request, is the most common mechanism for a code review, to the point that many people witlessly consider that not using pull requests removes all opportunities for doing code review. Such a narrow view of code reviews doesn't just ignore a host of explicit mechanisms for review, it more importantly neglects probably the most powerful code review technique - that of perpetual refinement done by the entire team.

One of the most pervasive perspectives in software is the notion that it's something we build and complete - hence the endless metaphor of building construction and architecture. Yet the key property of software is that it is soft, and can be as easily modified after it's released as it was when initially composed in the programmer's editor. That's why Erik Dörnenburg wisely argues that architecture is a poor metaphor and would be better replaced by town planning. Valuable software is usually in a constant state of change, as we add features from a better understanding of the value it can bring. But the opportunity is not just to add new features, but also to refine that software - incorporating the lessons the team steadily learns about how best that software can enable these changes.

With the right environment, I can look at a bit of code written six months ago, see some problems with how it's written, and quickly fix them. This may be because this code was flawed when it was written, or that changes in the code base since led to the code no longer being quite right. Whichever the cause, the important thing is to fix problems as soon as they start getting in our way. As soon as I have an understanding about the code that wasn't immediately apparent from reading it, I have the responsibility to (as Ward Cunningham so wonderfully said) take that understanding out of my head and put it into the code. That way the next reader won't have to work so hard.

This process of refinement is exactly the same as what happens in a code review, but it's triggered each time the code is looked at rather than when the code is added to the codebase. This was, for me, a crucial insight. After all, many problems that code reviews seek to remedy are problems that only become problems when the code is read in the future. There's a strong argument for not worrying about them until then. After all, just like adding a large apartment complex changes traffic patterns, we may have altered the context of the code six months later, altering the kind of fix that code needs. It also involves more people: in this scheme every developer who reads the code is a reviewer, and one who's able to review based on their actual use of the code rather than on some general, but often hazily-justified, guidelines.

A way to think about the validity of a practice is by thinking about what happens if it's a monopoly. What if the only code review mechanism we have is the iteration from later programmers? One consequence is that the review attention gets concentrated on the areas of code that are read more often - which is mostly the areas that ought to get the attention. One concern is that code that's never read will never get reviewed - but mostly that's fine. A team with good testing practices can be confident that the code works, and performance tests can identify performance issues. Given that, if the code never needs to be looked at again, we don't need to spend effort on making it comprehensible. I'd expect such cases to be vanishingly rare, but it's an informative thought experiment.

But most ≠ all. One obvious exception here is security issues. Code can work just fine for years until an attacker finds an exploit; at that point we'll lament its lack of review. This is an example of high-impact but rare safety concerns which deserve special scrutiny. However, that doesn't mean we shouldn't make conscious use of refinement as a code review mechanism. Instead, it means we should be aware of rare-high-impact concerns and adjust our workflow to watch for that kind of specific problem to the degree that it's needed in our circumstances. Threat analysis should alert us to the modules that need additional attention and the kinds of risks they face. Targeted code reviews might be scheduled for security concerns; these can run more effectively because they are focused on a specific kind of problem.

In order to do this perpetual code refinement we require other practices. If I'm going to change code I need to have confidence that it won't break existing functionality, so I need something like Self Testing Code. I need to know that it won't cause big merge conflicts for others, so I need Continuous Integration. We all need to be good at refactoring so we can change code effectively. Since this relies on many developers being expected to modify any part of the code base, we are best off with collective (or at least weak) code ownership. But given a team that has these skills, they can rely on using their regular refinement as a substantial part of their code review strategy.

If nothing else, I think it's important that we put more thought into the role of refinement as code review. One of the dangers of focusing solely on Pre-Integration Reviews is that it can lead teams to neglect how change works in a code base. If I have a pristine mainline, and ensure that every commit merged into that mainline is pristine - can I be sure that the codebase is still pristine after six months? I'd argue that I can't, because the changes mean a good decision about some code six months ago is no longer a good decision now. Refining the code allows us to evaluate old code against this changing usage, allowing us to sustain its health.

Acknowledgements

Ben Noble, Chris Ford, Evan Bottcher, Ian Cartwright, Jeremy Huiskamp, Ken Mugrage, Mario Giampietri, Martha Rohte, Omar Bashir, Peter Gillard-Moss, and Simon Brunning commented on drafts of this post on our internal mailing list.

Bliki: PullRequest

Pull Requests are a mechanism popularized by GitHub, used to help facilitate merging of work, particularly in the context of open-source projects. A contributor works on their contribution in a fork (clone) of the central repository. Once their contribution is finished they create a pull request to notify the owner of the central repository that their work is ready to be merged into the mainline. Tooling supports and encourages code review of the contribution before accepting the request. Pull requests have become widely used in software development, but critics are concerned by the addition of integration friction which can prevent continuous integration.

Pull requests essentially provide convenient tooling for a development workflow that existed in many open-source projects, particularly those using a distributed source-control system (such as git). This workflow begins with a contributor creating a new logical branch, either by starting a new branch in the central repository, cloning into a personal repository, or both. The contributor then works on that branch, typically in the style of a Feature Branch, pulling any updates from Mainline into their branch. When they are done they communicate with the maintainer of the central repository indicating that they are done, together with a reference to their commits. This reference could be the URL of a branch that needs to be integrated, or a set of patches in an email.

Once the maintainer gets the message, she can then examine the commits to decide if they are ready to go into mainline. If not, she can then suggest changes to the contributor, who then has the opportunity to adjust their submission. Once all is ok, the maintainer can then merge, either with a regular merge/rebase or by applying the patches from the final email.

GitHub's pull request mechanism makes this flow much easier. It keeps track of the clones through its fork mechanism, and automatically creates a message thread to discuss the pull request, together with behavior to handle the various steps in the review workflow. These conveniences were a major part of what made GitHub successful and led to "pull request" becoming a fundamental part of the developer's lexicon.

So that's how pull requests work, but should we use them, and if so how? To answer that question, I like to step back from the mechanism and think about how it works in the context of a source code management workflow. To help me think about that, I wrote down a series of patterns for managing source code branching. I find understanding these (specifically the Base and Integration patterns) clarifies the role of pull requests.

In terms of these patterns, pull requests are a mechanism designed to implement a combination of Feature Branching and Pre-Integration Reviews. Thus to assess the usefulness of pull requests we first need to consider how applicable those patterns are to our situation. Like most patterns, they are sometimes valuable, and sometimes a pain in the neck - we have to examine them based on our specific context. Feature Branching is a good way of packaging together a logical contribution so that it can be assessed, accepted, or deferred as a single unit. This makes a lot of sense when contributors are not trusted to commit directly to mainline. But Feature Branching comes at a cost, which is that it usually limits the frequency of integration, leading to complicated merges and deterring refactoring. Pre-Integration Reviews provide a clear place to do code review at the cost of a significant increase in integration friction. [1]

That's a drastic summary of the situation (I need a lot more words to explain this further in the feature branching article), but it boils down to the fact that the value of these patterns, and thus the value of pull requests, rests mostly on the social structure of the team. Some teams work better with pull requests, some teams would find pull requests a severe drag on their effectiveness. I suspect that since pull requests are so popular, a lot of teams are using them by default when they would do better without them.

While pull requests are built for Feature Branches, teams can use them within a Continuous Integration environment. To do this they need to ensure that pull requests are small enough, and the team responsive enough, to follow the CI rule of thumb that everybody does Mainline Integration at least daily. (And I should remind everyone that Mainline Integration is more than just merging the current mainline into the feature branch).

The wide usage of pull requests has encouraged a wider use of code review, since pull requests provide a clear point for Pre-Integration Review, together with tooling that encourages it. Code review is a Good Thing, but we must remember that a pull request isn't the only mechanism we can use for it. Many teams find great value in the continuous review afforded by Pair Programming. To avoid reducing integration frequency we can carry out post-integration code review in several ways. A formal process can record a review for each commit, or a tech lead can examine risky commits every couple of days. Perhaps the most powerful form of code review is one that's frequently ignored. A team that takes the attitude that the codebase is a fluid system, one that can be steadily refined with repeated iteration, carries out Refinement Code Review every time a developer looks at existing code. I often hear people say that pull requests are necessary because without them you can't do code reviews - that's rubbish. Pre-integration code review is just one way to do code reviews, and for many teams it isn't the best choice.

Acknowledgements

Chris Ford, Dan Mutton, Jeremy Huiskamp, Kief Morris, Pramod Sadalage, and Ryan Boucher commented on drafts of this post on our internal mailing list.

Notes

1: A colleague of mine recently calculated the time a client spent waiting for pull requests that had no comments (true of 91% of them). Total time waiting in 2020 for 7000 PRs was 130,000 hours. This figure included time elapsed over nights and weekends.

January 26, 2021

Distributed Systems Pattern: Idempotent Receiver

Clients send requests to servers but might not get a response. It's impossible for clients to know if the response was lost or the server crashed before processing the request. To make sure that the request is processed, the client has to re-send the request. If the server had already processed the request and crashed after that, servers will get duplicate requests when the client retries.
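One way to make such retries safe, sketched under assumptions: the client tags each request with its own id and a request number, and the server remembers the response it gave. The class `IdempotentCounterServer` and its `add` method are hypothetical names for illustration, and the response table here is kept in memory only, so it would not itself survive a crash.

```python
from typing import Dict, Tuple


class IdempotentCounterServer:
    """Hypothetical server that applies each (client, request number) once."""

    def __init__(self) -> None:
        self.total = 0
        # Saved responses, keyed by (client_id, request_number).
        self._responses: Dict[Tuple[str, int], int] = {}

    def add(self, client_id: str, request_number: int, amount: int) -> int:
        key = (client_id, request_number)
        if key in self._responses:
            # Duplicate from a retry: replay the saved response, do not re-apply.
            return self._responses[key]
        self.total += amount
        self._responses[key] = self.total
        return self.total


server = IdempotentCounterServer()
first = server.add("client-1", 1, 10)
retry = server.add("client-1", 1, 10)   # client re-sends after a lost response
print(first, retry, server.total)        # prints: 10 10 10
```

The retry gets the same answer as the original request, and the amount is added only once.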

Maximizing Developer Effectiveness: Organizational Effectiveness

Tim finishes his article by looking at how highly effective organizations design their engineering organization to optimize for effectiveness and feedback loops. He illustrates what this looks like by the example of Etsy, who actively measures their ability to put valuable products into production quickly and safely, adjusting their technical investments to fix any blockers or slowness.

January 19, 2021

Distributed Systems Pattern: State Watch

Clients are interested in changes to the specific values on the server. It's difficult for clients to structure their logic if they need to poll the server continuously to look for changes. If clients open too many connections to the server for watching changes, it can overwhelm the server.
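A small in-process sketch of the alternative to polling, assuming a hypothetical `WatchableStore` where clients register a callback for a specific key and the server pushes a notification only when that key's value changes. In a real system the callback would be an event sent over an existing connection.

```python
from typing import Callable, Dict, List

Watcher = Callable[[str, str], None]


class WatchableStore:
    """Hypothetical key-value store that notifies watchers of changes."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self._watchers: Dict[str, List[Watcher]] = {}

    def watch(self, key: str, watcher: Watcher) -> None:
        # Register interest in one specific key.
        self._watchers.setdefault(key, []).append(watcher)

    def put(self, key: str, value: str) -> None:
        changed = self._data.get(key) != value
        self._data[key] = value
        if changed:
            for watcher in self._watchers.get(key, []):
                watcher(key, value)


events: List[str] = []
store = WatchableStore()
store.watch("leader", lambda key, value: events.append(f"{key}={value}"))
store.put("leader", "node-1")   # watched key changes: client is notified
store.put("leader", "node-1")   # same value again: no notification
store.put("config", "v2")       # nobody watches this key
print(events)                   # prints: ['leader=node-1']
```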
