Peter Smith's Blog, page 27

November 30, 2022

Adrian Moore, on Gödel’s Theorem, briefly

There has just been published another in the often splendid OUP series of “Very Short Introductions”: this time, it’s the Oxford philosopher Adrian Moore, writing on Gödel’s Theorem. I thought I should take a look.

This little book is not aimed at the likely readers of this blog. But you could safely place it in the hands of a bright high-school maths student, or a not-very-logically-ept philosophy undergraduate, and they should find it intriguing and perhaps reasonably accessible, and they won’t be led (too far) astray. Which is a lot more than can be said for some other attempts to present the incompleteness theorems to a general reader.

I do like the way that Moore sets things up at the beginning of the book, explaining in a general way what a version of Gödel’s (first) theorem shows and why it matters — and, equally importantly, fending off some initial misunderstandings.

Then I like the way that Moore first gives the proof that he and I both learnt very long since from Timothy Smiley, where you show that (1) a consistent, negation-complete, effectively axiomatized theory is decidable, and (2) a consistent, sufficiently strong, effectively axiomatized theory is not decidable, and conclude (3) a consistent, sufficiently strong, effectively axiomatized theory can’t be complete. Here, being “sufficiently strong” is a matter of the theory’s proving enough arithmetic (being able to evaluate computable functions). Moore also gives the close relation of this proof which, instead of applying to theories which prove enough (a syntactic condition), applies to theories which express enough arithmetical truths (a semantic condition). That’s nice. I only presented the syntactic version early in IGT and GWT and (given that I elsewhere stress that proofs of incompleteness come in two flavours, depending on whether we make semantic or proof-theoretic assumptions) maybe I should have explicitly spelt out the semantic version too.
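Step (1) of the Smiley-style argument can be pictured as a search procedure. What follows is only a toy sketch under loud assumptions: the "theory" is a made-up one about the parity of numbers, and the names `negate`, `toy_theorems` and `decide` are hypothetical, not taken from Moore's book or from IGT/GWT. The point is just the shape of the reasoning: if the theorems can be effectively enumerated, and for each sentence either it or its negation eventually turns up (negation-completeness) but never both (consistency), then running through the enumeration settles theoremhood.

```python
from itertools import count


def negate(sentence):
    """Negate a toy sentence, identifying a double negation with the original."""
    return sentence[1] if sentence[0] == "not" else ("not", sentence)


def toy_theorems():
    """Effectively enumerate the theorems of a toy theory of parity.

    Assumption for illustration: the only sentences are ("even", n) and
    their negations, and the theory proves exactly the true ones.
    """
    for n in count():
        atom = ("even", n)
        yield atom if n % 2 == 0 else negate(atom)


def decide(sentence, theorems):
    """Return True if `sentence` is a theorem, False if its negation is.

    The loop halts because the theory is negation-complete (one of the two
    must appear), and the answer is right because the theory is consistent
    (they cannot both appear).
    """
    refutation = negate(sentence)
    for theorem in theorems():
        if theorem == sentence:
            return True
        if theorem == refutation:
            return False


print(decide(("even", 4), toy_theorems))
print(decide(("even", 7), toy_theorems))
```

Drop negation-completeness and the same loop may run forever on a sentence the theory leaves undecided, which is why the argument needs that assumption.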

Moore then goes on to outline a proof involving the Gödelian construction of a sentence for PA which “says” it is unprovable in PA, and then generalizes from PA. (Oddly, he remarks that “the main proof in Gödel’s article … showed that no theory can be sufficiently strong, sound, complete and axiomatizable”, which is misleading because Gödel in 1931 didn’t have the notion of sufficient strength available, and arguably also misleading about the role of semantics, even granted the link between $\Sigma_1$-soundness and $\omega$-consistency, given the importance that Gödel attached to avoiding dependence on semantic notions.) Moore then explains the second theorem reasonably enough.

The last part of the book touches on some more philosophical reflections. Moore briefly discusses Hilbert’s Programme (I’m not sure he has the measure of this) and the Lucas-Penrose argument (perhaps forgivably pretty unclear); and the book finishes with some limply Wittgensteinean remarks about how we understand arithmetic despite the lack of a complete axiomatization. I can’t myself get enthused by these final sections — though I suppose that if they spur the intended reader to get puzzled and interested in the topics, they will have served a good purpose.

My main trouble with the book, however, is with Moore’s presentational style when it comes to the core technicalities. He doesn’t have the gift for mathematical exposition. Yes, all credit for trying to get over the key ideas in a non-scary way. But I, for one, find his somewhat conversational mode of proceeding doesn’t work that well. I suspect that for many, a more conventionally crisp mathematical mode of presentation at the crucial stages, nicely glossed with accompanying explanations, could actually promote greater understanding. Though don’t let that reaction stop you trying the book out on some suitable potential reader, next time you are asked what logicians get up to!

The post Adrian Moore, on Gödel’s Theorem, briefly appeared first on Logic Matters.

November 29, 2022

Avigad, MLC — 3: are domains sets?

The next two chapters of MLC are on the syntax and proof systems for FOL (in three flavours again: minimal, intuitionistic, and classical) and then on semantics and a smidgin of model theory. Again, things proceed at pace, and ideas come thick and fast.

One tiny stylistic improvement which could have helped the reader (ok, this reader) would have been to chunk up the sections into subsections, e.g. by simply marking the start of a new subsection/new subtheme by leaving a blank line and beginning the next paragraph “(a)”, “(b)”, etc. A number of times, I found myself re-reading the start of a new para to check whether it was supposed to flow on from the previous thought. Yes, yes, this is of course a minor thing! But readers of dense texts like this need all the help we can get!

So in a bit more detail, how do Chapters 4 and 5 proceed? Broadly following the pattern of the two chapters on PL, in §4.1 we find a brisk presentation of FOL syntax (in the standard form, with no syntactic distinction made between variables-as-bound-by-quantifiers and variables-standing-freely). Officially, recall, wffs that result from relabelling bound variables are identified. But this seems to make little difference: I’m not sure what the gain is.

§4.2 presents axiomatic and ND proof systems for the quantifiers, adding to the systems for PL in the standard ways. §4.3 deals with identity/equality and says something about the “equational fragment” of FOL. §4.4 says more than usual about equational and quantifier-free subsystems of FOL, noting some (un)decidability results. §4.5 briefly touches on prenex normal form. §4.6 picks up the topic (dealt with in much more detail than usual) of translations between minimal, intuitionist, and classical logic. §4.7 is titled “Definite Descriptions” but isn’t as you might expect about how to add a description operator, a Russellian iota, but rather about how, when we can prove $\forall x\,\exists! y\,\varphi(x, y)$, we can add a function symbol $f$ such that $f(x) = y$ holds when $\varphi(x, y)$, and all goes as we’d hope. Finally, §4.8 treats two topics: first, how to mock up sorted quantifiers in single-sorted FOL; and second, how to augment our logic to deal with partially defined terms. That last subsection is very brisk: if you are going to treat any varieties of free logic (and I’m all for that in a book at this level, with this breadth) there’s more worth saying.

Then, turning to semantics, §5.1 is the predictable story about full classical logic with identity, crisply done, with soundness and completeness theorems proved. §5.2 tells us more about equational and quantifier-free logics. §5.3 extends Kripke semantics to deal with quantified intuitionistic logic. We then get algebraic semantics for classical and intuitionistic logic in §5.4 (so, as before, A is casting his net more widely than usual — though the treatment of the intuitionistic case is indeed pretty compressed). The chapter finishes with a fast-moving 10 pages giving us two sections on model theory. §5.5 deals with some (un)definability results, and talks briefly about non-standard models of true arithmetic. §5.6 gives us the L-S theorems and some results about axiomatizability. So that’s a lot packed into this chapter. And at a sophisticated level too — it is telling that A’s note at the end of the chapter gives Peter Johnstone’s book on Stone Spaces as a “good reference” for one of the constructions!

What more is there to say? I’m enjoying polishing off some patches of rust! But as is probably already clear, these initial chapters are pitched at what many student readers will surely find a pretty demanding level, unless they bring quite a bit to the party. That’s not a criticism, I’m just locating the book on the spectrum of texts. I’d have slowed down the exposition a bit at a number of points, but that (as I said in the last post) is a judgement call, and it would be unexciting to go into details here.

One general comment, though. I note that A does define a model for a FOL language as having a set for quantifiers to range over, but with a function (of the right arity) over that set as interpretation for each function symbol, and a relation (of the right arity) over that set as interpretation for each relation symbol. My attention might have flickered, but A seems happy to treat functions and relations as they come, not explicitly trading them in for set-theoretic surrogates (sets of ordered tuples). But then it is interesting to ask — if we treat functions and relations as they come, without going in for a set-theoretic story, then why not treat the quantifiers as they come, as running over some objects plural? That way we can interpret e.g. the first-order language of set theory (whose quantifiers run over more than set-many objects) without wriggling.

A does nicely downplay the unnecessary invocation of sets — though not consistently. E.g. he later falls into saying “the set of primitive recursive functions is the set of functions [defined thus and so]” when he could equally well have said, simply, “the primitive recursive functions are the functions [defined thus and so]”. I’d go for consistently avoiding unnecessary set talk from the off — thus making it much easier for the beginner at serious logic to see when set theory starts doing some real work for us. Three cheers for sets: but in their proper place!

The post Avigad, MLC — 3: are domains sets? appeared first on Logic Matters.

November 28, 2022

Avigad, MLC — 2: And what is PL all about?

After the chapter of preliminaries, there are two chapters on propositional logic (substantial chapters too, some fifty-five large format pages between them, and they range much more widely than the usual sort of introductions to PL in math logic books).

The general approach of MLC foregrounds syntax and proof theory. So these two chapters start with §2.1 quickly reviewing the syntax of the language of PL (with $\bot$ as basic, so negation has to be defined by treating $\neg A$ as $A \to \bot$). §2.2 presents a Hilbert-style axiomatic deductive system for minimal logic, which is augmented to give systems for intuitionist and classical PL. §2.3 says more about the provability relations for the three logics (initially defined in terms of the existence of a derivation in the relevant Hilbert-style system). §2.4 then introduces natural deduction systems for the same three logics, and outlines proofs that we can redefine the same provability relations as before in terms of the availability of natural deductions. §2.5 notes some validities in the three logics and §2.6 is on normal forms in classical logic. §2.7 then considers translations between logics, e.g. the Gödel-Gentzen double-negation translation between intuitionist and classical logic.

As you’d expect, this is all technically just fine. Now, A says in his Preface that “readers who have had a prior introduction to logic will be able to navigate the material here more quickly and comfortably”. But I suspect that in fact some prior knowledge will be pretty essential if you are really going to get much out of the discussions here. To be sure, the point of the exercise isn’t (for example) to get the reader to be a whizz at knocking off complex Gentzen-style natural deduction proofs; but are there quite enough worked examples for the real newbie to get a good feel for the claimed naturalness of such proofs? Is a single illustration of a Fitch-style alternative helpful? I’m doubtful.

And actually, even if you do have some prior logical knowledge, I’m pretty sure that the chapter’s final §2.8 — which takes a very brisk look at other sorts of deductive system, and issues about decision procedures — goes too fast to be likely to carry the majority of readers along.

To continue, Chapter 3 is on semantics. We get the standard two-valued semantics for classical PL, along with soundness and completeness proofs, in §3.1. Then we get interpretations in Boolean algebras in §3.2. Next, §3.3 introduces Kripke semantics for intuitionistic (and minimal) logic: as I said, A is indeed casting his net more widely than usual in introducing PL. §3.4 gives algebraic and topological interpretations for intuitionistic logic. And the chapter ends with §3.5, ‘Variations’, introducing what A calls generalised Beth semantics.

Again I really do wonder about the compression and speed of some of the episodes in this chapter; certainly, those new to logic could find them very hard going. Filters and ultrafilters in Boolean algebras are dealt with at speed; some more examples of Kripke semantics at work might have helped to fix ideas; Heyting semantics is again dealt with at speed. And §3.5 will (surely?) be found pretty challenging.

Still, I think that for someone coming to MLC who already does have enough logical background (perhaps half-baked, perhaps rather fragmentary) and who is mathematically adept enough, these chapters — perhaps initially minus their last sections — should bring a range of technical material into a nicely organised story in a helpful way, giving a good basis for pressing on through the book.

For me, the interest here in the early chapters of MLC is in thinking through what I might do differently and why. As just implied, without going into more detail here, I’d have gone rather more slowly at quite a few points (OK, you might think too slowly — these things are of course a judgment call). But perhaps as importantly, I’d have wanted to add some more explanatory/motivational chat.

For example, what’s so great about minimal logic? Why talk about it at all? We are told that minimal logic “has a slightly better computational interpretation” than intuitionistic logic. But so what? On the face of it, it doesn’t treat negation well, its Kripke semantics doesn’t take the absurdity constant seriously, and lacking disjunctive syllogism it isn’t a sane candidate for regimenting mathematical reasoning (platonistic or constructive). And if your beef with intuitionist logic is a relevantist worry about its explosive character, then minimal logic is hardly any better (since from a contradiction, we can derive any and every negated wff). So why seriously bother with it?
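That parenthetical claim about contradictions can be checked in a few lines. Here is a sketch, assuming only that $\neg A$ is treated as $A \to \bot$ and that we have the usual introduction and elimination rules for the conditional (so no appeal to ex falso); the letters $A$ and $B$ are arbitrary placeholders:

```latex
\begin{align*}
1.\;& A          && \text{premise}\\
2.\;& A \to \bot && \text{premise, i.e. } \neg A\\
3.\;& \bot       && \text{from 1, 2 by } {\to}\text{E}\\
4.\;& B \to \bot && \text{from 3 by } {\to}\text{I, vacuously discharging } B
\end{align*}
```

Line 4 is just $\neg B$, for any $B$ whatsoever. So although minimal logic doesn't let us get from a contradiction to an arbitrary wff, it does get us to the negation of any wff we like.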

Another example. We are introduced to the language of propositional logic (with a fixed infinite supply of propositional letters). Glancing ahead, in Chapter 4 we meet a multiplicity of first-order languages (with their various proprietary and typically finite supplies of constants and/or relation symbols and/or function symbols). Why the asymmetry?

Connectedly but more basically, what actually are the propositional variables doing in a propositional language? Different elementary textbooks say different things, so A can’t assume that everyone is approaching the discussions here with the same background of understanding of what PL is all about. A cheerfully says that the propositional variables “stand for” propositions. Which isn’t very helpful given that neither “stand for” nor “proposition” is at all clear! Now, of course, you can get on with the technicalities of PL (and FOL) while blissfully ignoring the question of what exactly the point of it all is, what exactly the relation is between the technical games being played and the real modes of mathematical argumentation you are, let’s hope, intending to throw light on when doing mathematical logic. But I still think that an author owes us some story about their understanding of these rather contentious issues, an understanding which must shape their overall approach. A says something in his Preface about the use of formal systems to understand patterns of mathematical inference (though he then oddly says that “the role of a proof system” for PL “is to derive tautologies”, when you would expect something like “is to establish tautological entailments”); and perhaps more will come out as the book progresses about how he conceives things. But I’d have liked to have seen rather more upfront.

The post Avigad, MLC — 2: And what is PL all about? appeared first on Logic Matters.

November 25, 2022

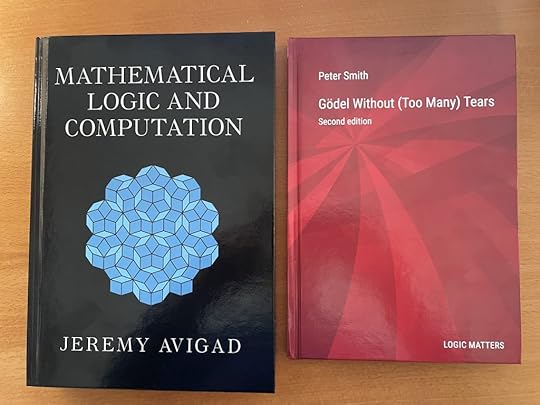

Things ain’t what they used to be, CUP edition

Two new books — the published-today hardback of Jeremy Avigad’s new book from CUP, and the just-arrived, published-next-week, hardback of my GWT. Both excellent books, it goes without saying! But they have more in common than that. These copies are both produced in just the same way, as far as I can see to exactly the same standard, by Lightning Source, one of the main printers of print-on-demand books for the UK. So both copies have the same paper quality (reasonable, though with more see-through than we’d ideally like) and the same sort of case binding (a flat spine, with the pages not gathered in signatures, but glued: how robust will this binding be in the long term?).

Now, I’m perfectly happy with the hardback of my book. It is serviceable and looks professional enough (maybe one day I’ll get round to redesigning the covers of the Big Red Logic Books; but I quite like their simplicity). I’m just noting that, although Avigad’s book will cost you £59.99 (if you don’t happen to be able to call on the very large discount for press authors), it is another print-on-demand book produced to no higher standard. Once upon a time CUP’s books were often rather beautifully printed and produced. Now, I’m afraid, not quite so much.

The post Things ain’t what they used to be, CUP edition appeared first on Logic Matters.

November 23, 2022

Lea Desandre & Iestyn Davies sing Handel

“To thee, thou glorious son of worth”, from the new CD of music by Handel with the quite stellar Lea Desandre, Iestyn Davies, and Thomas Dunford’s Jupiter ensemble. Just wonderful.

The post Lea Desandre & Iestyn Davies sing Handel appeared first on Logic Matters.

Avigad, MLC — 1: What are formulas?

I noted before that Jeremy Avigad’s new book Mathematical Logic and Computation has already been published by CUP on the Cambridge Core system, and the hardback is due any day now. The headline news is that this looks to me the most interesting and worthwhile advanced-student-orientated book that has been published recently.

I’m inspired, then, to blog about some of the discussions in the book that interest me for one reason or another, either because I might be inclined to do things differently, or because they are on topics that I’m not very familiar with, or (who knows?) maybe for some other reason. I’m not planning a judicious systematic review, then: these will be scattered comments shaped by the contingencies of my own interests!

Chapter 0 of MLC is on “Fundamentals”, aiming to “develop a foundation for reasoning about syntax”. So we get the usual kinds of definitions of inductively defined sets, structural recursion, definitions of trees, etc. and applications of the abstract machinery to defining the terms and formulas of FOL languages, proving unique parsing, etc.

This is done in a quite hard-core way (particularly on trees), and I think you’d ideally need to have already done a mid-level logic course to really get the point of various definitions and constructions. But A [Avigad, the Author, of course!] notes that he is here underwriting patterns of reasoning that are intuitively clear enough, so the reader can at this point skim, returning later to nail down various details on a need-to-know basis.

But there is one stand-out decision that is worth pausing over. Take two expressions such as $\forall x\,Fx$ and $\forall y\,Fy$. The choice of bound variable is of course arbitrary. It seems we have two choices here:

1. Work at the level of expressions, counting such pairs as distinct, while noting that expressions which differ only by the relabelling of bound variables ($\alpha$-equivalent, as they say) are always interderivable, are logically equivalent too.
2. Say that formulas proper are what we get by quotienting expressions by $\alpha$-equivalence, and lift our first-shot definitions of e.g. wellformedness for expressions of FOL to become definitions of wellformedness for the more abstract formulas proper of FOL.

Now, as A says, there is in the end not much difference between these two options; but he plumps for the second option, and for a reason. The thought is this. If we work at expression level, we will need a story about allowable substitutions of terms for variables that blocks unwanted variable-capture. And A suggests there are three ways of doing this, none of which is entirely free from trouble.

1a. Distinguish free from bound occurrences of variables, define what it is for a term to be free for a variable, and only allow a term to be substituted when it is free to be substituted. Trouble: “involves inserting qualifications everywhere and checking that they are maintained.”

1b. Modify the definition of substitution so that bound variables first get renamed as needed, so that the result of substituting $y + 1$ for $x$ in $\exists y\,(y > x)$ is something like $\exists z\,(z > y + 1)$. Trouble: “Even though we can fix a recipe for executing the renaming, the choice is somewhat arbitrary. Moreover, because of the renamings, statements we make about substitutions will generally hold only up to $\alpha$-equivalence, cluttering up our statements.”

1c. Maintain separate stocks of free and bound variables, so that the problem never arises. Trouble: “Requires us to rename a variable whenever we wish to apply a binder.”

I’m not quite sure how we weigh the complications of the first two options against the complications involved in going abstract and defining formulas proper by quotienting expressions by $\alpha$-equivalence. But be that as it may. The supposed trouble counting against the third option is, by my lights, no trouble at all. In fact A is arguably quite misdescribing what is going on in that case.
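To make the second of those three ways concrete, here is a minimal sketch of substitution that renames bound variables when capture threatens. Everything in it is an assumption made for illustration: the tuple encoding of terms and formulas, the helper names (`term_vars`, `free_vars`, `fresh`, `subst`), the recipe of picking `z0`, `z1`, ... as fresh variables, and the example formula. It is not A's definition, just one way the idea could go.

```python
from itertools import count

# Hypothetical encoding, for illustration only:
#   term    := "x" (a variable)  |  (op, term, ...)   e.g. ("+", "y", ("1",))
#   formula := ("atom", pred, term, ...)
#            | ("forall", var, formula) | ("exists", var, formula)
#            | (binary_connective, formula, formula)


def term_vars(t):
    """Variables occurring in a term."""
    if isinstance(t, str):
        return {t}
    return set().union(*(term_vars(a) for a in t[1:]))


def free_vars(f):
    """Variables occurring free in a formula."""
    tag = f[0]
    if tag == "atom":
        return set().union(*(term_vars(t) for t in f[2:]))
    if tag in ("forall", "exists"):
        return free_vars(f[2]) - {f[1]}
    return free_vars(f[1]) | free_vars(f[2])


def subst_term(t, x, s):
    """Replace the variable x by the term s throughout the term t."""
    if isinstance(t, str):
        return s if t == x else t
    return (t[0],) + tuple(subst_term(a, x, s) for a in t[1:])


def fresh(avoid):
    """Pick the first of z0, z1, ... not in `avoid` (an arbitrary recipe)."""
    return next(v for v in (f"z{i}" for i in count()) if v not in avoid)


def subst(f, x, s):
    """Substitute s for free x in f, renaming bound variables to avoid capture."""
    tag = f[0]
    if tag == "atom":
        return f[:2] + tuple(subst_term(t, x, s) for t in f[2:])
    if tag in ("forall", "exists"):
        v, body = f[1], f[2]
        if v == x:  # x is bound here, so there is nothing free to replace
            return f
        if v in term_vars(s) and x in free_vars(body):
            # The quantifier would capture a variable of s: rename it first.
            new = fresh(term_vars(s) | free_vars(body) | {x})
            body, v = subst(body, v, new), new
        return (tag, v, subst(body, x, s))
    return (tag, subst(f[1], x, s), subst(f[2], x, s))


formula = ("exists", "y", ("atom", ">", "y", "x"))
print(subst(formula, "x", ("+", "y", ("1",))))
```

The arbitrariness complained of shows up in `fresh`: any unused variable would do, so two reasonable implementations can return different, though equivalent, results.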

Taking the Gentzen line, we distinguish constants with their fixed interpretations, parameters or temporary names whose interpretation can vary, and bound variables which are undetachable parts of a quantifier-former we might represent ‘$\forall x \ldots x \ldots x \ldots$’. And when we quantify $Fa$ to get $\forall x\,Fx$ we are not “renaming a variable” (a trivial syntactic change) but replacing the parameter which has one semantic role with an expression which is part of a composite expression with a quite different semantic role. There’s a good Fregean principle, use different bits of syntax to mark different semantic roles: and that’s what is happening here when we replace the ‘$a$’ by the ‘$x$’, and at the same time bind with the quantifier $\forall x$ (all in one go, so to speak).

So it seems to me that option 1c is markedly more attractive than A has it (it handles issues about substitution nicely, and meshes with the elegant story about semantics which has $\forall x\,Fx$ true on an interpretation when $Fa$ is true however we extend that interpretation to give a referent to the temporary name $a$). The simplicity of 1c compared with option 2 in fact has the deciding vote for me.

The post Avigad, MLC — 1: What are formulas? appeared first on Logic Matters.

November 15, 2022

Gödel Without (Too Many) Tears — 2nd edition published!

Good news! The second edition of GWT is available as a (free) PDF download. This new edition is revised throughout, and is (I think!) a significant improvement on the first edition which I put together quite quickly as occupational therapy during lockdown last year.

In fact, the PDF has been available for a week or so. But I held off making a splash about this until today, when the book also becomes available as a large-format 154pp. paperback from Amazon. You can get it at the extortionate price of £4.50 UK, $6.00 US — and it should be €5 or so on various EU Amazons very shortly, and similar prices elsewhere. Obviously the royalties are going to make my fortune. ISBN 1916906354.

The paperback is Amazon-only, as they offer by far the most convenient for me and the cheapest for you print-on-demand service. A more widely distributed hardback for libraries (and for the discerning reader who wants a classier copy) will be published on 1 December and can already be ordered at £15.00, $17.50. ISBN: 1916906346. Do please remember to request a copy for your university library: since GWT is published by Logic Matters and not by a university press, your librarian won’t get to hear of it through the usual marketing routes.

Right. And now to get back to other projects …

The post Gödel Without (Too Many) Tears — 2nd edition published! appeared first on Logic Matters.

November 14, 2022

Book note: Topology, A Categorical Approach

Having recently been critical of not a few books here(!), let me mention a rather good one for a change. I’ve had on my desk for a while a copy of Topology: A Categorical Approach by Tai-Danae Bradley, Tyler Bryson and John Terilla (MIT 2020). But I have only just got round to reading it, making a first pass through with considerable enjoyment and enlightenment.

The cover says that the book “reintroduces basic point-set topology from a more modern, categorical perspective”, and that frank “reintroduces” rather matters: a reader who hasn’t already encountered at least some elementary topology would have a pretty hard time seeing what is going on. But actually I’d say more. A reader who is innocent of entry-level category theory will surely have quite a hard time too. For example, in the chapter of ‘Preliminaries’ we get from the definition of a category on p. 3 to the Yoneda Lemma on p. 12! To be sure, the usual definitions we need are laid out clearly enough in between; but I do suspect that no one for whom all these ideas are genuinely new is going to get much real understanding from so rushed an introduction.

But now take a reader who already knows a bit of topology and who has read Awodey’s Category Theory (for example). Then they should find this book very illuminating, both deepening their understanding of topology and rounding out their perhaps rather abstract view of category theory by providing a generous helping of illustrations of categorial ideas doing real work (particularly in the last three chapters). Moreover, this is all attractively written, very nicely organized, and (not least!) pleasingly short at under 150 pages before the end matter.

In short, then: warmly recommended. And all credit too to the authors and to MIT Press for making the book available open-access. So I need say no more here: take a look for yourself!

The post Book note: Topology, A Categorical Approach appeared first on Logic Matters.

November 3, 2022

Back to business …

A break from logical matters, away for half-a-dozen busy days in Athens, followed by visiting family on Rhodes for a week. Both most enjoyable in very different ways. Then we needed a holiday to recover …

But I’m back down to business. The first item on the agenda has been to deal with some very useful last comments on the draft second edition of Gödel Without (Too Many) Tears, and to make a start on a final proof-reading for residual typos, bad hyphenations, and the like. I hope the paperback will be available in about three weeks. If you happen to be reading this while thinking of buying the first edition, then save up your pennies. The second edition is worth the short wait (and will be again as cheap as I can make it).

I’m still finding the occasional slightly clumsy or potentially unclear sentence in GWT. I can’t claim to be a stylish writer, but I can usually in the end hit a decently serviceable level of straightforward and lucid prose. But it does take a lot of work. Still, it surely is the very least any author of logic books or the like owes their reader. I certainly find it irksome — and more so with the passing of the years — when authors don’t seem to put in the same level of effort and serve up laborious and uninviting texts. As with, for example, Gila Sher’s recent contribution to the Cambridge Elements series, on Logical Consequence.

Mind you, the more technical bits have to fight against CUP’s quite shamefully bad typesetting. But waiving that point, I really have to doubt that any student who needs to have the Tarskian formal stuff about truth and consequence explained is going to smoothly get a good grasp from the presentation here. And I found the ensuing philosophical discussion quite unnecessarily hard going. And if I did, I’m sure that will apply to the intended student reader. So I’m pretty unimpressed, and suggest you can give this Element a miss unless you have a special reason for tackling it.

Another book which readers of this blog will probably want to give a miss to is Eugenia Cheng’s latest, The Joy of Abstraction: An Exploration of Math, Category Theory and Life (also CUP). This comes garlanded with a lot of praise. I suppose it might work for some readers.

But the stuff supposedly showing that abstract thought of a vaguely categorial kind is relevant to ‘Life’ is embarrassingly jejune. The general musings about mathematics will seem very thin gruel (and too often misleading to boot) to anyone who knows enough mathematics and a bit of philosophy of mathematics. Which leaves the second half of the book, where Cheng is on much safer home ground, “Doing Category Theory”.

So I tried to approach this part of the book with fresh eyes and without prejudice, shelving what has gone before. But, to my surprise, I found the level of exposition to be rather less good than I was expecting (knowing, e.g., Cheng’s Catsters videos). She is aiming to get some of the Big Ideas across in an amount of detail, and I was hoping for some illuminating “look at it like this” contributions, the sort of helpful classroom chat which tends to get edited out of the more conventional textbooks. But I’m not sure that what she does offer works particularly well. Try the chapter on products, for example, and ask: if you haven’t met the categorial treatment of products before, would this give you a good enough feel for what is going on and why it is so compellingly natural? Or later, try the chapter on the Yoneda Lemma and ask: would this give someone a good understanding of why it might be of significance? I’m frankly a bit dubious.

So that’s a couple of recent CUP books that I did acquire, electronically or physically, and am sadly not enthused by. But in their bookshop there is another new publication which looks wonderful and extremely covetable, a large format volume on The Villa Farnesina. On the one hand, acquiring this would of course be quite disgracefully self-indulgent. On the other hand …

The post Back to business … appeared first on Logic Matters.

October 21, 2022

Time to send them home …

I confess to having given little thought in the past to questions of just when objects of problematic provenance in our museums should be repatriated. But, better late than never, I realize I can’t conjure any cogent reason why the “Elgin Marbles”, the Parthenon Frieze and the rest, shouldn’t now be returned by the British Museum and displayed in the beautiful Acropolis Museum. That museum, as we found last week, is already worth a trip to Athens in itself, and the huge gallery waiting for the originals of the rest of the frieze is just stunning. Time the marbles went home.

The post Time to send them home … appeared first on Logic Matters.