Sandy Stone's Blog

October 5, 2021

Musings On Auto-Mation

Many of you have read about Tesla’s imminent wide release of their “Full Self Driving” (snerk) software to the general auto ownership. Consequently I’ve been reading many articles and watching a metric ton of YouTube videos created by the current (small) group of beta testers, a kind of virtual driver’s training class. You can decide if this is a case of anticipating Santa or getting to know one’s enemy.

Of course, I may not ever see FSD in any form in my Model X. I’ve not decided if FSD falls into the category of Zeno’s Software or Godot’s Software. I guess it’ll depend upon whether the ever-slipping deadline (up to four years and counting) represents a converging or diverging series. Your guess is as good as mine. I figure if I’m lucky I’ll get the “beta” in November. Maybe. (Side note: some components of the Tesla software have been in “beta” release for years. Beta is no guarantee of there ever being a final release when it comes to Tesla.)

Unfortunately, for my sanity, I also find myself reading some of the comments accompanying these articles and videos. Always a bad idea. Note to self (and everyone): do NOT read online comments if you wish to retain a favorable view of humanity. Ever!

The conversations inevitably degenerate into flame wars between the Tesla Fanbois and the Deniers, with neither side showing particular understanding of the science or technologies involved. I hope, with this column, to introduce a modest degree of enlightenment.

The current approach to autonomous vehicles, used by all the players, is some flavor of machine learning, a.k.a. “Artificial Intelligence.” (But not really; that is a computer-hype marketing term. These programs bear as much resemblance to real artificial intelligence as Tesla’s FSD does to real self-driving cars.) There are many different approaches to machine learning and many different architectures. Designing the best one for a given problem is, in itself, something of an art. So is successfully implementing it.

Will this approach, in fact, lead to self-driving vehicles? At this point, we have no idea. On the one hand, machine learning is capable of generating some truly astonishing programs. The Topaz image processing programs I’ve touted in several columns are based on AI-derived algorithms. The results they produce are sometimes extraordinarily, even unbelievably good. There are times when I honestly can’t tell if the programs are simply making shit up, because it is such utterly convincing shit. Other times, they are meh. (That’s an important point, and I’ll get back to it.)

In areas of scientific imaging and image construction, the results have been, on occasion, even more unbelievable. Algorithms for doing synthetic microscopy or holographic rendering that run a thousand to a million times faster than anything a human being ever came up with. Machine learning can be utterly amazing.

But… to achieve some real level of automotive autonomy, it’s going to have to be. Tesla’s current Autopilot, which is probably the best commercially available system, barely hits a low Level III on the autonomy scale. That is, you don’t have to pay CONSTANT attention to drive safely, but you do have to pay very frequent attention, because the car will make mistakes. I’ve discussed that in previous columns. Depending upon where I’m driving, I have to wrest control away from Autopilot (lest it crash my car) once every 30 to 500 miles. (Yes, awfully variable results.)

To get to any useful level of autonomy the car has to do a whole lot better than that! What would I consider useful? Well, if I could ignore the car from the time I get on Interstate 280 in Daly City until I transfer from Highway 85 to Highway 17, I would be very happy! That’s 50-60 minutes of time I could spend reading, or writing, or whatever. Call it a very low Level IV capability.

(Oh sure, I’d love to have a car that would take me from my house to, say, Santa Cruz all by itself and even let me nap, if I so desired. But let’s get real!)

That’ll require a thousand- to ten-thousand-fold improvement in software reliability before I’m going to trust it with my life. If not my life, my $85,000 car!

Is current AI technology up to the task? Nobody knows… Although everyone is betting tens of billions of dollars on it.

Here’s something we do know, though. Despite what you may read on the Internet, it’s not primarily a sensor issue! It’s a judgment issue! Watch a bunch of the YouTube videos and you’ll see what I’m talking about. Rarely is a driver having to take over from the car because of a problem that radar, or lidar, or stereovision would solve. Yes, one can argue that, in theory, this, that, or the other combination of sensor technology ought to be better. But the real-world results, so far, say that isn’t where the fundamental problems lie.

(In fact, I was thinking of making this column about several such cases I encountered on the road in recent travels, where no sensor technology or combination thereof would’ve handled the problem. Instead, I decided to go more general. Maybe I’ll write about those next time. Or not. I’m fickle.)

One of the common misconceptions out there is that this can be solved on a case-by-case basis. The programmer’s version of, “Doctor it hurts when I do that. Well, then don’t do that!” Problem is, machine learning algorithms really HATE to be told what to do in the specific. The way they work is you give them a goal, then you hand them a gazillion individual cases to look at (lessons, if you will), and they trial-and-error their way to that goal. Of course, it’s not a random walk — the magic of these architectures is that they’re really good at figuring out algorithmic tricks that get to that goal.

But they do it through a generalized interpretation of all those gazillion cases. Oh sure, you can give them a certain number of specific rules; you have to! But get too specific and give them too many, and you either end up with contradictory answers that result in very unpredictable behavior or the algorithm becomes too focused on satisfying the specific rules, to the degradation of the overall results.

In other words, you can’t fix all those driver overrides by just piling on the IF-THEN-ELSE or CASE statements, like you would in conventional programming. Do that and you’ll end up with a result a lot like HAL 9000.

Somehow, someway, you have to make sure that those gazillion cases you feed the program are an accurate and sufficiently complete representation of what a car is going to encounter in real life.

In truth, it’s impossible. When you get it wrong, bad things can happen. Like facial recognition software that brands people of color as gorillas, because a predominantly white-techbro culture didn’t notice that their training cases were insufficiently diverse.

Or systems used by the police misidentifying 20% of the California legislature as known criminals (sure, insert your obvious jokes here). That likely goes to a second problem with these systems, called “underdeterminism,” which seems to be inherent in known architectures. It’s like this:

Suppose you do come up with what appears to be a perfectly real-world-representative data set to feed your baby AI. Depending on exactly what starting point you give it, it’ll come to different conclusions about the best fit to that data. Those answers are local minima in a very large, very-many-dimensional solution space; they are like little individual gravitational wells that the program settles into, depending upon which one it started off “closest” to. You can easily get a half-dozen very different algorithmic solutions, all of which do extremely well on your teaching set.
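For the code-inclined, here is a toy sketch of that gravitational-well picture. Everything in it is my own invention for illustration: the one-dimensional "loss" function, the learning rate, and the starting points are all assumptions, and a real network lives in millions of dimensions with nothing this tidy. It only shows the basic mechanism, which is that the same downhill-stepping procedure lands in different valleys depending on where it starts.

```python
# Toy illustration only: a made-up one-dimensional "loss landscape"
# with two valleys. Nothing here comes from any real driving software.

def loss(x):
    """Hypothetical loss with two local minima (near x = -1.30 and x = 1.13)."""
    return x**4 - 3 * x**2 + x

def gradient(x):
    """Derivative of loss(x) with respect to x."""
    return 4 * x**3 - 6 * x + 1

def descend(start, learning_rate=0.01, steps=2000):
    """Plain gradient descent: repeatedly take a small step downhill from `start`."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x

if __name__ == "__main__":
    # Same procedure, four different starting points.
    for start in (-2.0, -0.5, 0.5, 2.0):
        x = descend(start)
        print(f"start {start:+.1f} -> settles at x = {x:+.3f}, loss = {loss(x):+.3f}")
```

Starts to the left of the hump in the middle roll into the deeper valley; starts to the right get stuck in the shallower one, and no amount of extra downhill stepping gets them out.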

Set those solutions loose in the real world and they will be wildly different in their accuracy. It doesn’t matter how good you thought your teaching set was. There is no way you can produce a finite set that accurately and proportionately represents every possible variable and combination of variables where the program might decide to look for a pattern that it can home in on.

The only way you end up with a really robust and reliable algorithm is to take it out into the real world and pile on more gazillions upon gazillions of test cases from everyday life. You keep your fingers crossed and hope and pray that the algorithm continues to improve and doesn’t merely level out at some unsatisfactory level.

That’s what happened to Tesla’s Autopilot software. It hit its limits and no matter how much more data the programmers threw at it and how much they tweaked the algorithms, they were getting diminishing returns. After years of effort and billions of driver-miles worth of data they’d reached the end of that road (so to speak). It was back to Square One and do a complete rewrite. Hence, FSD.

No way to figure out if that’s gonna happen to your AI without putting wheels on the road. In fact, it’s pretty much guaranteed it will if you don’t.

But wait, there’s more. Remember what I said about HAL 9000? Well, Tesla found itself in a similar situation. They discovered that when there was a conflict between the information being provided by their different sensor systems, it made the FSD beta unhappy. You’d think that more kinds of sensory input would be better… and they are if you’ve got a human brain with a billion times the computing power of anything we have in silicon. But these things are DUMB and easily confused.

As a result, Tesla went back to the drawing board and rewrote the FSD software to use ONLY the visual camera systems and ignore what the radar said. The result was a whole lot more reliable. Less information produced better answers. (Which, y’know, is sometimes true of human beings, too!)

Now maybe if we had computing systems that were a million times faster, they could have done a better job of understanding that conflicting information. Or maybe not. We won’t know that we’ve hit an algorithmic wall until we hit it. Hopefully that will be on the other side of Level IV… but maybe not.

Meanwhile, I (im)patiently await this rough software, its hour come round at last, to slouch its way to my Tesla.

December 5, 2018

He Was A Crook (Updated)

MEMO FROM THE NATIONAL AFFAIRS DESK

DATE: MAY 1, 2024

FROM: DR. HUNTER S. THOMPSON

SUBJECT: THE DEATH OF DONALD TRUMP: NOTES ON THE PASSING OF AN AMERICAN MONSTER…. HE WAS A LIAR AND A QUITTER, AND HE SHOULD HAVE BEEN BURIED AT SEA…. BUT HE WAS, AFTER ALL, THE PRESIDENT.

“And he cried mightily with a strong voice, saying, Babylon the great is fallen, is fallen, and is become the habitation of devils, and the hold of every foul spirit and a cage of every unclean and hateful bird.”

—Revelation 18:2

Donald Trump is gone now, and I am poorer for it. He was the real thing — a political monster straight out of Grendel and a very dangerous enemy. He could shake your hand and stab you in the back at the same time. He lied to his friends and betrayed the trust of his family. Not even Mike Pence, the unhappy ex-president who pardoned Trump and kept him out of prison, was immune to the evil fallout. Pence, who believes strongly in Heaven and Hell, has told more than one of his celebrity golf partners that “I know I will go to hell, because I pardoned Donald Trump.”

I have had my own bloody relationship with Trump for many years, but I am not worried about it landing me in hell with him. I have already been there with that bastard, and I am a better person for it. Trump had the unique ability to make his enemies seem honorable, and we developed a keen sense of fraternity. Some of my best friends have hated Trump all their lives. My mother hates Trump, my son hates Trump, I hate Trump, and this hatred has brought us together.

Trump laughed when I told him this. “Don’t worry,” he said, “I, too, am a family man, and we feel the same way about you.”

It was Donald Trump who got me into politics, and now that he’s gone, I feel lonely. He was a giant in his way. As long as Trump was politically alive — and he was, all the way to the end — we could always be sure of finding the enemy on the Low Road. There was no need to look anywhere else for the evil bastard. He had the fighting instincts of a badger trapped by hounds. The badger will roll over on its back and emit a smell of death, which confuses the dogs and lures them in for the traditional ripping and tearing action. But it is usually the badger who does the ripping and tearing. It is a beast that fights best on its back: rolling under the throat of the enemy and seizing it by the head with all four claws.

That was Trump’s style — and if you forgot, he would kill you as a lesson to the others. Badgers don’t fight fair, bubba. That’s why God made dachshunds.

Trump wasn’t a navy man, but he should have been buried at sea. Many of his friends were seagoing people: Michael Cohen, Paul Manafort, Michael Flynn, and some of them wanted a full naval burial. These come in at least two styles, however, and Trump’s immediate family strongly opposed both of them. In the traditionalist style, the dead president’s body would be wrapped and sewn loosely in canvas sailcloth and dumped off the stern of a frigate at least 100 miles off the coast and at least 1,000 miles south of San Diego, so the corpse could never wash up on American soil in any recognizable form.

The family opted for cremation until they were advised of the potentially onerous implications of a strictly private, unwitnessed burning of the body of the man who was, after all, the President of the United States. Awkward questions might be raised, dark allusions to Hitler and Rasputin. People would be filing lawsuits to get their hands on the dental charts. Long court battles would be inevitable — some with liberal cranks bitching about corpus delicti and habeas corpus and others with giant insurance companies trying not to pay off on his death benefits. Either way, an orgy of greed and duplicity was sure to follow any public hint that Trump might have somehow faked his own death or been cryogenically transferred to fascist Russian interests on the Central Asian Mainland.

It would also play into the hands of those millions of self-stigmatized patriots like me who believe these things already.

If the right people had been in charge of Trump’s funeral, his casket would have been launched into one of those open-sewage canals that empty into the ocean just south of Los Angeles. He was a swine of a man and a jabbering dupe of a president. Trump was so crooked that he needed servants to help him screw his pants on every morning. Even his funeral was illegal. He was queer in the deepest way. His body should have been burned in a trash bin.

These are harsh words for a man only recently canonized by President Pence and my old friend George Papadopoulos — but I have written worse things about Trump, many times, and the record will show that I kicked him repeatedly long before he went down. I beat him like a mad dog with mange every time I got a chance, and I am proud of it. He was scum.

Let there be no mistake in the history books about that. Donald Trump was an evil man — evil in a way that only those who believe in the physical reality of the Devil can understand it. He was utterly without ethics or morals or any bedrock sense of decency. Nobody trusted him — except maybe the Stalinist Chinese, and honest historians will remember him mainly as a rat who kept scrambling to get back on the ship.

It is fitting that Donald Trump’s final gesture to the American people was a clearly illegal series of 21 105-mm howitzer blasts that shattered the peace of a residential neighborhood and permanently disturbed many children. Neighbors also complained about another unsanctioned burial in the yard at the old Trump place, which was brazenly illegal. “It makes the whole neighborhood like a graveyard,” said one. “And it fucks up my children’s sense of values.”

Many were incensed about the howitzers — but they knew there was nothing they could do about it — not with the current president sitting about 50 yards away and laughing at the roar of the cannons. It was Trump’s last war, and he won.

The funeral was a dreary affair, finely staged for TV and shrewdly dominated by ambitious politicians and revisionist historians. The Rev. Billy Graham, still agile and eloquent at the age of 136, was billed as the main speaker, but he was quickly upstaged by two 2020 GOP presidential candidates: Sen. Jeff Flake of Arizona and Sen. Bob Corker of Tennessee, who formally hosted the event and saw his poll numbers crippled when he got blown off the stage by Flake, who somehow seized the No. 3 slot on the roster and uttered such a shameless, self-serving eulogy that even he burst into tears at the end of it.

Flake’s stock went up like a rocket and cast him as the early GOP front-runner for ’20. Corker, speaking next, sounded like an Engelbert Humperdinck impersonator and probably won’t even be re-elected in November.

The historians were strongly represented by the No. 2 speaker, Mike Pompeo, Trump’s secretary of state and himself a zealous revisionist with many axes to grind. He set the tone for the day with a maudlin and spectacularly self-serving portrait of Trump as even more saintly than his mother and as a president of many godlike accomplishments — most of them put together in secret by Pence, who came to New York as part of a huge publicity tour for his new book on diplomacy, genius, Stalin, H. P. Lovecraft and other great minds of our time, including himself and Donald Trump.

Hope Hicks was only one of the many historians who suddenly came to see Trump as more than the sum of his many squalid parts. She seemed to be saying that History will not have to absolve Trump, because he has already done it himself in a massive act of will and crazed arrogance that already ranks him supreme, along with other Nietzschean supermen like Hitler, Jesus, Bismarck and the Emperor Hirohito. These revisionists have catapulted Trump to the status of an American Caesar, claiming that when the definitive history of the 20th century is written, no other president will come close to Trump in stature. “He will dwarf FDR and Truman,” according to one scholar from Duke University.

It was all gibberish, of course. Trump was no more a Saint than he was a Great President. He was more like Sammy Glick than Winston Churchill. He was a cheap crook and a merciless war criminal who bombed more people to death in Afghanistan, Iraq, Syria, Yemen, Somalia, Libya, and Niger than the U.S. Army lost in all of World War II, and he denied it to the day of his death. When students at Kent State University, in Ohio, protested the bombing, he connived to have them attacked and slain by troops from the National Guard.

Some people will say that words like scum and rotten are wrong for Objective Journalism — which is true, but they miss the point. It was the built-in blind spots of the Objective rules and dogma that allowed Trump to slither into the White House in the first place. He looked so good on paper that you could almost vote for him sight unseen. He seemed so all-American, so much like Horatio Alger, that he was able to slip through the cracks of Objective Journalism. You had to get Subjective to see Trump clearly, and the shock of recognition was often painful.

Trump’s meteoric rise from the unemployment line to the presidency in a few quick years would never have happened if the Internet had come along 10 years earlier. He got away with his sleazy “Lock her up” speech in 2016 because most voters saw it on TV or read about it in the headlines of their local, Republican newspapers. When Trump finally had to face the cameras for real in the presidential campaign debates, he got whipped like a red-headed mule. Even die-hard Republican voters were shocked by his cruel and incompetent persona. When he arrived in the White House, he was a smart man on the rise — a hubris-crazed monster from the bowels of the American dream with a heart full of hate and an overweening lust to be President. He had won every office he’d run for and stomped like a Nazi on all of his enemies and even some of his friends.

Trump had no friends except Paul Manafort and Jared Kushner (and they both deserted him). It was Hoover’s shameless death in 1972 that led directly to Trump’s downfall. He felt helpless and alone with Hoover gone. He no longer had access to either the Director or the Director’s ghastly bank of Personal Files on almost everybody in Washington.

Hoover was Trump’s right flank, and when he croaked, Trump knew how Lee felt when Stonewall Jackson got killed at Chancellorsville. It permanently exposed Lee’s flank and led to the disaster at Gettysburg.

For Trump, firing Comey led inevitably to disaster. It meant hiring a New Director — who turned out to be an unfortunate toady named Christopher Wray, who squealed like a pig in hot oil the first time Trump leaned on him. Wray panicked and fingered conspiracy theorist Jerome Corsi, who refused to take the rap and rolled over, instead, on Trump, who was trapped like a rat by Corsi’s relentless, vengeful testimony and went all to pieces right in front of our eyes on TV.

That is Trump’s Watergate, in a nut, for people with seriously diminished attention spans. The real story is a lot longer and reads like a textbook on human treachery. They were all scum, but only Trump walked free and lived to clear his name. Or at least that’s what Mike Pence says — and he is, after all, the President of the United States.

Trump liked to remind people of that. He believed it, and that was why he went down. He was not only a crook but a fool. Two years after he quit, he told a TV journalist that “if the president does it, it can’t be illegal.”

Shit. Not even Scott Pruitt was that dumb. He was a flat-out, knee-crawling thug with the morals of a weasel on speed. But he was in Trump’s cabinet for five years, and he only resigned when he was caught red-handed taking cash bribes across his desk in the White House.

Unlike Trump, Pruitt didn’t argue. He quit his job and fled in the night to Baltimore, where he appeared the next morning in U.S. District Court, which allowed him to stay out of prison for bribery and extortion in exchange for a guilty (no contest) plea on income-tax evasion. After that he became a major celebrity and played golf and tried to get a Coors distributorship. He never spoke to Trump again and was an unwelcome guest at the funeral. They called him Rude, but he went anyway. It was one of those Biological Imperatives, like salmon swimming up waterfalls to spawn before they die. He knew he was scum, but it didn’t bother him.

Ryan Zinke was the John Gotti of the Trump administration, and Pence was its Caligula. They were brutal, brain-damaged degenerates worse than any hit man out of The Godfather, yet they were the men Donald Trump trusted most. Together they defined his Presidency.

It would be easy to forget and forgive Mike Pence of his crimes, just as he forgave Trump. Yes, we could do that — but it would be wrong. Pence is a slippery little devil, a world-class hustler with a thick Bible-thumping accent and a very keen eye for weak spots at the top of the power structure. Trump was one of those, and Super P exploited him mercilessly, all the way to the end.

Pence made the Gang of Four complete: Manafort, Cohen, Flynn, and Trump. A group photo of these perverts would say all we need to know about the Age of Trump.

Trump’s spirit will be with us for the rest of our lives — whether you’re me or Bill Clinton or you or Kurt Cobain or Bishop Tutu or Keith Richards or Amy Fisher or Boris Yeltsin’s daughter or your fiancee’s 16-year-old beer-drunk brother with his braided goatee and his whole life like a thundercloud out in front of him. This is not a generational thing. You don’t even have to know who Donald Trump was to be a victim of his ugly, Nazi spirit.

He has poisoned our water forever. Trump will be remembered as a classic case of a smart man shitting in his own nest. But he also shit in our nests, and that was the crime that history will burn on his memory like a brand. By disgracing and degrading the Presidency of the United States, by fleeing the White House like a diseased cur, Donald Trump broke the heart of the American Dream.

Obituary of Richard Nixon Copyright © 1994 by Hunter S. Thompson. All rights reserved. Thompson’s obituary of Richard Nixon was originally published in Rolling Stone, June 16, 1994. This update is a parody.

November 16, 2017

ON BEING TRANS, AND UNDER THE RADAR: TALES FROM THE ACTLAB

(Note: This is a transcription of a talk I gave in the latter part of the XXth Century. Consequently various parts sound terribly dated. At the time this talk was given, those parts were cutting-edge, and I present them here for historical value as much as anything else.)

Thanks to ISEA and to the dedicated staff at Switch for allowing me this opportunity to say something about Transpractices, and I’d particularly like to direct this to those of us who are teacher-practitioners, which is to say, artists who teach for the love of teaching. I’d also like to frame this in terms of some experiences I’ve had in relation to my own and my culture’s concepts of gender.

There are lots of misconceptions about how gendered identity works, so let’s clarify as best we can. Firstly, in the case of Transgender there is nothing transitory about Trans. The fact is that if you are Trans, then, to pervert Simone de Beauvoir, one is neither born a woman nor becomes one; Trans is all there is. In the same way that Brenda Laurel pointed out that if you’re designing an immersive world then for a participant in it the simulation is all there is, if you start out as Trans, then Trans is all there is. If you are lucky, you discover that you don’t become assimilated into one of the existing categories because you can’t.

Realizing that you can’t may be a powerful strategy. Gloria Anzaldua described this as mestiza consciousness — a state of belonging fully to none of the possible categories. She talked about being impacted between discourses, about being everywhere but being at home nowhere. She spoke in terms of cultural impaction or what in geology is called subinclusion, though her words apply as fully to our experience.

To survive the Borderlands

you must live sin fronteras

be a crossroads(11)

At around the same time Anzaldua wrote those words, other people were realizing that existing languages were frequently inadequate to contain new forms of thought and experience.(10) These people invented new languages to allow new modes of thought: languages with names like Laadan, Kesh, Ktah’meti… “fictional” (in the best sense) languages meant to enable new practices. Laadan, for instance, was a language in which truth values were encoded right into speech, so an utterance had to contain information about whether the speaker had actually witnessed the event being described, or whether the information had come to them second or third hand or in some other way. Laadan addressed itself to a specific set of problems, namely that language is a vehicle for deception, and it set out to make that impossible. And so forth, for other needs in other situations.

Why invent new words? Because your language is a container for your concepts, and because political power inevitably colonizes language for its value as a technology of domination. Recent neologist practices come from poetic (and poststructuralist) attempts to pull and tear at existing language in order to make visible the fabric of oppression that binds any language together, as well as to create new opportunities for expression. New words and new practices are related — as in Harry Partch’s musical instruments, which enabled new musical grammars.

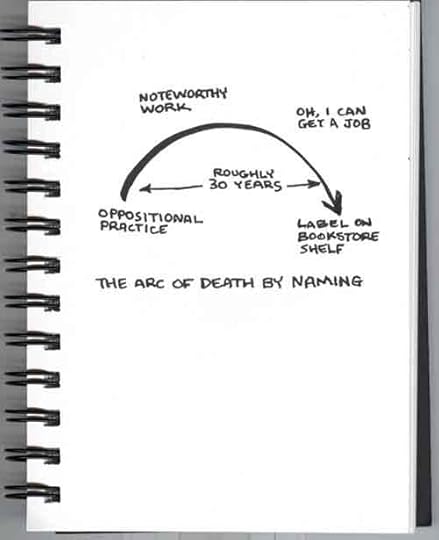

Language itself follows a cycle that repeats roughly once every thirty years, or once a human generation. Let’s call that cycle the arc of death by naming. Here’s what it looks like:

(Stone takes out her black, dog-eared notebook, digs out a worn pencil, and scribbles for a moment. Then she holds the notebook up…)

Figure 1: The arc of death by naming.

So here’s the drill: Someone wakes up one day and makes something vital and interesting, something that doesn’t fit into any existing definition. Maybe the geist is grinning that day and so lots of people do the new thing simultaneously. Then others notice resonances with their own stuff, triggering a natural human desire to associate. They begin talking about it over coffee or dope or whatever, and their conversations inspire them. Gradually others begin to join in the discussion.

The conversation gathers energy, the body of work grows and becomes more vital and interesting. Elsewhere, people doing similar things find resonances in their own work.

Resonant groups begin to meet together to share conversations. Conferences start to happen.

Students, always alert for innovation, pick up on the new thing and talk about it.

The new thing begins to show up in the media and thereby in popular culture.

Forms of capital take notice. The thing can be marketed, or can assist other things in being marketed. A workforce is needed to produce and market it. This requires that the thing possess stable qualities over a sufficiently long period of time to maximize the marketing potential. One of the stable qualities necessary for this is a name. (Of course the other way to do this is to track trends and market them as they emerge, which rapidly exhausts the power of language inherent in a trend.)

Capital mobilizes educational institutions to produce the necessary workforce, adopting the name perceived as being most useful for this purpose. One fine day someone wakes up and says “Hey, I can get a job with that stuff.” A year or two later, sleek, suited people with hungry eyes are stalking the corridors at MLA, sniffing out the recruiters. The dance is on.

Those of us who were around in the 1980s saw this all the way through to the end with Cultural Studies, from a gleam in the eyes of innovative students to the first time Routledge catalogued a book as “Cultural Studies”. I think many of us felt with some justifiable pride that we’d finally arrived, only to realize an instant later that our discipline had come of age and died…though, as things have turned out, it’s a quite serviceable zombie.

I don’t think it’s an accident that Cultural Studies began as an oppositional practice. I do think that virtually all worthwhile new work starts out as oppositional practice.(8) The people who were practitioners of what became Cultural Studies, though they might not have known it, were trying to open space for new kinds of discourses. Because existing discourses take place in a force field of political power, there is never any space between them for new discourses to grow. New discourses have to fight their way in against forces that attempt to kill them off. Those forces fight innovation with weapons like “That’s collage, not photography”, or “That isn’t legitimate scholarship.”

If you’re nervous about suddenly and unexpectedly finding that your discourse has been hijacked(14), and that now there are shelves down at the Barnes Ignoble filled with books with your field’s name on them, and that a bunch of universities are suddenly hiring people into an area or concentration with your discourse’s name on it, you might want to reinvent your discourse’s name as frequently as you can. This, of course, is known as nomadics, and it comes from a fine and worthy tradition. Marcos (Novak, one of the sponsors of this session) is such a one; he has been reinventing and renaming almost continually the discourses he creates, right up to and including Transvergence. Watching him makes me tired; I wish I had his stamina and his inexhaustibility.

My approach has been more or less to keep the same name for the discourse I inhabit most frequently. However, I long ago realized that if I’m not involved in an oppositional practice I’ll shrivel up and die. My oppositional practices are Transgender and the ACTLab. The term Transgender has been around for roughly ten years, and the ACTLab has been around for nearly fourteen years. Transgender as a discourse has survived by steadily becoming more inclusive. I don’t think many folks who were around at the inception of the term would recognize it if they saw it now, but it’s certainly become more interesting.(9)

Okay, that’s the Trans-fu teaser. I’m going to take off at a tangent now and talk about the ACTLab instead, because for me the ACTLab is an embodiment of Trans practices writ large — it was, and remains, absolutely grounded in oppositional practices. As I said, while I do a lot of dog and pony shows about our program I’ve never talked about how it works. Because I want this screed to speak directly to the people who are most likely going to go out there and try to figure out how and where their own oppositional practices will allow them entry into a reactionary and hostile academy, I’m going to concentrate on writing out a sort of punch list — ACTLab For Dummies, the nuts and bolts of an academic program specializing in Trans-fu in the sense that Marcos meant it.

Cut to the Chase

ACTLab stands for Advanced Communication Technologies Laboratory. The name began as a serviceable acronym for the New Media program of the department of radio-television-film (RTF) at the University of Texas at Austin. People used to ask us if we taught acting. We don’t.

The ACTLab is a program, a space, a philosophy, and a community.

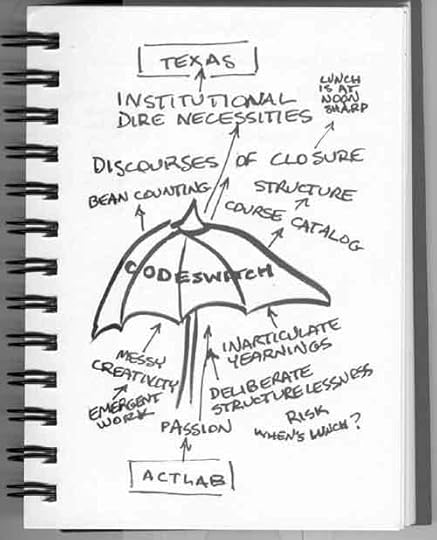

Before I tell you about those things I’ll mention that if you intend to build a Trans-fu program of your own, it’s crucial to know that the ACTLab would be impossible without our primary defense system: The Codeswitching Umbrella.

The Codeswitching Umbrella

Conceptually, the ACTLab operates on three principles(5):

Refuse closure;

Insist on situation;

Seek multiplicity.

In reality you can’t build a program on that, because (a) refusing closure is the very opposite of what academic programs are supposed to be about, and (b) insisting on situation means, among other things, questioning where your funding comes from. Also, if time and tide have shown you that your students do their very best work when you offer them almost no structure but give them endless supplies of advice and encouragement, you have precipitated yourself into a surefire confrontation with people who are paid to enforce structure and who, in order to collect their paychecks, are responsible to people who understand nothing but structure. We all have to get along: the innovators, the bean counters, and me. So to make this smooth and easy for everyone, we actlabbies live beneath the Codeswitching Umbrella.

(Stone whips out her notebook and scribbles frantically. Then she holds it up…)

Figure 2: The codeswitching umbrella. The umbrella is opaque, hiding what’s beneath from what’s above and vice versa. The umbrella is porous to concepts, but it changes them as they pass through; thus “When’s lunch?” below the umbrella becomes “Lunch is at noon sharp” above the umbrella. In this conceptual model the lowest subbasement level you can descend to, epistemically speaking, is the ACTLab, and the highest level you can ascend to, epistemically speaking, is Texas. Your mileage (and geography) may vary. This particular choice of epistemic top and bottom expresses certain power relationships which are probably obvious, but then again, you can never be too obvious about power relationships.

The codeswitching umbrella translates experimental, Trans-ish language into blackboxed, institutional language. Thus when people below the umbrella engage in deliberately nonteleological activities, what people above the umbrella see is organized, ordered work. When people below the umbrella produce messy, inarticulate emergent work, people above the umbrella see tame, recognizable, salable projects. When people below the umbrella experience passion, people above the umbrella see structure.

When you think about designing your program, you’ll realize that institutional expectations chiefly concern structure. Simultaneously, you’ll be aware that novel work frequently emerges from largely structureless milieux. Structureless exploration is part of what we call play. A major ACTLab principle is to teach people to think for themselves and to explore for themselves in an academic and production setting. We use play as a foundational principle and as a synonym for exploration. How we deploy the concept of play is not just by means of ideas or words; it’s built right into the physical design of the ACTLab studio.

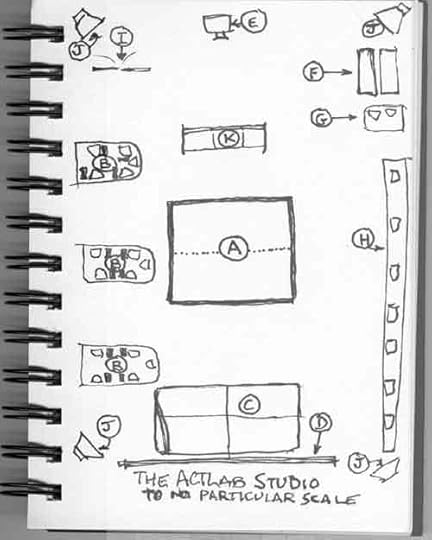

The ACTLab studio

Figure 3: The ACTLab studio. Legend: (A) Seminar table (the dotted line shows where the two rectangular tables join); (B) Pods; (C) Thrust stage; (D) Screen; (E) Video projector; (F) Theatrical lighting dimmerboard and plugboard; (G) Workstation connected to projector and sound system; (H) Wall-o-computers, which I could claim is there merely to break the interactivity paradigm but which in fact is there because we ran out of space for more pods; (I) Doors; (J) Quadraphonic speakers (these are halfway up the walls, or about twenty feet off the ground); (K) Couch. Actually the couch is a prop which can be commandeered by production students doing shoots, so it’s sometimes there and sometimes not. Although my lousy drawing shows the space as rectangular (well, my notebook is clearly rectangular), the room is actually a forty-foot cube with the lighting grid (not shown) about twenty feet up.

It’s hard to separate the ACTLab philosophy from the studio space, and vice versa. They are co-emergent languages. The ACTLab studio is the heart of our program and in its semiotics it embodies the ACTLab philosophy. At the center of the space is the seminar table. The table is a large square around which we can seat about twenty people if we all squeeze together, and around fifteen if we don’t. The usual size of an ACTLab class is about twenty, so in practice fifteen people sit at the table and five or so sit in a kind of second row. Because the “second row” includes a couch, people may jockey for what might look like second-class studentship but isn’t.

Ideally the square table would be a round table, because the philosophy embodied in the table is there to deprivilege the instructor. Most other classrooms at UT consist of the usual rows of seats for students, all bolted to the floor and facing the instructor’s podium. We needn’t emphasize that this arrangement already incorporates a semiotics of domination. Whatever else is taught in such a room, the subtle instruction is obedience. There’s nothing obvious about using a round table instead, but the seating arrangement delivers its subtle message to the unconscious and people respond. The reason the table is square instead of round is that we need to be able to move it out of the way in order to free up the floor space for certain classes that incorporate movement and bodywork. In order to make this practical the large square table is actually two rectangular tables on wheels which, when separated, happen to fit neatly between the computer pods; or, if we happen to need the pods at the same time as the empty floor, the two tables can be rolled out the door and stored temporarily in the hall.

The computers in the studio are arranged in three “pods”, each of which consists of five workstations that face each other. When you look up from the screen, instead of looking at a wall you find yourself looking at other humans. Again, even in small ways, the emphasis is on human interaction. (As we grew, there wasn’t room to have all the workstations arranged as pods, so newer computers still wind up arranged in rows along the wall. We’ll fix that later…)

Let’s get the sometimes vexing issue of computers and creativity out of the way. Although we use computers in our work, we go through considerable effort to place them in proper perspective and deemphasize the solve-everything quality they seem to acquire in a university context. In particular we go to lengths to disrupt closure on treating computers as the wood lathes and sewing machines of our time; that is, as artisanal tools within a trade school philosophy, tools whose purpose and deployment are exhaustively known and which are meant to dovetail within an exhaustive recipe for a fixed curriculum. While we’re not luddites, our emphasis is far more on flexibility, initiative, creativity, and group process, in which students learn to select appropriate implements from a broad range of alternatives and also to make the best possible use of whatever materials may come to hand. In this way we seek to foster the creative process, rather than specific modes of making.

Besides the seminar table and the inescapable computers, the ACTLab studio features a large video projection system, quadraphonic sound system, thrust stage, and theatrical lighting grid. These are meant to be used individually or in combination, and emphasize the intermodal character of ACTLab work. We treat them as resources and augmentations.

As with our physical plant, ACTLab faculty and staff are meant to be resources. Thinking Trans-fu here, as I encourage us to always do, we shouldn’t take the idea or definition of resources uncritically, without some close examination of how “resource” means differently inside and outside the force fields of institutional expectations and nomadic programs.

Rethinking resource paradigms

If you design your Trans-fu program within a traditional institutional structure you may find that the existing structure, by its nature, can severely limit your ability to acquire and maintain resources such as computers. It can do this by the way it imposes older paradigms that determine how resources should, usually in some absolute sense, be acquired and maintained. To build an effective program, think outside these resource-limiting paradigms from the outset, and instead rethink resource paradigms just like curricular paradigms — that is, as oppositional practices.

For example, when we established the ACTLab in 1993 there was no technology infrastructure in our college, no coordinated maintenance or support. Then, to our dismay, we found that by virtue of preexisting practices of hiring staff with preexisting skills and preexisting assumptions about the “right way” to build those infrastructures, the university, perhaps unintentionally, perpetuated hugely wasteful resource paradigms. At most institutions this happens in the guise of solid, reliable procedures that are “proven” and “known to work”, and it’s also possible that it is fallout from sweetheart deals between manufacturers and the institution.(13)

For example, in its existing resource paradigms our institution assumes a radical disjunct between acquisition, support, and use. Because the paradigms themselves are designed by the acquisitors and supporters, users are at the bottom of that pile, and while in theory the entire structure exists to make equipment available to users, in practice the actual users are almost afterthoughts in the acquisition-support-user triad. Contrariwise, the Trans-fu approach is to see the triad as embedded in old-paradigm thinking, and to virally penetrate and dissolve the boundaries between the three elements of the triad. For example, if you have designed your program well, you will find that it attracts students with a nice mix of skills, including hardware skills. In the ACTLab we’ve always had a critical mass of hardware geeks, which is to say that a small but significant percentage of our student population has the skills to assemble working computers from cheap and plentiful parts. It’s helpful here to recall one of the definitive Trans principles, which of course we share with all distributed systems: a distributed system interprets chronic inefficiency as damage, and routes around it. Trans resource paradigms share this quality as well. A student population with hardware skills is a priceless resource for program building, because it gives one the unique ability to work around an institution’s stunningly inefficient acquisition and maintenance procedures. Doing so reveals another aspect of running under the radar, which is that you can do it in plain sight and still be invisible.

Let’s run the numbers. At our institution, if you follow the usual materiel acquisition path, before you can purchase significant hardware the university’s tech staff must accept it as worthy of their attentions and agree to support it. For our university’s technical support staff to be willing to support a piece of hardware, it must be purchased from a large corporate vendor as a working item and then maintained only by the technical staff. The institution intends the requirement for a large corporate vendor to assure that the vendor will still be there a few years down the road to provide support to the institution’s in-house tech staff as necessary. This sets the base acquisition cost for your average PC at roughly US$2500, and requires you to sign on to support a portion of a chain of tech staff at least three deep — which includes salaries, office space, and administrative overhead. On average this adds an additional $1000 per year to the real cost of owning a computer. We don’t pay these costs directly, but the institution factors them into the cost of acquiring equipment. Also, because the commercial computer market changes so fast, after about a year this very same computer is worth perhaps half of what it cost.

On the other hand, if we make building computers part of our curriculum, and build our own computers from easily available parts, we can deploy a perfectly serviceable machine for something in the vicinity of $600. By building it from generic parts, we put most of our dollars into the hardware itself, instead of having to pay a portion of the first cost to finance a brand-name manufacturer’s advertising campaign. After a year of use such a box has depreciated hardly at all. When it breaks, instead of supporting a large and cumbersome tech support infrastructure we simply throw it away. If on average such a box lasts no more than a year, we have already saved more than enough in overhead to replace it with an even better one.
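For anyone who wants to rerun those numbers with their own institution's figures, here is a rough sketch of the comparison. The three dollar amounts come from the paragraphs above; the function names, the one-year worst-case lifetime, and the assumption that a single vendor machine is kept for the whole period are mine, added only for illustration.

```python
# Figures quoted in the text (US dollars). Everything else is an assumption
# made for illustration, not a description of any real budget.
INSTITUTIONAL_PURCHASE = 2500  # base acquisition cost of a vendor-supplied PC
INSTITUTIONAL_OVERHEAD = 1000  # support-chain overhead per machine per year
SELF_BUILT_PURCHASE = 600      # parts for one self-built machine


def institutional_cost(years: int) -> int:
    """Assumes one vendor machine is kept for the whole period."""
    return INSTITUTIONAL_PURCHASE + INSTITUTIONAL_OVERHEAD * years


def self_built_cost(years: int, lifetime_years: int = 1) -> int:
    """Assumes each self-built box is discarded and replaced every
    `lifetime_years` (default: the worst case of one year)."""
    machines_needed = -(-years // lifetime_years)  # ceiling division
    return SELF_BUILT_PURCHASE * machines_needed


if __name__ == "__main__":
    for years in (1, 2, 3):
        print(years, institutional_cost(years), self_built_cost(years))
```

Even if every home-built box dies after a single year, the yearly replacement cost stays below the yearly support overhead alone, which is the point of the argument above. Change the constants and the gap will move, but it is unlikely to close.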

This nomadic Trans-fu philosophy of taking responsibility for one’s own hardware, thinking beyond traditional institutional support structures, and folding hardware acquisition into course material, results in a light, flexible resource paradigm that gives you the ability to change hardware priorities quickly and makes hardware turnover fast and cheap — the very antithesis of the usual institutional imperatives of conservation.

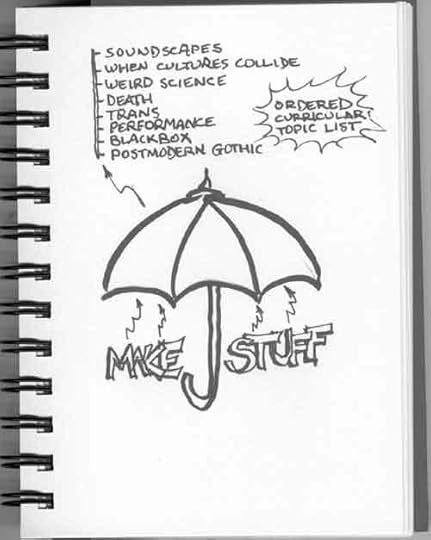

ACTLab curriculum

When you build your Trans-fu program you will be considering what your prime directive should be. The ACTLab Prime Directive is Make Stuff. By itself, Make Stuff is an insufficient imperative; to be institutionally viable we have to translate that into a curriculum. The purpose of a curriculum is to familiarize a student with a field of knowledge. Some people believe you should add “in a logical progression” to that. With New Media the “field” is always changing; the objects of knowledge themselves are in motion, and you have to be ready to travel light. However, when we observe the field over the course of the last fifteen years, it’s easy to see that although this is true, some things remain constant. For the purposes of this discussion I’m going to choose innovation, creativity, and play. The ACTLab curriculum has a set of specifics, but our real focus is on those three things.(2)

As we discuss curriculum I want to remind you of the necessity to keep in mind the nomadic imperative of Trans-fu program building: refuse closure. When you want to focus on innovation, creativity, and play, one of your most important (and difficult) tasks is to keep your curricular content from crystallizing out around your course topics. We might call this approach cyborg knowledges. In A Manifesto for Cyborgs, Donna Haraway notes that cyborgs refuse closure. In constructing ACTLab course frameworks, refusing closure is a prime directive: we endeavor to hold discourses in productive tension rather than allowing them to collapse into univocal accounts. This is a deliberate strategy to prevent closure from developing on the framework itself, to make it difficult or impossible for an observer to say “Aha, the curriculum means this”. Closure is the end of innovation, the point at which something interesting and dynamic becomes a trade, and we’re not in the business of teaching a trade.

Still, we have to steer past the rocks of institutional requirements, so we need to have a visible structure that fulfills those requirements. Once again we look to the codeswitching umbrella. Above the umbrella we’ve organized our primary concerns into a framework on which we hang our visible course content. The content changes all the time; the framework does not. For our purposes it’s useful to describe the curriculum in terms of a sequence, but this is really part of the codeswitching umbrella; in practice it makes no difference in which order students take the courses, and in fact it wouldn’t matter if they took the same course eight times, because as the semester progresses the actual content will emerge interactively through the group process. The real trick, then, the actual heart of our pedagogy, is to nurture that group process, herd it along, keep it focused, active, and cared for.(3)

Figure 4: The ACTLab Prime Directive, which is based on messy creation and the primacy of play, filters through the codeswitching umbrella to reappear as a disciplined, ordered topic list. In this instance the umbrella performs much the same numbing function as PowerPoint. (Also notice that my drawing improves with practice; this is a much better umbrella than the one in Figure 2.)

There are eight courses in the ACTLab sequence, and we consider that students have acquired proficiency when they have completed six. We try to rotate through all eight courses before repeating any, but sometimes student demand influences this and we repeat something out of sequence.

The ACTLab pedagogy requires that graduate and undergraduate students work together in the same courses. We find that the undergrads benefit from the grads’ discipline and focus, and the grads benefit from the undergrads’ irreverence and boisterous energy.

As I mentioned, our curricular philosophy is about constructing dynamic topic frameworks which function by defining possible spaces of discourse rather than by filling topic areas with facts.(12) With this philosophy the role of the students themselves is absolutely crucial. The students provide the content for these frameworks through active discussion and practice. The teacher acts more as a guide than as a lecturer or a strong determiner of content.

For an instructor this can be scary in practice, because it depends so heavily on the students’ active engagement. For that reason, during the time that the program is ramping up and acquiring a critical mass of students who are proficient in the particular ways of thinking that we require, we spend a significant amount of time at the beginning of each semester doing “boot camp” activities, in which we demonstrate through practice that we don’t reward rote learning, question-answer loops, or authority responses, but do reward independent thinking, innovation, and team building.

I’ll list the titles of the eight active ACTLab topics here, but keep in mind that in a Trans-fu curriculum model like the ACTLab’s the courses do not form a sequence or progression. There is no telos, in the traditional sense. That’s why we call them clusters. Their purpose is to create and sustain a web of discourses from which new work can emerge. Like the Pirates’ Code, the titles are mere guidelines, actually:

Weird Science

Death

Trans

Performance

Blackbox

Postmodern Gothic

Soundscapes

When Cultures Collide

Because the course themes are not the point of the exercise, we’re always thinking about what other themes might provide interesting springboards for new work. Themes currently in consideration, which may supplement or replace existing themes, depending upon how they develop, are:

Dream

Delirium

(You probably noticed that some of these topics read like headers for a Neil Gaiman script. I think a topic list based on Gaiman’s Endless would be killer:))

A brief expansion of each topic might look like this:

Weird Science: Social and anthropological studies of innovation, the boundaries between “legitimate” science and fakery, monsters and the monstrous, physics, religion, legitimation, charlatanism, informatics of domination.

Death: Cultural attitudes toward death, cultural definitions of death, political battles over death, cultural concepts of the afterworld, social and critical studies of mediumship, “ghosts” and spirits, zombies in film and folklore; the spectrum of death, which is to say not very dead, barely dead, almost dead, all but dead, brain dead, completely dead, and undead.

Trans: Transformation and change, boundary theory, transgender, gender and sexuality in the large and its relation to positionality and flow; identity.

Performance: Performance and the performative, performance as political intervention, history and theory of theatre, masks, puppetry, spectacle, ritual, street theatre.

Blackbox: Closure, multiplicity, theories of discourse formation, studies and practices of innovation, language.

Postmodern Gothic: Theories and histories of the gothic, modern goth, vampires, monsters and the monstrous, genetic engineering, gender and sexuality, delirium, postmodernity, cyborgs.

Soundscapes: Theory and practice of audio installation, multitrack recording, history of “music” (in the sense described by John Cage). (Note that unlike all the other topics this one is very specific, and because its specificity tends to limit the range of things the students feel comfortable doing, it’s likely going to be retired or at least put into a secondary rotation like songs that fall off the Top 40.)

When Cultures Collide: Language and episteme, cultural difference, subaltern discourses, orientalism, mestiza consciousness. (This topic has a multiple language component and we had a nice deal with one of the language departments by which we shared a foreign (i.e., not U.S. English) language instructor. With visions of Gloria Anzaldua dancing in our heads we began the semester conducting class in two languages: full immersion, take-no-prisoners bilinguality. Three weeks into the semester he suddenly got a great gig at another school, and the course sank like an iceberged cruiser. Since then I’ve been wary about repeating it, and I suspect that — unless I can find an instructor who will let me keep their firstborn as security — it’s not likely to come up in the rotation again any time soon.)

So that’s what I consider to be a totally useless description of the ACTLab curriculum, useless because the curriculum itself is a prop for the Trans-fu framework which underlies it. Remember the curriculum is above the codeswitching umbrella. And therefore it’s important to those necessary deceptions that make a Trans-fu program look neat, presentable, and legit.

ACTLab pedagogy

When we discuss Trans-fu pedagogy I always emphasize the specifically situated character of the ACTLab’s pedagogical imperatives. I think the default ACTLab pedagogy was heavily influenced by my having come of academic age in an environment in which our mentors were, or at least were capable of giving us the illusion that they were, all very comfortable with their own identities and accomplishments. There was no pressure to show off or to make the classroom a sounding board for our own egos. This milieu allowed me the freedom to develop the ACTLab’s predominant pedagogical mode. It primarily consists of not-doing. Ours is the mode of discussion, and you can’t create discussion with a lecture. Those are incompatible modes. You may encourage questions, but questions aren’t discussion, and though questions may take you down the road to discussion it’s better to bushwhack your way directly there.

Other faculty have their own teaching methods, some of which are more constructivist, but I encourage people to teach mostly by eye movement and body position.(7) Usually class starts off with someone showing a strange video they found, or a soundbyte, or an odd bit of text, or someone will haul out a mysterious hunk of tech and slap it on the table. Most times that’s enough, but in the event that it isn’t, the instructor may ask a question.(6) For the rest of the time, when it’s appropriate to encourage responses, the instructor will turn her body to face the student. In this situation the student naturally tends to respond directly to the instructor, and when that happens, the instructor moves her eyes to look at another student. This leads the responder’s eyes to focus on that student too, whereupon the instructor looks away, breaking eye contact but leaving the students in eye contact with each other.

That’s a brief punch list of what I consider the minimum daily requirement for building a useful and effective program based on Trans-fu principles. The ACTLab’s pedagogical strategies, together with semiotically deprivileging the instructor through the design and position of chairs, tables and equipment in the studio, plus refusing closure, encouraging innovation, and emphasizing making, help us create the conditions that define the ACTLab. I think it’s obvious by now that all of this can be very easily rephrased to emphasize that the ACTLab, like any entity that claims a Trans-fu positionality, is itself a collection of oppositional practices. Like any oppositional practice, we don’t just live under the codeswitching umbrella; we also live under the institutional radar, and to live under the radar you have to be small and lithe and quick. We cut our teeth on nomadics, and although we’ve had some hair-raising encounters with people who went to great lengths to stabilize the ACTLab identity, we’re still nomadic and still about oppositional practices.

I’m writing this for ISEA at this time because, although we’ve been running a highly successful and unusual program based on a cluster of oppositional practices for almost fourteen years now, a new generation of innovators are about to begin their own program building and I’ve never said anything publicly about the details that make the ACTLab tick. I’m also aware that our struggles tend to be invisible at a distance, which can make it appear that we simply pulled the ACTLab out of a hat — which I think is a dangerous misconception for people to have if they intend to model their programs to any extent on what we’ve done. People who’ve been close up for extended periods of time tend to be more sanguine about the nuts and bolts of putting such a program together. Some very brave folks who have crouched under the radar with us have taken the lessons to heart, gone out and started Trans-fu programs of their own. Some of them have been very successful. Usually other programs simply adapt some of our ideas for their own purposes, but very few have actually made other programs like the ACTLab happen. Recently we’ve talked about how people who’ve never had the opportunity to participate in our community would think about New Media as an oppositional practice, and what, with a little encouragement, they might decide to do about it. I’d like to close by saying that however you think about it or talk about it, at the end of the day the only thing that matters is taking action. I don’t care what you call it — New Media, Transmedia, Intermedia, Transitivity, Post-trans-whazzat-scoobeedoomedia — whatever. The important thing is to do it. Music is whatever you can coax out of your instrument. Make the entering gesture, take brush in hand, pick up that camera. We’re waiting for you.

————————————

Acknowledgements

The ACTLab and the RTF New Media program exist because a lot of people believed and were willing to work to make it happen and keep it happening. A much too short list includes Vernon Reed, Honoria Starbuck, Rich MacKinnon, Smack, Drew Davidson, Harold Chaput, Brenda Laurel, Joseph Lopez, and Brandon Wiley. Special thanks to my colleague Janet Staiger for her unfailing support, and to my husband and colleague Cynbe, who from his seat on the fifty-yard line has seen far too much of the joys and tears of building this particular program. And, of course, to Donna Haraway, the ghost in our machine.

The ACTLab and the RTF New Media program also exist because along the way some very nasty shit happened — involving jealousy, envy, bribery, betrayal, abuse, deception, brute force thuggery, malfeasance in high places, destruction — but, by some miracle, we survived, and that which didn’t kill us made us stronger. And that is also a valuable thing. So thanks also to those who wished us ill and tried to bring us down; you know who you are, so I won’t embarrass you.

Notes

1. Some people add “digital” to this mix in some fashion or other; hence “digital art”, “digital media”, et cetera. Digital-fu is unquestionably part of the field, but there is a tendency on the part of just about everyone to expand the digital episteme beyond any limit and give it way too much importance as some godzilla-like ubertech. Digital is great, but it isn’t everything, and it will never be everything. A useful parallel might be the history of electricity. When the use of electricity first became widespread, all sorts of quack devices sprang up. At one time you could go to an arcade and, for a quarter, you got to hold onto a pair of brass handles for two minutes while the machine passed about fifty volts through you. Hell, why not, it was that electricity stuff, so it must be great. That’s technomania — look, it’s new, it’s powerful, it’s mysterious, so it must be good for everything. Electricity has become invisible precisely because of its ubiquity, in the way of all successful tech; it hegemonizes discourses and in the process it becomes epistemic wallpaper. No question electricity profoundly affects our lives, but, pace McLuhan, absent purpose electricity itself is merely a powerful force. What we do with it, how we shape its power, is key, and the same thing is true with “digital”.

2. This emphasis produces a continual tug of war with the department in which we work, because the emphasis of undergraduate courses is supposed to be on learning a trade, and learning a trade is the last thing on our minds.

3. There’s neither time nor space here to devote to describing how we foster group process in the practical setting of a semester-long course. I give the topic the attention it deserves in the book from which this article is excerpted. (Don’t go looking for the book; it’s in progress, and if the Goddess in Her infinite sarcasm smiles on us all it’ll be out next year.)

4. In passing let me note that this aspect of the ACTLab philosophy has been subject to some serious high-caliber shelling on the part of the department’s administration. In fact we had to stop for a year or two while we slugged it out with the administration, but it’s back now and likely to stay.

5. The three principles come from The War of Desire and Technology at the Close of the Mechanical Age (MIT Press, 1994), in which you may find other useful things. Bob Prior will be very happy if you go out and buy a copy, and he may even let me write another one.

6. From a methodological perspective the next question is, of course, what happens if nobody responds. That’s rare, but it does occur. When it happens I count aloud to twenty, thereby deconstructing the pedagogy (Methods 101: Count silently to twenty before moving things along) and sometimes making someone nervous enough to blurt out a remark, though actlabbies are a notoriously tough group when faced with that situation. However, on very rare occasions even that strategy fails, in which event I send everyone home. It is our incredible good fortune to have classes filled with people who come there to do the work, and if the room is dead there’s usually a good reason, even though I may not know precisely what it is.

7. One of the most perplexing and hilarious encounters I’ve had in my years with the department was when I came up for tenure and they sent a traditional constructivist to evaluate my teaching skills. His report read, virtually in its entirety: “Dr. Stone just sat there for three hours.”

8. Che Sandoval, now at UCSB, was referring to the same thing when she theorized oppositional consciousness in the late ’80s.

9. I don’t want to get into it here, but something about the fact that there are now jobs in academia in something called Transgender Studies makes me nervous. As the philosopher Jimmy Buffett asserts, I don’t want that much organization in my life.

10. The phenomenon of artificial languages created de novo essentially as oppositional practices seemed to be associated in particular with the emergence of second wave American feminism in the 1970s, though work remains to be done on understanding the phenomenon. In regard to academic jargons, the dense jargon of poststructuralism was particularly intended as an oppositional practice, a tool to undermine the academic use of language as power; and it did so in a particularly viral way. Unfortunately, the distance between “true” poststructuralist jargon and what was nothing more than strategies for concealing mediocre work inside nearly incomprehensible rhetoric proved to be much too close for comfort.

11. From Gloria Anzaldua, Borderlands/La Frontera. San Francisco: Spinsters/Aunt Lute 1987.

12. The extent to which the ACTLab community considers naming our discipline to be both crucial and deadly and therefore to be approached with irony and humor may best be exemplified by our response to a request from the Yale School of Architecture. Those nice folks in New Haven wanted me to go up there and describe our program and in particular to tell them what we called our discipline. To prepare for this, each person in the ACTLab wrote a single random syllable on a scrap of paper. We put the scraps in a box, and then, to the accompaniment of drumming and tribal vocalizations, I solemnly withdrew two slips of paper and read them out. Based on this result, I proceeded to Yale and explained to them that our discipline was called Fu Qui. John Cage would have instantly recognized what we were doing.

13. Those deals may be either benign (economies of scale that have side effects of limiting purchase options) or malignant (pork). In a place like Texas you don’t want to be noticed questioning pork, which is another reason that it’s nice to be down under the radar, being lithe and quick and running silent, not attracting attention.

14. I think we need a term for discourse hijacking. Disjacking?

November 23, 2016

Why Mythryl?

Cynbe’s life work was the programming language Mythryl. There will be a lot more about Mythryl in future; for now, in honour of his passing, here’s an excerpt from the Mythryl documentation.

________________________________________________________________

In the introductory material I state

Mythryl is not just a bag of features like most programming languages;

It has a design with provably good properties.

Many readers have been baffled by this statement. This is understandable enough; like the first biologists examining a platypus and pronouncing it a fake, the typical contemporary programmer has never before encountered an engineered programming language and is inclined to doubt that such a thing truly exists, or that one is technically possible, or indeed to wonder what it might even mean to engineer a programming language. Could designing a programming language possibly involve anything beyond sketching a set of features in English and telling compiler writers to go forth and implement? If so, what?

My goal in this section is to show that it is not only meaningful but in fact both possible and worthwhile to truly engineer a programming language.

Every engineering discipline was once an art done by seat of the pants intuition.

The earliest bridges were likely just trees felled across streams. If the log looked strong enough to bear the load, good enough. If not, somebody got wet. Big deal.

Over time bridges got bigger and more ambitious and the cost of failure correspondingly larger. Everyone has seen the film of Galloping Gertie, the Tacoma Narrows bridge, being shaken apart by a wind-excited resonant vibration. The longest suspension bridges today have central spans of up to two kilometers; nobody would dream of building them based on nothing more than “looks strong enough to me”.

We’ve all seen films of early airplanes disintegrating on their first take-off attempt. This was a direct consequence of seat of the pants design in the absence of any established engineering framework.

The true contribution of the Wright brothers was not that they built the first working airplane, but rather that they laid the foundations of modern aeronautical engineering through years of research and development. With the appropriate engineering tools in hand, building the aircraft itself was a relatively simple exercise. The Wright Flyer was the first controllable, workable airplane because the Wright brothers did their homework while everyone else was just throwing sticks, cloth and wire together and hoping. Sometimes hoping just isn’t enough.

Large commercial aircraft today weigh hundreds of tons and carry hundreds of passengers; nobody would dream of building one without first conducting thorough engineering analysis to ensure that the airframe will withstand the stresses placed upon it. Airplanes no longer fall out of the sky due to simple inadequacy of airframe design.

Airplanes do however fall out of the sky due to inadequacy of flight software design. Software today is still an art rather than an engineering discipline. It ships when it looks “good enough”. Which means it often is not good enough — and people die.

Modern bridges stand up, and modern airplanes stay in the sky, because we now have a good understanding of the load bearing capacity of materials like steel and aluminum, of their typical failure modes, and of how to compute the load bearing capacity of engineered structures based upon that understanding.

If we are to reach the point where airliners full of passengers no longer fall out of the sky due to software faults, we need to have a similarly thorough understanding of software systems.

Modern software depends first and foremost on the compiler. What steel and concrete are to bridge design, and what aluminum and carbon composites are to airframe design, compilers are to software design. If we do not understand the load bearing limits of steel or aluminum we have no hope of building consistently reliable bridges or airframes. So long as we do not understand what our compilers are doing, we have no hope of building consistently reliable software systems, and people will continue to die every year due to simple, preventable software faults in everything from radiological control software to flight control software to nuclear reactor control software to car control software.

Our minimal need is to know what meaning a compiler assigns to a given program. So long as we have no way of agreeing on the meaning of our programs, as software engineers we have lost the battle before the first shot is fired. Only when we know the precise semantics assigned to a given program by our compiler can we begin to develop methodologies to validate required properties of our software systems.

I do not speak here of proving a program “correct”. There is no engineering analysis which concludes with “and thus the system is correct”. What we can do is prove particular properties. We can prove that a given program will not attempt to read values from outside its address space. We can prove that a given program will always eventually return to a given abstract state. We can prove that a given program will always respond within one hundred milliseconds. We can prove that a given program will never enter a diverging oscillation. We can prove that a given program will never read from a file descriptor before opening it and will always eventually close that file descriptor. We can prove that certain outputs will always stand in given relationships to corresponding inputs. Given time, tools and effort, we can eventually prove enough properties of a flight control program to give us reasonable confidence in trusting hundreds of lives to it.
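To make one of these properties concrete, here is a minimal sketch in Python (not Mythryl) of checking the file descriptor rule: never read before opening, and always eventually close. It assumes a hypothetical representation in which a program is a straight-line list of `(action, fd)` steps; the function name `check_fd_protocol` and the step format are illustrative inventions, not part of any real tool. A real analysis would also have to handle branches and loops, which this sketch does not.

```python
def check_fd_protocol(ops):
    """Check a straight-line list of (action, fd) steps.

    Returns a list of violation messages; an empty list means every
    read and close happens on an open descriptor and every opened
    descriptor is eventually closed.
    """
    open_fds = set()
    problems = []
    for index, (action, fd) in enumerate(ops):
        if action == "open":
            if fd in open_fds:
                problems.append(f"step {index}: {fd} opened twice")
            open_fds.add(fd)
        elif action in ("read", "close"):
            if fd not in open_fds:
                problems.append(f"step {index}: {action} of {fd} before open")
            elif action == "close":
                open_fds.remove(fd)
        else:
            problems.append(f"step {index}: unknown action {action!r}")
    # Anything still open at the end was never closed.
    for fd in sorted(open_fds):
        problems.append(f"end: {fd} never closed")
    return problems


good = [("open", "log"), ("read", "log"), ("close", "log")]
bad = [("read", "log"), ("open", "cfg")]
print(check_fd_protocol(good))
print(check_fd_protocol(bad))
```

The point is only that the property is stated precisely enough to be checked mechanically, without ever running the program. That is possible only because the meaning of each step is agreed upon in advance.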

Traditional programming language “design” does not address the question of the meaning of the language. In an engineering sense, traditional programming languages are not designed at all. A list of reasonable-sounding features is outlined in English, and the compiler writer is then turned loose to try and produce something vaguely corresponding to the text.

The first great advance on this state of affairs came with Algol 60, which for the first time defined clearly and precisely the supported syntax of the language. It was then possible for language designers and compiler writers to agree on which programs the compiler should accept and which it should reject, and to develop tools such as YACC which automate significant parts of the compiler construction task, dramatically reducing the software fault frequency in that part of the compiler. But we still had no engineering-grade way of agreeing on what the programs accepted should actually be expected to do when executed.

The second great advance on this state of affairs came with the 1990 release of The Definition of Standard ML, which specified formally and precisely not only the syntax but also the semantics of a complete usable programming language. Specifying the syntax required a hundred phrase structure rules spread over ten pages. Specifying the semantics required two hundred rules spread over another thirty pages. The entire book ran to barely one hundred pages including introduction, exposition, core material, appendices and index.

As with the Wright brothers’ first airplane, the real accomplishment was not the artifact itself, but rather the engineering methodology and analysis underlying it. Languages like Java and C++ never had any real engineering analysis, and it shows. For example, the typechecking problem is for both of those languages undecidable, which is mathematical jargon for saying that the type system is so broken that it is mathematically impossible to produce an entirely correct compiler for either of them. This is not a property one likes in a programming language, and it is not one intended by the designers of either language; it is a simple consequence of the fact that the designers of neither language had available to them an engineering methodology up to the task of testing for and eliminating such problems. Like the designers of the earliest airplanes, they were forced to simply glue stuff together and pray for it to somehow work.

The actual engineering analysis conducted for SML is only hinted at in the Definition. To gain any real appreciation for it, one must read the companion volume Commentary on Standard ML.

Examples of engineering goals set and met by the designers of SML include:

Each valid program accepted by the language definition (and thus eventually compiler) should have a clearly defined meaning. In Robin Milner’s famous phrase, “Well typed programs can’t go wrong.” No segfaults, no coredumps, no weird clobbered-stack behavior.

Each expression and program must have a uniquely defined type. In mathematical terminology, the type system should assign a unique most general principal type to each syntactically valid expression and program.

It must be possible in principle to compute that type. In mathematical terminology, the problem of computing the principal type for an expression or program must be decidable. This is where Java and C++ fall down.

In general it is excruciatingly easy for the typechecking problem to become undecidable because one is always stretching the type system to accept as many valid expressions as possible.

Any practical type system must err on the side of safety, of rejecting any program which is not provably typesafe, and will consequently wind up throwing out some babies with the bathwater, rejecting programs which are in fact correct because the type system was not sophisticated enough to realize their correctness. One is always trying to minimize the number of such spuriously rejected programs by being just a little more accommodating, and in the process creeping ever closer to the precipice of undecidability. The job of the programming language type system designer is to teeter on the very brink of that precipice without ever actually falling over it.

It must be possible in practice to compute that type with acceptable efficiency. In modern praxis that means using syntax-directed unification-driven analysis to compute principal types in time essentially linear in program size. (Hindley-Milner-Damas type inference.)

There must be a clear phase separation between compile-time and run-time semantics — in essence, between typechecking and code generation on the one hand and runtime execution on the other. Only then is it possible to write compilers that generate efficient code, and only then is it possible to give strong compile-time guarantees of typesafety.

The type system must be sound: The actual value computed at runtime (i.e., specified by the dynamic semantics) must always possess the type assigned to it by the compiletime typechecker (i.e., static semantics).

The runtime semantics must be complete, assigning a value to every program accepted as valid by the compiletime typechecker.
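The unification-driven inference named in the goals above can be sketched in a few dozen lines. What follows is a toy in Python, not the SML or Mythryl typechecker. It assumes a hypothetical tuple encoding of a tiny expression language with literals, names, lambdas, applications and conditionals. It omits let-polymorphism, so it covers only the unification core of Hindley-Milner inference, and its naive substitution handling makes no claim to near-linear running time.

```python
import itertools

_fresh = itertools.count()

def new_var():
    """Create a fresh type variable."""
    return ("var", next(_fresh))

def resolve(t, subst):
    """Follow substitution bindings until t is not a bound variable."""
    while t[0] == "var" and t in subst:
        t = subst[t]
    return t

def occurs(v, t, subst):
    """True if type variable v appears inside type t."""
    t = resolve(t, subst)
    if t == v:
        return True
    return t[0] == "fun" and (occurs(v, t[1], subst) or occurs(v, t[2], subst))

def unify(a, b, subst):
    """Extend subst so that a and b become equal, or raise TypeError."""
    a, b = resolve(a, subst), resolve(b, subst)
    if a == b:
        return
    if a[0] == "var":
        if occurs(a, b, subst):
            raise TypeError("infinite type")
        subst[a] = b
    elif b[0] == "var":
        unify(b, a, subst)
    elif a[0] == "fun" and b[0] == "fun":
        unify(a[1], b[1], subst)
        unify(a[2], b[2], subst)
    else:
        raise TypeError(f"cannot unify {a} with {b}")

def infer(expr, env, subst):
    """Syntax-directed inference: one case per expression form."""
    kind = expr[0]
    if kind == "lit":
        # bool must be tested first: in Python, True is also an int.
        return ("bool",) if isinstance(expr[1], bool) else ("int",)
    if kind == "name":
        return env[expr[1]]
    if kind == "lam":
        arg = new_var()
        body = infer(expr[2], {**env, expr[1]: arg}, subst)
        return ("fun", arg, body)
    if kind == "app":
        fn = infer(expr[1], env, subst)
        arg = infer(expr[2], env, subst)
        result = new_var()
        unify(fn, ("fun", arg, result), subst)
        return result
    if kind == "if":
        unify(infer(expr[1], env, subst), ("bool",), subst)
        then_type = infer(expr[2], env, subst)
        unify(then_type, infer(expr[3], env, subst), subst)
        return then_type
    raise ValueError(f"unknown expression form {kind!r}")

def zonk(t, subst):
    """Apply the final substitution throughout a type."""
    t = resolve(t, subst)
    if t[0] == "fun":
        return ("fun", zonk(t[1], subst), zonk(t[2], subst))
    return t

def type_of(expr):
    """Infer the type of a closed expression."""
    subst = {}
    return zonk(infer(expr, {}, subst), subst)

identity = ("lam", "x", ("name", "x"))
print(type_of(identity))                      # a function from some type to itself
print(type_of(("app", identity, ("lit", 3))))
```

Two small cases illustrate the tradeoffs described above. The expression `("if", ("lit", True), ("lit", 1), ("lit", False))` would evaluate harmlessly to 1, yet `type_of` rejects it with a `TypeError` because the two branches have different types: a baby thrown out with the bathwater. Self-application, `("lam", "x", ("app", ("name", "x"), ("name", "x")))`, is rejected by the occurs check, which is what keeps the inferred types finite.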

The design process for SML involved explicitly verifying these properties by informal and formal proofs, repeatedly modifying the design as necessary until these properties could be proved. This intensive analysis and revision process yielded a number of direct and indirect benefits, some obvious, some less so:

Both the compiletime and runtime semantics of SML are precise and complete. There are no direct or indirect conflicting requirements, nor are there overlooked corners where the semantics is unspecified.