Simon Ings's Blog, page 21

February 24, 2021

Just dump your filth on somebody else

Watching Space Sweepers, directed by Jo Sung-hee, for New Scientist, 24 February 2021

Tae-ho is a sweeper-up of other people’s orbital junk, a mudlark in space scavenging anything of value. In Jo Sung-hee’s new movie Space Sweepers, he is someone who is most alone in a crowd – that is to say, among his crewmates on the spaceship Victory. They are a predictable assortment: a feisty robot with detachable feet; a heavily armed yet disarmingly gamine captain; a gnarly but lovable engineer with a past.

Tae-ho is played by Song Joong-ki, who also starred in Jo’s romantic smash hit A Werewolf Boy (2012). Song is the latest in a long line of South Korean actors whose utter commitment and lack of ego can bring the sketchiest script to life (think Choi Min-sik in revenge tragedy Oldboy, or Gong Yoo in zombie masterpiece Train to Busan).

Tae-ho has a secret. As a child soldier, culling troublemakers in orbit, he once saved the life of a little girl, adopted her, was ostracised for it, hit the skids and lost his charge in a catastrophic orbital collision. Now he wants her back, at any cost.

The near-magical mega-corp UTS can resurrect her using her DNA signature. This is the same outfit that is making Mars ready for settlement, but only for an elite 5 per cent of Earth’s population. The rest are left to perish on the desertified planet. All that is needed to restore Tae-ho’s ward is more money than he will ever see in his life, no matter how much junk he and his mates clear.

Then, as they tear apart a crashed shuttle, the crew discovers 7-year-old Kang Kot-nim (Park Ye-rin), a girl with a secret. She may not even be a girl at all, but a robot; a robot who may not be a robot at all, but a bomb. Selling her to the highest bidder will get Tae-ho’s daughter back, but at what moral cost?

South Korea’s first space-set blockbuster is, in one aspect at least, a very traditional film. Like so much of South Korean cinema, it explores the ethical consequences of disparities of wealth – how easily poorer people can be corrupted, while the rich face no moral tests at all.

But what do all these high-minded, high-octane shenanigans have to do with space junk, like the 20,000 artificial objects with orbits around Earth that can be tracked? Or the 900,000 bits of junk between 1 and 10 centimetres long? Or the staggering 128 million pieces that are smaller still and yet could wreak all kinds of havoc, from scratching the lens of a space telescope to puncturing a space station’s solar array?

Nothing, and everything. Space Sweepers is a space opera, not Alfonso Cuarón’s Gravity. The director’s interest in the physics of low orbit begins and ends with the mechanics of rapidly rotating bodies. And boy, do they rotate. On a surprisingly small budget, the movie ravishes the eye and overwhelms the ear as Victory hurtles through a cluttered, industrialised void, all right angles and vanishing perspectives. You can’t help but think that while space may never look like this, it could easily feel like it: frenetic, crowded, unreasonable, ungiving, a meat grinder for the soul.

Similarly, while the very real problem of space junk won’t be solved by marginalised refugees in clapped-out spaceships, this film has hit on some truth. Cleanliness isn’t a virtue because it is too easy to fake: just dump your filth on somebody else. It is just wealth, admiring itself in the mirror. Real virtue, says this silly but very likeable film, comes with dirt on its hands.

February 3, 2021

Dispersing the crowds

In November 2020, the International Council of Museums estimated that 6.1 per cent of museums globally were resigned to permanent closure due to the pandemic. The figure was welcomed with enthusiasm: in May, it had reported nearly 13 per cent faced demise.

Something is changing for the better. This isn’t a story about how galleries and museums have used technology to save themselves during lockdowns (many didn’t try; many couldn’t afford to try; many tried and failed). But it is a story of how they weathered lockdowns and ongoing restrictions by using tech to future-proof themselves.

One key tool turned out to be virtual tours. Before 2020, they were under-resourced novelties; quickly, they became one of the few ways for galleries and museums to engage with the public. The best is arguably one through the Tomb of Pharaoh Ramses VI, by the Egyptian Tourism Authority and Cairo-based studio VRTEEK.

And while interfaces remain clunky, they improved throughout the year, as exhibition-goers can see in the 360-degree virtual tour created by the Museum of Fine Arts Ghent in Belgium to draw people through its otherwise-mothballed Van Eyck exhibition.

The past year has also forced the hands of curators, pushing them into uncharted territory where the distinctions between the real and the virtual become progressively more ambiguous.

With uncanny timing, the V&A in London had chosen Lewis Carroll’s Alice books for its 2020 summer show. Forced into the virtual realm by covid-19 restrictions, the V&A, working with HTC Vive Arts, created a VR game based in Wonderland, where people can follow their own White Rabbit, solve the caterpillar’s mind-bending riddles, visit the Queen of Hearts’ croquet garden and more. Curious Alice is available through Viveport; the real-world show is slated to open on 27 March.

Will museums grow their online experiences into commercial offerings? Almost all such tours are free at the moment, or are used to build community. If this format is really going to make an impact, it will probably have to develop a consolidated subscription service – a sort of arts Netflix or Spotify.

What the price point should be is anyone’s guess. It doesn’t help for institutions to muddy the waters by calling their video tours virtual tours.

But the advantages are obvious. The crowded conditions in galleries and museums have been miserable for years – witness the Mona Lisa, imprisoned behind bulletproof glass under low-level diffuse lighting and protected by barricades. Art isn’t “available” in any real sense when you can only spend 10 seconds with a piece. I can’t be alone in having staggered out of some exhibitions with no clear idea of what I had seen or why. Imagine if that was your first experience of fine art.

Why do we go to museums and galleries expecting to see originals? The Victorians didn’t. They knew the value of copies and reproductions. In the US in particular, museums lacked “real” antiquities, and plaster casts were highly valued. The casts aren’t indistinguishable from the original, but what if we produced copies that were exact in information as well as appearance? As British art critic Jonathan Jones says: “This is not a new age of fakery. It’s a new era of knowledge.”

With lidar, photogrammetry and new printing techniques, great statues, frescoes and chapels can be recreated anywhere. This promises to spread the crowds and give local museums and galleries a new lease of life. At last, they can become places where we think about art – not merely gawp at it.

January 27, 2021

A Faustian bargain, freely made

Reading The Rare Metals War by Guillaume Pitron for New Scientist, 27 January 2021

We reap seven times as much energy from the wind, and 44 times as much energy from the sun, as we did just a decade ago. Is this good news? Guillaume Pitron, a journalist and documentary-maker for French television, is not sure.

He’s neither a climate sceptic, nor a fan of inaction. But as the world begins to adopt a common target of net-zero carbon emissions by 2050, Pitron worries that we’re becoming selectively blind to the costs that effort will incur. His figures are stark. Changing our energy model means doubling rare metal production approximately every fifteen years, mostly to satisfy our demand for non-ferrous magnets and lithium-ion batteries. “At this rate,” says Pitron, “over the next thirty years we will need to mine more mineral ores than humans have extracted over the last 70,000 years.”

Before the Renaissance, humans had found a use for just seven metals. Over the course of the industrial revolution, this number increased to just a dozen. Today, we’ve found uses for all 86 of them, and some of them are very rare indeed. For instance, neodymium and gallium are found in iron ore, but there’s 1,200 times less neodymium and up to 2,650 times less gallium than there is iron.

Zipping from an abandoned Mountain Pass mine in the Mojave Desert to the toxic lakes and cancer villages of Baotou in China, Pitron weighs the terrible price paid for refining such materials, ably blending his investigative journalism with insights from science, politics and business.

There are two sides to Pitron’s story, woven seamlessly together. First there’s the economic story, of how the Chinese government elected to dominate the global energy and digital transition, so that it now controls 95 per cent of the rare metals market, manufacturing between 80 and 90 per cent of the batteries for electric vehicles, and over half the magnets used in wind turbines and electric motors.

Then there’s the ecological story in which, to ensure success, China took on the West’s own ecological burden. Now 10 per cent of its arable land is contaminated by heavy metals, 80 per cent of its ground water is unfit for consumption and 1.6 million people die every year due to air pollution alone (a recent paper in The Lancet reckons only 1.24 million people die each year – but let’s not quibble).

China’s was a Faustian bargain, freely entered into, but it would not have been possible had Europe and the rest of the Western world not outsourced their own industrial activities, creating a world divided, as Pitron memorably describes it, “between the dirty and those who pretend to be clean”.

The West’s economic comeuppance is now at hand, as its manufacturers, starved of the rare metals they need, are coerced into taking their technologies to China. And we in the West really should have seen this coming: how our reliance on Chinese raw materials would quickly morph into a reliance on China for the very technologies of the energy and digital transition. (Pitron tells us that without magnets produced by China’s ChengDu Magnetic Material Science & Technology Company, the United States’ F-35 fifth-generation stealth fighter cannot fly.)

By 2040, in our pursuit of ever-greater connectivity and a cleaner atmosphere, we will need to mine three times more rare earths, five times more tellurium, twelve times more cobalt, and sixteen times more lithium than we do today. China’s ecological ruination and its global technological dominance advance in lockstep, unstoppably — unless we start mining for rare metals ourselves — in the United States, Brazil, Russia, South Africa, Thailand, Turkey, and in the “dormant mining giant” of Pitron’s native France.

Better, says Pitron, that we attain some small shred of supply security, and start mining our own land. At least if mining takes place in the backyards of vocal First World consumers, they can agitate for (and pay for) cleaner processes. And nothing will change “so long as we do not experience, in our own backyards, the full cost of attaining our standard of happiness.”

Seventy minutes of concrete

Watching Last and First Men (2020) directed by Jóhann Jóhannsson for New Scientist

“It’s a big ask for people to sit for 70 minutes and look at concrete,” mused the Icelandic composer Jóhann Jóhannsson, about his first and only feature-length film. He was still working on Last and First Men at the time of his death, aged 48, in February 2018.

Admired in the concert hall for his subtle, keening orchestral pieces, Jóhann Jóhannsson was well known for his film work: Prisoners (2013) and Sicario (2015) are made strange by his sometimes terrifying, thumping soundtracks. Arrival (2016) — about the visitation of aliens whose experience of time proves radically different to our own — inspired a yearning, melancholy score that is, in retrospect, a kind of blockbuster-friendly version of Last and First Men. (It’s worth noting that all three films were directed by Denis Villeneuve, himself no stranger to the aesthetics of concrete — witness 2017’s Blade Runner 2049.)

Jóhannsson’s Last and First Men is, by contrast, contemplative and surreal. It’s no blockbuster. A series of zooms and tracking shots against eerie architectural forms, mesmerisingly shot in monochrome 16mm by Norwegian cinematographer Sturla Brandth Grøvlen, it draws its inspiration and its script (a haunting, melancholy, sometimes chilly off-screen monologue performed by Tilda Swinton) from the 1930 novel by British philosopher William Olaf Stapledon.

Stapledon’s day job — lecturing on politics and ethics at the University of Liverpool — seems now of little moment, but his science fiction novels have never been out of print, and continue to set a dauntingly high bar for successors. Last and First Men is a history of the solar system across two billion years, detailing the dreams and aspirations, achievements and failings of 17 different kinds of future Homo (not including sapiens).

In the light of our ageing sun, these creatures evolve, blossom, speciate, and die, and it’s in the final chapters, and the melancholy moment of humanity’s ultimate extinction, that Jóhannsson’s film is set. Last and First Men is not a drama. There are no actors. There is no action. Mind you, it’s hard to see how any attempt to film Stapledon’s future history could work otherwise. It’s not really a novel; more a haunting academic paper from the beyond.

The idea to use passages from the book came quite late in Jóhannsson’s project, which began life as a film essay on (and this is where the concrete comes in) the huge, brutalist war memorials, called Spomenik, erected in the former Republic of Yugoslavia between the 1960s and the 1980s.

“Spomeniks were commissioned by Marshal Tito, the dictator and creator of Yugoslavia,” Jóhannsson explained in 2017 when the film, accompanied by a live rendition of an early score, was screened at the Manchester International Festival. “Tito constructed this artificial state, a Utopian experiment uniting the Slavic nations, with so many differences of religion. The spomeniks were intended as symbols of unification. The architects couldn’t use religious iconography, so instead, they looked to prehistoric, Mayan and Sumerian art. That’s why they look so alien and otherworldly.”

Swinton’s cool, regretful monologue proves an ideal foil for the film’s architectural explorations, lifting what would otherwise be a stunning but slight art piece into dizzying, speculative territory: the last living human, contemplating the leavings of two billion years of human history.

The film was left unfinished at Jóhannsson’s death; it took his friend, the Berlin-based composer and sound artist Yair Elazar Glotman, about a year to realise Jóhannsson’s scattered and chaotic notes. No-one, hearing the story of how Last and First Men was put together, would imagine it would ever amount to anything more than a tribute piece to the composer.

Sometimes, though, the gods are kind. This is a hugely successful science fiction film, wholly deserving of a place beside Tarkovsky’s Solaris and Kubrick’s 2001. Who knew that staring at concrete, and listening to the end of humanity, could wet the watcher’s eye, and break their heart?

It is a terrible shame that Jóhannsson did not live to see his hope fulfilled; that, in his own words, “we’ve taken all these elements and made something beautiful and poignant. Something like a requiem.”

January 12, 2021

More than the naughty world deserves

In 2015 the US talk show host and comedian Stephen Colbert coined “truthiness”, one of history’s more chilling buzzwords. “We’re not talking about truth,” he declared, “we’re talking about something that seems like truth — the truth we want to exist.”

Colbert thought the poster-boy for our disinformation culture would be Wikipedia, the open-source internet encyclopedia started, more or less as an afterthought, by Jimmy Wales and Larry Sanger in 2001. If George Washington’s ownership of slaves troubles you, Colbert suggested “bringing democracy to knowledge” by editing his Wikipedia page.

Three years later the magazine Atlantic was calling Wikipedia “the last bastion of shared reality in Trump’s America”. Yes, its coverage is lumpy, idiosyncratic, often persnickety, and not terribly well written. But it’s accurate to a fault, extensive beyond all imagining, and energetically policed. (Wikipedia nixes toxic user content within minutes. Why can’t YouTube? Why can’t Twitter?)

Editors Joseph Reagle and Jackie Koerner — both energetic Wikipedians — know better than to go hunting for Wikipedia’s secret sauce. (A community adage goes that Wikipedia always works better in practice than in theory.) They neither praise nor blame Wikipedia for what it has become, but — and this comes across very strongly indeed — they love it with a passion. The essays they have selected for this volume (you can find the full roster of contributions on-line) reflect, always readably and almost always sympathetically, on the way this utopian project has bedded down in the flaws of the real world.

Wikipedia says it exists “to benefit readers by acting as an encyclopedia, a comprehensive written compendium that contains information on all branches of knowledge”. Improvements are possible. Wikipedia is shaped by the way its unvetted contributors write about what they know and delete what they do not. That women represent only about 12 per cent of the editing community is, then, not ideal.

Harder to correct is the wrinkle occasioned by language. Wikipedias written in different languages are independent of each other. There might not be anything actually wrong, but there’s certainly something screwy about the way India, Australia, the US and the UK and all the rest of the Anglophone world share a single English-language Wikipedia, while only the Finns get to enjoy the Finnish one. And it says something (obvious) about the unevenness of global development that Hindi speakers (the third largest language group in the world) read a Wikipedia that’s 53rd in a ranking of size.

To encyclopedify the world is an impossible goal. Surely the philosophes of eighteenth century France knew that much when they embarked on their Encyclopédie. Paul Otlet’s Universal Repertory and H. G. Wells’s World Brain were similarly Quixotic.

Attempting to define Wikipedia through its intellectual lineage may, however, be to miss the point. In his stand-out essay “Wikipedia As A Role-Playing Game” Dariusz Jemielniak (author of the first ethnography of Wikipedia, Common Knowledge?, in 2014) stresses the playfulness of the whole enterprise. Why else, he asks, would academics avoid it? “When you are a soldier, you do not necessarily spend your free time playing paintball with friends.”

Since its inception, pundits have assumed that it’s Wikipedia’s reliance on the great mass of unwashed humanity — sorry, I mean “user-generated content” — that will destroy it. Contributor Heather Ford, a South African open source activist, reckons it’s not its creators that will eventually ruin Wikipedia but its readers — specifically, data aggregation giants like Google, Amazon and Apple, who fillet Wikipedia content and disseminate it through search engines like Chrome and personal assistants like Alexa and Siri. They have turned Wikipedia into the internet’s go-to source of ground truth, inflating its importance to an unsustainable level.

Wikipedia’s entries are now like swords of Damocles, suspended on threads over the heads of every major commercial and political actor in the world. How long before the powerful find a way to silence this capering non-profit fool, telling motley truths to power? As Jemielniak puts it, “A serious game that results in creating the most popular reliable knowledge source in the world and disrupts existing knowledge hierarchies and authority, all in the time of massive anti-academic attacks – what is there not to hate?”

Though one’s dislike of Wikipedia needn’t spring from principles or ideas or even self-interest. Plain snobbery will do. Wikipedia has pricked the pretensions of the humanities like no other cultural project. Editor Joseph Reagle discovered as much ten years ago in email conversation with founder Jimmy Wales (a conversation that appears in Good Faith Collaboration, Reagle’s excellent, if by now slightly dated study of Wikipedia). “One of the things that I noticed,” Wales wrote, “is that in the humanities, a lot of people were collaborating in discussions, while in programming… people weren’t just talking about programming, they were working together to build things of value.”

This, I think, is what sticks in the craw of so many educated naysayers: that while academics were busy paying each other for the eccentricity of their beautiful opinions, nerds were out in the world winning the culture wars; that nerds stand ready on the virtual parapet to defend us from truthy, Trumpist oblivion; that nerds actually kept the promise held out by the internet, and turned it into the fifth biggest site on the Web.

Wikipedia’s guidelines to its editors include “Assume Good Faith” and “Please Do Not Bite the Newcomers.” This collection suggests to me that this is more than the naughty world deserves.

January 6, 2021

An inanimate object worshipped for its supposed magical powers

Watching iHuman, directed by Tonje Hessen Schei, for New Scientist, 6 January 2021

In 2010 she made Play Again, exploring digital media addiction among children. In 2014 she won awards for Drone, about the CIA’s secret role in drone warfare.

Now, with iHuman, Tonje Schei, a Norwegian documentary maker who has won numerous awards for her explorations of humans, machines and the environment, tackles — well, what, exactly? iHuman is a weird, portmanteau diatribe against computation — specifically, that branch of it that allows machines to learn about learning. Artificial general intelligence, in other words.

Incisive in parts, often overzealous, and wholly lacking in scepticism, iHuman is an apocalyptic vision of humanity already in thrall to the thinking machine, put together from intellectual celebrity soundbites, and illustrated with a lot of upside-down drone footage and digital mirror effects, so that the whole film resembles nothing so much as a particularly lengthy and drug-fuelled opening credits sequence to the crime drama Bosch.

That’s not to say that Schei is necessarily wrong, or that our Faustian tinkering hasn’t doomed us to a regimented future as a kind of especially sentient cattle. The film opens with that quotation from Stephen Hawking, about how “Success in creating AI might be the biggest success in human history. Unfortunately, it might also be the last.” If that statement seems rather heated to you, go visit Xinjiang, China, where a population of 13 million Turkic Muslims (Uyghurs and others) are living under AI surveillance and predictive policing.

Nor are the film’s speculations particularly wrong-headed. It’s hard, for example, to fault the line of reasoning that leads Robert Work, former US under-secretary of defense, to fear autonomous killing machines, since “an authoritarian regime will have less problem delegating authority to a machine to make lethal decisions.”

iHuman’s great strength is its commitment to the bleak idea that it only takes one bad actor to weaponise artificial general intelligence before everyone else has to follow suit in their own defence, killing, spying and brainwashing whole populations as they go.

The great weakness of iHuman lies in its attempt to throw everything into the argument: social media addiction, prejudice bubbles, election manipulation, deep fakes, automation of cognitive tasks, facial recognition, social credit scores, autonomous killing machines…

Of all the threats Schei identifies, the one conspicuously missing is hype. For instance, we still await convincing evidence that Cambridge Analytica’s social media snake oil can influence the outcome of elections. And researchers still cannot replicate psychologist Michal Kosinski’s claim that his algorithms can determine a person’s sexuality and even their political leanings from their physiology.

Much of the current furore around AI looks jolly small and silly once you remember that the major funding model for AI development is advertising. Most every millennial claim about how our feelings and opinions can be shaped by social media is a retread of claims made in the 1910s for the billboard and the radio. All new media are terrifyingly powerful. And all new media age very quickly indeed.

So there I was hiding behind the sofa and watching iHuman between slitted fingers (the score is terrifying, and artist Theodor Groeneboom’s animations of what the internet sees when it looks in the mirror are the stuff of nightmares) when it occurred to me to look up the word “fetish”. To refresh your memory, a fetish is an inanimate object worshipped for its supposed magical powers or because it is considered to be inhabited by a spirit.

iHuman is a profoundly fetishistic film, worshipping at the altar of a God it has itself manufactured, and never more unctuously than when it lingers on the athletic form of AI guru Jürgen Schmidhuber (never trust a man in white Levis) as he complacently imagines a post-human future. Nowhere is there mention of the work being done to normalise, domesticate, and defang our latest creations.

How can we possibly stand up to our new robot overlords?

Try politics, would be my humble suggestion.

January 2, 2021

An engine for understanding

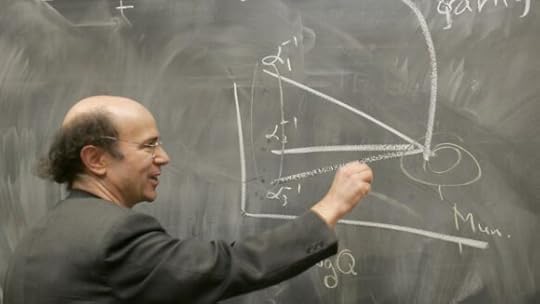

Reading Fundamentals by Frank Wilczek for the Times, 2 January 2021

It’s not given to many of us to work at the bleeding edge of theoretical physics, discovering for ourselves the way the world really works.

The nearest most of us will ever get is the pop-science shelf, and this has been dominated for quite a while now by the lyrical outpourings of Italian theoretical physicist Carlo Rovelli. Rovelli’s upcoming one, Helgoland, promises to have his reader tearing across a universe made, not of particles, but of the relations between them.

It’s all too late, however: Frank Wilczek’s Fundamentals has gazumped Rovelli handsomely, with a vision that replaces our classical idea of physical creation – “atoms and the void” – with one consisting entirely of spacetime, self-propagating fields and properties.

Born in 1951 and awarded the Nobel Prize in Physics in 2004 for figuring out why atoms don’t just fly apart, Wilczek is out to explain why “the history of Sweden is more complicated than the history of the universe”. The ingredients of the universe are surprisingly simple, but their fates, playing out through time in accordance with just a handful of rules, generate a world of unimaginable complexity, contingency and abundance. Measures of spin, charge and mass allow us to describe the whole of physical reality, but they won’t help us at all in depicting, say, the history of the royal house of Bernadotte.

Wilczek’s “ten keys to reality”, mentioned in his subtitle, aren’t to do with the 19 or so physical constants that exercised Martin Rees, the UK’s Astronomer Royal, in his 1990s pop-science heyday. The focus these days has shifted more to the spirit of things. When Wilczek describes the behaviour of electrons around an atom, for example, gone are the usual Bohr-ish mechanics, in which electrons leap from one nuclear orbit to another. Instead we get a vibrating cymbal, the music of the spheres, a poetic understanding of fields, and not a fragment of matter in sight.

So will you plump for the Wilczek, or will you wait for the Rovelli? A false choice, of course; this is not a race. Popular cosmology is more like the jazz scene: the facts (figures, constants, models) are the standards everyone riffs off. After one or two exposures you find yourself returning for the individual performances: their poetry, their unique expression.

Wilczek’s ten keys are more like ten book ideas, exploring the spatial and temporal abundance of the universe; how it all began; the stubborn linearity of time; how it all will end. What should we make of his decision to have us swallow the whole of creation in one go?

In one respect this book was inevitable. It’s what people of Wilczek’s peculiar genius and standing do. There’s even a sly name for the effort: the philosopause. The implication here being that Wilczek has outlived his most productive years and is now pursuing philosophical speculations.

Wilczek is not short of insights. His idea of what the scientific method consists of is refreshingly robust: a style of thinking that “combines the humble discipline of respecting the facts and learning from Nature with the systematic chutzpah of using what you think you’ve learned aggressively”. If you apply what you think you’ve discovered everywhere you can, even in situations that have nothing to do with your starting point, then, if it works, “you’ve discovered something useful; if it doesn’t, then you’ve learned something important.”

However, works of the philosopause are best judged on character. Richard Dawkins seems to have discovered, along with Johnny Rotten, that anger is an energy. Martin Rees has been possessed by the shade of that dutiful bureaucrat C P Snow. And in this case? Wilczek, so modest, so straight-dealing, so earnest in his desire to conciliate between science and the rest of culture, turns out to be a true visionary, writing — as his book gathers pace — a human testament to the moment when the discipline of physics, as we used to understand it, came to a stop.

Wilczek’s is the first generation whose intelligence — even at the far end of the bell-curve inhabited by genius — is insufficient to conceptualise its own scientific findings. Machines are even now taking over the work of hypothesis-making and interpretation. “The abilities of our machines to carry lengthy yet accurate calculations, to store massive amounts of information, and to learn by doing at an extremely fast pace,” Wilczek explains, “are already opening up qualitatively new paths toward understanding. They will move the frontier of knowledge in directions, and arrive at places, that unaided human brains can’t go.”

Or put it this way: physicists can pursue a Theory of Everything all they like. They’ll never find it, because if they did find it, they wouldn’t understand it.

Where does that leave physics? Where does that leave Wilczek? His response is gloriously matter-of-fact:

“… really, this should not come as fresh news. Humans themselves know many things that are not available to human consciousness, such as how to process visual information at incredible speeds, or how to make their bodies stay upright, walk and run.”

Right now physicists have come to the conclusion that the vast majority of mass in the universe reacts so weakly to the bits of creation we can see, we may never know its nature. Though Wilczek makes a brave stab at the problem of so-called “dark matter”, he is equally prepared to accept that a true explanation may prove incomprehensible.

Human intelligence turns out to be just one kind of engine for understanding. Wilczek would have us nurture it and savour it, and not just for what it can do, but because it is uniquely ours.

December 20, 2020

The seeds of indisposition

Reading Ageless by Andrew Steele for the Telegraph, 20 December 2020

The first successful blood transfusions were performed in 1650, by the English physician Richard Lower, on dogs. The idea, for some while, was not that transfusions would save lives, but that they might extend them.

Turns out they did. The Philosophical Transactions of the Royal Society mentions an experiment in which “an old mongrel curr, all over-run with the mainge” was transfused with about fifteen ounces of blood from a young spaniel and was “perfectly cured.”

Aleksandr Bogdanov, who once vied with Vladimir Lenin for control of the Bolsheviks (before retiring to write science fiction novels), brought blood transfusion to Russia, and hoped to rejuvenate various exhausted colleagues (including Stalin) by the method. On 24 March 1928 he mutually transfused blood with a 21-year-old student, suffered a massive transfusion reaction, and died, two weeks later, at the age of fifty-four.

Bogdanov’s theory was stronger than his practice. His essay on ageing speaks a lot of sense. “Partial methods against it are only palliative,” he wrote, “they merely address individual symptoms, but do not help fight the underlying illness itself.” For Bogdanov, ageing is an illness — unavoidable, universal, but no more “normal” or “natural” than any other illness. By that logic, ageing should be no less vulnerable to human ingenuity and science. It should, in theory, be curable.

Andrew Steele agrees. Steele is an Oxford physicist who switched to computational biology, drawn by the field of biogerontology — or the search for a cure for ageing. “Treating ageing itself rather than individual diseases would be transformative,” he writes, and the data he brings to this argument is quite shocking. It turns out that curing cancer would add less than three years to a person’s typical life expectancy, and curing heart disease, barely two, as there are plenty of other diseases waiting in the wings.

Is ageing, then, simply a statistical inevitability — a case of there always being something out there that’s going to get us?

Well, no. In 1825 Benjamin Gompertz, a British mathematician, explained that there are two distinct drivers of human mortality. There are extrinsic events, such as injuries or diseases. But there’s also an internal deterioration — what he called “the seeds of indisposition”.

It’s Steele’s job here to explain why we should treat those “seeds” as a disease, rather than a divinely determined limit. In the course of that explanation Steele gives us, in effect, a tour of the whole of human biology. It’s an exhilarating journey, but by no means always a pretty one: a tale of senescent cells, misfolded proteins, intracellular waste and reactive metals. Readers of advanced years, wondering why their skin is turning yellow, will learn much more here than they bargained for.

Ageing isn’t evolutionarily useful; but because it comes after our breeding period, evolution just hasn’t got the power to do anything about it. Mutations whose negative effects occur late in our lives accumulate in the gene pool. Worse, if they had a positive effect on our lives early on, then they will be actively selected for. Ageing, in other words, is something we inherit.

It’s all very well conceptualising old age as one disease. But if your disease amounts to “what happens to a human body when 525 million years of evolution stop working”, then you’re reduced to curing everything that can possibly go wrong, with every system, at once. Ageing, it turns out, is just thousands upon thousands of “individual symptoms”, arriving all at once.

Steele believes the more we know about human biology, the more likely it is we’ll find systemic ways to treat these multiple symptoms. The challenge is huge, but the advances, as Steele describes them, are real and rapid. If, for example, we can persuade senescent cells to die, then we can shed the toxic biochemical garbage they accumulate, and enjoy once more all the benefits of (among other things) young blood. This is no fond hope: human trials of senolytics started in 2018.

Steele is a superb guide to the wilder fringes of real medicine. He pretends to nothing else, and nothing more. So whether you find Ageless an incredibly focused account, or just an incredibly narrow one, will come down, in the end, to personal taste.

Steele shows us what happens to us biologically as we get older — which of course leaves a lot of blank canvas for the thoughtful reader to fill. Steele’s forebears in this (frankly, not too edifying) genre have all too often claimed that there are no other issues to tackle. In the 1930s the surgeon Alexis Carrel declared that “Scientific civilization has destroyed the world of the soul… Only the strength of youth gives the power to satisfy physiological appetites and to conquer the outer world”.

Charming.

And he wasn’t the only one. Books like Successful Aging (Rowe & Kahn, 1998) and How and Why We Age (Hayflick, 1996) aspire to a sort of overweening authority, not by answering hard questions about mortality, long life and ageing, but merely by denying a gerontological role for anyone outside their narrow specialism: philosophers, historians, theologians, ethicists, poets — all are shown the door.

Steele is much more sensible. He simply sticks to his subject. To the extent that he expresses a view, I am confident that he understands that ageing is an experience to be lived meaningfully and fully, as well as a fascinating medical problem to be solved.

Steele’s vision is very tightly controlled: he wants us to achieve “negligible senescence”, in which, as we grow older, we suffer no obvious impairments. What he’s after is a risk of death that stays constant no matter how old we get. This sounds fanciful, but it does happen in nature. Giant tortoises succumb to statistical inevitability, not decrepitude.

I have a fairly entrenched problem with books that treat ageing as a merely medical phenomenon. But I heartily recommend this one. It’s modest in scope, and generous in detail. It’s an honest and optimistic contribution to a field that tips very easily indeed into Tony Stark-style boosterism.

Life expectancy in the developed world has doubled from 40 in the 1800s to over 80 today. But it is in our nature to be always craving for more. One colourful outfit called Ambrosia is offering anyone over 35 the opportunity to receive a litre of youthful blood plasma for $8000. Steele has some fun with this: “At the time of writing,” he tells us, “a promotional offer also allows you to get two for $12000 — buy one, get one half-price.”

December 18, 2020

Soaked in ink and paint

Reading Dutch Light: Christiaan Huygens and the making of science in Europe by Hugh Aldersey-Williams for the Spectator, 19 December 2020

This book, soaked, like the Dutch Republic itself, “in ink and paint”, is enchanting to the point of escapism. The author calls it “an interior journey, into a world of luxury and leisure”. It is more than that. What he says of Huygens’s milieu is true also of his book: “Like a ‘Dutch interior’ painting, it turns out to contain everything.”

Hugh Aldersey-Williams says that Huygens was the first modern scientist. This is a delicate argument to make — the word “scientist” didn’t enter the English language before 1834. And he’s right to be sparing with such rhetoric, since a little of it goes a very long way. What inadvertent baggage comes attached, for instance, to the (not unreasonable) claim that the city of Middelburg, supported by the market for spectacles, became “a hotbed of optical innovation” at the end of the 16th century? As I read about the collaboration between Christiaan’s father Constantijn (“with his trim dark beard and sharp features”) and his lens-grinder Cornelis Drebbel (“strapping, ill-read… careless of social hierarchies”) I kept getting flashbacks to the Steve Jobs and Steve Wozniak double-act in Aaron Sorkin’s film.

This is the problem of popular history, made double by the demands of explaining the science. Secretly, readers want the past to be either deeply exotic (so they don’t have to worry about it) or fundamentally familiar (so they, um, don’t have to worry about it).

Hugh Aldersey-Williams steeps us in neither fantasy for too long, and Dutch Light is, as a consequence, an oddly disturbing read: we see our present understanding of the world, and many of our current intellectual habits, emerging through the accidents and contingencies of history, through networks and relationships, friendships and fallings-out. Huygens’s world *is* distinctly modern — disturbingly so: the engine itself, the pipework and pistons, without any of the fancy fairings and decals of liberalism.

Trade begets technology begets science. The truth is out there but it costs money. Genius can only swim so far up the stream of social prejudice. Who your parents are matters.

Under Dutch light — clean, caustic, Calvinistic — we see, not Enlightenment Europe emerging into the comforts of the modern, but a mirror in which we moderns are seen squatting a culture, full of flaws, that we’ve never managed to better.

One of the best things about Aldersey-Williams’s absorbing book (and how many 500-page biographies do you know that feel too short when you finish them?) is the interest he shows in everyone else. Christiaan arrives in the right place, at the right time, among the right people, to achieve wonders. His father, born 1596, was a diplomat, architect, poet (he translated John Donne) and artist (he discovered Rembrandt). His longevity exasperated him: “Cease murderous years, and think no more of me” he wrote, on his 82nd birthday. He lived eight years more. But the space and energy Aldersey-Williams devotes to Constantijn and his four other children — “a network that stretched across Europe” — is anything but exasperating. It immeasurably enriches our idea of what Christiaan’s work meant, and what his achievements signified.

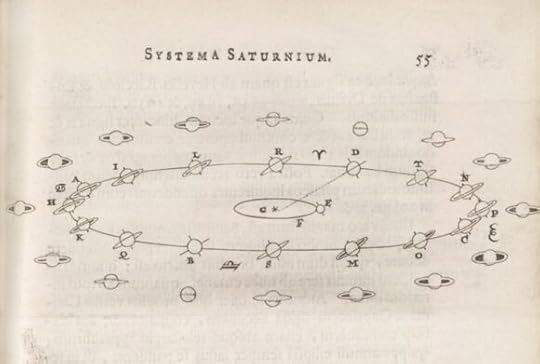

Huygens worked at the meeting point of maths and physics, at a time when some key physical aspects of reality still resisted mathematical description. Curves provide a couple of striking examples. The cycloid is the path made by a point on the circumference of a turning wheel. The catenary is the curve made by a chain or rope hanging under gravity. Huygens was the first to explain these curves mathematically, doing more than most to embed mathematics in the physical sciences. He tackled problems in geometry and probability, and had some fun in the process (“A man of 56 years marries a woman of 16 years, how long can they live together without one or the other dying?”) Using telescopes he designed and made himself, he discovered Saturn’s ring system and its largest moon, Titan. He was the first to describe the concept of centrifugal force. He invented the pendulum clock.

Most extraordinary of all, Huygens — though a committed follower of Descartes (who was once a family friend) — came up with a model of light as a wave, wholly consistent with everything then known about the nature of light apart from colour, and streets ahead of the “corpuscular” theory promulgated by Newton, which had light consisting of a stream of tiny particles.

Huygens’s radical conception of light seems even stranger, when you consider that, as much as his conscience would let him, Huygens stayed faithful to Descartes’ vision of physics as a science of bodies in collision. Newton’s work on gravity, relying as it did on an unseen force, felt like a retreat to Huygens — a step towards occultism.

Because we turn our great thinkers into fetishes, we allow only one per generation. Newton has shut out Huygens, as Galileo shut out Kepler. Huygens became an also-ran in Anglo-Saxon eyes; ridiculous busts of Newton, meanwhile, were knocked out to adorn the salons of Britain’s country estates, “available in marble, terracotta and plaster versions to suit all pockets.”

Aldersey-Williams insists that this competition between the elder Huygens and the enfant terrible Newton was never so cheap. Set aside their notorious dispute over calculus, and we find the two men in lively and, yes, friendly correspondence. Cooperation and collaboration were on the rise: “Gone,” Aldersey-Williams writes, “is the quickness to feel insulted and take umbrage that characterised so many exchanges — domestic as well as international — in the early days of the French and English academies of science.”

When Henry Oldenburg, the primum mobile of the Royal Society, died suddenly in 1677, a link was broken between scientists everywhere, and particularly between Britain and the continent. The 20th century did not forge a culture of international scientific cooperation. It repaired the one Oldenburg and Huygens had built over decades of eager correspondence and clever diplomacy.

November 18, 2020

Run for your life

Watching Gints Zilbalodis’s Away for New Scientist, 18 November 2020

A barren landscape at sun-up. From the cords of his deflated parachute, dangling from the twisted branch of a dead tree, a boy slowly wakes to his surroundings, just as a figure appears out of the dawn’s dreamy desert glare. Humanoid but not human, faceless yet somehow inexpressibly sad, the giant figure shambles towards the boy and bends and, though mouthless, tries somehow to swallow him.

The boy unclips himself from his harness, falls to the sandy ground, and begins to run. The strange, slow, gripping pursuit that follows will, in the space of an hour and a quarter, tell the story of how the boy comes to understand the value of life and friendship.

That the monster is Death is clear from the start: not a ravenous ogre, but unstoppable and steady. It swallows, without fuss or pain, the lives of any creature it touches. Perhaps the figure pursuing the boy is not a physical threat at all, but more the dawning of a terrible idea — that none of us lives forever. (In one extraordinary dream sequence, we see the boy’s fellow air passengers plummet from the sky, each one rendered as a little melancholy incarnation of the same creature.)

Away is the sole creation of 26-year-old Latvian film-maker Gints Zilbalodis, and it’s his first feature-length animation. Zilbalodis is Away’s director, writer, animator and editor, and even composed its deceptively simple synth score — a constant back-and-forth between dread and wonder.

There’s no shading in Zilbalodis’s CGI-powered animation, no outlining, and next to no texture, and the physics is rudimentary. When bodies enter water, there’s no splash: instead, deep ripples shimmer across the screen. A geyser erupts, and water rises and falls against itself in a churn of massy, architectonic white blocks. What drives this strange retro, gamelike animation style?

Away feels nostalgic at first, perhaps harking back to the early days of videogames, when processing speeds were tiny, and a limited palette and simplified physics helped players explore game worlds in real time. Indeed the whole film is structured like a game, with distinct chapters and a plot arranged around simple physical and logical puzzles. The boy finds a haversack, a map, a water canteen, a key and a motorbike. He finds a companion — a young bird. His companion learns to fly, and departs, and returns. The boy runs out of water, and finds it. He meets turtles, birds, and cats. He wins a major victory over his terrifying pursuer, only to discover that the victory is temporary. By the end of the film, it’s the realistic movies that seem odd, the big budget animations, the meticulously composited Nolanesque behemoths. Even dialogue feels clumsy and lumpen, after 75 minutes of Away’s impeccable, wordless storytelling.

Away reminds us that when everything in the frame and on the soundtrack serves the story, then the elements themselves don’t have to be remarkable. They can be simple and straightforward: fields of a single colour, a single apposite sound-effect, the tilt of a simply drawn head.

As CGI technology penetrates the prosumer market, and super-tool packages like Maya become affordable, or at any rate accessible through institutions, then more artists and filmmakers are likely to take up the challenge laid down by Away, creating, all by themselves, their own feature-length productions.

Experiments of this sort — ones that change the logistics and economies of film production — are often ugly. The first films were virtually unfollowable. The first sound films were dull and stagey. CGI effects were so hammy at first, they kicked viewers out of the movie-going experience entirely. It took years for Pixar’s animations to acquire their trademark charm.

Away is different. In an industry that makes films whose animation credits feature casts of thousands, Zilbalodis’s exquisite movie sets a very high bar indeed for a new kind of artisanal filmmaking.
