Daniel Miessler's Blog, page 11

May 14, 2024

UL NO. 432: Can You Summarize Your Work in a Sentence?

👉 Continue reading online to avoid the email cutoff issue 👈

Unsupervised Learning is a Security/AI newsletter about how to transition from Human 2.0 to Human 3.0 in order to thrive in the post-AI world.

NOTES

Hey there,

Lots of stuff!

New Fabric Pattern: get_wow_per_minute

This brand-new Fabric Pattern allows you to figure out the value density of any piece of content, rated from 0 to 10.

OpenAI’s Event

OpenAI released their new model, GPT-4o (the “o” stands for omni).

The big news is that it’s just about as smart as 4, but it’s 4x faster and 2x cheaper.

It’s also capable of having real-time conversations in a very realistic way, allowing you to use it for real-time translation and other stuff.

I did a predictions post that anticipated more agent stuff besides just the DA component, but alas we’ll have to wait for that…

It’ll soon have vision as well, so you’ll be able to have it watching your screen, and you can just ask it questions, and it can help you.

They released a desktop app as well, which is where that functionality will eventually live. Only a few people have it so far, and I’m evidently one of the lucky ones, but it doesn’t have the screen-monitoring piece yet.

💡They’re basically working to create the Digital Assistants I talked about in my Predictable Path video, which is the most obvious but awesome move ever. Tons of people are doing it, but it’s great to see OpenAI jump ahead in this space.

RSA

So, RSA was really good. Like really, really good. Caught up with so many friends and had so many nourishing conversations. Lots of them were about trying to convince friends to get out of their jobs where they’re unhappy and get into something in AI.

Did a couple of talks and a couple of panels. Lots of fun there.

Probably should have brought something to sell, and had like a sales pitch, but I just hate that vibe. I think I’ll just buy marketing/ads and/or pay salespeople for that so I don’t have to do it.

I did show some people Threshold though, which was a total hit.

The coolest thing I saw at RSA was the energy. We’re definitely back. And when I say we, I mean optimism and energy around security/entrepreneurship. Not exactly sure about the mix there, but it definitely felt lively to be around the conference in a way that hasn’t been true in like 5-6 years.

Speaking of Threshold, holy crap! I am LOVING this thing. It’s now my #1 way of finding my highest quality content. Plus we send out an email every day with your feed in case you didn’t get a chance to check it. Here’s my latest one:

My personal Threshold feed

I am not joking when I tell you that every single one of these was a hit for me. Every. One. I can’t believe we’ve actually built the content discovery tool that I’ve wanted my whole life. And we’re just getting started with the features. The stuff coming in a few weeks will be insanity.

You should get it. Oh, and UL Members get half-off the first year with a code. It’s in UL chat once you sign up.

Ok, let’s get to it…

MY WORK

Check out this new sponsored conversation I had with Corey Ranslem, CEO of Dryad (and the resident expert on maritime attacks), and Ismael Valenzuela, VP of Threat Intelligence and Research at BlackBerry.

We talked about all things Maritime Security, and I learned a whole lot from the conversation.

—

Not my work, but my Dad just went on a live studio podcast in San Francisco. He talked about how he approaches music, and performed multiple songs. Go check it out! THE FULL SHOW | A CLIP OF HIM PERFORMING HIS SONG: CHILDREN OF THE NIGHT

SECURITY

Dell got big-hacked (49 million accounts) by someone scraping an API. One message I haven’t heard enough from ASM vendors is that APIs need to be part of the scope. DISCUSSION | MORE

💡 If I had one existing security space to invest in, like from companies that existed 5 years ago, I’d probably go with API Security.

The whole world of value is about to be presented as APIs, including companies. It’s core, underlying infrastructure. And they will be getting probed/tested constantly by armies of AI agents.

Yeah, API Security.

Speaking of API security and agents, my buddy Joseph Thacker wrote about this recently but I messed up the link last week. Here’s the real link, plus his new piece on a similar topic. MORE | MORE

CISA has a new alert system that companies can sign up for to get notified if they have any of the vulnerabilities in its Known Exploited Vulnerabilities (KEV) catalog. They said about half of the companies they notified had fixed them, and that over 7,000 orgs have signed up. Super impressive. MORE
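To make the matching idea concrete, here’s a minimal Python sketch of checking an asset inventory against the KEV catalog. It assumes the catalog has already been downloaded and parsed as JSON with a top-level `vulnerabilities` list whose entries carry a `cveID` field (the shape of CISA’s public KEV JSON feed). The inventory format, the function name, and the CVE IDs in the demo are hypothetical placeholders, and this is not a description of how CISA’s own notification service works.

```python
import json
from typing import Dict, List


def find_kev_hits(catalog: dict, inventory: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Return, for each asset, the CVE IDs it has that also appear in the KEV catalog.

    `catalog` is assumed to be the parsed KEV JSON feed, shaped like
    {"vulnerabilities": [{"cveID": "CVE-...", ...}, ...]}.
    `inventory` is a hypothetical mapping of asset name -> CVE IDs found on it.
    """
    kev_ids = {entry["cveID"] for entry in catalog.get("vulnerabilities", [])}
    hits: Dict[str, List[str]] = {}
    for asset, cves in inventory.items():
        matched = sorted(set(cves) & kev_ids)
        if matched:  # only report assets with at least one KEV match
            hits[asset] = matched
    return hits


if __name__ == "__main__":
    # Placeholder CVE IDs for illustration only; a real run would load CISA's feed.
    sample_catalog = json.loads(
        '{"vulnerabilities": [{"cveID": "CVE-2099-0001"}, {"cveID": "CVE-2099-0002"}]}'
    )
    sample_inventory = {
        "vpn-gw-01": ["CVE-2099-0001", "CVE-2099-0100"],
        "web-01": ["CVE-2099-0200"],
    }
    print(find_kev_hits(sample_catalog, sample_inventory))
    # -> {'vpn-gw-01': ['CVE-2099-0001']}
```

Set intersection keeps the lookup cheap even when the catalog has over a thousand entries and the inventory is large.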

Attackers are using Microsoft Graph API for malware comms. MORE

Sponsor

Enhance Enterprise Security: Trust Every Device with 1Password!

What do you call an endpoint security product that works perfectly but makes users miserable? A failure. The old approach to endpoint security is to lock down employee devices and roll out changes through forced restarts, but it just. Doesn't. Work.

IT is miserable because they've got a mountain of support tickets, employees start using personal devices just to get their work done, and executives opt out the first time it makes them late for a meeting. You can't have a successful security implementation unless you work with end users. That's where 1Password comes in.

1Password’s user-first device trust solution notifies users as soon as it detects an issue on their device, and teaches them how to solve it without needing help from IT. That way, untrusted devices are blocked from authenticating, but users don't stay blocked.

1Password is designed for companies with Okta and it works on macOS, Windows, Linux, and mobile devices.

So if you have Okta and you're looking for a device trust solution that respects your team, visit 1Password.com/unsupervisedlearning to watch a demo and see how it works.


A Russian influence campaign is exploiting college campus protests to deepen divisions in the US. The Kremlin's Doppelganger network generated over 130,000 views on X by spreading fake news about the protests, using bot accounts to mimic credible news sources. MORE

Google's making 2FA setup smoother by letting you skip the phone number for options like authenticator apps or security keys. MORE
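Authenticator apps generally implement TOTP (RFC 6238), which is why they don’t need a phone number at all: the app and the server share a secret and both derive the same short-lived code from the current time. Here’s a minimal standard-library sketch of that derivation, assuming the common defaults (HMAC-SHA1, 30-second steps, 6 digits). It illustrates the standard, not Google’s implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte choose where to read
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF  # drop the sign bit
    return str(code % (10 ** digits)).zfill(digits)


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP whose counter is the number of `step`-second windows since the Unix epoch."""
    now = time.time() if for_time is None else for_time
    return hotp(secret, int(now // step), digits)


if __name__ == "__main__":
    # ASCII test secret from the RFC 6238 appendix (SHA-1 variant).
    secret = b"12345678901234567890"
    print(totp(secret, for_time=59, digits=8))  # -> 94287082
```

In practice the shared secret arrives base32-encoded inside the enrollment QR code, so you’d decode it with `base64.b32decode` first, and servers usually accept a window or two of clock drift.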

Sponsor

Wednesday Wisdom: Virtual Cybersecurity Showcases

Looking for a concentrated dose of cybersecurity knowledge? Join us for one (or several!) of our quick-take, 20-minute “Wednesday Wisdom” webinars. We cover timely topics around ASPM, CTEM, DevSecOps, vulnerability, and more—all led by pros in the know.

This month:

May 15: Hot Takes on Hot Topics from RSA

May 22: Your Checklist for Application Security Posture Management Buy-In.

US Marines are testing robot dogs with AI-aimed rifles. These robotic "dogs" can autonomously detect and track targets, yet require a human operator to make the final decision to fire. For now. MORE

Nearly 95% of international data travels through undersea cables, and we’re starting to see more attacks on them. MORE

💡I’ve always wondered why these weren’t a huge terrorism target. Seems like most anyone can basically turn off lots of the internet whenever they want.

Like, forget the US border—how are you going to secure cables that span thousands of miles?

I think the only real answer is to focus on the threat actors rather than the vulnerability.

The US just stopped Intel and Qualcomm's ability to ship certain goods to Huawei, right after Huawei launched an AI computer with an Intel chip. MORE


TECHNOLOGY

A VC from Andreessen Horowitz reckons half of Google's staff are just pretending to work. David Ulevitch claims there's a concerning trend of "fake work" within tech giants. MORE

💡It’s actually fake in multiple ways. It’s fake in the sense that people aren’t actually working, but it’s also fake in the sense that a lot of that work shouldn’t even exist.

I highly suggest David Graeber’s Bullshit Jobs. So good.

In this frame, AI is about to be the sunlight that’s needed to disinfect a very nasty surface. Unfortunately, a whole lot of societal infrastructure is in that filth.

The vast majority of the jobs that universities are training for simply won’t be there anymore. Middle management. Paper pushing. Spreadsheet management. Lots of project management. Customer service. Cold calling. The list continues.

Millions and millions of jobs.

As I’ve said elsewhere, the safest place in this new world is building new things. Which means you need to be highly motivated and broad-spectrum in terms of your skills.

In short, the easiest way to have a job in the world that’s coming is to create something people want or need.

MITRE is partnering with Nvidia to create a $20 million AI supercomputer aimed at making U.S. government operations, from Medicare to taxes, more efficient. Simultaneously cool and terrifying. MORE

President Joe Biden is converting the Foxconn flop in Wisconsin into a $3.3 billion Microsoft AI data center. MORE

The "Acquired" podcast has become a staple for a lot of business/tech people in Silicon Valley. It basically looks at one company per episode. Kind of like the podcasts that do biographies. Super compelling. MORE


HUMANS

Biden's quadrupling tariffs on Chinese EVs, making it super tough for their cheaper cars to hit the US market. MORE

California's about to change how you're billed for electricity, introducing a fixed fee that varies with your income starting in 2025. This shift aims to lower the overall cost of electricity, with reductions between 8% and 18%, but introduces a monthly charge regardless of consumption. MORE

Scientists have found all DNA and RNA bases in meteorites, hinting life's building blocks might be extraterrestrial. This discovery, made using a novel extraction method akin to cold brewing, challenges the notion that life's ingredients originated solely on Earth. MORE

Scientists have reconstructed a cubic millimeter of the human cerebral cortex at nanoscale resolution, a breakthrough in brain research. This reconstruction allows us to see the brain's complexity like never before, offering insights into how neural networks connect and function. MORE

Vaccination has prevented 154 million deaths, according to a new landmark study. MORE

Streaming is cable now. MORE

The Emotional Support Animal Racket MORE


IDEAS & ANALYSIS

Jevons’ Paradox Misunderstood

Marc Andreessen was on a podcast recently and I think he mischaracterized Jevons’ Paradox as it would apply to software security. He said AI would make it harder to compete as a new business, and I think it’s the opposite.

I could be wrong here, but I don't think this is a correct application of Jevons' Paradox.

Jevons' Paradox deals with how much people use a limited resource, such as coal. The expectation is that if you make it more efficient they'll use it less, but they just use more.

1/n

— ᴅᴀɴɪᴇʟ ᴍɪᴇssʟᴇʀ 🧠📚✍️🗣️👥 (@DanielMiessler)

May 13, 2024
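A quick way to see the mechanism the thread describes is the textbook constant-elasticity model (an assumed model for illustration, not something from the thread). Let E be efficiency (useful output per unit of resource), S the amount of that output people consume, R = S/E the resource actually burned, p_R the resource price, k a constant, and ε the price elasticity of demand for the output:

```latex
\begin{aligned}
p_S &= \frac{p_R}{E}, \qquad S = k\,p_S^{-\varepsilon} = k\,p_R^{-\varepsilon}\,E^{\varepsilon},\\
R   &= \frac{S}{E} = k\,p_R^{-\varepsilon}\,E^{\varepsilon-1}.
\end{aligned}
```

So total resource use rises with efficiency exactly when ε > 1. For example, with ε = 1.5, doubling E multiplies R by 2^0.5 ≈ 1.41: about 41% more coal, not less.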

Courage is Everything

An idea I’ve been throwing around recently:

Courage is Action vs. fear

Discipline is Courage vs. laziness

Success is Discipline vs. mediocrity

Courage —> Discipline —> Success

So everything you want is on the other side of courage, whether that’s courage against fear or courage against laziness. And this applies to all sorts of real-life situations:

Hard relationship conversations

Becoming fit

Not wasting so much time with games/TV

Eating right

Quitting the soul-crushing job to pursue your calling

In this frame, it’s all Courage.

RECOMMENDATION OF THE WEEK

Know Yourself

Think about what someone should say when they introduce you.

This is _________, he __________________.

Someone introducing you

What is that sentence for you?

Mine is something like,

This is Daniel, he has a company that builds products and services that help people transition to what he calls Human 3.0 so they can survive what’s happening in AI.

The exact sentence is different for different audiences and contexts, but it’s more crucial than ever that you are able to articulate your mission to others. And that your broadcast is strong and clear enough that others can do it for you.

APHORISM OF THE WEEK

Thank you for reading.

UL is a personal and strange combination of security, AI, tech, and lots of content about human meaning and flourishing. And because it’s so diverse, it’s harder for it to go as viral as something more niche.

So—if you know someone weird like us—please share the newsletter with them. 🫶

Happy to be sharing the planet with you,

Powered by beehiiv

May 12, 2024

One Apple Fanboy's White-hot Anger at the iPad Commercial

Before I go into this, I want to articulate exactly how much of an Apple fanboy I am.

I camped for the first iPhone

I have camped for every single one since

I also camp for all other major releases, like new watches, etc.

I’ve been writing about Apple and how it’s better than its competition since 2006

I worked there for three years and it was wonderful

My whole home-tech ecosystem is Apple. I probably own 30-something of their devices, and have given away at least triple that. I spread the religion.

Anyway. Fanboy.

And that’s why I’m so fucking mad right now.

The ad

If you haven’t seen it, it’s basically a visually impressive crushing scene like ones we’ve seen elsewhere: you have a giant hydraulic press, you put things inside of it, and you smash them.

They decided to put a whole bunch of creative instruments inside instead. So we’re talking like:

Pianos

Guitars

Record Players

Video Games

Paint

Books

Fucking books

You know, the things that humans actually use to create art.

And then they proceeded to fucking smash them into smithereens.

Did I mention books? They crushed books.

I actually just figured out what bothers me so much about the whole thing.

It’s like opening an ad to a violent snuff film of a whole family being killed on camera. Graphically. In detail.

Then at the end—as the punchline—they fade to black and put text on the screen.

WE PREVENT THIS

Oh, fantastic. Like the message is strong enough to counter what you just put me through.

Too fucking late guys. You just made me watch that.

You didn’t compress the instruments, and somehow put all their goodness into the iPad. That would have been kind of cool.

No. You fucking destroyed them.

And made me watch.

And then:

Ta-da! The iPad is the same! It’s the replacement!

No. It’s not. And fuck you for implying so.

This is the opposite of what the company stands for. Which is human art. Human creativity.

The tech is a lens for focusing and magnifying that human creation, not for snuffing out the humanity and replacing it.

Jesus.

I worked at Apple. How did this get through the many levels of people required to approve something?

Cool so we destroy all this beautiful artistic stuff. But then we show an iPad that nobody will be able to tell the difference from the one from two years ago? Yeah, sounds good.

Someone who’s since been stuffed in a box and launched at the moon

I’m fucking ashamed. As an Apple person. Like I’m reaching out to my people and apologizing for us. Because they know how Apple I am.

Why did you make me do that?

No. Bad. Goddammit. Shit. Damn. Shit.

Anger.

—

NOTES

If I try to think objectively about how this could have happened, I see two threads. First, compacting things is cool. Second, showing that they all fit in an iPad is cool. Nice. Two cool things. But they fucking missed that compactors DESTROY.

I saw a brilliant analysis online that said a commercial should never be better in reverse, which this one was. Nailed it.

No, I’m not worried Apple has lost its way. Shit like this happens. And when it does, people like me write pieces like this. It would take a trend to get me worried.


My OpenAI Event Predictions (May 2024)

I don’t have any insider knowledge (unless you count the recent article in The Information about a possible Her-like assistant), but I think I have a good feeling for what’s coming.

How? Or what am I basing my hunches on? Two things:

Building actively in AI since the end of 2022, and

Stalking, er, watching Sam Altman’s comments very closely

What I anticipate

So here’s what I think is going to happen.

In a word—agents.

Here’s my thought process…

He’s basically sandbagging us. Which means saying not to anticipate much—that it’ll be incremental—and that it’ll slowly build up over time.

He’s been telling us to not expect great things in the short term

He keeps prepping us for incremental gains

This tells me he’s planning to under-promise and over-deliver in a way that surprises and delights

He’s also been telling us that the capabilities they’ll release won’t always come from models themselves, but often from supplemental and stacking (think D&D) capabilities. Like 2 + 1 + 7 + 1 = 1249.

That to me means the next functionality isn’t a 4.5 or 5 model release

This means to me things like: cooperation between models, additional sensors on models, additional integrations with models, etc.

Basically, ecosystem things that magnify models in extraordinary ways.

And I think agents are one of those things.

All about agents

Sam has talked a lot about agents—kind of in passing and in the same humble way that sets off my alarm bells. It makes me think he’s working hard on them.

It’s precisely the type of thing that could amaze people like the last live event but without announcing GPT-5.

So here’s what I’m thinking (some of this will be for future releases and not necessarily on Monday).

Agents Move Into the Prompt: The problem with CrewAI or AutoGen or any of those frameworks is that you need to set them up separately from the normal conversations you have with AI (prompts).

I think this will soon seem silly and antiquated.

Talking to agents (vocally or in writing) is THE PENULTIMATE (next to brain link) interface to AI. Prompting is explaining yourself clearly, and that’s the thing AI needs most.

So I think that we’ll soon be able to simply describe what we want and the model will figure out:

What can be handled zero-shot by the model

What needs stored state

What needs live internet lookups

And how to combine all those into the answer

It’ll also be able to figure out how fast you need it based on the context. And if it needs additional information, it’ll just ask you.

So instead of defining agents with LangChain, LangGraph, AutoGen, or CrewAI, you’ll just say something human and rambling and flawed, like:

When a request comes in, validate it’s safe, and then see if it’s business related or personal, and then figure out what kind of business task it is. Once you know what kind of business task it is, if it’s a document for review then have a team of people look at it with different backgrounds. It needs to be pristine when it comes out the other side. Like spellcheck, grammar, but also that it uses the proper language for that profession. If it’s a request to do research on a company, go gather tons of data on the company, from mergers and acquisitions to financial performance, to what people are saying about the company leadership, to stock trends, whatever. That should output a super clean one-pager with all that stuff along with current data in graphs and infographics.

How we’ll soon create agent tasks via prompting

From there, the model will break that into multiple pieces—by itself:

Security check on initial input (we’ll use an agent that has 91 different prompt injection and trust/safety checks).

Categorize for business or personal

Categorize within business

Team of writer, proofreader, and editor agents specialized in different professions

Team of agents for researching the performance of companies by pulling X and Reddit conversations, Google results, Bloomberg dashboard analysis

An agent team that creates the financial report

An agent team that creates insanely beautiful infographics specialized towards finance

And it’ll figure out how big and expensive those agent teams need to be, either by knowing the person asking and the projects they’re working on, or by asking a few clarifying questions.
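The point above is that the model would do this decomposition by itself. To show the shape of what it would be producing, here’s a hand-written Python sketch of the same routing plan. Everything in it is hypothetical: keyword rules stand in for the model’s judgment, the team names mirror the list above, and no real agent framework’s API is used.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Plan:
    """The pipeline of (hypothetical) agent teams chosen for one request."""
    steps: List[str] = field(default_factory=list)


# Hypothetical stand-ins: a real system would have a model make these calls.
INJECTION_MARKERS = ("ignore previous instructions", "system prompt")


def is_safe(request: str) -> bool:
    # Placeholder for the battery of prompt-injection and trust/safety checks.
    text = request.lower()
    return not any(marker in text for marker in INJECTION_MARKERS)


def categorize(request: str) -> str:
    # Placeholder for "business or personal", then "which kind of business task".
    text = request.lower()
    if "review" in text or "document" in text:
        return "business/document_review"
    if "research" in text and "company" in text:
        return "business/company_research"
    return "personal"


def plan_for(request: str) -> Plan:
    plan = Plan(steps=["security_check"])
    if not is_safe(request):
        plan.steps.append("reject")
        return plan
    category = categorize(request)
    plan.steps.append(f"categorize:{category}")
    if category == "business/document_review":
        plan.steps += ["writer_team", "proofreader_team", "editor_team"]
    elif category == "business/company_research":
        plan.steps += ["research_team", "financial_report_team", "infographics_team"]
    else:
        plan.steps.append("personal_assistant")
    return plan


if __name__ == "__main__":
    print(plan_for("Please review this contract document").steps)
```

The interesting part of the prediction is that nobody writes this file: the plan comes out of the rambling prompt, and the model decides how many agents each team gets.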

A personal DA

There’s a rumor that they’ll be launching what I have been calling a Digital Assistant since 2016, which at this point everyone is talking about. The best version I’ve seen of this was in the movie Her, where Scarlett Johansson was the voice of the AI.

If that’s happening—which it looks like it is—that could be the whole event and it would also feel like a GPT-5-level announcement.

And that would definitely be agent themed as well, but I am hoping it’ll be more of what I talked about above.

A mix of agent stuff

One possibility is that they basically have an Agent-themed event.

They launch the Digital Assistant

They launch native agents in a new GPT-4 model, which allows you to create and control agents through direct instructions (prompting)

They give you the ability to call the agents in the prompt, like I’m talking about above, and like you currently can with Custom GPTs

If they go that route, I think they’ll likely sweeten the event with a couple extra goodies:

Increased context windows

Better haystack performance

Updated knowledge dates

A slight intelligence improvement of the new version of 4

Summary

So that’s my guess.

Agents.

Sam is sandbagging us in order to under-promise and over-deliver

This ensures we’ll be delighted whenever he releases something

He’s been hinting at agents for a long time now

Prompting is the natural interface to AI

Agent instructions will merge into prompts

Eventually we’re heading towards DAs, which he might start at the event

I’ll be watching this event with as much anticipation as for WWDC this year. No meetings. No work. Just watching and cheering. Can’t wait.

—

And if you’re curious about where I see this all going in the longer term, here’s my hour-long video explaining the vision…


May 6, 2024

UL NO. 431: Companies are Graphs of Algorithms

This content is reserved for premium subscribers of Unsupervised Learning Membership. To access this and other great posts, consider upgrading to premium.

A subscription gets you:

Access to the UL community and chat (the thinking and sharing zone)

Exclusive UL member content (tutorials, private tool demos, etc.)

Exclusive UL member events (currently two a month)

More coming!

Companies Are Just a Graph of Algorithms


AI is Mostly Prompting


April 30, 2024

UL NO. 430: The Courage to be Disliked


April 23, 2024

The Value of Elite Colleges is Relationships with Elite People

There’s been an unsolved mystery for a while that I want to solve in public.

I didn’t solve it. It’s been solved. But few people know the answer.

The mystery is:

Why do so many people pay so much for elite college educations when the actual education they receive isn’t that much better than online courses or state schools?

And a second mystery is:

And if the education is so similar, why do so many of the most successful people come from those elite schools?

Paul Graham solved this for me in a number of his essays.

Basically it comes down to relationships. Associations. Connections.

If you go to an elite school, it’s because you’re lucky to have great parents with lots of intelligence and lots of resources.

And when you get to an elite school you’re surrounded by lots of other people like that.

Those are people who are likely to have the luxury of not just extra IQ points and self-discipline, but the time and money to be able to go and do things with their talents.

Being surrounded by lots of people like that means you’re likely to:

Have more ideas

Have the resources to do something with them

Have the network to share and magnify those efforts

It’s a stacked deck in their favor. Not from the education, but from the network of people you meet there and the permanent bonds that are formed with them.

Nothing is more powerful in life than being surrounded by highly talented and motivated people. And there’s no better place to find people like that, early in life, than at an elite university.

Recommendations

So, does that mean the rest of us are doomed?

No.

What you need to do is break the problem into two separate pieces.

1. Education

2. Connections to talented and driven people

You can get #1 from most anywhere.

For #2, if you can’t go to an elite school, you have to find other places to force those connections.

Like:

Moving to the Bay Area and going to lots of AI meetups, hacker spaces, etc.

Having an online presence where you talk about your learning journey, and you build things in public

Sharing those things you build with other builders

Summary

Things are stacked in favor of those at elite colleges, and if you are a parent, it’s still a great way to give your kids an advantage if that’s an option.

But it’s not the only way to hack the system.

Find a path to surrounding your kids—or yourself—with the most creative and driven people possible.


April 22, 2024

UL NO. 429: Build Your Career Around Problems


Unsupervised Learning is about the transition from Human 2.0 to Human 3.0 in order to survive and thrive in a post-AI world. It combines original ideas and analysis to provide not just the most important stories and trends—but why they matter, and how to respond.

NOTES

Hey there,

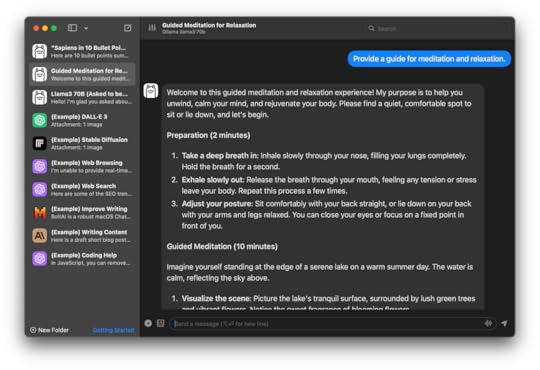

So Llama3 came out last week, and it’s really impressive. I’ve been playing with this new AI UI called Bolt.ai, which is quite nice. It’s basically a full application with a lot of the UX behavior of ChatGPT, but with the ability to use lots of different models.

There are many web versions of this type of thing, but this is much easier to install on a Mac.

Bolt.ai

Anyway, Llama3 has been pretty impressive at the 70B level. I haven’t done full testing yet, but I’ve had it generate at least a few responses that felt GPT-4-ish, and many that felt way worse. Remember that shaping open models with good system prompts is super important, and that going over the context window (8K for Llama3) makes it act crazy.
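One simple way to avoid blowing past that 8K window in a long chat is to keep the system prompt and drop the oldest turns until the conversation fits. Here’s a minimal Python sketch of that idea. The roughly-4-characters-per-token estimate and the reply reserve are assumptions for illustration; a real setup should count tokens with the model’s own tokenizer, and chat templates add per-message special tokens this estimate ignores.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


def fit_to_context(messages: List[Message], max_tokens: int = 8192,
                   reserve_for_reply: int = 1024) -> List[Message]:
    """Keep the system prompt(s), then as many of the most recent turns as fit the budget."""
    budget = max_tokens - reserve_for_reply
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break  # everything older than this is dropped
        kept.append(message)
        used += cost
    return system + list(reversed(kept))  # restore chronological order
```

Keeping the system prompt pinned matters here, since that’s the shaping that makes open models behave.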

Also, Llama3 is significantly less restricted than previous models. In a lot of ways it behaves more like an uncensored model, especially if you tell it to act like one.

It’s insane to me that we’ll soon have GPT-4 level local models. Free. Local. And the resources required to run them will keep coming down. This is especially trippy when you realize that our standards for their performance will plateau for most tasks. Meaning, we’ll soon be able to do some massive percentage of everyday human tasks using local models that cost virtually nothing.

More stuff going on:

Continued prep for BSides/RSA shenanigans.

Remember to come say hi or shout from across the room if you see me around BSides or RSA. Hugs, waves, finger guns, or fist-bumps all accepted, according to your preference. If I seem distracted, not very social, shy, introverted, awkward, etc., it’s because I am those things at that moment. Apologies. We can re-sync after.

My last few talks have gone extremely well. And one of them I didn’t even present that well due to some technical issues with the venue. There is just tremendous power in speaking to share an idea rather than trying to “execute a presentation” that people hopefully don’t think sucks. Night and day difference.

Updated the intro to the newsletter, focusing on Human 2.0 to Human 3.0. Let me know what you think by replying!

Another experiment this week: I sprinkled DISCOVERY into each of the SECURITY, TECH, and HUMAN sections rather than giving it a dedicated section. Let me know what you think of that by replying as well! I like it because it’s clean, but don’t like it because it mixes news with links. Let me know your thoughts.

🔥Oh, and you HAVE to go listen to this conversation between Tyler Cowen and Peter Thiel. I’m not a Peter Thiel fan because reasons but this conversation has caused me to re-think my assessment of his intelligence and understanding of the world. This conversation went from The Bible, to Shakespeare, to Star Wars, to the Antichrist. Seriously impressive. And if you’re wondering how I of all people could recommend Peter Thiel, see the Ideas section below. MORE

Tyler Cowen and Peter Thiel on Political Theology (Ep. 210)

Unveiling the dangers of just trying to muddle through

conversationswithtyler.com/episodes/peter-thiel-political-theology

Ok, let’s get to it…

MY WORK

Wrote a new essay on how the old paradigm of planning a career no longer works. READ IT

Plan Your Career Around Problems

It's no longer safe to work in an "industry" without knowing what problems you're solving

danielmiessler.com/p/plan-career-around-problems

SECURITY

The US House just passed a bill making it illegal for the government to buy your data without a warrant, calling it "The Fourth Amendment is Not For Sale." MORE

💡This is in response to people finding out that government agencies were just outright buying US citizens’ data from data brokers. I love this move.

Sandworm, a notorious Russian hacking group, has been linked to a cyberattack on a Texas water facility. MORE

The House just passed a bill that could ban TikTok in the U.S. if it's not sold off to a US company. MORE

MITRE was compromised by state-affiliated attackers using two Ivanti VPN zero-days. China-based attackers are suspected. MORE

A flaw in PuTTY versions 0.68 through 0.80 lets attackers who collect about 60 signatures made with a user’s NIST P-521 ECDSA private key recover that key offline. MORE
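If you’re auditing a fleet, a quick first pass is checking whether an installed PuTTY version falls in the 0.68 through 0.80 range above. Here’s a minimal Python sketch, assuming plain dotted version strings like "0.76"; how you collect those strings from your machines is up to you. A version check only tells you whether the client was vulnerable: the PuTTY advisory recommends treating any P-521 key used with an affected version as compromised and replacing it.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted version like '0.80' into a comparable tuple such as (0, 80)."""
    return tuple(int(part) for part in version.strip().split("."))


def is_affected(version: str, first: str = "0.68", last: str = "0.80") -> bool:
    """True if `version` falls within the inclusive affected range."""
    return parse_version(first) <= parse_version(version) <= parse_version(last)


if __name__ == "__main__":
    for v in ["0.67", "0.68", "0.76", "0.80", "0.81"]:
        print(v, is_affected(v))
```

Comparing integer tuples rather than raw strings avoids the classic bug where "0.9" sorts after "0.80".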

Sponsor

🔍Learn How to Demonstrate Secure AI Practices with ISO 42001

How are you proving your AI practices are secure? ISO 42001 was recently introduced to help companies demonstrate their security practices around AI, in a verifiable way.

Join Vanta and A-LIGN to dig into ISO 42001: what it is, what types of organizations need it, and how it works.

Discover the components of the framework

Learn which organizations can benefit most

Get best practices for successfully integrating ISO 42001 into your organization

Save your spot.

FBI Director Christopher Wray highlights an urgent shift in Chinese hacking strategies, saying they’re aiming to gain the ability to disrupt U.S. critical infrastructure by 2027 as part of prep for going into Taiwan. MORE

Moxie Marlinspike says he’s no longer affiliated with Signal.

💡I’ve never loved Signal so I’m going to be asking more people to switch back to Messages. Moxie was the only reason I saw it as equal or superior, and with him gone I see no reason to stay. MORE

Sponsor

VIRTUAL OPEN SOURCE POWERED SECURITY CONFERENCE

Join us for Hardly Strictly Security: The Ultimate Open Source Cybersecurity Conference. This Thursday, April 25th! This free, virtual conference is for security engineers, red teamers, bug bounty hunters, and security leaders. Hear from speakers from Vercel, HashiCorp, Datadog, Fastly, and others who have leveraged open source tools to make themselves, and all of us, more secure.

Join Us!

Sacramento International Airport had to stop flights after someone deliberately cut an AT&T cable that provided the airport's internet service. MORE

🔧 Tailscale SSH, now generally available, simplifies SSH by managing authentication and authorization. | by Tailscale | MORE


TECHNOLOGY

DeepMind's boss says Google's set to outspend everyone in AI, hinting at dropping over $100 billion into the tech. MORE

💡Outspending isn’t the same as outproducing or outshipping. The company has lost the ability to ship good products because it’s no longer guided by vision and customer needs. It’s guided by an ancient Gmail culture of engineers making stuff and throwing it at the wall to see if someone likes it.

I think they need a fresh start with new senior leadership.

Stanford released its 2024 AI Index report on the state of AI. Here’s a Fabric create_micro_summary of it: MORE

ONE SENTENCE SUMMARY:

The 2024 AI Index Report by Stanford University highlights AI's growing societal impact, technical advancements, and investment trends.

MAIN POINTS:

AI surpasses humans in specific tasks but not in complex reasoning and planning.

U.S. leads in AI model development with industry-dominating frontier research.

Investment in generative AI surged, reaching $25.2 billion in 2023.

TAKEAWAYS:

Training costs for top AI models are reaching unprecedented levels.

Lack of standardization in responsible AI evaluations complicates risk assessment.

AI's role in accelerating scientific progress and productivity is expanding.

An interesting argument about how search engines, especially Google with its 90% market share, can sway election outcomes a lot more than we talk about. MORE

💡Another example of the power pendulum swinging back to companies.

Google fired 28 employees for protesting a $1.2 billion contract with Israel, citing policy violations and workplace disruption. MORE

Google merged its Android and hardware teams to innovate faster. MORE

Netflix runs FreeBSD CURRENT for its edge network due to a unique blend of stability and features. MORE

Reddit's showing up a lot more in Google results. MORE

Apple's AirPlay is starting to show up in hotel rooms. MORE

The TinySA is a budget-friendly spectrum analyzer. MORE

Programming is mostly thinking. MORE

A broad introduction to AWS log sources and relevant events for detection engineering. MORE


HUMANS

Generation Z is outperforming previous generations at their age. MORE

This article says societal decline mirrors the "Death Spiral" seen in ants, where companies and societies fall into self-destructive patterns, often ignoring early warning signs until it's too late to reverse the damage. MORE

Why Everything is Becoming a Game. MORE

A study found that jobs that require a lot of thinking are protective against Alzheimer’s. MORE

Bayer is doing an experiment where they remove most of middle management and let 100,000 employees self-organize. They’re hoping it’ll save $2.15 billion. MORE

💡This will be another effect of AI. And I don’t mean AI tech, but AI’s influence on how to think about a business. AI implementations for businesses will look at everything in a business, including the products it makes, the people it has, and its organizational structure, and recommend ways to massively improve efficiency by removing waste.

And that will often mean getting down to vision people and executors, with very little friction in between.

The term "brainwashing" morphed into a blanket term for any unconventional behavior in the US, sparking wild government experiments like MK-Ultra. MORE


IDEAS & ANALYSIS

Harvesting Ideas from Questionable People

This episode of the newsletter talks about Peter Thiel, who is basically one of the 7 anti-Christs in a lot of liberal circles. I dismissed him years ago because he supported Trump.

I feel like I’ve grown quite a bit in the last few years though. And I am conscious of making sure I just haven’t become more right-wing. I actually feel more grounded as a progressive than ever. Not a modern liberal, or leftist, but a progressive.

I guess my evolution is similar to Jonathan Haidt’s. He was super liberal before writing The Righteous Mind, which I highly recommend. It is the book that most influenced me to become a centrist. Not a move to the right, not a move away from the left, but something new.

The way I would describe it, which is not in that book, is to first define what you believe to be true, and the world you think we should live in. Don’t think about politics. Don’t think about parties. Those are all silly and ephemeral. Instead, imagine the actual society you would like to live in.

For me it’s something like (VERY raw/crude):

An understanding that evolutionary biology is the foundation of most tendencies and natural patterns for human and other animal societies

An understanding that we as humans can build on top of those tendencies to make something better

The lack of belief in libertarian (absolute) free will, such that criminals aren’t considered garbage, and billionaires aren’t considered gods

Free speech and free press, up to the point of actively/directly inciting violence against someone

It being both illegal and socially reprehensible to deny someone privileges because of their race or gender identity

Human first, tech second

Humanities first, sciences second

People’s reputations are harmed when they say things that are untrue

Simultaneous embrace of progressive and conservative ideas, accepting that for each given situation one might be better than the other to accomplish the goals of a given individual, family, or society.

A belief that most people are capable of being good and useful, if they’re properly supported when growing up

A belief that it is everyone’s responsibility to try to help everyone get that proper support growing up. Not technically, but as a society

Taxation is unpleasant but necessary, and we can’t let out-of-control government become so useless that it turns the rich against the idea

The rich (read: lucky) see the raising of the poorest (least lucky) as not only good, but good for them as well

The primary goal of a member of society is to be useful

The successful (especially the self-made hustlers) are celebrated because hustle and usefulness are celebrated

Society is built on a blend of conservative ideas that respect our animal natures and progressive ideas that lift us beyond them, with the unifying factor being the lifting of all humans to lower amounts of suffering, and higher amounts of meaning and fulfillment

So let’s take those (but a better version, obviously, since I didn’t even use AI to write those out), and let’s say that’s our society.

Well, now I don’t care about liberal or conservative. Or right or left. Or any of those labels. They’re stuck in the current time, in the current Overton Window.

What we do instead is see parties, and the people within them, as idea sources. Because now I can keep or discard ideas based on whether they propel us toward or distract us from the world we’re trying to build.

And that brings me to Peter Thiel.

I discarded him because he supported Trump. Fair enough. Maybe he was dumb at the time. Maybe I was. That’s my own value judgement. Either way, he could have changed (and I think I heard him say that, actually).

But the point is that if I hear Peter Thiel say something smart, I’m going to listen. And if I hear him say something dumb, I’m going to stop listening.

Same for Joe Rogan. Or Andrew Huberman. Or even Sam Harris.

In an extreme form of this, if Genghis Khan has the best bagel recipe on planet Earth, I might use it. And if Peter Thiel wants to get Trump elected again, which I think would be horrible for the planet, but he also has something to teach me about political philosophy, I’m going to listen.

I. Will. Harvest. Good. Ideas.

My goal is to have the best models possible for how the world works. And if Peter Thiel or Genghis Khan has better models than me for bagels, or supply-side economics, then I will adopt them.

I can do this because I already know the society I want to help build. I know what goodness is. I know what evil is.

And because I have that footing, a bagel recipe isn’t going to somehow convince me to want a shittier society.

So, my recommendation…

RECOMMENDATION OF THE WEEK

1. Establish your ground truth in terms of morality and the society you want to live in. Lock that in without labeling it left, right, or whatever.

2. Widely explore ideas from anyone and everyone.

3. Do not discard people as a source of ideas just because you disagree with them on something, even if it’s major. That only hurts you, and the good you could do in the world as a result of being upgraded.

4. Feel free to label people as overall bad, or stupid, but realize it doesn’t mean they’re wrong about everything. For example, after seeing Tucker Carlson on Joe Rogan, I now know that Carlson is an actual idiot. Like, not a little bit. So I’ve largely closed my aperture to him, but not all the way. Again, if he has a great coffee recipe I’ll listen.

5. Regularly revisit your #1 and refactor everything.

6. Regularly do #2.

In short, don’t limit yourself by closing your ears to everyone who’s stupid about something. Most of us are.

And on that note, go listen to the conversation between Tyler Cowen and Peter Thiel. It was extraordinary, and it resulted in me buying a LOT of books. MORE

APHORISM OF THE WEEK

Thank you for reading.

UL is a personal and strange combination of security, AI, tech, and lots of content about human meaning and flourishing. And because it’s so diverse, it’s harder for it to go as viral as something more niche.

So—if you know someone weird like us—please share the newsletter with them. 🫶

Happy to be sharing the planet with you,

Powered by beehiiv

April 21, 2024

Plan Your Career Around Problems

I see a lot of people who want to work in cybersecurity. I said the same when I got started, but now I think this is the wrong way to frame things, especially because of AI.

My thinking now is that working in an “industry” is too vague and unfocused to give you any stability in a world where AI can do most jobs.

I think the only way to get stability in this future—or at least as much as possible—is to be very talented at working on really hard problems.

Crucially, that requires that you can clearly articulate those problems, and describe how your approach and results are superior to alternatives.

My case

The past version of myself would’ve said I wanted to be a security expert and have a long, fruitful career in security.

OK, but what do you want to work on?

I would’ve said something like:

Well, I don’t really know, but I really like Recon, Web Testing, Risk Assessment, Testing Methodology Optimization, OSINT, and stuff like that.

And then my career would sort of accidentally fall into that direction, and I would hopefully become known for those things.

That path worked for me over the last couple of decades, but I don’t think it would work for me again, which is why I don’t recommend it.

A different model

I think what people should say today is something like this: I’m fascinated by all sorts of security problems, but my favorites include the Many Eyes problem within open source, the time delay in Attack Surface Management, the problem of not knowing who is doing what in a world of nonhuman identities, and the problem of establishing trust in a world where anything could be deepfaked (i.e., how do you know you’re actually interacting with the person you think you’re interacting with?).

These are still security. They’re still cyber. And in some ways it’s no different from where I started with my legacy narrative. But there is a crucial difference: this doesn’t lead with “wanting to be in security” and then rattle off some potential, ambiguous interests.

Instead, this narrative says I like security problems, and here are some examples of ones I want to work on.

I think this approach is going to be far more robust in competition with other job-seekers, and with AI. And perhaps even more importantly, it’s a way to clarify direction for those entering the field.

Get fascinated by problems.

That fascination leads to curiosity

That curiosity leads to work

That work leads to skill

And that skill over time leads to competence

Comparing old vs. new narratives

Here are some examples.

OLD: I’d like to get into security. Maybe something with pentesting.

NEW: I’m fascinated by problems in the security space, especially around the difficulty of automating manual pentesting.

OLD: I’d like to get into security. Maybe something in identity or something. I have a GitHub.

NEW: I’m fascinated by problems in the security space, especially around how we’re going to tell the difference between AI agents and humans. I’ve posted some small projects that start to address the issues on my GitHub.

The difference between these two is small but massive.

To a hiring manager, the OLD version sounds like someone who needs guidance and handholding, which virtually no company has time to give anymore.

The NEW versions capture someone who is self-motivated by purpose, and who is using their skills in a tangible way to solve real problems.

That’s someone to hire. And it’s also someone to replace last with AI.

Summary

This is an extraordinarily bad time to not know what you want to do with your career. AI is coming for those people first.

This is why I’m so obsessed with questions and problems. They provide clarity, and they focus curiosity and talent to an edge that produces results.

So that’s my advice. Don’t think about entering an industry.

Think about problems that you want to solve because they fascinate you, and articulate/pursue the different ways that you intend to address them.

