Bruce Clay's Blog

June 16, 2015

In-House SEO or SEO Agency: Which is Right for Your Business?

In-House SEO or SEO Agency: Which is Right for Your Business? was originally published on BruceClay.com, home of expert search engine optimization tips.

Devising or revising an SEO strategy for your business and wondering whether or not to go with an in-house SEO or an SEO agency? It’s a common – and good – question to be asking. The answer depends on your budget and your goals.

Each option comes with its own benefits – and in a perfect world you would likely have both. Here’s Bruce Clay’s take on the issue: “It’s crucial to stay current with the latest SEO methodology – that’s very time-consuming, though. It requires several hours a day that a solo in-house SEO probably doesn’t have. A consultant can be a powerful ally, filling in the gaps by mentoring and guiding an in-house SEO.”

If it’s a matter of one or the other, however, it’s important to weigh the benefits of each and determine which is a better fit for your needs. Read on to find out more about the benefits of each option, with food for thought from our SEO Manager, Robert Ramirez.

SEO Agency Advantages

An SEO agency is basically a think tank of highly experienced, savvy analysts. Their experience and talent, coupled with carte blanche access to premier tools and extensive data, are an invaluable resource for any business. And there’s no surprising a strong SEO agency; they are, after all, consumed with every aspect of SEO day in and day out.

“An SEO agency is entrenched in the industry,” said Ramirez. “They’re on top of all algorithm changes as they happen. Part of an agency’s job is to know everything about search engine optimization as it happens. Bruce Clay, for example, spends two to three hours a day reading.”

SEO agencies usually produce faster results because they have more experience. They’ve worked with hundreds (or thousands!) of clients in many industries. Search engine optimization agencies have a bird’s-eye view of search.

“There’s less guesswork because — whatever the problem is — they’ve probably encountered it before,” Ramirez said.

Moreover, because SEO agencies work with multiple clients, they avoid the tunnel vision that an in-house SEO can be vulnerable to.

“Sometimes it can be hard for an in-house SEO to see a site’s issues because they’re looking at it too much. An SEO agency can offer a fresh perspective,” said Ramirez.

As far as cost is concerned, an SEO agency’s hourly rate can sometimes seem high. But with an agency, you avoid the higher cost of maintaining an in-house SEO as an employee. An in-house SEO costs more than salary alone: you are also responsible for equipment, tools, education, benefits and so on. With an agency, those costs are not part of the package; you pay for the agency’s expertise and time.

In-House SEO Advantages

An in-house SEO is thoroughly devoted to your business and focused on your brand 100 percent of the time. This SEO pro will have a robust knowledge of your industry, as well as your business’s unique needs. He or she is a go-to expert on both search engine optimization and your business. Furthermore, the in-house SEO has the advantage of working on-site with other team members.

“An in-house SEO is able to build relationships with other teams. They might have a stronger influence on IT or marketing, etc., since they’re in the building and have a day-to-day relationship with them,” said Ramirez. “On the other hand, an agency typically has the ability to escalate things to the C-Suite when teams are unresponsive. If an in-house’s words are falling on deaf ears, the agency can be the outside voice that their company will listen to. The agency can be key in making the higher-ups fall in line.”

For businesses that are in the process of building brand identity, an in-house SEO can make a lot of sense. An SEO working in-house is up to the elbows in your business’s message and methodology.

“They live and breathe your brand, which naturally makes them better equipped to represent the company’s message in marketing,” Ramirez said.

Which Is Right for You?

At the end of the day, it all boils down to your budget and needs. Whether you choose to work with an SEO agency or work with an in-house SEO, make sure you do your homework and find an experienced, ethical and effective agency or individual. When interviewing a prospective analyst, we recommend asking these “25 SEO Interview Questions” pulled straight from Bruce Clay, Inc. interviews.

If you’re looking to vet an agency, remember that a strong digital marketing firm should have an impressive track record of successful projects, longevity in the industry, seasoned SEO consultants on staff who are recognized leaders, and a reputation that speaks for itself.

In your experience, what combination of in-house and consultant works for getting search marketing initiatives done? Share in the comments! And for more talk on in-house vs. agency, check out this recent discussion from #SEMRushChat.

June 10, 2015

How to Set Up Google Search Console – Free Search Engine Optimization & Webmaster Tools for Your Website

How to Set Up Google Search Console – Free Search Engine Optimization & Webmaster Tools for Your Website was originally published on BruceClay.com, home of expert search engine optimization tips.

If you have a website, then you ought to know about Google Search Console. Formerly known as Google Webmaster Tools, this free software is like a dashboard of instruments that let you manage your site. Seriously, unless you prefer running your online business blindfolded, getting this set up should be a top SEO priority for any webmaster. In this article, you’ll learn step by step how to set up a Google Search Console account.

The Google Search Console mascot looks ready to help.

(Image from Google)

What Is Google Search Console?

Google Search Console is the new name for Google Webmaster Tools, a name change that makes the tools sound less technical and more inclusive. Search Console is free software provided by Google that reveals information you can’t find anywhere else about your own website. For any search engine optimization project we undertake, we make sure Google Search Console is set up. Google’s tools work for apps, as well, so app developers also benefit from the data.

These webmaster tools tell you how the search engine sees your site, straight from the horse’s mouth. Here you can see site errors, check for broken pages, confirm site indexing, and more. Plus, this is where you pick up any messages sent directly from Google. Whether it’s a malware warning, a detected hack on your site, or a notice that you’ve been dealt a manual penalty — the search engine alerts you through Google Search Console.

In addition, these tools show you how people interact with your site in search results. It’s like a private keyhole letting you spy on your website’s behavior in the larger world of search. For example, you can see data such as:

What search terms your site shows up for in Google search (Yes, you can see keywords here!)

Your average site rankings in search results for those keywords

How many users are clicking on your site’s listing in Google results

Side note: You may have set up Google Analytics for your site already. While there’s some overlap between the two products, they work best hand-in-hand to give you a more complete picture of your website traffic. For monitoring your SEO efforts and maintaining your website, set up Google Search Console as your primary tool.

Start by Signing In to Google

Google wants to connect the dots between your website (or app) and you, so first you must log in to your Google account. You can sign in through Gmail, Google+, or anywhere you have a Google account.

If you don’t have a Google account, you must set one up. Go to Google.com and click Sign in. Then choose Create an account and complete the form. (Bonus: You now have access to Gmail and all other Google applications.)

Set Up Google Search Console

While signed in to your Google account, navigate to this page in any browser: http://www.google.com/webmasters/tools/. Type in your website (the domain URL, such as http://www.example.com/) or app (for example, android-app://com.example/) and then click Add Property.

Note: If you already have at least one property set up, you will see that display instead of the URL entry shown below. To add another website or app, just click the Add a Property button and then enter the new site’s URL.

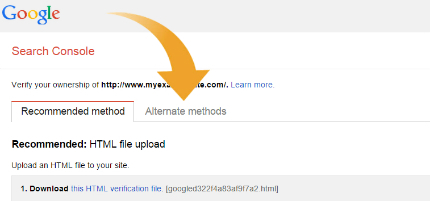

Next, Google needs to confirm that this website or app belongs to you. To verify that you are the site owner, you have several choices. Click the “Alternate Methods” tab to view them all.

Method #1: Google Analytics

If you’ve set up Google Analytics for your website AND you have “administrator” permissions, you can verify your site ownership instantly. This is the preferred method of SEOs and webmasters alike because it’s usually the easiest one. Here’s what to do:

Choose the “Google Analytics” option.

Click the Verify button. That’s the whole procedure. You’re ready to use Google Search Console!

If you DO NOT have Google Analytics, there are three methods to verify your site ownership. Read on to decide which will be the easiest verification method for you.

Method #2: HTML tag

If you have access to edit your site’s HTML code, choose the “HTML tag” option.

Copy the text line that Google displays in the shaded box (beginning with <meta). Now open your home page in your preferred website editor and paste that text into the Head section (near the top) of your home page. This creates a new meta tag. Save your changes in the editor program.

Next, back in the Google Search Console setup page, click the Verify button. That’s it!
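Before clicking Verify, it can help to confirm that the tag actually made it into the published page. The Python sketch below uses only the standard library's `html.parser` to collect the `content` value of any `<meta name="google-site-verification">` tag in a chunk of HTML. It is a hypothetical helper, not a Google tool: the function name and the token in the example are made up, and the check scans the whole document rather than only the Head section.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects the content of any <meta name="google-site-verification"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; self-closing <meta ... />
        # tags are routed here too by the default handle_startendtag.
        if tag != "meta":
            return
        attributes = dict(attrs)
        # Attribute values can be None for valueless attributes, hence the "or".
        if (attributes.get("name") or "").lower() == "google-site-verification":
            self.tokens.append(attributes.get("content") or "")


def find_verification_tokens(html: str) -> list[str]:
    """Return every verification token found in the given HTML text."""
    finder = VerificationTagFinder()
    finder.feed(html)
    finder.close()
    return finder.tokens


# Hypothetical page source with a made-up token.
page = """<html><head>
<meta name="google-site-verification" content="EXAMPLE_TOKEN_123" />
</head><body></body></html>"""
print(find_verification_tokens(page))
```

To check a live page, you could pass in the HTML returned by `urllib.request.urlopen(...)`, decoded to text. An empty list probably means the edited home page wasn't saved or published yet.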

Method #3: Domain name provider

If you cannot use the first two methods, try the “Domain name provider” option.

From the drop-down list, choose the company where you registered your domain name. Then follow the instructions Google gives you, which vary. When you’re finished, click the Verify button.

Method #4: Google Tag Manager

The last option for verifying your website works for people who have a Google Tag Manager account (and the “manage” permission). If that’s you, choose the fourth radio button and click the Verify button.

Get Started Using Google Search Console

Once Google verifies your site or app ownership, you can log in and start using your newly set up Google Search Console. Keep in mind that data takes time to collect, so it may be a few days before your new account has data worth looking at. This is an excellent time to take care of a basic but important search engine optimization task: creating a sitemap that will help Google find and index your pages faster.
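A sitemap is an XML file that lists the URLs you want search engines to discover. As a minimal sketch, assuming you already have a list of your page URLs, Python's standard `xml.etree.ElementTree` module can produce a file in the sitemaps.org format. The `build_sitemap` function and the example URLs are illustrative only; real sitemaps often add optional fields such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing each URL in its own <url><loc> entry."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page_url in urls:
        url_el = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" automatically.
        ET.SubElement(url_el, "loc").text = page_url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical site pages.
print(build_sitemap(["http://www.example.com/", "http://www.example.com/about/"]))
```

You would then save the output as a file such as sitemap.xml on your site and submit it in Search Console.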

To access the tools, go to http://www.google.com/webmasters/tools/. Sign in, then click the name of the website or app you set up in Google Search Console. Once inside, explore the left-hand menu to try out the many tools and reports. Here are some Google Help resources to help get you started:

Refine your personal and site settings – You can customize the way you set up your Google Search Console account. For instance, if your site operates in different countries, or if you have multiple domain versions, here’s where you define your preferred settings (find out more).

Set up app indexing and more – App developers can use Google Search Console to add deep linking to their apps (which allows app pages to show up in users’ mobile search results), link up their app with a website, handle any crawl errors and more (learn how).

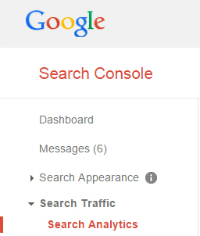

Master the Search Analytics report – Among the dozens of powerful features available in Google Search Console, we have to highlight one. The Search Analytics report, which replaced the Search Queries report from the Google Webmaster Tools days, is a powerful tool in any comprehensive search engine optimization campaign. This is where you’ll find out which web pages rank for which search terms and much, much more. Open the tool by clicking Search Traffic > Search Analytics (for more guidance, read the help article).

June 5, 2015

Google Wants You to Make Your Site Faster or They’ll Do It For You. Will You Like the Result?

Google Wants You to Make Your Site Faster or They’ll Do It For You. Will You Like the Result? was originally published on BruceClay.com, home of expert search engine optimization tips.

In April, around the time of Google’s “Mobilegeddon” mobile ranking update, the search engine announced another mobile optimization in testing. Via the Webmaster Central Blog, Google said they’d “developed a way to optimize pages to be faster and lighter, while preserving most of the relevant content.” In other words, if you don’t optimize your site so that it loads quickly for mobile devices, Google will try to do it for you.

(Get your All-In-One Mobile SEO and Design Checklist here.)

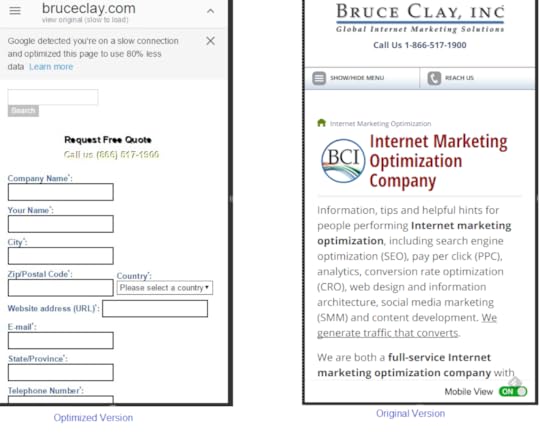

Google calls this feature transcoding and says it’s intended to help deliver results quickly to searchers on slow mobile connections. Google’s early tests show that transcoded pages have 80 percent fewer bytes and load 50 percent faster. Indonesia has been the staging ground for early field tests, where Google displays transcoded sites when a mobile searcher is on a slow connection, such as 2G.

Sounds cool, right? Now website owners and SEOs don’t need to worry about optimizing sites to be fast; Google is going to do it for us! What a magnanimous thing for Google to do. Except that there are a couple of reasons why Google’s low bandwidth transcoder should give developers and webmasters pause.

The Cons of Transcoding

Google says the optimized versions preserve “most of the relevant content.” There are two editorial decisions in that phrase: most and relevant. The biggest problem here is that Google, not you, decides which of your content is relevant, and how much of it to show.

You probably didn’t hire a bot to design your website; do you want a bot optimizing it? Take, for example, the BruceClay.com home page, where it becomes evident that a lot of styling gets stripped out:

A page optimized by Google transcoding (left) next to the original version of the page (right).

The Pros of Transcoding

Despite these cons, some sites could see real benefits from Google’s transcoding:

For many website owners, having users see a stripped-down version of a site is better than having them not see it at all.

Google includes a link to the original page on the transcoded version, so users have the option to see the page as you built it.

Transcoded pages are undoubtedly fast. For a detailed comparison, I ran the two pages above through GTMetrix and came up with the following results:

| | Page Load Time | Total Page Size | Total Requests |
|---|---|---|---|
| Original Version | 3.71 seconds | 1.94 MB | 155 |
| Transcoded Page | 0.56 seconds | 17.3 KB | 2 |
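To put the GTMetrix figures from the comparison table above on the same footing as Google's claims, the short Python sketch below converts each pair into a percentage reduction. The only assumption is the unit conversion: it treats 1 MB as 1,024 KB so both page sizes are in kilobytes.

```python
def percent_reduction(original: float, optimized: float) -> float:
    """Return how much smaller `optimized` is than `original`, as a percentage."""
    return (original - optimized) / original * 100


# Figures from the GTMetrix comparison table above.
# Assumption: 1 MB = 1,024 KB when converting page size to the same unit.
original = {"load time (s)": 3.71, "page size (KB)": 1.94 * 1024, "requests": 155}
transcoded = {"load time (s)": 0.56, "page size (KB)": 17.3, "requests": 2}

reductions = {
    metric: round(percent_reduction(original[metric], transcoded[metric]), 1)
    for metric in original
}

for metric, pct in reductions.items():
    print(f"{metric}: {pct}% lower")
```

For this one page, that works out to roughly 84.9 percent shorter load time, 99.1 percent fewer bytes and 98.7 percent fewer requests, well beyond the 80 percent byte savings and 50 percent speedup Google reported from its early tests. One page is not a benchmark, of course.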

Viewing Your Transcoded Page

If you’re curious to see how your site renders when it’s been transcoded by Google, there’s a tool to show you just that. You’ll need to do a little workaround if you’re outside of Indonesia:

Using the Chrome browser, go to the Low Bandwidth Transcoder emulator at https://www.google.com/webmasters/tools/transcoder.

Click the toggle menu in the top right corner of the browser window, then click More tools and then Developer tools.

Along the top of the window you’ll see two drop-down menus: Device and Network. Select any smartphone device from the menu selection, like the Google Nexus 4.

Enter the URL you want to test in the “Your website” field and click the Preview button.

Click the URL that appears below the text “Transcoded page:” and you will see how the page renders as Google transcodes it.

When Will We See Transcoding in the Wild?

A lot of this has to do with being lightweight; it’s not enough to use CDNs or have a high-end server. Google cares about the experience of people who access the web at the narrow end of a bottleneck, which is completely out of the hands of web developers. Your fast server only means so much to users on a 2G network.

At this point, we have no idea when, if at all, this functionality will be implemented anywhere outside of Indonesia. However, Google’s underlying statement is clear: websites should be really, really fast.

For information on how to optimize your pages for speed and mobile SEO, we recommend starting with these resources:

The All-In-One Mobile SEO & Design Checklist

SEO Tutorial: Mobile SEO and UX Optimization

Image Optimization: The #1 Thing You Can Do to Improve Mobile UX

Webmaster’s Mobile Guide by Google Developers

June 3, 2015

SMX Liveblog: Baidu Revealed: An Inside Look At ‘China’s Google’

SMX Liveblog: Baidu Revealed: An Inside Look At ‘China’s Google’ was originally published on BruceClay.com, home of expert search engine optimization tips.

Liang Zeng, Vice President, Baidu Inc.

This is a real-time report from SMX Advanced 2015.

Baidu Vice President Liang Zeng has flown 3,000 miles from Beijing to spend 25 minutes with SMX Advanced digital marketers. Thank you, Zeng!

Baidu is often referred to as the “Chinese Google.” Would you be surprised to learn that in China, Baidu is even more dominant in the search space than Google is in the U.S.? As we’re told in this session at SMX Advanced, Baidu has 96.3 percent market share among mobile users in China.

If you or your client is looking to crack the Chinese search market, it’s imperative that you learn more about the Chinese search behemoth. So, without further ado, get to know Baidu from Zeng.

Baidu Fast Facts

Baidu was founded in 2000.

Baidu was listed on NASDAQ in 2005.

Baidu was the first Chinese company to be listed on the NASDAQ 100 in 2007.

Baidu has an $80 billion market cap.

Baidu has 45,000 employees.

Baidu is the No. 1 Chinese search engine with 74 percent market share.

Baidu has 96.3 percent market share among mobile users in China.

The Chinese Search Market & What’s Next for Baidu

Zeng also shares some insight on the Chinese search market. He notes that there are 600 million smartphone users in China. One in three Chinese citizens bought a new smartphone last year.

He also tells us that only a very small percentage of people in China use browsers on mobile to search – they prefer to search within apps.

As Baidu moves forward, Zeng says that the search engine wants to serve as a bridge between people and services rather than people and search engines. In the next generation of search, Baidu wants to be a mind reader by “enhancing the user experience by showing highly relevant search results on an easy-to-read page.”

SMX Liveblog: Paid Search: Focusing on Audiences & Categories Instead of Keywords

SMX Liveblog: Paid Search: Focusing on Audiences & Categories Instead of Keywords was originally published on BruceClay.com, home of expert search engine optimization tips.

Kevin Ryan at SMX Advanced suggests that in the next few years, search engines might not let advertisers buy keywords, but instead will allow targeting on audiences and categories.

This is a real-time report from SMX Advanced 2015.

In this bite-sized liveblog, Kevin Ryan shares where he sees paid search headed as an industry. Ryan is the founder of MotivityMarketing, a columnist for Search Marketing Land and the author of “Taking Down Goliath: Digital Marketing Strategy for Beating Competitors with 100 Times Your Spending Power.” (Try saying that title ten times fast!)

“Search is declining as a percentage of share – we’re getting less data on search … Our dependence on keywords is a little ridiculous. We need to think about moving away from potentially misleading keywords,” Ryan says.

As an illustration of how keywords can be misleading, he points out that if you type “cocaine” into Amazon, one of the suggestions that comes up is “Cocaine in Groceries,” which is obviously nonsense.

“Better, then, to think about audiences and interest categories,” says Ryan.

Ryan even posits that perhaps, in a year or two, search engines might not let us buy keywords, but only audiences and categories.

At this point Ryan throws out that, for the record, he thinks Yahoo is making a comeback. (Noted.)

Other PPC points?

Paid search costs have increased tremendously since the early 2000s. Costs grow about 13 percent per year.

In 2003, U.S. paid search advertising spending grew 174 percent; “the industry literally exploded overnight.”

Give consistent messages across multiple screens.

Practical knowledge is not to be abandoned even with all search’s technology – we just have new ways of interacting with media.

Every media outlet is talking about how to connect with your potential audience as a means of advertising – this is where you should be moving your initiatives.
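Ryan's figure of roughly 13 percent annual cost growth compounds quickly. The Python sketch below shows how, assuming the rate holds constant (a simplification; real costs vary by market and keyword) and starting from a hypothetical $1.00 cost per click.

```python
import math


def projected_cost(current_cost: float, annual_growth: float, years: int) -> float:
    """Compound `current_cost` forward by `annual_growth` (0.13 = 13%) per year."""
    return current_cost * (1 + annual_growth) ** years


def doubling_time(annual_growth: float) -> float:
    """Years until a cost doubles at a constant compound growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


# Hypothetical $1.00 cost per click, growing 13 percent per year.
for year in range(6):
    print(year, round(projected_cost(1.00, 0.13, year), 2))

print("doubles in about", round(doubling_time(0.13), 1), "years")
```

At that rate, a $1.00 click costs about $1.84 after five years, and costs double roughly every 5.7 years.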

Ryan leaves us with this (creepy but true) thought: “If the average person knew how well we could target them, it would really freak them out. But … they’re freaked out for approximately 30 seconds and then they go back to watching kittens.”

SMX Liveblog: Keynote Conversation with Google AdWords VP Jerry Dischler

SMX Liveblog: Keynote Conversation with Google AdWords VP Jerry Dischler was originally published on BruceClay.com, home of expert search engine optimization tips.

This is a real-time report from SMX Advanced 2015.

Google AdWords VP of Product Management, Jerry Dischler (left), on stage at SMX Advanced with Ginny Marvin (center) and Danny Sullivan (right).

Jerry Dischler, who heads AdWords, is full of contagious energy as he gears up to talk about what’s working and what’s not in search ads and AdWords in this morning’s keynote conversation. Whenever there’s a Googler onstage, the crowd is packed, and this session is no exception.

Everyone wants to know the latest news in paid search, and Dischler won’t disappoint as he delves into topics near and dear to search marketers’ hearts, including:

How advertisers can capitalize on the opportunity of Micromoments

The future of text ads and buy buttons

Advances in attribution models

The reliability of the “Estimated Total Conversions” metric

And how marketers are marrying online and in-store strategy

Facilitated by Search Engine Land Editor Danny Sullivan and Search Engine Land Paid Search Correspondent Ginny Marvin, the keynote conversation unfolded as follows.

Micromoments

Google confirmed last month that mobile queries (meaning smartphone only) had exceeded desktop queries in ten countries. Dischler talks about what that means to Google.

“This is a watershed moment. The reason mobile is growing so fast is that consumer behavior has changed fundamentally – the average consumer is bouncing across devices. The linear and measurable process that was the hallmark of search marketing for a long time. Now, however, we have a much more fragmented journey which we’re calling micromoments. Micromoments are snippets of intent you have throughout your day,” Dischler says.

Micromoments can happen anywhere at any time. Dischler gives an example of how mobile has truly changed the way we can accomplish tasks:

“There’s this woman who bought a plot of land to build a house on. She’s doing the entire process in her spare time on her mobile phone. How could she have done that two, three, five years ago? She would have had to devote a block of hours at home on a desktop. Now she does it in the spare five minutes she’s waiting for her kids, has a break between meetings, etc. She’s interacting with builders, etc. through the mobile device. Builders have traditionally expected these big purchasing decisions to happen through desktop, but that’s changed. So builders and similar businesses like that need to think about mobile, too. And frankly they’re not,” Dischler says. “When people are ready to make commercial decisions, businesses need to be able to capture those moments.”

Text Ads: Here to Stay

Marvin asks if Google will move away from text ads.

“They’ll be here to stay for a long while. Richer formats have already been seen in extensions. We’re trying to enhance the text ads to make them more valuable for users. When session lengths are as compressed as they are on mobile, we need to deliver the information faster. Users also expect more than ever before,” Dischler says. “We build a lot of these formats with structured data, which lets businesses advertise in a way that’s more natural to them.”

Jerry Dischler, Google AdWords Product Management VP

The ‘Buy Button’

“The ‘Buy Button’ is coming to search ads – you’re going to be able to buy straight from the search. Can you tell us more about it?” Sullivan asks.

“First of all, we have no intention of being a retailer. We want to allow retailers to have effective experiences where they can drive conversions. We want to provide a great experience. Buy buttons were created to drive mobile transactions. Mobile conversion rates are a little lower than desktop – keyboard input can be difficult, pin information may not be readily available, etc. So we thought what if we can short-circuit this a little?” Dischler explains.

“There’s much more motivation in a mobile context than a desktop context. We’re trying it out and we think it’s likely to be great for users and advertisers if we can get it out. We just want to make the process easier for consumers and increase online conversions and we think this is going to be an effective way to do this. Pinterest and Instagram are testing this also,” Dischler concludes.

The “Buy Button,” which as of yet has no official name, will initially be available on Android and may come to iOS in the future.

Data Driven Attribution

“We think that finding the right attribution model for your business is really important. When we surveyed, most people were using a last-click attribution model, and they’re looking for new ways. We’re going to offer a bunch of different attribution choices, including data-driven attribution,” Dischler says. (For Bruce Clay, Inc.’s report on the newly announced AdWords attribution models, see our May 11, 2015, article AdWords Upgrades!)
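To make the difference between attribution models concrete, the Python sketch below implements two simple rules-based models: last-click, which Dischler says most advertisers were using, and linear, shown here as one example of an alternative. Neither is Google's data-driven attribution, which assigns credit based on an account's own conversion data; the channel names and the $90 conversion value are hypothetical.

```python
from collections import defaultdict


def last_click(path: list[str], value: float) -> dict[str, float]:
    """Last-click model: the final touchpoint gets all of the conversion value."""
    if not path:
        raise ValueError("conversion path must contain at least one touchpoint")
    return {path[-1]: value}


def linear(path: list[str], value: float) -> dict[str, float]:
    """Linear model: every touchpoint in the path gets an equal share of the value."""
    if not path:
        raise ValueError("conversion path must contain at least one touchpoint")
    credit: dict[str, float] = defaultdict(float)
    share = value / len(path)
    for touchpoint in path:
        # A channel that appears twice in the path receives two shares.
        credit[touchpoint] += share
    return dict(credit)


# Hypothetical path: a display ad, then two search ad clicks, then a $90 sale.
path = ["display ad", "generic search ad", "brand search ad"]
print(last_click(path, 90.0))
print(linear(path, 90.0))
```

Under last-click, the display ad gets no credit at all; under the linear model it earns a third of the value (30.0). That gap is the kind of cross-channel effect Dischler describes below when he talks about display ads and search campaigns.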

Barriers to Data Driven Attribution

Dischler explains that two barriers keep people from adopting this model: the complexity of implementing the tools and organizational challenges.

“If you’ve got different groups, for example, and you take a look across all your ad formats, you may find that buying ads on display improves the performance of your search campaigns, but that might involve reevaluating the success criteria for each group. While we can’t fix that, we can offer advice, and we can work on the tools. My big message for attribution is do holistic measurement and have a holistic consideration when you advertise. Everything we’re working on is designed to achieve that holistic objective,” he says.

Offline Measurement & Holistic Thinking

Dischler says that the work being done at AdWords is really exciting. He says that estimated total conversions reporting in AdWords has been around for a while. They have been doing releases for offline conversions; one is to measure store transactions. They also announced the store visits tool: they take data that users have shared and use it to approximate store visits. Google thinks this is really powerful. If you’re only looking at online or mobile value, you’re really missing out. Many advertisers who are doing store visit measurement are finding that Google delivers more value in stores than online. The best advertisers are doing some really neat things with this data.

Famous Footwear found that 18 percent of ad clicks were leading to store visits. They took the top keywords that were being used for their ad campaigns and rearranged their stores around those products. That’s a holistic approach. They kind of turned their store into a landing page.

Estimated Total Conversions: Should We Trust Them?

Marvin points out that Google has made a big push with estimated total conversions. She asks Dischler what that data means for marketers, and why marketers should trust data from estimated total conversions.

Dischler explains that Google’s process for estimating total conversions is both conservative and precise.

“The approach we have is pretty unique – we have a large population of users who share location history with us. We have hundreds of millions of buildings mapped with Google maps. On top of that, we have a panel of more than a million people that we are able to push out questions to. For example, if we’re not sure one of the panelists is actually in REI, we can ask – are you in REI? They tell us, and we use all of these things to constantly improve precision,” Dischler explains.

What are a marketer’s choices, then, when it comes to estimated conversions? They can:

Ignore the data

Take a look at the data and see if it works for you

Accept the data 100 percent

Dischler recommends accepting the data 100 percent, and says that soon, Estimated Total Conversions will be a bidding factor.

Staying Ahead of the Competition

As the keynote conversation with Dischler comes to a close, Marvin asks how Google plans to stay ahead of the competition.

“We want to build a great platform for those moments of intent and we want to do so across the web and apps and I think Google has a great platform for those moments. We have a number of other properties like Gmail, Maps and YouTube. We build on our platforms year over year,” Dischler says. “If you take a look at media and entertainment, we have rich formats where you can watch things, for example, straight from the SERP. We have app download ads and we think that a huge change to the model is the fact that you can get ad distribution in the Play Store. And then there’s app deep linking – between the Chrome and Android team, we’re trying to push apps closer together.”

June 2, 2015

SEO AMA: Googler Gary Illyes Answers Burning Search Questions at #SMX Advanced

SEO AMA: Googler Gary Illyes Answers Burning Search Questions at #SMX Advanced was originally published on BruceClay.com, home of expert search engine optimization tips.

Googler Gary Illyes sits, cool and collected, on the SMX Advanced stage. Across from him is Search Engine Land Editor Danny Sullivan. Sullivan is about to ask Illyes anything and everything digital marketers have been dying to know in this “Ask Me Anything” session.  In the weeks prior to SMX, SEOs have been sending in their questions. Sullivan will ask Illyes those questions and anything else he sees fit to quiz the Google Webmaster Trends Analyst on.

It seems like every SMX attendee is in the standing-room-only audience. Read on to find out everything Illyes had to say, including his insights on:

Mobilegeddon (a term, for the record, which he hates)

The Quality Update

The future of Panda and Penguin

App Indexing

Danny Sullivan: What’s it like being the new Matt Cutts?

Gary Illyes: There’s no new Matt Cutts. There’s a team taking on his role. For example, someone is leading the web spam team and I’ve taken on the PR role.

DS: What’s the deal with Panda and Penguin?

GI: Panda – there will be an update/data refresh in 2-4 weeks probably. Our goal is to refresh Panda more often.

Penguin – that’s a hard one. We are working on making Penguin update continuously and not have big updates, but that reality is months away.

DS: Will there be a way in the future to tell if major automatic penalties or actions have been levied against your site?

GI: What we are working on is trying to be more transparent about what we are launching. We want to do something similar to what we did with the mobile-friendly update – or, as you prefer, ‘Mobilegeddon.’ We would probably handpick a few launches that would be very visible and talk more about those. We are looking into how we could make an algorithm that would show webmasters when they have hacked content, for example. (That’s one area where we might be transparent.) We also have a few other launches that I’d consider good candidates for being more transparent.

DS: Last month, we had the Quality Update. How is Google assessing the quality? How do clicks factor in?

GI: We use clicks in different ways. The main things we use clicks for are evaluation and experimentation. There are many, many people who are trying to induce noise in clicks. Rand Fishkin, for example, is experimenting with clicks. Using clicks directly in ranking would be a mistake. In personalized results, if you search for “apple,” we would most likely serve you a disambiguation box. We have to figure out if you mean the company or the food. Then, we’d look at the click you made.

DS: How is the Mobile-Friendly update going?

GI: I think there were two things – first, we weren’t clear enough on what “impact” meant for us. I said at one point the impact would be bigger than Panda and Penguin combined. The number of affected URLs was much greater. The number of search queries affected was much greater. The other thing was that sites actually did switch to mobile-friendly right before 4/21, and that was great. That worked pretty well.

DS: What’s going on with app indexing? Do we all need an app?

GI: Sometimes it makes sense to have an app, but it’s not for everyone. If you’re an informational site, you probably don’t need one. But if you’re a car dealer, you probably want one.

We do have universal results, as you know. For news, we have universal results. Apps are like that. We want to present users another way to consume content. Taking out one step from the user journey (by suggesting downloading an app) is a good thing.

DS: Will direct answers eat all the other results? Is SEO dead?

GI: SEO is definitely not dead and SEO is good for the users, except for when people try to game the system.

DS: When a site is shown in a featured snippet, what kind of traffic does that send?

GI: Eric Enge talked about this at SMX West and really nailed it. It can be extremely helpful for a site to have featured snippets. It can send traffic to your site and it can boost brand reputation.

DS: Does being in a featured snippet mean your site is more trusted by Google?

GI: No. It means you provided a direct answer.

DS: How strong a signal are brand entities vs. links?

GI: Most entities come from structured data and Wikidata. If there’s no structured data, it would be pretty hard to extract entities.

DS: Will you be using data from Twitter as search signals?

GI: To the best of my knowledge, we are not using anything like that. We are in the very early stages of Twitter integration.

DS: You’re going to be deprecating Ajax?

GI: It’s not that Ajax is going away and we won’t support that – since we are rendering the content anyway, we just want to save you from doing that. We can interpret the content on our end without any help from the webmaster. You don’t have to do anything special.

DS: How important is URL structure for today’s ranking?

GI: The length definitely doesn’t matter. The structure matters for discovery, not ranking. If something is far away from the homepage it signals that you consider that as less important.
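Illyes’s point about distance from the homepage can be made concrete with click depth: the fewest internal links a crawler has to follow from the homepage to reach a page. The sketch below computes it with a breadth-first search in Python. The link graph and URLs are hypothetical, and this only illustrates the concept; it is not a description of how Google measures distance.

```python
from collections import deque


def click_depths(links, home):
    """Return {url: clicks from home} for every page reachable from home.

    links maps each page to the pages it links to (a hypothetical internal link graph).
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in a BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


site = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widgets"],
    "/blog": ["/blog/2015/06/post"],
    "/products/widgets": ["/products/widgets/blue"],
}
depths = click_depths(site, "/")
for url, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, url)
```

Note that /blog/2015/06/post has a long URL but sits only two clicks from the homepage, which mirrors Illyes’s distinction between URL length and site structure.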

DS: How is it that the reported number of sitemap links can decrease day over day?

GI: Sometimes we have to get rid of things that people will never search for from our index (because of storage issues).

SMX Liveblog: Mobile Is Still the New Black Says Google’s Gary Illyes

SMX Liveblog: Mobile Is Still the New Black Says Google’s Gary Illyes was originally published on BruceClay.com, home of expert search engine optimization tips.

This is a real-time report from SMX Advanced 2015.

April 21, 2015, arrived with a bang. Google announced it was the day that mobile-friendliness would officially be a ranking signal for Google mobile search results. That day, dubbed “Mobilegeddon,” wasn’t the day search marketing changed, however. Improving a website’s experience for mobile users has been an SEO mission a long time in the making. SMX Advanced speakers, including a Google representative, share thoughts on improving a site in light of Google’s mobile-friendly update.

Left to right: Laura Scott, Mitul Gandhi, Barry Schwartz and Gary Illyes

Speakers:

Gary Illyes, Webmaster Trends Analyst, Google (@methode)

Laura Scott, Strategy Lead, Merkle | RKG (@RKG_LauraScott)

Mitul Gandhi, Chief Architect, seoClarity (@seoclarity)

Gary Illyes: Mobile is Still the New Black

“Ten years ago we (Google) noticed that mobile would become a huge thing.” 52 percent of US smartphone users use their phone to do product research before buying things. 40 percent of UK online sales take place on a smartphone or tablet.

Reasons Not to Invest in Mobile? #Myths

These are the misconceptions Gary has heard from people pushing back against going mobile-friendly:

People sit in front of the TV more than they’re on their phones.

Those who have a smartphone are usually just young people

Most young people use phones for social media and entertainment

Mobile devices are difficult to type on; the screen is tiny and purchasing anything is a nightmare. Plus, you can’t trust things you can only see on a small screen.

The Reality of Mobile

This is the year in which mobile searches exceed desktop searches in many countries.

People watch more videos on smartphones than PCs and tablets combined.

30% of UK consumers look at their smartphones within five minutes of waking.

Nomophobia: the fear of being without a mobile device

66% of commercial purchases are made on the mobile web

A 5% uptick in mobile-friendliness since Feb. 26, 2015.

Mobile is still the new black.

A quality mobile experience is super important for web users — all numbers show that. If you start looking at people on the subway, they are doing all sorts of things. People, in fact, are investing in cutting-edge devices so they can better access mobile technology.

Why Am I Not Mobile-Friendly?

Most sites that are not mobile-friendly are top-tier sites – it’s hard for them to go mobile-friendly because they have other priorities. Mobile-friendliness is calculated during our rendering process. For example, if you disallow crawling of JavaScript files, we will have a hard time understanding the page and then we can’t consider it mobile-friendly.
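To see whether a robots.txt file blocks the JavaScript and CSS that Googlebot needs for rendering, a quick test with Python’s standard-library urllib.robotparser might look like the sketch below. The robots.txt rules and example.com URLs are hypothetical. Python’s parser only does simple prefix matching and ignores Google’s wildcard extensions, so treat this as a rough check rather than a substitute for Google’s own testing tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks script and style directories.
ROBOTS_TXT = """\
User-agent: *
Disallow: /js/
Disallow: /css/
"""


def blocked_resources(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


resources = [
    "https://www.example.com/js/menu.js",
    "https://www.example.com/css/mobile.css",
    "https://www.example.com/images/logo.png",
]
print(blocked_resources(ROBOTS_TXT, resources))
```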

When you don’t configure the viewport, we can’t label you mobile-friendly.
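A missing viewport is easy to test for. This Python sketch uses the standard-library html.parser to pull the content of a <meta name="viewport"> tag out of a page. The sample markup is hypothetical, and the check only confirms that the tag exists; it does not reproduce Google’s full mobile-friendly test.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content attribute of any <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # Attribute values can be None (e.g. a bare attribute), so default to "".
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content")


def find_viewport(html):
    """Return the viewport content string, or None if the page has no viewport tag."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    return finder.viewport


page = '<html><head><meta name="viewport" content="width=device-width, initial-scale=1"></head></html>'
print(find_viewport(page))
print(find_viewport("<html><head><title>No viewport</title></head></html>"))
```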

Font size is important. You need to be able to read the content.

You shouldn’t ignore the rise of mobile. Internet penetration means we are seeing more and more smartphones. In 2015, Google had more smartphones activated than in the two previous years.

Laura Scott: How to Optimize Your Site for Mobile Users

Mobile has changed so much – it went from a phone that connected us to our friends and family to a device that connects us to … everything.

Mobile solutions:

Separate URLs

Dynamic serving

Responsive design

Optimizing Your Mobile Solution

Don’t block important resources like JavaScript or CSS. Google recently announced that they’re crawling and understanding JavaScript.

Use the Vary: User-Agent HTTP header

Implement user-agent redirects

Add annotations in the HTML or the XML sitemap
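With dynamic serving, one URL returns different HTML depending on the user agent, and the Vary: User-Agent header signals that the response differs by device. Here is a minimal, framework-free Python sketch of that decision. The MOBILE_TOKENS list and the page bodies are hypothetical and deliberately crude; real sites typically rely on a maintained device-detection library.

```python
# Hypothetical, deliberately crude substrings that suggest a mobile browser.
MOBILE_TOKENS = ("Mobile", "Android", "iPhone")


def is_mobile(user_agent):
    return any(token in user_agent for token in MOBILE_TOKENS)


def dynamic_response(user_agent):
    """Return (headers, body) for a single URL, choosing the HTML by user agent."""
    if is_mobile(user_agent):
        body = "<html><body><p>Mobile layout</p></body></html>"
    else:
        body = "<html><body><p>Desktop layout</p></body></html>"
    headers = {
        "Content-Type": "text/html; charset=utf-8",
        # Signals to caches and crawlers that the HTML varies by user agent.
        "Vary": "User-Agent",
    }
    return headers, body


headers, body = dynamic_response("Mozilla/5.0 (iPhone; CPU iPhone OS 8_3 like Mac OS X) Mobile/12F70")
print(headers["Vary"], body)
```

Because both variants live at the same URL, the Vary header is what distinguishes this setup from serving a single page to every device.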

What we should be working on now:

Fixing faulty redirects

Removing redirect chains

Improving latency (look at PageSpeed Insights)

Fixing smartphone-only errors (look in Crawl Errors Report)
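A redirect chain is any path that needs more than one hop to reach its final URL. Assuming your redirects have been exported as a source-to-target map (the URLs below are hypothetical), a Python sketch like this one finds the chains and suggests pointing each source straight at its final destination, while refusing to follow loops.

```python
def resolve(redirects, url, max_hops=10):
    """Follow redirects from url; return (final_url, hops). Raise ValueError on loops."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or too many hops at {url}")
        seen.add(url)
    return url, hops


def flatten_chains(redirects):
    """Return {source: final_url} for every source that currently needs more than one hop."""
    fixes = {}
    for source in redirects:
        final, hops = resolve(redirects, source)
        if hops > 1:
            fixes[source] = final
    return fixes


redirects = {
    "http://example.com/old": "http://example.com/newer",
    "http://example.com/newer": "https://example.com/newest",
    "https://example.com/a": "https://example.com/b",
}
print(flatten_chains(redirects))
```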

Mitul Gandhi: Mobilegeddon and the Theory of Evolution

Gandhi, the chief architect and co-founder of seoClarity, says that a lot of expectations were shattered when April 21 came and went. Many people, he posits, expected bigger SERP swings. The mildness of the fallout can be attributed to the fact that people had already taken action prior to April 21. Remember – Google told us on Feb. 26 this was coming. Gandhi did a massive study that showed that more than 75 percent of the top ten results were already mobile-friendly prior to 4/21.

The Feb. 26 announcement galvanized the industry to take action.

Google made us jump from one level of evolution to the next as an industry. It made us look at what we should already have been doing: building better search experiences for the users.

Build your sites for users. It’s time to redefine SEO. Search engine optimization emphasizes the wrong thing. Let’s call it search experience optimization.

Conversion rates on mobile are 50% lower than on desktop – let’s focus on improving them.

Test Mobile Friendliness

See what Googlebot-Mobile sees with Fetch as Google

Review mobile errors

Learn from your users

Benchmark and track performance

Q & A with Gary Illyes

What might you focus on next when it comes to mobile?

We are looking into interstitials. There are several sites, including Search Engine Land, that throw interstitials that I can’t, for the life of me, close. I think interstitials will be the next thing.

How accurate is the mobile usability report in the Search Console?

It’s pretty damn accurate. It’s using the same specification that we use in rankings. It’s very, very close to 100 percent.

Do you foresee mobile search indexes being separate?

We are looking into whether or not we can do that. We are still working on that. It’s a huge change, and it’s nothing that would happen tomorrow. People don’t link to mobile URLs, for example – and if people don’t link to mobile URLs, we can’t use PageRank.

So this might not happen?

It might not happen. But I do hope we can move to a stage where we (can’t look at each separately).

What are the major mobile trends you see other than the rise of searches?

Video. I travel a lot and I like looking at what people are doing on their mobile phones. In Singapore, the internet is way better than in the U.S. On the subway I was looking at what people were doing – they’re watching videos or listening to videos. In Sydney, surprise, they’re also watching videos. In Melbourne, they’re watching videos. In California, guess what – they were watching videos. The next big thing will have something to do with mobile video. There are lots of opportunities there.

Some sites that aren’t mobile-friendly still rank highly even when their competitors are mobile friendly (like Full Tilt Poker for example). What’s that about?

At SMX West 2015, someone asked if navigational queries would be affected. Back then I couldn’t answer directly, but now I can: navigational queries are protected. If we know that a URL is navigational for a query, we do want to show it to the user. If you search for Barry’s cats, let’s say, and we know that rustybrick/cats is super relevant for that query, we still want to show it even if it’s not mobile-friendly.

If the content on a mobile page is lengthy, is it considered good practice to hide content?

Don’t do that.

Do you plan on pre-announcing future updates?

I would love to. Announcing the mobile-friendly update was awesome – I really loved how transparent we could be and how much we could communicate about the update.

We have a few changes that we could talk about, probably not as transparently, but my personal ultimate goal is to become super transparent about our big launches. We might, for example, do some changes for hacked sites. It’s pretty obvious for people what hacked means.

SMX Liveblog: Mad Scientists of Paid Search

SMX Liveblog: Mad Scientists of Paid Search was originally published on BruceClay.com, home of expert search engine optimization tips.

This is a near-real-time report from SMX Advanced 2015. Please feel free to ask any questions you may have in the comments and we will reach out to the speakers for clarification.

2015’s “The Mad Scientists of Paid Search” panel features PPC pros at the top of their game: Soren Ryherd, Andrew Goodman and Andy Taylor. Learn why current tools aren’t ideal for the multi-channel landscape, hear what influences a conversion, and get other deep thoughts for paid search professionals to think about.

Left to right: Andrew Goodman, Soren Ryherd and Andy Taylor

Soren Ryherd: The Problem with Tools Today

Soren Ryherd is president and co-founder of Working Planet. His presentation is Don’t Murder the Prophet – aka Don’t Murder Profit. Ryherd warns the audience that tools that report on single-channel performance or aim to optimize a single channel (i.e., search) don’t cut it. Why? Most of the available analytics tools are based on search, but user behavior is dramatically different on search compared to other media.

Bid management, channel assessment and programmatic buying break down when viewed independently, because they are based solely on in-channel performance – but users don’t behave that way. Users don’t care about your channels.

The user’s path of engagement is determined by the type of media. Search behavior is highly influenced by behavior taking place in other channels. However, the same is not true of how users may be influenced when interacting with other media. For example, when a user is interrupted during something like viewing a video or reading an article, they’re much less likely to interact with your ad.

Out-of-channel robs value from in-channel. Our job as optimizers is to support value on things that are likely to break – and the further you get away from search, the more likely channels are to break.

We need to move away from the constraints and assumptions of current tools. Tools and tracking were built for device as proxy for person and a click as the only path of engagement. Both of these are wrong.

Remember, brand traffic is not a channel. Brand traffic is the end of a conversation you created some other way. It should only be looked at in combination with other channels/activity/data.

Channel optimization can lead to inefficient results.

Baseline for out-of-channel behavior cannot be zero.

Ad structure should reflect user behavior.

Work backwards from sales:

Revenues are real

Models cannot predict more sales than actually occur

Feeding real financial data into optimization is critical

Out-of-channel behavior shows up in the unknown bucket of brand and direct.

Understanding what drives the unknown bucket lets you better optimize campaigns.

Be smart: complexity of user behavior offers opportunity – but only if we embrace out-of-channel behavior. Don’t murder profit.

Andrew Goodman: Getting Real about ‘Enhanced CPC’

Andrew Goodman is the president of Page Zero Media. An article published by Google tells us that “Enhanced CPC could be described as an ROI turbocharge setting for your existing Max CPC campaigns.”

Even though it says this, it’s very hard for advertisers to know whether Enhanced CPC is better or not.

Here’s a recent experiment Goodman performed with Enhanced CPC:

Turned enhanced CPC off

Turned it back on a short time later

Did the test on five small-to-midsized campaigns

Also tested on one very large campaign (that included hundreds of brands)

Result: Although performance didn’t conclusively improve, enhanced campaigns show up in a higher CPC neighborhood. If Google can go from advertiser to advertiser and increase their bids, it might work for a while, but not ultimately.

How to Best Look at Data

We need to take greater stock in long-term data.

Ignoring numbers leads to worse results. But over-interpreting every number would be managing shadows.

Many foreign orders in travel and tourism may be large group orders. For years, we may have been missing out on international opportunities, bidding too low overall. Use the right KPIs.

In sports, streakiness is a non-phenomenon that is generally reduced to pure randomness in bunching. Take a coin flip experiment – sometimes you’ll have six heads in a row, but it doesn’t mean anything.

Looking for any anomaly is less valid when you look for it after it’s happened.
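Goodman’s coin-flip example is easy to quantify. This Python sketch computes the exact probability that a sequence of fair coin flips contains at least a given number of heads in a row, using a small dynamic program over the current streak length. With 100 flips and a run of six, it comes out at roughly even odds, which is why a streak spotted after the fact says little on its own.

```python
from fractions import Fraction


def prob_run(n_flips, run_length):
    """Exact probability that n_flips fair flips contain at least run_length heads in a row."""
    half = Fraction(1, 2)
    # streak[k]: probability of no completed run so far and exactly k trailing heads
    streak = [Fraction(0)] * run_length
    streak[0] = Fraction(1)
    hit = Fraction(0)  # probability that the run has already occurred
    for _ in range(n_flips):
        nxt = [Fraction(0)] * run_length
        for k, p in enumerate(streak):
            nxt[0] += p * half  # tails resets the streak
            if k + 1 == run_length:
                hit += p * half  # heads completes the run
            else:
                nxt[k + 1] += p * half  # heads extends the streak
        streak = nxt
    return hit


print(f"{float(prob_run(100, 6)):.3f}")
```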

What influences a conversion? It’s not just “the words you wrote.” It’s also:

Ad position

Ad extensions

Top or side

Long headline or short

Mobile or computer

Time of day

Season

Initial vs. lifetime value

Universal or product specific appeal

Best landing page

Filtering function

Andy Taylor: A Look at the Paid Search Landscape

Andy Taylor, Senior Research Analyst at Merkle | RKG, will present an overview of paid search — what matters in an AdWords auction plus trends and forecasts for search advertising on Google and Bing.

What goes into the AdWords auction?

Bid

Quality

Format impact

All this leads to AdRank and cost.

“Only ads with sufficiently high rank appear at all,” said Hal Varian, Google’s chief economist, in a Google webinar that’s a must-watch for paid search pros: “Insights into AdWords Auctions.”

“Greater importance is placed on the bid rather than the CPC.” — 2007 AdWords update
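The bid-and-quality idea behind AdRank is often explained with a simplified model: AdRank = max CPC bid × Quality Score, and each advertiser pays just enough to beat the AdRank of the ad below it (that AdRank divided by its own Quality Score, plus one cent). The Python sketch below implements only that textbook version. It leaves out format impact, reserve prices and ad rank thresholds, and the advertisers, bids and scores are hypothetical, so treat it as an illustration rather than Google’s actual auction.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    name: str
    max_cpc: float        # maximum cost-per-click bid, in dollars
    quality_score: float  # quality score, 1-10 in this simplified model


def run_auction(ads, slots):
    """Return [(name, cost_per_click)] for the ads that win a slot, best position first."""
    ranked = sorted(ads, key=lambda ad: ad.max_cpc * ad.quality_score, reverse=True)
    results = []
    for position, ad in enumerate(ranked[:slots]):
        if position + 1 < len(ranked):
            below = ranked[position + 1]
            # Pay just enough for this ad's AdRank to beat the next ad's AdRank.
            price = below.max_cpc * below.quality_score / ad.quality_score + 0.01
        else:
            price = 0.01  # hypothetical minimum when no ad ranks below
        # Never charge more than the advertiser's own max bid.
        results.append((ad.name, round(min(price, ad.max_cpc), 2)))
    return results


ads = [Ad("A", 2.00, 6), Ad("B", 1.50, 10), Ad("C", 3.00, 3)]
for name, cpc in run_auction(ads, slots=2):
    print(f"{name}: ${cpc:.2f} per click")
```

In this example B bids less than A and C yet takes the top slot and pays $1.21 per click, because its higher quality score lifts its AdRank.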

Google Year-over-Year Growth

CPC growth is accelerating.

In mid-2014 Google.com impressions began to decline.

First-page minimums have been on the rise since Q2 2014.

The paid search landscape is becoming more competitive every day.

Google is reducing their propensity to show ads:

Users are shown fewer ads to choose from – this could be good or bad but Google has a lot of data to determine which users want ads and which don’t.

Google revenue is still going up.

Reassess goals, budgets and expectations. Past performance may not provide a good benchmark for future growth. Also, note that Bing’s ad clicks are becoming less expensive.

Organic search has picked up over the past three years. SEO is not dead.

The auction is Google’s game. There’s nothing inherently wrong with Google changing the rules, but it does impact us as advertisers, so it’s important for scientists to dig in, find out when the rules of the game have changed and adjust accordingly.

June 1, 2015

Introducing DisavowFiles: Free Crowdsourced Tool Brings Google Disavow Link Data to Light

Introducing DisavowFiles: Free Crowdsourced Tool Brings Google Disavow Link Data to Light was originally published on BruceClay.com, home of expert search engine optimization tips.

Ever wonder what’s inside the search engines’ black box of disavowed backlink data?

Google and Bing are the only parties who can see the disavow data given to them by site owners. We, the webmaster community, can’t access this data to help us make informed decisions when vetting backlinks, researching sites, or creating our own disavow files.

Let’s change that.

Today we’re launching DisavowFiles, a free, crowdsourced tool aimed at bringing transparency to disavow data. Sign up for free at DisavowFiles.com.

Disavow Files Are a Fact of Life for SEOs

Webmasters have to stay on the defensive in the battle against link spam. The first Google Penguin algorithm update penalizing link manipulation rolled out in 2012. Since then, black-hat linking schemes (such as link farms, buying links, and link comment spam) mostly don’t work.

But Penguin’s side effect for site owners has been harsh: Links from external sites can and do hurt your site — even if you did nothing to create those links. Too many spammy or unnatural-looking links aimed at your site can torpedo your site in the rankings. In the age of Penguin penalties, SEO-minded webmasters have to be vigilant about their sites’ link profiles.

Unfortunately, the process of backlink auditing, removal and disavowal is tedious.

First you have to comb through the backlinks, usually thousands of them, looking at each domain and web page to try to identify the shady ones. Even SEOs who do it all the time can spend days evaluating a new client’s backlink profile. And that’s just the first step!

Next begins the process of contacting the site owner to request the link be removed, tracking the contact, following up to make sure the link is really gone, rinse, repeat. As a last resort, the search engines let you disavow stubborn links. The entire painstaking link pruning process has become an SEO necessity in today’s world of link penalties.

Disavowing links can also be dangerous. We caution users of the search engines’ disavow links tools to always work with a professional and consider the risks of disavowing links before using the tool. As SEOs, we do our best to seek and destroy just the bad links without disturbing the good ones that are actually helping a site rank in search results. Webmasters have no way to see how search engines judge their inbound links. Your site could have a horde of hooded bandits pointing links at it, and Google would never tell you.

Wouldn’t it be nice to know which sites have been voted as offenders? And see which links are bad according to everybody else? Enter DisavowFiles, a new link intelligence tool for Google disavow data.

What Is DisavowFiles?

DisavowFiles.com is a crowdsourced tool that sheds light on disavow data. Used wisely, it can focus and simplify the process of creating a Google disavow file. DisavowFiles is powered by three elements:

Many disavow files, submitted by participants into an anonymized database.

Reliable backlink data for each participating site, pulled from Majestic’s API.

Software tools and reports that let participants extract useful data.

Find out whether your site has been disavowed by others in the database.

What’s the Cost?

There is no cost to sign up for DisavowFiles. You share your disavow file with other members, and you get tools and reports for free.

To provide a crowdsourced database, we need disavow file data to produce useful results — and the more, the merrier. Crowdsourcing means that the more participating sites that join, the greater the benefit for all. So we’ve thrown the door open wide and invite as many SEOs and webmasters as possible to sign up. We have plans for additional upgrade features in the future. However, the basic service as it is launching today will be free forever.

The idea behind DisavowFiles.com is not to make a profit, but to solve a problem affecting the whole SEO community — a need for better intel to protect our sites from link spam.

What Participants Get

When you join and upload your site’s disavow file to DisavowFiles.com, you will be able to see:

Whether any backlinks to your site have been disavowed by other participants.

Whether your site has been disavowed by others in the database.


1. Whether any backlinks to your site have been disavowed by other participants:

You will be able to simply run a tool to see any pages linking to your site that other participating webmasters have vetted as spam. This red-flags links that may be hurting your site’s rankings so you can investigate whether you, too, should disavow the links. Such intelligence may ultimately help a community of webmasters clean up link spam.

2. Whether your site has been disavowed by others in the database:

You will be able to find out whether your domain or any of your web pages have been disavowed. A report tells you which site URLs were disavowed, and how many times, by DisavowFiles participants. Think of this information as a chance to look at your own outbound links and ask whether your site is doing something unnatural that needs to be corrected. Since a site’s link profile includes both inbound and outbound links, this feature could be an eye-opener that saves you from a Penguin eyebrow-raise.
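Both reports come down to counting disavow entries. As a rough illustration (not DisavowFiles’ actual code), this Python sketch reads disavow files in Google’s format, where each line is a comment starting with #, a domain: entry or a full URL. It counts how many files disavow each domain or URL and flags any of your backlinks that appear. The file contents and backlinks are hypothetical, and domain: entries are matched against the exact hostname only, which is a simplifying assumption.

```python
from collections import Counter
from urllib.parse import urlsplit


def count_disavowals(disavow_files):
    """Count, per domain and per URL, how many uploaded files disavow it."""
    domains, urls = Counter(), Counter()
    for lines in disavow_files:
        file_domains, file_urls = set(), set()
        for raw in lines:
            line = raw.strip()
            if not line or line.startswith("#"):
                continue  # skip blanks and comments
            if line.lower().startswith("domain:"):
                file_domains.add(line[len("domain:"):].strip().lower())
            else:
                file_urls.add(line)
        # Sets ensure each file counts at most once per entry.
        domains.update(file_domains)
        urls.update(file_urls)
    return domains, urls


def flag_backlinks(backlinks, domains, urls):
    """Return {backlink: disavow count} for backlinks that others have disavowed."""
    flagged = {}
    for link in backlinks:
        host = (urlsplit(link).hostname or "").lower()
        count = urls[link] + domains[host]  # exact-host match only (an assumption)
        if count:
            flagged[link] = count
    return flagged


uploaded = [
    ["# participant 1 (hypothetical)", "domain:spammy-links.example", "http://blog.example.net/bad-post"],
    ["domain:spammy-links.example"],
]
my_backlinks = [
    "http://spammy-links.example/page1",
    "http://blog.example.net/bad-post",
    "https://good.example.org/review",
]
domains, urls = count_disavowals(uploaded)
print(flag_backlinks(my_backlinks, domains, urls))
```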

Regular Email Alerts

Ongoing email alerts tell you if there’s any news — any new disavow files uploaded that mention your site, or any backlinks to your pages disavowed by others. This keeps you informed without having to go into the application regularly to check for updates.

This database can also be used to vet external sites as potential link acquisition targets and for competitive research. The Domain Look-up tool lets you type in any domain to see:

How many times the domain has been disavowed in the database

Number of external backlinks

Number of referring domains

Alexa rank (a measure of site prominence)

Trust Flow and Citation Flow measurements from Majestic, to help you determine the site’s trustworthiness.

An export function lets you download all your newfound backlink information and other data as a CSV file, so you can work with it in Excel.

Want to check your own site against the DisavowFiles.com database? Try the free look-up.

Privacy for Members, Protection for Data

To make this service work, privacy is paramount. Participants remain anonymous in DisavowFiles. The tools and reports do not reveal the participants’ names or the websites whose disavow files are uploaded. When a disavow file is uploaded, the software automatically anonymizes the source and stores the links separately. Further, DisavowFiles.com is a secure site to help protect everyone.

Protections are built in against bad data entering the database. Similar to the way the search engines’ webmaster tools verify a site, participants will be given a customized HTML file to add to their website. Only if that page is found on the site will DisavowFiles.com then accept an uploaded disavow file. Each file is also put through a series of checks to make sure it is, indeed, a valid file. And to keep the data current, whenever a site uploads a new disavow file, it overwrites the previous one from that site.
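The post doesn’t spell out what DisavowFiles’ validity checks are, so the following Python sketch is only a guess at the kind of check involved. It flags lines that are not comments, domain: entries with a plausible hostname, or http(s) URLs, and it rejects files with no entries at all. The regular expression and error messages are assumptions.

```python
import re
from urllib.parse import urlsplit

# Hypothetical hostname pattern: dot-separated labels ending in an alphabetic TLD.
DOMAIN_RE = re.compile(r"^(?=.{1,253}$)([a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,63}$")


def validate_disavow(text):
    """Return a list of (line_number, problem) pairs; an empty list means the file looks valid."""
    problems = []
    entries = 0
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments are allowed
        if line.lower().startswith("domain:"):
            domain = line[len("domain:"):].strip().lower()
            if not DOMAIN_RE.match(domain):
                problems.append((number, f"bad domain: {domain!r}"))
                continue
        else:
            parts = urlsplit(line)
            if parts.scheme not in ("http", "https") or not parts.netloc:
                problems.append((number, f"not a URL or domain: entry: {line!r}"))
                continue
        entries += 1
    if entries == 0:
        problems.append((0, "file contains no disavow entries"))
    return problems


print(validate_disavow("domain:spammy-links.example\nhttp://blog.example.net/bad-post\n"))
```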

Let’s Do This

DisavowFiles started as a wish list tool project at Bruce Clay, Inc. because, in a world of link penalties, why wouldn’t you want more disavow link data? SEOs and site owners can help each other have better intelligence on backlink disavowals with this new crowdsourced tool.

Sign up for free at DisavowFiles.com.